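Executive Yuan National Science Council Research Project, Interim Progress Report

A Formal Integrated Development Technology Framework for Complex Embedded Systems (2/3)

Interim Progress Report (Condensed Version)

Project type: Individual

Project number: NSC 95-2221-E-002-067-

Execution period: August 1, 2006 (ROC year 95) to October 31, 2007 (ROC year 96)

Executing institution: Department of Electrical Engineering and Graduate Institute, National Taiwan University

Principal investigator: 王凡

Handling: This project is open to public inquiry

Date: August 17, 2007 (ROC year 96)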

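Executive Yuan National Science Council Subsidized Research Project

□ Final Report

□ Interim Progress Report

(Project title)

Project type: □ Individual project

Project number: NSC 95-2221-E-002-067-

Execution period: August 1, 2006 to July 31, 2007

Principal investigator: 王凡

Co-principal investigator:

Project participants: 林禮平, 林志威

Report type (submitted according to the approved funding list): □ Condensed report

This report includes the following required attachments:

□ One report on an overseas business trip or study visit

□ One report on a business trip or study visit to mainland China

□ One report on attending an international academic conference, together with the paper presented

□ One overseas research report for an international cooperative research project

Handling: Except for industry-academia cooperative research projects, projects for upgrading industrial technology and cultivating talent, controlled projects, and the cases listed below, the report may be opened to public inquiry immediately

□ Involves patents or other intellectual property rights; may be opened to public inquiry after □ one year □ two years

Executing institution: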

Rule-based Adaptive Test Plan Generation for Embedded Systems∗

Abstract

In previous work, test plans were either constructed manually or generated with tools adapted to specific application domains. Either way, the process is labor-intensive and error-prone, and it is difficult to incorporate the knowledge of application domains. We propose a rule-based open framework to solve this problem. Test experts only need to materialize their knowledge in an application domain as a set of control rules. Each time a rule is executed, our test-controller checks the available testcases and the test log, selects the next testcase accordingly, executes the selected testcase, and updates the test log. The design of our rules and log allows for the implementation of a wide variety of test strategies. We have implemented our ideas in the framework of TTCN-3, an international standard testing language. We evaluated our tool with requirement coverage testing and fault-based testing. We found that our tool could be flexibly reused for the two test strategies and could significantly reduce the cost of constructing test plans.

Keywords: test plan, rule, open, TTCN-3, MSC, test automation, test-control

1 Introduction

The complexity of modern embedded systems has increased significantly. Consequently, the need for automated and systematic testing has also been growing significantly. At this moment, most industrial projects still rely on people to manually construct testcases and test plans. A major reason is that engineers often find it difficult to use their domain knowledge to efficiently and mechanically create testcases and test plans. As a result, the testcases and test plans automatically generated by programs are usually bulky and inefficient in either achieving coverage or detecting faults. Our goal is to develop an open framework so that engineers can efficiently translate their domain knowledge into high-quality test plans.

In the framework, engineers can materialize their testing knowledge in an application domain as a set of test-control rules. Each rule queries the available testcases and the test log to select the next testcase to be executed. After a testcase has been executed, the test-controller updates the test log and continues to select the next rule. Many components in this framework can be reused for various SUTs (software under test) and various testing tasks. This reusability could lead to a significant reduction in the cost and time needed to construct quality test plans.

∗The work is partly supported by grants NSC 94-2213-E-002-085 and NSC 94-2213-E-002-092.

We have carefully designed our rules and log to support a variety of testing activities. We have implemented our ideas in the framework of TTCN-3 (Testing and Test-Control Notation, version 3), an international standard language for test specification for a wide range of telecommunication and embedded systems. We have carried out two experiments in which we applied our tool to a real-world benchmark, a protocol program for DNS (Domain Name Server). In the first experiment, we wrote rules for requirement-coverage-based testing. In the second, we wrote rules for fault-coverage-based testing. The experiments showed the versatility, flexibility, and reusability of our tool for the efficient construction of high-quality test plans.

Following is our presentation plan. Section 2 discusses the related work. Section 3 explains our framework for automated testing. Section 4 presents the definition of testcases with branching structures. Section 5 defines our log records. Section 6 defines our test-control rules. Section 7 presents our test-control procedure. Section 8 briefly introduces TTCN-3 and explains the various implementation issues. Section 9 reports our experiments. Section 10 is the conclusion.

2 Related work

Xu et al. used a fuzzy expert system to select the testcases to be executed by identifying the critical testcases [19]. However, the knowledge base is neither modifiable by nor accessible to the users.

There are also studies of testing metrics in the hope that a good metric could lead to efficient test plans. For example, Elbaum et al. proposed a metric called APFD for measuring the rate of fault detection. Based on the metric, they improve APFD with prioritized testcases [2–4]. However, the approach only works when test costs and fault severity are uniform. This is in general the problem with testing metrics, which lack flexibility. In contrast, our test-control rules can incorporate various strategies to cope with various situations in testing activities.

Test suite minimization techniques decrease costs by reducing a test suite to a minimal subset that maintains coverage equivalent to that of the original suite with respect to particular test adequacy criteria. For example, Aggrawal et al. proposed a technique that optimally achieved 100% code coverage during version-specific regression testing [1].

TTCN-3 is an international standard test language that not only supports the concise and unambiguous description of testcases but also allows for the specification of test control flow [5–11]. However, test control strategies written in TTCN-3 are just another form of program. This means that such control strategies could be complex to construct, maintain, and reuse. In contrast, a large part of our framework for test plan generation, including the engine for rule selection and execution and the program for log maintenance, is reusable. Moreover, it is usually easy for engineers to write down their testing knowledge as rules.

3 Framework for automated testing

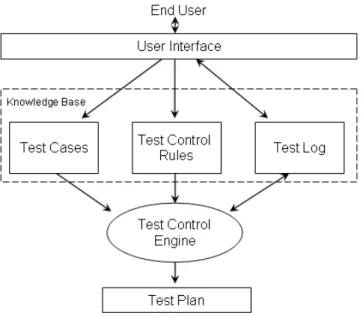

Figure 1. Open framework for test-control

In figure 1, we have drawn the components in our framework with rule-based adaptive test-control. The users input testcases and test-control rules to our system. The rules should be written for a particular testing task. Before the test-control engine is started, the log is empty. The engine then iteratively selects a rule, applies the rule to the testcases and the log to select the next testcase, executes the selected testcase, updates the log, and repeats the iteration. We have carefully taken reusability into consideration in designing this framework. The test-control engine can be reused for any adaptive test plan generation task and any SUT. The testcases can be reused for different testing tasks of the same SUT. The test-control rules are the only parts that may not be reused. Judging from the experience with expert systems, rule construction should be much simpler than coding programs. With this reusability at hand, we feel that the framework could greatly reduce the time and cost of constructing adaptive test plans.

4 Testcases

We let $\mathbb{N}$ be the set of natural numbers and $C$ be the set of all numbers and all finite character strings. We formally define testcases with branching structures as labeled trees. A labeled tree (L-tree) $\tau$ is a tuple $\langle V, r, L_V, \lambda, \delta \rangle$ with the following constraints.

• $V$ is a finite set of nodes.

• $r \in V$ is the root.

• $L_V$ is a set of node types or values.

• $\lambda : V \mapsto C \cup L_V$ is a labeling function of the nodes. A node with no outgoing arcs is external and is called a leaf. A node with outgoing arcs is called internal. If $v$ is an internal node, then $\lambda(v)$ is the type of $v$. If $v$ is an external node, then the parent of $v$ has a unique child and $\lambda(v)$ is the value of $v$'s parent.

• $\delta : (V \times \mathbb{N}) \mapsto V$ is a partial function that defines the ordered children of each node with the following restrictions.

  • For each $v \in V$ and each $i, j \in \mathbb{N}$ with $i \neq j$, if $\delta(v, i)$ and $\delta(v, j)$ are both defined, then $\delta(v, i) \neq \delta(v, j)$.

  • For each $v \in V$ and $i > 0$, if $\delta(v, i)$ is defined, then $\delta(v, i - 1)$ is also defined.

  These two restrictions basically say that, for each node $v$, $\delta(v, \cdot)$ is defined exactly on a closed interval of indices with lower bound zero and maps distinct indices to distinct children.

Each node has a finite number of ordered children.
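As a minimal sketch of the definition above, an L-tree can be stored as two dictionaries, one for the labeling function and one for the partial child function. The class name `LTree` and its method names are illustrative assumptions and not part of our tool; the node names are a fragment of the tree described in Example 1.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple, Union

Label = Union[int, float, str]  # an element of C or of L_V


@dataclass
class LTree:
    """Hypothetical container for an L-tree <V, r, L_V, lambda, delta>."""
    root: str
    labels: Dict[str, Label] = field(default_factory=dict)               # lambda
    children: Dict[Tuple[str, int], str] = field(default_factory=dict)   # delta

    def arity(self, node: str) -> int:
        """Count children by walking indices 0, 1, 2, ... until one is undefined."""
        n = 0
        while (node, n) in self.children:
            n += 1
        return n

    def add_node(self, name: str, label: Label, parent: Optional[str] = None) -> None:
        """Add a node; if a parent is given, append it as the parent's next child."""
        self.labels[name] = label
        if parent is not None:
            self.children[(parent, self.arity(parent))] = name

    def delta(self, node: str, i: int) -> Optional[str]:
        """The i-th child of node, or None where delta is undefined."""
        return self.children.get((node, i))

    def is_leaf(self, node: str) -> bool:
        return self.arity(node) == 0

    def is_well_formed(self) -> bool:
        """Check the two restrictions on delta: no index gaps, no repeated child."""
        seen: Dict[str, set] = {}
        for (v, i), child in self.children.items():
            if i > 0 and (v, i - 1) not in self.children:
                return False
            if child in seen.setdefault(v, set()):
                return False
            seen[v].add(child)
        return True


# A fragment of the tree of Example 1: tc1 has two statement children.
t = LTree(root="tc1")
t.add_node("tc1", "tc")
t.add_node("sta1", "statement", parent="tc1")
t.add_node("sta2", "statement", parent="tc1")
t.add_node("alt", "alt", parent="sta1")
t.add_node("rep", "repeat", parent="sta2")
print(t.delta("tc1", 1))  # sta2
```

Appending children through `add_node` keeps the indices contiguous from zero, so the second restriction holds by construction; `is_well_formed` is only needed when the dictionaries are filled by other means.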

Our implementation and experiments were conducted in the framework of TTCN-3. Conceptually, a TTCN-3 testcase is in the shape of an L-tree $\langle V, r, L_V, \lambda, \delta \rangle$. To represent the structures of various TTCN-3 testcases, there are many labels for the types of nodes. Some of the node types in $L_V$ are as follows.

tc, if, for, while, do-while, alt, block, interleave, call, timer, action, default, port, message, condition, receive, send, label, goto, repeat, alt-step, function, create, return, stop

We require that only the root node of a testcase L-tree is labeled with tc (for testcase). Due to the page limit, we have left the explanation of the labels to a report on our lab webpage at http://cc.ee.ntu.edu.tw/~val.

Figure 2. A testcase L-tree with an alt-statement

Example 1 : We have an L-tree drawn in figure 2. Inside each node, we put its name. The root is r = tc1. Thus V is the set of all names inside the ovals. The order of the children of a node is from left to right. Following are the labels for the types of some internal nodes.

λ(tc1) = tc, λ(alt) = alt, λ(blk1) = λ(blk2) = block, λ(sta1) = λ(sta2) = λ(sta3) = λ(sta4) = statement, λ(rece1) = λ(rece2) = receive,

λ(oper1) = λ(oper2) = operand, λ(port1) = λ(port2) = port, λ(msg1) = λ(msg2) = message,

λ(id) = λ(name1) = λ(name2) = λ(name3) = λ(name4) = λ(name5) = name,

λ(fail) = fail, λ(pass) = pass, λ(rep) = repeat

These types are labeled on internal nodes. Those labels on the leaves sometimes specify the values of their parents. For example, the name of the testcase is λ(tc1), the label of the leaf of node ‘tc1.’ The name of the message at node msg1 is λ(msgA). Some children of the nodes are as follows.

δ(tc1, 0) = sta1, δ(tc1, 1) = sta2, δ(sta1, 0) = alt, δ(sta2, 0) = rep, δ(alt, 0) = blk1, δ(alt, 1) = blk2, δ(blk1, 0) = oper1, δ(blk1, 1) = sta3,

δ(oper1, 0) = port1, δ(oper1, 1) = rece1, δ(rece1, 0) = msg1, δ(sta3, 0) = setv1, δ(setv1, 0) = pass, δ(blk2, 0) = oper2, δ(blk2, 1) = sta4, δ(oper2, 0) = port2, δ(oper2, 1) = rece2, δ(rece2, 0) = msg2, δ(sta4, 0) = setv2, δ(setv2, 0) = fail · · · ·

The testcase says that if message ‘msgA’ is received from port portA, verdict ‘pass’ is issued; if message ‘msgB’ is received from portB, verdict ‘fail’ is issued. The rightmost leaf is labeled with type repeat, which means the whole testcase is to be repeated. A TTCN-3 testcase that corresponds to this L-tree is presented in subsection 8.1. ■

Figure 3. A testcase L-tree with a while-loop

Example 2 : Figure 3 is a testcase with a while-loop. A message is repeatedly sent until the loop condition becomes false. We may have λ(tc) = tc, λ(sta1) = statement, λ(while) = while, λ(sta2) = statement, and λ(rece1) = receive. A TTCN-3 testcase that corresponds to this L-tree is presented in subsection 8.1. ■

5 Test log

A log works as a database that stores information about past testcase executions. To select the next testcase to execute, the test-controller needs to query the log. Conceptually, a log is a sequence $\Gamma = \Phi_1 \Phi_2 \ldots \Phi_n$ of records of testcase executions. In other words, if a log contains the information of n testcase executions, then there are n log L-trees. A log record used in our test-controller is represented as a three-level L-tree. The label of the root of a log record is always log. For a log L-tree $\Phi_i$ with an integer $i \in [1, n]$, the labels of the second-level nodes are the following.

• number: the sequence number of the record in the log, i.e., i.

• name: the name of the testcase.

• verdict: the result of the testcase execution. Possible values of verdict include pass (the SUT behaves according to the test purpose), fail (the SUT violates its specification), inconc (inconclusive, i.e., neither a pass nor a failure), none (no verdict, the default value set when the testcase starts), and error (an error is detected in the test devices).

• faultdection: the number of faults that have been detected during the execution of this testcase.

• path: the sequence of executed events of the testcase execution.

• coverage: the coverages of the testcase execution, e.g., line coverage and branch coverage.

• time: the time information of the testcase execution, e.g., execution time and response time.

• rule: the rule that chose the testcase.

• relatedtestcases: related testcases that also qualified for execution under this rule.

A leaf, i.e., a third-level node, is labeled with a value that the test-controller assigned to the label of the corresponding parent second-level node.

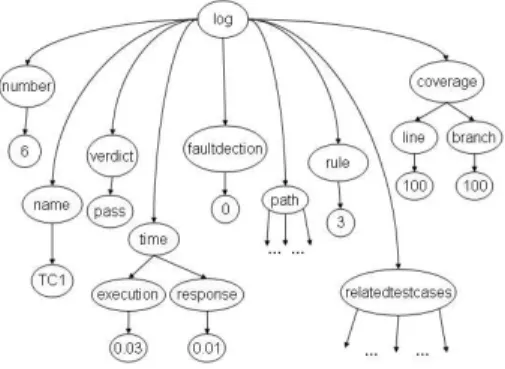

Example 3 : We have an example log with two records in figures 4 and 5 respectively. The log record in figure 4 shows that testcase six with name ‘TC1’ was executed and that the result was ‘pass’ with no fault detected. The children of the path node are the events executed in this testcase. Due to the page limit, we do not list the event sequences. Also, the line coverage and branch coverage of the testcase were both 100%. The execution time and response time were 0.03 seconds and 0.01 seconds respectively. We also know that rule three selected this testcase.

Figure 4. A log L-tree

The second log record is for testcase three. The execution result is ‘fail.’ The explanation for the other labels is similar to the above and has been omitted. ■

Figure 5. Another log L-tree
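To make the three-level shape of a log record concrete, the following sketch builds one as an XML element with Python's standard `xml.etree.ElementTree`. The helper name `make_log_record`, the use of element text for the leaf values, and the string formats of the coverage and time values are assumptions made for illustration; the second-level labels and the verdict values are the ones listed in this section, and the sample values follow the first record of Example 3.

```python
import xml.etree.ElementTree as ET

# Second-level labels of a log record, spelled as in the list above.
SECOND_LEVEL_LABELS = [
    "number", "name", "verdict", "faultdection", "path",
    "coverage", "time", "rule", "relatedtestcases",
]
VERDICTS = {"pass", "fail", "inconc", "none", "error"}


def make_log_record(**values) -> ET.Element:
    """Build a record: root 'log', one child per label, the value as leaf text."""
    verdict = values.get("verdict", "none")  # 'none' is the default verdict
    if verdict not in VERDICTS:
        raise ValueError(f"unknown verdict: {verdict!r}")
    values["verdict"] = verdict
    record = ET.Element("log")
    for label in SECOND_LEVEL_LABELS:
        child = ET.SubElement(record, label)
        child.text = str(values.get(label, ""))
    return record


# Illustrative values taken from the first record of Example 3.
record = make_log_record(
    number=1, name="TC1", verdict="pass", faultdection=0,
    coverage="line=100%,branch=100%",
    time="execution=0.03s,response=0.01s", rule=3,
)
print(ET.tostring(record, encoding="unicode"))
```

Multi-valued entries such as path would need one leaf per event in a fuller version; a single text value keeps the sketch short.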

6 Test-control rules

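The test-control rules are built on queries of L-trees. Suppose we are given a set $\Delta$ of testcases and a log $\Gamma$. A test-control rule $\rho$ is inductively constructed as follows.

$$\begin{array}{rcl}
\rho & ::= & \rho_1 \vee \rho_2 \mid \neg\rho_1 \mid \psi_1 \mid \psi_1 \sim c \mid \psi_1 \sim \psi_2 \\
\psi & ::= & \pi \mid \langle\rho\rangle : \pi \\
\pi & ::= & \epsilon \mid \alpha/\pi \mid \alpha[i]/\pi
\end{array}$$

Here $\sim \in \{<, \leq, =, \neq, \geq, >\}$ is a comparison operator, and $\epsilon$ denotes the empty path. $c$ is either a string or a number in $C$. $\alpha$ is a label on the nodes of the testcase L-trees. $i$ is a natural number constant. Intuitively, $\alpha[i]$ is the $i$'th child with label $\alpha$.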

Boolean operations like $\Rightarrow$ and $\wedge$ are defined as standard shorthands. $\psi$ is a path reference. When it is not used in a comparison, it means to check the existence of the path. $\langle\rho\rangle : \pi$ is a conditional path reference in testcases that satisfy $\rho$. Thus a test-control rule can be composed of Boolean operations, path references, and comparisons. Before we formally present the semantics of our test-control rules, we first use the following example to explain their meaning.

Example 4 : Assume that we have a testcase set $\Delta$ of the two testcases in examples 1 and 2 and a log $\Gamma$ with the log records in example 3. Here are two test-control rules based on $\Delta$ and $\Gamma$.

• $\langle \text{tc/statement/alt/block/operand/receive} \wedge \neg(\text{tc/id} = \langle \text{log} \rangle : \text{log/name}) \rangle : \text{tc/id}$

• $\langle \langle \text{tc/statement/while} \rangle : \text{tc/statement/receive} \rangle : \text{tc/id}$

The first specifies testcases that have a receive event and have not been executed before. The second specifies testcases with a while statement and a receive event outside that while statement. ■

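Now we want to define the semantics of test-control rules. Suppose we are given a set $\Delta$ of testcases, a set $\Gamma$ of log records, and a testcase $\tau = \langle V, r, L_V, \lambda, \delta \rangle \in \Delta$.

An instantiated path reference $\pi$ from node $v$ in $\tau$ and $\Delta$, denoted $\mathrm{Refs}^{\Delta}_{\tau}(v/\pi)$, is inductively defined as follows.

• $\mathrm{Refs}^{\Delta}_{\tau}(v/\epsilon) = \{\lambda(\delta(v, 0))\}$ if and only if $\delta(v, 0)$ is defined.

• $\mathrm{Refs}^{\Delta}_{\tau}(v/\alpha/\pi) = \mathrm{Refs}^{\Delta}_{\tau}(\delta(v, k)/\pi)$ if and only if there is a $k$ such that $\delta(v, k)$ is defined and $\lambda(\delta(v, k)) = \alpha$.

• $\mathrm{Refs}^{\Delta}_{\tau}(v/\alpha[i]/\pi) = \mathrm{Refs}^{\Delta}_{\tau}(\delta(v, k)/\pi)$ if and only if there is a $k$ such that $\delta(v, k)$ is defined and $\delta(v, k)$ is the $i$'th child of $v$ with $\lambda(\delta(v, k)) = \alpha$.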

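We say $\tau$ satisfies a rule $\rho$ in $\Delta$ and $\Gamma$, in symbols $\tau \models^{\Delta}_{\Gamma} \rho$, if the following inductive constraints are satisfied.

• $\tau \models^{\Delta}_{\Gamma} \rho_1 \vee \rho_2$ if and only if $\tau \models^{\Delta}_{\Gamma} \rho_1$ or $\tau \models^{\Delta}_{\Gamma} \rho_2$.

• $\tau \models^{\Delta}_{\Gamma} \neg\rho_1$ if and only if it is not true that $\tau \models^{\Delta}_{\Gamma} \rho_1$.

• $\tau \models^{\Delta}_{\Gamma} \psi_1$ if and only if there is a $d \in \mathrm{Refs}^{\Delta}_{\tau}(\psi_1)$.

• $\tau \models^{\Delta}_{\Gamma} \psi_1 \sim c$ if and only if there is a $d \in \mathrm{Refs}^{\Delta}_{\tau}(\psi_1)$ with $d \sim c$.

• $\tau \models^{\Delta}_{\Gamma} \psi_1 \sim \psi_2$ if and only if there are a $d_1 \in \mathrm{Refs}^{\Delta}_{\tau}(\psi_1)$ and a $d_2 \in \mathrm{Refs}^{\Delta}_{\tau}(\psi_2)$ with $d_1 \sim d_2$.

• Given an L-tree $\tau' = \langle V, r, L_V, \lambda, \delta \rangle$, $\mathrm{Refs}^{\Delta}_{\tau}(\langle\rho\rangle : \pi)$ is equal to $\{d \mid \tau' \in \Delta \cup \Gamma,\ \tau' \models^{\Delta}_{\Gamma} \rho,\ d \in \mathrm{Refs}^{\Delta}_{\tau'}(r/\pi)\}$.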

We may use a test-control rule to specify the set of testcases eligible for the next execution. Formally, given a set $\Delta$ of testcases, a set $\Gamma$ of log records, and a rule $\rho$, we denote the set as $B^{\Delta}_{\Gamma}(\rho) \stackrel{\mathrm{def}}{=} \{\tau \mid \tau \in \Delta \wedge \tau \models^{\Delta}_{\Gamma} \rho\}$.
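The semantics can be illustrated with a small evaluator. This is a sketch under several assumptions, not the implementation of our tool: trees are nested `(label, children)` pairs, rules are tuples with hypothetical tags `"or"`, `"not"`, `"cmp"`, `"ref"`, and `"cond"`, every tree is wrapped in a virtual document node so that the first path step matches the root label, a step that matches several children contributes the union of their results, and the index in a step written as a pair `(label, i)` counts from zero among the children with that label.

```python
import operator

OPS = {"<": operator.lt, "<=": operator.le, "=": operator.eq,
       "!=": operator.ne, ">=": operator.ge, ">": operator.gt}


def node(label, *children):
    """An L-tree node as (label, [children]); a leaf has an empty child list."""
    return (label, list(children))


def refs(v, path):
    """Labels reached from node v by following path (a list of steps)."""
    _, kids = v
    if not path:                          # empty path: label of child 0
        return {kids[0][0]} if kids else set()
    step, rest = path[0], path[1:]
    if isinstance(step, tuple):           # (alpha, i): i-th child labeled alpha
        alpha, i = step
        matches = [k for k in kids if k[0] == alpha]
        return refs(matches[i], rest) if i < len(matches) else set()
    found = set()
    for k in kids:                        # alpha: every child labeled alpha
        if k[0] == step:
            found |= refs(k, rest)
    return found


def psi_refs(tree, psi, delta, gamma):
    """Refs of a plain ("ref", path) or conditional ("cond", rho, path) reference."""
    if psi[0] == "ref":
        return refs(node("doc", tree), psi[1])
    _, rho, path = psi
    out = set()
    for t in list(delta) + list(gamma):   # range over testcases and log records
        if satisfies(t, rho, delta, gamma):
            out |= refs(node("doc", t), path)
    return out


def satisfies(tree, rho, delta, gamma):
    """Does tree satisfy rule rho, given testcases delta and log gamma?"""
    kind = rho[0]
    if kind == "or":
        return (satisfies(tree, rho[1], delta, gamma)
                or satisfies(tree, rho[2], delta, gamma))
    if kind == "not":
        return not satisfies(tree, rho[1], delta, gamma)
    if kind == "cmp":
        _, psi, op, rhs = rho
        left = psi_refs(tree, psi, delta, gamma)
        right = psi_refs(tree, rhs, delta, gamma) if isinstance(rhs, tuple) else {rhs}
        return any(OPS[op](a, b) for a in left for b in right)
    return bool(psi_refs(tree, rho, delta, gamma))   # bare path reference


def eligible(rho, delta, gamma):
    """The set B(rho): testcases in delta that satisfy rho."""
    return [t for t in delta if satisfies(t, rho, delta, gamma)]


def name_of(tc):
    return next(iter(refs(node("doc", tc), ["tc", "id"])))


tc1 = node("tc", node("id", node("tc1")),
           node("statement", node("alt", node("block", node("operand",
                node("port", node("portA")),
                node("receive", node("message", node("msgA"))))))))
tc2 = node("tc", node("id", node("tc2")),
           node("statement", node("while", node("condition", node("j<3")))),
           node("statement", node("receive", node("message", node("msgB")))))

has_receive = ("ref", ["tc", "statement", "alt", "block", "operand", "receive"])
executed = ("cmp", ("ref", ["tc", "id"]), "=",
            ("cond", ("ref", ["log"]), ["log", "name"]))
# a AND NOT b, written with the shorthand NOT(NOT a OR b)
rule = ("not", ("or", ("not", has_receive), executed))

print([name_of(t) for t in eligible(rule, [tc1, tc2], [])])  # ['tc1']
log = [node("log", node("name", node("tc1")), node("verdict", node("pass")))]
print([name_of(t) for t in eligible(rule, [tc1, tc2], log)])  # []
```

The rule evaluated here is the condition inside the outer angle brackets of the first rule in Example 4: with an empty log it selects tc1, and once a log record names tc1 it selects nothing. Only equality is exercised; ordering comparisons between a string and a number would raise a `TypeError` in this sketch.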

7 Test-control engine

With the materials presented in sections 4, 5, and 6, we now present the algorithm for our rule-based test-plan generation in the following.

    GenerateTestPlan(Δ, Λ) /* testcase set Δ; rule set Λ; */ {
        Γ := ∅;
        repeat forever {
            select a rule ρ from Λ.
            if (B^Δ_Γ(ρ) ≠ ∅) {
                select a testcase τ ∈ B^Δ_Γ(ρ).
                execute τ and create an execution record γ.
                Γ := Γ ∪ {γ}.
            }
        }
    }

The procedure iteratively selects a rule $\rho$ from $\Lambda$ to select a set $B^{\Delta}_{\Gamma}(\rho)$ of testcases. Then it selects a testcase in $B^{\Delta}_{\Gamma}(\rho)$ to execute and updates the log. It continues until the users stop it. When it is stopped, the set $\Gamma$ stores the test plan generated with our rule-based test-controller.
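The procedure can be sketched in Python as follows. Several simplifications are assumptions of this sketch: rules are plain predicates `rule(testcase, log)` instead of path queries, rule and testcase selection are random with a fixed seed, the log is a list so that execution order is kept, and a `max_iterations` bound stands in for "until the users stop it". The names `generate_test_plan`, `not_yet_executed`, and `fake_execute` are hypothetical.

```python
import random


def generate_test_plan(testcases, rules, execute, max_iterations=20, seed=0):
    """Repeatedly pick a rule, pick an eligible testcase, run it, and log it."""
    rng = random.Random(seed)
    log = []                                   # the log, initially empty
    for _ in range(max_iterations):            # bounded stand-in for 'repeat forever'
        rule = rng.choice(rules)
        eligible = [tc for tc in testcases if rule(tc, log)]
        if eligible:                           # the eligible set is not empty
            chosen = rng.choice(eligible)
            record = dict(execute(chosen), number=len(log) + 1, name=chosen)
            log.append(record)
    return log


def not_yet_executed(tc, log):
    """Example rule: a testcase is eligible if no log record names it."""
    return all(rec["name"] != tc for rec in log)


def fake_execute(tc):
    """Stand-in for real test execution; always reports a pass."""
    return {"verdict": "pass"}


plan = generate_test_plan(["tc1", "tc2", "tc3"], [not_yet_executed], fake_execute)
print([rec["name"] for rec in plan])
```

With the single rule above, each testcase appears exactly once in the resulting plan, and later iterations find no eligible testcase and do nothing.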

8 Implementation with TTCN-3

Since we have applied our ideas to TTCN-3 [5–11] in the experiments, for the readers’ benefit, we briefly explain TTCN-3 in subsection 8.1. Then we discuss various implementation issues related to TTCN-3 in subsection 8.2.

8.1 TTCN-3

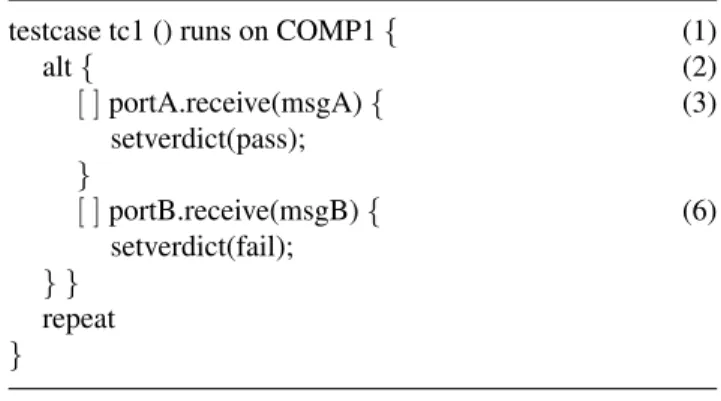

TTCN-3 is a standard language for the concise and precise specification of tests for a wide range of computer and telecommunication systems. Testcases and plans written in TTCN-3 are independent of tool vendors. The testcase in example 1 can be represented in TTCN-3 as follows.

    testcase tc1 () runs on COMP1 {        (1)
      alt {                                (2)
        [ ] portA.receive(msgA) {          (3)
          setverdict(pass);
        }
        [ ] portB.receive(msgB) {          (6)
          setverdict(fail);
        }
      }
      repeat
    }

This testcase contains an alt-statement and a repeat-statement. Line (1) starts the declaration of a testcase named tc1 that runs on component COMP1. Line (2) starts an alt-statement with two blocks. The alt-statement is executed by selecting exactly one of the two blocks for execution. Line (3) starts the first block, which contains a message-receiving statement and a setverdict statement. The message is named ‘msgA’ and is received from port ‘portA.’ Line (6) starts the second block of the alt-statement. Figure 6 shows the testcase in the TTCN-3 graphical representation, which resembles a sequence diagram. The circle with a horizontal line across it in the lower-left corner represents the repeat-statement.

Figure 6. A testcase in graphical representation

The testcase in example 2 is written in TTCN-3 as follows.

    testcase tc2 () runs on COMP1 {
      while (j<3) {
        portA.send(msgA);
        j := j+1;
      }
      portA.receive(msgB);
    }

8.2 Implementation issues

Our test-controller starts by translating testcases in TTCN-3 into an XML format. We then use SEDNA [16] as the testcase manager. SEDNA is an open-source, full-featured XML database management system that supports queries, updates, and security [16]. One query language of SEDNA is XQUERY. Our log records are also saved in an XML format so that we can query the log through SEDNA. Our test-control rules were defined to parallel the syntax and semantics of a subclass of XQUERY. Each XML document has a root. Testcases are the first-level subtrees with root label ‘tc’ while log records are those with root label ‘log.’ The translation of rule structures with Boolean operations is straightforward. A conditional structure like $\langle\rho\rangle : \pi$ is translated by simply starting a new path reference from the root. For a new path reference of testcases, we start the path with “/tc” while for one of log records, we start the path with “/log.” For example, the first control rule in example 4 contains a search for a testcase in the outer angle brackets and another search for a log record in the inner angle brackets.
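The idea of querying testcases and log records held in one XML document can be tried out without a database. The sketch below is a stand-in only: it uses Python's standard `xml.etree.ElementTree` and its limited XPath subset instead of SEDNA and XQUERY, and the document layout, element names, and the function name `unexecuted_with_receive` are assumptions made for illustration. It evaluates the intent of the first rule in example 4, namely testcases that have a receive event inside an alt-statement and whose id does not appear in the log.

```python
import xml.etree.ElementTree as ET

# Hypothetical document: testcases and log records are first-level subtrees.
DOC = """
<root>
  <tc><id>tc1</id>
    <statement><alt>
      <block><operand>
        <port>portA</port>
        <receive><message>msgA</message></receive>
      </operand></block>
    </alt></statement>
  </tc>
  <tc><id>tc2</id>
    <statement><while/></statement>
    <statement><receive><message>msgB</message></receive></statement>
  </tc>
  <log><number>1</number><name>tc2</name><verdict>pass</verdict></log>
</root>
"""


def unexecuted_with_receive(doc):
    """Ids of testcases with an alt/receive event that no log record names."""
    executed = {n.text for n in doc.findall("./log/name")}   # inner search: log
    return [
        tc.findtext("id")
        for tc in doc.findall("./tc")                        # outer search: tc
        if tc.find("./statement/alt/block/operand/receive") is not None
        and tc.findtext("id") not in executed
    ]


doc = ET.fromstring(DOC)
print(unexecuted_with_receive(doc))  # ['tc1']
```

The two `findall` calls mirror the two path references that start at “/tc” and “/log”; an XML database would evaluate both inside a single query.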

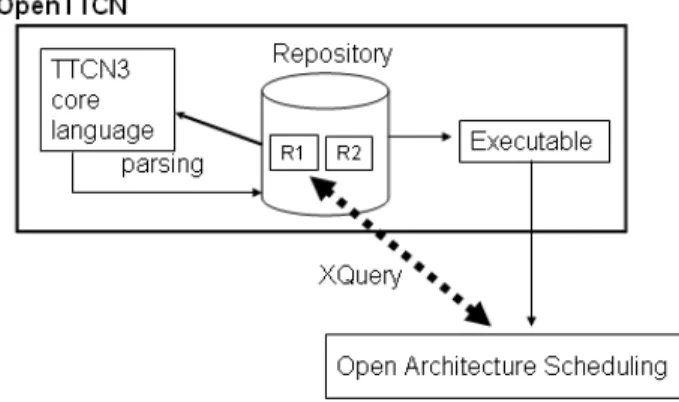

As for test execution, at this moment, we rely on Tester3, a product by the OpenTTCN company [14], to execute the testcases. Figure 7 shows the modules and data-flow within our rule-based controller. Our controller feeds testcases to Tester3, which then translates them into the XML format. Tester3 stores the XML file in a module called R1 and the execution information in a module called R2. Each time a testcase is executed by Tester3, we get the execution information from Tester3, create a log record, and then update the log with the new record.

9 Experiments

Figure 7. Implementation with OpenTTCN’s tools

In our experiment, we use a UDP (User Datagram Protocol) program for an Internet DNS (Domain Name Server) as our SUT. The program is executed as a shell command on a local host with a UDP port specified as a command-line parameter. The program is always listening to the Internet for incoming packets from peer entities. Typical packets can be of type register, query-name, query-IP, modify, and cancel. When a packet (request) arrives, its content is decoded and analyzed, and a response is generated immediately. The response is encoded and sent out as another UDP packet to the corresponding peer entity. If the request is unrecognized, a special ‘Unrecognized’ response is sent back to the peer. We only use the encode/decode part of the UDP protocol program as our SUT. This part needs customization. We adopt the TRI test adaptation interface developed by the OpenTTCN company.

In total, we have constructed 41 testcases in TTCN-3 for the following two experiments.

9.1 Requirement coverage testing

We constructed 25 test-control rules for requirement cov-erage analysis [18]. The objects of requirement covcov-erage are the message types, including requests and responses. When all the messages are covered, we may stop and claim 100% requirement coverage. We focus on 5 request types:

register, query-name, query-IP, modify, and cancel. For

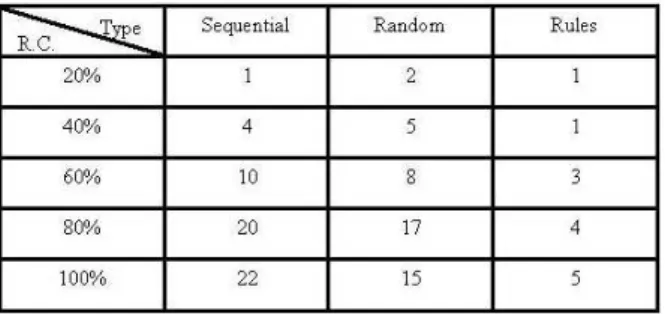

each request type, we want to observe the behaviors of its rejection and acceptance. Therefore, in total, we have 10 objects in our coverage analysis. To evaluate the perfor-mance of our rule-based test-controller, we compare it with the test plans generated with the sequential strategy (which executes the testcases sequentially) and the random strategy (which selects the next testcase randomly). We report the experiment data in table 1. In the leftmost column, we can find the coverage numbers. In each row, we list the num-ber of iterations of each strategy to reach the corresponding coverage number in the leftmost entry of the same row. As can be seen in the table, our test-controller runs the fastest to achieve 100% requirement coverage.

Table 1. Data for the requirement coverage testing
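The quantity reported in each row, the number of iterations a strategy needs to reach a given coverage number, can be computed from an execution order as in the following sketch. The function name `iterations_to_coverage`, the three testcases, and the coverage objects assigned to them are made-up illustrations and are not our experiment data.

```python
def iterations_to_coverage(order, covers):
    """Map each coverage number k to the first iteration that covers k objects."""
    covered = set()
    reached = {}
    for iteration, tc in enumerate(order, start=1):
        covered |= covers[tc]
        # record every coverage number newly reached in this iteration
        for k in range(len(reached) + 1, len(covered) + 1):
            reached[k] = iteration
    return reached


# Hypothetical testcases and the coverage objects each one exercises.
covers = {
    "a": {"register-accept"},
    "b": {"register-accept", "register-reject"},
    "c": {"query-name-accept"},
}

print(iterations_to_coverage(["a", "b", "c"], covers))  # {1: 1, 2: 2, 3: 3}
print(iterations_to_coverage(["b", "c", "a"], covers))  # {1: 1, 2: 1, 3: 2}
```

The second order reaches full coverage of the three hypothetical objects one iteration earlier, which is the kind of difference between strategies that the table compares.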

9.2 Fault-based testing

In fault-based testing, we hypothesize the ways in which faults can occur in a system and design test plans to uncover such faults [2]. In this experiment, we used ten types of faults as our test objects. For example, one fault that we want to detect is the deletion of an IP address twice without registering it in between. We also constructed 25 test-control rules for this experiment. We describe two of the test-control rules in the following.

• Rule 1: If the last testcase in the log registered “192.168.0.1” and “test.edu.tw” with the DNS server, then the next testcase should remove “192.168.0.1”.

• Rule 2: If the last testcase in the log removed “192.168.0.1”, then the next testcase should remove “192.168.0.1” again.

In the experiment, we compare the performance of our test-controller with the random strategy and the sequential strategy. The data is reported in table 2. In the leftmost column, we list the numbers of faults detected. In each row, we list the number of iterations each strategy needed to detect the number of faults in the leftmost entry of the same row. As can be seen, our test-controller outperformed the other two strategies. This experiment shows that our framework is flexible enough to support efficient fault-based testing.

Table 2. Fault detection testing
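Rules 1 and 2 depend only on the most recent log entry, which makes them easy to sketch as predicates. In the sketch below, testcases are plain dictionaries with hypothetical fields `id`, `action`, `ip`, and `name`, and the log simply stores the executed testcase dictionaries; none of this reflects the XML encoding used by our tool.

```python
IP, NAME = "192.168.0.1", "test.edu.tw"

# Hypothetical testcase descriptions; the field names are assumptions.
TESTCASES = [
    {"id": "register_ip", "action": "register", "ip": IP, "name": NAME},
    {"id": "remove_ip", "action": "remove", "ip": IP},
    {"id": "query_ip", "action": "query-IP", "ip": IP},
]


def rule1(tc, log):
    """After registering IP and NAME, the next testcase should remove IP."""
    last = log[-1] if log else None
    return (last is not None
            and last["action"] == "register"
            and last["ip"] == IP and last.get("name") == NAME
            and tc["action"] == "remove" and tc["ip"] == IP)


def rule2(tc, log):
    """After removing IP, the next testcase should remove IP again."""
    last = log[-1] if log else None
    return (last is not None
            and last["action"] == "remove" and last["ip"] == IP
            and tc["action"] == "remove" and tc["ip"] == IP)


def candidates(rule, log):
    """Ids of the testcases that the rule makes eligible for the given log."""
    return [tc["id"] for tc in TESTCASES if rule(tc, log)]


log = [TESTCASES[0]]                 # the registration has just been executed
print(candidates(rule1, log))        # ['remove_ip']
log.append(TESTCASES[1])             # the first removal has been executed
print(candidates(rule2, log))        # ['remove_ip']
```

Applying rule 1 and then rule 2 produces the register, remove, remove sequence that targets the double-deletion fault mentioned above.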

10 Conclusion

We have discussed how to generate test plans automatically and efficiently. In our open framework, test experts can easily materialize their domain knowledge of test plan construction for various testing tasks. In the future, we plan to extend our test-control rules so that users can make more expressive and powerful queries. We also plan to implement a user-friendly interface for the easy construction of test-control rules.

References

[1] K. K. Aggrawal, Y. Singh, A. Kaur. Code coverage based technique for prioritizing test cases for regression testing. ACM SIGSOFT Software Engineering Notes, September 2004.

[2] S. Elbaum, A. Malishevsky, G. Rothermel. Prioritizing test cases for regression testing. International Symposium on Software Testing and Analysis. Proceedings of the ACM SIGSOFT international symposium on Software testing and analysis, 2000.

[3] S. Elbaum, A. Malishevsky, G. Rothermel. Incorporating Varying Test Costs and Fault Severities into Test Case Prioritization. Proceedings of the 23rd International Conference on Software Engineering, May, 2001, pp.12-19.

[4] S. Elbaum, A.G. Malishevsky, G. Rothermel. Test case prioritization: a family of empirical studies. IEEE Transactions on Software Engineering, 2002.

[5] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 1: TTCN-3 Core Language. ETSI ES 201873-1 v3.1.1 2005-06.

[6] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 2: TTCN-3 Tabular presentation Format (TFT). ETSI ES 201873-2 v3.1.1 2005-06.

[7] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 3: TTCN-3 Graphical Presentation Format (GFT). ETSI ES 201873-3 v3.1.1 2005-06.

[8] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 4: TTCN-3 Operational Semantics. ETSI ES 201873-4 v3.1.1 2005-06.

[9] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 5: TTCN-3 Runtime Interface (TRI). ETSI ES 201873-5 v3.1.1 2005-06.

[10] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 6: TTCN-3 Control Interface (TCI). ETSI ES 201873-6 v3.1.1 2005-06.

[11] ETSI: Methods for Testing and Specification (MTS) The Testing and Test Control Notation version 3, Part 7: Using ASN.1 with TTCN-3. ETSI ES 201873-7 v3.1.1 2005-06.

[12] S. Fine, A. Ziv. Coverage directed test generation for functional verification using bayesian networks. Proceedings of the 40th Design Automation Conference, 2003.

[13] J.-M. Kim, A. Porter. A history-based test prioritization technique for regression testing in resource constrained environments. Proceedings of the 24th International Conference on Software Engineering, Orlando, Florida, p.119-129, 2002.

[14] http://www.openttcn.com/

[15] M.A. Robbert, F.J. Maryanski. Automated test plan generator for database application systems. Proceedings of the ACM SIGSMALL/PC symposium on Small systems, 1991, Toronto, Canada, p.100-106, ACM Press.

[16] http://modis.ispras.ru/sedna/index.htm

[17] A. Srivastava, J. Thiagarajan. Effectively prioritizing tests in development environment. ACM SIGSOFT Software Engineering Notes, Volume 27, Issue 4, p.97-106, July 2002.

[18] M.W. Whalen, A. Rajan, M.P.E. Heimdahl, S.P. Miller. Coverage metrics for requirements-based testing. Proceedings of the 2006 international symposium on Software testing and analysis, Portland, Maine, USA, p.25-36, 2006.

[19] Z. Xu, K. Gao, T.M. Khoshgoftaar. Application of fuzzy expert system in test case selection for system regression test. IEEE International Conference on Information Reuse and Integration, Aug, 2005, p.120-125.