Volume 2013, Article ID 645961, 9 pages, http://dx.doi.org/10.1155/2013/645961

Research Article

A Study of the Integrated Automated Emotion Music with the Motion Gesture Synthesis via ZigBee Wireless Communication

Chih-Fang Huang¹ and Wei-Po Nien²

¹Department of Information Communications, Kainan University, Kainan Road, Luzhu Shiang, Taoyuan City 33857, Taiwan
²Department of Mechanical Engineering, National Chiao Tung University, 1001 University Road, Hsinchu 300, Taiwan

Correspondence should be addressed to Chih-Fang Huang; jeffh@saturn.yzu.edu.tw

Received 22 March 2013; Accepted 22 September 2013

Academic Editor: Tai-hoon Kim

Copyright © 2013 C.-F. Huang and W.-P. Nien. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Automated music composition and algorithmic composition are based on logic operations, with music parameters set according to the desired music style or emotion. Computer-generated music can be integrated with other domains through proper mapping techniques, as in intermedia arts that combine music technology with other media. This paper mainly discusses the possibility of integrating automatic composition and motion devices with an Emotional Identification System (EIS), using emotion classification and parameters transmitted through ZigBee wireless communication. The corresponding music pattern and motion path are driven simultaneously by the cursor movement on the Emotion Map (EM) as it varies with time. An interactive music-motion platform is established accordingly.

1. Introduction

The main schema discussed in this paper is shown in Figure 1.

In this paper, the main idea of the proposed music-motion generation system is mood analysis based on the concept of the Emotion Map (EM), which is introduced in the next section. The corresponding mood parameters are retrieved from the x-axis and y-axis values of the EM and used to generate music parameters through a Music ID and movement actions through a Motion ID. The Music ID drives the real-time automated composition of music patterns programmed in Max/MSP [1], while the corresponding Motion ID is sent to the RoboBuilder development board [2], which controls the servo motors so that the robot performs the corresponding action. The Music ID and Motion ID are transferred to the client via ZigBee wireless communication. ZigBee wireless communication can support ubiquitous computing [3], a concept built on five basic elements (5A): anytime, anywhere, any service, any device, and any security.

In the system architecture diagram (Figure 1), the dashed lines indicate components planned for future integration, including the Emotional Identification System (EIS), intermedia audio-visual art, a digital music learning system, and interactive multimedia devices, which can be combined with audio and interactive toys using motion sensors. For the EIS, for example, future work can incorporate brain-wave research: by analyzing the user's brain-wave data, the system can capture the emotional response and derive an objective analysis of the emotional parameters. In addition, the Music ID can be generated by a motion sensor to perform algorithmic composition with more parameters. The ultimate goal of the entire system is to integrate automatic composition with interaction, and more music technologies can be applied in the future through cross-disciplinary research in industry. Automatic composition [4] has become more mature than before, and it can be applied even to video games.

2. Background

2.1. Cognitive Emotion Map and Emotional Parameters Analysis. In cognitive response evaluations of music mood, many experiments demonstrate that arousal (activation) and valence (pleasantness), plotted in an x-y two-dimensional diagram, are the dominant representation for music cognition [6].

Figure 1: Automated emotion music generation with robotic motion based on emotion patterns. (Blocks: Emotional Identification System (EIS); Emotion Map (EM) according to user requirement definition; generating music patterns and motion patterns based on various emotional patterns; Music ID → automated music composition; Motion ID → automated robotic movement; motion sensor; intermedia audio-visual art, digital music learning system, and interactive multimedia device.)

Figure 2: Emotional and cognitive reaction diagram [5]. (Two-dimensional plane of valence, from unpleasant to pleasant, and arousal, from relaxing to stimulating, each scaled 0–9, with regions labeled happiness, sadness, scary, and peacefulness.)

As to the emotion mapping, according to research at the University of Augsburg, Germany [7], four categories of human emotion (happiness, anger, sorrow, and joy) retrieved from physiological signals can be expressed by their degree of excitement (arousal) and degree of pleasantness (valence), distributed over a two-dimensional emotion plane.

In research published by the University of Montreal [8], happy, sad, scary, and peaceful musical excerpts were used in experiments in which subjects listened to music of various emotion types, so that the testers' cognitive responses to the music could be evaluated. This system uses the specific results of that experiment to link the emotional and cognitive responses.

Exuberance Anxious Contentment Depression 53 54 Negative Positive Arousal Valence s y s x

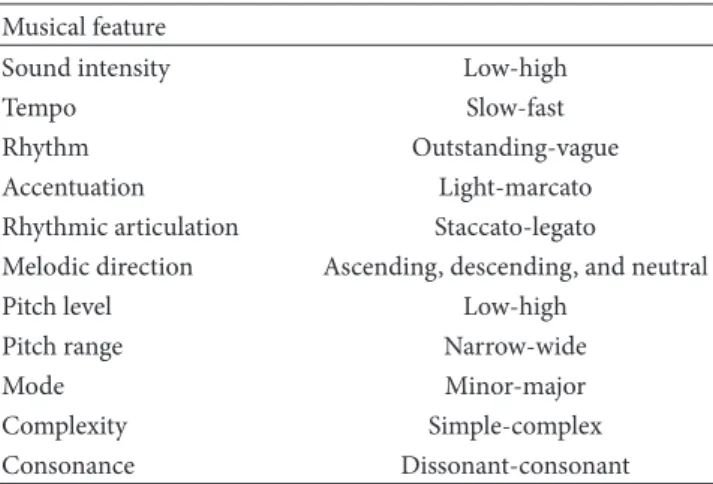

Figure 3: Two-dimensional Emotion Map (2DER). Table 1: Music parameters with their corresponding relations. Musical feature

Sound intensity Low-high

Tempo Slow-fast

Rhythm Outstanding-vague

Accentuation Light-marcato

Rhythmic articulation Staccato-legato

Melodic direction Ascending, descending, and neutral

Pitch level Low-high

Pitch range Narrow-wide

Mode Minor-major

Complexity Simple-complex

Consonance Dissonant-consonant

Combining the above experimental results, we adopt the two-dimensional cognitive model 2DER [5] shown in Figure 2 and call it the “Emotion Map” (EM) in our research.

The EM is implemented in the Max/MSP computer music program; a screenshot of its user interface is shown in Figure 3. In this system, the user draws an emotion trajectory that varies over time on the EM, and the arousal and valence values are read from the corresponding x and y coordinates of the EM.
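As a minimal sketch of how a cursor position on the EM might be turned into valence and arousal values, the C function below assumes valence on the x-axis and arousal on the y-axis, both scaled to 0–100 (the scale of the emotion presets listed with Figure 9), and a hypothetical drawing canvas whose y coordinate grows downward. The names `EmPoint` and `em_from_cursor` are illustrative and are not part of the authors' Max/MSP patch.

```c
/* Hypothetical EM sample: valence (x) and arousal (y), both in 0..100. */
typedef struct {
    double valence;
    double arousal;
} EmPoint;

/* Clamp v into [lo, hi]. */
static double clamp(double v, double lo, double hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/* Map a cursor position (px, py) on a width x height canvas to EM values.
   Screen y grows downward, so it is flipped to make "up" mean high arousal. */
EmPoint em_from_cursor(int px, int py, int width, int height)
{
    EmPoint p;
    p.valence = clamp(100.0 * px / (width - 1), 0.0, 100.0);
    p.arousal = clamp(100.0 * (height - 1 - py) / (height - 1), 0.0, 100.0);
    return p;
}
```

For example, on a 501 × 501 canvas the cursor position (250, 100) yields a valence of 50 and an arousal of 80; positions outside the canvas are clamped to the edges of the map.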

2.2. Music Parameters Analysis. Music features including pitch, tempo, velocity, and rhythm can be represented through the Musical Instrument Digital Interface (MIDI), which makes most musical elements quantifiable as integers in the range 0 to 127.
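To illustrate how a continuous emotion value could be quantized into the MIDI integer range, the following sketch linearly maps a 0–100 value onto a chosen sub-range of 0–127 (for example, a velocity span) and rounds to the nearest integer. The function name `to_midi` and the idea of a per-parameter low/high span are assumptions for illustration.

```c
/* Linearly map value in [0, 100] onto [lo, hi] (both within MIDI 0..127)
   and round to the nearest integer. Out-of-range inputs are clamped. */
int to_midi(double value, int lo, int hi)
{
    if (value < 0.0)   value = 0.0;
    if (value > 100.0) value = 100.0;
    if (lo < 0)   lo = 0;
    if (hi > 127) hi = 127;

    double scaled = lo + (hi - lo) * value / 100.0;
    return (int)(scaled + 0.5); /* round half up; scaled is never negative */
}
```

With a span of 40 to 110, for instance, a mid-scale value of 50 maps to 75.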

Table 1 lists the music parameters and their corresponding descriptions, based on previous research [9, 10].

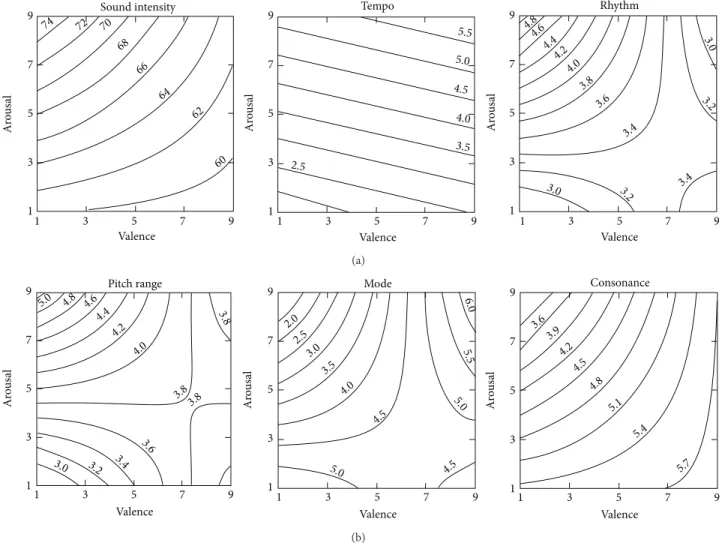

2.3. Music Parameters and the Corresponding Cognitive Emotion Map. In Gomez and Danuser's experiment [11], volunteer subjects listened to several music fragments of different musical types and rated their moods, and the ratings were analyzed in terms of emotional and cognitive responses. The resulting statistical ratings, with expert analysis, are shown in Figure 4.

Figure 4: Music parameters and the corresponding cognitive reaction relationship. Panels: (a) sound intensity, tempo, and rhythm; (b) pitch range, mode, and consonance; each plotted over valence (horizontal axis) and arousal (vertical axis) on a 1–9 scale.

These experimental results lead to the following conclusions.

(i) Sound intensity is lower in the positive low-arousal region and relatively high in the negative high-arousal region.

(ii) Tempo is higher in positive areas (+x and/or +y), and it is also higher under high arousal than under low arousal.

(iii) Rhythm is vaguer in the negative high-arousal area and more explicit in the other three quadrants.

(iv) Accentuated rhythms and arousal are positively correlated.

(v) Rhythmic articulation is more staccato in positive areas (+x and/or +y) and high-arousal areas than in negative and low-arousal areas.

(vi) As pleasantness increases, melodic direction has descending tendencies.

(vii) Pitch range is broader in the negative high-arousal quadrant and appears narrower in the other three quadrants.

(viii) Mode tends toward minor in the negative high-arousal quadrant and toward major in the positive high-arousal quadrant.

(ix) Harmonic complexity is higher in negative areas (−x and/or −y) than in positive ones, and higher under high arousal than under low arousal.

(x) Consonance declines from the positive low-arousal position to the negative high-arousal position.
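Because several of the conclusions above are stated per quadrant, a small helper that names the quadrant of an EM point may be useful when applying them. This sketch assumes the 0–100 scale with the neutral point at 50 on both axes; the enum and function names are hypothetical, and points exactly on a midline are assigned to the positive or high side. The example emotions in the comments follow the presets listed with Figure 9.

```c
/* Hypothetical quadrant labels for the EM (valence x, arousal y). */
typedef enum {
    POSITIVE_HIGH_AROUSAL, /* e.g., joy (valence 100, arousal 90)      */
    NEGATIVE_HIGH_AROUSAL, /* e.g., angry (valence 10, arousal 90)     */
    NEGATIVE_LOW_AROUSAL,  /* e.g., sad (valence 10, arousal 10)       */
    POSITIVE_LOW_AROUSAL   /* e.g., happiness (valence 100, arousal 10) */
} EmQuadrant;

/* Classify a point; values on the midline (50) count as positive/high. */
EmQuadrant em_quadrant(double valence, double arousal)
{
    int positive = valence >= 50.0;
    int high = arousal >= 50.0;

    if (positive)
        return high ? POSITIVE_HIGH_AROUSAL : POSITIVE_LOW_AROUSAL;
    return high ? NEGATIVE_HIGH_AROUSAL : NEGATIVE_LOW_AROUSAL;
}
```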

2.4. Sieve and Markov Model. Both methods are important in computer composition. A Markov model [12] is essentially a nondeterministic finite automaton with a probability associated with each state transition. Figure 5 shows an example applied to chord progression among the I, IV, and V chords.
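The Markov chain idea can be sketched in C as a transition table over the I, IV, and V chords plus a sampler that picks the next chord from a uniform random number u in [0, 1). The probabilities below are assumptions that only loosely follow Figure 5 (1/3 out of I, 1/2 out of IV and V); the exact arrows of the figure are not recoverable, so the table and the names `TRANSITION` and `next_chord` are illustrative.

```c
/* Chord states for a hypothetical three-chord Markov model. */
enum { CHORD_I, CHORD_IV, CHORD_V, NUM_CHORDS };

/* Assumed transition matrix: row = current chord, column = next chord.
   Each row sums to 1; a zero entry is never chosen. */
static const double TRANSITION[NUM_CHORDS][NUM_CHORDS] = {
    /* from I  */ { 1.0 / 3, 1.0 / 3, 1.0 / 3 },
    /* from IV */ { 1.0 / 2, 0.0,     1.0 / 2 },
    /* from V  */ { 1.0 / 2, 0.0,     1.0 / 2 },
};

/* Pick the next chord by walking the cumulative distribution of the row.
   u must be a uniform random number in [0, 1). */
int next_chord(int current, double u)
{
    double cumulative = 0.0;
    for (int next = 0; next < NUM_CHORDS; next++) {
        cumulative += TRANSITION[current][next];
        if (u < cumulative)
            return next;
    }
    return NUM_CHORDS - 1; /* guard against rounding when u is close to 1 */
}
```

Calling `next_chord` repeatedly with fresh random numbers produces a chord sequence; cadence rules such as those in Section 3.2 would be expressed by changing the table entries.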

Figure 6 shows the flowchart of the sieve: it generates a random number and uses the modulus operator to determine whether the number falls in the scale. If it does, it is output as a musical note; otherwise, the system generates a new number. Following pitch-class theory [13], the random number is reduced modulo 12 to obtain its pitch class.
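A minimal C sketch of the sieve loop in Figure 6 follows. It assumes the scale is given as a 12-bit pitch-class mask (C major here) and that candidate notes are drawn from a MIDI note range with the C library `rand()`; the mask encoding, the range, and the helper names are illustrative choices, not details of the original Max/MSP patch.

```c
#include <stdlib.h>

/* C major pitch classes {0, 2, 4, 5, 7, 9, 11} as a 12-bit mask. */
#define C_MAJOR_MASK ((1u << 0) | (1u << 2) | (1u << 4) | (1u << 5) | \
                      (1u << 7) | (1u << 9) | (1u << 11))

/* Return nonzero if MIDI note n (n >= 0) is in the scale,
   using its pitch class n mod 12. */
int in_scale(int note, unsigned scale_mask)
{
    return (scale_mask >> (note % 12)) & 1u;
}

/* Sieve: draw random notes in [low, high] until one passes the scale test.
   The range must contain at least one pitch class of the scale. */
int sieve_note(int low, int high, unsigned scale_mask)
{
    for (;;) {
        int note = low + rand() % (high - low + 1);
        if (in_scale(note, scale_mask))
            return note; /* in the scale: output the note */
        /* not in the scale: regenerate */
    }
}
```

Representing the scale as a bit mask keeps the membership test to a single shift after the modulo-12 reduction, which mirrors the "Module 12 / In the scale" steps of the dataflow.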

Figure 5: Markov model of chord progression (states I, IV, and V; transition probabilities of 1/3 and 1/2).

Figure 6: Sieve dataflow (start → generate a note at random → modulo 12 → in the scale? Yes: output the note; No: generate again).

2.5. ZigBee Model. The ZigBee Alliance [14] is an association of companies working together to develop standards and products for reliable, cost-effective, low-power wireless networking [15]. ZigBee supports several network topologies, for example, mesh, star, and cluster tree, which allow ZigBee to be applied in many fields.

In this study, a mesh network was chosen. The point-to-point links of a mesh make fault isolation easy, and node-by-node transmission allows longer transfer distances, making the system more portable and less limited by range.
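To make the node-by-node idea concrete, the sketch below computes the minimum number of hops from the ZigBee master to every other node of a small mesh with a breadth-first search over an adjacency matrix. It only illustrates multihop relaying: real ZigBee mesh routing is carried out by the network layer of the ZigBee stack, and the node count, links, and function name here are hypothetical.

```c
#define MAX_NODES 8

/* Breadth-first search over an adjacency matrix (adj[u][v] != 0 means
   u and v are in radio range). Fills hops[] with the minimum hop count
   from src to each of the n nodes, or -1 if a node is unreachable. */
void mesh_hops(int n, int adj[MAX_NODES][MAX_NODES], int src, int hops[MAX_NODES])
{
    int queue[MAX_NODES];
    int head = 0, tail = 0;

    for (int i = 0; i < n; i++)
        hops[i] = -1;
    hops[src] = 0;
    queue[tail++] = src;

    while (head < tail) {
        int u = queue[head++];
        for (int v = 0; v < n; v++) {
            if (adj[u][v] && hops[v] < 0) {
                hops[v] = hops[u] + 1; /* reached through one more relay */
                queue[tail++] = v;     /* each node is enqueued once */
            }
        }
    }
}
```

In a chain master → A → B → C, node C is out of the master's direct range but is still reached in three hops, which is the sense in which relaying extends the transfer distance.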

2.6. Algorithmic Composition. Algorithmic composition uses various algorithms to compose music automatically. Completely random music can easily be generated with a random function; however, such music lacks musical rules and algorithms to control its progression. The software “MusicSculptor,” developed by Professor Phil Winsor, therefore controls music parameter progressions via probability distributions [16, 17], as shown in Figure 7.

Furthermore, Professor David Cope has produced notable work in algorithmic composition, using computer composition programs to generate music in specific styles with appropriate parameter settings, and has discussed many of the issues surrounding such programs, including artificial intelligence [18, 19].

3. Method

For music analysis and automated composition, we use Max/MSP to implement the generative music. The motion device is programmed in C, and the speed and direction of the motors can be controlled in real time.

Algorithm 1 shows part of the program; its function is to move the robot to another position.

The system flowchart is shown in Figure 8.

Figure 7: The pitch class and octave range distribution table in MusicSculptor.

3.1. Sentiment Analysis. Traditional algorithmic composition uses random or probability functions, which are not intuitive to general users. Therefore, this research provides a friendly interface for user composition. Following 2DER, users draw an emotion trajectory on the EM, based on the emotional quadrants defined in Figure 2, to obtain the corresponding arousal and valence values, which are mapped into the automated music composition (Max/MSP) and motion planning programs.
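Because the trajectory varies with time, the composition and motion programs need an arousal-valence pair at each moment. One simple option, assumed here rather than taken from the paper, is to store the drawn trajectory as time-stamped points and linearly interpolate between them. The type `EmSample`, the function `em_at`, and the use of seconds with 0–100 values are hypothetical.

```c
/* Hypothetical time-stamped EM point (t in seconds, values in 0..100). */
typedef struct {
    double t;
    double valence;
    double arousal;
} EmSample;

/* Linearly interpolate the trajectory at time t. Points must be sorted by
   strictly increasing time; before the first or after the last point the
   end value is held. */
EmSample em_at(const EmSample *path, int count, double t)
{
    if (t <= path[0].t)
        return path[0];
    for (int i = 1; i < count; i++) {
        if (t <= path[i].t) {
            const EmSample *a = &path[i - 1], *b = &path[i];
            double w = (t - a->t) / (b->t - a->t); /* 0 at a, 1 at b */
            EmSample s = { t,
                           a->valence + w * (b->valence - a->valence),
                           a->arousal + w * (b->arousal - a->arousal) };
            return s;
        }
    }
    return path[count - 1];
}
```

For a trajectory from (valence 10, arousal 10) at 0 s to (valence 100, arousal 90) at 10 s, sampling at 5 s gives valence 55 and arousal 50. Smoother curves (for example, splines) would be an equally valid design choice.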

3.2. Automated Composition. The automated composition draws on the variety of musical parameters studied and discussed above, which can be related directly to the cognitive EM. In the implementation, the following music parameters are used.

(i) Tonality. Tonality is divided into major and minor chords. The valence value controls the probability of major and minor chord progressions: a large valence value makes major chords more probable, whereas a small valence value makes minor chords more probable.

(ii) Chord Progression and the Main Melody. Chord progression is based on scale degrees, and cadences can be implemented as rules in a Markov chain that controls the chord progression probabilities. The major or minor scale is determined by the mode derived from the EM, and the Max/MSP program builds the main melody from chord tones with added passing tones.

(iii) Tempo. Under high arousal the tempo is faster, and under low arousal it is slower.

(iv) Accompaniment. Accompaniment parts use fixed music patterns.

(v) Progression Stability. Under high arousal, chords change more often; under low arousal, chords change less frequently and tend to remain on the tonic chord.
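Rules (i), (iii), and (v) can be summarized as a mapping from the EM values to a few control parameters. The sketch below assumes 0–100 inputs and linear mappings; the tempo range of 60–180 BPM, the range of 0.5–2 chord changes per measure, and all names are hypothetical choices, since the paper does not give numeric ranges.

```c
/* Hypothetical control parameters derived from one EM point. */
typedef struct {
    double major_probability;  /* chance of choosing a major chord      */
    double tempo_bpm;          /* beats per minute                      */
    double chords_per_measure; /* lower = chords change less often      */
} ComposeParams;

/* valence and arousal are assumed to lie in 0..100. */
ComposeParams compose_params(double valence, double arousal)
{
    ComposeParams p;

    /* (i) Tonality: higher valence makes major chords more likely. */
    p.major_probability = valence / 100.0;

    /* (iii) Tempo: higher arousal gives a faster tempo (60..180 BPM). */
    p.tempo_bpm = 60.0 + 120.0 * arousal / 100.0;

    /* (v) Progression stability: higher arousal changes chords more often
       (0.5..2 changes per measure); low values favor staying on the tonic. */
    p.chords_per_measure = 0.5 + 1.5 * arousal / 100.0;

    return p;
}
```

With the joy preset (valence 100, arousal 90), this yields a major-chord probability of 1.0, a tempo of 168 BPM, and about 1.85 chord changes per measure.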

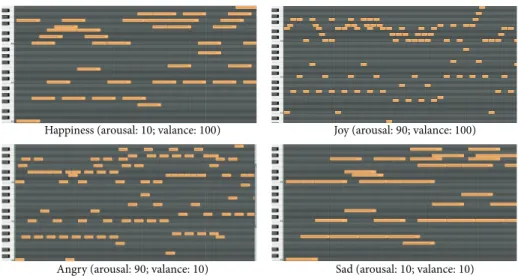

The real-time automated composition program is built in Max/MSP, and the generated music can be saved as a MIDI file so that its pitch distribution can be analyzed with a MIDI sequencer, as shown in Figure 9.

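```c
/***************************************************************/
/* Function that sends a Position Move Command to a wCK module  */
/* Input:  ServoID, SpeedLevel, Position                         */
/* Output: Current                                               */
/* SendOperCommand(), GetByte(), and TIME_OUT1 are assumed to be */
/* provided by the board's wCK communication routines (not shown)*/
/***************************************************************/
char PosSend(char ServoID, char SpeedLevel, char Position)
{
    char Current;

    /* Command byte: speed level in the upper 3 bits, servo ID in the lower 5 */
    SendOperCommand((SpeedLevel << 5) | ServoID, Position);
    GetByte(TIME_OUT1);           /* read and discard the first response byte */
    Current = GetByte(TIME_OUT1); /* the second response byte is returned */
    return Current;
}
```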

Algorithm 1: Part of program.
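The first argument of `SendOperCommand` in Algorithm 1 packs two fields into one byte: `(SpeedLevel << 5) | ServoID` places the speed level in the upper three bits and the servo ID in the lower five. The following sketch makes that packing and its inverse explicit with masks. The helper names are hypothetical, and the field widths are inferred only from the shift by 5; the valid ID and speed ranges should be checked against the wCK module manual.

```c
#include <stdint.h>

/* Pack a 3-bit speed level and a 5-bit servo ID into one command byte,
   mirroring (SpeedLevel << 5) | ServoID in Algorithm 1. */
uint8_t pack_pos_cmd(uint8_t speed_level, uint8_t servo_id)
{
    return (uint8_t)(((speed_level & 0x07u) << 5) | (servo_id & 0x1Fu));
}

/* Recover the two fields from a packed command byte. */
uint8_t cmd_speed_level(uint8_t cmd) { return (uint8_t)(cmd >> 5); }
uint8_t cmd_servo_id(uint8_t cmd)    { return (uint8_t)(cmd & 0x1Fu); }
```

For example, speed level 2 and servo ID 3 pack to 0x43. Masking each field before shifting prevents an out-of-range ID from silently corrupting the speed bits.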

As Figure 9 shows, the automated composition program produces many short notes under high arousal (joy and anger), whereas low arousal produces more long notes.

3.3. Motion Gesture Synthesis and Control. The robot's motion unit is a RoboBuilder (see Figure 10), a low-priced, full-featured humanoid research robot made in Korea. Control signals are sent to its motors for motion gesture synthesis and control.

To control the robotic motion with emotion data, the robot's movements must be defined in a table, following the Motion ID path in Figure 1. The C program maps each Motion ID to an action, as listed in Table 2.

The emotion-controlled robot motion is expressed with the corresponding parameters shown in Table 3.

To obtain the robot's motion in space, kinematics is the standard tool for relating the robot's joint space to Cartesian space. The static positioning problem of the robot can be solved via forward and backward (inverse) kinematics [20]. Kinematic analysis is essential for precisely integrating the robotic motion control with the music emotion. For instance, if a humanoid robot is used as the motion mechanism, its forward kinematics can be described as follows:

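$$
{}^{\mathrm{joint}}_{\mathrm{body}}T =
\begin{bmatrix}
r_{11} & r_{12} & r_{13} & p_x \\
r_{21} & r_{22} & r_{23} & p_y \\
r_{31} & r_{32} & r_{33} & p_z \\
0 & 0 & 0 & 1
\end{bmatrix}, \tag{1}
$$

where the rotation matrix and the translation vector are

$$
R_{3\times 3} =
\begin{bmatrix}
r_{11} & r_{12} & r_{13} \\
r_{21} & r_{22} & r_{23} \\
r_{31} & r_{32} & r_{33}
\end{bmatrix},
\qquad
\mathbf{p}_{3\times 1} =
\begin{bmatrix} p_x \\ p_y \\ p_z \end{bmatrix}. \tag{2}
$$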
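Equation (1) is typically applied by chaining one such transform per joint, multiplying them in order from the body frame outward. As a minimal sketch, the C code below assembles the 4 × 4 matrix of (1) from R and p as defined in (2) and multiplies two transforms; the type and function names and the single-link example are assumptions for illustration, not the RoboBuilder's actual joint model.

```c
/* 4x4 homogeneous transform as in Eq. (1). */
typedef struct { double m[4][4]; } Transform;

/* Assemble T from a 3x3 rotation R and a 3x1 translation p (Eq. (2)). */
Transform make_transform(double R[3][3], double p[3])
{
    Transform t;
    for (int i = 0; i < 3; i++) {
        for (int j = 0; j < 3; j++)
            t.m[i][j] = R[i][j];
        t.m[i][3] = p[i];   /* translation column */
        t.m[3][i] = 0.0;    /* bottom row 0 0 0 */
    }
    t.m[3][3] = 1.0;
    return t;
}

/* Chain two transforms: result = a * b (frame b expressed in frame a). */
Transform compose(Transform a, Transform b)
{
    Transform r;
    for (int i = 0; i < 4; i++)
        for (int j = 0; j < 4; j++) {
            r.m[i][j] = 0.0;
            for (int k = 0; k < 4; k++)
                r.m[i][j] += a.m[i][k] * b.m[k][j];
        }
    return r;
}
```

For a joint rotated 90 degrees about z followed by a 1-unit link along its x-axis, the composed transform places the link end at (0, 1, 0), that is, in the translation column p of the result.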

Figure 11 shows the control architecture of the robot.

Table 2: Robotic Motion ID and its motion definition.

| Motor position | Motion ID | Action |
|---|---|---|
| Base motor (around) | 1 | Turn right 90 degrees |
| | 2 | Turn right 45 degrees |
| | 3 | Origin 0 degrees |
| | 4 | Turn left 45 degrees |
| | 5 | Turn left 90 degrees |
| Base motor (before and after) | 21 | Backward 45 degrees |
| | 22 | Backward 23 degrees |
| | 23 | Origin 0 degrees |
| | 24 | Forward 23 degrees |
| | 25 | Forward 45 degrees |
| Top motor | 31 | Up 45 degrees |
| | 32 | Up 23 degrees |
| | 33 | Origin 0 degrees |
| | 34 | Down 23 degrees |
| | 35 | Down 45 degrees |
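On the C side of the motion controller, Table 2 can be encoded as a lookup from Motion ID to a signed target angle. In this sketch the sign convention (right, backward, and down negative; left, forward, and up positive) and the function name `motion_angle` are assumptions; the paper does not state how the angles are converted into servo positions.

```c
/* Signed target angle in degrees for a Table 2 Motion ID.
   Assumed signs: right/backward/down < 0, left/forward/up > 0.
   Sets *ok to 1 for a known ID, or to 0 (returning 0) otherwise. */
int motion_angle(int motion_id, int *ok)
{
    /* Entries follow IDs 1..5, 21..25, and 31..35 in table order. */
    static const int base_around[5] = { -90, -45, 0,  45,  90 };
    static const int base_tilt[5]   = { -45, -23, 0,  23,  45 };
    static const int top[5]         = {  45,  23, 0, -23, -45 };

    *ok = 1;
    if (motion_id >= 1 && motion_id <= 5)
        return base_around[motion_id - 1];
    if (motion_id >= 21 && motion_id <= 25)
        return base_tilt[motion_id - 21];
    if (motion_id >= 31 && motion_id <= 35)
        return top[motion_id - 31];

    *ok = 0;
    return 0;
}
```

Keeping the table as data rather than a long `switch` makes it straightforward to add new Motion ID groups for further motors.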

3.4. ZigBee Communication. Figure 12 shows the flowchart of the ZigBee communication. The ZigBee master is responsible for sending data from the server and receiving data from the ZigBee slaves. The server can transmit generated MIDI files and motion commands to the remote speaker and robot through ZigBee.
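The paper does not specify how the Music ID and Motion ID are framed on the serial link between the server and the ZigBee master. As a hypothetical example only, the sketch below packs one ID into a four-byte frame (start byte, message type, ID, and an XOR checksum) and validates it on the receiving side; the frame layout, constants, and names are all assumptions.

```c
#include <stddef.h>
#include <stdint.h>

#define FRAME_START   0xAAu /* hypothetical start-of-frame marker */
#define MSG_MUSIC_ID  0x01u /* payload is a Music ID  */
#define MSG_MOTION_ID 0x02u /* payload is a Motion ID */
#define FRAME_LEN     4

/* Build [start, type, id, checksum] with checksum = start ^ type ^ id. */
size_t build_frame(uint8_t type, uint8_t id, uint8_t out[FRAME_LEN])
{
    out[0] = FRAME_START;
    out[1] = type;
    out[2] = id;
    out[3] = (uint8_t)(out[0] ^ out[1] ^ out[2]);
    return FRAME_LEN;
}

/* Validate a received frame; on success store type and id and return 1. */
int parse_frame(const uint8_t in[FRAME_LEN], uint8_t *type, uint8_t *id)
{
    if (in[0] != FRAME_START || (uint8_t)(in[0] ^ in[1] ^ in[2]) != in[3])
        return 0;
    *type = in[1];
    *id = in[2];
    return 1;
}
```

A receiving slave would discard frames that fail the checksum; a real deployment would more likely rely on the framing and acknowledgment features of the ZigBee module's own serial interface.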

4. Experimental Result and Analysis

In the experiment, emotion data are input to the system, which transforms them into music features and Motion IDs. Each specified emotion generates music and drives the robot to perform an action. We recorded the results as videos for four emotions: joy, anger, sadness, and happiness. All videos are available on the internet at http://140.113.156.190/robot/.

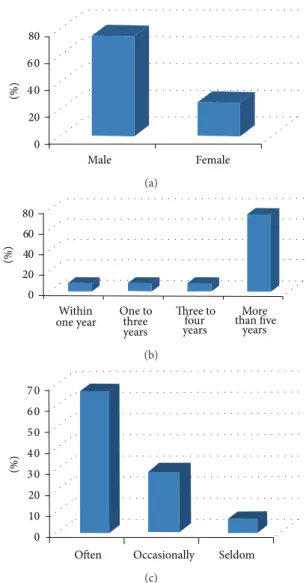

The SPSS software is used to obtain the statistical results (see Figure 13). Among the 12 subjects, 75% are male and 25% female. Most of them (75%) have been learning music for more than five years, and 66.7% of them often listen to music. This information shows that most of the subjects are familiar with music, which gives more credibility to the test.

Figure 8: System flowchart. (The user defines the emotion by valence and arousal; the program generates the music parameters (tempo, tonality, chord progression, melody, progression stability, and accompaniment) for automated composition, and the Motion ID, rotation angle, and rotation rate for motion gesture synthesis and control; the parameters are sent by wireless communication, and the system plays the composed music and the robot performs the corresponding action.)

Figure 9 (panel titles): Happiness (arousal: 10; valence: 100), Joy (arousal: 90; valence: 100), Angry (arousal: 90; valence: 10), and Sad (arousal: 10; valence: 10).

Table 3: Emotional states and their correspondent motion.

| Emotional state | Action |
|---|---|
| Happiness | Fast rotation around the neck, neck wiggle |
| Anger | Fast neck rotation, head tilting forward, up and down |
| Sorrow | Slow neck rotation, head tilting forward and down |
| Joy | Slow neck rotation, head tilting forward and down |

Motion ID column of Table 3: 1∼3, 1∼5, 4∼5, 1∼5, 22∼24, 24, 24, 21, 25, 25, 23, 31, 33∼35, 34, 32, 35.

Figure 10: RoboBuilder (with X, Y, and Z axes).

Figure 11: Music emotion driven robot control architecture (blocks: emotion data command, PC-based controller with motion controller and kinematics, Motion ID, servo amplifier with PWM drive, actuator, and robot platform).

Figure 12: ZigBee communication flowchart (server, RS-232 link, ZigBee master, and three ZigBee slaves).

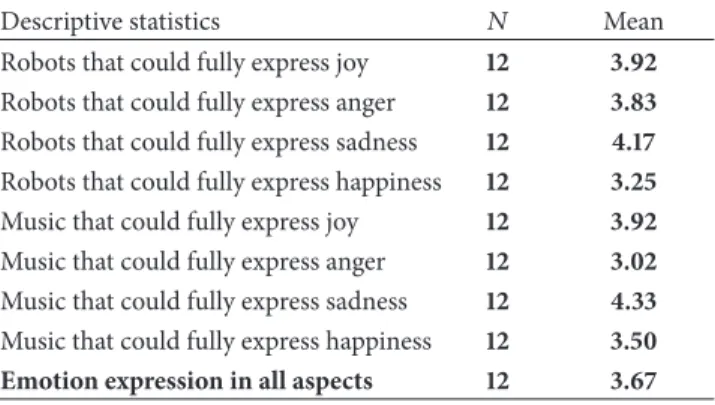

Table 4: Statistics results.

| Descriptive statistics | N | Mean |
|---|---|---|
| Robots that could fully express joy | 12 | 3.92 |
| Robots that could fully express anger | 12 | 3.83 |
| Robots that could fully express sadness | 12 | 4.17 |
| Robots that could fully express happiness | 12 | 3.25 |
| Music that could fully express joy | 12 | 3.92 |
| Music that could fully express anger | 12 | 3.02 |
| Music that could fully express sadness | 12 | 4.33 |
| Music that could fully express happiness | 12 | 3.50 |
| Emotion expression in all aspects | 12 | 3.67 |

A questionnaire survey was conducted to determine whether the generated music and the driven robot match the corresponding emotion, using a 5-point Likert scale (1: strongly disagree, 2: disagree, 3: neither agree nor disagree, 4: agree, and 5: strongly agree). The reliability coefficient (Cronbach's α) is 0.813, which indicates good reliability of the subjects' emotion discrimination (α > 0.6). According to Table 4, the robot and the music expressing sadness received the best feedback in this questionnaire (means of 4.17 and 4.33, respectively).
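For reference, Cronbach's α for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ_T²), where σᵢ² is the variance of item i across subjects and σ_T² is the variance of the subjects' total scores. The C sketch below computes it from a subjects-by-items score matrix; the fixed maximum sizes and the names are assumptions. Because every variance uses the same divisor, choosing n or n − 1 does not change α.

```c
#define MAX_SUBJECTS 64
#define MAX_ITEMS    16

/* Variance of n values (divisor n). */
static double variance(const double *x, int n)
{
    double mean = 0.0, ss = 0.0;
    for (int i = 0; i < n; i++)
        mean += x[i];
    mean /= n;
    for (int i = 0; i < n; i++)
        ss += (x[i] - mean) * (x[i] - mean);
    return ss / n;
}

/* Cronbach's alpha for scores[subject][item] with n subjects and k items.
   Requires k >= 2 and a nonzero variance of the total scores. */
double cronbach_alpha(double scores[MAX_SUBJECTS][MAX_ITEMS], int n, int k)
{
    double column[MAX_SUBJECTS], totals[MAX_SUBJECTS];
    double item_var_sum = 0.0;

    for (int s = 0; s < n; s++)
        totals[s] = 0.0;
    for (int j = 0; j < k; j++) {
        for (int s = 0; s < n; s++) {
            column[s] = scores[s][j];   /* one item across subjects */
            totals[s] += scores[s][j];  /* each subject's total score */
        }
        item_var_sum += variance(column, n);
    }
    return (double)k / (k - 1) * (1.0 - item_var_sum / variance(totals, n));
}
```

As a small check, three subjects answering two items with scores (1, 2), (2, 2), and (3, 3) give α = 6/7 ≈ 0.857, and two perfectly agreeing items give α = 1.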

Compared with conventional algorithmic composition by computer programs, the proposed system can be applied in more practical and specific ways: it performs automated composition with appropriate auxiliary motion gestures and an emotion-based graphical interface instead of requiring a large number of music parameters as input, making music composition much easier and more interesting.

Figure 13: Subjects' data: (a) gender (male, female); (b) length of music learning (within one year, one to three years, three to four years, more than five years); (c) frequency of listening to music (often, occasionally, seldom). All values are in percent.

5. Conclusions

This study integrates automated music composition with a motion device. Several important features and advantages are discussed and used to synthesize both music and motion from the emotion data that the user inputs as EM x-y values. The EM interface can be friendlier than traditional algorithmic composition interfaces. Findings from the music psychology literature are used to perform the automated composition, and because the system takes emotion as its input parameter, users can compose music easily through the EM without having to learn complicated music theory.

This research can be developed further to integrate music with brain-wave data, so that the computer-generated music properly reflects the user's current mood or emotion.

This system also performs the emotion-based automated composition with the integration of a small robot, according to the user’s mood selection.

Wireless communication makes the system more portable and reliable. Both the wireless communication and the automated music composition can be brought to the commercial market in the future, applying this research to multimedia edutainment with music technology.

Acknowledgment

The authors are appreciative of the support from the National Science Council Projects of Taiwan: NSC 101-2410-H-155-033-MY3 and NSC 101-2627-E-155-001-MY3.

References

[1] T. Winkler, Composing Interactive Music: Techniques and Ideas Using MAX, MIT Press, Cambridge, Mass, USA, 1998.

[2] “ROBOBuilder e-Manual,” 2013, http://www.robobuilder.net/en/archives/enEmanual/list.php.

[3] I. F. Akyildiz, W. Su, Y. Sankarasubramaniam, and E. Cayirci, “Wireless sensor networks: a survey,” Computer Networks, vol. 38, no. 4, pp. 393–422, 2002.

[4] C. F. Huang, “The study of the automated music composition for games,” in Proceedings of the Digital Game-Based Learning Conference, pp. 34–41, Hong Kong, China, December 2009.

[5] P. Gomez and B. Danuser, “Affective and physiological responses to environmental noises and music,” International Journal of Psychophysiology, vol. 53, no. 2, pp. 91–103, 2004.

[6] M. M. Bradley and P. J. Lang, “Measuring emotion: the self-assessment manikin and the semantic differential,” Journal of Behavior Therapy and Experimental Psychiatry, vol. 25, no. 1, pp. 49–59, 1994.

[7] J. Wagner, J. Kim, and E. André, “From physiological signals to emotions: implementing and comparing selected methods for feature extraction and classification,” in Proceedings of the IEEE International Conference on Multimedia and Expo (ICME 2005), pp. 940–943, Amsterdam, The Netherlands, July 2005.

[8] S. Vieillard, I. Peretz, N. Gosselin, S. Khalfa, L. Gagnon, and B. Bouchard, “Happy, sad, scary and peaceful musical excerpts for research on emotions,” Cognition & Emotion, vol. 22, no. 4, pp. 720–752, 2008.

[9] A. Gabrielsson and P. N. Juslin, “Emotional expression in music,” in Handbook of Affective Sciences, Oxford University Press, New York, NY, USA, 2003.

[10] A. Gabrielsson and E. Lindström, “The influence of musical structure on emotional expression,” in Music and Emotion: Theory and Research, 2001.

[11] P. Gomez and B. Danuser, “Relationships between musical structure and psychophysiological measures of emotion,” Emotion, vol. 7, no. 2, pp. 377–387, 2007.

[12] L. R. Rabiner and B. H. Juang, “An introduction to hidden Markov models,” IEEE ASSP Magazine, vol. 3, no. 1, pp. 4–16, 1986.

[13] J. N. Straus, Introduction to Post-Tonal Theory, Prentice Hall, Englewood Cliffs, NJ, USA, 1990.

[14] ZigBee Alliance, ZigBee Specifications, version 1.0, 2005.

[15] J. N. Al-Karaki and A. E. Kamal, “Routing techniques in wireless sensor networks: a survey,” IEEE Wireless Communications, vol. 11, no. 6, pp. 6–27, 2004.

[16] J. Durate, S. C. Hsiao, C. F. Huang, and P. Winsor, “The applications of sieve theory in algorithmic composition using MAX/MSP and BASIC,” in Proceedings of the 2nd International Workshop for Computer Music and Audio Technology Conference (WOCMAT ’06), pp. 96–99, Taipei, Taiwan, 2006.

[17] C. F. Huang and E. J. Lin, “An emotion-based method to perform algorithmic composition,” in Proceedings of the 3rd International Conference on Music & Emotion (ICME3), Jyväskylä, Finland, 11th–15th June 2013, G. Luck and O. Brabant, Eds., University of Jyväskylä, Department of Music, 2013.

[18] D. Cope, Virtual Music: Computer Synthesis of Musical Style, MIT Press, Cambridge, Mass, USA, 2004.

[19] D. Cope, The Algorithmic Composer, vol. 16, AR Editions Inc., 2000.

[20] R. N. Jazar, Theory of Applied Robotics: Kinematics, Dynamics,
