Genetic-Based Reinforcement Learning for Fuzzy Logic Control Systems

Kuo-Tsai Lee', Kuang-Tsang Jean',* and Yung-Yaw Chen'

'Correspondence address: Lab. 202, Department of Electrical Engineering, National Taiwan University, Taipei, Taiwan, R.O.C.
'Telecommunication Laboratories, Ministry of Transportation and Communications, Taiwan, R.O.C.

ABSTRACT

This paper proposes genetic-based reinforcement learning for fuzzy logic control systems (GR-FLCS) to solve reinforcement learning problems. The proposed GR-FLCS is constructed by integrating a real-coded genetic algorithm, with a time accumulator as the fitness evaluator, a success criterion, a fuzzy logic controller (FLC), and a parameter adapter for the FLC. This simple but powerful architecture completely removes the restrictions usually met in reinforcement learning for FLCs: that the FLC must be implemented by a neuronlike network; that the membership functions in the FLC must take a particular shape, e.g., bell-shaped; that the fuzzy operators must be modified; or that only the consequent part of the rule base in the FLC can be learned. Finally, the applicability and efficiency of GR-FLCS are demonstrated by a simulation example of the cart-pole balancing problem.

1. INTRODUCTION

Starting with the self-organizing control (SOC) techniques of Procyk and Mamdani, much effort has been devoted to developing fuzzy logic controllers that can learn from experience. Some of this work addresses reinforcement learning problems, in which, in contrast to supervised learning, a fuzzy logic controller must be learned even when only delayed and weak information, such as a binary failure signal, is available. On the basis of Barto, Sutton, and Anderson's neuronlike adaptive elements, C. C. Lee [1] proposed a paradigm in which the individual rules engaged in the problem-solving process are treated like the boxes in the BOXES system, to be blamed or rewarded. All possible rules are therefore listed, and only their consequent parts are learned. Berenji [2] and C. T. Lin [3] employed the adaptive heuristic critic (AHC) algorithm, introduced by Sutton, in their fuzzy structures. To do this, Berenji used one network-based fuzzy logic controller in place of the action network, and C. T. Lin used two, one as the action network and the other as the evaluation network.

In the last few years, research devoted to search and optimization has grown significantly. Genetic algorithms (GAs), developed by Holland, his colleagues, and his students, are increasingly valued in this field. Based on the mechanics of natural selection and natural genetics, GAs have been shown, partly by theory and partly by experiment, to be superior to hill-climbing methods on multimodal functions and on functions without available derivatives, and to random walk in efficiency and efficacy [4]. Recently, GAs have been used successfully in various areas. Odetayo and McGregor [5] first introduced GAs to solve reinforcement learning problems. In their algorithm, the state space is partitioned into non-overlapping regions, as in the BOXES system. Each allele value on a string recommends a left push or a right push if the system state falls into the corresponding box. Candidate control rules continue to evolve until an eligible control rule is found. Whitley et al. [6] employed their real-coded GA, GENITOR, as the evaluation network in AHC, whereas the original action network is preserved. Each allele value in a string is a weight in the action network.

In this paper, a simple but powerful architecture, genetic-based reinforcement learning for fuzzy logic control systems (GR-FLCS), is proposed. With the assistance of GAs, GR-FLCS is able to solve reinforcement learning problems by adapting the FLC without any of the following restrictions: the FLC must be implemented by a neuronlike network; the membership functions in the FLC must take a particular shape, e.g., bell-shaped; the fuzzy operators must be modified; or only the consequent part of the rule base in the FLC can be learned.

The organization of the paper is as follows. The architecture of GR-FLCS is briefly introduced in Section 2. The cart-pole balancing example is demonstrated in Section 3. Finally, the conclusion is given in the last section.

2. OUR PARADIGM: GR-FLCS

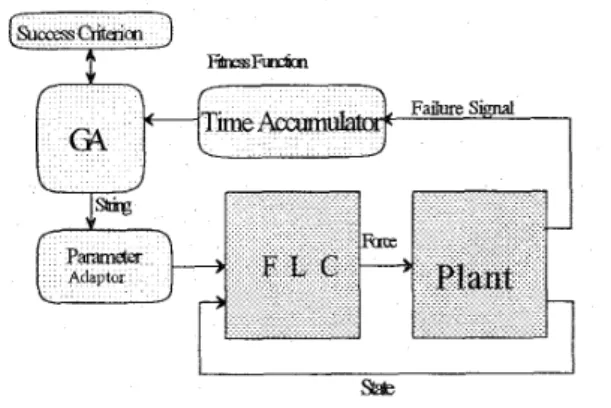

The architecture of GR-FLCS is shown in Fig. 1. The proposed GR-FLCS is constructed by integrating a real-coded genetic algorithm, with a time accumulator as the fitness evaluator, a success criterion, a fuzzy logic controller (FLC), and a parameter adapter for the FLC.

Fig. 1 The architecture of the GR-FLCS.

In our paradigm, the fitness evaluator takes the form of a time accumulator, which counts the time steps before the system state falls into the failure region, as in Whitley's method [6]. The parameters, consisting of the scaling factors and the anchoring points of the membership functions in the FLC, are concatenated into a real-valued string in the environment of a real-coded genetic algorithm, the variable-based genetic algorithm (VGA) [7]. These strings are then forwarded to the parameter adapter to modify the parameters in the fuzzy logic controller. The VGA reinforces the good candidate solutions, i.e., the strings, on the basis of their performance as measured by the time accumulator. A new generation is produced through the crossover and mutation operators. The search continues until the condition defined in the success criterion is met.
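The time accumulator described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function and argument names (`time_accumulator`, `controller`, `step`, `has_failed`) are hypothetical, and the one-dimensional toy system in the usage lines is an assumption made only to keep the example self-contained.

```python
from typing import Callable, Sequence

State = Sequence[float]


def time_accumulator(
    controller: Callable[[State], float],
    step: Callable[[State, float], State],
    has_failed: Callable[[State], bool],
    initial_state: State,
    max_steps: int,
) -> int:
    """Count the time steps survived before the state enters the failure region."""
    state = initial_state
    for t in range(max_steps):
        if has_failed(state):
            return t
        state = step(state, controller(state))
    return max_steps


# Toy system (an assumption for illustration): x drifts by 0.125 per step,
# the control input u shifts it by 0.25 * u, and failure means |x| >= 1.
def toy_step(state, u):
    return (state[0] + 0.25 * u + 0.125,)


def toy_failed(state):
    return abs(state[0]) >= 1.0


def idle(state):
    return 0.0  # never pushes back against the drift


def corrective(state):
    return -0.5  # exactly cancels the drift


fitness_idle = time_accumulator(idle, toy_step, toy_failed, (0.0,), 1000)
fitness_corrective = time_accumulator(corrective, toy_step, toy_failed, (0.0,), 1000)
```

In GR-FLCS the `controller` would be the FLC configured from one string by the parameter adapter, and the returned count would be that string's fitness.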

The initial conditions are set randomly for each string of a population, which makes sense for reinforcement learning problems. In this situation, however, a better fuzzy logic controller that receives a poor starting condition will be ranked lower than a worse one that receives a good starting condition, which makes our fitness function very "noisy". Our strategy is to keep the population size small to lessen the influence of this disturbance [6] [8]. A further problem arises. The old success criterion in reinforcement learning, under which learning is considered successful if the controller is able to maintain the system for a given number of time steps, is no longer appropriate: a bad fuzzy logic controller that cannot deal with most starting conditions may still meet the criterion from a fairly good starting condition. Therefore, it is necessary to set up a new success criterion.

Our policy is that learning is considered successful and is stopped only if some candidate solution can maintain the system for more than the threshold number of time steps over a given number of consecutive generations. Thus, when a fuzzy logic controller meets the old criterion, it is not hastily accepted as the one we are searching for; instead, it is challenged by other initial conditions. This strategy is implemented through the elitism in the VGA. The best candidate solution is preserved and takes the first position in the new generation. If it is the eligible solution, it will still take the first position over the following consecutive generations; if not, some other candidate solution will replace it. Meanwhile, the whole population keeps evolving through the operators of the VGA. This simple policy lets the fuzzy logic controller visit more of the state space and find a solution to the problem over all possible states.

3. THE CART-POLE BALANCING PROBLEM

The cart-pole system, illustrated in Fig. 2, was simulated by the Euler method with a time step of 20 ms, using the following equations:

$$\ddot{\theta} = \frac{g\sin\theta + \cos\theta\left[\dfrac{-f - m_p l\,\dot{\theta}^2\sin\theta + \mu_c\,\mathrm{sgn}(\dot{x})}{m_c + m_p}\right] - \dfrac{\mu_p\dot{\theta}}{m_p l}}{l\left[\dfrac{4}{3} - \dfrac{m_p\cos^2\theta}{m_c + m_p}\right]}$$

$$\ddot{x} = \frac{f + m_p l\left[\dot{\theta}^2\sin\theta - \ddot{\theta}\cos\theta\right] - \mu_c\,\mathrm{sgn}(\dot{x})}{m_c + m_p}$$

where $x$ is the horizontal position of the cart, $\dot{x}$ is the velocity of the cart, $\theta$ is the angle of the pole with respect to the vertical line, $\dot{\theta}$ is the angular velocity of the pole, and $f$ is the force applied to the cart. The parameter values used in our simulation, and their meanings, are: mass of the cart $m_c = 1$ kg; mass of the pole $m_p = 0.1$ kg; half length of the pole $l = 0.5$ m; gravitational acceleration $g = 9.8$ m/s$^2$; coefficient of friction of the cart on the track $\mu_c = 0.0005$; coefficient of friction of the pole on the cart $\mu_p = 0.000002$.

Fig. 2 The cart-pole system.
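One Euler step of the simulation can be sketched as below. This is an illustrative sketch that assumes the widely used cart-pole equations of motion from the reinforcement learning literature, together with the parameter values and the 20 ms time step quoted in the text. The function name `euler_step`, the state ordering (pole angle, angular velocity, cart position, cart velocity), and the use of radians are assumptions.

```python
import math

# Parameter values quoted in the text
G = 9.8          # gravitational acceleration, m/s^2
M_CART = 1.0     # mass of the cart, kg
M_POLE = 0.1     # mass of the pole, kg
HALF_LEN = 0.5   # half length of the pole, m
MU_C = 0.0005    # coefficient of friction of cart on track
MU_P = 0.000002  # coefficient of friction of pole on cart
DT = 0.02        # Euler time step, s


def sgn(v):
    """Sign of v as -1, 0, or 1."""
    return (v > 0) - (v < 0)


def euler_step(state, force):
    """Advance (theta, theta_dot, x, x_dot) by one time step; theta in radians."""
    theta, theta_dot, x, x_dot = state
    total = M_CART + M_POLE
    sin_t, cos_t = math.sin(theta), math.cos(theta)
    temp = (-force - M_POLE * HALF_LEN * theta_dot**2 * sin_t
            + MU_C * sgn(x_dot)) / total
    theta_acc = (G * sin_t + cos_t * temp
                 - MU_P * theta_dot / (M_POLE * HALF_LEN)) / (
        HALF_LEN * (4.0 / 3.0 - M_POLE * cos_t**2 / total)
    )
    x_acc = (force
             + M_POLE * HALF_LEN * (theta_dot**2 * sin_t - theta_acc * cos_t)
             - MU_C * sgn(x_dot)) / total
    # Explicit Euler: positions use the old velocities, velocities use the accelerations.
    return (theta + DT * theta_dot, theta_dot + DT * theta_acc,
            x + DT * x_dot, x_dot + DT * x_acc)


# A pole tilted 5 degrees with no applied force starts to fall further.
next_state = euler_step((math.radians(5.0), 0.0, 0.0, 0.0), 0.0)
```

Calling `euler_step` repeatedly with the force chosen by the controller yields the trajectory that the fitness evaluator scores.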

The primary control tasks are to keep the pole vertically balanced and to keep the cart within the rail track boundaries. A failure happens whenever 1x1 2 2.4m or 101 2 15". The force applied to the cart-pole system can be any value between -20 newtons to +20 newtons. The initial state for each candidate solution is random in the region of 1x1 I 2 . 0 m and

le1

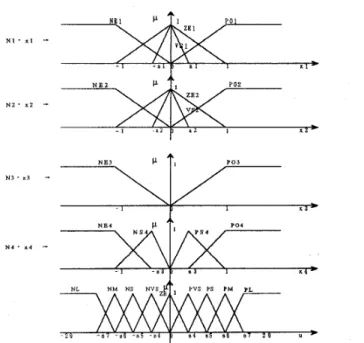

I 12". Whenever some string is able to maintain the system over 10000 time steps and is preserved over 8 consequent generations, it is considered a eligible solution and the learning is stoppedThirteen rules [2] are assumed to be available as in Table 1, where x, is 8, x, is

6

, ~3 is x, and x, is x . We also assumed that the anchoring points of a membership function are the peak points of its two neighboring ones, which makes the inference output curve smooth [9], and the labels are symmetric to the y-axis. The inference method we used is the so-called weighted average method. Therefore, a string in the environment of the VGAcomposes of a,-% , and N1-N4 as in the fig. 3. We set the base mutation rate M, ,variance, and population size n, to be 0.5, 0.8, and 10, respectively. The small population helps to improve the performance of genetic algorithms in noisy environment , which has be mentioned. An eligible solution came out in the 67th generation. Fig.

4

shows that this solution can handle initial conditions randomly generated.Here we made some assumptions to make the whole demonstration easier. Actually, we can learn the FLC well without these assumptions at the price of longer simulation time.
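The stopping rule used in this example (10000 time steps, 8 consecutive generations) can be sketched as a small tracker that is updated once per generation with the elite string and its fitness. This is an illustration under assumptions: the class name `SuccessCriterion` and its interface are hypothetical, strings are represented as tuples of floats, and a fitness equal to the threshold is treated as meeting it.

```python
class SuccessCriterion:
    """Stop only when the same elite meets the threshold for several generations in a row."""

    def __init__(self, threshold_steps=10000, required_generations=8):
        self.threshold_steps = threshold_steps
        self.required_generations = required_generations
        self.elite = None
        self.streak = 0

    def update(self, elite, elite_fitness):
        """Record one generation; return True when learning should stop."""
        if elite_fitness < self.threshold_steps:
            # The elite failed under its new random initial condition.
            self.elite, self.streak = None, 0
        elif elite == self.elite:
            # The same string survived another challenge.
            self.streak += 1
        else:
            # A different string now holds the first position.
            self.elite, self.streak = elite, 1
        return self.streak >= self.required_generations


criterion = SuccessCriterion()
string_a = (0.3, 1.2, 0.7)  # hypothetical real-valued string
history = [criterion.update(string_a, 10000) for _ in range(8)]
```

Because every generation re-evaluates the elite from a fresh random initial condition, a string that only passed from a lucky start resets the streak as soon as it fails.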

4. CONCLUSIONS

By integrating an FLC with GAs, genetic-based reinforcement learning for fuzzy logic control systems (GR-FLCS) gives the FLC more freedom when prior knowledge from experts or experienced operators is adopted in a system. Furthermore, it has the merits of both the FLC and GAs, which makes the learning easy yet powerful.

Rule 1: If x1 is PO1 and x2 is PO2 then U is PL

Rule 2: If x1 is PO1 and x2 is ZE2 then U is PM

Rule 3: If x1 is PO1 and x2 is NE2 then U is ZE

Rule 4: If x1 is ZE1 and x2 is PO2 then U is PS

Rule 5: If x1 is ZE1 and x2 is ZE2 then U is ZE

Rule 6: If x1 is ZE1 and x2 is NE2 then U is NS

Rule 7: If x1 is NE1 and x2 is PO2 then U is ZE

Rule 8: If x1 is NE1 and x2 is ZE2 then U is NM

Rule 9: If x1 is NE1 and x2 is NE2 then U is NL

Rule 10: If x1 is VS1 and x2 is VS2 and x3 is PO3 and x4 is PO4 then U is PS

Rule 11: If x1 is VS1 and x2 is VS2 and x3 is PO3 and x4 is PS4 then U is PVS

Rule 12: If x1 is VS1 and x2 is VS2 and x3 is NE3 and x4 is NE4 then U is NS

Rule 13: If x1 is VS1 and x2 is VS2 and x3 is NE3 and x4 is NS4 then U is …

Table 1 The thirteen rules for balancing cart-pole system.
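The two inference ingredients stated in Section 3 are that each membership function is anchored at the peaks of its neighbors and that the crisp output is a weighted average. They can be sketched as follows. This is an illustration only: it assumes triangular labels (the actual shapes are those defined in Fig. 3), and the peak positions and consequent centers in the usage lines are hypothetical values, not the learned parameters.

```python
def triangle(x, left, peak, right):
    """Triangular membership whose feet are the peaks of the two neighboring labels."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def weighted_average(strengths, centers):
    """Crisp output: rule consequent centers averaged by rule firing strength."""
    total = sum(strengths)
    if total == 0.0:
        return 0.0  # no rule fired
    return sum(w * c for w, c in zip(strengths, centers)) / total


# Hypothetical peaks for three labels on one input: NE at -1, ZE at 0, PO at 1.
x1 = 0.25
mu_ze = triangle(x1, -1.0, 0.0, 1.0)  # feet at the NE and PO peaks
mu_po = triangle(x1, 0.0, 1.0, 2.0)   # left foot at the ZE peak

# Two single-input rules with hypothetical consequent centers 0 and 10.
force = weighted_average([mu_ze, mu_po], [0.0, 10.0])
```

With this anchoring, the memberships of adjacent labels sum to one between their peaks, so the output varies smoothly as the input moves from one label to the next.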

Fig. 3 The definitions of the labels in Table 1. N1-N4 and a1-a7 are concatenated to form a string in the VGA.


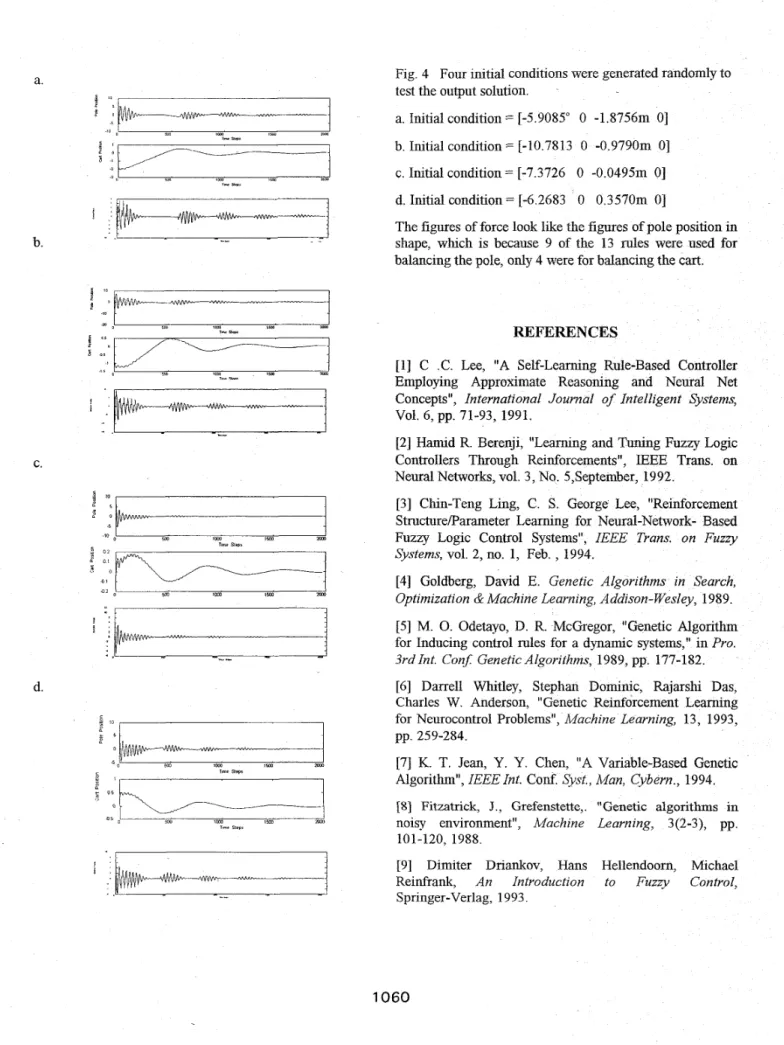

Fig. 4 Four initial conditions were generated randomly to test the output solution.

a. Initial condition = [-5.9085° 0 -1.8756 m 0]

b. Initial condition = [-10.7813° 0 -0.9790 m 0]

c. Initial condition = [-7.3726° 0 -0.0495 m 0]

d. Initial condition = [-6.2683° 0 0.3570 m 0]

The force curves resemble the pole-position curves in shape because 9 of the 13 rules are used for balancing the pole and only 4 for balancing the cart.

REFERENCES

[1] C. C. Lee, "A Self-Learning Rule-Based Controller Employing Approximate Reasoning and Neural Net Concepts", International Journal of Intelligent Systems, vol. 6, pp. 71-93, 1991.

[2] Hamid R. Berenji, "Learning and Tuning Fuzzy Logic Controllers Through Reinforcements", IEEE Trans. on Neural Networks, vol. 3, no. 5, September 1992.

[3] Chin-Teng Lin, C. S. George Lee, "Reinforcement Structure/Parameter Learning for Neural-Network-Based Fuzzy Logic Control Systems", IEEE Trans. on Fuzzy Systems, vol. 2, no. 1, Feb. 1994.

[4] David E. Goldberg, Genetic Algorithms in Search, Optimization & Machine Learning, Addison-Wesley, 1989.

[5] M. O. Odetayo, D. R. McGregor, "Genetic Algorithm for Inducing Control Rules for a Dynamic System", in Proc. 3rd Int. Conf. Genetic Algorithms, 1989, pp. 177-182.

[6] Darrell Whitley, Stephan Dominic, Rajarshi Das, Charles W. Anderson, "Genetic Reinforcement Learning for Neurocontrol Problems", Machine Learning, vol. 13, pp. 259-284, 1993.

[7] K. T. Jean, Y. Y. Chen, "A Variable-Based Genetic Algorithm", IEEE Int. Conf. Syst., Man, Cybern., 1994.

[8] J. M. Fitzpatrick, J. J. Grefenstette, "Genetic Algorithms in Noisy Environments", Machine Learning, 3(2-3), pp. 101-120, 1988.

[9] Dimiter Driankov, Hans Hellendoorn, Michael Reinfrank, An Introduction to Fuzzy Control, Springer-Verlag, 1993.