
IEEE JOURNAL ON SELECTED AREAS IN COMMUNICATIONS, VOL. 14, NO. 1, JANUARY 1996

MultiSync: A Synchronization Model for Multimedia Systems

Herng-Yow Chen and Ja-Ling Wu, Member, IEEE

Abstract-Synchronization among various media sources is one of the most important issues in multimedia communications and various audio/video (A/V) applications. For continuous playback (such as lip synchronization) under a time-sharing multiprocessing operating system (such as UNIX), the synchronization quality of traditional synchronization mechanisms employed on single processes may vary according to the workload of the system. When the system is overloaded, synchronization usually fails and, even worse, results in two fatal defects in human perception: audio discontinuity (audio break) and out-of-synchronization (synchronization anomaly). To overcome these problems, this paper proposes a novel media synchronization model employed on multiple processes (or threads) in a multiprocessing environment. The problem of asynchronism due to system overload is solved by assigning higher priority to more important media and adopting a delay-or-drop policy to treat the lower priority ones. Experimental results are presented to show the effectiveness of the proposed model and of the implementation mechanism under a UNIX, X-Windows environment. On the basis of the proposed model, a continuous media playback (CMP) module was implemented; it acts as the key component of several popular multimedia systems such as a Multimedia Authoring System, Multimedia E-mail System, Multimedia Bulletin Board System (BBS), and Video-on-Demand (VoD) System.

I. INTRODUCTION

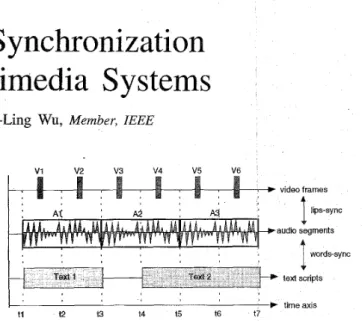

THE MULTIMEDIA synchronization (or orchestration) problem, the task of coordinating various time-dependent media streams, always arises when a variety of media with different temporal characteristics are brought together and integrated into a multimedia system (such as remote learning [1], video conferencing systems [3], [4], collaborative work systems [5], [6], and information-on-demand systems [7], [8]). When media streams are presented or played in real time from different sources, located either locally or in a distributed setting, maintaining the temporal relationships among the streams can be difficult but is essential for smooth presentation playback. For instance, in presenting a music program in a digital Karaoke system, not only should audio segments and video frames be played out with lip synchronization between them, but the text media (such as lyrics or voice-over) should also be displayed on the screen with word synchronization to the audio. A timeline representation of this media synchronization requirement is illustrated in Fig. 1.

Manuscript received August 7, 1994; revised March 5, 1995. This work was supported by the National Science Council of the Republic of China under Contract NSC 83-0425-E-002-140.

The authors are with the Communication & Multimedia Laboratory, Department of Computer Science and Information Engineering, National Taiwan University, Taipei, Taiwan, ROC.

(Figure: video frames V1 to V6 and text scripts aligned along a common time axis, t1 to t7.)

Fig. 1. An example of timeline representation of media synchronization (for the case of a music program in a digital Karaoke system).

In general, there are two basic types of synchronization within a multimedia framework [26], [30]: intra-media (serial) synchronization and inter-media (parallel) synchronization. Intra-media synchronization refers to the continuous playback of each medium individually. For instance, a sequence of media units such as audio segments should be played out continuously and smoothly to guarantee intra-media synchronization. Inter-media synchronization, on the other hand, determines the relative scheduling among different media streams, as in lip synchronization. From the time-dependency point of view, synchronization precision is another important issue in system implementation. Therefore, media are often classified as either continuous or discrete [16], [27]. Continuous media usually require fine-grain synchronization, such as lip synchronization between audio and video, whereas discrete media usually allow coarser synchronization precision, such as word synchronization with a piece of audio and video frames in a multimedia presentation system. In nontrivial multimedia applications such as Karaoke systems, each media stream should support intra-media synchronization individually as well as inter-media synchronization to provide a graceful presentation. Moreover, different synchronization precisions should be supported according to the characteristics of human perception. D. C. A. Bulterman [30] described four data location models with different synchronization complexities in possible multimedia applications: local single source, local multiple sources, distributed single source, and distributed multiple sources. For the first two cases, synchronization is adequately supported by local applications or stand-alone systems, and no synchronization support is needed in the network.
However, for the last two cases, which are distributed situations, more complicated synchronization mechanisms must be supported not only by the application layer but also by the network layer to eliminate the variations and delays incurred during the transmission of multiple media streams [24]. In other words, when communication is involved in multimedia systems, the synchronization function should be provided by both the network transport layer and the application layer. Recall the basic concept of network layering: if the lower layer does not provide some services but the upper layer does, then the application can always enjoy these services. Besides, in a multimedia communication application such as a video conferencing system, it is inadequate to provide synchronization service only in the application layer, because the application then has to handle all synchronization controls, which introduces intolerable overhead to the application itself. One can treat the synchronization service provided by the network (transport protocol) as a common service, like a synchronization-guaranteed, connection-oriented service on end-to-end connections: applications at the connected sites simplify their sending/receiving functions by relying on the synchronization provided by the transport protocol. Thus, the design of applications can be simplified. Consequently, a great deal of research has been dedicated to providing low-latency, low-jitter, and low-packet-error synchronization models; likewise, real-time protocols in the network transport layer have been developed to fulfill the requirements that multimedia communication places on network services [14]-[19]. On the other hand, synchronization provided by the application layer is necessary to support more flexible synchronization functions, e.g., in multimedia document presentation systems in which multiple sources of data must be synchronized in serial or in parallel [35]. This paper assumes that common synchronization facilities are supported by the network transport protocol, and focuses on the synchronization model and related techniques used in the application layer.

Publisher Item Identifier S 0733-8716(96)00230-2.

Some previous research on synchronization models and related techniques is briefly reviewed as follows. The concept of “multimedia objects” as components of an object-based model for a multimedia system is presented in [27], and the human perception of media synchronization is discussed in [25]. L. Ehley et al. [28] present a classification of multimedia synchronization models (or techniques) and evaluate their performance in controlling jitter among media streams. T. Wahl et al. [29] classify and evaluate a selection of the most common time models, i.e., representations of temporal relations among different media streams. Several papers discuss the synchronization of multimedia presentations and tools or specifications for media synchronization [34], [35]. T. D. C. Little et al. proposed a formal specification for synchronization and for the retrieval and presentation of multimedia elements [22], and presented an intermedia skew control system for skew correction in a distributed multimedia system [23].

Currently, many modern computers (workstations or personal computers) are equipped with specialized hardware capable of handling multimedia data streams. Therefore, multimedia systems supporting continuous media, such as VoD and Multimedia E-mail, are becoming very popular [12], [13]. However, most workstation operating systems adopt time-sharing, multiprocessing policies to provide fair use and maximum utilization of system resources. Hence, multimedia synchronization problems arise because such operating systems do not provide real-time services, while multimedia synchronization is essentially subject to real-time constraints. When a synchronization-demanding application runs in a non-real-time, multiprocessing environment, its synchronization performance depends heavily on the workload of the system. In the worst case, synchronization may fail completely if no resynchronization (synchronization recovery) mechanism is provided. D. C. A. Bulterman [30] discussed the role of conventional UNIX in supporting multimedia applications and concluded that UNIX is useful for many applications but may be inadequate for high-performance multimedia computing. There are two approaches to this problem. One is to rebuild/modify the operating system kernel so that multimedia primitive functions (such as real-time scheduling and synchronization) are included in the kernel. For instance, H. Tokuda and T. Nakajima [31], [32] and D. L. Black [33] have examined operating system support for continuous media applications through a case study using Real-Time Mach. Alternatively, as is done in this paper, one can develop a feasible approach based on existing popular environments [37]-[39].

This paper develops a robust synchronization model that is suitable for multitasking-like environments and insensitive to the workload of the system. In addition, this model can be applied to both real-time and non-real-time operating systems. For the case without real-time support, a synchronization model using multiple processes, together with some programming techniques (such as changing process scheduling priority), is presented to solve or alleviate the audio-break and out-of-synchronization defects that may occur in the traditional synchronization model, where only a single process handles synchronization. A set of experimental results demonstrates the practical value of the proposed model under heavy load. These results also show that the proposed synchronization model is superior to traditional ones [37], [38], not only in synchronization performance but also in ease of development and debugging.

The remainder of this paper is organized as follows. First, we describe basic synchronization principles and traditional related mechanisms, and discuss the problems incurred when the system is designed to provide interactive facilities under window-based systems (such as X-Windows). Then, the proposed multiprocess (multithread) synchronization model, namely MultiSync, is presented and discussed in detail. After that, the superiority of the proposed model is verified. Finally, our conclusions and discussions are given.

11. THE BASIC SYNCHRONIZATION PRINCIPLES AND

DEFECTS OF THE TRADITIONAL SYNCHRONIZATION MODELS A significant requirement for supporting time-dependent media playback in a multimedia system is the identification

240 IEEE JOOURNAL ON SELECTED AREAS M COMMUNICATIONS, VOL. 14, NO. 1, JANUARY 1996 orage q a c e time axis audio segment phybackpath . ~ ;

.

video frame playback pathtime interval (Ti) =size of audio segment (ATI) / samplinLrate Fig. 2.

system.

Audiohide0 synchronization principle for a digital AN playback

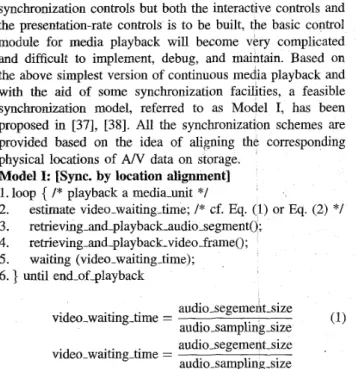

media-unit

Fig. 3. Concept of playbackmedia-unit.

and specification of temporal relationships among various media objects. Such temporal relationships among different media may be implicit in nature, as in the case of simultaneous acquisition of audio and video during recording stage), or may be explicitly formulated as in the case of making the multimedia documents by authoring tools. Let’s take tbe analog VCR system as an example for the former. It is clear that the rotational speed of tape in an analog VCR can be used as the reference for generating timing information. On the other hand, one can get the timing information directly from the size of the audio segment acquired during the media digitization stage in the digital AN playback system. As

shown in Fig. 2, the time interval $T_1$ for playing the audio segment $A_{T_1}$ can be computed by dividing the size of $A_{T_1}$ by the sampling rate (such as 8000 Hz for voice quality); e.g., a 1000-byte audio segment stands for a time interval of about 250 ms of continuous audio playback under the voice-quality assumption.

A good synchronization algorithm must guarantee both inter-media and intra-media synchronization within a given tolerable precision, which is application dependent. For example, in Fig. 2, both the video frames and the audio segments should individually be played back smoothly to ensure intra-media synchronization. At the same time, the video frames (say $V_1, V_2, \ldots, V_6$) and the associated audio segment (say $A_{T_1}$) in the same time interval should be played back precisely under the enforced time constraint to ensure inter-media synchronization. Notice that the time interval $T_1$ is not necessarily equal to the time interval $T_2$ in general. However, for ease of explanation, a media-unit (say $V_i$), which is composed of only one video frame and its associated audio segment, is defined as the basic unit for playback during its corresponding fixed time interval (say $T_i$).
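The interval computation of Fig. 2 and the media-unit definition reduce to simple arithmetic. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes segment sizes are counted in samples, so converting a byte count would additionally need the encoding's bytes per sample.

```python
def interval_ms(segment_samples, sampling_rate_hz):
    """T_i: playback time of an audio segment = its size / sampling rate."""
    return 1000.0 * segment_samples / sampling_rate_hz

def samples_per_media_unit(sampling_rate_hz, frame_rate_fps):
    """Audio samples that accompany one video frame in a media-unit."""
    if sampling_rate_hz % frame_rate_fps:
        raise ValueError("frame period is not a whole number of samples")
    return sampling_rate_hz // frame_rate_fps

# Voice-quality audio (8000 Hz) with 25 frames/s video.
unit = samples_per_media_unit(8000, 25)
print(unit, interval_ms(unit, 8000))   # prints 320 40.0
```

With these figures, one video frame and a 320-sample audio segment form a 40 ms media-unit, the same numbers used in the 25 frame/s example of the text.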

For instance, assuming 25 video frames/s and 8000 audio samples/s, a video frame and its associated audio segment (320 samples) comprise a media-unit that should be played out simultaneously during their common 40 ms time interval. The media-unit concept is easy to realize in a real implementation. Fig. 3 schematically shows this concept.

To support synchronized, periodic playback of continuous media, many different techniques have been proposed for different platforms (PCs with DOS or MS-Windows [36], workstations with UNIX and X-Windows [37]-[39]). Among these, the simplest way is to maintain a loop structure for retrieving and playing back the corresponding media. Its pseudo algorithm is as follows:

[The simplest version for periodic continuous media playback]

1. loop {
2.     retrieving-corresponding-media (audio, video);
3.     playback-corresponding-media (audio, video);
4. }

However, this scheme provides no functionality for synchronization control (such as intra-media and inter-media synchronization), interactive control (such as pausing, resuming, and rewinding a presentation), or presentation-rate control (such as fast and slow playback). If a VCR-like playback system supporting not only the essential synchronization controls but also interactive and presentation-rate controls is to be built, the basic control module for media playback becomes very complicated and difficult to implement, debug, and maintain. Based on the above simplest version of continuous media playback and with the aid of some synchronization facilities, a feasible synchronization model, referred to as Model I, has been proposed in [37], [38]. All of its synchronization schemes are based on the idea of aligning the corresponding physical locations of A/V data on storage.

Model I: [Sync. by location alignment]

1. loop {   /* play back a media-unit */
2.     estimate video-waiting-time;   /* cf. Eq. (1) or Eq. (2) */
3.     retrieving-and-playback-audio-segment();
4.     retrieving-and-playback-video-frame();
5.     waiting (video-waiting-time);
6. } until end-of-playback

$$\text{video-waiting-time} = \frac{\text{audio-segment-size}}{\text{audio-sampling-rate}} \tag{1}$$

$$\text{video-waiting-time} = \frac{\text{audio-segment-size}}{\text{audio-sampling-rate}} + \text{system-overheads} \tag{2}$$

In Model I, media-unit playback and synchronization control are embedded in a loop structure. Since today's computer systems usually cannot properly deal with the very short time interval required for playing an individual audio sample (e.g., 125 µs per sample at 8 kHz), several audio samples have to be grouped together to form a playable audio segment. When an audio segment is written to the audio device, its samples are stored in the device buffer and issued at the consuming (sampling) rate. Video playback, in contrast, usually needs a special hardware decoder (e.g., JPEG [20], MPEG [21]) to meet real-time and high-quality demands. In Model I, the video frame $V_1$ and its associated audio segment $A_1$ are issued almost simultaneously; the video frame is consumed immediately thanks to the hardware support, while the associated audio segment is played back continuously by the audio device until the audio buffer becomes empty. Therefore, the next video frame $V_2$ has to wait for a time period (video-waiting-time) to avoid being played back too early. The frame waiting operation can be realized easily either by a busy-waiting mechanism such as the wait system call in a single-processing environment (such as MS-DOS), or by a process-suspending mechanism such as the sleep system call in a multiprocessing environment (such as UNIX).

(Figure: normal audio and video paths versus the "audio-break" audio and video paths across consecutive media-units, with the point where the break occurs marked.)

Fig. 4. Example of "audio-break" anomaly.

(Figure: normal audio and video paths versus the "out-of-sync" video path, with the point where out-of-synchronization occurs marked.)

Fig. 5. Example of "out-of-synchronization" anomaly.

As a result of employing the concept of playback

of the media-unit in a loop, the complexity of inter-media synchronization between audio and video can be reduced intuitively. Ideally, the video-waiting-time in Model I equals the size of the audio segment divided by the sampling rate of the audio device (cf. (1)), so the above scheme is a practical and effective algorithm for continuous media playback in a single-processing environment. In a multiprocessing environment, however, penalties such as context-switching delay and process-scheduling delay are induced by system overheads and should be taken into account (cf. (2)). These overhead penalties are system dependent and difficult, if not impossible, to measure precisely. In a non-real-time multiprocessing system under heavy CPU load, we cannot know exactly when our process will be served by the CPU, because we do not know how many processes have been scheduled ahead of it. As pointed out in [37], [38], the precision of the video-waiting-time estimate is critical in Model I, and under heavy system load (such as CPU-bound and/or I/O-bound situations) it may not be estimated precisely enough. Two unwanted cases may then occur and degrade the synchronization performance.

Case (1): Audio-Break (cf. Fig. 4): If the estimated video-waiting-time interval is longer than the real one, the next audio data segment is written to the audio device too late. The buffer of the audio device is therefore exhausted before the next audio segment arrives, and no audio is played back between these two segments. A discontinuity in audio output results even though synchronization with the video is maintained. This phenomenon violates the intra-media synchronization principle of continuous audio playback and causes an uncomfortable auditory perception. Fig. 4 shows an example of the audio-break phenomenon in an A/V playback application.

(Figure: the parent control-process path and the child media-process paths before, during, and after playback; the control-process creates an audio-process (highest priority), a video-process (middle priority), and a text-process (lowest priority) with fork() and removes them with kill(); dotted lines mark inter-media synchronization.)

Fig. 6. MultiSync: The proposed multiprocess synchronization model.

Case (2): Out-of-Synchronization (cf. Fig. 5): If the estimated time interval is shorter than the real one, audio data is written to the audio device too early. In this case, the residual part of the current audio segment is still buffered in the audio device, but the corresponding video frames are played out immediately by the video interface hardware (such as an MPEG card). Thus, the audio device buffer can become almost full, the audio lags behind the video, and out-of-synchronization occurs. This phenomenon violates the inter-media synchronization principle and causes an uncomfortable visual perception. Fig. 5 shows an example of the out-of-synchronization defect in an A/V playback application.
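Both failure cases can be reproduced with a small discrete-time simulation of the Model I loop. The Python sketch below is illustrative only: it assumes an unbounded audio device buffer, a non-blocking audio write, and instantaneous video display, and its function and parameter names are hypothetical. The quantity `wait_ms + overhead_ms` is the time the loop actually spends between media-units (cf. Eq. (2)); when it exceeds the segment duration the device drains and a gap appears (Case 1), and when it falls short, queued audio piles up so that the audio lags the already-displayed video (Case 2).

```python
def simulate_model_one(segment_ms, wait_ms, overhead_ms, units):
    """Simulate the Model I loop; return (total audio gap, max A/V skew) in ms.

    Assumptions: unbounded device buffer, non-blocking audio write, and each
    video frame is displayed at the moment the loop issues it.
    """
    clock = 0.0       # wall-clock time at which the loop issues a media-unit
    audio_end = 0.0   # time at which the device will have drained its buffer
    total_gap = 0.0   # accumulated silence (Case 1: audio break)
    max_skew = 0.0    # largest lag of audio behind video (Case 2)
    for _ in range(units):
        if clock > audio_end:            # buffer already empty: audible gap
            total_gap += clock - audio_end
            audio_end = clock
        segment_start = audio_end        # this unit's audio starts after the queue
        audio_end += segment_ms
        max_skew = max(max_skew, segment_start - clock)  # video shown at `clock`
        clock += wait_ms + overhead_ms   # estimated wait plus scheduling overhead
    return total_gap, max_skew

print(simulate_model_one(40, 40, 0, 10))  # exact estimate:  (0.0, 0.0)
print(simulate_model_one(40, 40, 5, 10))  # waits too long:  (45.0, 0.0)
print(simulate_model_one(40, 35, 0, 10))  # waits too short: (0.0, 45.0)
```

A real audio device would block the write once its buffer is full, which caps the skew at roughly the buffer length instead of letting it grow without bound as in this simplified model.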

III. THE PROPOSED MODEL: THE MULTISYNC MODEL

To overcome the above two fatal deficiencies, this section presents a novel synchronization model/mechanism that is insensitive to the system workload. The basic idea of the proposed approach is not to perform both intra-media and inter-media synchronization within one loop structure, but to distribute the synchronization jobs from a single process to many individual processes. Each process undertakes only the intra-media synchronization of its own medium, and inter-media synchronization is then attained through absolute synchronization with the system clock and/or interprocess communication techniques such as pipes and shared memory. In this model, we assume that primitive functions for multitasking, such as multiprocess/multithread creation, termination, and intercommunication, are supported by the operating system. Some operating systems do not support multiple threads in a process (e.g., UNIX), and some do (e.g., OS/2, Mach). For ease of explanation, a thread is regarded as a kind of lightweight process, so that a thread can be treated as a process if the operating system does not support multithreading. Fig. 6 shows the proposed model schematically.


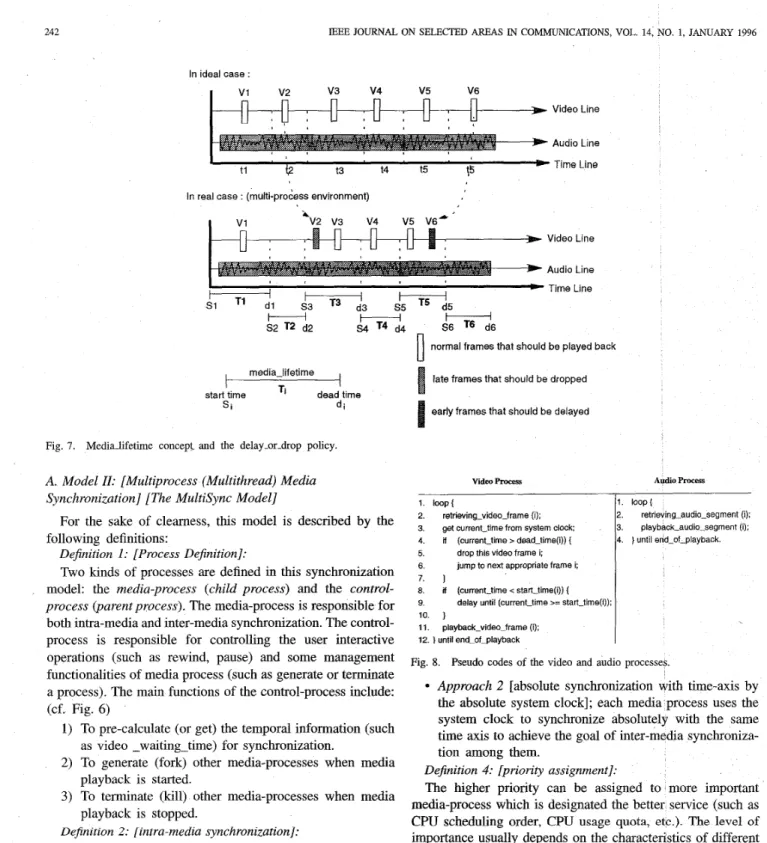

1 loop1

2 retrieving-videcirame (I), 3 get current-time from system clock 4 rf (currenttime > dead-time(i)) ( 5 6 7 1 8 I (current-time < stan_time(i)) ( 9 10 1 11 playba&-video_frame (I), 12 untd end-ofglayback

drop this video frame I,

jump to next appropriate frame I,

delay until (currenttime >= stantime(i)),

BEE JOURNAL ON SELECTED AREAS IN COMMUNICATIONS, VOL. 14, NO. 1, JANUARY 1996

1 loop1 2 retrievingaudio_segment (I), 3 playback-audio-segment (I), 4 ) until end-of-playback In ideal case : V I v 2 v 3 v 4 v 5 V6

n

,fl

,n

,n

,n

,+

-

l

f

Videoiine*

Audio Line Time Line I>

t l t3 !S In real case : (multi-pro& environment)V4 V5 V6L

Video Line

media-lifetime

start 'time Ti dead(time

S i di

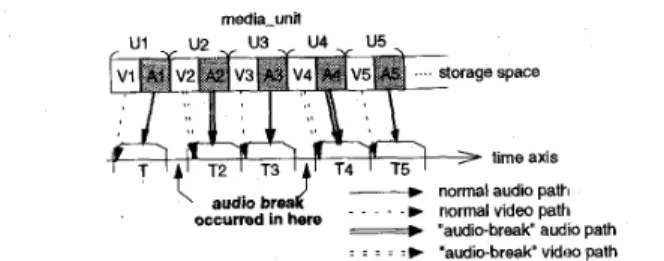

Fig. 7. Medialifetime concept- and the delay-or-drop policy.

A. Model II: [Multiprocess (Multithread) Media

Synchronization] [The Multisync Model] following definitions:

For the sake of clearness, this model is described by the DeJinition 1: [Process De$nition]:

Two kinds of processes are defined in this synchronization model: the media-process (child process) and the control- process (parent process). The media-process is responsible for

both intra-media and inter-media synchronization. The control- process is responsible for controlling the user interactive operations (such as rewind, pause) and some management functionalities of media process (such as generate or terminate a process). The main functions of the control-process include: (cf. Fig. 6)

1) To pre-calculate (or get) the temporal information (such 2) To generate (fork) other media-processes when media 3) To terminate (kill) other media-processes when media Definition 2: [intra-media synchronization]:

Intra-media synchronization must be achieved individually Definition 3: [inter-media synchronization]:

Inter-media synchronization can be achieved cooperatively by each media-process. Two general approaches can be adopted:

Approach 1 [relative synchronization by inter-process communication]; media processes can be synchronized relatively with each other through some well-known internal process communication techniques, such as share memory, socket and pipe.

as video -waiting-time) for synchronization. playback is started.

playback is stopped.

by each media-process.

U

normal frames that should be played back late frames that should be droppedearly frames that should be delayed
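Approach 1 can be sketched with a UNIX pipe. In this minimal Python illustration (an assumption, not the authors' implementation), a forked audio-process reports the index of each audio segment it has issued, and the video side shows the frame belonging to the same media-unit. The function names and the 4-byte index format are hypothetical, device output is omitted, and for brevity the parent plays the video role instead of being a separate child of a control-process.

```python
import os
import struct

INDEX = struct.Struct("!I")   # hypothetical wire format: 4-byte unsigned index

def audio_side(write_fd, n_segments):
    """Hypothetical audio-process: report each segment index after issuing it."""
    with os.fdopen(write_fd, "wb", buffering=0) as pipe:
        for i in range(n_segments):
            # ... write audio segment i to the audio device here ...
            pipe.write(INDEX.pack(i))

def video_side(read_fd):
    """Hypothetical video-process: follow the audio position read from the pipe."""
    shown = []
    with os.fdopen(read_fd, "rb") as pipe:
        while True:
            record = pipe.read(INDEX.size)
            if len(record) < INDEX.size:   # writer closed the pipe: playback over
                break
            (audio_index,) = INDEX.unpack(record)
            shown.append(audio_index)      # display frame of the same media-unit
    return shown

def play(n_segments):
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:                           # child: the audio-process
        os.close(read_fd)
        try:
            audio_side(write_fd, n_segments)
        finally:
            os._exit(0)
    os.close(write_fd)                     # parent keeps only the read end
    shown = video_side(read_fd)
    os.waitpid(pid, 0)
    return shown

if __name__ == "__main__":
    print(play(5))   # [0, 1, 2, 3, 4]
```

The pipe gives relative synchronization only: the video side knows where the audio is, not what the wall clock says, which is why the delay-or-drop policy below instead ties video to the system clock.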

(Figure: layered architecture; at the program level, the Multimedia PlayBack System with its record and synchronization modules; at the library level, the X Video Vendor Extension and the X-Window Library; below them, the UNIX Operating System.)

Fig. 9. Hardware and the software architecture of the realized playback system.

I/O burst process:

1. open-read-file (fp1);
2. open-write-file (fp2);
3. loop (1000000) {   /* busy for disk I/O */
4.     read (fp1, buffer, buffersize);
5.     write (fp2, buffer, buffersize);
6. }
7. close-file (fp1);
8. close-file (fp2);

CPU burst process:

1. i = 1;
2. loop (1000000) {   /* busy for CPU */
3.     pow (i, i);
4.     log2 (i);
5.     i = i + 1;
6. }

Action 1: To get the temporal-relation information for performing synchronization. The control-process maintains a table of time stamps that serve as check-points for inter-media synchronization. Each entry in the table records the presentation lifetime, which comprises the starting point (start-time) and the ending point (dead-time) of each audio segment. All video frames and their associated audio segments should be played back within their corresponding time intervals (cf. Fig. 7).

Action 2: To generate a child process (media-process) for each medium by using a process-creation system call such as fork() [45] in UNIX. At this point, one control-process (for high-level user interaction) and two media-processes (for synchronization support) are running concurrently. Since the media-processes are child processes of the control-process, the temporal information obtained in Action 1 is inherited by (delivered to) each media-process.

Action 3: According to the proposed model, each child media-process must undertake both intra-media and inter-media synchronization. To avoid the audio-break phenomenon, the audio-process is designed to keep writing the sequence of audio segments to the audio device in a loop. The video-process, on the other hand, adopts a delay-or-drop policy to deal with the out-of-synchronization problem, as shown in Fig. 7, based on the definition of the playback time interval (the so-called media-lifetime). The right and left columns of Fig. 8 show the pseudocode of the video-process and the audio-process, respectively.

Action 4: If the user presses the “stop” or “pause” button, the control-process terminates playback by calling the kill() system call [45] to kill all child media-processes.

Action 5: If the user presses the “play” or “continue” button again, steps (1) to (3) are repeated.
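Actions 2 and 4 map directly onto the UNIX process calls the text names. The following Python sketch (standard `os.fork`, `os.nice`, `os.kill`, and `os.waitpid`; Unix only) is a hypothetical illustration rather than the authors' code: each media-process body is a placeholder idle loop, and the niceness increments are assumed values. Because an unprivileged process can only lower its own priority, the sketch expresses the Fig. 6 ordering (audio highest, text lowest) by making the less important children nicer.

```python
import os
import signal
import time

# Assumed niceness increments: a positive increment lowers a child's
# priority and needs no privileges (raising priority would).
NICE_INCREMENT = {"audio": 0, "video": 5, "text": 10}

def media_process(name):
    """Hypothetical media-process body; a real one would run a Fig. 8 loop."""
    increment = NICE_INCREMENT.get(name, 0)
    if increment:
        os.nice(increment)          # lower this child's scheduling priority
    while True:                     # placeholder for retrieve-and-play work
        time.sleep(0.01)

def start_playback(media_names):
    """Action 2: fork one child media-process per medium; return their PIDs."""
    children = {}
    for name in media_names:
        pid = os.fork()
        if pid == 0:                # child: run the media loop, never return
            try:
                media_process(name)
            finally:
                os._exit(0)
        children[name] = pid        # parent: remember the child's PID
    return children

def stop_playback(children):
    """Action 4: kill() every child media-process and reap its exit status."""
    statuses = {}
    for name, pid in children.items():
        os.kill(pid, signal.SIGTERM)
        _, status = os.waitpid(pid, 0)
        statuses[name] = status
    return statuses

if __name__ == "__main__":
    children = start_playback(["audio", "video", "text"])
    time.sleep(0.1)                 # "playback" runs concurrently here
    print(sorted(stop_playback(children)))   # ['audio', 'text', 'video']
```

Action 5 then amounts to calling `start_playback` again with the same media list.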

The above audio-process uses neither the time-stamp information nor interprocess communication to synchronize with the video-process; it simply retrieves and plays back audio data in a loop. Nevertheless, synchronization is achieved in this example because the audio buffer is always filled and the audio data in the buffer are consumed at a constant rate, i.e., the audio is played out smoothly along the time axis and no audio discontinuity occurs. The video-process, in contrast, uses the media-lifetime, which comprises two time stamps (start-time and dead-time), to decide whether the corresponding video frame should be played back, based on the delay-or-drop policy below. In other words, we keep the audio playback aligned with the time axis and let the video playback (under the delay-or-drop policy) be synchronized directly with the system clock. Consider the video frame $V_6$, which has been retrieved and is ready to be played out, as shown in Fig. 7. Its current-time (obtained from the system clock) is earlier than its media-lifetime $T_6$; hence, its playback should be delayed until the current-time falls within its media-lifetime. Conversely, the video frame $V_2$ is also about to be played out, but its current-time is already past its media-lifetime $T_2$; therefore, its playback should be canceled (dropped) because it is out of time.

Delay-or-drop policy: The corresponding video frame should be

Rule (1): dropped if the current-time is later than the dead-time.

Rule (2): delayed if the current-time is earlier than the start-time.

Rule (3): played back if the current-time is within the media-lifetime (i.e., between the start-time and the dead-time).
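Rules (1) to (3), together with the time-stamp table of Action 1 and the video loop of Fig. 8, can be expressed as a short Python sketch. It simulates time instead of reading a real clock: `retrieval_ms` holds hypothetical per-frame retrieval costs, display is assumed instantaneous, and a delay is modeled as sleeping until the frame's start-time. All names are illustrative, not taken from the paper's implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MediaLifetime:
    start_time: float   # earliest instant at which the frame may be shown
    dead_time: float    # latest instant at which the frame may be shown

def delay_or_drop(current_time, lifetime):
    """Apply Rules (1)-(3) to one video frame."""
    if current_time > lifetime.dead_time:    # Rule (1): too late
        return "drop"
    if current_time < lifetime.start_time:   # Rule (2): too early
        return "delay"
    return "play"                            # Rule (3): within the lifetime

def build_lifetime_table(n_units, unit_ms, t0=0.0):
    """Action 1 sketch: consecutive media-lifetimes of equal length unit_ms."""
    return [MediaLifetime(t0 + i * unit_ms, t0 + (i + 1) * unit_ms)
            for i in range(n_units)]

def run_video_process(table, retrieval_ms):
    """Simulated Fig. 8 video loop; returns (played, dropped) frame indices."""
    clock = table[0].start_time
    played, dropped = [], []
    for i, lifetime in enumerate(table):
        clock += retrieval_ms[i]             # time spent fetching frame i
        action = delay_or_drop(clock, lifetime)
        if action == "drop":
            dropped.append(i)                # skip to the next frame
            continue
        if action == "delay":
            clock = lifetime.start_time      # wait until the start-time
        played.append(i)
    return played, dropped

table = build_lifetime_table(5, 40)
print(run_video_process(table, [10, 10, 100, 5, 5]))  # ([0, 1, 3, 4], [2])
```

In the example, the slow retrieval of frame 2 (100 ms) pushes the clock past its dead-time, so that frame is dropped; frame 3 still falls within its own lifetime, so the video catches up without any interaction with the audio-process.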

There are several advantages of the proposed synchroniza- 1) Easy to program and debug: The use of multiprocesses

(multithreads) model simplifies the complexity of a program so that it is more intuitive and easy to program each media function than the Model I does.

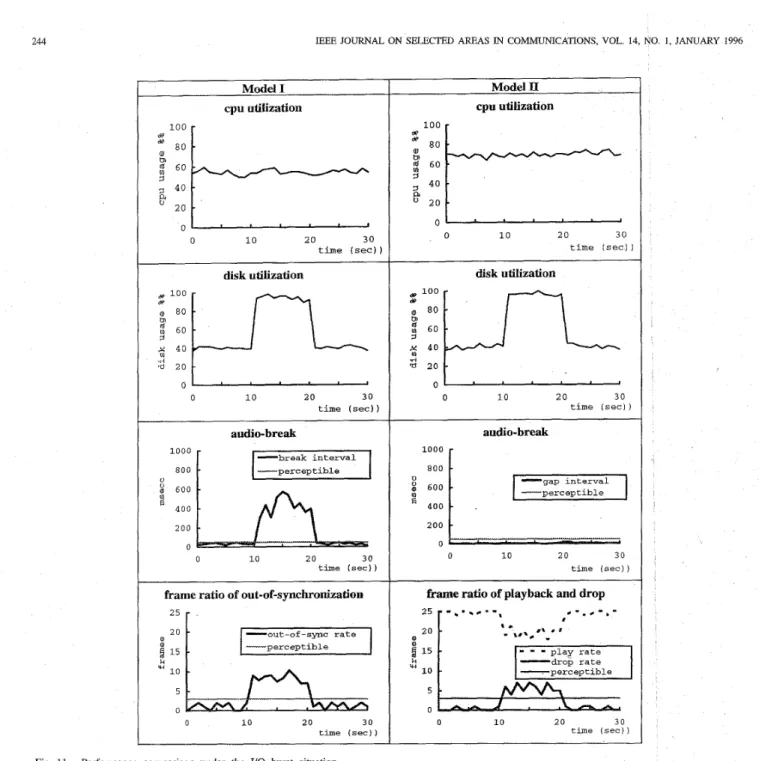

244 IEEE JOURNAL ON SELECTED AREAS IN COMMUNICATIONS, VOL 14, NO 1, JANUARY 1996 Model I cpu utilization 100 r 1 0 10 2 0 30 t i m e ( s e c ) ) disk utilization 0 10 2 0 3 0 time (sec)) audio-break -break interval -perceptible $ 6 0 0 E 4 0 0 2 0 0 0 0 10 2 0 30 time (sec)) frame ratio of out-of-synchronization

2 5 -out-of-sync rate perceptible 1 5 10 5 0 0 1 0 20 3 0 time (sec)) Fig. 11. Performance cornpanson under the U 0 burst situation

2) “Audio discontinuity” is eliminated: This is because the audio process is always busy on writing audio data and thus the audio device buffer is almost full.

3 ) “Out-of-synchronization” never happen: This 1s because the video process adopts the delay-or-drop policy to han- dle inter-media synchronization with the audio process on the same time axis.

4) Multiple media priority can be supported: Various media

priority can be supported by assigning different priority to different processes (threads) in real applications. For example, in the lip synchronization application, it is obvious that the priority of audio data is higher than that of the video because the human perception is more sensitive to audio than to video. In subtitle applications (with foreground slides and background music), the

Model II CPU utilization 7 2 0 I 0 10 2 0 3 0 time ( s e c ) ) disk utilization I 0 10 2 0 30 time ( s e c ) ) audio-break l o o 0

r

0 6 0 0 0 ’ 0 10 2 0 3 0 time (sec)) kame ratio of playback and drop+

-..

-,-0 10 2 0 30

time (sec))

priority of image or graphic media may be higher than that of the audio data.

5 ) System is robust and $exible: Multimedia applications based on the proposed model will become more flexible and adjustable than the ones based on the traditional synchronization model. For example, by taking the ad- vantage of the proposed model, an application, such as Quality of Service (QoS) manager can kill or suspend some less important media processes (threads) when the system workload is heavy and can be restarted or resumed when system workload becomes light.
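Advantage 5) relies on being able to pause a media-process without tearing it down. On UNIX this can be done with job-control signals. The Python sketch below is a hypothetical illustration using only standard `os` and `signal` calls: it stops a placeholder child with SIGSTOP and later lets it continue with SIGCONT. The QoS policy that decides when to suspend or resume is assumed and not shown.

```python
import os
import signal
import time

def spawn_media_process():
    """Fork a hypothetical low-priority media-process (placeholder idle loop)."""
    pid = os.fork()
    if pid == 0:
        try:
            while True:
                time.sleep(0.01)
        finally:
            os._exit(0)
    return pid

def suspend(pid):
    """Heavy load: stop the process; return True once it is reported stopped."""
    os.kill(pid, signal.SIGSTOP)
    _, status = os.waitpid(pid, os.WUNTRACED)   # collect the stop notification
    return os.WIFSTOPPED(status)

def resume(pid):
    """Light load again: let the stopped process continue where it left off."""
    os.kill(pid, signal.SIGCONT)

if __name__ == "__main__":
    pid = spawn_media_process()
    print(suspend(pid))             # True
    resume(pid)
    os.kill(pid, signal.SIGTERM)    # end of playback: terminate the child
    os.waitpid(pid, 0)
```

Suspending rather than killing keeps the child's state (file position, decoder context), so resuming does not require the control-process to repeat Actions 1 and 2.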

IV. SYSTEM IMPLEMENTATION AND EXPERIMENT RESULTS

A continuous media playback (CMP) module based on the proposed synchronization model has been implemented on the

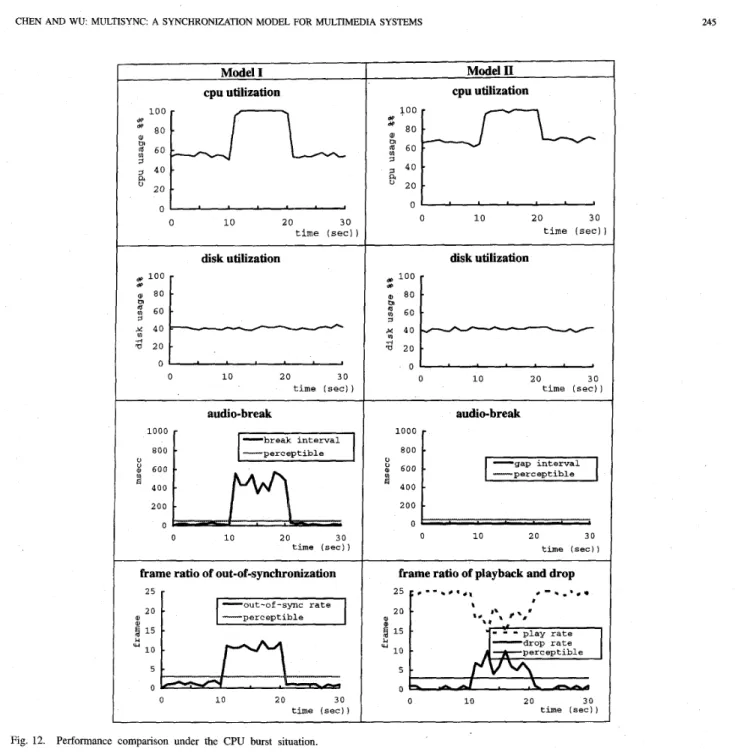

CHEN AND WU: MULTISYNC: A SYNCHRONIZATION MODEL FOR MULTIMEDIA SYSTEMS 245 Model I CPU utilization 0 ’ *- 0 10 2 0 30 t i m e ( s e c ) ) disk utilization 1 0 0

r

0 -0 10 20 3 0 time ( s e c ) ) audio-break -break i n t e r v a l $ 6 0 0 400 2 0 0 0 I 0 10 2 (1 3 0 time (sec) ) frame ratio of out-of-synchronization2 5

-out-of-sync r a t e p e r c e p t i b l e

0 10 20 3 0

time ( s e c ) )

Fig. 12. Performance comparison under the CPU burst situation.

SUN SPARC 10 workstation under UNIX and OlpenWindows environments. A JPEG-based hardware, the XVIDEO [40], [41] Parallax board, was used to compress and decompress the video stream (with 640 x 480 resolution) in real time (25 framesk). The built-in audio device was used to provide recordplayback functions with 8 KHz sampling rate. A graph- ical user interface based on OPENLOOK [42] and X-Windows

[431 was also developed. To provide interactive facilities such as fast forward, rewind and pause, the UNIX alarm signal (in X toolkit intrinsic: XtAddTimeOut [44]) rather than UNIX sleep [45] system function was included into the control process so as to return the input control right (such as mouse input focus) to the window manager. Fig. 9 shows the hardware and software architecture of the realized system.
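The reason for preferring a timer callback over sleep is that a blocking sleep freezes the control process, while a registered timeout lets the event loop keep dispatching user input between playback ticks. The sketch below imitates that pattern with a tiny single-threaded event loop driven by a simulated clock. `TimerLoop`, `add_timeout`, `post_input`, and `Player` are hypothetical stand-ins, not the X Toolkit API; they only mirror the fact that an Xt timeout is a one-shot callback that a periodic task must re-register.

```python
import heapq
import itertools
from typing import Callable, List, Tuple


class TimerLoop:
    """Single-threaded event loop driven by a simulated clock (hypothetical)."""

    def __init__(self) -> None:
        self.now = 0.0
        self._queue: List[Tuple[float, int, Callable[[], None]]] = []
        self._seq = itertools.count()  # tie-breaker: equal times keep FIFO order

    def add_timeout(self, delay: float, callback: Callable[[], None]) -> None:
        """Register a one-shot callback to run `delay` seconds from now."""
        heapq.heappush(self._queue, (self.now + delay, next(self._seq), callback))

    def post_input(self, at: float, handler: Callable[[], None]) -> None:
        """Simulate a user input event (e.g. a Pause click) arriving at time `at`."""
        heapq.heappush(self._queue, (at, next(self._seq), handler))

    def run_until(self, end: float) -> None:
        """Dispatch timeouts and input events in time order up to `end`."""
        while self._queue and self._queue[0][0] <= end:
            self.now, _, callback = heapq.heappop(self._queue)
            callback()


class Player:
    FRAME_PERIOD = 0.040  # one frame at 25 frames/s

    def __init__(self, loop: TimerLoop) -> None:
        self.loop = loop
        self.paused = False
        self._gen = 0                 # bumped on pause so stale timers expire
        self.shown: List[float] = []  # times at which frames were displayed

    def _schedule(self, delay: float) -> None:
        gen = self._gen
        self.loop.add_timeout(delay, lambda: self._tick(gen))

    def _tick(self, gen: int) -> None:
        if gen != self._gen or self.paused:
            return  # timer armed before a pause: let it expire without re-arming
        self.shown.append(self.loop.now)
        self._schedule(self.FRAME_PERIOD)  # re-arm the one-shot timer

    def start(self) -> None:
        self._schedule(0.0)

    def pause(self) -> None:
        self.paused = True
        self._gen += 1

    def resume(self) -> None:
        if self.paused:
            self.paused = False
            self._schedule(0.0)
```

A Pause event posted at 0.10 s is handled before the tick due at 0.12 s, so only the frames at 0.00, 0.04, and 0.08 s are shown; the control path never blocks waiting for the next frame.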

~~ ~ Model 11 CPU utilization 0 -0 1 0 20 30 t i m e ( s e c ) disk utilization I 0 10 20 3 0 time ( s e c ) ) audio-break 2 600

‘

400 2 0 01

O t ‘ . ” ’ ’ 0 10 2 0 3 0 time ( s e c ) )frame ratio of playback and drop 25 c . - - . # ~ , ,

.

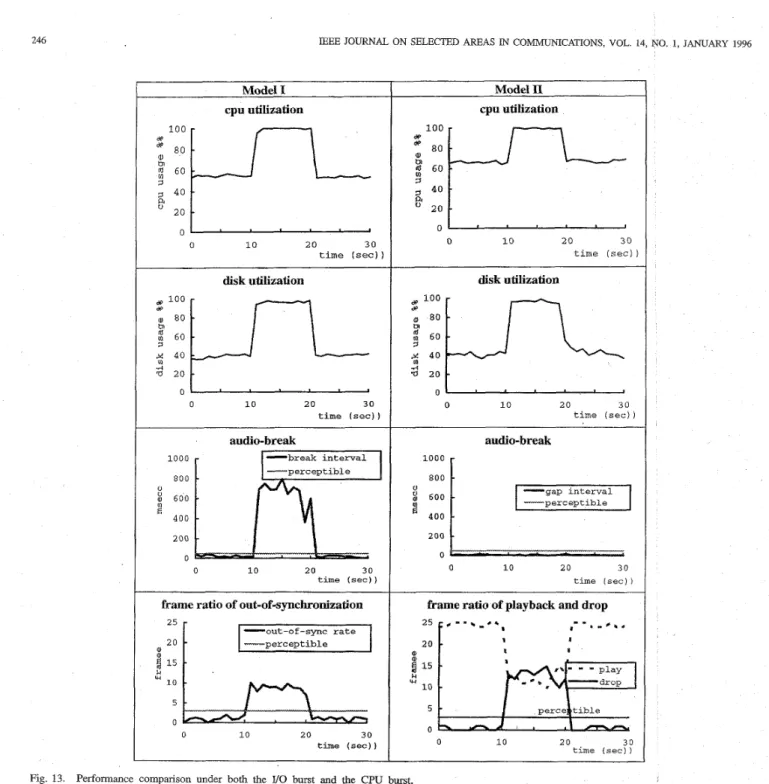

- b .‘I 20 1 1 5 LI 10 5 0 0 10 20 30 time ( s e c ) )Two critical situations, U0 burst and CPU burst which result in bad performance in traditional synchronization model, were used to judge the performance of the proposed model. The U 0

burst was generated by concurrently running some processes with heavy disk UOs, while the CPU burst was generated by running some processes with heavy CPU computations at the same time. Fig. 10 shows the pseudo codes used for simulating

the

U 0

burst and the CPU burst situations.The synchronization performance of the proposed model was examined by measuring the (1) “audio-break interval” (the time duration for the audio buffer retained in empty status) and the ( 2 ) “frame dropping ratio” (the number of dropped frames

per second) of the A N playback system under three different kinds of heavy load situations: U 0 burst (cf. Fig. 11) alone,

246 IEEE JOURNAL ON SELECTED AREAS IN COMMUNICATIONS, VOL. 14, NO. 1, JANUARY 1996 Model I CPU utilizatiun 1 0 0 80 6 0 4 0 2 0 d p 64 tn 3

2

0 1 I 0 10 2 0 30 time ( s e c ) ) ~ disk utilization 0 10 2 0 30 t i m e ( s e c ) ) 1000 8 0 0 $ 6 0 0 4 0 0 U 2 200 0 audio- break 0 10 2 0 30 time ( s e c ) )frame ratio of out-of-synchronization

2 5 0 10 20 30 t i m e ( s e c ) ) ~ Model II CPU utilization 0 ' I 0 10 2 0 3 0 time ( s e c ) ) disk utilization I 0 10 2 0 3 0 t i m e ( s e c ) ) audio-break gap i n t e r v a l p e r c e p t i b l e

3

6 0 0 400 2001

0 10 2 0 3 0 time ( s e c ) )kame ratio of playback and drop

-I

,

t - t . . I I 15 10 w 5 0 0 1 0 2 0 3 0 time ( s e c ) )Fig. 13. Performance comparison under both the I70burst and the CPU burst.

CPU burst (cf. Fig. 12) alone and combination of both the I/O burst and CPU burst (cf. Fig. 13). For comparison purposes, the synchronization performances of Model I under the same heavy load situations are also illustrated in the corresponding figures. Both of the system load histograms which include CPU load and disk access load and the performance measurement histograms are given in the illustrations. Since the out-of- synchronization problem is successfully solved by using the delay-or-drop policy in the Model II, only the frame ratio of out-of-synchronization of the Model I is exhibited while frame ratio of playback and drop of Model

II

is shown in the performance measurement histogram. Audio-break has also been measured for both models in the illustrations. Each experiment takes thirty seconds long and is executed in threeequal duration sub-intervals (10 seconds each). The system load is in normal situation in the first sub-interval. During the second sub-interval, the system load is in the designated burst situation (such as I/O burst, CPU burst, or both). In the third sub-interval, the system load return to its normal situation.
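The two measures defined in this paragraph can be computed from simple playback logs. This is a sketch under assumptions the paper does not state: it supposes a time-ordered log of `(time_s, buffered_bytes)` readings of the audio device buffer and a list of timestamps of dropped video frames; all function names are hypothetical. `phase_means` averages the per-second values over the three 10-s sub-intervals (normal, burst, normal).

```python
from typing import List, Optional, Sequence, Tuple


def audio_break_intervals(samples: Sequence[Tuple[float, int]]) -> List[Tuple[float, float]]:
    """Return (start_s, duration_s) for every period the audio buffer stayed empty.

    `samples` is a time-ordered log of (time_s, buffered_bytes) readings; the
    buffer is assumed to keep its last observed level until the next reading.
    """
    breaks: List[Tuple[float, float]] = []
    start: Optional[float] = None
    for t, level in samples:
        if level == 0 and start is None:
            start = t                          # buffer just ran dry
        elif level > 0 and start is not None:
            breaks.append((start, t - start))  # buffer refilled
            start = None
    if start is not None:                      # still empty when the log ends
        breaks.append((start, samples[-1][0] - start))
    return breaks


def drops_per_second(drop_times: Sequence[float], duration_s: int) -> List[int]:
    """Number of dropped frames in each 1-s bin of [0, duration_s)."""
    counts = [0] * duration_s
    for t in drop_times:
        if 0 <= t < duration_s:
            counts[int(t)] += 1
    return counts


def phase_means(per_second: Sequence[float], phase_s: int = 10) -> List[float]:
    """Mean per-second value in each consecutive phase of `phase_s` seconds."""
    return [sum(per_second[i:i + phase_s]) / phase_s
            for i in range(0, len(per_second), phase_s)]
```

For a 30-s run with four drops during the burst phase, `phase_means(drops_per_second(...))` yields one average per sub-interval, which is the shape of the comparison the histograms present.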

From the CPU utilization histograms in Figs. 11-13, it is observed that under normal CPU load the CPU utilization of Model II (70% on average) is higher than that of Model I (59% on average). This is because the Model II-based playback system runs more processes (at least a control process, an audio process, and a video process) than its Model I-based counterpart.

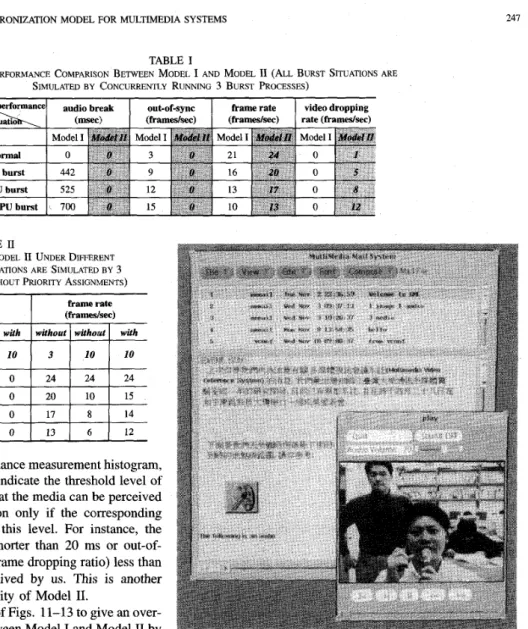

TABLE I
OVERALL PERFORMANCE COMPARISON BETWEEN MODEL I AND MODEL II (ALL BURST SITUATIONS ARE SIMULATED BY CONCURRENTLY RUNNING 3 BURST PROCESSES)

TABLE II
OVERALL PERFORMANCE OF MODEL II UNDER DIFFERENT BURST SITUATIONS (BURST SITUATIONS ARE SIMULATED BY 3 AND 10 PROCESSES WITH OR WITHOUT PRIORITY ASSIGNMENTS)

Note that in the performance measurement histograms, a thin horizontal line indicates the threshold of perceptibility: a degradation can be perceived by human hearing and/or vision only if the corresponding measured value is higher than this level. For instance, an audio-break interval shorter than 20 ms, or an out-of-synchronization ratio (or video frame dropping ratio) below 3 frames/s, is hardly perceptible. This is another positive factor for the applicability of Model II.
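The threshold lines amount to a simple comparison of each measurement against the two limits quoted here. A minimal sketch follows; the constant and function names are hypothetical, and treating a value exactly at the limit as imperceptible follows the wording "higher than this level".

```python
from typing import List, Sequence

# Perceptibility thresholds quoted in the text
AUDIO_BREAK_THRESHOLD_S = 0.020  # breaks of 20 ms or less are treated as inaudible
FRAME_RATIO_THRESHOLD = 3.0      # 3 frames/s or less is treated as invisible


def perceptible_breaks(durations_s: Sequence[float]) -> List[float]:
    """Audio-break intervals long enough to be heard (above the threshold line)."""
    return [d for d in durations_s if d > AUDIO_BREAK_THRESHOLD_S]


def perceptible_seconds(frames_per_s: Sequence[float]) -> List[int]:
    """Indices of 1-s bins whose out-of-sync or drop ratio exceeds the threshold."""
    return [i for i, n in enumerate(frames_per_s) if n > FRAME_RATIO_THRESHOLD]


def is_acceptable(durations_s: Sequence[float], frames_per_s: Sequence[float]) -> bool:
    """True if no measured value crosses either perceptibility threshold."""
    return not perceptible_breaks(durations_s) and not perceptible_seconds(frames_per_s)
```

Under this rule a run can drop a few frames every second and still be judged acceptable, which is why a nonzero frame dropping ratio for Model II does not by itself indicate a visible defect.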

Table I summarizes the results of Figs. 11-13, giving an overall performance comparison between Model I and Model II in terms of several performance indices, including audio-break interval, out-of-synchronization ratio, playback frame rate, and video frame-dropping rate, under different system load situations. More overhead is induced when more burst processes execute concurrently, so the number of concurrently executing burst processes is an important parameter for the synchronization performance of Model II. The effect of this parameter was examined by increasing the number of concurrently executing burst processes from 3 to 10. As expected, audio breaks occur (cf. the 3rd column of Table II), and the video frame-dropping rate increases while the frame rate decreases (cf. the 6th column of Table II), because the CPU quota of each media process becomes insufficient to maintain its intra-media synchronization. This problem can be partially solved (or at least reduced) by assigning higher priority to more important media (such as audio in the A/V playback application). The 4th column and the last column of Table II show the effectiveness of the priority assignment for Model II.

Fig. 14. The photo of the multimedia e-mail system.
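The CPU-quota argument can be made concrete with a deliberately crude proportional-share model. This is an illustration only: the UNIX scheduler actually uses multilevel feedback with round robin, and the per-process CPU demands and weights below are hypothetical numbers chosen for the example, not measurements from Table II.

```python
from typing import Dict, List

# Hypothetical CPU demands (fraction of one CPU) of the three media processes.
DEMAND = {"control": 0.02, "audio": 0.08, "video": 0.15}


def scenario(n_burst: int, audio_weight: float = 1.0) -> Dict[str, float]:
    """Scheduling weights: three media processes plus n_burst competing burst processes."""
    weights = {"control": 1.0, "audio": audio_weight, "video": 1.0}
    for i in range(n_burst):
        weights[f"burst{i}"] = 1.0
    return weights


def cpu_shares(weights: Dict[str, float]) -> Dict[str, float]:
    """CPU fraction per process when the CPU is split in proportion to weight.

    Pessimistic toy model: every process is assumed always runnable, so share
    left unused by a light process is not handed to the others.
    """
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def starved(weights: Dict[str, float], demand: Dict[str, float] = DEMAND) -> List[str]:
    """Media processes whose share is below their demand (intra-media sync is lost)."""
    shares = cpu_shares(weights)
    return sorted(name for name, need in demand.items() if shares[name] < need)
```

With three burst processes every process receives 1/6 of the CPU and no media process starves; with ten, each receives 1/13 (about 7.7%) and, under the assumed demands, both audio and video starve. Raising the audio weight to 4 protects the audio process while video still falls short, which mirrors the partial improvement from priority assignment described in the text.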

V. CONCLUSION AND DISCUSSIONS

In this paper, a novel synchronization model suitable for multiuser, multiprocess (e.g., UNIX), and multithread (e.g., Mach, OS/2) environments is proposed. Moreover, the proposed model is insensitive to I/O burst and CPU burst situations, in which the traditional synchronization approach cannot perform well.

UNIX is a very popular operating system, but it does not support real-time functions, because its kernel is nonpreemptive and its process scheduling adopts a multilevel feedback policy with round robin. As pointed out in [30] and [31], the conventional UNIX environment is not suitable for supporting high-performance multimedia computing. Nevertheless, this paper develops a multimedia synchronization model and related mechanisms for the UNIX environment in order to expand its popularity.

On the basis of the proposed synchronization model and the aforementioned continuous media playback (CMP) subsystem, a series of multimedia systems, including a Multimedia Authoring System [11], a Multimedia E-mail System [2], a Multimedia BBS [10], and a prototype VoD System [9], have been developed on SUN SPARC 10 workstations under the UNIX operating system and OpenWindows environment in the Communication and Multimedia Laboratory of National Taiwan University. Fig. 14 shows a photo of the Multimedia E-mail System. The success of these multimedia applications demonstrates once again the correctness and practical value of the proposed synchronization model.

REFERENCES

[1] R. C. Schank, “Active learning through multimedia,” IEEE Multimedia Mag., pp. 69-78, Spring 1994.

[2] M. Ouhyoung et al., “The MOS multimedia e-mail system,” in Proc. IEEE Int. Conf. Multimedia Computing and Systems, 1994, pp. 315-324.

[3] W.-H. F. Leung et al., “A software architecture for workstations supporting multimedia conferencing in packet switching networks,” IEEE J. Select. Areas Commun., vol. 8, no. 3, pp. 380-390, Apr. 1990.

[4] W. J. Clark, “Multipoint multimedia conferencing,” IEEE Commun. Mag., pp. 44-50, May 1992.

[5] E. Craighill, R. Lang, M. Fong, and K. Skinner, “CECED: A system for informal multimedia collaboration,” in Proc. ACM Multimedia, 1993, pp. 431-446.

[6] V. Anupam and C. L. Bajaj, “Collaborative multimedia scientific design in SHASTRA,” in Proc. ACM Multimedia, 1993, pp. 438479.

[7] P. V. Rangan, H. M. Vin, and S. Ramanathan, “Designing an on-demand multimedia service,” IEEE Commun. Mag., pp. 5 M, July 1992.

[8] T. D. C. Little, G. Ahanger, R. J. Folz, J. F. Gibbon, F. W. Reeve, D. H. Schelleng, and D. Venkatesh, “A digital on-demand video service supporting content-based queries,” in Proc. ACM Multimedia, 1993, pp. 427-436.

[9] H.-Y. Chen, T.-J. Yang, J.-L. Wu, and W.-C. Chen, “Design of a video-on-demand system and its implementation on Ethernet LAN,” in Proc. Int. Computer Symp. (ICS), 1994, pp. 376-381.

[10] K.-N. Chang et al., “The MOS multimedia bulletin board system,” in Proc. Int. Conf. Consumer Electronics, 1995.

[11] H.-Y. Chen et al., “A novel audio/video synchronization model and its application in multimedia authoring system,” in Proc. Int. Conf. Consumer Electronics, 1994, pp. 176-177.

[12] G. A. Wall, J. G. Hanko, and J. D. Northcutt, “Bus bandwidth management in a high resolution video workstation,” in Proc. 3rd Int. Workshop on Network and Operating System Support for Digital Audio and Video, 1992, pp. 274-288.

[13] P. Druschel, M. B. Abbott, M. Pagels, and L. L. Peterson, “Analysis of I/O subsystem design for multimedia workstations,” in Proc. 3rd Int. Workshop on Network and Operating System Support for Digital Audio and Video, 1992, pp. 289-301.

[14] B. Wolfinger and M. Moran, “A continuous media data transport service and protocol for real-time communication in high-speed networks,” in Proc. 2nd Int. Workshop on Network and Operating System Support for Digital Audio and Video, 1991, pp. 171-182.

[15] G. J. M. Smit and P. J. M. Havinga, “The architecture of rattlesnake: A real-time multimedia network,” in Proc. 3rd Int. Workshop on Network and Operating System Support for Digital Audio and Video, 1992, pp. 15-24.

[16] C. Nicolaou, “An architecture for real-time multimedia communication systems,” IEEE J. Select. Areas Commun., vol. 8, no. 3, Apr. 1990.

[17] T. D. C. Little and A. Ghafoor, “Network considerations for distributed multimedia object composition and communication,” IEEE Network Mag., pp. 32-49, Nov. 1990.

[18] T. D. C. Little and A. Ghafoor, “Multimedia synchronization protocols for broadband integrated services,” IEEE J. Select. Areas Commun., vol. 9, no. 9, pp. 1368-1382, Dec. 1991.

[19] D. Shepherd, D. Hutchison, F. Garcia, and G. Coulson, “Protocol support for distributed multimedia applications,” Computer Commun., vol. 15, no. 6, pp. 359-366, July 1992.

[20] G. K. Wallace, “The JPEG still image compression standard,” ACM Commun., vol. 34, no. 4, pp. 30-44, Apr. 1991.

[21] D. LeGall, “MPEG: A video compression standard for multimedia applications,” ACM Commun., vol. 34, no. 4, pp. 45-58, Apr. 1991.

[22] T. D. C. Little, “Synchronization and storage models for multimedia objects,” IEEE J. Select. Areas Commun., vol. 8, no. 3, pp. 413-427, Apr. 1990.

[23] K. Rothermel and G. Dermler, “Synchronization in joint-viewing environments,” in Proc. 3rd Int. Workshop on Network and Operating System Support for Digital Audio and Video, 1992, pp. 106-118.

[24] D. C. A. Bulterman, “Synchronization of multi-sourced multimedia data for heterogeneous target systems,” in Proc. 3rd Int. Workshop on Network and OS Support for Digital Audio and Video, 1992.

[25] R. Steinmetz and C. Engler, “Human perception of media synchronization,” IBM European Networking Center, Tech. Rep. 43.9310, 1993.

[26] L. F. R. da Costa Carmo, P. de Saqui-Sannes, and J.-P. Courtiat, “Basic synchronization concepts in multimedia systems,” in Proc. 3rd Int. Workshop on Network and OS Support for Digital Audio and Video, 1992, pp. 94-105.

[27] R. Steinmetz, “Synchronization properties in multimedia systems,” IEEE J. Select. Areas Commun., vol. 8, no. 3, pp. 401-412, Apr. 1990.

[28] L. Ehley, “Evaluation of multimedia synchronization techniques,” in Proc. IEEE Int. Conf. Multimedia Computing and Systems, 1994, pp. 514-518.

[29] T. Wahl and K. Rothermel, “Representing time in multimedia systems,” in Proc. IEEE Int. Conf. Multimedia Computing and Systems, 1994, pp. 538-543.

[30] D. C. A. Bulterman and R. van Liere, “Multimedia synchronization and UNIX,” in Proc. 2nd Int. Workshop on Network and OS Support for Digital Audio and Video, 1991, pp. 109-119.

[31] J. Nakajima, M. Yazaki, and H. Matsumoto, “Multimedia/realtime extensions for the Mach operating system,” in Proc. USENIX Conf., 1991, pp. 183-198.

[32] H. Tokuda and T. Nakajima, “Evaluation of real-time synchronization in real-time Mach,” in Proc. USENIX 2nd Mach Symp., 1991.

[33] D. L. Black et al., “Microkernel operating system architecture and Mach,” in Proc. Workshop on Micro-Kernels and Other Kernel Architectures, Apr. 1992.

[34] G. Blakowski, J. Huebel, and U. Langrehr, “Tools for specifying and executing synchronized multimedia presentations,” in Proc. 2nd Int. Workshop on Network and Operating System Support for Digital Audio and Video, 1991.

[35] G. D. Drapeau, “Synchronization in the MAEstro multimedia authoring environment,” in Proc. ACM Multimedia, 1993, pp. 331-339.

[36] S.-B. Wey, C.-W. Shah, and W.-C. Chen, “Synchronization of audio and video signals in multimedia computing systems,” in Proc. Int. Computer Symp., 1992, pp. 665469.

[37] C.-C. Yang, J.-H. Huang, and M. Ouhyoung, “Synchronization of digitized audio and video in multimedia system,” in HD-Media Technology and Application Workshop, 1992.

[38] Y.-W. Lei and M. Ouhyoung, “A new architecture for a TV graphics animation module,” IEEE Trans. Consumer Electronics, vol. 39, no. 4, pp. 797-800, Nov. 1993.

[39] L. A. Rowe and B. C. Smith, “A continuous media player,” in Proc. 3rd Int. Workshop on Network and OS Support for Digital Audio and Video, 1992.

[40] XVIDEO User’s Guide, Parallax Graphics, Inc., 1991.

[41] XVIDEO: Software Developer’s Guide, Parallax Graphics, Inc., 1991.

[42] J. D. Miller, “An OPEN LOOK at UNIX,” A Developer’s Guide to X, M&T Inc., 1990.

[43] A. Nye and T. O’Reilly, X Toolkit Intrinsics Programming Manual, O’Reilly & Associates, Inc., 1990.

[44] X Toolkit Intrinsics Reference Manual, O’Reilly & Associates, Inc., 1990.

[45] SUN Microsystems, Programmer’s Language Guides.

Herng-Yow Chen received the B.S. degree in computer science and information engineering from Tamkang University, Tamshoei, Taiwan, ROC. He has been a Ph.D. student in the Department of Computer Science and Information Engineering at National Taiwan University, Taipei, Taiwan, ROC, since 1992.

His research interests include digital signal processing, image coding, data compression, multimedia synchronization modeling, and multimedia systems.

Ja-Ling Wu (S’85-A’87) was born in Taipei, Taiwan, on November 24, 1956. He received the B.S. degree from Tamkang University, Tamshoei, Taiwan, ROC, in 1979, and the M.S. and Ph.D. degrees from the Tatung Institute of Technology, all in electrical engineering, in 1981 and 1986, respectively.

From 1986 to 1987, he was an Associate Professor of the Electrical Engineering Department at Tatung Institute of Technology, Taipei, Taiwan, ROC. Since 1987, he has been with the Department of Computer Science and Information Engineering, National Taiwan University, where he is presently a Professor. His research interests include neural networks, VLSI signal processing, parallel processing, image coding, algorithm design for DSP, data compression, and multimedia systems. He has published more than 100 technical and conference papers.

Dr. Wu was the recipient of the 1989 Outstanding Youth Medal of China and the Outstanding Research Award sponsored by the National Science Council.