Mining Weighted Linguistic Browsing Patterns from Log Data on Web Servers

Tzung-Pei Hong1, Ming-Jer Chiang2, Shyue-Liang Wang3

1 Department of Electrical Engineering, National University of Kaohsiung

Kaohsiung, 811, Taiwan, R.O.C.

tphong@nuk.edu.tw

2 Institute of Information Engineering, I-Shou University, Kaohsiung, 840, Taiwan, R.O.C.

ndmc893009@yahoo.com.tw

3 Department of Computer Science, New York Institute of Technology, New York 10023, USA

slwang@nyit.edu

Abstract. World-wide-web applications have grown very rapidly and have made a significant impact on computer systems. Among them, web browsing for useful information may be the most common. Due to its tremendous amount of use, efficient and effective web retrieval has become a very important research topic in this field, and web-mining techniques have been developed to achieve this purpose. All web pages are usually assumed to have the same importance in web mining. In real applications, however, different web pages in a web site may have different importance to users. Besides, the mining parameters in most conventional data-mining algorithms are numerical. This paper thus proposes a weighted web-mining technique to discover linguistic browsing patterns from log data on web servers. Web pages are first evaluated by managers with linguistic terms that reflect their importance, and these terms are then transformed into fuzzy sets of weights. Linguistic minimum supports are assigned by users, which is a more natural way of human reasoning. Fuzzy operations, including fuzzy ranking, are then used to find linguistic weighted large sequences. An example is also given to illustrate the proposed approach.

1 Introduction

Knowledge discovery in databases (KDD) has attracted considerable interest in recent years as the amounts of data in many databases have grown tremendously large. KDD refers to the application of nontrivial procedures for identifying effective, coherent, potentially useful, and previously unknown patterns in large databases. The KDD process generally consists of three phases: pre-processing, data mining and post-processing. Among them, data mining plays a critical role in KDD. Depending on the classes of knowledge derived, mining approaches may be classified as finding association rules, classification rules, clustering rules, and sequential patterns, among others.

Recently, world-wide-web applications have grown very rapidly and have made a significant impact on computer systems. Among them, web browsing for useful information may be the most common. Due to its tremendous amount of use, efficient and effective web retrieval has become a very important research topic in this field, and web-mining techniques have been developed to achieve this purpose. Cooley et al. divided web mining into two classes: web-content mining and web-usage mining [8]. Web-content mining focuses on discovering information from sources across the world-wide-web. Web-usage mining, on the other hand, emphasizes the automatic discovery of user access patterns from web servers [9]. Several web-mining approaches for finding sequential patterns and interesting user information on the world-wide-web have been proposed [5-8].

Fuzzy set theory has been used more and more frequently in intelligent systems because of its simplicity and similarity to human reasoning. The theory has been applied in fields such as manufacturing, engineering, diagnosis, and economics, among others. Several fuzzy learning algorithms for inducing rules from given sets of data have been designed and used to good effect in specific domains [2, 4, 10-15].

In the past, all web pages were usually assumed to have the same importance in web mining. In real applications, however, different web pages in a web site may have different importance to users. For example, a web page listing merchandise items may be more important than one with a general introduction, and a web page with expensive merchandise items may be more important than one with cheap items. The different importance of web pages should therefore be considered in web mining. Besides, the minimum support and minimum confidence values in most conventional data-mining algorithms are numerical. In [14], we proposed a weighted data-mining algorithm for association rules using linguistic minimum support and minimum confidence. In this paper, we further extend it to mining weighted sequential browsing patterns from log data on web servers. The minimum support values are given linguistically, which is more natural and understandable for human beings. The browsing sequences of users on web pages are used to analyze the overall retrieval behavior on a web site. The importance of each web page is evaluated by managers or experts as linguistic terms. The proposed approach transforms the linguistic importance of web pages and the linguistic minimum supports into fuzzy sets, and then uses fuzzy operations to find weighted large browsing patterns with linguistic supports.

The remaining parts of this paper are organized as follows. Several mining approaches related to this paper are reviewed in Section 2. Fuzzy sets and operations are introduced in Section 3. The notation used in this paper is defined in Section 4. The proposed web-mining algorithm for weighted browsing patterns with linguistic minimum supports is described in Section 5. An example to illustrate the proposed web-mining algorithm is given in Section 6. Conclusions are finally given in Section 7.

2 Review of Related Mining Approaches

Agrawal and Srikant proposed a mining algorithm to discover sequential patterns from a set of transactions [1]. Their approach consists of five phases. In the first phase, the transactions are sorted by customer ID as the major key and by transaction time as the minor key, which converts the original transactions into customer sequences. In the second phase, the set of all large itemsets is found from the customer sequences by comparing their counts with a predefined support parameter α. This phase is similar to the process of mining association rules. Note that when an itemset occurs more than once in a customer sequence, it is counted only once for that customer sequence. In the third phase, each large itemset is mapped to a contiguous integer, and the original customer sequences are transformed into the mapped integer sequences. In the fourth phase, the set of transformed integer sequences is used to find the large sequences among them. In the fifth phase, the maximal large sequences are derived and output to users.

Besides, Cai et al. proposed weighted mining to reflect the different importance of different items [3]. Each item was assigned a numerical weight given by users. Weighted supports and weighted confidences were then defined to determine interesting association rules. Yue et al. then extended their concepts to fuzzy item vectors [18].

In this paper, we propose a weighted web-mining algorithm to mine browsing patterns with linguistic supports from log data on a web server. Different web pages have different importance, which is evaluated by managers or experts. Fuzzy concepts are used to represent the importance of web pages and the minimum supports. These parameters are expressed in linguistic terms, which are more natural and understandable for human beings.

3 Review of Related Fuzzy Set Concepts

Fuzzy set theory was first proposed by Zadeh and Goguen in 1965 [19][20]. Fuzzy set theory is primarily concerned with quantifying and reasoning using natural language in which words can have ambiguous meanings. This can be thought of as an extension of traditional crisp sets, in which each element must either be in or not in a set.

Triangular membership functions are commonly used and can be denoted by A = (a, b, c), where a ≤ b ≤ c. The abscissa b represents the variable value with the maximal grade of membership, i.e., µA(b) = 1; a and c are the lower and upper bounds of the available area. They are used to reflect the fuzziness of the data.

Besides, fuzzy ranking is used to determine the order of fuzzy sets and is thus important in practical applications. Several fuzzy ranking methods have been proposed in the literature; the ranking method using gravities is introduced here. Note that other ranking methods can also be used in the proposed fuzzy algorithm.

The abscissa of the gravity of a triangular membership function (a, b, c) is (a+b+c)/3. Let A and B be two triangular fuzzy sets. A and B can then be represented as follows: A = (aA, bA, cA), B = (aB, bB, cB). Let g(A) = (aA + bA + cA)/3, and g(B) = (aB + bB + cB)/3.

Using the gravity ranking method, we say A > B if g(A) > g(B).
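The triangular representation and the gravity ranking above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: a fuzzy set is assumed to be a plain tuple (a, b, c), and the helper names `membership`, `gravity` and `ranks_above` are hypothetical.

```python
from typing import Tuple

Tri = Tuple[float, float, float]  # hypothetical alias: triangle (a, b, c), a <= b <= c


def membership(t: Tri, x: float) -> float:
    """Membership grade of x in the triangular fuzzy set t = (a, b, c)."""
    a, b, c = t
    if x == b:
        return 1.0  # peak; also handles degenerate edges a == b or b == c
    if x < a or x > c:
        return 0.0  # outside the support [a, c]
    if x < b:
        return (x - a) / (b - a)  # rising edge (a < b holds here)
    return (c - x) / (c - b)  # falling edge (b < c holds here)


def gravity(t: Tri) -> float:
    """Abscissa of the centre of gravity of the triangle: (a + b + c) / 3."""
    return sum(t) / 3


def ranks_above(x: Tri, y: Tri) -> bool:
    """Gravity ranking: X > Y if and only if g(X) > g(Y)."""
    return gravity(x) > gravity(y)


ordinary = (0.25, 0.5, 0.75)
print(membership(ordinary, 0.375))  # 0.5, halfway up the rising edge
print(ranks_above((0.5, 0.75, 1.0), ordinary))  # True, since 0.75 > 0.5
```

Because the ranking reduces each fuzzy set to one crisp number, any pair of fuzzy sets can be compared, which is the property the algorithm later relies on.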

4 Notation

The notation used in this paper is defined as follows.

$n$: the total number of log records;

$n'$: the total number of browsing sequences;

$m$: the total number of web pages;

$A_i$: the i-th web page, $1 \le i \le m$;

$k$: the total number of managers;

$W_{i,j}$: the transformed fuzzy weight for the importance of Page $A_i$, evaluated by the j-th manager;

$W_i^{ave}$: the fuzzy average weight for the importance of Page $A_i$;

$W_s$: the fuzzy weight for the importance of sequence s;

$count_i$: the count of Page $A_i$;

α: the predefined linguistic minimum support value;

$I_j$: the j-th membership function of importance;

$I^{ave}$: the fuzzy average weight of all possible linguistic terms of importance;

$wsup_i$: the fuzzy weighted support of Page $A_i$;

$minsup$: the fuzzy set transformed from the linguistic minimum support value α;

$wminsup$: the fuzzy weighted set of minimum supports;

$C_r$: the set of candidate sequences with r web pages;

$L_r$: the set of large sequences with r web pages.

5 The Proposed Algorithm for Linguistic Weighted Web Mining

Log data on a web site are used to analyze the browsing patterns on that site. A log schema contains many fields; among them, the fields date, time, client-ip and file-name are used in the mining process. Only the log records whose file names end in .asp, .htm, .html, .jva or .cgi are considered web pages and used to analyze browsing behavior. Other files, such as .jpg and .gif files, are treated as content embedded in the pages and are omitted, which reduces the number of records to be analyzed. The log data to be analyzed are sorted first by client-ip and then by date and time, so that the web pages browsed by each client can be easily found. The importance of each web page is represented as linguistic terms, and a linguistic minimum support value is assigned for the mining process.
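The preprocessing described above (STEPs 1 to 4 of the algorithm) can be sketched in Python as follows. The record layout (a tuple of date, time, client-ip and file name), the use of the literal file name "closing connection" as a session boundary, and the function name `browsing_sequences` are assumptions made for illustration; the sample rows are only the visible rows of Table 1, with the elided rows left out.

```python
from typing import Dict, List, Tuple

# Hypothetical record layout: (date, time, client_ip, file_name), i.e. the four
# fields kept by the algorithm; "closing connection" appears as a file name.
Record = Tuple[str, str, str, str]
PAGE_SUFFIXES = (".asp", ".htm", ".html", ".jva", ".cgi")
CLOSING = "closing connection"


def browsing_sequences(records: List[Record]) -> Dict[int, List[str]]:
    """Sketch of STEPs 1-4: filter, encode clients, order and list pages."""
    # STEP 1: keep web pages and closing-connection markers only.
    kept = [r for r in records
            if r[3] == CLOSING or r[3].lower().endswith(PAGE_SUFFIXES)]
    # Process in chronological order (ISO date/time strings sort correctly).
    kept.sort(key=lambda r: (r[0], r[1]))
    active: Dict[str, int] = {}  # client-ip -> encoded ID of its open session
    sequences: Dict[int, List[str]] = {}
    next_id = 1
    for _date, _time, ip, name in kept:
        if name == CLOSING:
            active.pop(ip, None)  # a later visit from this ip gets a new ID
            continue
        if ip not in active:  # STEP 2: IDs follow first browsing time
            active[ip] = next_id
            sequences[next_id] = []
            next_id += 1
        # STEPs 3-4: pages are appended per client ID in date/time order.
        sequences[active[ip]].append(name)
    return sequences


table1_rows = [  # visible rows of Table 1 only
    ("2001-03-01", "05:39:56", "140.127.194.127", "Inside.htm"),
    ("2001-03-01", "05:40:08", "140.127.194.127", "home-bg1.jpg"),
    ("2001-03-01", "05:40:10", "140.127.194.127", "line1.gif"),
    ("2001-03-01", "05:40:26", "140.127.194.127", "person.asp"),
    ("2001-03-01", "05:40:52", "140.127.194.82", "cheap.htm"),
    ("2001-03-01", "05:40:53", "140.127.194.82", "line1.gif"),
    ("2001-03-01", "05:41:08", "140.127.194.128", "cheap.htm"),
    ("2001-03-01", "05:48:38", "140.127.194.44", "closing connection"),
    ("2001-03-01", "05:48:53", "140.127.194.22", "cheap.htm"),
    ("2001-03-01", "05:50:13", "140.127.194.20", "search.asp"),
    ("2001-03-01", "05:53:33", "140.127.194.20", "closing connection"),
]
print(browsing_sequences(table1_rows))
```

The image requests (.jpg, .gif) drop out in STEP 1, so client 140.127.194.127 ends up with the sequence Inside.htm, person.asp.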

The proposed web-mining algorithm then uses the set of membership functions for importance to transform the managers’ linguistic evaluations of the importance of web pages into fuzzy weights. The fuzzy weights of each web page from different managers are then averaged. The algorithm then calculates the weighted counts of web pages from the browsing sequences according to the average fuzzy weights of the web pages. The given linguistic minimum support value is also transformed into a fuzzy weighted set. All weighted large 1-sequences can thus be found by ranking the fuzzy weighted support of each web page against the fuzzy weighted minimum support. After that, candidate 2-sequences are formed from the weighted large 1-sequences, and the same procedure is used to find all weighted large 2-sequences. This procedure is repeated until all weighted large sequences have been found. Details of the proposed mining algorithm are described below.

The weighted web-mining algorithm:

INPUT: A set of n web-log records, a set of m web pages with their importance evaluated by k managers, two sets of membership functions respectively for importance and minimum support, and a predefined linguistic minimum support value α.

OUTPUT: A set of weighted linguistic browsing patterns.

STEP 1: Select from the log data the records whose file names end in .asp, .htm, .html, .jva or .cgi, together with the closing-connection records; keep only the fields date, time, client-ip and file-name.

STEP 2: For convenience, transform the client-ips into contiguous integers (called encoded client IDs) according to their first browsing time. Note that a client-ip whose records are separated by a closing connection is given two different integers, one for each connection.

STEP 3: Sort the resulting log data first by encoded client ID and then by date and time.

STEP 4: Form a browsing sequence for each client ID by sequentially listing the web pages.

STEP 5: Transform each linguistic term of importance for web page $A_i$, $1 \le i \le m$, evaluated by the j-th manager, into a fuzzy set $W_{i,j}$ of weights, using the given membership functions of the importance of web pages.

STEP 6: Calculate the fuzzy average weight $W_i^{ave}$ of each web page $A_i$ by fuzzy addition as:

$$W_i^{ave} = \frac{1}{k} \sum_{j=1}^{k} W_{i,j}.$$

STEP 7: Count the occurrences ($count_i$) of each web page $A_i$ in the set of browsing sequences; if $A_i$ appears more than once in a browsing sequence, it is counted only once for that sequence. Set the support ($support_i$) of $A_i$ as $count_i / n'$, where $n'$ is the number of browsing sequences from STEP 4.

STEP 8: Calculate the fuzzy weighted support $wsup_i$ of each web page $A_i$ as:

$$wsup_i = \frac{count_i \times W_i^{ave}}{n'}.$$

STEP 9: Transform the given linguistic minimum support value α into a fuzzy set $minsup$, using the given membership functions of minimum supports.

STEP 10: Calculate the fuzzy weighted set ($wminsup$) of the given minimum support value as:

$$wminsup = minsup \times g(I^{ave}), \qquad I^{ave} = \frac{1}{h} \sum_{j=1}^{h} I_j,$$

where $g(I^{ave})$ is the gravity of $I^{ave}$, $I_j$ is the j-th membership function of importance, and $h$ is the number of linguistic terms of importance. $I^{ave}$ represents the fuzzy average weight of all possible linguistic terms of importance.

STEP 11: Check whether the weighted support ($wsup_i$) of each web page $A_i$ is larger than or equal to the fuzzy weighted minimum support ($wminsup$) by fuzzy ranking. Any fuzzy ranking approach can be applied here as long as it can generate a crisp rank. If $wsup_i$ is equal to or greater than $wminsup$, put $A_i$ in the set of large 1-sequences $L_1$.

STEP 12: Set r = 1, where r is used to represent the number of web pages kept in the current large sequences.

STEP 13: Generate the candidate set $C_{r+1}$ from $L_r$ in a way similar to that in the AprioriAll algorithm [1]. That is, the algorithm first joins $L_r$ with $L_r$ under the condition that r-1 web pages in the two sequences are the same and in the same order; different permutations represent different candidates. The algorithm then keeps in $C_{r+1}$ only the sequences all of whose subsequences of length r exist in $L_r$.

STEP 14: Do the following substeps for each newly formed (r+1)-sequence s with web browsing pattern $(s_1 \rightarrow s_2 \rightarrow \cdots \rightarrow s_{r+1})$ in $C_{r+1}$:

(a) Calculate the fuzzy weight $W_s$ of sequence s as:

$$W_s = W_{s_1}^{ave} \wedge W_{s_2}^{ave} \wedge \cdots \wedge W_{s_{r+1}}^{ave},$$

where $W_{s_i}^{ave}$ is the fuzzy average weight of web page $s_i$, calculated in STEP 6. If the minimum operation is used for the intersection, then:

$$W_s = \min_{i=1}^{r+1} W_{s_i}^{ave}.$$

(b) Count the occurrences ($count_s$) of sequence s in the set of browsing sequences; if s appears more than once in a browsing sequence, it is counted only once for that sequence. Set the support ($support_s$) of s as $count_s / n'$, where $n'$ is the number of browsing sequences.

(c) Calculate the weighted support $wsup_s$ of sequence s as:

$$wsup_s = \frac{count_s \times W_s}{n'}.$$

(d) Check whether the weighted support $wsup_s$ of sequence s is greater than or equal to the fuzzy weighted minimum support $wminsup$ by fuzzy ranking. If $wsup_s$ is greater than or equal to $wminsup$, put s in the set of large (r+1)-sequences $L_{r+1}$.

STEP 15: If $L_{r+1}$ is empty, go to the next step; otherwise, set r = r + 1 and repeat STEPs 13 to 15.

STEP 16: For each large r-sequence s (r > 1) with weighted support $wsup_s$, find by fuzzy ranking the linguistic minimum support region $S_i$ with $wminsup_i \le wsup_s < wminsup_{i+1}$, where

$$wminsup_i = minsup_i \times g(I^{ave})$$

and $minsup_i$ is the given membership function for $S_i$. Output sequence s with linguistic support value $S_i$.

The linguistic sequential browsing patterns output after Step 16 can serve as meta-knowledge concerning the given log data.
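STEPs 13 and 14(b) leave two details open: the exact join rule and what it means for a sequence to "appear" in a browsing sequence. The Python sketch below fixes both by assumption. It reads the join condition as AprioriAll's rule that the first r-1 pages of the two joined sequences coincide, and it treats appearance as ordered containment with gaps allowed, which agrees with the counts in Table 6 (for instance, (B, C) is counted in the sequence B, E, D, C). The function names are hypothetical.

```python
from itertools import combinations
from typing import Iterable, List, Set, Tuple

Seq = Tuple[str, ...]  # a browsing pattern such as ("B", "C")


def candidates(large: Set[Seq]) -> Set[Seq]:
    """STEP 13 sketch: AprioriAll-style join of L_r with itself, then prune."""
    if not large:
        return set()
    r = len(next(iter(large)))
    # Join: p and q agree on their first r-1 pages; p == q is allowed,
    # so patterns with repeated pages such as ("B", "B") can arise.
    joined = {p + q[-1:] for p in large for q in large if p[:-1] == q[:-1]}
    # Prune: every length-r subsequence of a candidate must itself be large.
    return {c for c in joined if all(s in large for s in combinations(c, r))}


def contains(sequence: Iterable[str], pattern: Seq) -> bool:
    """True if pattern occurs in order (gaps allowed) within sequence."""
    pages = iter(sequence)
    return all(page in pages for page in pattern)  # `in` consumes the iterator


def count(pattern: Seq, sequences: List[List[str]]) -> int:
    """STEP 14(b): number of browsing sequences containing pattern (once each)."""
    return sum(contains(seq, pattern) for seq in sequences)


TABLE2 = [["B", "E", "D", "C"], ["D", "B", "D"], ["A", "D"],
          ["B", "C", "E", "B", "C"], ["D", "B", "C"], ["D", "C", "E", "B"]]
C2 = candidates({("B",), ("C",), ("E",)})
C3 = candidates({("B", "C"), ("B", "E"), ("E", "B")})
print(len(C2), sorted(C3), count(("B", "C"), TABLE2))
```

With L1 = {B, C, E} the sketch yields the nine ordered pairs of C2 used in Section 6, and with the three large 2-sequences found there it returns an empty C3, because each joined 3-sequence contains a non-large 2-subsequence such as (C, E) or (B, B).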

6 An Example

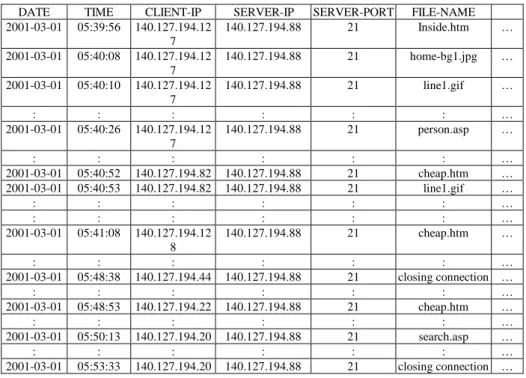

In this section, a simple example is given to illustrate how the proposed web-mining algorithm generates weighted linguistic sequential browsing patterns describing clients' browsing behavior from the log data on a web server. Assume part of the log data is as shown in Table 1.

Table 1. A part of the log data used in the example

| DATE | TIME | CLIENT-IP | SERVER-IP | SERVER-PORT | FILE-NAME | … |
|---|---|---|---|---|---|---|
| 2001-03-01 | 05:39:56 | 140.127.194.127 | 140.127.194.88 | 21 | Inside.htm | … |
| 2001-03-01 | 05:40:08 | 140.127.194.127 | 140.127.194.88 | 21 | home-bg1.jpg | … |
| 2001-03-01 | 05:40:10 | 140.127.194.127 | 140.127.194.88 | 21 | line1.gif | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:40:26 | 140.127.194.127 | 140.127.194.88 | 21 | person.asp | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:40:52 | 140.127.194.82 | 140.127.194.88 | 21 | cheap.htm | … |
| 2001-03-01 | 05:40:53 | 140.127.194.82 | 140.127.194.88 | 21 | line1.gif | … |
| : | : | : | : | : | : | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:41:08 | 140.127.194.128 | 140.127.194.88 | 21 | cheap.htm | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:48:38 | 140.127.194.44 | 140.127.194.88 | 21 | closing connection | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:48:53 | 140.127.194.22 | 140.127.194.88 | 21 | cheap.htm | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:50:13 | 140.127.194.20 | 140.127.194.88 | 21 | search.asp | … |
| : | : | : | : | : | : | … |
| 2001-03-01 | 05:53:33 | 140.127.194.20 | 140.127.194.88 | 21 | closing connection | … |

Each record in the log data includes the fields date, time, client-ip, server-ip, server-port and file-name, among others. Only one file name is contained in each record. For example, the user at client-ip 140.127.194.127 browsed the file inside.htm at 05:39:56 on March 1st, 2001. For the log data shown in Table 1, the proposed web-mining algorithm proceeds as follows.

The records whose file names end in .asp, .htm, .html, .jva or .cgi, together with the closing-connection records, are selected for mining. Only the four fields date, time, client-ip and file-name are kept. The values of the field client-ip are then transformed into contiguous integers according to each client’s first browsing time. The resulting log data are then sorted first by encoded client ID and then by date and time. The web pages browsed by each client are listed as a browsing sequence. For convenience, simple symbols are used here to represent web pages. Assume the browsing sequences obtained from the log data in Table 1 after STEP 4 are as shown in Table 2.

Table 2. The browsing sequences from the log data

| CLIENT ID | BROWSING SEQUENCE |
|---|---|
| 1 | B, E, D, C |
| 2 | D, B, D |
| 3 | A, D |
| 4 | B, C, E, B, C |
| 5 | D, B, C |
| 6 | D, C, E, B |

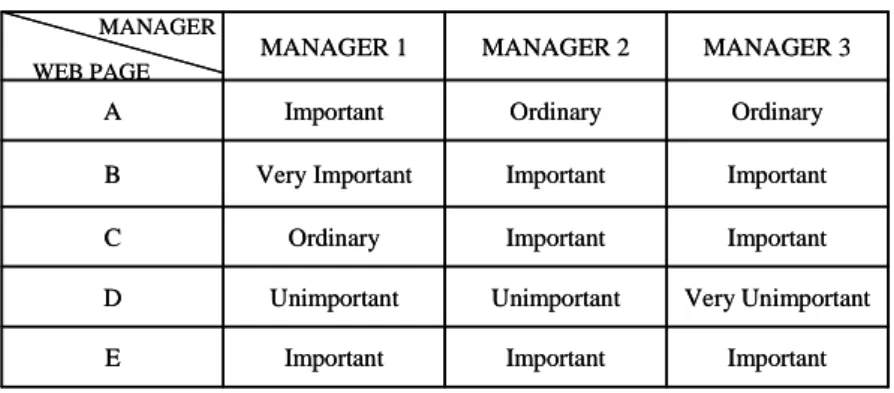

Assume the importance of the five web pages (A, B, C, D, and E) is evaluated by three managers as shown in Table 3. Also assume the membership functions for the importance of the web pages are given in Figure 1.

Table 3. The importance of the web pages evaluated by three managers

| WEB PAGE | MANAGER 1 | MANAGER 2 | MANAGER 3 |
|---|---|---|---|
| A | Important | Ordinary | Ordinary |
| B | Very Important | Important | Important |
| C | Ordinary | Important | Important |
| D | Unimportant | Unimportant | Very Unimportant |
| E | Important | Important | Important |

Figure 1. The membership functions of importance

In Figure 1, the importance of the web pages is divided into five fuzzy regions: Very Unimportant, Unimportant, Ordinary, Important and Very Important. Each fuzzy region is represented by a membership function. The membership functions in Figure 1 can be represented as follows:

Very Unimportant (VU): (0, 0, 0.25), Unimportant (U): (0, 0.25, 0.5), Ordinary (O): (0.25, 0.5, 0.75), Important (I): (0.5, 0.75, 1), and Very Important (VI): (0.75, 1, 1).

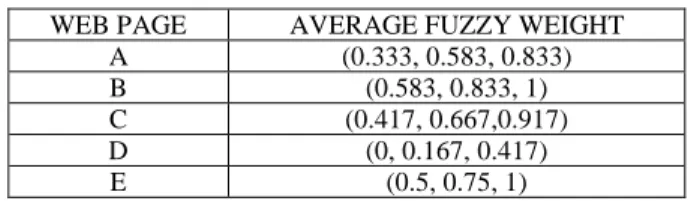

The linguistic terms for the importance of the web pages given in Table 3 are transformed into fuzzy sets by the membership functions in Figure 1. For example, Page A is evaluated as Important by Manager 1, so this evaluation is transformed into the triangular fuzzy set (0.5, 0.75, 1) of weights. The average weights of the web pages are then calculated by fuzzy addition, with the results shown in Table 4.
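STEPs 5 and 6 for this example can be reproduced with a short Python sketch. Fuzzy addition of triangular fuzzy sets adds their corresponding components, and division by the crisp k scales each component, so the average is taken componentwise. The sketch uses `fractions.Fraction` to keep the values exact; the dictionary names are illustrative and the evaluations are those of Table 3.

```python
from fractions import Fraction as F

# Figure 1 membership functions of importance, as listed in the text.
IMPORTANCE = {
    "Very Unimportant": (F(0), F(0), F(1, 4)),
    "Unimportant": (F(0), F(1, 4), F(1, 2)),
    "Ordinary": (F(1, 4), F(1, 2), F(3, 4)),
    "Important": (F(1, 2), F(3, 4), F(1)),
    "Very Important": (F(3, 4), F(1), F(1)),
}

# Table 3: evaluations by Managers 1, 2 and 3 for each web page.
EVALUATIONS = {
    "A": ["Important", "Ordinary", "Ordinary"],
    "B": ["Very Important", "Important", "Important"],
    "C": ["Ordinary", "Important", "Important"],
    "D": ["Unimportant", "Unimportant", "Very Unimportant"],
    "E": ["Important", "Important", "Important"],
}


def average_weight(terms):
    """STEPs 5-6: map terms to triangles W_ij, then (1/k) * componentwise sum."""
    triangles = [IMPORTANCE[t] for t in terms]
    k = len(triangles)
    return tuple(sum(component) / k for component in zip(*triangles))


W_AVE = {page: average_weight(terms) for page, terms in EVALUATIONS.items()}
for page, w in W_AVE.items():
    print(page, tuple(round(float(x), 3) for x in w))
```

Rounded to three decimals, the printed triangles match the entries of Table 4; for Page A the exact average is (1/3, 7/12, 5/6).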

Table 4. The average fuzzy weights of all the web pages

| WEB PAGE | AVERAGE FUZZY WEIGHT |
|---|---|
| A | (0.333, 0.583, 0.833) |
| B | (0.583, 0.833, 1) |
| C | (0.417, 0.667, 0.917) |
| D | (0, 0.167, 0.417) |
| E | (0.5, 0.75, 1) |

The number of occurrences of each web page is counted from the browsing sequences in Table 2. If a web page occurs more than once in a sequence, it is counted only once for that sequence. The weighted support of each web page is then calculated. Take Page A as an example. The average fuzzy weight of A is (0.333, 0.583, 0.833) and its count is 1. Its weighted support is then (0.333, 0.583, 0.833) × 1/6, which is (0.056, 0.097, 0.139). The results for all the web pages are shown in Table 5.
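STEPs 7 and 8 can be checked in the same way. The Python sketch below (hypothetical names) encodes the Table 2 sequences and the exact fractions behind Table 4, counts each page once per sequence, and scales each triangle by count_i / n'.

```python
from fractions import Fraction as F

# Table 2 browsing sequences (n' = 6).
SEQUENCES = [["B", "E", "D", "C"], ["D", "B", "D"], ["A", "D"],
             ["B", "C", "E", "B", "C"], ["D", "B", "C"], ["D", "C", "E", "B"]]
# Exact average weights that round to the Table 4 entries.
W_AVE = {
    "A": (F(1, 3), F(7, 12), F(5, 6)),
    "B": (F(7, 12), F(5, 6), F(1)),
    "C": (F(5, 12), F(2, 3), F(11, 12)),
    "D": (F(0), F(1, 6), F(5, 12)),
    "E": (F(1, 2), F(3, 4), F(1)),
}


def page_count(page, sequences):
    """STEP 7: number of browsing sequences containing the page (once each)."""
    return sum(page in seq for seq in sequences)


def weighted_support(page, sequences):
    """STEP 8: wsup_i = count_i * W_i^ave / n' (a crisp scalar times a triangle)."""
    scale = F(page_count(page, sequences), len(sequences))
    return tuple(scale * x for x in W_AVE[page])


for page in sorted(W_AVE):
    ws = weighted_support(page, SEQUENCES)
    print(page, page_count(page, SEQUENCES), tuple(round(float(x), 3) for x in ws))
```

The counts come out as 1, 5, 4, 5 and 3 for Pages A to E, and the rounded weighted supports match Table 5.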

Table 5. The weighted supports of all the web pages

| WEB PAGE | WEIGHTED SUPPORT |
|---|---|
| A | (0.056, 0.097, 0.139) |
| B | (0.486, 0.694, 0.833) |
| C | (0.278, 0.444, 0.611) |
| D | (0, 0.139, 0.347) |
| E | (0.25, 0.375, 0.5) |

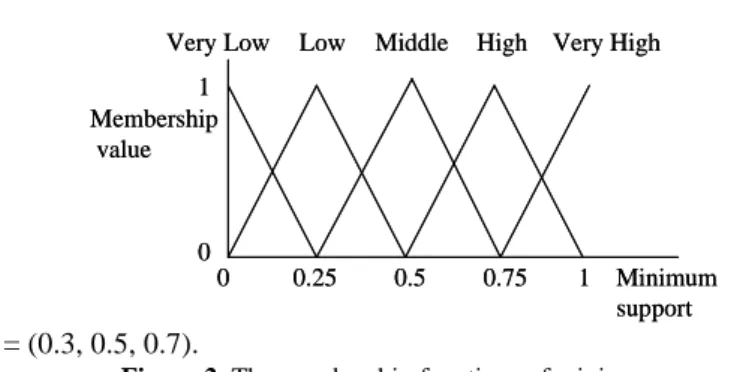

The given linguistic minimum support value is transformed into a fuzzy set of minimum supports. Assume the membership functions for minimum supports are given in Figure 2, and assume the given linguistic minimum support value is “Middle”. It is then transformed into the fuzzy set of minimum supports (0.25, 0.5, 0.75) according to the membership functions in Figure 2. The fuzzy average weight of all possible linguistic terms of importance in Figure 1 is first calculated as:

Iave = [(0, 0, 0.25)+(0, 0.25, 0.5)+(0.25, 0.5, 0.75)+(0.5, 0.75, 1)+(0.75, 1, 1)]/5

= (0.3, 0.5, 0.7).

Figure 2. The membership functions of minimum supports

The gravity of Iave is then (0.3 + 0.5 + 0.7)/3, which is 0.5. The fuzzy weighted set of minimum supports for “Middle” is then (0.25, 0.5, 0.75) × 0.5, which is (0.125, 0.25, 0.375). The weighted support of each web page is then compared with the fuzzy weighted minimum support by fuzzy ranking. Any fuzzy ranking approach can be applied here as long as it can generate a crisp rank; the gravity ranking approach is adopted in this example. Take Page B as an example. The gravity of the weighted support for Page B is (0.486 + 0.694 + 0.833)/3, which is 0.671, and the gravity of the fuzzy weighted minimum support is (0.125 + 0.25 + 0.375)/3, which is 0.25. Since 0.671 > 0.25, B is a weighted large 1-sequence. Similarly, C and E are weighted large 1-sequences, whereas A (gravity 0.097) and D (gravity 0.162) are not. These 1-sequences are put in L1.
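The arithmetic of STEPs 10 and 11 for this example can be checked with the Python sketch below. It uses `fractions.Fraction` so that no rounding enters the comparisons, hard-codes the Table 5 weighted supports as the exact fractions they round from, and applies gravity ranking with "≥" as in STEP 11; the variable names are illustrative.

```python
from fractions import Fraction as F

# Figure 1 importance terms VU, U, O, I, VI (used only to build I^ave).
IMPORTANCE_TERMS = [
    (F(0), F(0), F(1, 4)),
    (F(0), F(1, 4), F(1, 2)),
    (F(1, 4), F(1, 2), F(3, 4)),
    (F(1, 2), F(3, 4), F(1)),
    (F(3, 4), F(1), F(1)),
]
MIDDLE = (F(1, 4), F(1, 2), F(3, 4))  # minsup for the linguistic value "Middle"
# Table 5 weighted supports, as exact fractions before rounding.
WSUP = {
    "A": (F(1, 18), F(7, 72), F(5, 36)),
    "B": (F(35, 72), F(25, 36), F(5, 6)),
    "C": (F(5, 18), F(4, 9), F(11, 18)),
    "D": (F(0), F(5, 36), F(25, 72)),
    "E": (F(1, 4), F(3, 8), F(1, 2)),
}


def gravity(t):
    """Gravity of a triangular fuzzy set (a, b, c)."""
    return sum(t) / 3


# STEP 10: I^ave is the componentwise mean of all importance terms.
n_terms = len(IMPORTANCE_TERMS)
i_ave = tuple(sum(c) / n_terms for c in zip(*IMPORTANCE_TERMS))
wminsup = tuple(x * gravity(i_ave) for x in MIDDLE)

# STEP 11 with gravity ranking: keep pages ranked at or above wminsup.
L1 = sorted(p for p, ws in WSUP.items() if gravity(ws) >= gravity(wminsup))
print([float(x) for x in i_ave], [float(x) for x in wminsup], L1)
```

The sketch reproduces Iave = (0.3, 0.5, 0.7), the weighted minimum support (0.125, 0.25, 0.375) and L1 = {B, C, E}.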

C2 is then generated from L1 as follows: (B, B), (B, C), (B, E), (C, B), (C, C), (C, E), (E, B), (E, C) and (E, E). The fuzzy weights of all the 2-sequences in C2 are then calculated, using the minimum operator for intersection. Take the 2-sequence (B, C) as an example. Its fuzzy weight is min((0.583, 0.833, 1), (0.417, 0.667, 0.917)), which is (0.417, 0.667, 0.917). The count of each candidate 2-sequence is found from the browsing sequences. If a 2-sequence occurs more than once in a browsing sequence, it is counted only once for that browsing sequence. The results for this example are shown in Table 6.
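The generation of C2 and the counts of Table 6 can be reproduced with the following Python sketch. It assumes that a 2-sequence "appears" in a browsing sequence when its pages occur in order, not necessarily adjacently, and it applies the minimum operator componentwise to the triangles, as in the (B, C) computation above; the names are hypothetical.

```python
from itertools import product

# Table 2 browsing sequences and the large 1-sequences L1 = {B, C, E}.
SEQUENCES = [["B", "E", "D", "C"], ["D", "B", "D"], ["A", "D"],
             ["B", "C", "E", "B", "C"], ["D", "B", "C"], ["D", "C", "E", "B"]]
L1 = ["B", "C", "E"]
# Table 4 average weights of the large pages (rounded values from the text).
W_AVE = {"B": (0.583, 0.833, 1.0), "C": (0.417, 0.667, 0.917), "E": (0.5, 0.75, 1.0)}


def contains(sequence, pattern):
    """Ordered containment with gaps allowed; counted once per sequence."""
    pages = iter(sequence)
    return all(page in pages for page in pattern)


# For r = 1 the join condition is vacuous, so C2 is every ordered pair.
C2 = list(product(L1, repeat=2))
counts = {c: sum(contains(s, c) for s in SEQUENCES) for c in C2}
# Minimum operator for intersection, applied componentwise as in the text.
weights = {c: tuple(map(min, W_AVE[c[0]], W_AVE[c[1]])) for c in C2}

for c in C2:
    print(c, counts[c], weights[c])
```

Counting with gaps allowed is what makes (B, C) reach a count of 3: it is found in B, E, D, C as well as in B, C, E, B, C and D, B, C.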

Table 6. The counts of the 2-sequences

| 2-SEQUENCE | COUNT |
|---|---|
| (B, B) | 1 |
| (B, C) | 3 |
| (B, E) | 2 |
| (C, B) | 2 |
| (C, C) | 1 |
| (C, E) | 2 |
| (E, B) | 2 |
| (E, C) | 2 |
| (E, E) | 0 |

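The weighted support of each candidate 2-sequence is then calculated. Take the sequence (B, C) as an example. The minimum fuzzy weight of (B, C) is (0.417, 0.667, 0.917) and its count is 3. Its weighted support is then (0.417, 0.667, 0.917) × 3/6, which is (0.208, 0.333, 0.458). The weighted supports of the other candidate 2-sequences are calculated similarly. In this example, (B, C), (B, E) and (E, B) are found to be weighted large 2-sequences in STEP 14; the weighted supports of (B, E) and (E, B) are both (0.167, 0.25, 0.333), whose gravity of 0.25 just equals that of the fuzzy weighted minimum support. There are no large 3-sequences in the example, since every candidate 3-sequence generated from these three contains a 2-subsequence that is not large.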
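The large 2-sequences can be confirmed with exact arithmetic. The Python sketch below (hypothetical names) recomputes each weighted support from the Table 6 counts and the unrounded Table 4 weights. Exact fractions matter here: (B, E) and (E, B) have gravity exactly 0.25, equal to that of wminsup, so a floating-point rounding error could flip the "≥" test.

```python
from fractions import Fraction as F

N_SEQ = 6  # n', the number of browsing sequences in Table 2
# Exact average weights behind Table 4 for the large pages B, C and E.
W_AVE = {"B": (F(7, 12), F(5, 6), F(1)),
         "C": (F(5, 12), F(2, 3), F(11, 12)),
         "E": (F(1, 2), F(3, 4), F(1))}
COUNTS = {"BB": 1, "BC": 3, "BE": 2, "CB": 2, "CC": 1,  # Table 6
          "CE": 2, "EB": 2, "EC": 2, "EE": 0}
WMINSUP = (F(1, 8), F(1, 4), F(3, 8))  # "Middle" weighted by g(I^ave) = 0.5


def gravity(t):
    """Gravity of a triangular fuzzy set (a, b, c)."""
    return sum(t) / 3


def wsup(seq):
    """STEP 14(a) and (c): W_s by componentwise min, scaled by count_s / n'."""
    w_s = tuple(map(min, *(W_AVE[p] for p in seq)))
    return tuple(F(COUNTS[seq], N_SEQ) * x for x in w_s)


# STEP 14(d): gravity ranking with ">=" against the weighted minimum support.
L2 = [s for s in COUNTS if gravity(wsup(s)) >= gravity(WMINSUP)]
print(L2)
```

The result is the three patterns BC, BE and EB; the next best candidates, (C, B), (C, E) and (E, C), all have gravity 2/9 ≈ 0.222 and fall short.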

The linguistic support value is then found for each large r-sequence s (r > 1). Take the sequential browsing pattern (E, B) as an example. Its weighted support is (0.167, 0.25, 0.333). The membership function for the linguistic minimum support region “Middle” is (0.25, 0.5, 0.75), and that for “High” is (0.5, 0.75, 1). The fuzzy weighted minimum supports for these two regions are thus (0.125, 0.25, 0.375) and (0.25, 0.375, 0.5), respectively. Since (0.125, 0.25, 0.375) ≤ (0.167, 0.25, 0.333) < (0.25, 0.375, 0.5) by fuzzy ranking (the gravities are 0.25, 0.25 and 0.375), the linguistic support value for sequence (E, B) is “Middle”. The linguistic supports of the other two large 2-sequences can be derived similarly. All three large sequential browsing patterns are then output as:

1. B -> C with a middle support;

2. B -> E with a middle support;

3. E -> B with a middle support.

The three linguistic sequential patterns above are thus output as the meta-knowledge concerning the given log data.
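STEP 16 for this example can be sketched as follows. Only the "Middle" and "High" membership functions of minimum support are stated in the text, so the "Very Low", "Low" and "Very High" triangles below are assumptions that mirror the spacing of Figure 1; they do not affect the three patterns found here. The function name `linguistic_support` is hypothetical.

```python
from fractions import Fraction as F

G_IAVE = F(1, 2)  # gravity of I^ave in the example
# Only "Middle" and "High" are given in the text; the other three triangles
# are ASSUMED to follow Figure 1's spacing (hypothetical values).
MINSUP_TERMS = [  # ordered by increasing gravity
    ("Very Low", (F(0), F(0), F(1, 4))),
    ("Low", (F(0), F(1, 4), F(1, 2))),
    ("Middle", (F(1, 4), F(1, 2), F(3, 4))),
    ("High", (F(1, 2), F(3, 4), F(1))),
    ("Very High", (F(3, 4), F(1), F(1))),
]


def gravity(t):
    """Gravity of a triangular fuzzy set (a, b, c)."""
    return sum(t) / 3


def linguistic_support(wsup_s):
    """STEP 16: pick S_i with wminsup_i <= wsup_s < wminsup_{i+1} by gravity."""
    label = None  # stays None if wsup_s ranks below every region
    for name, minsup in MINSUP_TERMS:
        wminsup = tuple(x * G_IAVE for x in minsup)
        if gravity(wminsup) <= gravity(wsup_s):
            label = name  # regions rise in order, so the last hit is S_i
    return label


LARGE = {"B -> C": (F(5, 24), F(1, 3), F(11, 24)),
         "B -> E": (F(1, 6), F(1, 4), F(1, 3)),
         "E -> B": (F(1, 6), F(1, 4), F(1, 3))}
for pattern, ws in LARGE.items():
    print(pattern, "with a", linguistic_support(ws).lower(), "support")
```

Each pattern has gravity between 0.25 (weighted "Middle") and 0.375 (weighted "High"), so all three are labelled with a middle support, as in the output list above.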

7 Conclusion

In this paper, we have proposed a new weighted web-mining algorithm, which can process web-server logs to discover useful sequential browsing patterns with linguistic supports. The web pages are evaluated by managers as linguistic terms, which are then transformed and averaged as fuzzy sets of weights. Fuzzy operations including fuzzy ranking are used to find weighted sequential browsing patterns. Compared to previous mining approaches, the proposed one has linguistic inputs and outputs, which are more natural and understandable for human beings.

Although the proposed method works well in weighted web mining from log data and can effectively manage linguistic minimum supports, it is just a beginning, and much work remains to be done in this field. Our method assumes that the membership functions are known in advance. In [12, 13, 15], we proposed some fuzzy learning methods to automatically derive membership functions. In the future, we will attempt to dynamically adjust the membership functions in the proposed web-mining algorithm to avoid the bottleneck of membership function acquisition.

Acknowledgement

This research was supported by the National Science Council of the Republic of China under contract NSC94-2213-E-390-005.

References

[1] R. Agrawal and R. Srikant, “Mining Sequential Patterns,” The Eleventh International Conference on Data Engineering, 1995, pp. 3-14.

[2] A. F. Blishun, “Fuzzy learning models in expert systems,” Fuzzy Sets and Systems, Vol. 22, 1987, pp. 57-70.

[3] C. H. Cai, W. C. Fu, C. H. Cheng and W. W. Kwong, “Mining association rules with weighted items,” The International Database Engineering and Applications Symposium, 1998, pp. 68-77.

[4] L. M. de Campos and S. Moral, “Learning rules for a fuzzy inference model,” Fuzzy Sets and Systems, Vol. 59, 1993, pp. 247-257.

[5] M. S. Chen, J. S. Park and P. S. Yu, “Efficient Data Mining for Path Traversal Patterns,” IEEE Transactions on Knowledge and Data Engineering, Vol. 10, 1998, pp. 209-221.

[6] L. Chen and K. Sycara, “WebMate: A Personal Agent for Browsing and Searching,” The Second International Conference on Autonomous Agents, ACM, 1998.

[7] E. Cohen, B. Krishnamurthy and J. Rexford, “Efficient Algorithms for Predicting Requests to Web Servers,” The Eighteenth IEEE Annual Joint Conference on Computer and Communications Societies, Vol. 1, 1999, pp. 284-293.

[8] R. Cooley, B. Mobasher and J. Srivastava, “Grouping Web Page References into Transactions for Mining World Wide Web Browsing Patterns,” Knowledge and Data Engineering Exchange Workshop, 1997, pp. 2-9.

[9] R. Cooley, B. Mobasher and J. Srivastava, “Web Mining: Information and Pattern Discovery on the World Wide Web,” Ninth IEEE International Conference on Tools with Artificial Intelligence, 1997, pp. 558-567.

[10] M. Delgado and A. Gonzalez, “An inductive learning procedure to identify fuzzy systems,” Fuzzy Sets and Systems, Vol. 55, 1993, pp. 121-132.

[11] A. Gonzalez, “A learning methodology in uncertain and imprecise environments,” International Journal of Intelligent Systems, Vol. 10, 1995, pp. 57-371.

[12] T. P. Hong and J. B. Chen, “Finding relevant attributes and membership functions,” Fuzzy Sets and Systems, Vol. 103, No. 3, 1999, pp. 389-404.

[13] T. P. Hong and J. B. Chen, “Processing individual fuzzy attributes for fuzzy rule induction,” Fuzzy Sets and Systems, Vol. 112, No. 1, 2000, pp. 127-140.

[14] T. P. Hong, M. J. Chiang and S. L. Wang, “Mining from quantitative data with linguistic minimum supports and confidences,” The 2002 IEEE International Conference on Fuzzy Systems, Honolulu, Hawaii, 2002, pp. 494-499.

[15] T. P. Hong and C. Y. Lee, “Induction of fuzzy rules and membership functions from training examples,” Fuzzy Sets and Systems, Vol. 84, 1996, pp. 33-47.

[16] T. P. Hong and S. S. Tseng, “A generalized version space learning algorithm for noisy and uncertain data,” IEEE Transactions on Knowledge and Data Engineering, Vol. 9, No. 2, 1997, pp. 336-340.

[17] J. Rives, “FID3: fuzzy induction decision tree,” The First International Symposium on Uncertainty, Modeling and Analysis, 1990, pp. 457-462.

[18] S. Yue, E. Tsang, D. Yeung and D. Shi, “Mining fuzzy association rules with weighted items,” The IEEE International Conference on Systems, Man and Cybernetics, 2000, pp. 1906-1911.

[19] L. A. Zadeh, “Fuzzy logic,” IEEE Computer, 1988, pp. 83-93.