ELSEVIER Fuzzy Sets and Systems 71 (1995) 345-357


A fuzzy neural network for rule acquiring on fuzzy control systems*

J.J. Shann*, H.C. Fu

Department of Computer Science and Information Engineering, National Chiao-Tung University, Hsinchu, Taiwan 300, ROC

Abstract

This paper presents a layer-structured fuzzy neural network (FNN) for learning rules of fuzzy-logic control systems. Initially, the FNN is constructed to contain all the possible fuzzy rules. We propose a two-phase learning procedure for this network. The first phase is an error-backprop (EBP) training phase, and the second phase is a rule-pruning phase. Since some of the node functions in the FNN have competitive characteristics, the EBP training converges quickly. After the training, a pruning process is performed to delete redundant rules and obtain a concise fuzzy rule base. Simulation results show that for the truck backer-upper control problem, the training phase learns the knowledge of fuzzy rules in several dozen epochs with an error rate of less than 1%. Moreover, the fuzzy rule base generated by the pruning process contains only 14% of the initial fuzzy rules and is identical to the target fuzzy rule base.

Keywords: Fuzzy logic control; Neural networks; Learning algorithms

1. Introduction

During the past decade, a variety of applications of fuzzy set theory [16] have been implemented in various fields. One of the most important applications is fuzzy logic controllers [13, 7]. Meanwhile, interest in artificial neural networks has grown rapidly after two decades of eclipse. Many network topologies and learning methodologies have been explored. Among these learning methodologies, the backpropagation algorithm [15, 9], i.e., gradient descent supervised learning, has had an enormous influence in research on neural networks.

This work was supported in part by National Science Council of ROC, under grant No: NSC 84-2213-E009-039.

* Corresponding author.

Recently, more and more research has been published concerning the integration of fuzzy systems and neural networks, with the goal of combining the human inference style and natural language description of fuzzy systems with the learning ability and parallel processing of neural networks [1, 3-5, 8, 14]. Most of this research has been proposed for or can be applied to the knowledge learning of a fuzzy logic controller.

In [8], a multilayer feedforward connectionist model designed for fuzzy logic controllers and decision-making systems was presented. A hybrid two-step learning scheme that combined self-organized and supervised learning algorithms for learning fuzzy logic rules and membership functions was developed. Some heuristics for rule reduction and combination were provided.

0165-0114/95/$09.50 © 1995 - Elsevier Science B.V. All rights reserved

In [3, 4], adaptive fuzzy associative memories (AFAM) were proposed to integrate a neural network and fuzzy logic. Unsupervised differential competitive learning (DCL) and product-space clustering adaptively generated fuzzy rules from training samples.

In [14], fuzzy systems were viewed as a three-layer feedforward dedicated network with heterogeneous neurons. The network was trained by the backpropagation algorithm for membership function learning.

In [1], a fuzzy modeling method using fuzzy neural networks with the backpropagation algorithm was presented. Three types of fuzzy neural networks, 6, 7, and 10 layers, respectively, were proposed to realize three different types of fuzzy reasoning. These networks can acquire fuzzy inference rules and tune the membership functions of nonlinear systems.

In [5], a fuzzy-set-based hierarchical network for information fusion in computer vision was presented. The proposed scheme could be trained as a neural network in which parametrized families of operators were used as activation functions and the gradient descent and backpropagation learning procedure was performed to generate degrees of satisfaction of various decision criteria by adjusting the parameters of these operators. After training, the network could be interpreted as a set of rules for decision making. Some heuristics were described to eliminate redundant criteria.

In general, most of the methodologies for learning knowledge are in one of the following two categories: backpropagation type and competitive type. Roughly speaking, backpropagation-type learning algorithms learn more precisely than competitive-type algorithms because they are based on the gradient descent search, but they take a long time and numerous training epochs to converge. In contrast, competitive-type learning algorithms learn more rapidly than backpropagation-type algorithms because they are based on unsupervised clustering, but the knowledge learned may not be precise enough. Therefore, one of the goals in the field of knowledge learning is to learn knowledge both precisely and rapidly.

For a fuzzy logic controller, the principal design issues are fuzzification strategies, database, rule base, decision-making logic, and defuzzification strategies [7]. Some items of the design issues, such as the membership functions of the linguistic values of input and output linguistic variables, the fuzzy rules, and the fuzzy operators, might be unknown or uncertain prior to the construction of a controller. Hence, we wish to acquire more knowledge of these items through learning. The proposed fuzzy neural network (FNN) is flexible and extendable for learning different combinations of these items, such as the membership functions of the input and output linguistic variables, the fuzzy rules, and the fuzzy operators [10-12]. However, in this paper, we concentrate on acquiring fuzzy rules of a fuzzy control system in order to observe and analyze the nature of rule learning more thoroughly. For the FNN presented in this paper, the working process of a fuzzy-logic control system is embedded in the layered structure of the network. The fuzzification strategies, decision-making logic, and defuzzification strategies chosen are formulated as the functions of the nodes in the network, and the fuzzy rules are represented by the learnable weights of the input links on one of the layers in the network. The learning strategy of the FNN is "start large and prune". For this strategy, the network is large initially and the training is performed with the large network. After the training is finished, the network is reduced based on some criterion. Therefore, for fuzzy rule learning, the FNN is initially constructed to contain all the possible fuzzy rules, each with a weak strength, i.e., a small weight, according to the related linguistic variables and values of the system.

The learning procedure proposed for the FNN consists of two phases. The first phase is an error-backprop (EBP) training phase, and the second phase is a rule-pruning phase. The EBP learning algorithm is based on a gradient descent search in the network, in which some of the node functions are formulated with competitive operations such as the min and max operators, i.e., some nodes in the network have competitive activation functions. The dominant terms, i.e., the winners, of these competitive operators are determined in the forward pass of the learning algorithm, while in the backward pass, the computation of the gradients for adjusting the learnable weights (see Section 3) is performed only on those links that are related to the dominant terms of the competitive operators. Therefore, the EBP algorithm applied to a network with competitive activation functions enables the network to learn both precisely and quickly, and can be viewed as a compromise between the advantages of backpropagation-type and competitive-type learning methodologies. After the EBP training phase, a rule-pruning process is executed to delete redundant fuzzy rules and obtain a rule base with much smaller size than the initial one. A truck backer-upper control problem for backing up a simulated truck to a loading dock in a parking lot was chosen as a benchmark [3]. Simulation results show that for this problem, the EBP training phase is completed in several dozen epochs with a training error of less than 1%. Moreover, the fuzzy rule base generated by the pruning process in the second phase contains only 14% of the initial fuzzy rules and reproduces the target fuzzy rule base with no error.

This paper is organized as follows. The structure of the FNN and the functions of the nodes in the network are described in Section 2. The EBP learning algorithm for the FNN with competitive activation functions is stated in Section 3. A pruning method for deleting redundant fuzzy rules and obtaining a precise (and sound) rule base is described in Section 4. In Section 5, the truck backer-upper problem is simulated and the simulation results are analyzed and compared with those for other adaptive controller systems. Finally, our conclusions and plans for future research are presented in Section 6.

2. The structure of the fuzzy neural network

In the fuzzy neural network presented, the fuzzy logic rules considered are the state evaluation fuzzy control rules in linguistic IF-THEN form for multiple inputs and single output [7]. The IF-part, i.e., the antecedent, of a rule is the conjunction of all input linguistic variables [17], each associated with one of its linguistic values. The THEN-part, i.e., the consequent, of a rule contains only one output linguistic variable associated with a linguistic value.

Assume that there are M input linguistic variables, $A_1, A_2, \ldots, A_M$, and N output linguistic variables, $F_1, F_2, \ldots, F_N$. The number of linguistic values associated with an input linguistic variable $A_h$ is $a_h$, and these (input) linguistic values are denoted by $\mathcal{A}_{h,1}, \mathcal{A}_{h,2}, \ldots, \mathcal{A}_{h,a_h}$. The number of linguistic values associated with an output linguistic variable $F_l$ is $f_l$, and these (output) linguistic values are denoted by $\mathcal{F}_{l,1}, \mathcal{F}_{l,2}, \ldots, \mathcal{F}_{l,f_l}$. Two exemplar fuzzy rules are given below:

$R_{1,1,1}$: If $A_1$ is $\mathcal{A}_{1,1}$ and $A_2$ is $\mathcal{A}_{2,1}$ and ... and $A_M$ is $\mathcal{A}_{M,1}$ ($\mathrm{AND}_1$), then $F_1$ is $\mathcal{F}_{1,1}$,

$R_{Q,N,f_N}$: If $A_1$ is $\mathcal{A}_{1,a_1}$ and $A_2$ is $\mathcal{A}_{2,a_2}$ and ... and $A_M$ is $\mathcal{A}_{M,a_M}$ ($\mathrm{AND}_Q$), then $F_N$ is $\mathcal{F}_{N,f_N}$,

where the rules are numbered $R_{i,j,k}$, indicating the fuzzy rule with antecedent $\mathrm{AND}_i$, output linguistic variable $F_j$, and the output linguistic value $\mathcal{F}_{j,k}$.

The fuzzy neural network is a five-layer dedicated neural network, as shown in Fig. 1, designed according to the working process of fuzzy controller systems [7, 8]. The adjustable weights for rule learning are on the input links of the nodes in Layer IV. Initially, it is constructed to contain all the possible rules of a fuzzy control system. The number of nodes and connections of the initial network are summarized in Table 1. The semantic meaning and functions of the nodes in the proposed network are as follows.

Layer I (input layer): Each node in Layer I represents an input linguistic variable of the network and is used as a buffer to broadcast the input to the next layer, i.e., to the membership-function nodes of its linguistic values. The range (or space) of each input linguistic variable is determined by the application and need not be constrained to within [0, 1].

Layer II (membership-function layer): Each node in Layer II represents the membership function of a linguistic value associated with an input linguistic variable. These nodes are called MF nodes. The output of such an MF node is in the range [0, 1] and represents the membership grade of the input with respect to the membership function. Therefore, an MF node is a fuzzifier. The most commonly used membership functions are triangular, trapezoidal, and bell-shaped. The functions of the nodes in this layer are determined and formulated by applications. The weights of the input links in this layer are unity.

[Figure: the five layers of the network, from input to output: Layer I (Input Layer), Layer II (Membership-Function Layer), Layer III (AND Layer), Layer IV (OR Layer), and Layer V (Defuzzification Layer).]

Fig. 1. The network structure of the fuzzy neural network.

Table 1

Summary of the nodes and links in the initial fuzzy neural network

| Layer | Node index | Input links per node | Output links per node | Total nodes | Total input links | Total output links |
|---|---|---|---|---|---|---|
| I | h | 1 | $a_h$ | M | M | P |
| II | i | 1 | $Q/a_h$ | P | P | MQ |
| III | j | M | R | Q | MQ | QR, QN |
| IV | k | Q | 1 | R | QR, QN | R |
| V | l | $f_l$ | 1 | N | R | N |

Note: M, the number of the input linguistic variables; N, the number of the output linguistic variables; $A_h$, an input linguistic variable represented by node h in Layer I; $a_h$, the number of the linguistic values of $A_h$; $F_l$, an output linguistic variable represented by node l in Layer V; $f_l$, the number of the linguistic values of $F_l$; $P = \sum_{h=1}^{M} a_h$; $Q = \prod_{h=1}^{M} a_h$; $R = \sum_{l=1}^{N} f_l$; QR, the number of the initial fuzzy rules; QN, the number of the rules in a sound fuzzy rule base.
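The totals in Table 1 can be checked with a few lines of Python. The sketch below is illustrative only: the function name `initial_network_size` and its return layout are hypothetical, and the sample call uses the sizes of the truck backer-upper problem of Section 5 (two inputs with 5 and 7 linguistic values, one output with 7).

```python
from math import prod

def initial_network_size(a, f):
    """Node and link totals of the initial FNN, following Table 1.

    a: numbers of linguistic values a_h of the M input variables
    f: numbers of linguistic values f_l of the N output variables
    """
    M, N = len(a), len(f)
    P = sum(a)    # MF nodes in Layer II
    Q = prod(a)   # AND nodes in Layer III (all possible antecedents)
    R = sum(f)    # OR nodes in Layer IV
    return {
        "nodes": {"I": M, "II": P, "III": Q, "IV": R, "V": N},
        "input_links": {"I": M, "II": P, "III": M * Q, "IV": Q * R, "V": R},
        "initial_rules": Q * R,   # QR
        "sound_rules": Q * N,     # QN
    }

size = initial_network_size(a=[5, 7], f=[7])
print(size["nodes"])          # {'I': 2, 'II': 12, 'III': 35, 'IV': 7, 'V': 1}
print(size["initial_rules"])  # 245
print(size["sound_rules"])    # 35
```

These values agree with the network sizes quoted for the simulation in Section 5.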


Layer III (AND layer): Each node in Layer III represents a possible IF-part for fuzzy rules. These nodes are called AND nodes. In fuzzy set theory, there are many different operators for fuzzy intersection [2, 18]. We choose the most commonly used one, i.e., the min-operator suggested by Zadeh [16], as the function of an AND node. The main reason is that the min-operator is simple and effective and has strong characteristics of competition. Therefore, the operation performed by node j in Layer III is defined as follows:

$$z_j = \min_{i \in P_j}(x_{ji}) = \mathrm{MIN}_j, \qquad (1)$$

where $P_j$ is the set of indices of the nodes in Layer II that have an output link connected to node j and $x_{ji} = z_i$. The link weights $w_{ji}$ on the input links in this layer are unity. From Eq. (1) for $z_j$, it is obvious that the output value of node j in Layer III is determined by the output of a node i in Layer II, which provides the minimum among all the output values of the nodes connected to node j. Node i is called a dominant node of node j.

Layer IV (OR layer): Each node in Layer IV represents a possible THEN-part for fuzzy rules. The nodes in this layer are called OR nodes. The operation performed by an OR node is to combine fuzzy rules with the same consequent. Initially, the links between Layers III and IV are fully connected so that all the possible fuzzy rules are embedded in the structure of the network. The weight $w_{kj}$ of an input link in Layer IV represents the certainty factor of a fuzzy rule, which comprises the AND node j in Layer III as the IF-part and the OR node k in Layer IV as the THEN-part. Hence, these weights are adjustable while learning the knowledge of fuzzy rules.

In fuzzy set theory, there are many different operators for fuzzy union [2, 18]. We choose the most commonly used one, i.e., the max-operator suggested by Zadeh [16], as the function of an OR node for the same reason as that of the min-operator. Therefore, the operation performed by a node k in Layer IV is defined as follows:

$$z_k = \max_{j \in P_k}(x_{kj} w_{kj}) = \mathrm{MAX}_k, \qquad (2)$$

where $P_k$ is the set of indices of the nodes in Layer III that have an output link connected to node k and $x_{kj} = z_j$. During the training phase, the link weights $w_{kj}$ in Layer IV are learnable nonnegative real numbers.

As was the case with Eq. (1) for $z_j$, from Eq. (2) for $z_k$, it is obvious that the output value of node k in Layer IV is determined by the maximum among the products of the output values $x_{kj}$ of the nodes in the previous layer and the weights $w_{kj}$ associated with the links connected to node k. Suppose that the maximal product is provided by node j in Layer III. Then, node j is called a dominant node of node k.

Layer V (defuzzification layer): Each node in Layer V represents an output linguistic variable and performs defuzzification, taking into consideration the effects of all the membership functions of the linguistic values of the output.

Suppose that the correlation-product inference and the fuzzy centroid defuzzification scheme [4] are used. Then the function of node l in Layer V is defined as follows:

$$z_l = \frac{\sum_{k \in P_l}(x_{lk}\, a_{lk}\, c_{lk})}{\sum_{k \in P_l}(x_{lk}\, a_{lk})}, \qquad (3)$$

where $P_l$ is the set of indices of the nodes in Layer IV that have an output link connected to node l, $x_{lk} = z_k$, and $a_{lk}$ and $c_{lk}$ are the area and centroid of the membership function of the output linguistic value represented by node k in Layer IV, respectively. Since it is assumed that the membership functions of the output linguistic values are known, the areas and centroids can be calculated before learning. The link weights of Layer V are unity.
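The forward computation of Layers III to V in Eqs. (1)-(3) can be sketched in Python with NumPy. This is a minimal illustration for a single output variable, not the authors' simulator: the function name `forward`, the argument layout, and the toy numbers are assumptions, and the membership grades of Layer II are taken as given.

```python
import numpy as np

def forward(mu, and_inputs, w, area, centroid):
    """Forward pass through Layers III-V for one output variable.

    mu:         membership grades from Layer II, shape (P,)
    and_inputs: and_inputs[j] lists the Layer II nodes feeding AND node j (the set P_j)
    w:          Layer IV weights w_kj, shape (R, Q)
    area, centroid: a_lk and c_lk of the output linguistic values, shape (R,)
    Assumes at least one OR node has a positive output (nonzero denominator).
    """
    z_and = np.array([mu[idx].min() for idx in and_inputs])        # Eq. (1)
    scaled = w * z_and                    # products x_kj * w_kj, shape (R, Q)
    winners = scaled.argmax(axis=1)       # dominant AND node of each OR node
    z_or = scaled.max(axis=1)                                      # Eq. (2)
    z_out = np.sum(z_or * area * centroid) / np.sum(z_or * area)   # Eq. (3)
    return z_and, z_or, winners, z_out

# Toy network (hypothetical values): two inputs with two linguistic values each.
mu = np.array([0.2, 0.8, 0.6, 0.4])
and_inputs = [[0, 2], [0, 3], [1, 2], [1, 3]]
w = np.array([[1.0, 0.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 1.0]])
area = np.array([1.0, 1.0])
centroid = np.array([-1.0, 1.0])

z_and, z_or, winners, z_out = forward(mu, and_inputs, w, area, centroid)
print(z_and, z_or, winners, z_out)
```

The `winners` array records the dominant node of each OR node, which is the information the backward pass of Section 3 relies on.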

3. Error backpropagation (EBP) learning algorithm for the FNN with competitive node functions

In conventional neural networks, most of the neurons perform summation and sigmoid functions. However, more and more alternatives for these functions have been presented as the applications of neural networks have been explored recently [6]. In this section, we describe the derivation of the EBP learning algorithm for the FNN with the node functions defined in the previous section.

In the training phase, the concept of error backpropagation is used to minimize the least mean square (LMS) error function:

$$E = \frac{1}{2}\sum_{l=1}^{N}(T_l - z_l)^2, \qquad (4)$$

where N is the number of nodes in Layer V and $T_l$ and $z_l$ are the target and actual outputs of the node l in Layer V, respectively. The methods for adjusting the learnable weights $w_{kj}$ in Layer IV of the fuzzy rules are based on the gradient descent search. Let the delta value, $\delta$, of a node in the network be defined as the influence of the node output with respect to E. The definition of the delta values $\delta_k$ and $\delta_l$ for nodes in Layers IV and V, the evaluation of the gradients of E ($\nabla E_{w_{kj}}$) with respect to the learnable weights, and the adjustments of the weights ($\Delta w_{kj}$) are described as follows:

The definition of the delta values: For a defuzzification node l in Layer V, the delta value $\delta_l$ is defined as follows:

$$\delta_l = -\frac{\partial E}{\partial z_l} = (T_l - z_l). \qquad (5)$$

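For an OR node k in Layer IV, the delta value $\delta_k$ is defined as follows:

$$\delta_k = -\frac{\partial E}{\partial z_k} = -\frac{\partial E}{\partial z_l}\frac{\partial z_l}{\partial z_k} = \delta_l\,\frac{a_{lk}(c_{lk} - z_l)}{\sum_{k' \in P_l}(x_{lk'}\, a_{lk'})}, \qquad (6)$$

where the output link of node k is connected to exactly one node l in Layer V.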
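The factor multiplying $\delta_l$ in Eq. (6) is the partial derivative of the centroid output of Eq. (3) with respect to $z_k$. As a sketch of the intermediate step, with $S_l$ introduced here only as shorthand for the denominator of Eq. (3), the quotient rule gives:

$$
S_l = \sum_{k' \in P_l} x_{lk'}\, a_{lk'}, \qquad
\frac{\partial z_l}{\partial z_k}
= \frac{a_{lk}\, c_{lk}\, S_l - a_{lk} \sum_{k' \in P_l} x_{lk'}\, a_{lk'}\, c_{lk'}}{S_l^{2}}
= \frac{a_{lk}\,(c_{lk} - z_l)}{S_l},
$$

where the last equality uses $x_{lk} = z_k$ and $\sum_{k' \in P_l} x_{lk'}\, a_{lk'}\, c_{lk'} = z_l\, S_l$ from Eq. (3).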

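The evaluation of the gradients of E: For the weight $w_{kj}$ of the link connected from node j in Layer III to node k in Layer IV, the gradient of E with respect to $w_{kj}$ is defined as follows:

$$\nabla E_{w_{kj}} = \frac{\partial E}{\partial w_{kj}} = \frac{\partial E}{\partial z_k}\frac{\partial z_k}{\partial w_{kj}} = \begin{cases} -\delta_k\, x_{kj}, & \text{if } \mathrm{MAX}_k = x_{kj} w_{kj}, \\ 0, & \text{otherwise}. \end{cases} \qquad (7)$$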

The two cases in Eq. (7) for $\nabla E_{w_{kj}}$ indicate that when the EBP training algorithm is processed, there will be different adjustments in the backward pass depending on the situation that occurs in the forward pass. It is obvious that only the weight of the link emitted from a dominant node of node k has a nonzero gradient, i.e., only that weight will be adjusted in the backward pass of the learning algorithm, due to the competitive characteristics of the function performed by the OR node.

The adjustments of the learnable weights: The adjustments of the learnable weights $w_{kj}$, which are based on the gradient descent search, can be described as follows:

$$w_{kj}(t + 1) = w_{kj}(t) + \Delta w_{kj} = w_{kj}(t) - \beta\, \nabla E_{w_{kj}}, \qquad (8)$$

where $\beta$ is the learning rate.

The knowledge of the fuzzy rules learned by the EBP algorithm is distributed over the weights of the links between Layers III and IV.
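One EBP update following Eqs. (2), (3) and (5)-(8) might look as follows in Python with NumPy. This is a sketch under stated assumptions rather than the authors' implementation: it handles one training sample and a single output node, the name `ebp_step` and the learning rate value are hypothetical, and clipping the weights at zero is only one possible way of keeping them nonnegative (the text does not say how this is enforced).

```python
import numpy as np

def ebp_step(z_and, w, area, centroid, target, beta=0.1):
    """One gradient-descent update of the Layer IV weights for one sample.

    z_and: outputs of the AND nodes (Layer III), shape (Q,)
    w:     Layer IV weights w_kj, shape (R, Q); an updated copy is returned
    Returns the updated weights and the network output before the update.
    """
    rows = np.arange(len(w))
    scaled = w * z_and                         # x_kj * w_kj
    winners = scaled.argmax(axis=1)            # dominant node of each OR node
    z_or = scaled[rows, winners]               # Eq. (2)
    denom = np.sum(z_or * area)
    z_out = np.sum(z_or * area * centroid) / denom           # Eq. (3)
    delta_l = target - z_out                                 # Eq. (5)
    delta_k = delta_l * area * (centroid - z_out) / denom    # Eq. (6)
    grad = np.zeros_like(w)                    # Eq. (7): zero off the winning links
    grad[rows, winners] = -delta_k * z_and[winners]
    w_new = np.maximum(w - beta * grad, 0.0)   # Eq. (8); clipping is an assumption
    return w_new, z_out

# Toy data (hypothetical): 4 AND nodes, 2 OR nodes, small equal initial weights.
z_and = np.array([0.2, 0.2, 0.6, 0.4])
w0 = np.full((2, 4), 0.1)
area = np.array([1.0, 1.0])
centroid = np.array([-1.0, 1.0])
target = 1.0

w1, out0 = ebp_step(z_and, w0, area, centroid, target)
w2, out1 = ebp_step(z_and, w1, area, centroid, target)
print(out0, out1)                   # output before and after the first update
print(np.count_nonzero(w1 != w0))   # only the winning links were changed
```

In this toy run only one weight per OR node changes in each step, which reflects the competitive behaviour described for Eq. (7).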

4. The rule-pruning method

Since the knowledge of fuzzy rules learned by the EBP algorithm is distributed over the adjustable weights, most of the weights after training have nonzero positive values. The fuzzy rule base obtained directly after the training phase will then contain all the rules with nonzero weight, i.e., certainty factor. This makes the rule base very large in most cases. Therefore, a pruning process is required to obtain a concise rule base. At the same time, the structure of the FNN is also reduced. The physical meaning of the weights on the input links in Layer IV is explained in the following. After the EBP training, the learned weights $w_{kj}$ on a set of links from an AND node j in Layer III to the OR nodes of an output linguistic variable $F_l$ (see Fig. 2) are interpreted as the certainty factors of a set of fuzzy rules that have the same IF-part and the same output linguistic variable but different linguistic values. These fuzzy rules will be referred to as "incompatible" rules. Fig. 2 can be interpreted as the following incompatible fuzzy rules:

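$R_{j,l,1}$: If $\mathrm{AND}_j$, then $F_l$ is $\mathcal{F}_{l,1}$ ($w_{k_1,j}$).

$R_{j,l,2}$: If $\mathrm{AND}_j$, then $F_l$ is $\mathcal{F}_{l,2}$ ($w_{k_2,j}$).

⋮

$R_{j,l,r}$: If $\mathrm{AND}_j$, then $F_l$ is $\mathcal{F}_{l,r}$ ($w_{k_r,j}$).

⋮

$R_{j,l,f_l}$: If $\mathrm{AND}_j$, then $F_l$ is $\mathcal{F}_{l,f_l}$ ($w_{k_{f_l},j}$).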

[Figure: a defuzzification node representing an output linguistic variable $F_l$; OR nodes representing the linguistic values of $F_l$; an AND node representing an antecedent $\mathrm{AND}_j$ of fuzzy rules.]

Fig. 2. The diagram of the possible fuzzy rules with identical antecedent $\mathrm{AND}_j$ for an output linguistic variable $F_l$.

where $\mathrm{AND}_j$ is the antecedent represented by an AND node j in Layer III. The effect of these rules is that when the antecedent $\mathrm{AND}_j$ holds, each of the rules is activated to a certain degree represented by the weight value (the certainty factor) associated with that rule. In previous research related to the learning of fuzzy rules, only a few studies discussed the pruning of rules, and the methods provided were heuristic, such as deleting the rules with weak strengths, i.e., small weights, or choosing the one with the maximum weight among the incompatible rules and deleting the others [8].

In this paper, a method for pruning fuzzy rules is proposed based on the following derivation. For defuzzification node l, the corresponding output with respect to AND node j (and the set of incompatible rules listed above) is denoted by $z_l^j$ and is evaluated as follows:

$$z_l^j = \frac{\sum_{k \in (P_l \cap N_j)}(x_{lk}\, a_{lk}\, c_{lk})}{\sum_{k \in (P_l \cap N_j)}(x_{lk}\, a_{lk})},$$

where $x_{lk} = z_k = x_{kj} w_{kj} = z_j w_{kj}$. Thus,

$$z_l^j = \frac{\sum_{k \in P_l}(z_j\, w_{kj}\, a_{lk}\, c_{lk})}{\sum_{k \in P_l}(z_j\, w_{kj}\, a_{lk})} = \frac{\sum_{k \in P_l}(w_{kj}\, a_{lk}\, c_{lk})}{\sum_{k \in P_l}(w_{kj}\, a_{lk})}. \qquad (9)$$

From Eq. (9), $z_l^j$ is the centroid of the weighted linguistic values of $F_l$, where the weights of the linguistic values are equal to the certainty factors of the set of incompatible rules listed above. Hence, $z_l^j$ can also be called the centroid of the set of incompatible rules.

From the illustration given above, it is obvious that the total effect of the set of incompatible rules on the output linguistic variable $F_l$ is equivalent to the centroid of the incompatible rules. Therefore, for rule pruning, an evaluation equation is proposed based on the concept of the center of gravity.

The equation for evaluating the centroid of the incompatible fuzzy rules represented by the links from an AND node j in Layer III to the OR nodes of an output linguistic variable $F_l$ is defined as follows:

$$C_{lj} = \frac{\sum_{k \in P_l}(w_{kj}\, a_{lk}\, c_{lk})}{\sum_{k \in P_l}(w_{kj}\, a_{lk})}. \qquad (10)$$

For each linguistic value of the output linguistic variable $F_l$, we define an interval in the space of $F_l$ according to the membership function of the linguistic value and the requirements of the application. The basic criterion for defining the intervals is that they should cover the entire space of $F_l$. If the centroid $C_{lj}$ computed for the set of incompatible rules listed above is in the interval of a linguistic value $\mathcal{F}_{l,r}$, then the corresponding rule $R_{j,l,r}$ is not redundant and remains in the rule base. In the set of the incompatible rules, all the rules other than the remaining ones are eliminated.

For each AND node in Layer III, the pruning process is performed to delete its redundant output links connected to the OR nodes associated with each output linguistic variable. After the pruning phase, a rule base with much smaller size can be obtained.
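The pruning rule based on Eq. (10) can be sketched in Python with NumPy for one output variable with nonoverlapping intervals. The function name `prune_rules`, the half-open interval convention, and all numbers in the toy example are assumptions chosen for illustration; they are not the membership functions or weights of the truck problem.

```python
import numpy as np

def prune_rules(w, area, centroid, edges):
    """Select, for each AND node j, the consequent whose interval contains C_lj.

    w:        trained Layer IV weights w_kj for one output variable, shape (R, Q)
    area, centroid: a_lk and c_lk of the R output linguistic values, shape (R,)
    edges:    R + 1 increasing boundaries covering the output space;
              interval r is taken as [edges[r], edges[r + 1]) (an assumption)
    Returns the index of the remaining rule for each AND node and the centroids.
    """
    num = (w * (area * centroid)[:, None]).sum(axis=0)
    den = (w * area[:, None]).sum(axis=0)
    c = num / den                                   # Eq. (10), one value per AND node
    kept = np.searchsorted(edges, c, side="right") - 1
    return np.clip(kept, 0, len(area) - 1), c       # clip puts the top edge in the last interval

# Toy example (hypothetical): three output values, two AND nodes.
area = np.array([1.0, 1.0, 1.0])
centroid = np.array([-1.0, 0.0, 1.0])
edges = np.array([-1.5, -0.5, 0.5, 1.5])
w = np.array([[0.1, 0.5],
              [0.2, 0.4],
              [0.9, 0.5]])

kept, c = prune_rules(w, area, centroid, edges)
print(kept)   # [2 1]
print(c)      # centroids of the two sets of incompatible rules
```

For the second AND node the remaining rule is the one with the smallest weight, which shows how the centroid criterion can differ from the maximum-weight heuristic mentioned earlier.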

In practice, a sound fuzzy rule base is expected to be obtained after fuzzy rule learning for many applications. A sound fuzzy rule base is defined as follows: for each output linguistic variable, there exists exactly one consequent for each possible antecedent in the rule base. In an application, the fuzzy rule base obtained after the pruning phase can be sound if the intervals defined for the linguistic values of each output linguistic variable are nonoverlapped.

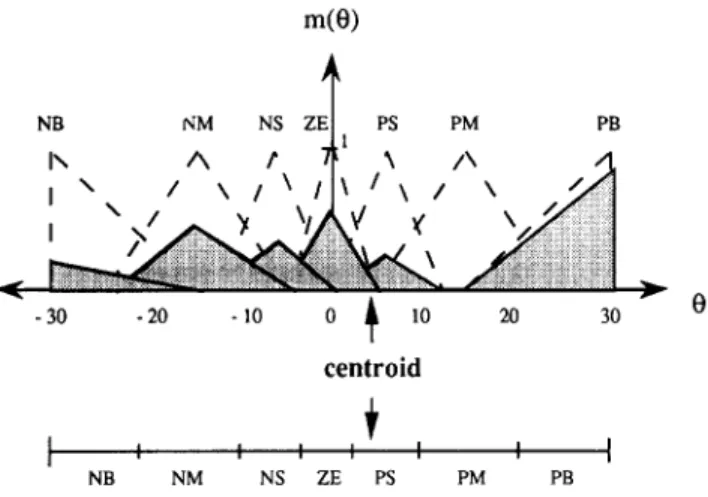

[Figure: membership functions m(θ) of the linguistic values NB, NM, NS, ZE, PS, PM, and PB over −30 ≤ θ ≤ 30, with the centroid of the set of incompatible rules marked.]

Fig. 3. The effects of the set of incompatible rules with respect to the output linguistic variable θ. The space of θ is divided into seven nonoverlapped intervals. The centroid calculated by Eq. (10) for this set of incompatible rules is in the interval of linguistic value PS.

After rule pruning, the fuzzy rule base obtained may either be sound or contain incompatible rules. For a sound fuzzy rule base, the certainty factors of the rules are set to unity. For a fuzzy rule base containing incompatible rules, the certainty factors of the rules can be determined by processing the EBP training once more.

An example is given below to illustrate the rule pruning. From the simulation results of the truck backer-upper problem (see Section 5), one of the sets of incompatible fuzzy rules obtained after the EBP training phase is listed as follows:

If x is LE and φ is RB, then θ is NB, (0.18337).

If x is LE and φ is RB, then θ is NM, (0.42997).

If x is LE and φ is RB, then θ is NS, (0.33736).

If x is LE and φ is RB, then θ is ZE, (0.52446).

If x is LE and φ is RB, then θ is PS, (0.23184).

If x is LE and φ is RB, then θ is PM, (0.00379).

If x is LE and φ is RB, then θ is PB, (0.84130).

The values of the certainty factors shown in the parentheses are the simulation results of the problem when the space of the output linguistic variable θ was normalized to [0, 1]. When the antecedent of the set of incompatible rules holds, the relative effects of the rules on the consequent are shown in Fig. 3. In this figure, the dashed lines represent the membership functions of the seven linguistic values for the output linguistic variable θ, the same as those shown in Fig. 4(b), and the shaded area represents the relative effect of these incompatible rules with respect to θ. The seven intervals defined for the output linguistic values of θ are nonoverlapped and cover the entire space of θ as shown in Figs. 3 and 4(b). The centroid calculated for this set of incompatible rules is in the interval of linguistic value PS. Therefore, only the rule

If x is LE and φ is RB, then θ is PS

remains after the rule-pruning phase. The remaining rule is identical to the one specified in the top-left cell of the target fuzzy rule base shown in Fig. 5.

5. System simulation and evaluation

A general purpose simulator of the proposed fuzzy neural network was implemented on a Sun SPARC station. The truck backer-upper control problem [3] was used as a benchmark to evaluate the performance of the proposed network. In this fuzzy control system, the truck x-position coordinate

J.J. Shann, H.C. Fu / Fuzzy Sets and Systems 71 (1995) 345-357 353 m(x)

l

LE LC CE RC RI 1 [ m ~ % ~ l ,,,.._ 10 20 30 40 50 60 70 80 90 100 f m((~) A RB "[" RU RV VE LV LU LB / - 90 0 90 180 270 (a) m(0) NB NM NS ZE l PS PM PB -30 -20 -I0 0 I0 20 30 ' ' ' ' ' ' ' I 0o)Fig. 4. Fuzzy membership functions of the linguistic values associated with (a) input linguistic variables x and ~b and (b) output linguistic variable 0 for the truck backer-upper problem. The space of 0 is divided into seven nonoverlapped intervals.

X LE LC CE RC RI RB PS PM PM PB PB RU NS PS PM PB PB Rv NM NS PS PM PB vE NM NM ZE PM PM LV NB NM NS PS PM LU NB NB NM NS PS LB NB NB NM NM NS

Fig. 5. The target FAM-bank matrix for the truck backer-upper

controller.

x a n d the t r u c k angle ~b were chosen as the input linguistic variables a n d the steering-angle c o n t r o l signal 0 was chosen as the o u t p u t linguistic vari- able. T h e input linguistic variable x h a d five linguistic values: LE, LC, CE, RC, and RI; the input

linguistic variable tk h a d seven linguistic values:

RB, RU, RV, VE, LV, LU, a n d LB; and the o u t p u t

linguistic variable 0 h a d seven linguistic values: NB, NM, NS, ZE, PS, PM, a n d PB. T h e m e m b e r s h i p

functions of the linguistic values of these linguistic variables are given in Fig. 4. T h e r e were 245 ( = 5 x 7 x 7) possible fuzzy rules. A target fuzzy rule base, referred to as the F A M - b a n k matrix, is specified in Fig, 5. I n the s i m u l a t i o n of the different a d a p t i v e controller systems, the t r u c k trajectories

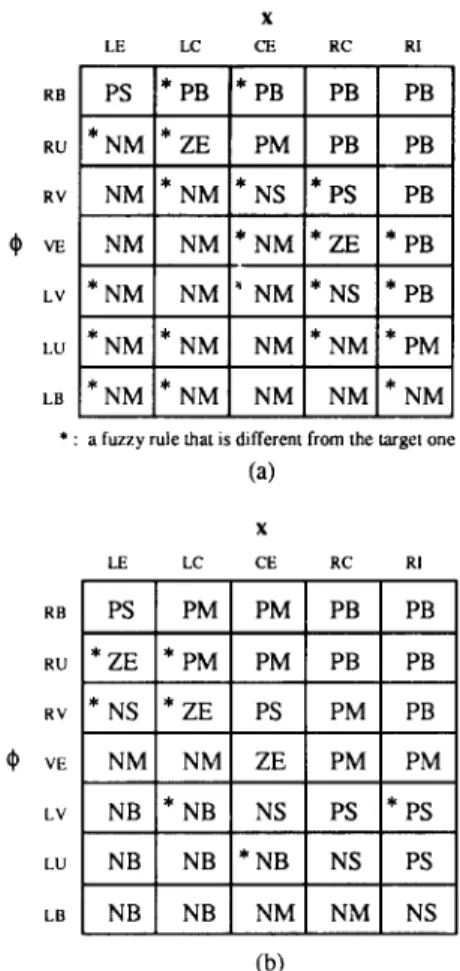

3 5 4 J.J. Shann, H.C. Fu / Fuzzy Sets and Systems 71 (1995) 345-357 x LE LC CE RC RI RB PS * PB * PB PB PB RU *NM * ZE PM PB PB RV NM * N M * N S * P S PB VE NM NM * N M * Z E * P B LV *NM NM ~ NM *NS *PB LU *NM *NM NM *NM *PM La *NM !* NM NM NM * NM

• : a fuzzy rule that is different from the target one

(a) x LE LC CE RC RI RB PS PM PM PB PB RU *ZE * P M PM PB PB RV *NS *ZE PS PM PB VE NM NM ZE PM PM LV NB *NB NS PS *PS LU NB NB * NB NS PS LB NB NB NM NM NS (b)

Fig. 6. (a) The FAM bank generated by the neural control system. (b) The DCL-estimated FAM bank.

produced according to the membership functions and the target fuzzy rule base shown in Figs. 4 and 5, respectively, were regarded as the ideal trajecto- ries and were used as the training samples [3]. Besides, the target FAM-bank matrix was used as a basis for comparing the performance of different adaptive controller systems.

In [3], two adaptive controller systems with different learning methodologies were analyzed. One was a neural system, which consisted of a multilayer feedforward neural network with the conventional backpropagation gradient-descent algorithm. The neural system was trained by applying 35 sample vectors to the network, and more than 100000 iterations (epochs) were required to complete the training process. The second system was the adaptive fuzzy truck backer-upper, which consisted of a laterally inhibitive differential competitive learning (DCL) network trained with the DCL algorithm. The adaptive fuzzy system was trained by applying 2230 sample vectors to it. In order to generate the fuzzy rules, i.e., the FAM banks, product-space clustering was applied to the training results of the neural system and the adaptive fuzzy system. The FAM banks generated by these two different adaptive controller systems are shown in Fig. 6. To illustrate briefly the performance of these two adaptive controller systems, a set of random input vectors was tested to estimate the retrieving error rates of the FAM banks generated by these two systems in comparison with the target FAM bank in Fig. 5. The error rates of the neural system and the adaptive fuzzy system were 11.42% and 3.74%, respectively.
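As a simple complement to the retrieving error rates, the FAM banks can also be compared cell by cell with the target. The Python sketch below transcribes the matrices of Figs. 5 and 6 (rows are the values of φ from RB to LB, columns the values of x from LE to RI) and counts the rules that differ. The helper names are hypothetical, and this cell count is a different measure from the retrieving error rate, which was estimated from random input vectors.

```python
# FAM banks transcribed from Figs. 5 and 6; rows: RB, RU, RV, VE, LV, LU, LB;
# columns: LE, LC, CE, RC, RI.
TARGET = """
PS PM PM PB PB
NS PS PM PB PB
NM NS PS PM PB
NM NM ZE PM PM
NB NM NS PS PM
NB NB NM NS PS
NB NB NM NM NS
"""

NEURAL = """
PS PB PB PB PB
NM ZE PM PB PB
NM NM NS PS PB
NM NM NM ZE PB
NM NM NM NS PB
NM NM NM NM PM
NM NM NM NM NM
"""

DCL = """
PS PM PM PB PB
ZE PM PM PB PB
NS ZE PS PM PB
NM NM ZE PM PM
NB NB NS PS PS
NB NB NB NS PS
NB NB NM NM NS
"""

def parse(bank):
    """Split a FAM bank given as text into a list of rows of consequents."""
    return [row.split() for row in bank.strip().splitlines()]

def differing_rules(bank, target):
    """Count the cells whose consequent differs from the target FAM bank."""
    return sum(b != t
               for bank_row, target_row in zip(parse(bank), parse(target))
               for b, t in zip(bank_row, target_row))

print(differing_rules(NEURAL, TARGET))  # 21 of the 35 rules differ
print(differing_rules(DCL, TARGET))     # 7 of the 35 rules differ
```

The counts match the numbers of starred entries in Fig. 6(a) and Fig. 6(b).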

In the simulations for our fuzzy neural network, the fuzzy membership functions and the FAM-bank matrix shown in Figs. 4 and 5, respectively, were used to generate the sample vectors for training. A total of 350 training samples were generated randomly, about 10 samples in the product space of each antecedent. The network contained 2 neurons in Layer I, 12 (= 5 + 7) neurons in Layer II, 35 (= 5 × 7) neurons in Layer III, 7 neurons in Layer IV, and 1 neuron in Layer V. Initially, there were 245 (= 5 × 7 × 7) links between Layers III and IV; each link represented a possible fuzzy rule.

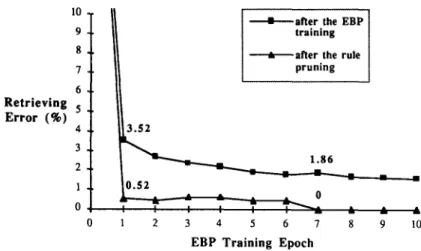

During the EBP training of fuzzy rules in the first phase of the proposed learning procedure, the training error was reduced very quickly in each epoch iteration and the learning was completed in a small number of epochs. Simulation results for the EBP training with random initial weights on the input links of Layer IV are shown in Fig. 7. The training iteration was terminated after the error rate was reduced to 1%.

After the EBP training, the rule-pruning process was performed in the second phase of the learning procedure. The space of the output linguistic variable θ, -30 ≤ θ ≤ 30, was divided into seven nonoverlapping intervals, shown in Fig. 4(b), corresponding to the seven linguistic values of θ. Only 35 of the 245 initial fuzzy rules remained after pruning. For analysis, a FAM bank was generated by the pruning process after each EBP training epoch. The sets of random input vectors that had been generated to test the performance of the other two adaptive controller systems mentioned previously were used to estimate the retrieving error rates of these FAM banks. Fig. 8 shows the retrieving error rates of the fuzzy neural network after the EBP training and after the rule pruning, respectively. From this figure, we can see that the retrieving error of the fuzzy neural network after the rule pruning is smaller than that just after the EBP training. This means that the truck trajectories produced by an FNN with EBP training and rule pruning are closer to the ideal trajectories than those produced by an FNN with EBP training only.

[Plot: training error (%) versus EBP training epoch.]

Fig. 7. Training error rates of the EBP learning algorithm for the truck backer-upper problem: training error versus EBP training epochs. The number of the random training samples is 350.

[Plot: retrieving error (%) versus EBP training epoch, with one curve after the EBP training and one after the rule pruning.]

Fig. 8. Retrieving error rates of the fuzzy neural network after the EBP training and after the rule pruning, respectively, for the truck backer-upper problem: retrieving error versus EBP training epochs.
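The interval-based pruning step can be illustrated with a small sketch. The pruning criterion itself is defined earlier in the paper and is not reproduced in this section, so everything below is an assumption made for illustration: the function name `prune_rules`, the equal-width partition of [-30, 30] (the actual intervals are those of Fig. 4(b)), the use of interval midpoints as representative values, and the weighted-centroid rule for choosing which link to keep. The linguistic labels are those appearing in the FAM banks.

```python
import numpy as np

# Output linguistic values, ordered from most negative to most positive.
LABELS = ["NB", "NM", "NS", "ZE", "PS", "PM", "PB"]

# ASSUMPTION: equal-width, nonoverlapping partition of [-30, 30].
# The intervals actually used in the paper are those of Fig. 4(b).
EDGES = np.linspace(-30.0, 30.0, len(LABELS) + 1)
CENTERS = (EDGES[:-1] + EDGES[1:]) / 2.0


def prune_rules(weights):
    """Keep one consequent per antecedent (hypothetical criterion).

    weights: array of shape (n_antecedents, 7) holding the learned,
    non-negative weights on the links between Layers III and IV.
    Returns the label of the single link kept for each antecedent.
    """
    weights = np.asarray(weights, dtype=float)
    totals = weights.sum(axis=1)
    if np.any(totals <= 0.0):
        raise ValueError("each antecedent needs at least one positive link weight")
    # ASSUMPTION: summarize the seven link weights of an antecedent by
    # their weighted centroid over the interval midpoints.
    centroids = weights @ CENTERS / totals
    # Find the interval that contains each centroid.
    kept = np.searchsorted(EDGES[1:-1], centroids, side="right")
    return [LABELS[k] for k in kept]


# Demo with random weights for the 35 antecedents (not data from the paper).
rng = np.random.default_rng(0)
demo_rules = prune_rules(rng.random((35, 7)))
print(len(demo_rules))
```

Under this criterion each of the 35 antecedents keeps exactly one of its 7 links, which is consistent with the 35 of 245 rules reported to remain after pruning.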

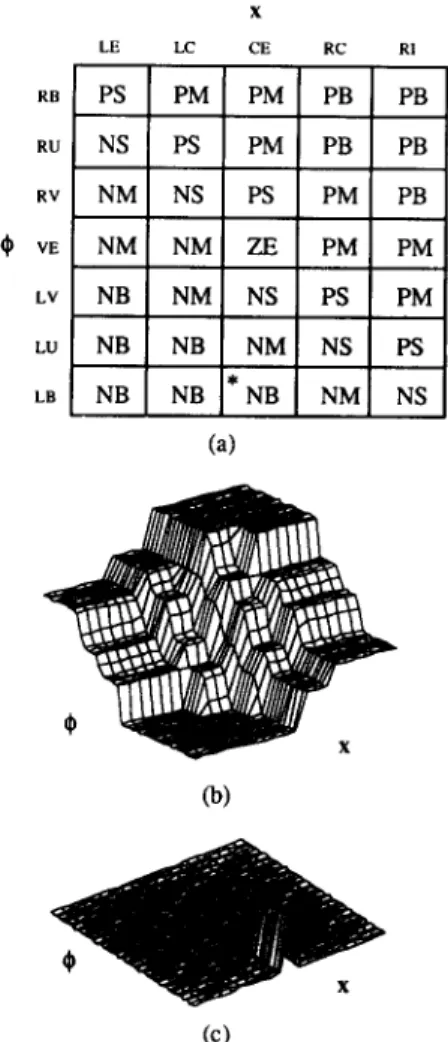

Fig. 9 shows (a) the resulting FAM bank generated by the pruning phase after only one EBP training epoch, (b) the corresponding control surface of the FAM bank, and (c) the absolute difference of the control surface and the target FAM surface.

| x | LE | LC | CE | RC | RI |
|---|---|---|---|---|---|
| RB | PS | PM | PM | PB | PB |
| RU | NS | PS | PM | PB | PB |
| RV | NM | NS | PS | PM | PB |
| VE | NM | NM | ZE | PM | PM |
| LV | NB | NM | NS | PS | PM |
| LU | NB | NB | NM | NS | PS |
| * LB | NB | NB | NB | NM | NS |

(a) FAM bank (table above); (b), (c) surface plots.

Fig. 9. (a) FAM bank generated by the rule-pruning process when the EBP training was terminated after the first epoch in Fig. 7. (b) The corresponding control surface of the FAM bank in (a). (c) The absolute difference of the control surface in (b) and the target FAM surface.

We can see that the FAM bank in Fig. 9(a) mismatches the target FAM bank in Fig. 5 at only one rule with a slightly different output linguistic value. Moreover, the retrieving error after the pruning phase for seven EBP training epochs is 0%, i.e., the FAM bank generated then is identical to the target FAM bank. Therefore, the target FAM bank can be reproduced by the fuzzy neural network with no error. In other words, the fuzzy neural network can produce the ideal trajectories for the truck backer-upper controller problem after the training and pruning processes.

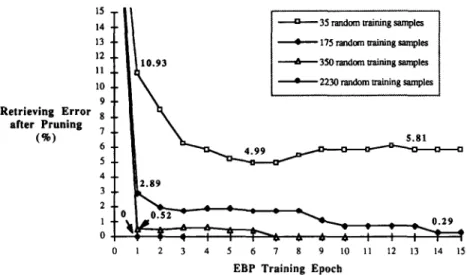

For different numbers of training samples, the retrieving errors after pruning with different EBP training epochs are shown in Fig. 10. When 35 random training samples were applied to the network, the pruning phase generated a FAM bank with an error rate of 10.93% after the first EBP training epoch and with an error rate of 5.81% after the 13th training epoch. In addition, when 2230 random training samples were applied to the network, the pruning phase reproduced the target FAM bank with no error after only one EBP training epoch.

6. Conclusions

A fuzzy neural network for acquiring rules of a fuzzy-logic rule-based control system has been presented. The learning procedure of the network is divided into two phases. The first one is an error backpropagation (EBP) training phase, and the second one is a rule-pruning phase. In the first phase, the EBP learning algorithm enables the network to acquire the knowledge of fuzzy rules precisely and quickly. The main reason for these results is that the gradient descent search approach in the EBP algorithm enables the network to learn more precisely than typical competitive learning algorithms, while the dedicated structure of the network and the competitive characteristics of the functions for OR nodes in the network enable the network to converge much more rapidly than conventional backpropagation learning algorithms. The knowledge of fuzzy rules learned in the EBP training phase is distributed over the learnable weights of the network. Therefore, in the second phase of the learning procedure, a pruning process is performed to convert the distributed knowledge of fuzzy rules learned by the EBP training into a precise and sound (or much smaller) rule base.

In the near future, we plan to analyze the convergence for the learnable items of the design issues for fuzzy control systems, such as the fuzzy rules, the membership functions of the linguistic values, and the fuzzy operators. Furthermore, we plan to extend the layer-structured fuzzy neural network to event-driven acyclic networks [6].

[Plot: retrieving error after pruning (%) versus EBP training epoch, with one curve each for 35, 175, 350, and 2230 random training samples.]

Fig. 10. Retrieving error rates of the proposed fuzzy neural network after the pruning phase for different numbers of training samples in the EBP training phase: retrieving error after pruning versus EBP training epochs.

References

[1] S.I. Horikawa, T. Furuhashi and Y. Uchikawa, On fuzzy modeling using fuzzy neural networks with the back-propagation algorithm, IEEE Trans. Neural Networks 3(5) (1992) 801-806.

[2] G.J. Klir and T.A. Folger, Fuzzy Sets, Uncertainty, and Information (Prentice-Hall, Englewood Cliffs, NJ, 1988).

[3] S.G. Kong and B. Kosko, Adaptive fuzzy systems for backing up a truck-and-trailer, IEEE Trans. Neural Networks 3(2) (1992) 211-223.

[4] B. Kosko, Neural Networks and Fuzzy Systems: A Dynamic Systems Approach to Machine Intelligence (Prentice-Hall, Englewood Cliffs, NJ, 1992).

[5] R. Krishnapuram and J. Lee, Fuzzy-set-based hierarchical networks for information fusion in computer vision, Neural Networks 5 (1992) 335-350.

[6] R.C. Lacher, S.I. Hruska, and D.C. Kuncicky, Back-propagation learning in expert networks, IEEE Trans. Neural Networks 3(1) (1992) 62-72.

[7] C.C. Lee, Fuzzy logic in control systems: fuzzy logic controller - part I and part II, IEEE Trans. Systems Man Cybernet. 20(2) (1990) 404-435.

[8] C.T. Lin and C.S.G. Lee, Neural-network-based fuzzy logic control and decision system, IEEE Trans. Comput. C-40(12) (1991) 1320-1336.

[9] D.E. Rumelhart and J.L. McClelland, Parallel Distributed Processing (MIT Press, Cambridge, MA, 1986).

[10] J.J. Shann, The structure design and learning methodology for a fuzzy-rule-based neural network, Ph.D. Dissertation, National Chiao-Tung University, ROC (1994).

[11] J.J. Shann and H.C. Fu, A fuzzy neural network for knowledge learning, Proc. IFSA '93 (1993).

[12] J.J. Shann and H.C. Fu, Backpropagation learning for acquiring fine knowledge of fuzzy neural networks, Proc. WCNN '93 (1993).

[13] M. Sugeno, Ed., Industrial Applications of Fuzzy Control (North-Holland, Amsterdam, 1985).

[14] L.X. Wang and J.M. Mendel, Back-propagation fuzzy systems as nonlinear dynamic system identifiers, IEEE Internat. Conf. on Fuzzy Systems (1992) 1409-1418.

[15] P. Werbos, Beyond regression: new tools for prediction and analysis in the behavioral sciences, Ph.D. Dissertation, Harvard University (1974).

[16] L.A. Zadeh, Fuzzy sets, Inform. and Control 8 (1965) 338-353.

[17] L.A. Zadeh, The concept of a linguistic variable and its applications to approximate reasoning I, II, III, Inform. Sci. 8 (1975) 199-249, 301-357; 9 (1975) 43-80.

[18] H.J. Zimmermann, Fuzzy Set Theory - and Its Applications (Kluwer Academic Publishers, Dordrecht, Boston, MA, 2nd ed., 1991).