A Fuzzy-Possibilistic Neural Network to Clustering


... tools for detecting similarities between members of a collection of samples. The theory of fuzzy logic provides a mathematical framework for capturing the uncertainties associated with human cognition processes. Unlike the hard c-means method, in fuzzy c-means (FCM) each training sample belongs to every cluster with some degree of membership. The purpose of the FCM approach, like that of conventional clustering techniques, is to group data into clusters of similar items by minimizing a least-squared-error measure. For $c \ge 2$ and $m > 1$, the algorithm chooses memberships $\mu_{x,i} \in [0,1]$ with $\sum_{i=1}^{c} \mu_{x,i} = 1$ for every sample, and centers $\varpi_i \in R^d$ for $i = 1, 2, \ldots, c$, to minimize the objective function

$$J_{FCM} = \frac{1}{2} \sum_{x=1}^{n} \sum_{i=1}^{c} (\mu_{x,i})^{m} \, \| z_x - \varpi_i \|^2 \tag{1}$$

where $\mu_{x,i}$ is the value of the ith membership grade on the xth sample $z_x$. The cluster centers $\varpi_1, \ldots, \varpi_j, \ldots, \varpi_c$ can be regarded as prototypes for the clusters represented by the membership grades. To minimize the objective function, the cluster centers and membership grades are chosen so that a high degree of membership occurs for samples close to the corresponding cluster centers. The membership grades and cluster centers are updated iteratively by the following formulas:

$$\mu_{x,i} = \left[ \sum_{\lambda=1}^{c} \frac{\left( \| z_x - \varpi_i \|^2 \right)^{1/(m-1)}}{\left( \| z_x - \varpi_\lambda \|^2 \right)^{1/(m-1)}} \right]^{-1}; \quad x = 1, 2, \ldots, n; \; i = 1, 2, \ldots, c \tag{2}$$

and

$$\varpi_i = \frac{1}{\sum_{x=1}^{n} (\mu_{x,i})^{m}} \sum_{x=1}^{n} (\mu_{x,i})^{m} z_x \tag{3}$$

The value $m \in (1, \infty)$ is the fuzzification parameter (or exponential weight). This parameter reduces the sensitivity of the class centers to noise in the data.

3. Possibilistic Clustering Techniques

The theory of fuzzy logic provides a mathematical environment for capturing uncertainties in the same way as human cognition processes. The fuzzy clusters are generated by partitioning the training samples in accordance with the membership function matrix $U = [\mu_{x,i}]$, where $\mu_{x,i}$ denotes the grade of membership with which a training sample belongs to a cluster. The fuzzy c-means algorithm uses a probabilistic constraint under which the memberships of a training sample across clusters must sum to 1. The memberships therefore express how a training sample is shared among distinct clusters, not degrees of typicality. In contrast, each component generated by the possibilistic c-means (PCM) corresponds to a dense region in the data set, and each cluster is independent of the other clusters. The PCM strategy was proposed by Krishnapuram et al. [9-10] for unsupervised clustering. The objective function of the PCM can be formulated as

$$J_{PCM} = \frac{1}{2} \sum_{x=1}^{n} \sum_{i=1}^{c} (t_{x,i})^{\eta} \, \| z_x - \varpi_i \|^2 + \sum_{i=1}^{c} \beta_i \sum_{x=1}^{n} (1 - t_{x,i})^{\eta} \tag{9}$$

where

$$\beta_i = \frac{\sum_{x=1}^{n} t_{x,i}^{\eta} \, \| z_x - \varpi_i \|^2}{\sum_{x=1}^{n} t_{x,i}^{\eta}}$$

is the scale parameter at the ith cluster,

$$t_{x,i} = \frac{1}{1 + \left( \dfrac{\| z_x - \varpi_i \|^2}{\beta_i} \right)^{1/(\eta-1)}}$$

is the possibilistic typicality value of training sample $z_x$ belonging to cluster i, and $\eta \in [1, \infty)$ is a weighting factor called the possibilistic parameter.

4. Fuzzy-Possibilistic C-Means

Memberships and typicalities are both important for correctly characterizing the data substructure in a clustering problem. When a training sample is to be assigned to a suitable cluster, membership is the better constraint, since it identifies the cluster to which the sample is closest. On the other hand, typicality is an important factor for reducing the undesirable effects of outliers on the computed cluster centers. According to reference [11], typicality is related to the mode of the cluster and can be calculated based on all n training samples. Thus the objective function of the fuzzy-possibilistic c-means (FPCM) depends on both memberships and typicalities and is defined as

$$J_{FPCM} = \frac{1}{2} \sum_{x=1}^{n} \sum_{i=1}^{c} \left( \mu_{x,i}^{m} + t_{x,i}^{\eta} \right) \| z_x - \varpi_i \|^2$$

where the memberships, typicalities, and centroids are updated by Eqs. (10)-(12).
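For reference, the alternating FCM updates of Eqs. (2) and (3), on which the FPCM builds, can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the small `eps` guard against division by zero, and the fixed iteration count are all assumptions of this sketch.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points given as sequences."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def fcm_memberships(samples, centers, m=2.0, eps=1e-12):
    """Eq. (2): membership of every sample in every cluster (rows sum to 1)."""
    power = 1.0 / (m - 1.0)
    memberships = []
    for z in samples:
        # eps is an assumption of this sketch; it avoids a zero distance
        # when a sample coincides with a center.
        d = [sq_dist(z, w) + eps for w in centers]
        row = [1.0 / sum((d_i / d_k) ** power for d_k in d) for d_i in d]
        memberships.append(row)
    return memberships


def fcm_centers(samples, memberships, m=2.0):
    """Eq. (3): each center is the mean of the samples weighted by mu^m."""
    dim = len(samples[0])
    centers = []
    for i in range(len(memberships[0])):
        weights = [row[i] ** m for row in memberships]
        total = sum(weights)
        centers.append([sum(w * z[k] for w, z in zip(weights, samples)) / total
                        for k in range(dim)])
    return centers


def fcm(samples, centers, m=2.0, iterations=50):
    """Alternate Eqs. (2) and (3) for a fixed number of iterations."""
    for _ in range(iterations):
        memberships = fcm_memberships(samples, centers, m)
        centers = fcm_centers(samples, memberships, m)
    return fcm_memberships(samples, centers, m), centers


if __name__ == "__main__":
    # Hypothetical 2-D points, not the data set used in the experiments.
    points = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    u, w = fcm(points, [(-0.5, 0.0), (0.5, 0.0)], m=3.0)
    print(w)
```

A practical implementation would normally stop when the centers or memberships change by less than a tolerance rather than after a fixed number of passes.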

$$\mu_{x,i} = \left[ \sum_{\lambda=1}^{c} \frac{\left( \| z_x - \varpi_i \|^2 \right)^{1/(m-1)}}{\left( \| z_x - \varpi_\lambda \|^2 \right)^{1/(m-1)}} \right]^{-1}; \quad x = 1, 2, \ldots, n; \; i = 1, 2, \ldots, c \tag{10}$$

$$t_{x,i} = \left[ \sum_{y=1}^{n} \frac{\left( \| z_x - \varpi_i \|^2 \right)^{1/(\eta-1)}}{\left( \| z_y - \varpi_i \|^2 \right)^{1/(\eta-1)}} \right]^{-1}; \quad x = 1, 2, \ldots, n; \; i = 1, 2, \ldots, c \tag{11}$$

and

$$\varpi_i = \frac{1}{\sum_{x=1}^{n} \left( \mu_{x,i}^{m} + t_{x,i}^{\eta} \right)} \sum_{x=1}^{n} \left( \mu_{x,i}^{m} + t_{x,i}^{\eta} \right) z_x \tag{12}$$

In the FPCM, the membership $\mu_{x,i}$ is a function of the training sample $z_x$ and all c cluster centers, while the typicality $t_{x,i}$ is a function of the training sample $z_x$ and the cluster center $\varpi_i$. Thus the typicality $t_{x,i}$ depends only on the location of the cluster center $\varpi_i$, not on the other centers.

5. Fuzzy-Possibilistic Hopfield Neural Network

Hopfield-model neural networks [14-16] have been studied extensively. Their main features are a simple architecture and a clear potential for parallel implementation. To improve performance in optimization problems, modified Hopfield networks [17-20] have been proposed. Lin et al. [8, 17-19] proposed several fuzzy Hopfield networks for clustering problems and medical image segmentation. Cheng et al. [20] presented a possibilistic Hopfield network for CT brain hemorrhage image segmentation. These modified Hopfield networks are based on either fuzzy reasoning or possibilistic learning. To solve both the noise-sensitivity fault of fuzzy reasoning and the simultaneous clustering problem of possibilistic learning, the fuzzy-possibilistic c-means strategy is embedded into a Hopfield network to construct the FPHN. In the FPHN, shown in Figure 1, each neuron has two states: a membership state based on all c cluster centers and a typicality state based on all n training samples. Thus the total weighted input for neuron (x,i) and the Lyapunov energy function in the FPHN can be modified as

$$Net_{x,i} = \left\| z_x - \sum_{y=1}^{n} W_{x,i;y,i} \left( \mu_{y,i}^{m} + t_{y,i}^{\eta} \right) \right\|^2 + I_{x,i} + K_{x,i} \tag{13}$$

and

$$E = \frac{1}{2} \sum_{x=1}^{n} \sum_{i=1}^{c} \left( \mu_{x,i}^{m} + t_{x,i}^{\eta} \right) \left\| z_x - \sum_{y=1}^{n} W_{x,i;y,i} \left( \mu_{y,i}^{m} + t_{y,i}^{\eta} \right) \right\|^2 - \sum_{x=1}^{n} \sum_{i=1}^{c} \left( I_{x,i} \mu_{x,i}^{m} + K_{x,i} t_{x,i}^{\eta} \right) \tag{14}$$

where $\sum_{y=1}^{n} W_{x,i;y,i} ( \mu_{y,i}^{m} + t_{y,i}^{\eta} )$ is the total weighted input received from the neurons (y,i) in column i, m and $\eta$ are the fuzzification and typicality parameters, $\mu_{x,i}$ and $t_{x,i}$ are the membership state and typicality state at neuron (x,i), and $I_{x,i}$ and $K_{x,i}$ are the input biases for the membership and typicality states at neuron (x,i), respectively. The network reaches an equilibrium state when the modified Lyapunov energy function is minimized.

Figure 1. Architecture of the neuron (x, i) in a 2-D FPHN.

The objective function for the clustering problem in the 2-D FPHN is defined as follows:

$$E = \frac{A}{2} \sum_{x=1}^{n} \sum_{i=1}^{c} \left( \mu_{x,i}^{m} + t_{x,i}^{\eta} \right) \left\| z_x - \sum_{y=1}^{n} \frac{1}{\sum_{h=1}^{n} \left( \mu_{h,i}^{m} + t_{h,i}^{\eta} \right)} z_y \left( \mu_{y,i}^{m} + t_{y,i}^{\eta} \right) \right\|^2 + \frac{B}{2} \left[ \sum_{x=1}^{n} \sum_{i=1}^{c} \left( \mu_{x,i} + t_{x,i} \right) - n - c \right]^2 \tag{15}$$

where E is the objective function that accounts for the energies of all training samples in the same class, and $z_x$, $z_y$ are the training samples at rows x and y in the FPHN, respectively. The first term in Eq. (15) defines the Euclidean distance between the training samples in a cluster and that cluster's center over the c clusters, weighted by membership grade and typicality degree.
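As a rough illustration of how the two terms of Eq. (15) behave, the energy can be evaluated directly from the neuron states. The sketch below is an assumption-laden reading of Eq. (15), not the authors' code: the function name `fphn_energy`, the default values of A and B, and the example points are hypothetical.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points given as sequences."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def fphn_energy(samples, mu, t, m=3.0, eta=3.0, A=1.0, B=1.0):
    """Evaluate Eq. (15) for membership states mu and typicality states t.

    mu and t are n-by-c lists: row x is a training sample, column i a cluster.
    """
    n, c, dim = len(samples), len(mu[0]), len(samples[0])
    distance_term = 0.0
    for i in range(c):
        # Combined weight (mu^m + t^eta) of every neuron in column i.
        w = [mu[x][i] ** m + t[x][i] ** eta for x in range(n)]
        total = sum(w)
        # Weighted mean of the samples: the inner sum over y in Eq. (15).
        center = [sum(w[x] * samples[x][k] for x in range(n)) / total
                  for k in range(dim)]
        distance_term += sum(w[x] * sq_dist(samples[x], center) for x in range(n))
    # Constraint term: the states should sum to n (memberships) plus c (typicalities).
    state_sum = sum(mu[x][i] + t[x][i] for x in range(n) for i in range(c))
    penalty = (state_sum - n - c) ** 2
    return 0.5 * A * distance_term + 0.5 * B * penalty


if __name__ == "__main__":
    pts = [(0.0, 0.0), (2.0, 0.0)]
    crisp = [[1.0, 0.0], [0.0, 1.0]]
    print(fphn_energy(pts, crisp, crisp))
```

With the crisp states in the usage example, each sample sits exactly on its own cluster center and the states sum to n + c, so both terms vanish.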

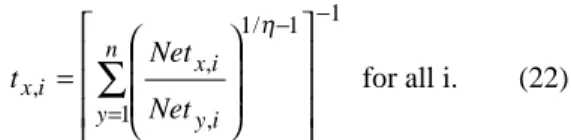

The second term guarantees that the n training samples in Z are distributed among these c clusters. More specifically, the second term (the constraint term) imposes constraints on the objective function, while the first term minimizes the intra-class Euclidean distance from the training samples to the cluster centers. All the neurons in the same row compete with one another to determine the membership grades and typicality degrees with which the training sample represented by that row belongs to the clusters. In other words, the sum of the membership states in the same row equals 1 and the sum of the typicality states in the same column also equals 1. That is, the total sum of the membership states over all n rows equals n and the total sum of the typicality states over all c columns equals c. This ensures that all n samples are classified into c classes. The objective function in these networks can be further simplified as

$$E = \frac{1}{2} \sum_{x=1}^{n} \sum_{i=1}^{c} \left( \mu_{x,i}^{m} + t_{x,i}^{\eta} \right) \left\| z_x - \sum_{y=1}^{n} \frac{1}{\sum_{h=1}^{n} \left( \mu_{h,i}^{m} + t_{h,i}^{\eta} \right)} z_y \left( \mu_{y,i}^{m} + t_{y,i}^{\eta} \right) \right\|^2 \tag{16}$$

By using Eq. (16), the minimization of E is greatly simplified, since Eq. (16) contains only one term, removing the need to find the weighting factors A and B. Comparing Eq. (16) with the modified Lyapunov function in Eq. (14), the synaptic interconnection weights and the bias inputs for the proposed network can be obtained as

$$W_{x,i;y,i} = \frac{1}{\sum_{h=1}^{n} \left( \mu_{h,i}^{m} + t_{h,i}^{\eta} \right)} z_y \tag{17}$$

$$I_{x,i} = 0 \tag{18}$$

and

$$K_{x,i} = 0 \tag{19}$$

By introducing Eqs. (17), (18), and (19) into Eq. (13), the input of neuron (x,i) can be expressed as

$$Net_{x,i} = \left\| z_x - \frac{1}{\sum_{h=1}^{n} \left( \mu_{h,i}^{m} + t_{h,i}^{\eta} \right)} \sum_{y=1}^{n} z_y \left( \mu_{y,i}^{m} + t_{y,i}^{\eta} \right) \right\|^2 \tag{20}$$

Consequently, the neuron states at neuron (x,i) are given by

$$\mu_{x,i} = \left[ \sum_{j=1}^{c} \left( \frac{Net_{x,i}}{Net_{x,j}} \right)^{1/(m-1)} \right]^{-1} \quad \text{for all } i \tag{21}$$

$$t_{x,i} = \left[ \sum_{y=1}^{n} \left( \frac{Net_{x,i}}{Net_{y,i}} \right)^{1/(\eta-1)} \right]^{-1} \quad \text{for all } i \tag{22}$$

By directly mapping the training samples to the two-dimensional neuron array, the FPHN is trained to update all neuron states so that the input samples are classified into feasible clusters when the defined energy function converges to a near-global minimum.

6. Experimental Results

To show the performance of the FPHN, a data set proposed by Pal et al. [11] and real multi-spectral images were used for simulation on an IBM-compatible Pentium personal computer. The data set, shown in Figure 2, consists of 12 points in 2-D coordinates given in Table 1.

Figure 2. Coordinates of the data set.

Initially, the neuron states $\mu_{x,i}$ and $t_{x,i}$ are set randomly between 0 and 1. These two states are then modified iteratively for all neurons until they reach stable solutions, as the defined Lyapunov energy function converges to a near-global minimum value. The cluster centers for the run shown in Table 1 with c = 2 are [(-3.19, 0.31), (3.19, 0.31)] for FCM and [(-3.20, 0.27), (3.20, 0.27)] for FPHN. These results show that the centroids produced by the FPHN are more weakly influenced by point 12 than those produced by FCM. Table 2 shows the indices of the 12 points sorted by typicality value in each cluster. Tables 1 and 2 show that the FPHN obtains the same promising results as the FPCM. As in the FPCM, points 1-5 are most typical of cluster 1 and points 7-11 are most typical of cluster 2. Points 6 and 12 each have equal typicality values for both clusters, but the typicality value of point 12 is an order of magnitude smaller than that of point 6. This means that point 6 belongs to both clusters with proper grades and more strongly than point 12. It also means that the FPHN can prune outliers from the data to reduce the effects of noise.
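The state updates of Eqs. (20)-(22) can be sketched as a simple iteration. This is only an illustrative reading of those equations under stated assumptions: the function names, the `eps` guard, the fixed iteration count (used instead of monitoring the energy), the random seed, and the example points (which are hypothetical, not the data of Table 1) are all choices of this sketch.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two points given as sequences."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def fphn_step(samples, mu, t, m=3.0, eta=3.0, eps=1e-12):
    """One pass over all neurons: Eq. (20), then Eqs. (21) and (22)."""
    n, c, dim = len(samples), len(mu[0]), len(samples[0])
    # Combined weight (mu^m + t^eta) of every neuron (x, i).
    w = [[mu[x][i] ** m + t[x][i] ** eta for i in range(c)] for x in range(n)]
    # Weighted mean of the samples in each column, computed from the
    # incoming states; this plays the role of the cluster center.
    centers = []
    for i in range(c):
        total = sum(w[x][i] for x in range(n))
        centers.append([sum(w[x][i] * samples[x][k] for x in range(n)) / total
                        for k in range(dim)])
    # Eq. (20): net input is the squared distance to that weighted mean.
    # eps (an assumption) keeps the ratios below finite.
    net = [[sq_dist(samples[x], centers[i]) + eps for i in range(c)]
           for x in range(n)]
    pm, pe = 1.0 / (m - 1.0), 1.0 / (eta - 1.0)
    # Eq. (21): memberships compete along each row (over clusters).
    new_mu = [[1.0 / sum((net[x][i] / net[x][j]) ** pm for j in range(c))
               for i in range(c)] for x in range(n)]
    # Eq. (22): typicalities compete along each column (over samples).
    new_t = [[1.0 / sum((net[x][i] / net[y][i]) ** pe for y in range(n))
              for i in range(c)] for x in range(n)]
    return new_mu, new_t, centers


def fphn(samples, c, m=3.0, eta=3.0, iterations=100, seed=0):
    """Random states in [0, 1), then a fixed number of update passes."""
    rng = random.Random(seed)
    n = len(samples)
    mu = [[rng.random() for _ in range(c)] for _ in range(n)]
    t = [[rng.random() for _ in range(c)] for _ in range(n)]
    centers = []
    for _ in range(iterations):
        mu, t, centers = fphn_step(samples, mu, t, m, eta)
    return mu, t, centers


if __name__ == "__main__":
    # Hypothetical points with one distant outlier.
    pts = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 8.0)]
    mu, t, centers = fphn(pts, c=2)
    print(centers)
```

By construction, each row of the membership states sums to 1 and each column of the typicality states sums to 1 after every pass, which is the constraint that the second term of Eq. (15) encodes.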

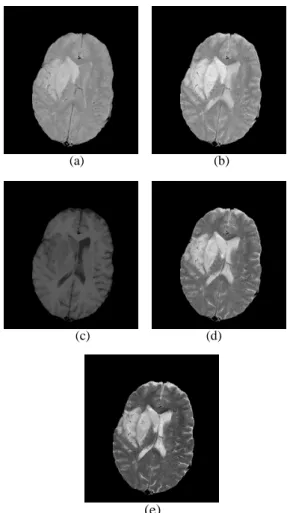

The other example is multi-spectral image classification of MR head images of a patient diagnosed with cerebral infarction, shown in Figure 3. These real images were acquired with T2-weighted sequences for channel images CH = 1, 2, 4, and 5 and a T1-weighted signal for channel image 3. The acquisition parameters, with different repetition times (TR) and echo times (TE), are TR1/TE1 = 2500 ms / 25 ms, TR2/TE2 = 2500 ms / 50 ms, TR3/TE3 = 500 ms / 20 ms, TR4/TE4 = 2500 ms / 75 ms, and TR5/TE5 = 2500 ms / 100 ms, respectively. Figure 4 shows the classified abnormal region with cerebral infarction. Experts indicated that the FPHN yields a more promising result than the fuzzy Hopfield neural network of reference [18].

Table 1. The membership grades and typicality degrees for FCM (m = 3) and FPHN (m = 3, η = 3)

| x | p1 | p2 | FCM µ x,1 | FCM µ x,2 | FPHN µ x,1 | FPHN µ x,2 | FPHN t x,1 | FPHN t x,2 |
|---|---|---|---|---|---|---|---|---|
| 1 | -3.34 | 0.00 | 0.95 | 0.05 | 0.95 | 0.05 | 0.0227 | 0.0012 |
| 2 | -3.34 | 1.67 | 0.96 | 0.04 | 0.96 | 0.04 | 0.0368 | 0.015 |
| 3 | -3.34 | 0.00 | 1.00 | 0.00 | 1.00 | 0.00 | 0.8664 | 0.0016 |
| 4 | -1.67 | -1.67 | 0.92 | 0.08 | 0.92 | 0.08 | 0.0178 | 0.0014 |
| 5 | -1.67 | 0.00 | 0.91 | 0.09 | 0.91 | 0.09 | 0.0287 | 0.0031 |
| 6 | 0.00 | 0.00 | 0.50 | 0.50 | 0.50 | 0.50 | 0.0067 | 0.0067 |
| 7 | 1.67 | 0.00 | 0.09 | 0.91 | 0.09 | 0.91 | 0.0028 | 0.0301 |
| 8 | 3.34 | 1.67 | 0.04 | 0.96 | 0.04 | 0.96 | 0.0015 | 0.0385 |
| 9 | 3.34 | 0.00 | 0.00 | 1.00 | 0.00 | 1.00 | 0.0016 | 0.8654 |
| 10 | 3.34 | -1.67 | 0.08 | 0.92 | 0.07 | 0.93 | 0.0014 | 0.0193 |
| 11 | 5.00 | 0.00 | 0.05 | 0.95 | 0.05 | 0.95 | 0.0010 | 0.0210 |
| 12 | 0.00 | 10.00 | 0.50 | 0.50 | 0.50 | 0.50 | 0.0005 | 0.0005 |
| Class center | | | (-3.19, 0.31) | (3.19, 0.31) | (-3.20, 0.27) | (3.20, 0.27) | | |

Table 2. The indices of the 12 points corresponding to a sort on t x,1 and t x,2

| Typicality order | Point indices (most to least typical) |
|---|---|
| t x,1 | 3 2 5 1 4 6 7 9 8 10 11 12 |
| t x,2 | 9 8 7 11 10 6 5 3 2 4 1 12 |

Figure 3. The multi-spectral MR head images with cerebral infarction: (a) TR1/TE1 = 2500 ms / 25 ms; (b) TR2/TE2 = 2500 ms / 50 ms; (c) TR3/TE3 = 500 ms / 20 ms; (d) TR4/TE4 = 2500 ms / 75 ms; (e) TR5/TE5 = 2500 ms / 100 ms.

Figure 4. The classified image using the proposed FPHN in channel 2 with TR2/TE2 = 2500 ms / 50 ms.

7. Discussion and Conclusions

A modified Hopfield network model called the Fuzzy-Possibilistic Hopfield Net (FPHN) is proposed for the clustering problem. It embeds the fuzzy-possibilistic c-means strategy and gives each neuron two states, a membership state and a typicality state. The proposed FPHN not only solves the noise-sensitivity fault of fuzzy c-means but also overcomes the simultaneous clustering problem of the possibilistic c-means strategy. Therefore, the FPHN can prune outliers from the data to reduce the effects of noise. Moreover, the FPHN is a self-organized, highly interconnected neural-network-based structure that can be implemented in a parallel manner. It can also be designed for hardware devices to achieve very high-speed implementation.

Acknowledgements

This work is supported by the National Science Council, ROC, under Grant NSC89-2218-E-167-001.

References

[1] G. H. Ball and D. J. Hall, "A clustering technique for summarizing multivariate data," Behav. Sci., vol. 12, pp. 153-155, 1967.
[2] J. C. Dunn, "A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters," J. Cybern., vol. 3, pp. 32-57, 1974.
[3] H. J. Zimmermann, Fuzzy Set Theory and Its Applications, Kluwer: Boston, 1991.
[4] M. A. Ismail and S. Z. Selim, "Fuzzy c-mean: optimality of solutions and effective termination of the algorithm," Pattern Recognition, vol. 19, pp. 481-485, 1986.
[5] J. C. Bezdek, "Fuzzy mathematics in pattern classification," Ph.D. dissertation, Applied Mathematics, Cornell University, Ithaca, New York, 1973.
[6] M. S. Yang, "On a class of fuzzy classification maximum likelihood procedures," Fuzzy Sets and Systems, vol. 57, pp. 365-375, 1993.
[7] M. S. Yang and C.-F. Su, "On parameter estimation for normal mixtures based on fuzzy clustering algorithms," Fuzzy Sets and Systems, vol. 68, pp. 13-28, 1994.
[8] Jzau-Sheng Lin, "Fuzzy clustering using a compensated fuzzy Hopfield network," Neural Processing Letters, vol. 10, pp. 35-48, 1999.
[9] R. Krishnapuram and J. M. Keller, "A possibilistic approach to clustering," IEEE Trans. on Fuzzy Systems, vol. 1, pp. 98-110, 1993.
[10] R. Krishnapuram and J. M. Keller, "The possibilistic c-means algorithm: insights and recommendations," IEEE Trans. on Fuzzy Systems, vol. 4, pp. 385-393, 1996.
[11] N. R. Pal, K. Pal, and J. C. Bezdek, "A mixed c-means clustering model," IEEE International Conf. on Fuzzy Systems, vol. 1, pp. 11-21, 1997.
[12] Jzau-Sheng Lin, K.-S. Cheng, and C.-W. Mao, "Segmentation of multispectral magnetic resonance image using penalized fuzzy competitive learning network," Computers and Biomedical Research, vol. 29, pp. 314-326, 1996.
[13] A. Kanstein, M. Thomas, and K. Goser, "Possibilistic reasoning neural network," IEEE International Conference on Neural Networks, vol. 4, pp. 2541-2545, 1997.
[14] J. J. Hopfield, "Neural networks and physical systems with emergent collective computational abilities," Proc. Nat. Acad. Sci. USA, vol. 79, pp. 2554-2558, 1982.
[15] J. J. Hopfield and D. W. Tank, "Neural computation of decisions in optimization problems," Biol. Cybern., vol. 52, pp. 141-152, 1985.
[16] Jzau-Sheng Lin and S.-H. Liu, "A competitive continuous Hopfield neural network for vector quantization in image compression," Eng. Appli. of Artif. Intell., vol. 12, pp. 111-118, 1999.
[17] Jzau-Sheng Lin, K.-S. Cheng, and C.-W. Mao, "A fuzzy Hopfield neural network for medical image segmentation," IEEE Trans. Nucl. Sci., vol. 43, pp. 2389-2398, 1996.
[18] Jzau-Sheng Lin, K.-S. Cheng, and C.-W. Mao, "Multispectral magnetic resonance images segmentation using fuzzy Hopfield neural network," J. Biomed. Comput., vol. 42, pp. 205-214, 1996.
[19] Jzau-Sheng Lin, "Segmentation of medical images through a penalized fuzzy Hopfield network with moments preservation," to appear in J. of the Chinese Institute of Engineers.
[20] D.-C. Cheng, Q. Pu, K.-S. Cheng, and H. Burkhardt, "Possibilistic Hopfield neural network on CT brain hemorrhage image segmentation," Proc. of the 4th Asian Conf. on Computer Vision, vol. II, pp. 871-876, 2000.
