National Science Council, Executive Yuan: Research Project Final Report

Development of a Gesture-Based Interactive Control System for Remote Pointing Input (Year 2): Research Report (Full Version)

Project type: Individual project

Project number: NSC 96-2628-E-011-007-MY2

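Execution period: 1 August 2008 to 31 July 2009 (ROC years 97 to 98). Executing unit: Department of Industrial Management, National Taiwan University of Science and Technology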

Principal investigator: 李永輝 (Lee, Yung-Hui)

Project personnel: master's students serving as part-time research assistants: 陳建州, 劉燕萍, 黃美甄; doctoral student serving as part-time research assistant: 林蕙如

Report attachments: report on attendance at an international conference and the presented paper

Handling: this project is open to public inquiry

23 September 2009 (Republic of China year 98)

National Science Council, Executive Yuan: Funded Research Project

□ Final report

Development of a Gesture-Based Interactive Control System for Remote Pointing Input

(Year 2 of a two-year project)

A remote input device based on controls from a finger gesture recognition system

Project type: □ Individual project

Project number: NSC 96-2628-E-011-007-MY2

Execution period: 1 August 2007 to 31 July 2009 (ROC years 96 to 98)

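Principal investigator: 李永輝, Department of Industrial Management, National Taiwan University of Science and Technology. Project participants: 柯志涵, 葉陳鴻, 吳淑楷, Department of Industrial Management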

Report type (submitted as required by the approved budget list): □ Concise report

This report includes the following required attachments:

□ One report on overseas travel or study

□ One report on travel or study in mainland China

█ One report on attendance at an international academic conference and one presented paper

□ One overseas research report for an international cooperative research project

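Handling: except for industry-academia cooperative research projects, projects for enhancing industrial technology and talent cultivation, controlled projects, and the cases listed below, the report may be made available for public inquiry immediately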

Executing unit: Department of Industrial Management, National Taiwan University of Science and Technology

20 September 2009 (Republic of China year 98)

Data Sheet of Research Results Available for Dissemination

□ Patentable □ Available for technology transfer

Date: 20 September 2009 (ROC year 98)

NSC-funded project

Project title: Development of a Gesture-Based Interactive Control System for Remote Pointing Input. Principal investigator: 李永輝

Project number: NSC 96-2628-E-011-007-MY2. Discipline: Industrial Engineering and Management

Technology/creation name: Gesture-Based Interactive Control System for Remote Pointing Input. Inventor/creator: 李永輝

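Technical description (Chinese version, translated): Two cameras are used to establish a three-dimensional space in which natural hand movements serve as the input control device. The skin color of the hands and head is extracted, and the line connecting the head and hand is projected onto the screen for remote pointing control.

Technical description (English version): A goal-directed pointing tracking system. We combine stereoscopic range information and skin-color classification to achieve robust 3D tracking of free-hand movements. The setup consists of two fixed-baseline cameras connected to a PC.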

Applicable industries and products that can be developed

Remote input devices for smart environments

Technical features: an input method based on natural postures in three-dimensional space

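Value for dissemination and application: integrated with smart environments, the system is best suited to interaction that takes place at a distance or on large screens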

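※ 1. Fill in two copies for each research result. Submit one to the Council with the final report, and send the other to your institution's results-dissemination unit (for example, the technology transfer center).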

A remote, hands-free, 3-dimensional finger trajectory tracking system and its application

Lee, Yung-Hui and Ko, Chin-Han

Department of Industrial Management, National Taiwan University of Science and Technology, No. 43 Kee-lung Road, Section 4, Taipei, Taiwan, ROC

yhlee@im.ntust.edu.tw

Keywords: finger and head orientation, 3-dimensional tracking, remote interaction

In this paper, we describe a simple and inexpensive solution for real-time 3D input of hand and head orientation, called the “goal-directed pointing tracking system (GPTS).” We present (1) the design of GPTS, which tracks hand and head orientations, (2) an experimental verification of the reliability of GPTS, and (3) an interaction with a music player. GPTS recognized a range of gestures performed in our 3D video-based recognition environment.

In this scenario, the recognition rate exceeded 90% when the user’s head and hands were tracked.

INTRODUCTION

As an alternative to traditional input devices, researchers are exploring goal-directed pointing movements as an input method for computer games, interactive computer graphics, and remote control of home appliances in smart environments. Pointing is a movement of the hand/arm toward a specific object, location, or direction. Among the gestures humans perform when communicating with each other or with machines, pointing offers the highest spatial compatibility. Recognizing pointing movements requires detecting both the occurrence of finger-hand-arm movements and the pointing direction (Kai, et al., 2003).

Most pointing-movement recognition systems rely on forearm orientation only. Our previous study of remote pointing accuracy at a distance of 3 m used targets 7.5 cm in radius with no visual cue. In that condition the hit rate was only 66.0% and the spread was 7.02 ± 5.30 cm (Lee, et al., 2008). When a person points at an object, the eye, the finger, and the object should be collinear. This study therefore hypothesized that including hand and head orientation in the determination of the pointing location would improve pointing accuracy (Lee, et al., 2001).
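The collinearity assumption can be sketched as a ray-plane intersection: extend the line from the head through the hand until it meets the screen. The Python sketch below is illustrative only. It assumes the screen is the plane y = screen_y in the tracker frame, with Y as the forward/backward axis as in the validation section. The function name `pointing_target` and the flat-screen model are hypothetical and are not taken from the GPTS implementation.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def pointing_target(head: Vec3, hand: Vec3, screen_y: float) -> Optional[Tuple[float, float]]:
    """Intersect the head->hand ray with the (assumed) screen plane y = screen_y.

    Returns the (x, z) hit point on the plane, or None when the ray is
    parallel to the plane or points away from it.
    """
    hx, hy, hz = head
    px, py, pz = hand
    dx, dy, dz = px - hx, py - hy, pz - hz  # pointing direction: head -> hand
    if abs(dy) < 1e-9:
        return None  # ray runs parallel to the screen plane
    t = (screen_y - hy) / dy  # ray parameter where it meets the plane
    if t <= 0:
        return None  # the screen lies behind the user
    return (hx + t * dx, hz + t * dz)
```

For example, `pointing_target((0.0, 0.0, 1.6), (0.1, 0.6, 1.5), 3.2)` places the hit point about 0.53 m to the right of and 0.53 m below the head's projection on a screen 3.2 m away.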

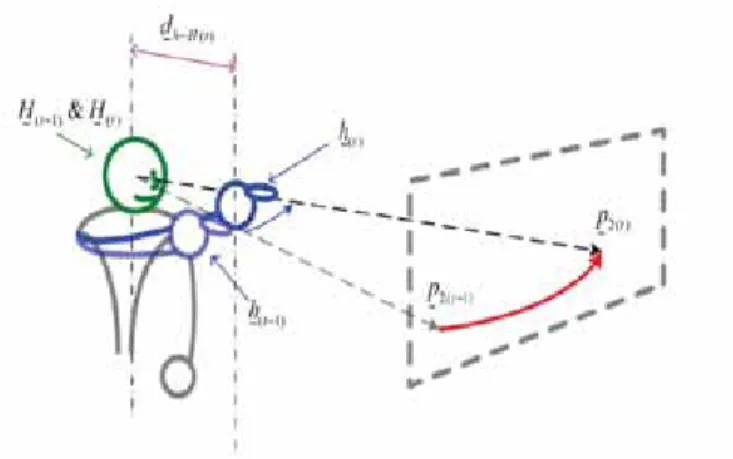

Figure 1 shows the remote-interaction environment. Hand and head orientations are recognized there, and the pointing direction is determined by extending the line from the head through the hand. The accuracy of this goal-directed pointing movement has not yet been established.

Figure 1: Example of remote interaction with electronic systems.

GPTS provides real-time 3D input of hand and head orientation. We present (1) the design of GPTS, which tracks hand and head orientations, (2) an experimental verification of the reliability of GPTS based on calibration of pointing accuracy in 3D, and (3) an interaction with a music player that supports play, pause, volume control, and moving to the previous or next song.

GOAL-DIRECTED POINTING TRACKING SYSTEM

In this study, we combine stereoscopic range information and skin-color classification to achieve robust tracking. The setup consists of two fixed-baseline cameras connected to a PC.

3-D Calibration

The direct linear transformation (DLT) was applied to data from the two-camera system to obtain 3D coordinates. The focal lengths, positions, rotation angles, and distance parameters of the two cameras were measured and used as calibration inputs.
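The paper does not give its exact DLT formulation. As an assumption, the sketch below uses the textbook 11-parameter DLT, which is estimated by linear least squares from control points with known 3D coordinates (for instance, the calibration-frame markers described under System Validations). Each point contributes two linear equations in L1 to L11, so at least six non-coplanar points are required. `dlt_calibrate` and `dlt_project` are hypothetical names.

```python
import numpy as np


def dlt_calibrate(world: np.ndarray, image: np.ndarray) -> np.ndarray:
    """Estimate one camera's 11 DLT parameters by linear least squares.

    world: (N, 3) known marker coordinates, N >= 6 and not all coplanar.
    image: (N, 2) matching image coordinates (u, v) in this camera.
    Model: u = (L1 X + L2 Y + L3 Z + L4) / (L9 X + L10 Y + L11 Z + 1),
           v = (L5 X + L6 Y + L7 Z + L8) / (L9 X + L10 Y + L11 Z + 1).
    """
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(world, image):
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        rhs.extend([u, v])
    params, *_ = np.linalg.lstsq(np.asarray(rows, float), np.asarray(rhs, float), rcond=None)
    return params  # L1..L11


def dlt_project(params: np.ndarray, point: np.ndarray) -> np.ndarray:
    """Project a 3D point to image coordinates (u, v) with the DLT model."""
    L = params
    X, Y, Z = point
    den = L[8] * X + L[9] * Y + L[10] * Z + 1.0
    u = (L[0] * X + L[1] * Y + L[2] * Z + L[3]) / den
    v = (L[4] * X + L[5] * Y + L[6] * Z + L[7]) / den
    return np.array([u, v])
```

Each of the two cameras is calibrated separately. The two parameter sets are what later allow paired 2D observations to be turned back into 3D coordinates.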

2-D Hand/Head Area Detection

GPTS uses skin detection and background subtraction to isolate the hand and head in each frame, and the isolated regions are then used for direction calculation. The skin detection algorithm is a modified version of that of McKenna and Morrison (2004). Hand and head areas were detected by finding skin regions that fall inside an elliptical cylinder in the YCbCr color space of the images from the two cameras. Only skin-colored pixels are collected into a coordinate sheet.

A binary dataset is the most memory-efficient format for this purpose.
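The skin test can be sketched as an ellipse in the (Cb, Cr) plane that ignores luminance Y, which makes it a cylinder along the Y axis of YCbCr space. The output is a binary mask. The conversion below uses the standard full-range BT.601 (JPEG) formulas. The ellipse centre and semi-axes are hypothetical placeholders and are not the values used in GPTS. A fitted ellipse could also be rotated, which this sketch leaves out.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]

# Hypothetical skin ellipse in the (Cb, Cr) plane; illustrative values only.
CB0, CR0 = 102.0, 153.0  # ellipse centre
A_CB, B_CR = 25.0, 20.0  # semi-axes along Cb and Cr


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Full-range BT.601 RGB -> YCbCr conversion."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def is_skin(r: int, g: int, b: int) -> bool:
    # Y is ignored, so the ellipse extends along Y as a cylinder.
    _, cb, cr = rgb_to_ycbcr(r, g, b)
    return ((cb - CB0) / A_CB) ** 2 + ((cr - CR0) / B_CR) ** 2 <= 1.0


def skin_mask(image: List[List[Pixel]]) -> List[List[int]]:
    """Binary mask: 1 for skin-coloured pixels, 0 otherwise."""
    return [[1 if is_skin(*px) else 0 for px in row] for row in image]


def skin_coordinates(mask: List[List[int]]) -> List[Tuple[int, int]]:
    """The 'coordinate sheet': (row, col) of every skin pixel."""
    return [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
```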

An erosion algorithm was used to filter out noise. K-means clustering (K = 3) then grouped the coordinate sheet into three skin-area sets, which give the 2D positions of the head and the two hands. These operations are applied to both images in the initial state.
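The erosion and clustering steps can be sketched in plain Python. The erosion uses an assumed 3×3 structuring element, and K-means uses K = 3 with a deterministic farthest-point initialisation. The structuring element, the initialisation, and the function names are all assumptions, because the paper does not specify them. The three returned centroids correspond to the head and the two hands. The sketch does not decide which centroid is which.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def erode(mask: List[List[int]]) -> List[List[int]]:
    """3x3 binary erosion: keep a pixel only if it and all 8 neighbours are 1."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            if all(mask[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)):
                out[r][c] = 1
    return out


def kmeans(points: Sequence[Point], k: int = 3, iters: int = 50) -> List[Point]:
    """Lloyd's K-means; returns the k cluster centroids."""
    # Farthest-point initialisation (an assumption; deterministic for repeatability).
    centres = [points[0]]
    while len(centres) < k:
        centres.append(max(points, key=lambda p: min(math.dist(p, c) for c in centres)))
    for _ in range(iters):
        clusters: List[List[Point]] = [[] for _ in range(k)]
        for p in points:  # assign each point to its nearest centre
            j = min(range(k), key=lambda i: math.dist(p, centres[i]))
            clusters[j].append(p)
        new = [  # move each centre to the mean of its cluster
            (sum(p[0] for p in cl) / len(cl), sum(p[1] for p in cl) / len(cl)) if cl else centres[i]
            for i, cl in enumerate(clusters)
        ]
        if new == centres:
            break  # assignments stable: converged
        centres = new
    return centres
```

Eroding before clustering removes isolated false-positive pixels. Without that step, such pixels could pull a centroid away from a hand or the head.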

2-D Hand/Head Position Tracking

Scanning the whole stereo image in every frame is inefficient. Once the initial 2D hand and head coordinates had been obtained, kernel-based object tracking (Comaniciu et al., 2003) was therefore adopted. A color histogram describes each target. The current and previous target distributions are compared, and the position is updated using gradient information until the correlation coefficient is large enough, at which point the search stops. Hand and head trajectories change smoothly from frame to frame, so the method works efficiently in this application.
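The tracking step can be sketched as mean-shift iteration in the spirit of Comaniciu et al. (2003). Each pixel in the window is weighted by sqrt(q_u / p_u), where q is the target histogram and p is the candidate histogram for the pixel's colour bin. The window then moves to the weighted mean position, and iteration stops when it no longer moves. For brevity this sketch uses a flat kernel and integer positions, whereas the original method weights pixels with a kernel profile. The input is assumed to be already quantised into colour-bin indices. The function names are hypothetical.

```python
import numpy as np


def histogram(bins: np.ndarray, centre, half: int, n_bins: int) -> np.ndarray:
    """Normalised colour histogram of the (2*half+1)^2 window at centre (flat kernel)."""
    r, c = centre
    window = bins[r - half:r + half + 1, c - half:c + half + 1]
    h = np.bincount(window.ravel(), minlength=n_bins).astype(float)
    return h / h.sum()


def mean_shift_track(bins: np.ndarray, q: np.ndarray, start, half: int, n_bins: int, max_iter: int = 20):
    """Shift a window from start towards the region whose histogram best matches q.

    bins: 2D int array of quantised colour indices; q: target histogram.
    start must keep the window inside the image. Returns the final centre and
    the Bhattacharyya coefficient between q and the final window's histogram.
    """
    height, width = bins.shape
    r, c = start
    for _ in range(max_iter):
        p = histogram(bins, (r, c), half, n_bins)
        # Per-bin weight sqrt(q_u / p_u); bins absent from the window get 0.
        ratio = np.sqrt(np.divide(q, p, out=np.zeros_like(q), where=p > 0))
        window = bins[r - half:r + half + 1, c - half:c + half + 1]
        w = ratio[window]
        if w.sum() == 0:
            break  # no target colour inside the window
        rows, cols = np.mgrid[r - half:r + half + 1, c - half:c + half + 1]
        nr = int(round(float((w * rows).sum() / w.sum())))
        nc = int(round(float((w * cols).sum() / w.sum())))
        nr = min(max(nr, half), height - 1 - half)  # keep the window inside the image
        nc = min(max(nc, half), width - 1 - half)
        if (nr, nc) == (r, c):
            break  # converged: the mean shift vanished
        r, c = nr, nc
    rho = float(np.sum(np.sqrt(q * histogram(bins, (r, c), half, n_bins))))
    return (r, c), rho
```

Because the search begins at the previous frame's position, only a few window evaluations are needed per frame. This is the saving over rescanning the whole stereo image.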

Figure 2: Hand/head position tracking and the pointing movement

3-D Goal-directed pointing movement

The pointing trajectories were smoothed with a Kalman filter (Keskin, et al., 2003). The paired 2D hand and head positions from the two images were combined with the DLT parameters to calculate the 3D coordinates of the goal-directed pointing. Sequences of hand and head positions were then used for goal-directed pointing control.
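The step that combines the two views can be sketched with the standard DLT reconstruction. Each camera's parameters L1 to L11, together with one observed image point (u, v), yield two linear equations in the unknown (X, Y, Z). Two cameras therefore give four equations, which are solved here by least squares. This reflects the textbook DLT formulation and is not necessarily the authors' exact procedure. `dlt_reconstruct` is a hypothetical name.

```python
from typing import Sequence, Tuple

import numpy as np


def dlt_reconstruct(cams: Sequence[np.ndarray], uvs: Sequence[Tuple[float, float]]) -> np.ndarray:
    """Recover (X, Y, Z) from one (u, v) observation per calibrated camera.

    cams: 11-element DLT parameter vectors L1..L11, one per camera.
    uvs:  matching image coordinates of the same hand or head point.
    """
    rows, rhs = [], []
    for L, (u, v) in zip(cams, uvs):
        # From u * (L9 X + L10 Y + L11 Z + 1) = L1 X + L2 Y + L3 Z + L4, and likewise for v.
        rows.append([L[0] - u * L[8], L[1] - u * L[9], L[2] - u * L[10]])
        rows.append([L[4] - v * L[8], L[5] - v * L[9], L[6] - v * L[10]])
        rhs.extend([u - L[3], v - L[7]])
    xyz, *_ = np.linalg.lstsq(np.array(rows, float), np.array(rhs, float), rcond=None)
    return xyz
```

Both the hand and the head points would be reconstructed this way in each frame. The resulting pair of 3D points is what defines the pointing line.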

The system recognizes gestures at 20 Hz. The velocity and acceleration of the pointing movements were then calculated.
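The smoothing and differentiation steps can be sketched for one coordinate axis. The sketch uses a constant-velocity Kalman filter sampled at the reported 20 Hz, whose state is position and velocity, followed by a finite-difference acceleration. The constant-velocity model, the noise settings `q` and `r`, and the function names are all assumptions. Keskin et al. (2003) is cited for the use of a Kalman filter, and this sketch is not claimed to reproduce their model.

```python
import numpy as np

DT = 1.0 / 20.0  # frame interval for the reported 20 Hz rate


def kalman_cv(z: np.ndarray, q: float = 1.0, r: float = 1e-4):
    """Constant-velocity Kalman filter for one coordinate axis.

    z: measured positions, one per frame.
    q: process-noise intensity (white acceleration); r: measurement-noise
    variance. Both are hypothetical tuning values.
    Returns filtered positions and velocities as arrays.
    """
    F = np.array([[1.0, DT], [0.0, 1.0]])  # state transition
    H = np.array([[1.0, 0.0]])  # only position is observed
    Q = q * np.array([[DT**4 / 4, DT**3 / 2], [DT**3 / 2, DT**2]])
    R = np.array([[r]])
    x = np.array([z[0], 0.0])  # start at the first sample, at rest
    P = np.eye(2)
    positions, velocities = [], []
    for zk in z:
        x = F @ x  # predict
        P = F @ P @ F.T + Q
        innovation = zk - H @ x  # shape (1,)
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)  # Kalman gain, shape (2, 1)
        x = x + K @ innovation  # update
        P = (np.eye(2) - K @ H) @ P
        positions.append(x[0])
        velocities.append(x[1])
    return np.array(positions), np.array(velocities)


def acceleration(velocities: np.ndarray) -> np.ndarray:
    """Finite-difference acceleration of a velocity series sampled at 20 Hz."""
    return np.gradient(velocities, DT)
```

Because the velocity is part of the filter state, it comes out of the filter without differencing noisy positions. Only the acceleration is obtained by finite differences here.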

SYSTEM VALIDATIONS

To evaluate the performance of our system, we conducted a calibration test and an aiming-stability test. The calibration frame, one cubic meter in size, carried 16 markers whose geometric relationships and 3D Cartesian coordinates were known. The frame was moved around within the field of view of the two cameras at a distance of 3.6 m. The 3D coordinates of the markers were calculated. The standard deviations of the differences in the mean coordinates along the three axes are presented in Table 1.

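Table 1: Reliability of 3D calibration (N = 16); standard deviation (cm) per experiment

| Experiment | x: Moving σ̂ (cm) | x: Range (cm) | z: Moving σ̂ (cm) | z: Range (cm) |
| --- | --- | --- | --- | --- |
| 1 | 2.26 | 9.3 | 1.66 | 4.60 |
| 2 | 2.18 | 8.6 | 1.83 | 6.32 |
| 3 | 2.94 | 12.1 | 1.56 | 5.44 |
| 4 | 2.54 | 10.9 | 1.48 | 4.89 |
| 5 | 2.04 | 8.9 | 1.42 | 5.72 |
| 6 | 2.66 | 11.2 | 1.87 | 6.08 |
| 7 | 2.29 | 10.2 | 1.45 | 5.42 |
| 8 | 2.61 | 11.3 | 2.03 | 6.48 |
| 9 | 1.97 | 8.5 | 1.49 | 5.68 |
| 10 | 2.74 | 10.2 | 1.63 | 5.52 |
| Overall | 2.44 | 10.1 | 1.66 | 5.62 |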
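The paper does not define "Moving σ̂" precisely. One plausible reading is sketched below. For each marker, take the difference between its reconstructed and known coordinates, then report the sample standard deviation and the range (max minus min) of those differences on each axis. This interpretation and the function name are assumptions.

```python
from statistics import stdev
from typing import Dict, Sequence, Tuple

Point3 = Tuple[float, float, float]


def calibration_error_stats(known: Sequence[Point3], measured: Sequence[Point3]) -> Dict[str, Tuple[float, float]]:
    """Per-axis sample standard deviation and range of (measured - known).

    known, measured: equal-length sequences of (x, y, z) marker coordinates in
    cm, with at least two markers. Returns {axis: (sd, range)}.
    """
    stats = {}
    for axis, name in enumerate("xyz"):
        diffs = [m[axis] - k[axis] for k, m in zip(known, measured)]
        stats[name] = (stdev(diffs), max(diffs) - min(diffs))
    return stats
```

Applied to the 16 markers of one placement of the frame, this would produce one row of x and z entries in a table like Table 1.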

Table 2 presents the aiming stability and range of 10 goal-directed pointing movements. The test distance of 3.2 m is about five times the length of the head/hand link, and at that distance aiming accuracy stayed within a range of 10 cm on the X-axis and 6 cm on the Z-axis.
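A simple geometric model, added here for illustration and not taken from the paper, shows why the ratio of target distance to head/hand link length matters. Place the head at the origin, and let the nominal pointing ray pass through the hand at distance $L$ (the head/hand link) before meeting a target plane perpendicular to it at distance $D$. Suppose the estimated hand position is off by $\varepsilon$ perpendicular to the ray. By similar triangles, the hit point on the target then moves by

$$
\Delta = \frac{D}{L}\,\varepsilon .
$$

With $D/L \approx 5$ as stated for the 3.2 m condition, a 1 cm error in the hand estimate would shift the pointed location by about 5 cm on the target.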

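Table 2: Pointing stability at 3.2 m

| No. | X-axis | Y-axis | Z-axis |
| --- | --- | --- | --- |
| 1 | 0.012 | 0.124 | 0.032 |
| 2 | 0.011 | 0.326 | 0.012 |
| 3 | 0.006 | 0.475 | 0.021 |
| 4 | 0.011 | 0.102 | 0.015 |
| 5 | 0.007 | 0.079 | 0.014 |
| 6 | 0.003 | 0.207 | 0.033 |
| 7 | 0.008 | 0.292 | 0.007 |
| 8 | 0.016 | 0.166 | 0.017 |
| 9 | 0.004 | 0.143 | 0.036 |
| 10 | 0.012 | 0.078 | 0.009 |
| Total σ | 0.031 | 0.739 | 0.070 |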

The reliability test of the 3D coordinates showed that most of the variation (< 0.8 cm) occurred along the Y-axis (forward and backward movements).
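The "total σ" row of Table 2 matches, to the printed precision, a root-sum-square of the ten per-trial values on each axis. This suggests the totals were formed that way, although the paper does not say so. The check below uses only the values printed in Table 2.

```python
import math
from typing import Sequence

# Per-trial values from Table 2 (10 pointing movements at 3.2 m).
x = [0.012, 0.011, 0.006, 0.011, 0.007, 0.003, 0.008, 0.016, 0.004, 0.012]
y = [0.124, 0.326, 0.475, 0.102, 0.079, 0.207, 0.292, 0.166, 0.143, 0.078]
z = [0.032, 0.012, 0.021, 0.015, 0.014, 0.033, 0.007, 0.017, 0.036, 0.009]


def root_sum_square(values: Sequence[float]) -> float:
    """Combine independent per-trial deviations as sqrt(sum of squares)."""
    return math.sqrt(sum(v * v for v in values))


totals = [round(root_sum_square(axis), 3) for axis in (x, y, z)]
```

The result is `[0.031, 0.739, 0.07]`, matching the printed totals of 0.031, 0.739, and 0.070.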

Figure 3: Aiming stability at 3.2 m

SYSTEM APPLICATION

To demonstrate the advantages of GPTS, we built a remote-controlled music player operated by free-hand commands. The commands were recognized by continuously monitoring changes in the 3D coordinates and in the rotational angle of the pointing movements, either clockwise or counter-clockwise.

Figure 4: Tracking changes in the rotational angle, clockwise and counter-clockwise
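Monitoring the rotational angle can be sketched as follows. The sketch sums the frame-to-frame change in angle of the pointing location around the trajectory's centroid, unwrapping each step into (-π, π]. The sign of the total gives the direction, and its magnitude gives the number of turns. The centroid reference, the axis convention (x to the right, z up as seen by the user), and the `min_turns` threshold are assumptions. A camera-side view would mirror the direction.

```python
import math
from typing import Optional, Sequence, Tuple


def swept_angle(points: Sequence[Tuple[float, float]]) -> float:
    """Total signed angle (radians) swept around the trajectory's centroid.

    Positive means counter-clockwise and negative clockwise, for x to the
    right and z up as seen by the user (an assumed convention).
    """
    cx = sum(p[0] for p in points) / len(points)
    cz = sum(p[1] for p in points) / len(points)
    angles = [math.atan2(z - cz, x - cx) for x, z in points]
    total = 0.0
    for a0, a1 in zip(angles, angles[1:]):
        d = a1 - a0
        # Unwrap so each frame-to-frame step lies in (-pi, pi].
        while d > math.pi:
            d -= 2 * math.pi
        while d <= -math.pi:
            d += 2 * math.pi
        total += d
    return total


def rotation_direction(points: Sequence[Tuple[float, float]], min_turns: float = 0.75) -> Optional[str]:
    """Return "ccw", "cw", or None if fewer than min_turns circles were drawn.

    min_turns is a hypothetical threshold, not a value from the paper.
    """
    turns = swept_angle(points) / (2 * math.pi)
    if turns >= min_turns:
        return "ccw"
    if turns <= -min_turns:
        return "cw"
    return None
```

At 20 Hz, one second of circling supplies about 20 points, which is enough for this accumulation to separate the two directions clearly.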

In this application, the commands were defined as follows:

1. Command initiation: 20 consecutive frames are monitored for the formation of a circle with a continuously changing rotational angle. Initiation starts when there is a forward movement (push) along the Y-axis with a velocity greater than a threshold.

2. Play: a forward movement (push) along the Y-axis with a velocity greater than a threshold (same as command initiation).

3. Stop: a backward movement (pull) along the Y-axis with a velocity greater than a threshold. This must follow command initiation.

4. Volume up and down: the pointing movement moves toward the right/left with a velocity greater than a threshold. This must follow command initiation.

5. Next song: a clockwise circling movement lasting longer than 1 s, followed by a push.

6. Previous song: a counter-clockwise circling movement lasting longer than 1 s, followed by a push.
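The six definitions can be sketched as a small rule-based recognizer that is fed one 20 Hz frame at a time. This is a simplified reading of the list, and several choices are assumptions: a push both initiates and plays; circling for more than 20 frames (1 s) arms "next" or "previous" until the next push; stop and volume are ignored before initiation. The velocity threshold and all names are hypothetical. Per-frame rotation labels could come from an angle tracker like the one shown for Figure 4.

```python
from dataclasses import dataclass
from typing import Optional

FRAME_RATE = 20  # Hz, the recognition rate reported for GPTS
CIRCLE_FRAMES = FRAME_RATE  # "longer than 1 s" of circling, in frames
V_THRESHOLD = 0.5  # hypothetical velocity threshold (m/s)


@dataclass
class Frame:
    vx: float  # left (-) / right (+) velocity of the pointing location
    vy: float  # pull (-) / push (+) velocity along the Y-axis
    rotation: Optional[str] = None  # "cw", "ccw", or None if not circling


class CommandRecognizer:
    """Rule-based, simplified reading of the six command definitions."""

    def __init__(self) -> None:
        self.initiated = False
        self.run_dir: Optional[str] = None  # direction of the current circling run
        self.run_len = 0  # frames in the current circling run
        self.armed: Optional[str] = None  # circled > 1 s, waiting for a push

    def step(self, f: Frame) -> Optional[str]:
        """Feed one frame; return a command name or None."""
        if f.rotation is not None:
            if f.rotation == self.run_dir:
                self.run_len += 1
            else:
                self.run_dir, self.run_len = f.rotation, 1
            if self.run_len > CIRCLE_FRAMES:
                self.armed = self.run_dir
            return None
        self.run_dir, self.run_len = None, 0

        if f.vy > V_THRESHOLD:  # push
            if self.armed is not None:
                command = "next" if self.armed == "cw" else "previous"
                self.armed = None
                return command
            self.initiated = True  # a push both initiates and plays
            return "play"
        if not self.initiated:
            return None  # stop and volume require initiation first
        if f.vy < -V_THRESHOLD:
            return "stop"
        if f.vx > V_THRESHOLD:
            return "volume_up"
        if f.vx < -V_THRESHOLD:
            return "volume_down"
        return None
```

Keeping the rules in one small state machine makes it straightforward to swap in a learned classifier later, such as the HMM or recurrent network mentioned as future work.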

Experimental results showed that the average time to complete a gesture command was less than 4 s and that the recognition rate for all commands exceeded 90%.

Figure 5: Application of GPTS for a control of music player

CONCLUSION

In this paper, GPTS recognized a range of gestures in our 3D video-based recognition environment at a distance of 3 m. The system was applied to interaction with a music player. The recognition rate in this scenario is greater than 90% when the user’s head and hands are well tracked. Future work includes (a) a stronger recognition engine based on a recurrent neural network or hidden Markov model, (b) a more complete definition of gesture elements, in which movements in 3D space, movement speed, and movement strength carry more meaning, and (c) interaction with a larger display at a longer distance.

Acknowledgements

This study is supported by a grant from the National Science Council, R.O.C. (Project No. NSC 96-2628-E-011-007-MY2). The authors wish to acknowledge this financial support.

References

[1]Comaniciu, D., Ramesh, V., and Meer, P., 2003, Kernel-based object tracking, IEEE Transactions on Pattern Analysis and Machine Intelligence, 25, 564-575.

[2]Kai, N., Seemann, E., and Stiefelhagen, R., 2004, 3D-tracking of head and hands for pointing gesture recognition in a human-robot interaction scenario, Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, 565-570.

[3]Kai, N. and Stiefelhagen, R., 2003, Pointing gesture recognition based on 3D-tracking of face, hands and head orientation, ICMI’03, November 5-7, 2003, Vancouver, British Columbia, Canada.

[4]Lee, Y.H., Yeh, C.H., and Wu, S.K., 2008, Accuracy measurement of distant goal-directed hand pointing movements, Conference Proceedings of the 1st East Asia Ergonomics Symposium, Nov. 12-14, Kitakyushu, Japan.

[5]McKenna, S. J. and Morrison, K., 2004, A comparison of skin history and trajectory-based representation schemes for the recognition of user-specified gestures, Pattern Recognition, 37(5), 999-1009.

[6]Lee, M. S., Weinshall, D., Cohen-Solal, E., Colmenarez, A., and Lyons, D., 2001, A computer vision system for on-screen item selection by finger pointing, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hawaii, Dec. 2001.

[7]Keskin, C., Erkan, A., and Akarun, L., 2003, Real time hand tracking and 3D gesture recognition for interactive interfaces using HMM, ICANN 2003.