National Taiwan University

College of Electrical Engineering and Computer Science

Department of Electrical Engineering

Master Thesis

On the Price of Anarchy of Dynamic Equilibria

Jun-Wei Liang (梁峻瑋)

Advisor: Ho-Lin Chen, Ph.D. (陳和麟)

January 2021

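Acknowledgements

First, I sincerely thank Professor Ho-Lin Chen for his guidance over the past year and a half. From choosing the topic, selecting papers to read, working through examples, and conjecturing upper bounds for the model, to finally proving those bounds, every step of the research was guided by our weekly one-on-one meetings: each meeting set the direction, I spent some time during the week on the assigned work, and I reported the results the following week. By steadily completing these modest assignments throughout the second semester of my first year, I finished roughly two thirds of my results. In the first semester of my second year, I spent the first half completing the remaining research and the second half writing the thesis, and the oral defense was held in the second week of the winter break. I often sigh that such an enjoyable research life has come to an end, and I will miss this smooth and rewarding journey.

Second, I must especially thank the staff of the Department of Electrical Engineering office for accommodating me on procedural formalities and helping me through a series of administrative matters, including the early oral defense, the degree examination application, and the submission of degree grades. I also thank the Graduate Institute of Electrical Engineering at National Taiwan University for its repeated support and assistance along the way. My sincere thanks to all of you.

Finally, I thank my labmates for their cooperation over this year and a half. Although we rarely needed to collaborate directly, the harmonious atmosphere of the lab was maintained by all of you. I also thank my labmate 黃道譽, who gave up a summer vacation and an internship opportunity to work hard on research, so that our advisor finally agreed to let both of us take the oral defense early.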

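Abstract (Chinese version)

In this thesis, we study a network flow model that varies over time, called the “fluid queuing model”. Consider a directed graph injected with a continuous inflow, so that fluid particles propagate to every edge. For each edge, if the inflow rate exceeds the given capacity, the excess particles form a waiting queue, while the other particles traverse the edge within the given delay time. More precisely, we study the fluid queuing model from a game-theoretic viewpoint, called the dynamic equilibrium model, which is used to describe several problems. Examples include the Internet, central control systems for self-driving cars, and the process by which a CPU selects tasks.

Previously, several authors studied the fluid queuing model, such as Ford and Fulkerson [1,2], Gale [3], Anderson and Philpott [4], and Fleischer and Tardos [5]. Others studied the dynamic equilibrium model, such as Vickrey [6], Meunier and Wagner [7], Cominetti, Correa, and Larré [8], and Kaiser and Marcus [9]. Our work continues the PoA (Price of Anarchy) upper bound for networks with constant inflow under the monotonicity conjecture [10], and the PoA existence results for networks with continuous-function inflow [8,9]. On the one hand, the purpose of studying the PoA in series-parallel networks is to measure the inefficiency of the task of assigning jobs to processors. On the other hand, the purpose of studying the PoA of networks with continuous-function inflow is to narrow the gap between the model and reality. We believe that networks with dynamic inflow are closer to actual situations, such as a central control system for self-driving cars. In real life, traffic flow changes over time.

We find upper bounds on the PoA of networks with continuous-function inflow. We prove that the PoA upper bound is 2 for parallel-link networks and for two-stage, two-edge parallel networks, that series-parallel networks have a PoA upper bound equal to the “diameter of the network”, and that all networks have a PoA upper bound of $2|V| - 1$ under an assumption. This is the first thesis to study the PoA of networks with continuous-function inflow in the fluid queuing model. We reduce the PoA upper bounds of parallel-link networks and series-parallel networks from infinity to 2 and the “diameter of the network”, respectively. These two proven bounds differ markedly from the case of networks with constant inflow in the fluid queuing model. In addition, similar to earlier work on tax schemes [11], we design a simple tax scheme to reduce the inefficiency of the system. This may be of great help for networks with continuous-function inflow.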

Abstract

In this thesis, we study a time-varying network flow model called the “fluid queuing model”. Consider a directed graph injected with a continuous inflow, so that fluid propagates to each edge. For each edge, if the inflow rate exceeds the given capacity, the excess particles form a waiting queue, and the other particles pass through the edge within the given delay time. We study the game-theoretic aspect of the fluid queuing model, called the dynamic equilibrium model, which has been applied to describe several problems. Examples include the Internet, the central control system of self-driving cars, and task scheduling on CPU cores.

Previously, several authors studied the fluid queuing model, such as Ford and Fulkerson [1, 2], Gale [3], Anderson and Philpott [4], and Fleischer and Tardos [5]. Others studied the dynamic equilibrium model, such as Vickrey [6], Meunier and Wagner [7], Cominetti, Correa, and Larré [8], and Kaiser and Marcus [9]. Our study continues the PoA bound for networks with constant inflow under the monotonicity conjecture [10], and the existence results for the PoA of networks with dynamic inflow [8,9]. On the one hand, the purpose of studying the PoA in series-parallel networks is to measure the inefficiency of assigning tasks to processors. On the other hand, the purpose of studying the PoA of networks with dynamic inflow is to narrow the gap between the model and reality. We think that networks with dynamic inflow are closer to the actual situation of a central control system for autonomous vehicles. In the real world, traffic changes over time.

We find upper bounds on the PoA of networks with dynamic inflow. We prove a PoA bound of 2 for parallel-link networks and (2 + 2)-parallel-link networks, a PoA bound of the “network’s diameter” (denoted D(C)) for series-parallel networks, and a PoA bound of $2|V| - 1$ for general networks under an assumption. This thesis is the first study of the PoA for networks with dynamic inflow in the fluid queuing model. That is, we reduce the upper bound on the PoA of parallel-link networks and series-parallel networks from infinity to 2 and D(C), respectively. The bounds we prove differ from those for networks with constant inflow in the fluid queuing model. In addition, similar to the work on tax schemes [11], we design a simple tax scheme to reduce the inefficiency of the system. This may help considerably on networks with dynamic inflow.

Contents

Acknowledgements

Abstract in Chinese

Abstract in English

Contents

List of Figures

List of Tables

1 Introduction

2 Model

2.1 Fluid Queuing Model

2.2 Flow of Model

2.2.1 OPT flow

2.2.2 EQU flow

2.2.3 Throughput flow

2.3 Networks of Model

3 Main

3.1 Parallel-link Networks

3.2 Series-parallel Networks

3.3 (2 + 2)-parallel-link Network

4 Extension

5 Conclusions and Future Works

References

Letter of Authority

List of Figures

2.1 The diagram of “(2 + 2)-parallel-link Network”. In the first stage $C_1$, the source is s and the sink is p; in the second stage $C_2$, the source is p and the sink is t.

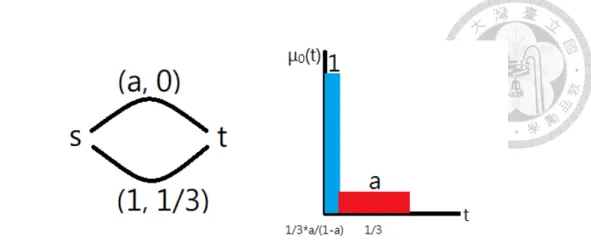

3.1 The diagram of “PoA of 2 Example”. The left part is the parallel-link network with edges $\{e_1 = (a, 0),\ e_2 = (1, \tfrac{1}{3})\}$; the right part is the inflow function, which is a step function with range $\{1, a\}$.

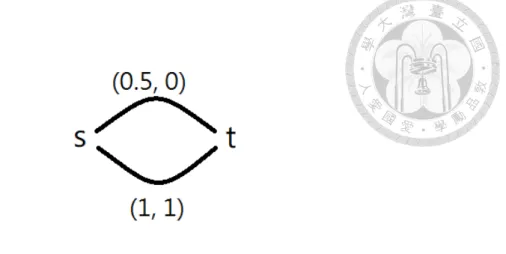

4.1 The diagram of “PoA of $\tfrac{4}{3}$ Example”. This is the parallel-link network with edges $\{e_1 = (0.5, 0),\ e_2 = (1, 1)\}$, the total amount $M = 1$, and the constant inflow function $\mu_0 = 1$.

4.2 The diagram of “PoA of $\tfrac{e}{e-1}$ Example”. This is the series-parallel-link network with edges $\{e_i = (u_i, 0),\ e_i = (u_i, \alpha\mu_m(\tfrac{1}{u_{OPT}} - \tfrac{1}{u_i}))\}_{i=1\sim m}$, the total amount $M = \alpha\mu_m$, and the constant inflow function $\mu_0 = \mu_m$, where $m \in \mathbb{N}$, $\alpha > 0$, $\mu_i = \sum_{k=1}^{i} \mu_k$.

4.3 The diagram of “PoA of 2 Example”. The left part is the parallel-link network with 2 edges $\{e_1 = (a, \sigma),\ e_2 = (b, \tau)\}$; the right part is the inflow function, which is a step function with range $\{1, a\}$.

List of Tables

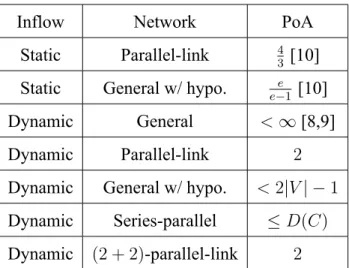

1.1 Summary of results. The PoA of 2 is a tight bound for parallel-link networks and (2 + 2)-parallel-link networks; the PoA of D(C) is a loose bound for series-parallel networks; the PoA of $2|V| - 1$ is a loose bound for general networks under an assumption.

Chapter 1

Introduction

In this thesis, we consider the game-theoretic aspect of the fluid queuing model proposed by Vickrey [6]. In the fluid queuing model, the system consists of a directed graph and a continuous inflow. Each edge of the graph has a capacity and a delay time. When flow traverses an edge, queuing occurs if the flow rate exceeds the capacity of the edge. The excess particles form a queue and wait, while the other particles pass through the edge, incurring its delay time as the time cost. The model views each of the infinitely many particles as a player. These players selfishly choose the shortest path from source to sink to minimize their travel time, which forms the equilibrium flow. The travel time of each particle is the sum of its waiting time at the source and the queuing time and delay time of each edge it chooses.

This model can be used to describe several problems. Examples include the Internet, the central control system of self-driving cars, and task processing on a CPU core.

Consider the problem of transferring packets on the Internet. We can model the Internet as a network, packets as the particles forming the inflow, and network congestion as the fluid queuing on each edge. In the example of traffic networks, we can model the traffic network as a network, vehicles as the particles forming the inflow, and the traffic jam on each road as the fluid queuing on each edge. Note that the optimal solution corresponds to a central control system for self-driving cars. For the problem of assigning tasks to processors, we can model each processor's execution time as an edge of a network, the processes as the particles forming the inflow, and the waiting queue of each machine as the fluid queuing on each edge. In this example, programming statements form series operations and parallel operations, such as for-loops or if-else branches. This is the motivation for studying series-parallel networks.

Previously, Ford and Fulkerson [1,2] provided an algorithm for the fluid queuing model with constant inflow that sends the maximal mass of flow within a given time. Gale [3] proved the existence of a flow that is optimal at each moment. Anderson and Philpott [4] and Fleischer and Tardos [5] extended this result from discrete moments to a continuous-time version.

The game-theoretic aspect of the fluid queuing model, which is called the dynamic equilibrium model and is introduced later under “Flow of Model”, was first studied by Vickrey [6]; Meunier and Wagner [7] proved the existence of dynamic equilibria.

For the fluid queuing model with dynamic inflow, Cominetti, Correa, and Larré [8] proved the existence of dynamic equilibria for piecewise constant inflow and for locally Lebesgue-integrable inflow. Kaiser and Marcus [9] gave a constructive proof of the existence of dynamic equilibria for locally Lebesgue-integrable inflow.

The purpose of studying the Price of Anarchy (PoA) is to evaluate and quantify the inefficiency of a system. The analysis of the PoA enables us to measure each kind of game and to design improved mechanisms for it. The PoA has been studied in several games, such as the Stackelberg game [12], the selfish routing game [13], and the network game, that is, the game-theoretic aspect of the fluid queuing model [10]. Focusing on networks with dynamic inflow, we discuss PoA bounds for parallel-link networks, series-parallel networks, and general networks in this thesis. On the other hand, the two papers in the previous paragraph showed that the PoA of the fluid queuing model with locally integrable inflow is finite, due to the existence of dynamic equilibria.

In the fluid queuing model, the price of anarchy for networks with constant inflow is tightly bounded by $\frac{e}{e-1}$ under a weak assumption, called the monotonicity conjecture. In particular, the price of anarchy for parallel-link networks is tightly bounded by $\frac{4}{3}$ without any assumption [10].

Given the existence of dynamic equilibria for locally integrable inflow and the PoA bound for networks with constant inflow, we want to find a PoA bound for series-parallel networks with locally integrable inflow, also called dynamic inflow. The purpose of studying the PoA of series-parallel networks is related to the problem of assigning tasks to processors. That is, we want to study the efficiency of the process-and-CPU system. On the other hand, the purpose of studying the PoA of networks with dynamic inflow is to narrow the gap between the model and reality. We think that networks with dynamic inflow are closer to real situations, such as the Internet or a central control system for self-driving cars. In the real world, traffic changes over time.

Our Result: We find upper bounds on the PoA of networks with dynamic inflow. We prove that the PoA of 2 is a tight bound for parallel-link networks and (2 + 2)-parallel-link networks; the PoA of series-parallel networks is upper bounded by D(C), where D(C) is closely related to the diameter of the network; and the PoA of general networks is upper bounded by $2|V| - 1$ under an assumption. These results are shown in Table 1.1.

This thesis is the first study of the PoA for networks with dynamic inflow in the fluid queuing model. That is, we reduce the upper bound on the PoA of parallel-link networks and series-parallel networks from infinity to 2 and D(C), respectively. The bounds we prove differ from those for networks with constant inflow in the fluid queuing model. In addition, similar to the work on tax schemes [11], we design a simple tax scheme, called the delay-time tax scheme, to improve the efficiency of the system. Surprisingly, the delay-time tax scheme does not work on networks with constant inflow but may help considerably on networks with dynamic inflow.

Our main technique is to use the total amount of inflow as an upper bound on the maximal throughput of the network, which yields a lower bound on the cost of optimal flows (that is, the optimal time) in the fluid queuing model. This technique helps us find a tight PoA bound for parallel-link networks and for a simple example of series-parallel networks, as well as a loose bound for general series-parallel networks. It provides an explicit PoA bound for series-parallel networks with dynamic inflow. It also reduces the work of finding a PoA bound to calculating the mass of the maximal throughput of the network. In addition, we design a tax scheme to improve social welfare as a possible remedy for the system's inefficiency.

| Inflow | Network | PoA |
|---|---|---|
| Static | Parallel-link | $\tfrac{4}{3}$ [10] |
| Static | General w/ hypo. | $\tfrac{e}{e-1}$ [10] |
| Dynamic | General | $< \infty$ [8,9] |
| Dynamic | Parallel-link | 2 |
| Dynamic | General w/ hypo. | $< 2\lvert V\rvert - 1$ |
| Dynamic | Series-parallel | $\le D(C)$ |
| Dynamic | (2 + 2)-parallel-link | 2 |

Table 1.1: Summary of results. The PoA of 2 is a tight bound for parallel-link networks and (2 + 2)-parallel-link networks; the PoA of D(C) is a loose bound for series-parallel networks; the PoA of $2|V| - 1$ is a loose bound for general networks under an assumption.

Chapter 2

Model

This chapter has three sections. First, we define the fluid queuing model, which is the basis of this thesis. Second, we introduce several kinds of flows in the fluid queuing model and define the Price of Anarchy. Third, we select some specific networks to study. These notions are used in the next chapter.

2.1 Fluid Queuing Model

Consider a network C given by a directed graph $G = (V, E)$ with source $s \in V$ and sink $t \in V$. Each edge $e_j$ has capacity $v_j$ and delay time $\tau_j$, denoted by $e_j = (v_j, \tau_j)$. Sometimes we use the notation $e_j = (a_j, \sigma_j)$ instead. Moreover, each edge has an infinite buffer to store queued particles.

In this thesis, the fluid queuing model is regarded as one model of network flow that changes over time. The inflow of the network, $\mu_0$, is a locally Lebesgue-integrable function.

Denote the last leaving time by $\hat\theta = \sup\{\theta \mid \mu_0(\theta) > 0\}$ and the total amount by

$$M = \int_0^{\hat\theta} \mu_0(\theta)\,d\theta.$$

Here, we assume that all particles have full information, which enables them to infer the state of the network at every moment.
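As a minimal sketch of these two quantities, assume the inflow is piecewise constant and encoded as a list of `(start, end, rate)` pieces. This encoding and the function names are illustrative assumptions, not notation from the thesis.

```python
from typing import List, Tuple

# Hypothetical encoding of a piecewise-constant inflow mu_0:
# each piece is (start time, end time, inflow rate on that interval).
Inflow = List[Tuple[float, float, float]]


def last_leaving_time(inflow: Inflow) -> float:
    """theta_hat = sup{theta | mu_0(theta) > 0} for a piecewise-constant inflow."""
    return max((end for _, end, rate in inflow if rate > 0), default=0.0)


def total_amount(inflow: Inflow) -> float:
    """M = integral of mu_0 over [0, theta_hat]; pieces with rate 0 add nothing."""
    return sum((end - start) * rate for start, end, rate in inflow)
```

For a general locally integrable inflow, the sum would be replaced by a numerical integral.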

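We now define the fluid queuing model, following [10]. For each $e \in E$ at time $\theta$, denote the queuing mass by $z_e(\theta)$ and the inflow rate by $f_e^+(\theta)$, where $f_e^+ : \mathbb{R}_+ \to \mathbb{R}_+$. At this moment, $z_e(\theta)$ changes as follows:

$$z_e(\theta)\ \begin{cases} \text{increases at rate } f_e^+(\theta) - v_e, & \text{if } f_e^+(\theta) \ge v_e, \\ \text{decreases at rate } v_e - f_e^+(\theta), & \text{if } f_e^+(\theta) \le v_e,\ z_e(\theta) > 0, \\ \text{stays constant}, & \text{if } f_e^+(\theta) \le v_e,\ z_e(\theta) = 0. \end{cases}$$

So a particle that enters $e$ at time $\theta$ leaves $e$ at time $\theta + \frac{z_e(\theta)}{v_e} + \tau_e$, where $\frac{z_e(\theta)}{v_e} + \tau_e$ is called the travel time of $e$ at $\theta$.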

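For each $e \in E$ at time $\theta + \tau_e$, denote the outflow rate by

$$f_e^-(\theta + \tau_e) = \begin{cases} v_e, & \text{if } z_e(\theta) > 0, \\ \min(v_e, f_e^+(\theta)), & \text{if } z_e(\theta) = 0. \end{cases}$$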
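The single-edge rules above can be sketched in a few lines. This is only an illustration under the assumption that the inflow rate is constant over the time step `dt`; the function names are hypothetical.

```python
def queue_after(z: float, inflow_rate: float, capacity: float, dt: float) -> float:
    """Queue mass after dt time units of constant inflow.

    The queue changes at rate (inflow_rate - capacity) and never drops below 0,
    which covers the growing, shrinking, and empty-and-idle cases at once.
    """
    return max(0.0, z + (inflow_rate - capacity) * dt)


def travel_time(z: float, capacity: float, delay: float) -> float:
    """Travel time z_e / v_e + tau_e of an edge whose current queue mass is z."""
    return z / capacity + delay


def outflow_rate(z: float, inflow_rate: float, capacity: float) -> float:
    """Outflow rate observed tau_e later: full capacity while a queue exists,
    otherwise the inflow rate capped by the capacity."""
    return capacity if z > 0 else min(capacity, inflow_rate)
```

For example, an edge with capacity 0.25 and delay 0 that receives inflow at rate 1 for 1/9 time units builds a queue whose waiting time is 1/3.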

Now, for each $v \in V - \{s, t\}$, no particle stays at $v$ at any time $\theta$. That is,

$$\sum_{e=(u,v)\in E} f_e^-(\theta) = \sum_{e=(v,w)\in E} f_e^+(\theta).$$

For the source $s$, particles are allowed to wait at $s$ and leave at any time $\theta$. We have

$$\mu_0(\theta) + \sum_{e=(u,s)\in E} f_e^-(\theta) \ge \sum_{e=(s,w)\in E} f_e^+(\theta),$$

since some particles may stay at $s$.

Remark 1. Previous papers, such as [10], instead require

$$\mu_0(\theta) + \sum_{e=(u,s)\in E} f_e^-(\theta) = \sum_{e=(s,w)\in E} f_e^+(\theta).$$

In this thesis, we use the inequality, because we do not count the particles waiting at $s$ as part of the outflow $\sum_{e=(s,w)\in E} f_e^+(\theta)$. This has little influence on the rest of the thesis.

In the fluid queuing model, the strategy of each particle is to wait for some time at $s$ and then choose a path along which to leave. For such a particle $p$, the travel time is defined as its waiting time at $s$ plus the sum of the travel times of all edges $e$ on its path, each evaluated at the time $p$ arrives at that edge.

2.2 Flow of Model

2.2.1 OPT flow

The OPT solution (OPT flow) of the fluid queuing model is the following strategy: given a network C and inflow $\mu_0$, the particles from $\mu_0$ are routed so that the last particle arrives at $t$ at the earliest possible time. We denote this earliest time by $T_{OPT}$, called the arriving time of all particles in OPT flow.

Remark 2. The OPT flow may not be unique. By default, we take the OPT flow without queuing that prefers paths with shorter delay time.

2.2.2 EQU flow

Viewing each particle as a player, the Nash equilibrium (EQU flow) of the fluid queuing model is the following strategy: given a network C and inflow $\mu_0$, each particle chooses a waiting time at $s$ and one of the paths from $s$ to $t$, and the cost of each particle is its arriving time. We denote the time at which the last particle arrives at $t$ by $T_{EQU}$, called the arriving time of all particles in EQU flow.

Now, let’s introduce two terms. The PoA, price of anarchy [14], is the ratio between the worst Nash Equilibrium and the optimal solution of social cost. The PoS, price of stability [15], is the ratio between the best Nash Equilibrium and the optimal solution of social cost. However, there is only one unique Nash equilibrium in this network game.

We therefore simply denote $\text{PoA} := \frac{T_{EQU}}{T_{OPT}}$ without ambiguity. Here, PoA is the ratio of the arriving time of all particles in EQU flow to that in OPT flow.

Remark 3. The waiting time at s is 0 for all particles in this case.

Definition 1. Consider the EQU flow of network C with inflow $\mu_0$. For the particles arriving at $s$ at time $\theta_0$, we denote the earliest time to arrive at each $v \in V - \{s\}$ by $l_v(\theta_0)$.

Definition 2. Consider the EQU flow of network C with inflow $\mu_0$. The mass of particles existing in C at time $\theta_0$ is

$$Q(C, \mu_0, \theta_0) := \int_{\{\theta \,\mid\, \theta \le \theta_0,\ l_t(\theta) > \theta_0\}} \mu_0(\theta)\,d\theta.$$

When it is clear from the context, we use $Q(\theta_0)$ to denote $Q(C, \mu_0, \theta_0)$.

Definition 3. Consider the EQU flow of network C with inflow $\mu_0$. At the moment $\theta_0$, we define the shortest travel time $L(C, \mu_0, \theta_0) := l_t(\theta_0) - \theta_0$. At the last leaving time $\hat\theta$, we write $\hat L := L(C, \mu_0, \hat\theta)$ for short. When it is clear from the context, we use $L(\theta_0)$ to denote $L(C, \mu_0, \theta_0)$.

2.2.3 Throughput flow

The throughput flow of the fluid queuing model is the following strategy: given a network C, inflow $\mu_0$, and a time period $\theta = 0 \sim t$, the particles from $\mu_0$ are routed so as to send the maximal mass of particles through C during $\theta = 0 \sim t$.

Definition 4. The maximal mass of particles is denoted by $M_{put}(C, \mu_0, t)$. Sometimes we instead use an inflow function with infinite inflow rate during $\theta = 0 \sim t$ to obtain an upper bound on $M_{put}(C, \mu_0, t)$, denoted by $M_{put}(C, \infty, t)$. When it is clear from the context, we use $M_{put}(t)$ to denote $M_{put}(C, \infty, t)$.

2.3 Networks of Model

Definition 5 (Parallel-link Network). Given a network C in the fluid queuing model, C is a parallel-link network if $e = (s, t)$ for all $e \in E$.

Definition 6 (Series-parallel Network). Series-parallel networks are defined by induction: starting from parallel-link networks, at each step one may apply a series-linking or parallel-linking operation with another well-defined series-parallel network. All possible results form the series-parallel networks.

Definition 7. Given a series-parallel network C, the maximal number of nodes on a path, taken over all paths, is denoted by D(C).
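Because series-parallel networks are built inductively, D(C) can be computed by recursion on the construction. The sketch below uses D(C) = 2 for a parallel-link network, D(C) = max(D(C1), D(C2)) for parallel-linking, and D(C) = D(C1) + D(C2) − 1 for series-linking (the two parts share one node); these are the same identities used in the proof of Theorem 4. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class ParallelLink:
    """Base case: every edge goes directly from s to t."""
    num_edges: int


@dataclass(frozen=True)
class Series:
    """C1 followed by C2; the sink of C1 is the source of C2."""
    first: "Network"
    second: "Network"


@dataclass(frozen=True)
class Parallel:
    """C1 and C2 sharing the same source and the same sink."""
    top: "Network"
    bottom: "Network"


Network = Union[ParallelLink, Series, Parallel]


def max_path_nodes(c: Network) -> int:
    """D(C): the maximal number of nodes on any s-to-t path of C."""
    if isinstance(c, ParallelLink):
        return 2  # only s and t
    if isinstance(c, Series):
        # The linking node is counted once, hence the -1.
        return max_path_nodes(c.first) + max_path_nodes(c.second) - 1
    return max(max_path_nodes(c.top), max_path_nodes(c.bottom))
```

For instance, two parallel-link stages linked in series give D(C) = 3, which is the shape of the (2 + 2)-parallel-link network.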

Definition 8 (Parallel-group-link Network). Given $\epsilon > 0$ and a parallel-link network C. If there exist intervals $[L_1, R_1], \dots, [L_N, R_N]$ with $\frac{R_i}{L_i} \le 1 + \epsilon$ and $\frac{L_{i+1}}{R_i} \ge \frac{1}{\epsilon}$ such that the delay time of each edge of C lies in one of the intervals, then C is called a parallel-group-link network.

This definition is related to the routing game with groups of similar links; see [11].
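A small check of this definition can be sketched as follows. It reads the two conditions as $R_i / L_i \le 1 + \epsilon$ (each group is narrow) and $L_{i+1} / R_i \ge 1/\epsilon$ (consecutive groups are far apart), assumes all interval endpoints are positive, and takes the candidate intervals as input rather than searching for them. The function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def is_parallel_group_link(delays: Sequence[float],
                           intervals: List[Tuple[float, float]],
                           eps: float) -> bool:
    """Check the given intervals against the group conditions for these delays."""
    if eps <= 0:
        raise ValueError("eps must be positive")
    ordered = sorted(intervals)
    # Each interval must be narrow: R_i / L_i <= 1 + eps.
    for left, right in ordered:
        if left <= 0 or right < left or right / left > 1 + eps:
            return False
    # Consecutive intervals must be well separated: L_{i+1} / R_i >= 1 / eps.
    for (_, right), (next_left, _) in zip(ordered, ordered[1:]):
        if next_left / right < 1 / eps:
            return False
    # Every delay time must fall inside one of the intervals.
    return all(any(left <= d <= right for left, right in ordered) for d in delays)
```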

Figure 2.1: The diagram of “(2 + 2)-parallel-link Network”. In the first stage $C_1$, the source is s and the sink is p; in the second stage $C_2$, the source is p and the sink is t.

Definition 9 ((2 + 2)-parallel-link Network). Given parallel-link networks $C_1$, $C_2$ and $C_1$'s inflow $\mu_0$, series-link $C_1$ to $C_2$ and denote the result by $C_1 + C_2$. Consider the OPT flow and EQU flow on this linked network $C_1 + C_2$, and denote the maximal number of edges used by the OPT flow or EQU flow in the $C_1$ part and the $C_2$ part by $m_1$ and $m_2$, respectively. If $m_1, m_2 \le 2$, we call $C_1 + C_2$ a (2 + 2)-parallel-link network.

Chapter 3

Main

In this chapter, we show several PoA bounds in the fluid queuing model. These include tight upper bounds for parallel-link networks and (2 + 2)-parallel-link networks, and a finite upper bound for series-parallel networks.

3.1 Parallel-link Networks

First of all, we need Lemma 1 to evaluate the upper bound of the Price of Anarchy for networks.

Lemma 1. Consider the fluid queuing model with network C and inflow $\mu_0$. If, for all times $\theta_0$,

$$M_{put}(L(\theta_0)) \le k \cdot Q(\theta_0),$$

then $T_{EQU} \le (k + 1) \cdot T_{OPT}$, where $M_{put}$ is the maximal mass of particles that can be sent through the network, $Q$ is the mass of particles existing in the network, and $T_{OPT}$, $T_{EQU}$ are the arriving times of all particles in OPT flow and EQU flow, respectively.

Proof. For the shortest travel time $\hat L = L(\hat\theta) := l_t(\hat\theta) - \hat\theta$, we have

$$T_{EQU} - T_{OPT} = l_t(\hat\theta) - T_{OPT} = \hat L + \hat\theta - T_{OPT} \overset{(*)}{\le} \hat L,$$

where the last inequality holds by comparing the leaving time and the arriving time of the last particle in OPT flow. On the other hand, for the total amount $M$ and the inequality assumed in the lemma, we have

$$M \ge Q(\hat\theta) \ge \frac{1}{k} M_{put}(L(\hat\theta)) \overset{(*)}{\ge} \frac{1}{k} \cdot k \cdot M_{put}\!\left(\tfrac{1}{k} L(\hat\theta)\right),$$

where the last inequality holds since we can repeat the infinite inflow during $\theta = 0 \sim \frac{1}{k} L(\hat\theta)$ for $k$ times as one option for the infinite inflow during $\theta = 0 \sim L(\hat\theta)$. Since OPT flow has to send mass $M$ of particles, it follows that

$$T_{OPT} \ge \frac{1}{k} L(\hat\theta) = \frac{1}{k} \hat L \ge \frac{1}{k}(T_{EQU} - T_{OPT}),$$

$$k + 1 \ge \frac{T_{EQU}}{T_{OPT}} = \text{PoA}.$$

Figure 3.1: The diagram of “PoA of 2 Example”. The left part is the parallel-link network with edges $\{e_1 = (a, 0),\ e_2 = (1, \tfrac{1}{3})\}$; the right part is the inflow function, which is a step function with range $\{1, a\}$.

Theorem 1. In the fluid queuing model, the Price of Anarchy bound of 2 for parallel-link networks is tight.

Proof. Given a parallel-link network C with inflow $\mu_0$, write $e_j = (v_j, \tau_j)$ for all $e_j \in E$. At any moment $\theta_0$, suppose the EQU flow uses $n$ edges. Then we have

$$L(\theta_0) \le \tau_{n+1}.$$

For each edge, the travel time minus the delay time of the edge is the queuing time. Hence, we can calculate the mass of particles queuing in C at $\theta_0$ as a lower bound on $Q(\theta_0)$:

$$Q(\theta_0) \ge \sum_{i=1}^{n} v_i\,[L(\theta_0) - \tau_i],$$

where $Q(\theta_0)$ is the mass of particles existing in C at $\theta_0$. On the other hand, for each edge, the given time of $M_{put}$ minus the delay time of the edge is the total time available to pass particles. We have

$$M_{put}(L(\theta_0)) = \sum_{i=1}^{n} v_i\,[L(\theta_0) - \tau_i].$$

This implies $Q(\theta_0) \ge M_{put}(L(\theta_0))$. It follows from Lemma 1 with $k = 1$ that $\text{PoA} \le 2$. Furthermore, we provide an example whose Price of Anarchy approaches 2. Given $1 > a > 0$, consider

$$E = \{e_1 = (a, 0),\ e_2 = (1, \tfrac{1}{3})\},$$

$$\mu_0(\theta) = \begin{cases} 1, & \theta = 0 \sim \tfrac{1}{3}\cdot\tfrac{a}{1-a}, \\ a, & \theta = \tfrac{1}{3}\cdot\tfrac{a}{1-a} \sim \tfrac{1}{3}\cdot\tfrac{a}{1-a} + \tfrac{1}{3}. \end{cases}$$

In this case, we have

$$T_{OPT} = \tfrac{1}{3}\cdot\tfrac{a}{1-a} + \tfrac{1}{3}, \qquad T_{EQU} = \tfrac{1}{3}\cdot\tfrac{a}{1-a} + \tfrac{1}{3} + \tfrac{1}{3},$$

$$\text{PoA} = \frac{T_{EQU}}{T_{OPT}} = \frac{\tfrac{1}{3}(2 - 2a + a)}{\tfrac{1}{3}(1 - a + a)} = 2 - a \to 2 \quad \text{as } a \to 0^+.$$
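The example can be checked numerically. The sketch below simply evaluates the closed-form expressions for $T_{OPT}$ and $T_{EQU}$ stated above, together with the parallel-link throughput formula from the proof; it does not simulate the flows, and the function names are hypothetical.

```python
from typing import List, Tuple

Edge = Tuple[float, float]  # (capacity v_j, delay time tau_j)


def max_throughput(edges: List[Edge], horizon: float) -> float:
    """M_put(C, inf, horizon) for a parallel-link network: each edge can pass
    particles at full capacity for (horizon - delay) time units, if positive."""
    return sum(v * max(0.0, horizon - tau) for v, tau in edges)


def example_times(a: float) -> Tuple[float, float]:
    """(T_OPT, T_EQU) for E = {(a, 0), (1, 1/3)} with the step inflow above."""
    if not 0.0 < a < 1.0:
        raise ValueError("the example requires 0 < a < 1")
    t1 = (1.0 / 3.0) * a / (1.0 - a)  # end of the phase with inflow rate 1
    t_opt = t1 + 1.0 / 3.0
    t_equ = t1 + 1.0 / 3.0 + 1.0 / 3.0
    return t_opt, t_equ


def price_of_anarchy(a: float) -> float:
    """Ratio T_EQU / T_OPT of the example, which equals 2 - a."""
    t_opt, t_equ = example_times(a)
    return t_equ / t_opt
```

At the end of the first phase, the queue on $e_1$ has mass $(1 - a)\cdot\tfrac{1}{3}\cdot\tfrac{a}{1-a} = \tfrac{a}{3}$, which coincides with `max_throughput` evaluated at horizon 1/3, so the condition of Lemma 1 holds with equality for $k = 1$ at that moment.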

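Theorem 2. In the fluid queuing model, if all edges lie in one aggregated network, the Price of Anarchy bound of $2 - \frac{1}{1+\epsilon} \approx 1 + \epsilon$ for parallel-group-link networks is tight, where $\epsilon$ is given by the network.

Proof. Given a parallel-group-link network C whose edges all lie in one aggregated network, with inflow $\mu_0$, write $e_j = (v_j, \tau_j)$ for all $e_j \in E$. At the last leaving time $\hat\theta$, for the shortest travel time $\hat L$, we have the condition

$$\frac{\hat L}{\tau_j} \le 1 + \epsilon, \quad \forall e_j \in E.$$

As in Lemma 1, we have

$$T_{EQU} - T_{OPT} = \hat L + \hat\theta - T_{OPT} \le \left(1 - \frac{1}{1+\epsilon}\right)\hat L + \tau_1 + \hat\theta - T_{OPT} \overset{(*)}{\le} \left(1 - \frac{1}{1+\epsilon}\right)\hat L,$$

where the last inequality holds by comparing the leaving time plus the minimal delay time with the arriving time of the last particle in OPT flow. Hence, we obtain

$$2 - \frac{1}{1+\epsilon} \ge \frac{T_{EQU}}{T_{OPT}} = \text{PoA}.$$

Finally, as in Theorem 1, an example for the Price of Anarchy of $2 - \frac{1}{1+\epsilon}$ is as follows:

$$E = \left\{e_1 = \left(a, \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right),\ e_2 = \left(1, \tfrac{1}{3}\right)\right\},$$

$$\mu_0(\theta) = \begin{cases} 1, & \theta = 0 \sim \left(\tfrac{1}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right)\tfrac{a}{1-a}, \\ a, & \theta = \left(\tfrac{1}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right)\tfrac{a}{1-a} \sim \left(\tfrac{1}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right)\tfrac{a}{1-a} + \tfrac{1}{3}. \end{cases}$$

In this case, we have

$$T_{OPT} = \left(\tfrac{1}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right)\tfrac{a}{1-a} + \tfrac{1}{3}, \qquad T_{EQU} = \left(\tfrac{1}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right)\tfrac{a}{1-a} + \left(\tfrac{1}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}\right) + \tfrac{1}{3},$$

$$\text{PoA} = \frac{T_{EQU}}{T_{OPT}} \to \frac{\tfrac{2}{3} - \tfrac{1}{3}\cdot\tfrac{1}{1+\epsilon}}{\tfrac{1}{3}} = 2 - \frac{1}{1+\epsilon} \approx 2 - (1 - \epsilon) = 1 + \epsilon,$$

where the limit is taken as $a \to 0$.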

3.2 Series-parallel Networks

Lemma 2. Consider the fluid queuing model with network C and inflow $\mu_0$, and let MinCut(C) be the total capacity of a minimal cut of C. Then the following inequality holds:

$$M \le T_{OPT} \cdot \text{MinCut}(C),$$

where $M$ is the total amount and $T_{OPT}$ is the arriving time of all particles in OPT flow.

Proof. In OPT flow, each particle travelling from $s$ to $t$ has to pass through one of the edges in the minimal cut of C, and the throughput of the minimal cut is at most MinCut(C) at any moment. As a result, the inequality holds.
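Lemma 2 gives the computable lower bound $T_{OPT} \ge M / \text{MinCut}(C)$. The sketch below computes MinCut(C) as a maximum-flow value on the edge capacities (by the max-flow min-cut theorem), using a plain Edmonds-Karp search. The graph encoding and function names are assumptions made for illustration; delay times play no role in this bound.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple


def min_cut_capacity(edges: List[Tuple[str, str, float]], s: str, t: str) -> float:
    """Capacity of a minimal s-t cut, computed as the max-flow value."""
    residual: Dict[str, Dict[str, float]] = {s: {}, t: {}}
    for u, v, cap in edges:
        residual.setdefault(u, {})
        residual.setdefault(v, {})
        residual[u][v] = residual[u].get(v, 0.0) + cap  # merge parallel edges
        residual[v].setdefault(u, 0.0)                  # reverse residual arc
    flow = 0.0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent: Dict[str, Optional[str]] = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 1e-12 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return flow
        # Find the bottleneck capacity along the path, then augment.
        bottleneck = float("inf")
        v = t
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = t
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


def opt_time_lower_bound(total_amount: float,
                         edges: List[Tuple[str, str, float]],
                         s: str, t: str) -> float:
    """Lower bound M / MinCut(C) on T_OPT from Lemma 2."""
    cut = min_cut_capacity(edges, s, t)
    if cut <= 0:
        raise ValueError("s and t are disconnected")
    return total_amount / cut
```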

Theorem 3. In the fluid queuing model, given a network C and inflow $\mu_0$, if each edge of C is used by the OPT flow at some moment, then the Price of Anarchy is at most $2|V| - 1$.

Proof. At the last leaving time $\hat\theta$, record $l_v(\hat\theta)$ for all $v \in V - \{s\}$ and sort them into $\{t_0, t_1, \dots, t_n\}$. We divide the earliest travel time $\hat L = l_t(\hat\theta) - \hat\theta$ into the intervals $(t_{i-1}, t_i)$. Note that $n + 1 \le |V|$.

We know that $(t_{i-1}, t_i)$ contains some edges with some queue mass. For each edge $e_j$ in this interval, suppose that $e_j$ starts at $t_{f(j)} \le t_{i-1}$, and write $e_j = (v_j, \tau_j)$ with queuing mass $z_j(t_{f(j)})$. Then

$$\frac{z_j(t_{f(j)})}{v_j} + \tau_j \ge t_i - t_{i-1}.$$

Now, for network C and interval $(t_{i-1}, t_i)$, consider MinCut(C) and the index set $S := \{k \mid e_k \in [t_{i-1}, t_i]\}$. We have

$$\frac{\sum_{k\in S} z_k(t_{f(k)})}{\sum_{k\in S} v_k} \le \frac{M}{\sum_{k\in S} v_k} \le \frac{M}{\text{MinCut}(C)} \overset{(*)}{\le} T_{OPT},$$

where the last inequality holds by Lemma 2. This implies $\min_{k\in S} \frac{z_k(t_{f(k)})}{v_k} \le T_{OPT}$. Now, for $j = \arg\min_{k} \frac{z_k(t_{f(k)})}{v_k}$, we have

$$t_i - t_{i-1} \le \frac{z_j(t_{f(j)})}{v_j} + \tau_j \overset{(*)}{\le} T_{OPT} + T_{OPT},$$

$$\hat L = L(C, \mu_0, \hat\theta) = l_t(\hat\theta) - \hat\theta = t_n - t_0 = \sum_{i=1}^{n} (t_i - t_{i-1}) \le 2n \cdot T_{OPT},$$

$$\text{PoA} \le 2n + 1 \le 2|V| - 1.$$

Note that $\tau_j \le T_{OPT}$ holds since the OPT flow uses all edges, including $e_j$.

Conjecture 1. In the fluid queuing model, removing each OPT-unused edge from a network only worsens the Price of Anarchy. Hence, the Price of Anarchy is at most $2|V| - 1$ for all networks.

Remark 4. In parallel-link networks, this conjecture is true. In general networks, this conjecture could be false; Pigou's example is a possible counterexample. The conjecture may be true in series-parallel networks.

Theorem 4. In the fluid queuing model, the Price of Anarchy is at most D(C) for series-parallel networks C, where D(C) denotes the maximal number of nodes on a path among all s-to-t paths.

Claim: Given a series-parallel network C, inflow $\mu_0$, and any moment $\theta_0$. For the throughput flow $M_{put}$, the shortest travel time $L$, and the mass of particles existing in the network $Q$, we have the inequality

$$M_{put}(C, \infty, L(C, \mu_0, \theta_0)) \le (D(C) - 1)\, Q(C, \mu_0, \theta_0),$$

which implies the Theorem by Lemma 1.

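Proof. (a) Base case

In a parallel-link network, D(C) = 2. The statement is true by Theorem 1.

(b) Parallel-linking

After a parallel-linking operation, the series-parallel network $C_1$ is linked in parallel to $C_2$. For C's inflow $\mu_0$, let the EQU flow's inflow into $C_1$ be $\mu_0^{up}$ and its inflow into $C_2$ be $\mu_0^{dn}$, so that $\mu_0 = \mu_0^{up} + \mu_0^{dn}$. For any moment $\theta_0$, we have

$$L(C_1, \mu_0^{up}, \theta_0) \ge L(C, \mu_0, \theta_0), \qquad L(C_2, \mu_0^{dn}, \theta_0) \ge L(C, \mu_0, \theta_0),$$

$$Q(C, \mu_0, \theta_0) = Q(C_1, \mu_0^{up}, \theta_0) + Q(C_2, \mu_0^{dn}, \theta_0).$$

Induction hypothesis of the Claim:

$$\text{given } C_1, \mu_0^{up}, \theta_0:\quad M_{put}(C_1, \infty, L(C_1, \mu_0^{up}, \theta_0)) \le (D(C_1) - 1)\, Q(C_1, \mu_0^{up}, \theta_0),$$

$$\text{given } C_2, \mu_0^{dn}, \theta_0:\quad M_{put}(C_2, \infty, L(C_2, \mu_0^{dn}, \theta_0)) \le (D(C_2) - 1)\, Q(C_2, \mu_0^{dn}, \theta_0).$$

This implies

$$\begin{aligned} M_{put}(C, \infty, L(C, \mu_0, \theta_0)) &\overset{(*)}{\le} \text{sum of LHS} \\ &\le \text{sum of RHS} \\ &\le \max(D(C_1) - 1, D(C_2) - 1)\,[Q(C_1, \mu_0^{up}, \theta_0) + Q(C_2, \mu_0^{dn}, \theta_0)] \\ &= (D(C) - 1)\, Q(C, \mu_0, \theta_0), \end{aligned}$$

where $D(C) = \max(D(C_1), D(C_2))$. The first inequality holds since the throughput of C is just the sum of the throughputs of $C_1$ and $C_2$ over the same time period.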

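(c) Series-linking

After a series-linking operation, the series-parallel network $C_1$ is linked in series to $C_2$. For $C_1$'s inflow $\mu_0$, let the EQU flow's outflow of $C_1$ be $\mu_1$, which is also $C_2$'s inflow. For any moment $\theta_0$, define $\theta_1 = l_p(\theta_0)$. We have

$$\begin{aligned} L(C, \mu_0, \theta_0) &= l_t(\theta_0) - \theta_0 \\ &= l_t(\theta_0) - \theta_1 + l_p(\theta_0) - \theta_0 \\ &= L(C_2, \mu_1, \theta_1) + L(C_1, \mu_0, \theta_0), \end{aligned}$$

$$Q(C, \mu_0, \theta_0) \overset{(*)}{\ge} Q(C_1, \mu_0, \theta_0), \qquad Q(C, \mu_0, \theta_0) \overset{(**)}{\ge} Q(C_2, \mu_1, \theta_1).$$

Inequality (*) holds by taking the mass of particles existing in $C_1$ at $\theta = \theta_0$ as a lower bound; inequality (**) holds by taking the mass of particles existing in $C_2$ at $\theta = \theta_1$ as a lower bound.

Induction hypothesis of the Claim:

$$\text{given } C_1, \mu_0, \theta_0:\quad M_{put}(C_1, \infty, L(C_1, \mu_0, \theta_0)) \le (D(C_1) - 1)\, Q(C_1, \mu_0, \theta_0),$$

$$\text{given } C_2, \mu_1, \theta_1:\quad M_{put}(C_2, \infty, L(C_2, \mu_1, \theta_1)) \le (D(C_2) - 1)\, Q(C_2, \mu_1, \theta_1).$$

This implies

$$\begin{aligned} M_{put}(C, \infty, L(C, \mu_0, \theta_0)) &\overset{(*)}{\le} \text{sum of LHS} \\ &\le \text{sum of RHS} \\ &\le [D(C_1) - 1 + D(C_2) - 1]\, Q(C, \mu_0, \theta_0) \\ &= (D(C) - 1)\, Q(C, \mu_0, \theta_0), \end{aligned}$$

where $D(C) = D(C_1) + D(C_2) - 1$. Moreover, the first inequality holds since, as an upper bound on $M_{put}$, we can consider $C_2$ sending infinitely many particles without delay time during $\theta = 0 \sim \theta_1$ and $C_1$ sending infinitely many particles without delay time during $\theta = \theta_1 \sim l_t(\theta_0)$.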

3.3 (2 + 2)-parallel-link Network

Remark 5. We remove all edges beyond $m_1$ and $m_2$, where $m_1$ and $m_2$ are the maximal numbers of edges used by the OPT flow or EQU flow in the $C_1$ and $C_2$ parts, respectively. This has no influence on our model. We use $e_j = (a_j, \sigma_j)$ in $C_1$ and $e_j = (v_j, \tau_j)$ in $C_2$.

Now, consider the flows on network $C_1$ with inflow $\mu_0$. Define $T_{EQU}|_{C_1}$ and $T_{OPT}|_{C_1}$ as the arriving times of all particles in EQU flow and OPT flow, respectively. We introduce a new model, named the “Restricted Inflow Model”, as follows:

Definition 10 (Restricted Inflow Model). In EQU flow, consider any moment $\theta_0$ such that $\mu_0(\theta_0) \ge a_1 + \dots + a_{m_1}$ and $l_t(\theta_0) = \sigma_{m_1}$. We imitate the OPT flow and let $\mu_0(\theta_0) - (a_1 + \dots + a_{m_1})$ mass of particles wait at $s$ until $\mu_0 \le a_1 + \dots + a_{m_1}$ and all previous particles have left.

In EQU flow after this operation, the arriving time of each particle is the same as before, since the order of any pair of particles is preserved and each edge passes the same mass of particles as before. Furthermore, the travel time of each particle is at most $\sigma_{m_1}$ in the Restricted Inflow Model. Finally, each particle in EQU flow departs no later than in OPT flow, since no particle ever waits at $s$ in EQU flow.

Definition 11 (Different Arriving Time). Given a parallel-link network $C_1$, inflow $\mu_0$, and each particle $p$ from $\mu_0$, define $d(C_1, \mu_0, p)$ as the difference between the arriving time of particle $p$ in EQU flow and in OPT flow. Define $d(C_1, \mu_0) = \sup_p d(C_1, \mu_0, p)$.

It follows from the definition that

$$d(C_1, \mu_0, p) \le \sigma_{m_1},$$

$$T_{EQU}|_{C_1} - T_{OPT}|_{C_1} \overset{(*)}{\le} d(C_1, \mu_0) \le \sigma_{m_1},$$

where inequality (*) holds by taking the last particle as a special case of particle $p$.

Definition 12 (EO flow). Given a (2 + 2)-parallel-link network $C_1 + C_2$ with inflow $\mu_0$. For the EQU flow in network $C_1$ with inflow $\mu_0$, the outflow of $C_1$ is the inflow of $C_2$, denoted by $\mu_1$. Now consider the OPT flow of network $C_2$ with inflow $\mu_1$; this is the EO flow in the (2 + 2)-parallel-link network $C_1 + C_2$. We define the arriving time of all particles in EO flow as the arriving time of all particles in the OPT flow of network $C_2$ with inflow $\mu_1$.

Let $n_2$ be the maximal number of edges used by the EO flow or EQU flow in the $C_2$ part. Applying the above bound to network $C_2$ with inflow $\mu_1$, we have

$$T_{EQU} - T_{EO} \le \tau_{n_2} \le \tau_{m_2},$$

where $m_2$ is the maximal number of edges used by the OPT flow or EQU flow in the $C_2$ part. Since we removed all edges beyond $m_2$, we have $n_2 \le m_2$.

Lemma 3. In the fluid queuing model, given a (2 + 2)-parallel-link network formed by series-linking $C_1$ to $C_2$ and inflow $\mu_0$, write $e_j = (a_j, \sigma_j)$ in $C_1$ and $e_j = (v_j, \tau_j)$ in $C_2$. For the OPT flow and EQU flow, we have

$$T_{EQU} - T_{OPT} \le \sigma_{m_1} + \tau_{m_2}.$$

Proof. From the above, for the EO flow in this (2 + 2)-parallel-link network, we have

$$T_{EQU} - T_{EO} \le \tau_{m_2}, \qquad T_{EQU}|_{C_1} - T_{OPT}|_{C_1} \le \sigma_{m_1}.$$

In EO flow, consider the following strategy: every particle $p$ arriving at the source of $C_2$ waits there for $\sigma_{m_1} - d(C_1, \mu_0, p)$ time and then leaves. In this case, EO flow has the same inflow into $C_2$ as OPT flow, but delayed by exactly $\sigma_{m_1}$ time. That is,

$$\text{OPT's inflow of } C_2:\ \mu_2(\theta), \qquad \text{EO's inflow of } C_2:\ \mu_2(\theta + \sigma_{m_1}),$$

where $\mu_2(\theta)$ is the inflow of $C_2$ in OPT flow. This relationship implies $T_{EO} - T_{OPT} = \sigma_{m_1}$. However, EO flow may choose a better solution, which implies

$$T_{EO} - T_{OPT} \le \sigma_{m_1},$$

$$T_{EQU} - T_{OPT} = T_{EQU} - T_{EO} + T_{EO} - T_{OPT} \le \sigma_{m_1} + \tau_{m_2}.$$

Remark 6. Inthe proof of Lemma 3, for EO flow, we let the particle wait at the intermedi

ate point p. Despite this is not allowed in our model, it only worsens TEQUand makes the PoA bigger. The upper bound of PoA is still true if we allowed waiting at the intermediate point p. So, we allow it.

Lemma 4. Given a (2 + 2)-parallel-link network $C_1 + C_2$, either $T_{OPT} = T_{EO}$ or $T_{OPT} \ge \sigma_2 + \tau_2$, where $T_{OPT}$ and $T_{EO}$ are the arriving times of all particles in the OPT flow and the EO flow respectively, $(a_1, \sigma_1), (a_2, \sigma_2)$ denote the edges of $C_1$, and $(v_1, \tau_1), (v_2, \tau_2)$ denote the edges of $C_2$.

Proof. Given a (2 + 2)-parallel-link network $C_1 + C_2$ and an inflow $\mu_0$, let $m_1$ and $m_2$ be the maximal numbers of edges used by the OPT flow or the EQU flow in the $C_1$ part and the $C_2$ part respectively.

If $m_1 = 1$, then $T_{OPT} = T_{EO}$. Otherwise $m_1 = 2$, and we distinguish two cases:

(a) $m_1 = 2$ and $a_1 \ge v_1$

During $\theta = 0 \sim \sigma_2$, the OPT flow and the EO flow have the same inflow and outflow at $C_2$. If $m_2 = 1$, then after $\theta = \sigma_2$ the OPT flow and the EO flow still have the same outflow at $C_2$, implying $T_{OPT} = T_{EO}$. Otherwise $m_2 = 2$, and both the OPT flow and the EO flow activate $(v_2, \tau_2)$ at $\theta = \sigma_1$.

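• If the EO flow shuts down $(v_2, \tau_2)$ first, then $T_{EO} \le T_{OPT}$, which together with the optimality of the OPT flow ($T_{OPT} \le T_{EO}$) implies $T_{EO} = T_{OPT}$.

• If the OPT flow shuts down $(v_2, \tau_2)$ first, but before $\theta = \sigma_2 + \tau_2$, then the OPT flow and the EO flow have the same outflow at $C_2$ afterwards and pass the same mass of particles. We let the EO flow shut down $(v_2, \tau_2)$ at the same time to obtain $T_{EO} = T_{OPT}$.

• If the OPT flow shuts down $(v_2, \tau_2)$ first, and after $\theta = \sigma_2 + \tau_2$, then $T_{OPT} \ge \sigma_2 + \tau_2$.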

(b) $m_1 = 2$ and $a_1 \le v_1$

If $m_2 = 1$, compare the EO flow and the OPT flow. Either the two flows have identical outflow throughout, in which case $T_{EO} = T_{OPT}$; or they must have the same outflow during $\theta = 0 \sim \sigma_2 + \tau_1$, which implies $T_{OPT} \ge \sigma_2 + \tau_1$. Either case suffices to prove PoA $\le 2$.
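The second alternative yields the bound by combining it with Lemma 3. The following is a short sketch of that step for $m_1 = 2$ and $m_2 = 1$; it uses only the symbols already defined above and is meant as a reading aid rather than as part of the original argument.

```latex
\begin{align*}
T_{EQU} - T_{OPT} &\le \sigma_{m_1} + \tau_{m_2} = \sigma_2 + \tau_1
  && \text{(Lemma 3 with } m_1 = 2,\ m_2 = 1\text{)} \\
&\le T_{OPT}
  && \text{(second alternative: } T_{OPT} \ge \sigma_2 + \tau_1\text{)} \\
\Longrightarrow \quad \frac{T_{EQU}}{T_{OPT}} &\le 2.
\end{align*}
```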

Otherwise $m_2 = 2$, which implies $a_1 + a_2 \ge v_2 \ge a_1$ since $v_2$ is used. If the OPT flow uses $(v_2, \tau_2)$, then $T_{OPT} \ge \sigma_2 + \tau_2$. Otherwise the EQU flow uses $(v_2, \tau_2)$, and we can compute:

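\[
M_{put}(C, \infty, \sigma_2 + \tau_2) =
\begin{cases}
0, & \theta = 0 + \tau_1 \sim \sigma_1 + \tau_1, \\
a_1(\sigma_2 - \sigma_1), & \theta = \sigma_1 + \tau_1 \sim \sigma_2 + \tau_1, \\
v_1(\tau_2 - \tau_1), & \theta = \sigma_2 + \tau_1 \sim \sigma_2 + \tau_2.
\end{cases}
\]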

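\[
M_{put}(C, \mu_0, \sigma_2 + \tau_2) =
\begin{cases}
0, & \theta = 0 + \tau_1 \sim \sigma_1 + \tau_1, \\
\int_0^{\sigma_2 - \sigma_1} \min(\mu_0(\theta), a_1)\, d\theta, & \theta = \sigma_1 + \tau_1 \sim \sigma_2 + \tau_1, \\
v_1(\tau_2 - \tau_1), & \theta = \sigma_2 + \tau_1 \sim \sigma_2 + \tau_2.
\end{cases}
\]

On the other hand, in the EQU flow, we bound from below the total mass of particles arriving at the source of $C_1 + C_2$ before $(v_2, \tau_2)$ is activated, which gives a lower bound on $M$:

\[
\begin{cases}
0, & \theta = 0 + \tau_1 \sim \sigma_1 + \tau_1, \\
\text{mass of particles passing through } C_1 + C_2 = \int_0^{\sigma_2 - \sigma_1} \min(\mu_0(\theta), a_1)\, d\theta, & \theta = \sigma_1 + \tau_1 \sim \sigma_2 + \tau_1, \\
\text{mass of particles queuing at } C_2 \text{ to activate } (v_2, \tau_2) = v_1(\tau_2 - \tau_1), & \theta = \sigma_2 + \tau_1 \sim \hat{\theta}.
\end{cases}
\]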

As a result, we have:

\[ M_{put}(C, \mu_0, \sigma_2 + \tau_2) \le M. \]

As in Lemma 1, this implies $T_{OPT} \ge \sigma_2 + \tau_2$.

Theorem 5. In the fluid queuing model, the Price of Anarchy of 2 for (2 + 2)-parallel-link networks is tight.

Proof. By Lemma 4, if $T_{OPT} = T_{EO}$, applying Lemma 1 to network $C_2$ with inflow $\mu_1$ gives:

\[ \frac{T_{EQU}}{T_{OPT}} = \frac{T_{EQU}}{T_{EO}} \le 2. \]

On the other hand, if $T_{OPT} \ge \sigma_2 + \tau_2$, then together with Lemma 3 we have:

\[ T_{OPT} \ge \sigma_2 + \tau_2 \ge T_{EQU} - T_{OPT}, \qquad \text{PoA} = \frac{T_{EQU}}{T_{OPT}} \le 2. \]

This completes the proof.
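The chain of inequalities in the second case can be written out step by step. This is a sketch under one assumption that is not stated in this passage: the edges of each part are indexed by nondecreasing delay, so that $\sigma_{m_1} \le \sigma_2$ and $\tau_{m_2} \le \tau_2$ whenever $m_1, m_2 \le 2$.

```latex
% Assumption (not stated in this passage): \sigma_1 \le \sigma_2 and \tau_1 \le \tau_2.
\begin{align*}
T_{EQU} - T_{OPT} &\le \sigma_{m_1} + \tau_{m_2}
  && \text{(Lemma 3)} \\
&\le \sigma_2 + \tau_2
  && \text{(assumed ordering of delays, } m_1, m_2 \le 2\text{)} \\
&\le T_{OPT}
  && \text{(second alternative of Lemma 4)} \\
\Longrightarrow \quad T_{EQU} &\le 2\, T_{OPT}.
\end{align*}
```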

Chapter 4

Extension

We present a self-defined tax scheme and use it to improve the bound on the PoA in the fluid queuing model. Although it does not appear to help for networks with constant inflow, it is helpful for parallel-link networks under some extreme inflows.

Definition 13 (Delay-time Tax Scheme). In the fluid queuing model, we increase the delay time of edges by imposing a tax in the given network. The tax is not counted as a cost to social welfare. That is, the tax scheme only changes the behavior of particles, while the computation of delay time and travel time still uses the original setting. This is called the delay-time tax scheme; see [11].

Remark 7. Under the Delay-time Tax Scheme, the OPT flow does not change after taxing, because the computation of delay time and travel time still uses the original setting. As a result, we only discuss the EQU flow and the change in its arriving time of all particles, $T_{EQU}$.
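One way to read Definition 13 and Remark 7 together is sketched below. The symbols $t_j$ (the tax on edge $e_j$) and $\tilde{\tau}_j$ (the delay perceived by particles) are hypothetical notation introduced only for this illustration; they do not appear in the original text.

```latex
% Hypothetical notation: t_j \ge 0 is the tax imposed on edge e_j = (v_j, \tau_j).
\begin{align*}
\text{delay perceived by particles when choosing an edge:} \quad
  & \tilde{\tau}_j = \tau_j + t_j, \\
\text{delay used to compute travel time and } T_{EQU}: \quad
  & \tau_j, \\
\text{OPT flow and } T_{OPT}: \quad
  & \text{unchanged by } t_j.
\end{align*}
```

Under this reading, the tax can shift which edges the EQU flow uses and when, while the measured cost of every flow is still evaluated with the original delays.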

Theorem 6. In the fluid queuing model with constant inflow, the Price of Anarchy is at least $\frac{4}{3}$ for parallel-link networks after taxing.

Proof. We give an example with Price of Anarchy $\frac{4}{3}$; see [10]. Consider the parallel-link network with two edges, the total amount $M$, and the constant inflow function