Using Audio Reverberation to Compensate Distance Compression in Virtual Reality

Yi-Hsuan Huang

∗National Yang Ming Chiao Tung University

Taiwan

fonyanweiyi@gmail.com

Roshan Venkatakrishnan

∗Clemson University USA

rvenkat@g.clemson.edu

Rohith Venkatakrishnan

Clemson University USA

rohithv@g.clemson.edu

Sabarish V Babu

Clemson University USA

sbabu@clemson.edu

Wen-Chieh Lin

National Yang Ming Chiao Tung University

Taiwan

wclin@cs.nctu.edu.tw

ABSTRACT

Virtual Reality (VR) technologies are increasingly being applied in contexts such as gaming, therapy, training, and education. Several of these applications require highly accurate spatial and depth perception. Contemporary VR experiences continue to be confronted by the issue of distance compression, wherein people systematically underestimate distances in the virtual world, leading to impoverished experiences. Consequently, a number of studies have sought to understand the factors that influence this phenomenon in order to address the challenges it poses. Inspired by previous work that has sought to compensate distance compression effects in VR, we examined the potential of manipulating an auditory stimulus’ reverberation time (RT) to alter how users perceive depth. To this end, we conducted a two-alternative forced choice study in which participants repeatedly made relative depth judgements between pairs of scenes featuring a virtual character placed at different distances with varying RTs.

Results revealed that RT influences how users perceive depth, with this influence being more pronounced in the near field. We found that users tend to associate longer RTs with farther distances and vice versa, indicating the potential to alter RT to compensate distance underestimation in VR. However, excessively increasing RT (especially in the near field) could come at the cost of sensory segregation, wherein users are unable to unify visual and auditory stimuli into a single percept of depth. Researchers must hence strive to find the optimal amount of RT to add to a stimulus to ensure seamless virtual experiences.

∗Both authors contributed equally to this research.


SAP ’21, September 16–17, 2021, Virtual Event, France

© 2021 Association for Computing Machinery.

ACM ISBN 978-1-4503-8663-0/21/09. . . $15.00 https://doi.org/10.1145/3474451.3476236

CCS CONCEPTS

• Human-centered computing → Virtual reality.

KEYWORDS

virtual reality, depth perception, distance compression, multisensory experiences, audio reverberation

ACM Reference Format:

Yi-Hsuan Huang, Roshan Venkatakrishnan, Rohith Venkatakrishnan, Sabarish V Babu, and Wen-Chieh Lin. 2021. Using Audio Reverberation to Compensate Distance Compression in Virtual Reality. In ACM Symposium on Applied Perception 2021 (SAP ’21), September 16–17, 2021, Virtual Event, France.

ACM, New York, NY, USA, 10 pages. https://doi.org/10.1145/3474451.3476236

1 INTRODUCTION

Virtual Reality (VR) technologies are increasingly being applied in areas such as gaming [Fisher et al. 2006], education [Chen et al. 2019], therapy [Heinrichs et al. 2008], and training [Wald and Taylor 2000]. Despite the technology’s growing popularity, the distorted perception of distance in these simulated environments continues to present a challenge in contemporary VR experiences [Breedveld et al. 1999; Ebrahimi et al. 2015; Richardson and Waller 2005]. This phenomenon is commonly referred to as distance compression, wherein users perceive distances in virtual environments to be shorter than they actually are; a large body of work has demonstrated such systematic distortions in users’ perceptions of depth [Loomis et al. 2003; Messing and Durgin 2005; Richardson and Waller 2005; Sahm et al. 2005; Willemsen et al. 2009a; Witmer and Kline 1998; Ziemer et al. 2009]. In their review of the distance perception literature, Renner et al. [Renner et al. 2013] report that perceived egocentric distances (distances from the observer to an object) in virtual environments are, on average, about 74% of the actual distances. This inaccuracy can potentially degrade training outcomes that rely on depth information, since research has shown that depth perception is closely linked to how users perceive other aspects such as size, height, scale, and speed [de Siqueira et al. 2021; Leyrer et al. 2011; Rolland et al. 1995]. It is therefore important for VR researchers and designers to explore new means of addressing this issue.

A number of studies have investigated factors that affect distance perception in immersive virtual environments (IVEs). These include physical aspects such as the field of view (FOV), the weight of the head-mounted display (HMD), and the quality of the graphics employed [Interrante et al. 2006]. Work by Willemsen and colleagues has shown that the ergonomics of HMDs influence the perception of depth, partly accounting for the underestimation/distance compression that occurs in IVEs [Willemsen et al. 2009b]. A large body of research attempts to address distance underestimation from a visual standpoint, leveraging techniques such as minification [Kuhl et al. 2006], parameter calibration [Ponto et al. 2013], and training [Kelly et al. 2018] to correct skewed perceptions of depth. Virtual self-avatars also play a role in distance perception, with recent research showing that users’ reach estimates become more accurate as the anthropomorphic fidelity of their self-avatars increases [Ebrahimi et al. 2018]. As such, existing solutions to the classic problem of distance compression in VR mostly approach it from a visual standpoint, potentially causing undesirable side effects such as geometric distortion. Moreover, given that contemporary VR simulations offer multisensory experiences that include visual, haptic, olfactory, and auditory information, there may be merit in investigating whether these other channels can help address the distance underestimation problem in VR.

While vision is usually more dominant than the other senses, the human brain integrates cues from multiple senses, each playing a role in the perception of the overall experience [Berry et al. 2014; Xi et al. 2018]. Of these other senses, auditory information may help compensate for distance compression while allowing for immersive and rich multisensory experiences. Along these lines, Finnegan et al. [Finnegan et al. 2016] found that incongruent auditory and visual information can compensate distance compression at longer distances when using anechoic sound.

Reverberation, which comprises all of the reflected sound waves, is an important cue for auditory depth perception. It is a key characteristic of a room’s acoustic environment and is created by sound reflecting off the walls, floor, ceiling, objects, etc. Research has shown that stronger reverberation is associated with longer perceived distances in acoustical environments [Etchemendy et al. 2017; Mershon and King 1975]. Additionally, Bailey and Fazenda [Bailey and Fazenda 2017] showed that the presence of reverberation increases users’ perceived distances for objects farther than five meters. While a small number of efforts have explored manipulating auditory reverberation to compensate distance compression effects in VR, little research has investigated manipulating this characteristic in fully immersive virtual experiences achieved using tracked head-mounted displays.

Reverberation time (RT) is a reverberation parameter that can be linearly adjusted to suit different scenes, offering a potential means to alter how users perceive distances in IVEs. With this in mind, we aimed to explore how manipulating RT affects distance perception in IVEs.

2 RELATED WORK

2.1 Distance Compression in IVEs

Distance perception in IVEs is distorted, and a number of studies have shown that users systematically underestimate depth in these virtual experiences [Richardson and Waller 2005; Willemsen et al. 2009a; Ziemer et al. 2009]. This is commonly referred to as distance compression, wherein observers perceive distances in virtual worlds to be shorter than they actually are [Interrante et al. 2006]. The phenomenon has also been observed in augmented [Grechkin et al. 2010], mixed [Lin et al. 2015], and immersive CAVE-based environments [Bruder et al. 2015]. In this section, we discuss factors that affect distance compression in VR and approaches that have been taken to compensate its effects.

2.1.1 Influential Factors of Distance Compression in VR: A number of studies have demonstrated the influence of the physical properties of the HMD on distance compression. On studying distance compression across different HMD devices, Buck et al. [Buck et al. 2018] found that heavier weight and a smaller FOV were generally associated with a larger degree of distance compression. However, they also found that HMDs with similar specifications could result in different degrees of distance compression. Similar results were obtained by Young et al. [Young et al. 2014] and Andrus et al. [Andrus et al. 2014], suggesting that the weight, FOV, and sense of wearing an HMD do influence distance underestimation, but only partially account for it [Willemsen et al. 2009b].

Researchers have also explored the role of graphics and certain visual effects on distance perception in virtual environments. Vaziri et al. [Vaziri et al. 2017] investigated the impact of graphical realism on distance perception and found that degrading visual realism did not significantly decrease the accuracy of distance perception. Cidota et al. [Cidota et al. 2016] conducted a user study involving spatial placement tasks, exploring whether visual blurring or fading affects depth perception. Their results suggest that such effects positively influence self-perceived performance more than measured performance. Similarly, Langbehn et al. [Langbehn et al. 2016] showed that visual blur has no noticeable influence on distance estimation.

2.1.2 Distance Compensation Approaches: Most approaches to compensating the effects of distance compression rely on visual interventions. Using minification, a technique that renders the scene with a field of view larger than the HMD’s and shrinks the resulting image to fit its display, Kuhl et al. [Kuhl et al. 2006] demonstrated that participants who experienced minified spaces underestimated distances less than those who did not. A similar effect was observed by Li et al. [Li et al. 2014], who showed that geometric minification causes people to overestimate distances and that this can be used to compensate compression effects experienced in IVEs. Similar to geometric minification, Peer and Ponto [Peer and Ponto 2016] introduced Perceptual Space Warping (PSW), wherein a vertex shader warps geometry, thereby affecting perceived distance. Their study demonstrated significant effects, but of smaller magnitude than hypothesized. Leyrer and colleagues proposed a simple visual calibration approach that manipulates individual eye height to alter perceived distances in the virtual world; reducing the eye height made users perceive objects as farther away [Leyrer et al. 2015]. Ponto et al. [Ponto et al. 2013] introduced a perceptual calibration procedure that determined viewing parameters producing wider and deeper virtual eye positions than standard estimates. Their results indicate that perceptually calibrated viewing parameters can significantly improve depth acuity, distance estimation, and the perception of shape.
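To make the minification idea concrete, the following Python sketch computes how much a pinhole-projected image shrinks when a scene rendered with a wider field of view is shown on an HMD whose display covers a narrower one. Because every point then subtends a smaller visual angle, objects tend to look farther away. This is only an illustration of the projection geometry under a symmetric-frustum assumption; the function names and the 90°/100° values are hypothetical and are not the parameters used by Kuhl et al.

```python
import math

def minification_scale(display_fov_deg: float, render_fov_deg: float) -> float:
    """Scale applied to image-plane coordinates when a frustum with FOV render_fov_deg
    is shown on a display whose true FOV is display_fov_deg (symmetric pinhole frustum
    assumed). Values below 1 mean the image is minified."""
    return math.tan(math.radians(display_fov_deg) / 2) / math.tan(math.radians(render_fov_deg) / 2)

def apparent_angle_deg(true_angle_deg: float, display_fov_deg: float, render_fov_deg: float) -> float:
    """Visual angle (from the view axis) at which a point at true_angle_deg appears on the display."""
    s = minification_scale(display_fov_deg, render_fov_deg)
    return math.degrees(math.atan(s * math.tan(math.radians(true_angle_deg))))

if __name__ == "__main__":
    # Hypothetical example: a 90-degree display showing a 100-degree rendering.
    print(round(minification_scale(90, 100), 3))
    print(round(apparent_angle_deg(10, 90, 100), 2))
```

When the rendered and display FOVs match, the scale is 1 and nothing is minified; widening only the rendered FOV is what shrinks the image.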

Researchers have also attempted to address the distance compression problem using methods less reliant on visual interventions. Along these lines, Ng et al. [Ng et al. 2017] showed that users’ depth judgements generally became more accurate after they received verbal corrective feedback in CAVE-based IVEs. Research has also shown that previewing a virtual space before experiencing it improves depth judgement accuracy [Kelly et al. 2018]. The same work showed that walking interactions help improve depth perception due to the integration of continuous visual feedback.

2.2 Using Audio to Compensate Distance Compression Effects

Our brains receive a variety of information from different senses in the real world, making it appropriate to consider multiple senses when addressing distance compression. While researchers acknowledge the existence of visual capture (the sense of vision dominating the other senses in creating a percept), other sensory information also plays a strong role in perceptual judgements. This can be modeled using maximum likelihood estimation, in which judgements are predicted to be the average of the individual sensory estimates, weighted in proportion to their relative reliabilities [Berry et al. 2014; Ernst and Banks 2002]. Bayesian causal inference models can also be used to estimate sensory weights from which perceptual judgements can be predicted [Xi et al. 2018]. Along these lines, research has shown that audio can strongly influence perception, with Shams and Kim [Shams and Kim 2010] demonstrating that this channel of sensory information can affect users’ perceived visual fidelity, motion smoothness, latency, etc. Turner and her colleagues showed that audio influences the visual perception of depth in a stereoscopic image and can be used to compensate depth compression effects [Turner et al. 2011]. Similarly, Cullen and colleagues showed that asynchronous audio-visual interactions and the type of sound and its effects all play a role in shaping users’ distance perception [Cullen et al. 2016]. Using audio to compensate distance compression in VR has been examined by Finnegan et al., who demonstrated that an incongruence scheme misaligning the locations of the audio and visual stimuli can alter how users perceive distance. They showed that shifting the audio stimulus farther than the visual object reduces distance underestimation in far-field (4.5–9.5 meters) VR experiences [Finnegan et al. 2016].
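The maximum likelihood model of cue combination described above fits in a few lines. In this sketch, each cue’s weight is its reliability (inverse variance) divided by the summed reliabilities, and the combined estimate is the weighted average [Ernst and Banks 2002]. The function name and the visual and auditory distance estimates and noise levels in the example are made-up numbers for illustration, not data from any study cited here, and the independent Gaussian noise is an assumption of the model, not a claim about real audio-visual depth perception.

```python
def mle_combine(estimates, sigmas):
    """Reliability-weighted (maximum likelihood) combination of independent Gaussian cues.

    estimates: per-cue estimates (e.g. distance in meters)
    sigmas:    per-cue standard deviations of the noise on each estimate
    Returns (combined estimate, combined standard deviation, weights).
    """
    if len(estimates) != len(sigmas) or not estimates:
        raise ValueError("need one sigma per estimate")
    reliabilities = [1.0 / (s * s) for s in sigmas]   # reliability = 1 / variance
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]       # weights sum to 1
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_sigma = (1.0 / total) ** 0.5              # never larger than the best single cue
    return combined, combined_sigma, weights

# Hypothetical cues: vision says 3.0 m (sigma 0.3 m), audio says 4.0 m (sigma 0.6 m).
est, sd, w = mle_combine([3.0, 4.0], [0.3, 0.6])
print(f"combined = {est:.2f} m, sd = {sd:.3f} m, weights = {[round(x, 2) for x in w]}")
```

Because the visual cue is four times as reliable in this example, the combined estimate moves only 20% of the way toward the auditory cue; a noisier visual channel would let audio pull the percept further.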

Such efforts are indicative of the potential to use audio to compensate distance underestimation effects. While most studies of cross-modal interactions between vision and audition focus on intensity as the acoustic parameter of interest, other auditory components, such as the reflections and dimensionality of the sound, are just as important for building a sense of space. Along these lines, research has shown that users involuntarily integrate spatial audio cues to improve spatial perception accuracy and depth perception acuity [Yong and Wang 2018; Zhou et al. 2004]. In connection with depth perception, one component of auditory information that may be worth investigating is reverberation, since it has been shown to directly influence perceived room size [Etchemendy et al. 2017].

2.2.1 Reverberation: Audio waves interact with the environment via reflections and diffractions. Sound waves repeatedly bounce off reflective surfaces and gradually lose energy, either before reaching the listener or until they decay to zero amplitude. When these reflections mix with each other, a distinctive sound field called reverberation is produced. Reverberation thus represents a collection of reflected waves and is a global descriptor of the acoustics of an environment [Kaplanis et al. 2014]. It has been shown to affect how users perceive room sizes and is identified as a significant attribute in spatial acoustics [Bronkhorst and Houtgast 1999; Etchemendy et al. 2017; Kaplanis et al. 2014]. Furthermore, the addition of reverberation tends to be associated with increased distance estimates [Etchemendy et al. 2017; Mershon and King 1975]. In a room impulse response model featuring a source and a receiver, early reflections are the first reflected waves to reach the listener’s ears, shortly after the direct sound, whereas late reverberation contains higher-order, diffuse reflections that decay exponentially over time [Coleman et al. 2017]. Compared to early reflections, late reverberation is associated with better depth judgements in VR [Bailey and Fazenda 2017].

Manipulating reverberation to compensate distance underestimation has also been pursued by Finnegan and colleagues [Finnegan 2017; Finnegan et al. 2016], who found no significant effect on the accuracy of depth perception. This has been attributed to their use of artificial reverberation produced using delay networks, filters, etc., which may be efficient and flexible but only good enough to provide gross reverberation [Valimaki et al. 2012].

As such, there is a lack of research exploring whether manipulating reverberation can make users perceive visual stimuli as farther than they really are in contemporary VR experiences. Furthermore, while researchers have demonstrated an influence of reverberation on perceived distances, there is no clear consensus in the research community on its effects, and it remains relatively unexplored in immersive virtual environments achieved using tracked HMDs. With the distance compression problem continuing to persist in IVEs, we attempt to contribute to this knowledge base by investigating whether and how reverberation can be used to address this classic problem.

3 SYSTEM DESCRIPTION

3.1 Audio Stimulus

The audio stimulus used in this study was an anechoic clip of a female voice from OpenAIRlib (http://www.openairlib.net/) [Murphy and Shelley 2010], provided by the AudioLab in the Department of Electronics at the University of York, UK. We added reverberation to the anechoic clip using the WAVES IR1 Convolution Reverb plug-in, hosted in Adobe Audition. Convolution reverb convolves a dry (anechoic) input signal with recorded room impulse responses, making the signal sound as if it were produced in the measured space. Based on the results of our pilot study, we settled on 0.4 seconds as a standard increment added to the optimal RT (0.7 seconds) of our experimental scene.
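The convolution step described above can be sketched as follows. A measured room impulse response (IR) is replaced here by a synthetic one: exponentially decaying Gaussian noise whose amplitude envelope falls by 60 dB at the chosen RT. This is a common textbook stand-in, not the IR1 plug-in’s algorithm or the impulse responses used in this study. The function names, sample rate, and random seed are illustrative assumptions, and the direct-form convolution is written in plain Python for clarity rather than speed (audio at 44.1 kHz would normally use FFT-based convolution).

```python
import math
import random

def decay_envelope(t: float, rt60: float) -> float:
    """Amplitude envelope that is 1 at t = 0 and 10**-3 (i.e. -60 dB) at t = rt60."""
    return math.exp(-3.0 * math.log(10.0) * t / rt60)

def synthetic_ir(rt60: float, fs: int, seed: int = 0) -> list:
    """Exponentially decaying noise IR truncated at rt60; rt60 <= 0 gives the anechoic (identity) IR."""
    if rt60 <= 0:
        return [1.0]
    rng = random.Random(seed)
    n = max(1, round(rt60 * fs))
    ir = [rng.gauss(0.0, 1.0) * decay_envelope(i / fs, rt60) for i in range(n)]
    ir[0] = 1.0  # unit direct-path sound at t = 0
    return ir

def convolve(x: list, h: list) -> list:
    """Direct-form linear convolution: y[n] = sum_k x[k] * h[n - k]."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y

# RT levels from the study: anechoic, then 0.7 s plus 0.4 s increments.
rt_levels = [0.0] + [round(0.7 + 0.4 * k, 1) for k in range(5)]
fs = 1000                      # deliberately low sample rate to keep the pure-Python loop fast
dry = [1.0, 0.5, -0.25]        # stand-in for the anechoic voice clip
wet = {rt: convolve(dry, synthetic_ir(rt, fs)) for rt in rt_levels}
print({rt: len(y) for rt, y in wet.items()})
```

Only the IR changes between conditions; the dry signal is identical, which mirrors the design goal of keeping the stimulus itself constant while varying RT.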

3.2 Virtual Scene and Equipment

To study the feasibility of using reverberation time to compensate perceived distances in the virtual world, we built a virtual room using the Unity game engine. We set the target range of distances to be compensated to 1–10 meters, since most commercial VR experiences tend to involve these distances.

Furthermore, this range is similar to the range studied in [Finnegan et al. 2016], allowing for easy comparison of results. The average distance compression in virtual environments is approximately 74% [Renner et al. 2013]; that is, objects placed 1.35–13.5 meters away in the virtual world tend to be perceived as being roughly 1–10 meters away.
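The arithmetic behind this range, and behind the visually compensated distances listed in Table 2, is a single division by the 74% compression ratio. The sketch below assumes, as the text does, that perceived distance is a fixed fraction of the rendered distance across the whole 1–10 m range; the constant and function names are hypothetical.

```python
COMPRESSION_RATIO = 0.74  # mean perceived / actual egocentric distance in VR [Renner et al. 2013]

def perceived_distance(rendered_m: float, ratio: float = COMPRESSION_RATIO) -> float:
    """Distance a user is expected to perceive for an object rendered rendered_m away."""
    return rendered_m * ratio

def visually_compensated_distance(target_m: float, ratio: float = COMPRESSION_RATIO) -> float:
    """Where to render an object so that it is perceived at target_m (assumes a constant ratio)."""
    return target_m / ratio

targets = [1, 3, 5, 7, 9]
print([round(visually_compensated_distance(d), 2) for d in targets])  # [1.35, 4.05, 6.76, 9.46, 12.16]
print(round(visually_compensated_distance(10), 1))                    # 13.5
```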

Based on these factors, we set the length of the virtual room to 15 m so that participants could stand 1 m in front of a wall while experiencing the simulation. To build a normally sized room with an appropriate RT, the dimensions of the virtual room were set to 15 m × 8.72 m × 3.5 m (Figure 1). With a room volume of 457.8 m³, the appropriate RT was set to 0.7 s according to the equation in [Beranek and Mellow 2012]. The virtual room featured a female character in a closed space. The Unity-chan model from the Unity Asset Store was used as the female speaking character (Figure 1). Her body motion combined two animations: the base layer of the animator was a speaking body motion provided by the Max Planck Institute for Biological Cybernetics in Tuebingen, Germany [Volkova et al. 2014a,b], and the mesh layer was “FREE Preview of Animations for RPG - Human” provided by Shine Bright on the Unity Asset Store. The object distance in our study is the distance between the participant and the female character.
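The room volume follows directly from the stated dimensions. The text cites [Beranek and Mellow 2012] for the choice of 0.7 s without giving the equation, so the sketch below does not reproduce it. As an illustrative assumption only, it uses the classic Sabine formula, RT60 ≈ 0.161 V / A (V in m³, A the total absorption in m² sabins), to show how much absorption such a room would need to reach the chosen RT. The function names are hypothetical, and nothing here is claimed to be the computation the authors performed.

```python
def room_volume(length_m: float, width_m: float, height_m: float) -> float:
    """Volume of a rectangular (shoebox) room in cubic meters."""
    return length_m * width_m * height_m

def sabine_absorption_for_rt(volume_m3: float, rt60_s: float) -> float:
    """Total absorption area A (m^2 sabins) giving rt60_s under Sabine's formula RT60 = 0.161 * V / A."""
    return 0.161 * volume_m3 / rt60_s

def sabine_rt60(volume_m3: float, absorption_m2: float) -> float:
    """Sabine reverberation time in seconds for a given volume and total absorption."""
    return 0.161 * volume_m3 / absorption_m2

v = room_volume(15.0, 8.72, 3.5)
a = sabine_absorption_for_rt(v, 0.7)
print(f"V = {v:.1f} m^3, A = {a:.1f} m^2 sabins")
```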

We used a PC running Windows 10 with a 4 GHz Intel i7 processor, 32 GB RAM, and a GTX 1070 graphics card. The virtual scene was presented on an Oculus Rift HM-A (CV1) with earphones, and participants responded using a pair of Oculus Touch controllers. The experiment was conducted in a meeting room at our university.

4 EXPERIMENT DESIGN

4.1 Research Questions and Hypotheses

Aiming to address the classic distance compression problem in VR, we sought to explore whether increasing the RT of auditory stimuli could help alter how users perceive distance in the virtual world. Specifically, we aimed to answer the following questions.

RQ1: Is there a relationship between the reverberation time of auditory stimuli and users’ egocentric distance estimates in IVEs? To show that increasing RT has the potential to compensate compressed distances in VR, we focus on egocentric distances between an observer and an object. In this study, we define “object distance” as the distance between the participant and a target character. We hypothesize that, for a constant object distance, longer RTs are associated with increased egocentric distance estimates.

RQ2: Does the reverberation time differentially influence distance perception for near and far field object distances?

Reverberation time is a measure of how long a sound persists within an environment after the source stops. It is usually defined as the time required for the sound pressure level to fall by 60 dB from its initial level, termed RT60 [Iazzetta et al. 2004]. The RT parameter we use follows this definition. As Finnegan et al. [Finnegan et al. 2016] suggested, the incongruence of audio and visual object distances can compensate distance compression only when the object is at a longer range. Thus, we hypothesize that the influence of RT on distance perception will be stronger when the object distance exceeds 5 meters. That is, we expect reverberation compensation to be more pronounced in the far distance range.
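The RT60 definition above can be made operational by measuring how quickly an impulse response’s energy decays. The sketch below uses Schroeder backward integration to obtain the energy decay curve, fits a straight line to the portion between -5 dB and -25 dB, and extrapolates that slope to -60 dB, the T20 approach described in ISO 3382. It is illustrative only: the function names and the synthetic, noiseless exponential test IR are assumptions, and it is not presented as how the RT values in this study were obtained.

```python
import math

def edc_db(ir):
    """Schroeder backward-integrated energy decay curve, normalised to 0 dB at t = 0."""
    edc = [0.0] * len(ir)
    tail = 0.0
    for i in range(len(ir) - 1, -1, -1):   # integrate energy from the end backwards
        tail += ir[i] * ir[i]
        edc[i] = tail
    total = edc[0]
    return [10.0 * math.log10(e / total) if e > 0 else float("-inf") for e in edc]

def estimate_rt60(ir, fs, start_db=-5.0, stop_db=-25.0):
    """Least-squares line through the EDC between start_db and stop_db, extrapolated to -60 dB."""
    pts = [(i / fs, d) for i, d in enumerate(edc_db(ir)) if stop_db <= d <= start_db]
    if len(pts) < 2:
        raise ValueError("impulse response does not cover the evaluation range")
    n = len(pts)
    mean_t = sum(t for t, _ in pts) / n
    mean_d = sum(d for _, d in pts) / n
    slope = (sum((t - mean_t) * (d - mean_d) for t, d in pts)
             / sum((t - mean_t) ** 2 for t, _ in pts))   # dB per second (negative)
    return -60.0 / slope

fs = 8000
rt_true = 0.7
# Noiseless exponential IR whose amplitude drops by 60 dB every rt_true seconds, 3 * RT60 long.
ir = [10 ** (-3.0 * i / (fs * rt_true)) for i in range(round(3 * fs * rt_true))]
print(round(estimate_rt60(ir, fs), 3))
```

Fitting over a restricted range and extrapolating avoids needing a full 60 dB of measurable decay, which real recordings rarely provide above the noise floor.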

RQ3: How do reverberation audio cues influence visual cues in distance perception? We intend to observe the interaction between the auditory and visual senses. We expect that as RT increases, the influence of audio on visual perception will increase. We also attempt to find the appropriate RT ranges to compensate the compressed distance, making the perceived distance as close to the Visually Compensated Distance as possible.

4.2 Experimental Factors and Stimuli

We manipulated two independent variables in this experiment: ob- ject distance (termed reverberantly compensated distance) and RT.

The levels of object distance were 1, 3, 5, 7, and 9 meters, and the RTs were anechoic (RT = 0), 0.7, 1.1, 1.5, 1.9, and 2.3 seconds (see Table 1). Participants completed a number of trials in which they were exposed to two scenes, one after another, each featuring the virtual character and the audio stimulus. They were asked to indicate (using the Oculus Touch controllers) in which of the two scenes they perceived the character to be nearer to them. The order in which the two scenes were presented was randomized, eliminating any extraneous influence of order effects on participants’ depth judgements. Each scene pair constituted one trial, forming a two-alternative forced choice response system through which participants indicated relative depth/distance judgements. We chose this response system because it has precedent for relative distance estimation in recent studies such as [Finnegan et al. 2016; Turner et al. 2011]. Moreover, other techniques such as blind walking and bean bag throwing were not feasible given the fatigue that could have resulted from the large number of trials involved in this study.

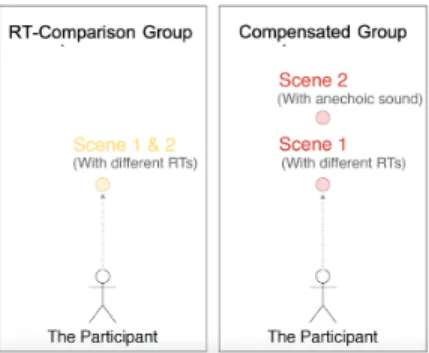

The experiment included two groups of comparisons. Group One (RT-Comparison Group) comprised trials comparing every possible pair of RTs at each object distance (Figure 2).

An additional catch trial featuring an extreme RT value (3.2 seconds) was added for each object distance. If a participant failed to respond correctly on these trials, their data would be excluded from the study. With five object distances, six RTs, and these catch trials, Group One featured a total of 80 trials ((C(6,2) + 1) × 5 = 80). RT was manipulated in these trials using a convolution reverberation framework, so that altering the RT associated with the auditory stimulus did not affect the duration or any other parameter of the original stimulus itself. Thus, the auditory stimulus remained constant, with the only difference being the reverberation time associated with the presented stimulus.

In Group Two (Compensated Group), each trial compared two scenes, one of which always involved anechoic sound (RT = 0 seconds) with the virtual character placed at a visually compensated distance calculated from the corresponding reverberantly compensated distance (see Table 2 and Figure 2). Thus, for each reverberantly compensated distance, six RTs were tested against a scene featuring the virtual character and sound stimulus presented from the corresponding visually compensated distance (with RT = 0). Trials of this sort were presented for each object distance, making a total of 30 trials (5 object distances × 6 RTs). The trials of both groups were interleaved by randomizing the order of all trials across groups, so that participants could encounter trials from either group at any point during the experiment. This was done to prevent participants from noticing that the virtual character was at different distances in Group Two trials. Overall, each participant completed a total of 110 trials, which took roughly 40–50 minutes.

Figure 1: First person point of view of the two comparison groups. In each trial, participants saw scenes 1 and 2 one after the other with the virtual character. In Group 1 (RT-Comparison Group), the RTs were varied for each object distance. In Group 2 (Compensated Group), participants compared reverberantly and visually compensated distances.
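The trial counts above (80 Group One trials and 30 Group Two trials, 110 in total) can be checked by generating the schedule programmatically. The sketch below is a hypothetical reconstruction, not the study’s software: in particular, the text does not say what the 3.2 s catch trial was compared against, so pairing it with anechoic sound at the same distance is an assumption, as are the tuple layout and the seeded shuffle used to randomize scene order and interleave groups.

```python
import itertools
import random

DISTANCES_M = [1, 3, 5, 7, 9]
RTS_S = [0.0] + [round(0.7 + 0.4 * k, 1) for k in range(5)]  # anechoic, 0.7, 1.1, 1.5, 1.9, 2.3 s
CATCH_RT_S = 3.2        # extreme RT used for data-exclusion (catch) trials
COMPRESSION = 0.74      # perceived / actual distance ratio used for visual compensation

def build_schedule(seed=None):
    """Return a shuffled list of (group, scene1, scene2); each scene is (distance_m, rt_s)."""
    trials = []
    for d in DISTANCES_M:
        # Group One: every unordered pair of the six RTs at a fixed distance, C(6,2) = 15
        for rt_a, rt_b in itertools.combinations(RTS_S, 2):
            trials.append(("RT-Comparison", (d, rt_a), (d, rt_b)))
        # One catch trial per distance (assumed pairing: anechoic vs. the extreme RT)
        trials.append(("Catch", (d, 0.0), (d, CATCH_RT_S)))
        # Group Two: each RT at d vs. anechoic sound at the visually compensated distance
        for rt in RTS_S:
            trials.append(("Compensated", (d, rt), (round(d / COMPRESSION, 2), 0.0)))
    rng = random.Random(seed)
    # Randomize which scene is shown first, then interleave all groups.
    trials = [(g, b, a) if rng.random() < 0.5 else (g, a, b) for g, a, b in trials]
    rng.shuffle(trials)
    return trials

schedule = build_schedule(seed=1)
counts = {}
for group, _, _ in schedule:
    counts[group] = counts.get(group, 0) + 1
print(len(schedule), counts)
```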

4.3 Participants

Twenty-three participants were recruited for this study. Fourteen of them were female, and ten reported having experience learning musical instruments. All participants had normal or corrected-to-normal (20/20) vision, and none reported any hearing impairments. Participants’ identities remained confidential, and they were free to opt out of the study at any time. No participants were excluded for failing the catch trials featuring extreme RT values.

4.4 Procedure

Upon arrival, participants were greeted and asked to read and sign an informed consent form. After consenting to participate, participants filled out a demographics questionnaire covering their backgrounds and experience with VR, music, and musical instruments. Participants were then invited to sit across from the experimenter and were briefed about the study. They were then allowed to familiarize themselves with the devices (Oculus HMD and Touch controllers). After their interpupillary distance (IPD) was measured, participants donned the HMD, adjusted based on this measurement. They were then equipped with the controllers, with which they could input their response for each trial. We ensured that their pinnae were covered by the headphones to prevent external sounds from interfering with the study.

The experimenter then allowed participants to familiarize themselves with the VR environment. Participants were then given the Oculus controllers and performed three practice trials. Before starting the formal trials, the experimenter reminded participants to look ahead, pay attention to the stimuli, and respond as accurately as possible.

The 110 trials were divided into three sessions of 40, 35, and 35 trials, with breaks in between to prevent participants from becoming fatigued by prolonged exposure to the virtual world. Each trial showed the character in two scenes, 1 and 2. After the first scene was presented, a black screen with fade-in and fade-out effects was shown for two seconds. This intermediate black screen simulated eye blinking and ensured that participants were aware of the start of the second scene. After the second scene, participants saw an instruction asking them to indicate in which of the two scenes (the first or the second) they felt the character to be nearer to them. To make this selection, they pressed the trigger on the Oculus Touch controller. The two scenes, numbered one and two, were juxtaposed to help participants respond accurately based on their perceptions. After the trigger was pressed, a 0.5-second buffer showing an empty room preceded the next trial. On average, a participant took approximately 12 seconds to complete a trial. Between sessions, participants could take off the HMD and rest if they wanted. Before each session commenced, the experimenter helped participants put on the HMD and adjust the straps for comfort. After completing all trials, participants engaged in a short interview with the experimenter about their experience in this study. Upon completion of the interview, participants were debriefed, compensated with a gift card for taking part in the study, and free to leave. The whole procedure took each participant up to 50 minutes.

Figure 2: Schematic representation of the two comparison groups of trials.

4.5 Analysis Methods

For the trials of the RT-Comparison Group, we calculated the correct rate, defining a trial as correct if the participant chose the scene with the shorter RT as nearer. Moreover, object distance and RT were each divided into the two levels listed in Table 1 for the analysis.

We binned object distance into Near (1, 3, 5 m) and Far (7, 9 m) ranges to study whether reverberation compensation performed differently for near- and far-field object distances. RT was divided into Long (RT > 1.4 s) and Short (RT < 1.4 s), as 1.4 s is twice the optimal RT for the room size. The Short RTs included the anechoic condition (RT = 0). This binning of RTs into short and long categories was performed to investigate whether the two reverberation levels had different influences on distance compensation.
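The scoring and binning rules above can be expressed compactly. In this hypothetical sketch, each RT-Comparison response is a tuple (object distance, RT of the first scene, RT of the second scene, whether the first scene was chosen as nearer); a response counts as correct when the shorter-RT scene was chosen. The text does not specify how a trial, which involves two RTs, is assigned to a single RT level, so the sketch aggregates only by distance level and exposes the RT binning rule as a helper. The function names and sample responses are invented.

```python
from collections import defaultdict

def distance_level(distance_m: float) -> str:
    return "Near" if distance_m <= 5 else "Far"   # Near: 1, 3, 5 m; Far: 7, 9 m

def rt_level(rt_s: float) -> str:
    return "Long" if rt_s > 1.4 else "Short"      # 1.4 s = twice the room's 0.7 s optimal RT

def is_correct(rt_first: float, rt_second: float, chose_first: bool) -> bool:
    """Correct when the scene with the shorter RT was judged nearer."""
    return chose_first == (rt_first < rt_second)

def correct_rate_by_distance_level(responses):
    """Proportion of correct responses in each distance level (Near / Far)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for distance_m, rt_first, rt_second, chose_first in responses:
        level = distance_level(distance_m)
        totals[level] += 1
        hits[level] += is_correct(rt_first, rt_second, chose_first)
    return {level: hits[level] / totals[level] for level in totals}

# Invented responses for illustration only.
responses = [(1, 0.0, 0.7, True), (3, 1.1, 0.7, True), (7, 0.7, 1.9, True), (9, 2.3, 1.5, False)]
print(correct_rate_by_distance_level(responses))
```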

Table 1: The two levels of the factors used in the analysis

| Factor | Level 1 | Level 2 |
|---|---|---|
| Object Distance | Near (1 m, 3 m, 5 m) | Far (7 m, 9 m) |
| Reverberation Time | Short (anechoic, 0.7 s, 1.1 s) | Long (1.5 s, 1.9 s, 2.3 s) |

Homogeneity of variance was verified for each factor using Levene’s test. A parametric analysis of variance (ANOVA) was performed, with appropriate post-hoc pairwise comparisons to follow up on main and interaction effects. For the trials of the Compensated Group, we calculated the ratio of choosing the “Reverberantly Compensated Distance” as nearer (i.e., judging the Visually Compensated Distance as farther). This ratio can be interpreted as the tendency to rely on visual stimuli, since Reverberantly Compensated Distances are always physically nearer (and carry the added RTs), whereas Visually Compensated Distances are positioned farther with anechoic sound.
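For the Compensated Group, the ratio defined above is a simple proportion that can be broken down per RT level. A minimal sketch, with a hypothetical function name and invented data, assuming each response is recorded as (RT of the reverberantly compensated scene, whether that scene was chosen as nearer):

```python
from collections import defaultdict

def visual_reliance_by_rt(responses):
    """Proportion of Compensated Group trials, per RT, in which the reverberantly
    compensated (physically nearer) scene was chosen as nearer, i.e. the visual cue won."""
    chosen, totals = defaultdict(int), defaultdict(int)
    for rt_s, chose_reverberant in responses:
        totals[rt_s] += 1
        chosen[rt_s] += chose_reverberant
    return {rt: chosen[rt] / totals[rt] for rt in sorted(totals)}

# Invented responses for illustration only.
responses = [(0.0, True), (0.0, True), (2.3, True), (2.3, False)]
print(visual_reliance_by_rt(responses))
```

Values near 1 indicate that vision dominated the judgement; lower values at long RTs would indicate that the added reverberation made the physically nearer scene seem farther.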

5 RESULTS

5.1 RT-Comparison Group

In the RT-Comparison Group, we focus on the influence of RT on distance perception at various object distances. In each comparison, the object distance is kept constant while the RT varies. The correct rate, which we also refer to as the rate of correctness, can be interpreted as the proportion of trials in which participants perceived the shorter RT as nearer at the same object distance.

5.1.1 Reverberation Compensation - RT Influences Distance Per- ception: The overall correct rate was 75.82% (SD = 14.69%). That

Table 2: Trial pairs at each object distance Reverberantly

Compensated Visually Compensated

Distance RT(s) Distance

1m 1.35 m (0s)

3m 0, 0.7, 1.1, 1.5, 4.05 m (0s)

5m 1.9, 2.3 6.76 m (0s)

7m 9.46 m (0s)

9m 12.16 m (0s)

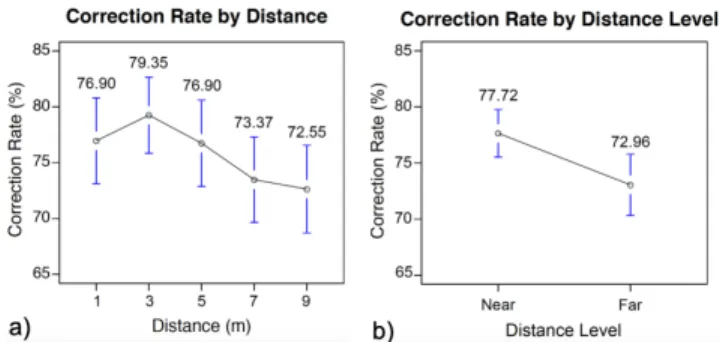

Figure 3: Correct rates at (a) different distances and (b) dis- tance levels.

Figure 4: Correct rates at (a) different RTs and (b) RT levels.

is, in 75.82% of trials, participants perceived shorter RTs as nearer even with the same object distance. There was no statistically sig- nificant difference between genders (F(1,21)=1.291, p>0.1). These results suggest that adjusting the reverberation time can influence participants’ perceived distances in VR.
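Every Visually Compensated Distance in Table 2 agrees, to two decimals, with the corresponding object distance divided by 0.74 (roughly 1.35 times the object distance). Reading 0.74 as an assumed ratio of perceived to actual distance is our interpretation for illustration; this section does not state how the values were derived. The sketch reproduces the table column under that assumption, using a hypothetical helper name.

```python
# Assumed compression ratio (perceived / actual distance). This is a
# hypothetical reading of Table 2, not a value stated in this section.
ASSUMED_COMPRESSION = 0.74


def visually_compensated_distance(object_distance_m, ratio=ASSUMED_COMPRESSION):
    """Placement distance such that ratio * placement equals the intended
    object distance, i.e. a target perceived at `object_distance_m`."""
    return object_distance_m / ratio


TABLE_2 = {1: 1.35, 3: 4.05, 5: 6.76, 7: 9.46, 9: 12.16}  # values from Table 2

for d, reported in TABLE_2.items():
    predicted = round(visually_compensated_distance(d), 2)
    print(d, predicted, reported, predicted == reported)  # every row prints True
```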

5.1.2 Distance and RT Levels Influence Reverberation Compensation: Figure 3(a) presents the correct rates for the five object distances. The correct rate of the near distance range was significantly (F(1,21)=6.977, p<0.01) greater than that of the far range (77.72% vs. 72.96%), as shown in Figure 3(b).

Figure 4(a) shows the correct rate associated with each RT. As seen in this figure, the influence of RT on distance perception decreases as RT increases, possibly because participants cannot differentiate between longer RTs. Post-hoc pairwise comparisons showed that the correct rate for anechoic reverberation (RT = 0 s) was significantly higher than that for RT = 1.9 s (p<0.01) and marginally higher than that for RT = 2.3 s (p<0.1), suggesting that the two longest RTs had a weaker influence on distance perception. Moreover, the average correct rate for Short RTs (0-1.1 s) (79.40%) was significantly (F(1,21)=17.93, p<0.0001) greater than that for Long RTs (1.5-2.3 s) (72.23%), as shown in Figure 4(b). Overall, Short RTs had a stronger influence on distance perception.

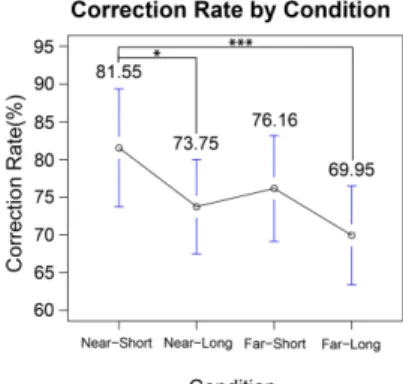

An ANOVA examining the interaction between object distance and RT found no significant interaction effect (F(1,19)=0.213, p>0.1). Post-hoc pairwise comparisons revealed that the correct rate of the Near-Short configuration was significantly higher than those of the Near-Long (p<0.01) and Far-Long (p<0.0001) configurations.

Figure 5: Correct rates by object distance × RT.

Figure 5 shows the correct rates of these four configurations (Distance level × RT level), with significant differences between correct rates marked with asterisks. Since the Near-Short configuration differed from the Near-Long and Far-Long configurations, the influence of RT on distance perception appears to be more pronounced in the near field.

5.2 Compensated Group - Interaction of Visual and Audio Cues on Reverberation Compensation

In the Compensated Group, we investigate the interaction of visual and audio cues on distance perception in an attempt to compensate for distance underestimation. To this end, we compare the Reverberantly Compensated Distance, presented with varying RTs, against the corresponding Visually Compensated Distance, presented with RT = 0 s.

A ratio was calculated as the proportion of trials (out of the total number of trials across the twenty-three participants and five distances) in which participants chose the Reverberantly Compensated Distance as nearer than the Visually Compensated Distance. The former was always physically nearer than the latter, but its RT was varied. If participants perceived the Reverberantly Compensated Distance as nearer, this implied that they relied more on visual cues. Hence, the ratio can be interpreted as “the ratio of the tendency to rely on visual stimuli” in making depth judgements. Figure 6 shows this ratio for each object distance. Figure 7 shows that the total ratio decreases as RT increases up to 1.5 s, after which it increases again. This implies that, in making depth judgements, participants increasingly relied on auditory information as RT increased up to 1.5 s, after which they shifted their reliance back to visual information.
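The per-RT ratio plotted in Figure 7 can be computed with a simple tally. This sketch assumes a hypothetical trial format of `(rt_s, chose_reverberant_nearer)` pairs, and the demo data are made up for illustration.

```python
from collections import defaultdict


def visual_reliance_ratio(trials):
    """Per-RT proportion of Compensated Group trials in which the
    Reverberantly Compensated Distance was chosen as nearer.

    trials: iterable of (rt_s, chose_reverberant_nearer) pairs
    (hypothetical format). Values above 0.5 indicate a tendency to follow
    the visual cue; values below 0.5 indicate that the RT outweighed the
    visual offset.
    """
    counts = defaultdict(lambda: [0, 0])  # rt -> [chosen as nearer, total]
    for rt_s, chose_reverberant in trials:
        counts[rt_s][0] += int(chose_reverberant)
        counts[rt_s][1] += 1
    return {rt: nearer / total for rt, (nearer, total) in sorted(counts.items())}


# Made-up trials for two RT levels.
demo = [(0.0, True), (0.0, True), (0.0, False), (0.0, True),
        (1.5, True), (1.5, False), (1.5, False), (1.5, False)]
print(visual_reliance_ratio(demo))  # {0.0: 0.75, 1.5: 0.25}
```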

6 DISCUSSION

The statistical analysis conducted on the Group One trials (RT-Comparison Group) revealed that participants associated shorter RTs with nearer distances even when the object's distance was the same. Controlling for object distance, in roughly 76% of the trials, participants perceived the virtual character to be nearer when the audio stimulus had a shorter reverberation time. In other words, when a virtual character is at a certain distance from the user, applying a shorter reverberation time to the audio emanating from the character makes users perceive the character to be nearer than it appears.

Figure 6: Results of the Compensated Group by distance.

Figure 7: Results of the Compensated Group.

These results suggest the potential of using longer reverberation times (on auditory stimuli) to increase users' egocentric distance estimates, supporting our hypothesis developed for RQ1. This is in line with findings by Bronkhorst and Houtgast [Bronkhorst and Houtgast 1999], who showed that within the 1-3 m range, audio sources are perceived as farther when the RT is set to 0.5 s as opposed to 0.1 s (almost no reverberation). Our findings, however, deviate from those of Finnegan [Finnegan 2017], who found no effect of reverberation on distance perception. It is possible that the technique used to generate reverberation in that study was insufficient to elicit a significant effect. In contrast to the delay-network-based artificial reverberation method used in that study, we employed a convolution-based algorithm to generate reverberation, which could potentially explain the difference in findings [Valimaki et al. 2012]. Given these results, it may be useful to further investigate whether physical room models such as ray tracing or wave-based modeling allow for more accurate distance perception in IVEs.
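For readers unfamiliar with convolution reverberation, its core operation is convolving the dry source signal with a room impulse response (IR). The sketch below is not the authors' pipeline. It synthesizes a hypothetical IR as white noise under an exponential envelope that decays by 60 dB (a factor of 1000 in amplitude) over the target RT, following the usual RT60 definition. Practical systems typically convolve with measured or simulated IRs and use FFT-based convolution (for example `scipy.signal.fftconvolve`) for speed. The helper names are hypothetical.

```python
import random


def synthetic_ir(rt60_s, sample_rate=48000, seed=0):
    """Hypothetical noise-based impulse response with the given RT60.

    The amplitude envelope 10 ** (-3 t / RT60) is 60 dB down at t = RT60.
    """
    if rt60_s <= 0:
        return [1.0]  # anechoic: a unit impulse leaves the signal unchanged
    rng = random.Random(seed)
    n = int(round(rt60_s * sample_rate))
    ir = [1.0]  # direct sound at t = 0
    for i in range(1, n):
        t = i / sample_rate
        envelope = 10.0 ** (-3.0 * t / rt60_s)
        ir.append(rng.uniform(-1.0, 1.0) * envelope)
    return ir


def convolve(dry, ir):
    """Direct (O(N*M)) linear convolution of a dry signal with an IR."""
    out = [0.0] * (len(dry) + len(ir) - 1)
    for i, x in enumerate(dry):
        if x == 0.0:
            continue  # silent samples contribute nothing
        for j, h in enumerate(ir):
            out[i + j] += x * h
    return out


dry = [1.0] + [0.0] * 9  # a single click
wet = convolve(dry, synthetic_ir(0.7, sample_rate=1000))
print(len(wet))  # 709
```

Longer RTs produce longer, slower-decaying tails, which is the property manipulated across conditions in this study.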

Analyses conducted on the data collected in this study also shed light on the differential influence of reverberation time on depth perception for near and far field VR experiences. As seen in Figure 3(b), the rate of correctness was significantly higher at near field distances (77.72%) than at far field distances (72.96%). This trend can also be seen in Figure 3(a), where the rate of correctness generally drops as object distance increases. The lower rate of correctness observed for the virtual character placed at the 1 m mark may have occurred because the character was too close to the observer at this distance. Overall, participants associated shorter RTs with nearer distances more in near field than in far field virtual experiences. This suggests a stronger influence of RT on depth perception in the near field, meaning that reverberation time affects how users perceive distance differently depending on the distance range in question.

In contrast to [Finnegan et al. 2016], where depth compression and compensation using reverberation were explored in an open room without obstacles, we conducted our study in a closed virtual room. Furthermore, unlike the authors of [Bailey and Fazenda 2017], who focused on comparing early and late reverberation in depth judgements, we manipulated RT in a way that affects the entire reverberation process. These design differences could help explain our results showing a differential influence of RT in near and far field contexts. More work is needed to thoroughly investigate this.

On analyzing the differential influence of shorter and longer RTs on distance perception, we found that the rate of correctness for Short RTs was 79.40% while that for Long RTs was 72.23%. This difference was statistically significant, indicating a more pronounced effect of shorter RTs on depth perception. While we observed no interaction effect between object distance and RT on depth judgements, post-hoc tests revealed that the significant difference between long and short RTs manifested in the near field and not in the far field. This can be observed in Figure 5, where the Near-Short and Near-Long conditions differ significantly from each other. Overall, these results suggest that while longer RTs can lead users to perceive egocentric distances as farther, it may not be viable to increase RT to a very large degree, especially in the near field, given the lower rates of correctness observed when RT is increased.

In the Group Two trials (Compensated Group), participants compared the “Reverberantly Compensated Distance” with its “Visually Compensated Distance” (Figure 7). A ratio of 0.5 can be interpreted as a participant relying on audio information 50% of the time in making depth judgements; at this ratio, participants cannot distinguish between the Reverberantly and Visually Compensated Distances. When the ratio drops below 0.5, the former is perceived as farther than the latter, implying that the RT largely compensates for the compressed distance. The ratio of choosing the “Reverberantly Compensated Distance” as nearer can also be interpreted as the ratio of relying on visual senses. As seen in Figures 6 and 7, most ratios are above 50%, suggesting that participants typically rely more on visual than on auditory information in making depth judgements. This has been discussed in [Boyle 2018; Shams and Kim 2010], which explain that in the presence of multiple sensory signals, our perceptual system places more trust in certain sensory cues, weighting them by their relative reliability. It may be that vision dominates audition, and that the perceptual weight of audio can be altered by manipulating RT, as seen in Figure 7, supporting our hypothesis developed for RQ3. However, we observed that increasing the RT to a great extent confused participants, resulting in sensory segregation. Unable to perceive the two sensory stimuli as one whole unit, users defaulted to their sense of vision, usually deemed the more dominant one. That said, more research is required to address this binding problem associated with using RT to compensate for distance underestimation in IVEs.
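Weighting cues by their relative reliability is often formalised as maximum-likelihood (inverse-variance) cue combination, as in Ernst and Banks [Ernst and Banks 2002] for visual-haptic integration. The sketch below applies that standard model to a visual and an auditory distance estimate purely for illustration. It was not fitted to this study's data, and the example distances and standard deviations are hypothetical.

```python
def combine_cues(estimates_and_sds):
    """Inverse-variance (maximum-likelihood) combination of independent cues.

    estimates_and_sds: list of (estimate, standard deviation) pairs.
    Returns (combined estimate, combined standard deviation, weights).
    """
    inv_vars = [1.0 / sd ** 2 for _, sd in estimates_and_sds]
    total = sum(inv_vars)
    weights = [iv / total for iv in inv_vars]  # more reliable cue, larger weight
    combined = sum(w * est for w, (est, _) in zip(weights, estimates_and_sds))
    return combined, (1.0 / total) ** 0.5, weights


# Hypothetical: vision suggests 5 m (SD 0.5 m), audio with a long RT suggests 7 m (SD 1 m).
est, sd, w = combine_cues([(5.0, 0.5), (7.0, 1.0)])
print(round(est, 3), round(sd, 3), [round(x, 3) for x in w])  # 5.4 0.447 [0.8, 0.2]
```

With these made-up numbers, the combined estimate (5.4 m) lies closer to the more reliable visual cue. Lowering the auditory standard deviation would shift it toward the auditory value. This model does not capture the segregation seen at very long RTs, where the cues stop being treated as a single source.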

Based on our findings, we discuss some implications for VR researchers, designers, and developers. We found that applying longer RTs to auditory stimuli can build a larger acoustic field and thus make users perceive egocentric distances as farther than they actually are. This partially relieves the need to train users or have them spend time previewing the virtual space to address the distance compression issue, as suggested by [Kelly et al. 2018; Li et al. 2014; Ng et al. 2017].

Additionally, manipulating RT to address distance compression can help avoid visual distortions while providing a means to build a more complete acoustic field. That said, it would be advisable to avoid increasing RT to an excessively large extent, especially in the near field, since this can lead to sensory segregation of the stimuli presented in the experience. Overall, there seems to be merit in pursuing investigations that explore the manipulation of RT to address the classic distance compression problem confronting contemporary immersive VR experiences.

7 CONCLUSION AND FUTURE WORK

In this work, we explored the use of audio reverberation to address the effects of the classic distance compression problem in VR. Since users systematically underestimate distances in IVEs, we examined whether this shortcoming can be compensated for by manipulating the reverberation time associated with an auditory stimulus in a multisensory immersive VR experience. To this end, we conducted a two action forced choice study requiring participants to repeatedly make relative depth judgements between a pair of scenes that featured a virtual character placed at certain distances with varying RTs. Our results indicate that RT influences how users perceive depth and that this influence is more pronounced in the near field (1 to 5 m) than in the far field (7 to 9 m). We also found that participants associated shorter RTs with closer distances (and vice versa) and that increasing the RT can cause users to increasingly rely on auditory information in making egocentric distance estimates. We were able to show that applying longer RTs can build a larger acoustic field and hence allow people to perceive egocentric distances as farther. However, it must be noted that overly increasing the RT (especially in the near field) can lead to sensory segregation, wherein the visual information used in perceiving depth does not match the auditory information, making users unable to perceive the sensory stimuli as one whole unit. Overall, there seems to be merit in pursuing further explorations that target the manipulation of RT towards compensating distance compression effects associated with IVEs.

In future work, we wish to explore how factors like the room size, volume, wall angles, and other properties of an indoor environment affect distance estimates in IVEs. Given that this initial study explored the possibility of altering RT to address distance compression, these factors were controlled but not investigated per se. We also wish to explore these effects in exterior environments with limited reverberation and in similar augmented and mixed reality settings. Lastly, we wish to probe the issue of sensory segregation associated with increasing RT, seeking to identify baseline thresholds above which this binding problem manifests in near and far field immersive virtual experiences.

ACKNOWLEDGMENTS

This contribution is based upon work partially supported by the U.S. National Science Foundation under grant number 2007435 and in part by the Ministry of Science and Technology of Taiwan under grant No. 109-2221-E-009-123-MY3.

REFERENCES

Scott M. Andrus, Graham Gaylor, and Bobby Bodenheimer. 2014. Distance Estimation in Virtual Environments Using Different HMDs. In Proceedings of the ACM Symposium on Applied Perception. ACM, 130–130.

Will Bailey and Bruno M Fazenda. 2017. The effect of reverberation and audio spatialization on egocentric distance estimation of objects in stereoscopic virtual reality. The Journal of the Acoustical Society of America 141, 5 (2017), 3510–3510.

Leo Leroy Beranek and Tim Mellow. 2012. Acoustics: sound fields and transducers. Academic Press, 473.

Jonathan S Berry, David AT Roberts, and Nicolas S Holliman. 2014. 3D sound and 3D image interactions: a review of audio-visual depth perception. Proc.SPIE 9014 (2014), 1–16.

Stephanie Claire Boyle. 2018. Investigating the neural mechanisms underlying audio-visual perception using electroencephalography (EEG). Ph.D. Dissertation. University of Glasgow.

P Breedveld, HG Stassen, DW Meijer, and LPS Stassen. 1999. Theoretical background and conceptual solution for depth perception and eye-hand coordination problems in laparoscopic surgery. Minimally invasive therapy & allied technologies 8, 4 (1999), 227–234.

Adelbert W Bronkhorst and Tammo Houtgast. 1999. Auditory distance perception in rooms. Nature 397, 6719 (1999), 517.

Gerd Bruder, Fernando Argelaguet Sanz, Anne-Hélène Olivier, and Anatole Lécuyer. 2015. Distance estimation in large immersive projection systems, revisited. In 2015 IEEE Virtual Reality (VR). IEEE, 27–32.

Lauren E. Buck, Mary K. Young, and Bobby Bodenheimer. 2018. A Comparison of Distance Estimation in HMD-Based Virtual Environments with Different HMD-Based Conditions. ACM Trans. Appl. Percept. 15, 3 (July 2018), 21:1–21:15.

Yi-Ting Chen, Chi-Hsuan Hsu, Chih-Han Chung, Yu-Shuen Wang, and Sabarish V Babu. 2019. iVRNote: Design, creation and evaluation of an interactive note-taking interface for study and reflection in VR learning environments. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE, 172–180.

Marina A Cidota, Rory MS Clifford, Stephan G Lukosch, and Mark Billinghurst. 2016. Using Visual Effects to Facilitate Depth Perception for Spatial Tasks in Virtual and Augmented Reality. In 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct). IEEE, 172–177.

Philip Coleman, Andreas Franck, P Jackson, R Hughes, Luca Remaggi, and Frank Melchior. 2017. Object-based reverberation for spatial audio. Journal of the Audio Engineering Society 65, 1/2 (2017), 66–77.

Brian Cullen, Karen Collins, Andrew Hogue, and Bill Kapralos. 2016. Sound and stereoscopic 3D: Examining the effects of sound on depth perception in stereoscopic 3D. In 2016 7th International Conference on Information, Intelligence, Systems & Applications (IISA). IEEE, 1–6.

Alexandre Gomes de Siqueira, Rohith Venkatakrishnan, Roshan Venkatakrishnan, Ayush Bhargava, Kathryn Lucaites, Hannah Solini, Moloud Nasiri, Andrew Robb, Christopher Pagano, Brygg Ullmer, and Sabarish V. Babu. 2021. Empirically Evaluating the Effects of Perceptual Information Channels on the Size Perception of Tangibles in Near-Field Virtual Reality. In 2021 IEEE Virtual Reality and 3D User Interfaces (VR). 1–10. https://doi.org/10.1109/VR50410.2021.00086

Elham Ebrahimi, Bliss M Altenhoff, Christopher C Pagano, and Sabarish V Babu. 2015. Carryover effects of calibration to visual and proprioceptive information on near field distance judgments in 3D user interaction. In 2015 IEEE Symposium on 3D User Interfaces (3DUI). IEEE, 97–104.

Elham Ebrahimi, Leah S Hartman, Andrew Robb, Christopher C Pagano, and Sabarish V Babu. 2018. Investigating the effects of anthropomorphic fidelity of self-avatars on near field depth perception in immersive virtual environments. In 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE, 1–8.

Marc O Ernst and Martin S Banks. 2002. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 6870 (2002), 429.

Pablo Etchemendy, Ezequiel Abregú, Esteban Calcagno, Manuel Eguía, Nilda Vechiatti, Federico Iasi, and Ramiro Vergara. 2017. Auditory environmental context affects visual distance perception. Scientific Reports 7 (2017).

Daniel Finnegan. 2017. Compensating for distance compression in virtual audiovisual environments. Ph.D. Dissertation. University of Bath.

Daniel J. Finnegan, Eamonn O’Neill, and Michael J. Proulx. 2016. Compensating for Distance Compression in Audiovisual Virtual Environments Using Incongruence. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems. ACM, 200–212.

Donald L Fisher, AP Pollatsek, and Abhishek Pradhan. 2006. Can novice drivers be trained to scan for information that will reduce their likelihood of a crash? Injury Prevention 12, suppl 1 (2006), i25–i29.

Timofey Y. Grechkin, Tien Dat Nguyen, Jodie M. Plumert, James F. Cremer, and Joseph K. Kearney. 2010. How Does Presentation Method and Measurement Protocol Affect Distance Estimation in Real and Virtual Environments? ACM Trans. Appl. Percept. 7, 4 (July 2010), 26:1–26:18.

William LeRoy Heinrichs, Patricia Youngblood, Phillip M Harter, and Parvati Dev. 2008. Simulation for team training and assessment: case studies of online training with virtual worlds. World journal of surgery 32, 2 (2008), 161–170.

Fernando Iazzetta, Fabio Kon, Marcelo Gomes de Queiroz, Flávio Soares, Correa da Silva, and Marcio de Avelar Gomes. 2004. AcMus: Computational tools for measurement, analysis and simulation of room acoustics. In Proceedings of the European Acoustics Symposium.

Victoria Interrante, Brian Ries, and Lee Anderson. 2006. Distance perception in immersive virtual environments, revisited. In IEEE virtual reality conference (VR 2006). IEEE, 3–10.

Neofytos Kaplanis, Søren Bech, Søren Holdt Jensen, and Toon van Waterschoot. 2014. Perception of Reverberation in Small Rooms: A Literature Study. In Audio Engineering Society Conference: 55th International Conference: Spatial Audio.

Jonathan W. Kelly, Lucia A. Cherep, Brenna Klesel, Zachary D. Siegel, and Seth George. 2018. Comparison of Two Methods for Improving Distance Perception in Virtual Reality. ACM Trans. Appl. Percept. 15, 2 (March 2018), 11:1–11:11.

Scott A. Kuhl, William B. Thompson, and Sarah H. Creem-Regehr. 2006. Minification Influences Spatial Judgments in Virtual Environments. In Proceedings of the 3rd Symposium on Applied Perception in Graphics and Visualization. ACM, 15–19.

Eike Langbehn, Tino Raupp, Gerd Bruder, Frank Steinicke, Benjamin Bolte, and Markus Lappe. 2016. Visual Blur in Immersive Virtual Environments: Does Depth of Field or Motion Blur Affect Distance and Speed Estimation?. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology. ACM, 241–250.

Markus Leyrer, Sally A Linkenauger, Heinrich H Bülthoff, Uwe Kloos, and Betty Mohler. 2011. The influence of eye height and avatars on egocentric distance estimates in immersive virtual environments. In Proceedings of the ACM SIGGRAPH symposium on applied perception in graphics and visualization. 67–74.

Markus Leyrer, Sally A. Linkenauger, Heinrich H. Bülthoff, and Betty J. Mohler. 2015. Eye Height Manipulations: A Possible Solution to Reduce Underestimation of Egocentric Distances in Head-Mounted Displays. ACM Trans. Appl. Percept. 12, 1 (Feb. 2015), 1:1–1:23.

Bochao Li, Ruimin Zhang, and Scott Kuhl. 2014. Minification affects action-based distance judgments in oculus rift HMDs. In Proceedings of the ACM Symposium on Applied Perception. ACM, 91–94.

Chiuhsiang Joe Lin, Bereket Haile Woldegiorgis, Dino Caesaron, and Lai-Yu Cheng. 2015. Distance estimation with mixed real and virtual targets in stereoscopic displays. Displays 36 (2015), 41–48.

Jack M Loomis, Joshua M Knapp, et al. 2003. Visual perception of egocentric distance in real and virtual environments. Virtual and adaptive environments 11 (2003), 21–46.

Donald H Mershon and L Edward King. 1975. Intensity and reverberation as factors in the auditory perception of egocentric distance. Perception & Psychophysics 18, 6 (Nov. 1975), 409–415.

Ross Messing and Frank H Durgin. 2005. Distance perception and the visual horizon in head-mounted displays. ACM Transactions on Applied Perception (TAP) 2, 3 (2005), 234–250.

Damian T. Murphy and Simon Shelley. 2010. OpenAIR: An Interactive Auralization Web Resource and Database. In Audio Engineering Society Convention 129. Audio Engineering Society.

Adrian KT Ng, Leith KY Chan, and Henry YK Lau. 2017. Corrective feedback for depth perception in CAVE-like systems. In 2017 IEEE Virtual Reality (VR). IEEE, 293–294.

Alex Peer and Kevin Ponto. 2016. Perceptual space warping: Preliminary exploration. In 2016 IEEE Virtual Reality (VR). IEEE, 261–262.

Kevin Ponto, Michael Gleicher, Robert G Radwin, and Hyun Joon Shin. 2013. Perceptual calibration for immersive display environments. IEEE Transactions on Visualization and Computer Graphics 19, 4 (2013), 691–700.

Rebekka S. Renner, Boris M. Velichkovsky, and Jens R. Helmert. 2013. The Perception of Egocentric Distances in Virtual Environments - A Review. ACM Comput. Surv. 46, 2 (Dec. 2013), 23:1–23:40.

Adam R Richardson and David Waller. 2005. The effect of feedback training on distance estimation in virtual environments. Applied Cognitive Psychology: The Official Journal of the Society for Applied Research in Memory and Cognition 19, 8 (2005), 1089–1108.

Jannick P Rolland, William Gibson, and Dan Ariely. 1995. Towards quantifying depth and size perception in virtual environments. Presence: Teleoperators & Virtual Environments 4, 1 (1995), 24–49.

Cynthia S Sahm, Sarah H Creem-Regehr, William B Thompson, and Peter Willemsen. 2005. Throwing versus walking as indicators of distance perception in similar real and virtual environments. ACM Transactions on Applied Perception (TAP) 2, 1 (2005), 35–45.

Ladan Shams and Robyn Kim. 2010. Crossmodal influences on visual perception. Physics of life reviews 7, 3 (2010), 269–284.

Amy Turner, Jonathan Berry, and Nick Holliman. 2011. Can the perception of depth in stereoscopic images be influenced by 3D sound? Proc.SPIE 7863 (2011), 1–10.

Vesa Valimaki, Julian D Parker, Lauri Savioja, Julius O Smith, and Jonathan S Abel. 2012. Fifty years of artificial reverberation. IEEE Transactions on Audio, Speech, and Language Processing 20, 5 (2012), 1421–1448.

Koorosh Vaziri, Peng Liu, Sahar Aseeri, and Victoria Interrante. 2017. Impact of Visual and Experiential Realism on Distance Perception in VR Using a Custom Video See-through System. In Proceedings of the ACM Symposium on Applied Perception. ACM, 8:1–8:8.

Ekaterina Volkova, Stephan de la Rosa, Heinrich H. Bülthoff, and Betty Mohler. 2014a. The MPI Emotional Body Expressions Database for Narrative Scenarios. PLOS ONE 9, 12 (Dec. 2014), 1–28.

Ekaterina P Volkova, Betty J Mohler, Trevor J Dodds, Joachim Tesch, and Heinrich H Bülthoff. 2014b. Emotion categorization of body expressions in narrative scenarios. Frontiers in psychology 5 (2014), 623.

Jaye Wald and Steven Taylor. 2000. Efficacy of virtual reality exposure therapy to treat driving phobia: a case report. Journal of behavior therapy and experimental psychiatry 31, 3-4 (2000), 249–257.

Peter Willemsen, Mark B Colton, Sarah H Creem-Regehr, and William B Thompson. 2009a. The effects of head-mounted display mechanical properties and field of view on distance judgments in virtual environments. ACM Transactions on Applied Perception (TAP) 6, 2 (2009), 1–14.

Peter Willemsen, Mark B. Colton, Sarah H. Creem-Regehr, and William B. Thompson. 2009b. The Effects of Head-mounted Display Mechanical Properties and Field of View on Distance Judgments in Virtual Environments. ACM Trans. Appl. Percept. 6, 2 (March 2009), 8:1–8:14.

Bob G Witmer and Paul B Kline. 1998. Judging perceived and traversed distance in virtual environments. Presence 7, 2 (1998), 144–167.

Yang Xi, Ning Gao, Mengchao Zhang, Lin Liu, and Qi Li. 2018. The Bayesian Causal Inference in Multisensory Information Processing: A Narrative Review. In International Conference on Intelligent Information Hiding and Multimedia Signal Processing. Springer, 151–161.

Seraphina Yong and Hao-Chuan Wang. 2018. Using Spatialized Audio to Improve Human Spatial Knowledge Acquisition in Virtual Reality. In Proceedings of the 23rd International Conference on Intelligent User Interfaces Companion. ACM, 51:1–51:2.

Mary K. Young, Graham B. Gaylor, Scott M. Andrus, and Bobby Bodenheimer. 2014. A Comparison of Two Cost-differentiated Virtual Reality Systems for Perception and Action Tasks. In Proceedings of the ACM Symposium on Applied Perception. ACM, 83–90.

Zhiying Zhou, Adrian David Cheok, Xubo Yang, and Yan Qiu. 2004. An experimental study on the role of software synthesized 3D sound in augmented reality environments. Interacting with Computers 16, 5 (2004), 989–1016.

Christine J Ziemer, Jodie M Plumert, James F Cremer, and Joseph K Kearney. 2009. Estimating distance in real and virtual environments: Does order make a difference? Attention, Perception, & Psychophysics 71, 5 (2009), 1095–1106.