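National Science Council, Executive Yuan: Final Report of a Subsidized Research Project

A Study of Networked Learning and Assessment Systems for Information Technology for Secondary School Students

Subproject 2: A Feasibility Study of a Networked Creative Learning Environment (3/3)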

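Project type: □ Individual project   Integrated project

Project number: NSC - 89 - 2520 - S - 009 - 011

Project period: August 1, 2000 (ROC 89) to July 31, 2001 (ROC 90)

Principal investigator: Professor Ying-Dar Lin (林盈達)

Co-principal investigator:

This report includes the following required attachments:

□ One report on an overseas business trip or study visit

□ One report on a business trip or study visit to mainland China

□ One report on attendance at an international academic conference, with one copy of the paper presented

□ One overseas research report for an international cooperative research project

Executing institution: Department of Computer and Information Science, National Chiao Tung University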

September 15, 2001 (ROC 90)

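National Science Council, Executive Yuan: Research Project Final Report

A Study of Networked Learning and Assessment Systems for Information Technology for Secondary School Students, Subproject 2:

A Feasibility Study of a Networked Creative Learning Environment (3/3)

Project number: NSC 89-2520-S-009-011

Project period: August 1, 2000 (ROC 89) to July 31, 2001 (ROC 90)

Principal investigator: Professor Ying-Dar Lin (林盈達)

Department of Computer and Information Science, National Chiao Tung University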

I. Chinese Abstract

This paper proposes a novel networked computer-assisted testing system, DIYexamer (Do-It-Yourself Examer). Three features clearly distinguish it from existing systems: student-created test items, item-bank sharing, and an automatic assessment mechanism. DIYexamer accepts test items contributed by both teachers and students, and shares item-banks with DIYexamer installations used by other organizations. We adopt an algorithm that dynamically assesses the discriminability of the items in an item-bank and uses the result to remove unqualified items. This not only guarantees the quality of the items in the item-bank but also effectively increases the number of items it holds.

Keywords: computer-assisted testing system, test item evaluation, test item acquisition, discriminability, distance learning.

Abstract

This paper presents a novel network CAT system, DIYexamer (Do-It-Yourself Examer). It has three features that differentiate it from existing CAT systems: student DIY items, item-bank sharing, and automatic assessment of item discriminability. DIYexamer accepts test items contributed from teachers as well as students, and allows limited item sharing between item-banks possibly maintained by different organizations. An algorithm is applied dynamically to assess the discriminability of items in item-banks in order to filter out less qualified contributions, thereby assuring the quality of stored items while scaling up the size of item-banks.

Keywords: Computer Assisted Testing, Test Evaluation, Test Item Acquisition, Discriminability, Distance Learning

II. Background and Purpose

Computer-assisted Testing (CAT) or Computer-based Testing (CBT), the use of computers for testing purposes, has a history spanning more than twenty years. The documented advantages of computer-administered testing include reduced testing time, increased test security, instant scoring, and an individualized adaptive testing environment [1][2][3][4]. Three categories of CAT are currently employed: standalone packages, test centers, and networked systems.

Regardless of which CAT system is employed, a critical issue in developing CAT is the construction of a test item-bank. Traditionally, the item-bank is built by asking teachers and content experts to submit items. This traditional method has three major drawbacks:

1) Limitation of item amount: Teachers and content experts tend to have similar views on the test subject; that is, the subject matter they consider vital in a given field tends to be confined to the same topics. Therefore, even when more teachers and content experts are invited to contribute test items, the total number of distinct items remains low.

2) Passive learning attitude: Students are conventionally excluded from the computer-assisted testing system: teachers generate tests, the system presents test sheets, and students then complete the tests. That is, students play a passive role within the testing system and are not afforded the opportunity to conduct “meta-learning” or “meta-analysis.”

3) No guarantee on item quality: Permitting students to generate test items may be a possible solution to the aforementioned problems. However, this raises a new problem: assuring quality, that is, ensuring that the items are worth storing and using in further tests. Even when the whole item-bank is contributed by teachers and content experts, ways to dynamically assess and filter test items are needed.

1 The DIYexamer Solution

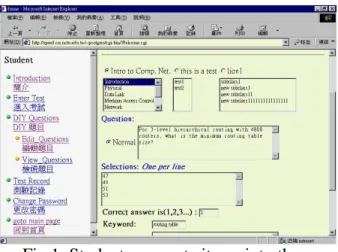

The DIYexamer [5] provides a web interface through which users remotely control and operate the system. Three kinds of users are supported: administrators, teachers, and students. The system allows students to contribute test items and provides an effective means of verifying the discriminability of these items. Its three main ideas are introduced below:

1) Item DIY by students: DIYexamer allows students to submit test items to the item-banks online, as shown in Fig 1, while teachers can query these student-generated items. In addition to rapidly increasing the total number of items in an item-bank, this feature encourages students to develop meta-learning, i.e. creative learning. In order to submit test items, students must thoroughly study the learning materials, develop higher-level overviews of the materials, and practice cognitive and creative thinking.

2) Assessment of item discriminability: DIYexamer provides an item-discriminability assessment method to ensure the quality of the stored items. In addition to ensuring the internal consistency of existing test items, this method continuously and dynamically screens new items added to the item-bank.

3) Item-bank sharing: DIYexamer, a scalable multi-server system, connects many item-banks stored on different servers, so more items can be accessed and shared via the Internet. The sharing is limited and controlled in the sense that a server issues a request to another server describing the criteria of the test items it wants.

Fig 1: Students generate items into the item-bank
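The request-based sharing described in item 3 might look roughly like the following Python sketch. It is only an illustration under stated assumptions: the report does not specify the request format, so the `Item` and `ItemRequest` types, their fields, and the `serve_request` function are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Item:
    text: str
    discriminability: float  # assumed to lie in 0..1

@dataclass
class ItemRequest:
    min_discriminability: float  # hypothetical selection criterion
    max_items: int               # hypothetical cap that keeps sharing limited

def serve_request(bank, request):
    """Return at most request.max_items items from bank that meet the criterion."""
    matches = [item for item in bank
               if item.discriminability >= request.min_discriminability]
    # Offer the most discriminating items first.
    matches.sort(key=lambda item: item.discriminability, reverse=True)
    return matches[:request.max_items]

# Hypothetical item-bank held by the serving server.
bank = [Item("Q1", 0.36), Item("Q2", 0.80), Item("Q3", 0.64)]
result = serve_request(bank, ItemRequest(min_discriminability=0.6, max_items=1))
print([item.text for item in result])  # ['Q2']
```

The serving side applies the criteria and the cap, so the requesting server never sees the whole item-bank, which is one way to read "limited and controlled".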

DIYexamer offers two additional advantages. First, because it generates test sheets on demand in real time, cheating is avoided. Second, it provides an item cross-analysis function with which the degree of difficulty of each test, as well as of the entire test base, can be accurately measured.

2 Discriminability Assessment of DIYexamer

When selecting sample students, only those whose scores differ substantially from the average score should be considered. Accordingly, students whose scores fall in the top 30% of the score range are defined as the “high-score group (H’)”, while those whose scores fall in the bottom 30% of the score range are defined as the “low-score group (L’)”.

To show how the sampling criteria and their effects differ between the traditional method and the DIYexamer method, Fig 2 depicts the score distribution in a test. In this example, the highest score is 92, the lowest score is 34, and the average score is 69. The “high rank score group” and the “low rank score group” are chosen according to each of the two methods. Take student X as an example: X's score is 66, only 3 points from the average score, so the information associated with X should have little, if any, referential value in computing item discriminability. However, the traditional method chooses X as a sample in the high rank group. This fallacy results from using the rank group, in terms of count, as the criterion for choosing samples. In DIYexamer, X is not chosen because the score group, in terms of range, is used rather than the rank group. Only students whose scores differ substantially from the average score are chosen as samples.
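The difference between the two sampling criteria can be sketched in Python. This is a minimal illustration, not DIYexamer's code: the function names, the 20 scores, and the use of 30% for the rank-based groups are assumptions. The scores were chosen only so that the minimum (34), maximum (92), average (69), and X's score (66) match the example above. "Top 30% in terms of range" is read here as scores within 30% of the max-min span below the maximum, and likewise above the minimum for the bottom group.

```python
def range_groups(scores, percent=30):
    """Score groups by range: within `percent` of the score span from each extreme."""
    lowest, highest = min(scores), max(scores)
    margin = (highest - lowest) * percent / 100
    high = [s for s in scores if s >= highest - margin]  # H'
    low = [s for s in scores if s <= lowest + margin]    # L'
    return high, low

def rank_groups(scores, percent=30):
    """Rank groups by count: the top and bottom `percent` of students."""
    ordered = sorted(scores, reverse=True)
    k = len(ordered) * percent // 100
    return ordered[:k], ordered[len(ordered) - k:]

# Hypothetical scores (not the data behind Fig 2): min 34, max 92, mean 69.
scores = [92, 92, 91, 90, 90, 66, 65, 65, 65, 65,
          64, 64, 64, 63, 63, 62, 62, 62, 61, 34]
x = 66  # student X's score

rank_high, rank_low = rank_groups(scores)
range_high, range_low = range_groups(scores)

print(sum(scores) / len(scores))          # 69.0
print(x in rank_high)                     # True: sixth of 20 by rank
print(x in range_high or x in range_low)  # False: outside both score groups
```

With closely clustered scores like these, the rank criterion samples X even though X is near the average, while the range criterion keeps only the students at the extremes.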

Fig 2: Comparison of samples taken in the traditional method and DIYexamer method (score axis from 20 to 100, marking MIN 34, X 66, AVG 69, and MAX 92, together with the groups L, H, L’, and H’)

Because different samples have different impacts on discriminability, a referential value with respect to an item is generated for each student selected as a sample. We first define the item discriminability as the average of all associated referential values:

$$\text{Discriminability} = \frac{\text{Sum of the referential values of sampled students}}{\text{Number of sampled students}}$$

Since the referential values depend on students’ scores, they are computed from the ratios of correct and incorrect answers of the sampled students, which are defined as

$$\text{Ratio of correct answer} = \frac{\text{Number of items answered correctly}}{\text{Number of items on the test}}$$

$$\text{Ratio of incorrect answer} = \frac{\text{Number of items answered incorrectly}}{\text{Number of items on the test}}$$

According to Table 1, the referential value of a student who correctly answered an item is that student's ratio of correct answer. Conversely, the referential value of a student who incorrectly answered an item is that student's ratio of incorrect answer. This policy follows from the fact that an item should gain discriminability if it is correctly answered by a competent student, and lose discriminability if it is correctly answered by a less competent student. In this way, a competent student contributes a large referential value to a correctly answered item and a small referential value to an incorrectly answered item, and vice versa for a less competent student.
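The definitions above can be put together in a short Python sketch. It is a minimal reading of the formulas, not the DIYexamer implementation: the function names and the two sampled students are hypothetical, each student's answers are assumed to be a list of booleans (True for a correct answer), and the sampled students are assumed to have been selected already.

```python
def ratio_correct(answers):
    """Number of items answered correctly / number of items on the test."""
    return answers.count(True) / len(answers)

def ratio_incorrect(answers):
    """Number of items answered incorrectly / number of items on the test."""
    return answers.count(False) / len(answers)

def referential_value(answers, item):
    """Ratio of correct answer if the item was answered correctly,
    otherwise ratio of incorrect answer."""
    if answers[item]:
        return ratio_correct(answers)
    return ratio_incorrect(answers)

def discriminability(sampled_answers, item):
    """Average referential value of an item over the sampled students."""
    values = [referential_value(answers, item) for answers in sampled_answers]
    return sum(values) / len(values)

# Hypothetical sampled students on a 5-item test.
competent = [True, True, True, True, False]          # ratio of correct answer 0.8
less_competent = [False, False, False, False, True]  # ratio of correct answer 0.2
sampled = [competent, less_competent]

print(f"{discriminability(sampled, 0):.2f}")  # 0.80
print(f"{discriminability(sampled, 4):.2f}")  # 0.20
```

Item 0 is answered correctly by the competent student and incorrectly by the less competent one, so both contribute 0.8. Item 4 shows the opposite pattern and both contribute 0.2.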

III. Results and Discussion

1 Evaluation of the Discriminability Assessment in DIYexamer

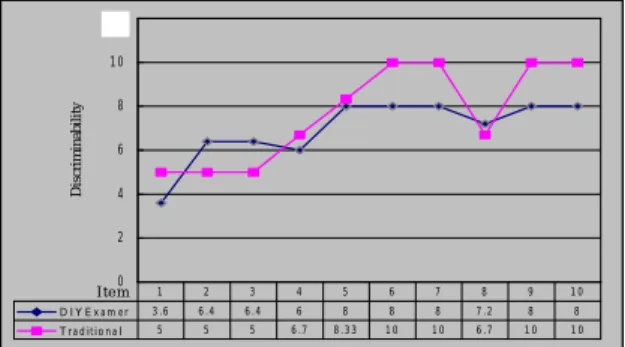

The fairness and performance of DIYexamer were evaluated. We conducted an experiment in which 10 students took a 10-item test online using DIYexamer. The discriminability of each item was computed using both the traditional method and the DIYexamer method. Because discriminability falls between -1 and 1 under the traditional method but between 0 and 1 under the DIYexamer method, both ranges were normalized to the range 0 to 10 in order to compare the two methods, as shown in Fig 3.
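The text does not spell out the normalization. Assuming it is a plain linear rescaling of each method's range onto 0 to 10, it can be sketched as follows; the function name `normalize` and its parameters are illustrative.

```python
def normalize(value, low, high, scale=10):
    """Linearly rescale value from the range [low, high] to [0, scale]."""
    return (value - low) / (high - low) * scale

# Traditional method: raw discriminability lies in [-1, 1].
print(normalize(0.0, -1, 1))  # 5.0
print(normalize(1.0, -1, 1))  # 10.0

# DIYexamer method: raw discriminability lies in [0, 1].
print(normalize(0.5, 0, 1))   # 5.0
```

Under this assumption, a traditional value of 5 in Fig 3 would correspond to a raw discriminability of 0, that is, an item answered equally well by both groups.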

| Item | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| DIYexamer | 3.6 | 6.4 | 6.4 | 6 | 8 | 8 | 8 | 7.2 | 8 | 8 |
| Traditional | 5 | 5 | 5 | 6.7 | 8.33 | 10 | 10 | 6.7 | 10 | 10 |

Fig 3: Comparison of item discriminability

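Table 1: Referential value used to compute item discriminability

| Student | Answer | Item discriminability | Referential value to compute discriminability |
|---|---|---|---|
| Competent (with high ratio of correct answer) | Correct | High | Ratio of correct answer |
| Competent (with high ratio of correct answer) | Incorrect | Low | Ratio of incorrect answer |
| Less competent (with low ratio of correct answer) | Correct | Low | Ratio of correct answer |
| Less competent (with low ratio of correct answer) | Incorrect | High | Ratio of incorrect answer |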

2 Conclusion

This paper has presented a novel architecture for a networked CAT system, DIYexamer. It supports item DIY by students, item-bank sharing, and item discriminability assessment.

For discriminability assessment, new calculation formulas were proposed. Compared with the traditional assessment scheme, the main difference is that samples are selected from the top and bottom 30% score groups, in terms of range of scores, rather than from the rank groups, in terms of count of students. Thus, item discriminability is reflected more accurately, particularly when the tested students have close scores.

Item-bank sharing and item DIY by students have increased both the number and the variety of questions in item-banks. Item DIY by students promotes creative learning among students, while automatic discriminability assessment assures better item quality than traditional CAT systems provide.

IV. Self-Evaluation of Project Results

A questionnaire was used to survey students' subjective attitudes toward DIYexamer, and the outcome revealed that most students were interested in item DIY. The technique proposed herein is useful in general teaching, not only to improve the quality of test items and the fairness of testing but also to save the time spent generating questions and computing scores. We recommend that DIYexamer be promoted in schools.

V. References

[1] C. V. Bunderson, D. K. Inouye, and J. B. Olsen, “The four generations of computerized educational measurement,” in Educational Measurement (3rd ed.), R. L. Linn, Ed. New York: American Council on Education/Macmillan, pp. 367-407, (1989).

[2] S. L. Wise and B. S. Plake, “Research on the effects of administering tests via computers,” Educational Measurement: Issues and Practice, vol. 8, no. 3, pp. 5-10, (1989).

[3] A. C. Bugbee, Examination on Demand: Findings in Ten Years of Testing by Computers 1982-1991. Edina, MN: TRO Learning, (1992).

[4] F. M. Lord, Applications of Item Response Theory to Practical Testing Problems. Hillsdale, NJ: Erlbaum, (1980).

[5] “DIYexamer system,” http://speed.cis.nctu.edu.tw/~diy