Interacting Object Tracking in Crowded Urban Areas

Chieh-Chih Wang, Tzu-Chien Lo and Shao-Wen Yang

Department of Computer Science and Information Engineering, National Taiwan University, Taipei, Taiwan

Email: bobwang@ntu.edu.tw, {bright,any}@robotics.csie.ntu.edu.tw

Abstract— Tracking in crowded urban areas is a daunting task. High crowdedness causes challenging data association problems, and the different motion patterns of a wide variety of moving objects make motion modeling difficult. Complementing traditional motion modeling techniques, this paper introduces a scene interaction model and a neighboring object interaction model that take into account, respectively, the long-term and short-term interactions between the tracked objects and their surroundings. With the use of the interaction models, anomalous activity recognition is accomplished easily. In addition, move-stop hypothesis tracking is applied to deal with move-stop-move maneuvers. All these approaches are seamlessly integrated under the variable-structure multiple-model estimation framework. The proposed approaches have been demonstrated using data from a laser scanner mounted on the PAL1 robot at a crowded intersection. Interacting pedestrians, bicycles, motorcycles, cars and trucks are successfully tracked in difficult situations with occlusion.

I. INTRODUCTION

Scene understanding is a key prerequisite for making a robot truly autonomous. Establishing the spatial and temporal relationships among the robot, stationary objects and moving objects serves as the basis for scene understanding. In [1], [2], we presented the theory and algorithms to solve the Simultaneous Localization, Mapping and Moving Object Tracking (SLAMMOT) problem. Experimental results from a ground vehicle traveling at high speeds in crowded urban areas demonstrated the feasibility of SLAMMOT: a wide variety of moving objects in crowded urban areas were detected and tracked successfully, and the stationary and moving object maps were built incrementally. However, to reduce the complexity of SLAMMOT enormously, we assumed that the robot and the moving objects move independently of each other. This independence assumption may be unrealistic in human-inhabited environments such as crowded urban areas, shopping malls and railway stations. These environments contain a large number of constraints that affect the motions of moving objects: targets interact both with other moving objects and with their surrounding environments. Interactions among moving and stationary objects are therefore of interest for higher-level scene understanding.

Without explicitly detecting and modeling interactions, a number of approaches address the filtering and data association issues of interacting object tracking. Veeraraghavan et al. [3] presented a multilevel tracking approach that uses Kalman filters to track pedestrians and vehicles with cameras at intersections. Zhao and Shibasaki [4] tracked multiple pedestrians using multiple laser scanners, detecting the pedestrians’ feet and tracking the rhythmic swing pattern of the feet.

Fig. 1. Left: The PAL1 robot. Right: the robot collecting data at a crowded intersection near National Taiwan University.

To properly address the interacting object tracking problem, detecting and modeling interactions are critical. Oliver et al. [5] described a coupled hidden Markov model framework for recognizing human interactions such as follow, approach+talk+continue, and change direction+approach+talk+continue in a pedestrian plaza. Panangadan et al. [6] used a simple distance-based method for detecting interactions among people crossing a courtyard. Bruce and Gordon [7] proposed a statistical learning method that models interactions between a target and the surrounding environment for better motion prediction. In [8], Khan et al. proposed a Markov chain Monte Carlo (MCMC)-based particle filter to track interacting ants, in which interactions are modeled through a Markov random field motion prior.

In this paper, both short-term and long-term interactions are defined and integrated into the tracking process. The long-term interactions are modeled with the use of the stationary and moving object map built by SLAMMOT. In addition to the interactions between the tracked object and the stationary objects [9], [7], the behavior patterns of previously observed dynamic objects are taken into account. Simple abnormal activity recognition can be accomplished with this approach. The short-term interactions are modeled with the use of neighboring object tracking. As moving objects in urban areas obey the same traffic laws, strong interactions between the tracked object and its neighboring objects should always exist to avoid accidents. The tracking process therefore fuses the tracked object’s own motion with the motion of its neighboring objects. These short-term interactions deal with occlusion effectively, provide better move-stop switching prediction, and achieve better tracking performance and accuracy than traditional methods. Instead of designing more complex motion models, our approach simply updates the target’s state estimate, in the update stage of filtering, with a virtual measurement generated by the interaction models.

As move-stop-move maneuvers often occur in crowded urban areas, a soft-switching model-set algorithm of the variable structure multiple-model estimator [10], the move-stop hypothesis tracking approach [2], is applied. The interaction models are seamlessly integrated into this theoretically sound multiple-model estimation framework. The feasibility of the proposed approaches is demonstrated using data collected from a SICK laser scanner mounted on the PAL1 robot at a crowded intersection, as shown in Figure 1. Camera images are used only for visualization in this work.

II. BACKGROUND

In this section, we review the theoretical foundations of tracking, describe the variable structure multiple-model estimator and introduce the mathematical notation used to describe the proposed approaches.

A. Bayesian Tracking

The tracking problem can be solved with Bayesian approaches such as the Kalman filter and the particle filter. Assuming that the true motion mode of a target is known, moving object tracking takes the simple form

$$ p(x_k \mid Z_k) \tag{1} $$

where $x_k$ is the true state of the moving object at time $k$ and $Z_k = \{z_1, z_2, \cdots, z_k\}$ is the set of perception measurements up to time $k$. Using Bayes’ theorem and the Markov assumption, Equation 1 can be expressed recursively as

$$ p(x_k \mid Z_k) \propto p(z_k \mid x_k) \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid Z_{k-1})\, dx_{k-1} \tag{2} $$

where $p(x_{k-1} \mid Z_{k-1})$ is the posterior probability at time $k-1$, $p(x_k \mid Z_k)$ is the posterior probability at time $k$, $p(x_k \mid x_{k-1})$ is the motion model and $p(z_k \mid x_k)$ is the measurement or perception model.
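To make the recursion in Equation 2 concrete, the sketch below evaluates it on a discretized one-dimensional state space, where the integral over $x_{k-1}$ becomes a matrix-vector product. It is a minimal illustration under our own assumptions (a grid state, a hypothetical one-cell-shift motion model and a hand-made likelihood), not the filter used in this work.

```python
import numpy as np


def bayes_filter_step(prior, transition, likelihood):
    """One recursion of Eq. (2) on a discretized 1D state space.

    prior:      p(x_{k-1} | Z_{k-1}), shape (n,)
    transition: transition[i, j] = p(x_k = i | x_{k-1} = j), shape (n, n)
    likelihood: p(z_k | x_k = i) for the current measurement, shape (n,)
    """
    predicted = transition @ prior       # the integral over x_{k-1} becomes a sum
    posterior = likelihood * predicted   # weight by the perception model
    return posterior / posterior.sum()   # normalization realizes the proportionality


def shift_transition(n, p_move=0.8):
    """Hypothetical motion model: advance one cell with probability p_move, else stay."""
    T = np.zeros((n, n))
    for j in range(n):
        T[j, j] += 1.0 - p_move
        T[min(j + 1, n - 1), j] += p_move  # the last cell absorbs forward motion
    return T
```

For example, starting from `np.array([1.0, 0, 0, 0, 0])` with `shift_transition(5)` and a likelihood that peaks at cell 1, the posterior concentrates on cell 1.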

B. Motion Modeling

The true motion mode is often unavailable in many applications, so online motion modeling is needed. Equation 2 can then be generalized and formalized in probabilistic form as

$$ p(x_k, s_k \mid Z_k) \propto p(z_k \mid x_k, s_k) \sum_{s_{k-1}} \int p(x_k, s_k \mid x_{k-1}, s_{k-1})\, p(x_{k-1}, s_{k-1} \mid Z_{k-1})\, dx_{k-1} \tag{3} $$

where $s_k$ is the true motion mode of the moving object at time $k$.
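Equation 3 adds a sum over the previous motion mode. A minimal grid-based sketch follows, under two assumptions of ours that the equation itself does not impose: the joint transition factorizes as $p(x_k \mid x_{k-1}, s_k)\,p(s_k \mid s_{k-1})$ (a Markov mode switch), and the measurement likelihood does not depend on the mode.

```python
import numpy as np


def switching_bayes_step(prior, mode_trans, motion, likelihood):
    """One recursion of Eq. (3) on a grid with a finite set of motion modes.

    prior:      p(x_{k-1}, s_{k-1} | Z_{k-1}), shape (m, n) for m modes and n cells
    mode_trans: mode_trans[a, b] = p(s_k = b | s_{k-1} = a), shape (m, m)
    motion:     motion[b] is the (n, n) matrix p(x_k | x_{k-1}, s_k = b)
    likelihood: p(z_k | x_k), shape (n,); assumed independent of the mode
    """
    m, n = prior.shape
    predicted = np.zeros((m, n))
    for b in range(m):
        # Sum over s_{k-1}: probability mass entering mode b from each previous cell.
        mass_into_b = mode_trans[:, b] @ prior
        # Integral (here a sum) over x_{k-1} under the motion model of mode b.
        predicted[b] = motion[b] @ mass_into_b
    posterior = predicted * likelihood[None, :]
    return posterior / posterior.sum()
```

With a "stop" mode whose motion matrix is the identity and a "move" mode that shifts one cell, `posterior.sum(axis=1)` gives the mode probabilities, which is the quantity a move-stop tracker reasons about.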

Motion modeling, or the estimation of the structural parameters of a system, is called system identification in the control literature and learning in the artificial intelligence literature.

From a theoretical point of view, motion modeling is as important as perception/measurement modeling in Bayesian approaches. From a practical point of view, without reasonably good motion models, the predictions may be unreasonable and cause serious problems in data association and inference. For online motion modeling, using more models is not necessarily the optimal solution, and it increases the computational complexity considerably. Li and Bar-Shalom [11] provided a theoretical proof that even the optimal use of motion models does not guarantee better tracking performance.

Use of a fixed set of models is not the only option for multiple model based tracking approaches. A variable structure (VS) can be used in multiple model approaches [12]. By selecting the most probable model subset, estimation performance can be improved. However, this requires more complicated computation procedures. Not only motion but also other types of information or constraints can be selected and added to the model set. In [13], terrain conditions are used as constraint models and are added to the model set to improve performance of ground target tracking via a variable structure interacting multiple model (VS-IMM) algorithm.

The details of variable structure multiple-model estimation and the related algorithms are available in [12]. Although our primary contribution is to take both stationary and moving object interactions into account in the update stage instead of the prediction stage, move-stop-move maneuvers are handled within the variable structure multiple-model estimation framework.

C. Move-Stop Hypothesis Tracking

The move-stop hypothesis tracker follows the variable structure multiple-model estimation framework and has two motion model sets, the move model set and the stop model set. The model sets of the move-stop hypothesis tracker can therefore be expressed as

$$ Q = \{q^{(\text{move})}, q^{(\text{stop})}\} \tag{4} $$

where $q^{(\text{move})}$ can consist of common motion models such as the constant-velocity (CV) model, the constant-acceleration (CA) model and the constant-turn (CT) model. Here the interacting multiple model (IMM) approach [14] is applied to integrate all motion models. $q^{(\text{stop})}$ is the stationary process model described in Chapter 4.4 of [2].
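The IMM approach [14] combines the moving models. As a reference point, the sketch below implements only the standard IMM interaction (mixing) step, which blends the mode-matched estimates before each filter's prediction. It assumes that all models share one state vector (in practice CV, CA and CT models with different state dimensions must first be augmented to a common state), and the function name is ours.

```python
import numpy as np


def imm_mix(mu, p_trans, means, covs):
    """Standard IMM interaction/mixing step.

    mu:      mode probabilities at time k-1, shape (r,)
    p_trans: p_trans[i, j] = P(model j at k | model i at k-1), shape (r, r)
    means:   list of r state estimates from the mode-matched filters
    covs:    list of r corresponding covariance matrices
    Returns the predicted mode probabilities and one mixed (mean, cov) per model.
    """
    mu = np.asarray(mu, dtype=float)
    r = len(mu)
    c = p_trans.T @ mu                          # c_j = sum_i p_ij * mu_i
    w = p_trans * mu[:, None] / c[None, :]      # mixing weights mu_{i|j}
    mixed_means, mixed_covs = [], []
    for j in range(r):
        x0 = sum(w[i, j] * means[i] for i in range(r))
        # The spread-of-means term keeps the mixed covariance consistent.
        P0 = sum(w[i, j] * (covs[i] + np.outer(means[i] - x0, means[i] - x0))
                 for i in range(r))
        mixed_means.append(x0)
        mixed_covs.append(P0)
    return c, mixed_means, mixed_covs
```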

In practice, the minimum detection velocity (MDV) can be obtained by taking the modeled uncertainty sources into account. For objects whose velocity estimates from the IMM algorithm with the moving models exceed the MDV, the objects are unlikely to be stationary, and the IMM algorithm with the moving models should perform well.

For objects whose velocity estimates are less than this minimum detection velocity, tracking should be done with great caution. Instead of adding the stationary process model to the model set, move-stop hypothesis tracking is applied where the move hypothesis and the stop hypothesis are inferred separately.

For move hypothesis inference, tracking is done via the IMM algorithm. For stop hypothesis inference, the stationary process model is used to verify, with measurements from a short time window, whether the object is currently a stationary process. The covariances from the move hypothesis and the stop hypothesis are then compared, and the hypothesis with the more certain estimate takes over the tracking process.
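The decision logic of the MDV gate and the move-stop comparison can be sketched as follows. Two details are our own assumptions rather than the paper's: the stop hypothesis is approximated by a constant-position estimate from a short window of measurements (the mean, with covariance $R/N$ for $N$ independent measurements with noise covariance $R$), and "more certain" is measured by the determinant of the position covariance. The MDV value itself would come from the modeled uncertainty sources.

```python
import numpy as np


def stop_hypothesis(window, R):
    """Assumed stationary model: constant position fitted to a short measurement window.

    window: (N, 2) array of recent position measurements
    R:      (2, 2) measurement noise covariance
    """
    window = np.asarray(window, dtype=float)
    return window.mean(axis=0), R / len(window)


def select_hypothesis(speed, mdv, move_cov, stop_cov):
    """Return 'move' or 'stop' according to the move-stop hypothesis logic."""
    if speed > mdv:
        # Above the minimum detection velocity the moving-model IMM is trusted.
        return "move"
    # Below the MDV both hypotheses are inferred separately; the one with the
    # smaller covariance determinant (the more certain estimate) takes over.
    return "move" if np.linalg.det(move_cov) < np.linalg.det(stop_cov) else "stop"
```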

Figure 2 demonstrates the performance of move-stop hypothesis tracking. It is clear that move-stop hypothesis tracking correctly tracked a move-stop-move maneuver of the tracked motorcycle. Without move-stop hypothesis tracking, the estimate diverged.

III. INTERACTION-AIDED TRACKING

In this section, we describe a scene interaction model to represent the long-term interactions and a neighboring object interaction model to represent the short-term interactions. Instead of using complex motion modeling techniques, the interaction models produce virtual measurements to aid tracking via the update stage of filtering.

A. Scene Interaction Model

The scene interaction model is designed to represent the long-term interactions between the target and its surroundings. As temporal and spatial information is embedded in the stationary and moving object map built by SLAMMOT, the scene interaction model uses this map to predict/constrain the possible future motion and pose of the target.

1) Modeling: The environment map previously built by SLAMMOT contains only the occupancy information of stationary and moving objects. Here the map additionally stores information such as the speed and direction of moving objects. Motion directions of tracked targets are discretized into one of nine canonical values, i.e., eight for the canonical directions and one for stationary objects. The stationary mode consists of one bin, and each of the eight directions consists of $b$ speed bins, assigned as:

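$$ \beta(v) = \begin{cases} \left\lfloor \dfrac{v}{\mathit{interval}} \right\rfloor & \text{for } 0 \le v < b \cdot \mathit{interval} \\ b - 1 & \text{for } v \ge b \cdot \mathit{interval} \end{cases} \tag{5} $$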

where v is the speed of the occupied object and interval is a pre-determined constant. In our experiments, interval is 10 km/hr and b is 8. Each bin records the occurrence count of each speed value for each direction. Figure 3 illustrates the information contained by a single grid of the built map. Figure 4 shows the built maps in which only the most observed direction of a grid is shown.
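Equation 5 and the per-cell counts can be sketched as below. The values interval = 10 km/hr and b = 8 come from the text; the threshold that decides when an observation goes into the stationary bin (`STOP_SPEED_KMH`) and the mapping of a heading angle onto the eight canonical directions (index 0 = east, counterclockwise in 45° steps) are hypothetical choices of ours.

```python
import math

INTERVAL_KMH = 10.0   # speed interval used in the experiments
B = 8                 # number of speed bins per direction
STOP_SPEED_KMH = 1.0  # hypothetical threshold for the stationary bin


def speed_bin(v, interval=INTERVAL_KMH, b=B):
    """Eq. (5): floor(v / interval) for 0 <= v < b*interval, otherwise b - 1."""
    if v < 0:
        raise ValueError("speed must be non-negative")
    if v >= b * interval:
        return b - 1
    return int(math.floor(v / interval))


def direction_index(heading_rad):
    """Hypothetical mapping of a heading (radians, CCW from +x) to 8 directions."""
    return round(heading_rad / (math.pi / 4)) % 8


class CellHistogram:
    """Occurrence counts stored in one grid cell of the SLAMMOT map."""

    def __init__(self, b=B):
        self.stationary = 0                        # the single stationary bin
        self.moving = [[0] * b for _ in range(8)]  # 8 directions x b speed bins

    def add(self, speed_kmh, heading_rad):
        if speed_kmh < STOP_SPEED_KMH:
            self.stationary += 1
        else:
            self.moving[direction_index(heading_rad)][speed_bin(speed_kmh)] += 1

    def most_observed_direction(self):
        """Direction with the largest total count among moving observations."""
        totals = [sum(row) for row in self.moving]
        return max(range(8), key=totals.__getitem__)
```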

In our scenarios, urban traffic contains strong long-term interactions because of traffic laws. Therefore, the SLAMMOT maps are automatically generated and maintained according to these behavior patterns. The behavior patterns are classified using the motion directions of all moving objects in the scene, and the scene interaction model uses the corresponding map to predict/constrain the tracked object’s motion. Figure 4 shows the SLAMMOT maps for three different behavior patterns.

[Figure 2 plots; axes X (meter) and Y (meter). (a) Tracking result of the whole scene. Rectangles denote tracked moving objects; the rectangle with a bold trajectory denotes the tracked motorcycle. The bold rectangle is enlarged in (c) and (d). (b) The bold rectangle is the tracked motorcycle. (c) Without move-stop hypothesis tracking. (d) With move-stop hypothesis tracking.]

Fig. 2. Move-stop hypothesis tracking: in (c) and (d), ×s are the measurements. The distributions of the estimates are shown by 1σ ellipses. The estimates are at the centers of the ellipses; the center points are not drawn for clarity.

Fig. 3. The SLAMMOT map contains occupancy, speed and direction information. Two examples are shown in which different motion patterns are embedded into the map: left is from a road lane and right is from a crosswalk.

[Figure 4 plots; axes X (meter) and Y (meter). (a) Three different behavior patterns of an urban scene. Only the most observed motion direction of a grid is shown by an arrow inside the grid. Black grids belong to stationary objects; white grids are unobserved or unoccupied areas. The robot is at the origin (0, 0) of the map. (b) The photos illustrate different behavior patterns of the scene.]

Fig. 4. The SLAMMOT map.

2) Prediction: With the use of the SLAMMOT maps and the scene behavior pattern recognition results, we predict the possible motions of a tracked object using a sampling-based method. Let $E_k$ be the set of state vectors of the randomly generated samples $e_k^{[i]}$ at time $k$, where $E_k = \bigcup_i \{e_k^{[i]}\}$.

The samples are weighted with respect to the SLAMMOT map. If the grid cell occupied by a sample belongs to a stationary object, the sample’s weight $w_k^{[i]}$ is set to zero. Otherwise, the weight $w_k^{[i]}$ of the sample $e_k^{[i]}$ is proportional to the probability of the motion specified by $e_k^{[i]}$ at the occupied grid cell.
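A sketch of this weighting rule follows. The sample layout `[x, y, vx, vy]` (meters, m/s), the grid geometry (`origin`, `cell_size`), the count-array layout and the choice to give zero weight to samples outside the map or in never-observed cells are our assumptions. The probability of a sample's motion at a cell is taken here as the relative frequency of its (direction, speed bin) pair in that cell's counts.

```python
import numpy as np


def weight_samples(samples, stationary, counts, cell_size, origin, interval_kmh=10.0):
    """Weight samples e_k^[i] against the SLAMMOT map (assumed data layout).

    samples:    (N, 4) array of [x, y, vx, vy] in meters and m/s
    stationary: (H, W) bool array, True where a cell belongs to a stationary object
    counts:     (H, W, 8, b) occurrence counts per direction and speed bin
    """
    H, W = stationary.shape
    b = counts.shape[-1]
    col = np.floor((samples[:, 0] - origin[0]) / cell_size).astype(int)
    row = np.floor((samples[:, 1] - origin[1]) / cell_size).astype(int)
    inside = (row >= 0) & (row < H) & (col >= 0) & (col < W)
    speed_kmh = np.hypot(samples[:, 2], samples[:, 3]) * 3.6
    heading = np.arctan2(samples[:, 3], samples[:, 2])
    d = np.round(heading / (np.pi / 4)).astype(int) % 8                    # direction index
    s = np.minimum(np.floor(speed_kmh / interval_kmh).astype(int), b - 1)  # Eq. (5)
    weights = np.zeros(len(samples))
    for i in np.flatnonzero(inside):
        r, c = row[i], col[i]
        total = counts[r, c].sum()
        if stationary[r, c] or total == 0:
            continue  # stationary (or, by assumption, unobserved) cell: weight stays 0
        weights[i] = counts[r, c, d[i], s[i]] / total
    if weights.sum() > 0:
        weights /= weights.sum()  # only proportionality matters
    return weights
```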

Given the samples and their corresponding weights, the effect of the scene interaction model is represented by the mean and covariance of these weighted samples, which form the virtual measurement $\tilde{z}^{(\text{scene})}$, as shown in Figure 5. The SLAMMOT process integrates the previous real measurements into the stationary and moving object map; the scene interaction model uses this map to generate a virtual measurement of the target that predicts or constrains the target’s future motion. The rest of the fusion is straightforward: the target state is simply updated with this virtual measurement.
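Summarizing the weighted samples by their mean and covariance and then updating the target state amounts to a standard Kalman measurement update in which the virtual measurement plays the role of a real one. The sketch below assumes a linear(ized) measurement matrix `H` and that the samples have already been reduced to the measured components (e.g., position); the function names are ours.

```python
import numpy as np


def virtual_measurement(samples, weights):
    """Weighted mean and covariance of the samples: z~(scene) and its covariance."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    mean = w @ samples
    diff = samples - mean
    cov = (w[:, None] * diff).T @ diff
    return mean, cov


def kalman_update(x, P, z, R, H):
    """Kalman measurement update applied to a (virtual) measurement z with covariance R."""
    S = H @ P @ H.T + R                  # innovation covariance
    K = np.linalg.solve(S, H @ P).T      # gain P H^T S^{-1} (P and S symmetric)
    x_new = x + K @ (z - H @ x)
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new
```

Because the virtual measurement enters only through the update stage, the motion models used in prediction stay unchanged, which is the design choice the text emphasizes.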

With the use of the SLAMMOT map, the scene interaction model may only provide a more uncertain estimate than the prediction from the target’s motion models. However, this method effectively takes the constraints/interactions from

−26 −25 −24 −23 −22 −21 −20 −19 −1 0 1 2 3 4 5 6 X (meter) Y (meter) 15 16 17 18 19 20 21 22 13 14 15 16 17 18 19 20 X (meter) Y (meter)

Fig. 5. Sampling-based prediction from the scene interaction model. Left: a motorcycle passing a narrow gate. Right: a car moving near a median strip.

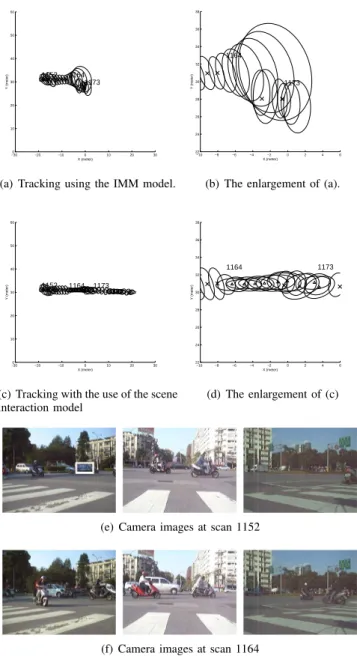

both stationary and moving objects into account. Figure 6 demonstrates the capability of tracking in a occlusion situa-tion with the use of the scene interacsitua-tion model. Figure 6(a) shows the tracking result using the IMM model. While the target is occluded, the estimate is predicted without update. The target state estimate uncertainty increases quickly and finally diverges. False data association results in failure in tracking of its surrounding objects. Figure 6(c) shows inter-acting object tracking with the proposed scene interaction model. The information contained in the SLAMMOT map is employed to predict the target’s motion. The occluded

ob-−300 −20 −10 0 10 20 30 10 20 30 40 50 60 X (meter) Y (meter) 1152 1164 1173

(a) Tracking using the IMM model.

−10 −8 −6 −4 −2 0 2 4 6 22 24 26 28 30 32 34 36 38 X (meter) Y (meter) 1164 1173

(b) The enlargement of (a).

−300 −20 −10 0 10 20 30 10 20 30 40 50 60 X (meter) Y (meter) 1152 1164 1173

(c) Tracking with the use of the scene interaction model −10 −8 −6 −4 −2 0 2 4 6 22 24 26 28 30 32 34 36 38 X (meter) Y (meter) 1164 1173 (d) The enlargement of (c)

(e) Camera images at scan 1152

(f) Camera images at scan 1164

Fig. 6. The scene interaction model: ×s are the measurements. The distributions of the estimates are shown by 1σ ellipse.

ject’s state was correctly tracked. The feasibility of tracking is evaluated using the visual images from the onboard camera images as depicted in Figure 6(e). We later will show that this approach also provides a simple way to detect abnormal events.

B. Neighboring Object Interaction Model

The neighboring object interaction model is designed to represent the short-term, or immediate, interactions between the target and its neighboring objects. As discussed in [5], there are several different types of short-term interactions. In this paper, we deal only with the follow interaction, which frequently occurs in crowded urban areas. Under the follow interaction assumption, we consider only the interactions between the target and the neighboring objects in front of it. Note that the proposed framework can accommodate more types of short-term interactions via multiple hypothesis tracking approaches. However, detecting which objects the target is currently interacting with is a challenging problem.

Using the same virtual measurement technique as the scene interaction model, the neighboring object interaction model generates a virtual measurement $\tilde{z}^{(\text{neighbor})}$ from the neighboring object’s motion to predict/constrain the target’s motion.

Given the estimate $\hat{x}_k$ of the target at time $k$, let $\hat{y}_k$ be the state estimate of the nearest neighboring object in front of the target. The virtual measurement from the neighboring object interaction model and its corresponding covariance can be computed as:

$$ \tilde{z}_k^{j\,(\text{neighbor})} = H_k^j \left( \hat{x}_k^j + \left( F_k^j - I \right) \hat{y}_k^j \right) \quad \forall m_j \in Q \tag{6} $$

$$ \tilde{R}_k^{j\,(\text{neighbor})} = H_k^j \left( F_k^j T_k^j (F_k^j)^\top + Q_k^j \right) \quad \forall m_j \in Q \tag{7} $$

where $F_k^j$ is the process model under the motion model $m_j$ of the tracked target at time $k$, $I$ denotes the identity matrix, $H_k^j$ is the measurement model under the motion model $m_j$ at time $k$, $T_k^j$ is the covariance of the neighboring object estimate $\hat{y}_k$ under the motion model $m_j$ at time $k$, and $Q_k^j$ is the motion noise model under the motion model $m_j$ of the tracked target at time $k$.
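Equations 6 and 7 can be evaluated per motion model $m_j$ as in the sketch below. Read term by term, $(F_k^j - I)\hat{y}_k^j$ is the change the neighbor's state would undergo in one step under $m_j$, so the virtual measurement places the target where it would be if it repeated the leading object's displacement. One assumption of ours: Equation 7 as printed multiplies by $H_k^j$ only on the left, which yields a square covariance only if $H_k^j$ is square, so the sketch projects on both sides, $H_k^j(\cdot)(H_k^j)^\top$. The constant-velocity helper `cv_model` is illustrative, not from the paper.

```python
import numpy as np


def neighbor_virtual_measurement(x_hat, y_hat, T, F, Q, H):
    """Eqs. (6)-(7) for one motion model m_j of the tracked target.

    x_hat: target state estimate; y_hat: estimate of the nearest neighbor in front
    T:     covariance of y_hat; F, Q: process model and motion noise of the target
    H:     measurement model
    """
    n = len(x_hat)
    # Eq. (6): target state shifted by the neighbor's one-step change (F - I) y_hat.
    z = H @ (x_hat + (F - np.eye(n)) @ y_hat)
    # Eq. (7), projected on both sides so the result is a square measurement
    # covariance (assumption; the printed form omits the trailing H^T).
    R = H @ (F @ T @ F.T + Q) @ H.T
    return z, R


def cv_model(dt):
    """Illustrative constant-velocity model: state [x, y, vx, vy], position measured."""
    F = np.array([[1, 0, dt, 0], [0, 1, 0, dt], [0, 0, 1, 0], [0, 0, 0, 1]], dtype=float)
    H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]], dtype=float)
    return F, H
```

With a 1 s step, a target at the origin following a neighbor that moves at 2 m/s receives the virtual position (2, 0), independent of its own estimated velocity; this is what lets the neighbor carry the target through an occlusion.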

The estimate of the tracked object’s pose is then updated with this virtual measurement in a straightforward manner. Figure 7 demonstrates that tracking using the neighboring object interaction model performs well in an occlusion situation. Figure 7(a) shows the tracking result using the IMM model. While the target is occluded, the estimate is predicted without update. The uncertainty of the target state estimate increases quickly, which results in wrong data association in this crowded scene, and the track is lost. Figure 7(c) shows interacting object tracking with the use of the neighboring object interaction model. The motion of the target’s neighboring object is used to predict the target’s motion, and the occluded object’s state is correctly tracked. The correctness of tracking is evaluated using the images from the onboard cameras, as shown in Figure 7(e).

IV. EXPERIMENTAL RESULTS

Several interacting object tracking results have been shown in the previous sections. Figure 8 shows the tracking results of pedestrians, bicycles, motorcycles, cars and trucks.

Anomalous event detection can be easily accomplished using the interaction models. Here, anomalous events are defined as objects acting differently from the predictions of the scene interaction model and the neighboring object interaction model. Figure 9 demonstrates an example of abnormal event recognition in which a bicyclist disobeyed the traffic laws. As there was no other object around this bicycle, the neighboring object interaction model was not activated, but the scene interaction model quickly showed that the bicycle’s motion was very different from the prediction. The attached video shows the whole sequence of this abnormal event recognition and the interacting object tracking results.

[Figure 7 plots; axes X (meter) and Y (meter); scans 7, 10 and 11 are annotated in (a) and (b), scans 7, 10 and 13 in (c) and (d). (a) Tracking using the IMM model. (b) The enlargement of (a). (c) Tracking with the use of the neighboring object interaction model. (d) The enlargement of (c). (e) Camera images at scan 6.]

Fig. 7. The neighboring object interaction model: ×s are the measurements. The distributions of the estimates are shown by 1σ ellipses.

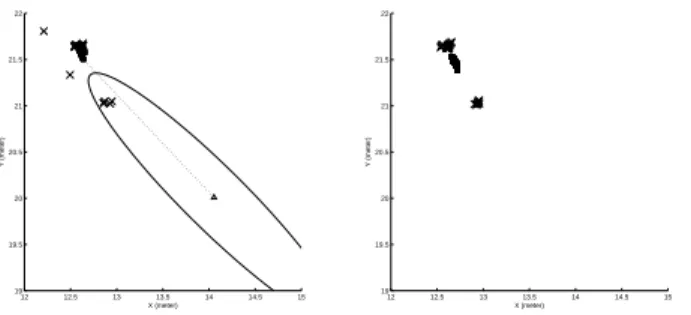

Another example of anomalous event detection is shown in Figure 10. A car broke down and stopped in the road. As the car was stationary, the neighboring object interaction model was not activated, but the scene interaction model quickly showed that the car’s motion was very different from the prediction. Traffic accidents can be easily detected by employing the proposed interaction models.
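The text defines an anomaly as an object acting differently from the interaction-model predictions but does not spell out the test. One plausible sketch, which is our assumption and not the authors' criterion, is a chi-square gate on the Mahalanobis distance between the real measurement and the virtual measurement, raised only after several consecutive violations. The 99% gate for a 2-D position (9.210) is the standard chi-square quantile; `min_consecutive` is a hypothetical tuning parameter.

```python
import numpy as np

CHI2_2DOF_99 = 9.210  # 99% quantile of the chi-square distribution with 2 DOF


def is_anomalous(z, z_virtual, R_virtual, R_meas, gate=CHI2_2DOF_99):
    """Gate the real measurement against a virtual measurement (assumed criterion).

    Returns (flag, squared Mahalanobis distance).
    """
    S = R_virtual + R_meas  # combined uncertainty, assuming independent errors
    d = z - z_virtual
    d2 = float(d @ np.linalg.solve(S, d))
    return d2 > gate, d2


def flag_persistent(flags, min_consecutive=5):
    """Report an anomaly only after min_consecutive gate violations in a row (hypothetical rule)."""
    run = 0
    for f in flags:
        run = run + 1 if f else 0
        if run >= min_consecutive:
            return True
    return False
```

Requiring a run of violations suppresses isolated outliers while still catching an object, such as the bicycle in Figure 9, that keeps moving against the predicted flow.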

V. CONCLUSION

Tracking a wide variety of interacting moving objects in crowded urban areas is difficult. Building on our previous contribution to SLAMMOT, the primary contribution of this paper is the introduction of the scene interaction model and the neighboring object interaction model, which take both long-term and short-term interactions into account. These interaction models and move-stop hypothesis tracking are seamlessly integrated within the variable-structure multiple-model estimation framework. Fusion of the interaction models is simply accomplished using virtual measurements in the update stage of filtering. Ample experimental results using data from a laser scanner collected at a crowded urban intersection have demonstrated the feasibility and effectiveness of the proposed algorithms.

[Figure 8 plot; axes X (meter) and Y (meter).]

Fig. 8. Experimental tracking results of pedestrians, bicycles, motorcycles, and cars.

Future work will add more short-term interactions, such as passing, to deal with more complicated scenarios, and will collect more data to analyze the statistical properties of the scene interaction models in different urban areas. It would also be of interest to study the effectiveness of the proposed algorithms in areas with weaker interactions, such as offices and homes.

VI. ACKNOWLEDGMENTS

This work was partially supported by grants from Taiwan NSC (#94-2218-E-002-077, #94-2218-E-002-075, #95-2221-E-002-433), Quanta Computer, Australia’s CSIRO, and Intel.

REFERENCES

[1] C.-C. Wang, C. Thorpe, and S. Thrun, “Online simultaneous localization and mapping with detection and tracking of moving objects: Theory and results from a ground vehicle in crowded urban areas,” in Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Taipei, Taiwan, September 2003.

[2] C.-C. Wang, “Simultaneous localization, mapping and moving object tracking,” Ph.D. dissertation, Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, April 2004.

[3] H. Veeraraghavan, O. Masoud, and N. P. Papanikolopoulos, “Computer vision algorithms for intersection monitoring,” IEEE Transactions on Intelligent Transportation Systems, vol. 4, no. 2, pp. 78–89, June 2003.

[4] H. Zhao and R. Shibasaki, “A novel system for tracking pedestrians using multiple single-row laser-range scanners,” IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans,

[Figure 9 plots; axes X (meter) and Y (meter); scans 4880 and 4960 are annotated. (a) An abnormal event: a bicyclist disobeyed the traffic lights. The bicycle is denoted by a black rectangle with a bold and longer (6.67 second) trajectory; the other moving objects are shown with 1.33 second trajectories. The bold rectangle area is enlarged in (d). (b) Visual images at scan 4880; the bold rectangle indicates the tracked bicycle. (c) Visual images at scan 4960; the bicycle is occluded. (d) Enlargement of the bold rectangle area in (a): ×s are the measurements and △s indicate the virtual measurements from the scene interaction model. The inconsistency between the tracked bicycle and the predictions from the interaction model is clearly shown.]

Fig. 9. Anomalous event detection.

[Figure 10 plots; axes X (meter) and Y (meter). (a) The state is updated with map pattern 1. (b) The state is updated with map pattern 2. (c) The state is updated with map pattern 3. (d) Visual images at scan 183.]

Fig. 10. Anomalous event detection: a car disobeyed the traffic law and stopped in the road. ×s are the measurements and △s indicate the virtual measurements from the scene interaction model.

[5] N. M. Oliver, B. Rosario, and A. P. Pentland, “A Bayesian computer vision system for modeling human interactions,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 8, pp. 831–843, August 2000.

[6] A. Panangadan, M. J. Matarić, and G. S. Sukhatme, “Detecting anomalous human interactions using laser range-finders,” in IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE Press, Sep 2004, pp. 2136–2141.

[7] A. Bruce and G. Gordon, “Better motion prediction for people-tracking,” in IEEE International Conference on Robotics and Automation (ICRA), New Orleans, LA, USA, April 2004.

[8] Z. Khan, T. Balch, and F. Dellaert, “MCMC-based particle filtering for tracking a variable number of interacting targets,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 11, pp. 1805–1918, November 2005.

[9] T. Kirubarajan and Y. Bar-Shalom, “Tracking evasive move-stop-move targets with an MTI radar using a VS-IMM estimator,” in Proceedings of the SPIE Signal and Data Processing of Small Targets, vol. 4048, 2000, pp. 236–246.

[10] X. R. Li, X. Zhi, and Y. Zhang, “Multiple-model estimation with variable structure. III. Model-group switching algorithm,” IEEE Transactions on Aerospace and Electronic Systems, vol. 35, no. 1, pp. 225–241, January 1999.

[11] X.-R. Li and Y. Bar-Shalom, “Multiple-model estimation with variable structure,” IEEE Transactions on Automatic Control, vol. 41, no. 4, pp. 478–493, April 1996.

[12] X. R. Li and V. P. Jilkov, “Survey of maneuvering target tracking. Part V. Multiple-model methods,” IEEE Transactions on Aerospace and Electronic Systems, vol. 41, no. 4, pp. 1255–1321, October 2005.

[13] T. Kirubarajan, Y. Bar-Shalom, K. Pattipati, I. Kadar, E. Eadan, and B. Abrams, “Tracking ground targets with road constraints using an IMM estimator,” in Proceedings of the IEEE Aerospace Conference, Snowmass, CO, March 1998.

[14] H. A. P. Blom and Y. Bar-Shalom, “The interacting multiple model algorithm for systems with Markovian switching coefficients,” IEEE