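Ministry of Science and Technology (MOST) Research Project Report

Final Report

A Study on Using Smartphones to Assist Navigation for the Visually Impaired (2/2)

Project type: Individual project

Project number: MOST 104-2218-E-004-002-

Project period: September 1, 2015 to August 31, 2016

Executing institution: Department of Computer Science, National Chengchi University

Principal investigator: Neng-Hao Yu (余能豪)

Co-principal investigator: Hsien-Hui Tang (唐玄輝)

Project participants: Master's student, part-time research assistant: 陳佳妤

Master's student, part-time research assistant: 王奕方

Master's student, part-time research assistant: 林禕瑩

Master's student, part-time research assistant: 鄭竣丰

Master's student, part-time research assistant: 劉康平

Master's student, part-time research assistant: 張智雅

Master's student, part-time research assistant: 陳萱恩

Master's student, part-time research assistant: 王紫綺

Undergraduate student, part-time research assistant: 秦暐峻

Undergraduate student, part-time research assistant: 王邦任

Report attachment: Report on attending an international academic conference

November 30, 2016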

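Chinese abstract: According to statistics, the number of visually impaired people who go out has been increasing year by year. As their need to walk grows, friendly road environments and appropriate assistive tools become essential. However, accessibility guidance facilities follow no consistent standard and are poorly maintained, so walking outdoors is full of hazards for the visually impaired. When walking outdoors, visually impaired people must also rely on what they learned in Orientation and Mobility (O&M) training, using senses other than sight to explore the environment. Besides using a white cane to keep their balance or following a guide dog, they can now use mobile devices, which have become an important channel for wayfinding information as smartphones spread. Many navigation services are available, but most are designed for sighted people. They cannot provide the close-range guidance visually impaired users need, and their operation and prompts do not match these users' habits.

This study used qualitative user experience research methods, such as contextual inquiry and shadowing, to investigate the needs and problems of visually impaired people walking outdoors. In the first year, we proposed a navigation service for the visually impaired based on smartphones and iBeacon. The second year addressed two problems: facing the correct direction when crossing a street, and walking straight through areas with no shoreline to trail. For this, we propose GuidePin, a Bluetooth wearable device worn on the chest that provides heading information. When users pass through areas where Beacons are deployed, prompt messages that incorporate O&M concepts help them build spatial awareness. Combined with the first year's smartphone navigation service, this approach lets visually impaired users obtain close-range information and correct their heading in real time, supporting the whole outdoor walking experience. After iterative prototyping and field tests, the navigation service can guide visually impaired people outdoors and increase their familiarity with and command of the environment, so that they can walk through the city more confidently and independently.

Chinese keywords: assistive applications for the visually impaired, orientation and mobility, outdoor pedestrian navigation, micro-location navigation, user experience design, wearable devices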

English abstract: Today, we can freely travel to unfamiliar places and enjoy the convenience of navigation features on mobile devices. However, most current apps are presented via visually oriented interfaces, depriving visually impaired smartphone users of the benefits of this technology. Statistics show that the visually impaired need to go out as frequently as sighted people, yet this tremendous demand for independence and mobility remains unmet. Our research presents a new system called BlindNavi that integrates knowledge obtained from Orientation and Mobility training with the micro-location information of iBeacon to provide a point-to-point outdoor navigation system for the visually impaired. This year, to free users' hands from holding smartphones while walking, we designed GuidePin, a device worn on the front of the user's clothing that controls the smartphone and provides the user's current heading. Herein, we describe our iterative design process, including lessons learned through qualitative research methods (contextual inquiry, shadowing, and interviews with experts), and discuss our findings from deploying the app on test routes. With this system, the visually impaired are able to move about in the public domain independently with greater ease and confidence.

English keywords: Visually impaired, Accessibility, Orientation and Mobility training, Outdoor Navigation, iBeacon, Micro Location, User Experience Design, wearable device



1

1.1

[Surviving terms: O&M; screen readers VoiceOver and TalkBack; GPS; Google Maps; Apple Maps; iBeacon.]

1.2

1.2.1

[Surviving terms: VoiceOver; Android; iPhone; shadowing.]

Table 1
No.   Age
A1    19-24
A2    25-30
A3    19-24
A4    19-24
A5    19-24
A6    19-24
A7    19-24
A8    31-36
A9    19-24
A10   19-24
A11   31-36
A12   25-30

1.2.2

[Surviving terms: app; Tables 2 and 3, quoting participants A1, A7, A10, A12 and A7, A1, A3, A11, A9 respectively; shadowing.]

1.3

[Surviving terms: app; iBeacon.]

1.4

1.4.1

[Surviving terms: User Experience Research; Design Thinking.]

1.4.2

2

2.1

2.1.1

[Surviving terms: WHO (2012); International Classification of Functioning, Disability and Health (ICF); graded visual impairment criteria citing acuity values from 0.01 to 0.4 and 10-20 dB thresholds; Cattaneo (2008).]

2.1.2 Orientation and Mobility (O&M)

[Surviving terms: Jacobson (1993); Griffin-Shirley, Trusty and Richard (2000); turns and compass directions (90, 180 degrees); linear concepts; O&M concepts from Hill and Jacobson, including 1. landmarks and clues, 2. shoreline, 9. numbering system and 11. self-familiarization process (a-c); Hanlu (2014); the Navi'Rando app (2015; Fig. 9).]

2.2

2.2.1

…

2.2.1

…

2.3

2.3.1

[Surviving terms: braille; GoBraille (Shiri, 2011; Fig. 12); GPS; Shinohara (2011); Dot Braille Smartwatch by Dot Incorporation (2015; Fig. 13); Wilko (2008); PDA; GPS (Fig. 14).]

2.3.2

[Surviving terms: HumanWare Trekker Breeze+ (GPS; Fig. 15); Microsoft Cities Unlocked (GPS, Microsoft Bing Maps, MiBeacons beacons, 3D, "clip-clops" indicator; Fig. 16); Gaunet (2005), 5-10 m; Nicolau (2009), five numbered points including "side" and "reference point".]

2.3.3

[Surviving terms: GPS; Blasch (1995); Ahmetovic (2015; Fig. 17); RFID; NFC; Michitaka (2003; Fig. 18).]

2.3.4 iBeacon

[Surviving terms: Apple iBeacon; BLE; UUID; iOS; RSSI (Received Signal Strength Indication); BlindSquare (GPS, Foursquare, OpenStreetMap; 2015); U-R Able; iOS 7; NavCog; BlindNavi app (Fig. 22).]

2.5

[Surviving terms: iBeacon (Fig. 23).]

3

[Surviving terms: three components, GuidePin, the app and Beacon; Wizard of Oz (Fig. 24).]

3.1

[Surviving terms: iPhone with iOS VoiceOver; app developed for iOS 8.0 in Swift; Punch Through LightBlue Bean (Arduino-compatible, BLE: Bluetooth Low Energy) programmed with Bean Loader; Honeywell; Bosch Sensortec BMA250; Processing; Apple iBeacon API (Fig. 25).]

3.2 GuidePin

3.2.1

[Surviving terms: heading; MCU; Hall effect; iOS; app; GuidePin (Fig. 26); Honeywell HMC5883L magnetometer (I2C interface, gauss, HMC118X-series sensors, 1°-2° heading accuracy); Processing.]

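Magnetometer calibration steps:

1. Record the maximum and minimum readings of the X, Y and Z axes.
2. Compute each axis offset as the midpoint of its range, $(\max + \min)/2$, and subtract it from that axis's readings.
3. Take the X axis as the reference, $x_{gain} = 1$.
4. Compute the Y-axis gain, $y_{gain} = x_{gain} \cdot \dfrac{y_{max} - y_{min}}{x_{max} - x_{min}}$.
5. Compute the Z-axis gain, $z_{gain} = x_{gain} \cdot \dfrac{z_{max} - z_{min}}{x_{max} - x_{min}}$.

Fig. 27. HMC5883L …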
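The calibration steps above can be sketched in Python. This is a minimal illustration, not the GuidePin firmware, which runs on the LightBlue Bean. The names `MagCalibration`, `calibrate` and `correct` are hypothetical. The correction rule (subtract the offset, then divide by the per-axis gain so every axis shares the X axis's scale) is an assumption about how the gains are applied.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MagCalibration:
    offset: Vec3  # per-axis midpoint of the recorded range: (max + min) / 2
    gain: Vec3    # per-axis range relative to the X axis (x_gain = 1)


def calibrate(samples: Iterable[Vec3]) -> MagCalibration:
    """Derive offsets and gains from raw (x, y, z) readings taken while turning the sensor."""
    xs, ys, zs = zip(*samples)
    mins = (min(xs), min(ys), min(zs))
    maxs = (max(xs), max(ys), max(zs))
    offset = tuple((hi + lo) / 2 for hi, lo in zip(maxs, mins))
    x_range = maxs[0] - mins[0]
    x_gain = 1.0
    y_gain = x_gain * (maxs[1] - mins[1]) / x_range
    z_gain = x_gain * (maxs[2] - mins[2]) / x_range
    return MagCalibration(offset=offset, gain=(x_gain, y_gain, z_gain))


def correct(cal: MagCalibration, raw: Vec3) -> Vec3:
    """ASSUMED correction: remove the offset, then divide by the gain so all axes share X's scale."""
    return tuple((r - o) / g for r, o, g in zip(raw, cal.offset, cal.gain))


if __name__ == "__main__":
    cal = calibrate([(100, 20, -5), (-60, 120, 30), (20, -80, 10), (20, 20, -40)])
    print(cal)
    print(correct(cal, (100, 120, 30)))  # -> (80.0, 80.0, 80.0)
```

Once corrected, each axis at the edge of its range maps to the same magnitude. This is what lets a later `atan2` on X and Y produce an undistorted heading.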

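3.2.2

[Surviving terms: G-sensor; GuidePin; Bosch BMA250 accelerometer; Figs. 28-30.]

Equations (1) and (2) involve the pitch angle Φ, defined about the x-axis, and the roll angle θ, defined about the y-axis. Equation (3) gives the heading from the X and Y components:

$$\text{Heading} = \arctan\left(\frac{Y}{X}\right) \tag{3}$$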
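The tilt-compensation step can be sketched with one widely used formulation, the one in STMicroelectronics' LSM303DLH tilt-compensated compass application note. This is an assumption, not necessarily GuidePin's equations (1) and (2). Axis signs depend on how the BMA250 and HMC5883L are mounted, and the function names are hypothetical. With the device level (pitch = roll = 0), the formula reduces to equation (3). It is computed with `atan2` so the quadrant is resolved.

```python
import math
from typing import Tuple


def tilt_angles(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Pitch and roll in radians from a static accelerometer reading (any consistent unit)."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    ax1, ay1 = ax / norm, ay / norm
    pitch = math.asin(-ax1)
    # Clamp against rounding pushing the argument outside [-1, 1];
    # the formula is singular when pitch is +/-90 degrees.
    roll = math.asin(max(-1.0, min(1.0, ay1 / math.cos(pitch))))
    return pitch, roll


def tilt_compensated_heading(mx: float, my: float, mz: float,
                             pitch: float, roll: float) -> float:
    """Heading in degrees [0, 360) after projecting the magnetic vector onto the horizontal plane."""
    xh = mx * math.cos(pitch) + mz * math.sin(pitch)
    yh = (mx * math.sin(roll) * math.sin(pitch)
          + my * math.cos(roll)
          - mz * math.sin(roll) * math.cos(pitch))
    # atan2 resolves the quadrant that a plain arctan(Y/X) leaves ambiguous.
    heading = math.degrees(math.atan2(yh, xh)) % 360.0
    # A tiny negative angle can round up to exactly 360.0 after the modulo.
    return 0.0 if heading >= 360.0 else heading


if __name__ == "__main__":
    pitch, roll = tilt_angles(0.0, 0.0, 9.81)  # lying flat
    print(tilt_compensated_heading(1.0, 1.0, 0.3, pitch, roll))  # about 45 degrees
```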

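GuidePin smooths its sensor readings with a Kalman filter, an optimal recursive data processing algorithm. The filter assumes that the system can be described by a linear stochastic difference equation, that its noise is white Gaussian noise, and that the quantities involved follow a Gaussian distribution (Figs. 31-32). Each iteration proceeds in five steps.

1. Predict the state at time k from the previous optimal estimate, where A and B are system parameters and U(k) is the control input at time k:

$$X(k|k-1) = A\,X(k-1|k-1) + B\,U(k) \tag{1}$$

2. Update the covariance P(k|k-1) of X(k|k-1), where P(k-1|k-1) is the covariance of X(k-1|k-1), A' is the transpose of A, and Q is the process noise covariance:

$$P(k|k-1) = A\,P(k-1|k-1)\,A' + Q \tag{2}$$

3. Compute the Kalman gain Kg(k), where H is the measurement matrix and R is the measurement noise covariance:

$$Kg(k) = \frac{P(k|k-1)\,H'}{H\,P(k|k-1)\,H' + R} \tag{3}$$

4. Combine the prediction with the measurement Z(k) to obtain the optimal estimate X(k|k) at time k:

$$X(k|k) = X(k|k-1) + Kg(k)\,\bigl(Z(k) - H\,X(k|k-1)\bigr) \tag{4}$$

5. Update the covariance of X(k|k) for the next iteration:

$$P(k|k) = \bigl(I - Kg(k)\,H\bigr)\,P(k|k-1) \tag{5}$$

In GuidePin's Arduino implementation, the covariances are set to R = 1e-5 and Q = 1e-7.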
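Equations (1)-(5) reduce to a few lines in the scalar case. The sketch below makes four assumptions: A = H = 1 and B = 0 (a single smoothed reading with no control input), the reported values R = 1e-5 and Q = 1e-7, an arbitrary initial covariance of 1.0, and the hypothetical class name `ScalarKalman`. It is an illustration in Python, not GuidePin's Arduino code.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-dimensional Kalman filter following equations (1)-(5) with A = H = 1, B = 0."""
    q: float = 1e-7  # process noise covariance Q (value reported for GuidePin)
    r: float = 1e-5  # measurement noise covariance R (value reported for GuidePin)
    x: float = 0.0   # state estimate X(k-1|k-1)
    p: float = 1.0   # estimate covariance P(k-1|k-1); initial value is an assumption

    def update(self, z: float) -> float:
        # (1) predict the state: X(k|k-1) = A X(k-1|k-1) + B U(k), with A = 1, B = 0
        x_pred = self.x
        # (2) predict the covariance: P(k|k-1) = A P(k-1|k-1) A' + Q
        p_pred = self.p + self.q
        # (3) Kalman gain: Kg = P H' / (H P H' + R), with H = 1
        kg = p_pred / (p_pred + self.r)
        # (4) correct the prediction with the measurement Z(k)
        self.x = x_pred + kg * (z - x_pred)
        # (5) update the covariance: P(k|k) = (I - Kg H) P(k|k-1)
        self.p = (1.0 - kg) * p_pred
        return self.x


if __name__ == "__main__":
    kf = ScalarKalman()
    for reading in [10.2, 9.8, 10.1, 9.9, 10.0]:
        print(round(kf.update(reading), 3))
```

One design caveat: a raw compass heading wraps from 359° to 0°, so filtering the angle directly would pull 359° and 1° toward 180°. Filtering the sine and cosine of the heading separately, or unwrapping the angle first, avoids this.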

[Surviving terms: GuidePin; iOS sandbox; iPhone (Fig. 33); sample rate.]

3.2.3

[Surviving terms: GuidePin; LightBlue Bean.]

Fig. 34. GuidePin …

3.3 App

3.3.1 App

[Surviving terms: iPhone; GuidePin; Beacon.]

Fig. 35. App …

3.3.2

Table 6. Beacon …
Field       Example   Note
id          01        Beacon …
degree      60        0-359
navi_info   "…"
passing     "…" / "…"
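Table 6 suggests a simple record per beacon. The sketch below is hypothetical. Because the original descriptions of `degree` and `passing` were lost, it assumes `degree` is the heading the user should face at that beacon, compared against GuidePin's current heading, and treats `passing` as a boolean. The 15° tolerance and the spoken cues are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BeaconRecord:
    id: str          # beacon identifier, as in Table 6 (e.g. "01")
    degree: int      # 0-359; ASSUMED to be the heading to face at this beacon
    navi_info: str   # navigation message to be spoken
    passing: bool    # ASSUMED meaning: True for a pass-through point rather than a turn

    def __post_init__(self) -> None:
        if not 0 <= self.degree <= 359:
            raise ValueError(f"degree must be within 0-359, got {self.degree}")


def heading_error(target_deg: float, current_deg: float) -> float:
    """Smallest signed rotation from current to target, in (-180, 180]; positive = clockwise."""
    diff = (target_deg - current_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def turn_hint(error_deg: float, tolerance_deg: float = 15.0) -> str:
    """Hypothetical mapping from heading error to a short spoken cue."""
    if abs(error_deg) <= tolerance_deg:
        return "straight ahead"
    # Compass headings increase clockwise, so a positive error means turn right.
    return "turn right" if error_deg > 0 else "turn left"


if __name__ == "__main__":
    beacon = BeaconRecord(id="01", degree=60, navi_info="…", passing=False)
    print(turn_hint(heading_error(beacon.degree, 350)))  # user faces 350°, target 60°
```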

3.3

3.3.1

[Surviving terms: iPhone; Wizard of Oz (Fig. 36).]

Table 7
No.   Age
B1    19-24
B2    25-30
B3    19-24
B4    19-24
B5    19-24

3.3.2

[Surviving terms: Wizard of Oz; think-aloud; Beacon (Figs. 37-40); Table 8.]

3.3.3

[Table 9: quotations from participants B1-B5.]

3.4

[Surviving terms: 1. GuidePin, 2. app, 3. Beacon; contextual inquiry; Wizard of Oz; Table 10; Fig. 41 (panels 41-1 and 41-2).]

4

[Surviving terms: GuidePin.]

4.1

4.1.1

[Surviving terms: GuidePin.]

4.1.2

[Surviving terms: Table 11; Fig. 42; app; FM; GuidePin; Beacon.]

4.1.3

Table 12
No.   Age
C1    19-24
C2    19-24
C3    25-30
C4    19-24
C5    31-36

4.1.4

a. [Surviving terms: "Bee"; TTS (Text-to-Speech).]

b. …

c. [Surviving terms: GuidePin (Fig. 43); iOS MediaPlayer.framework; MPRemoteCommandEvent; remotePlay, remotePause; Sony; Beacon (Fig. 44).]

d. [Surviving terms: 3D; GuidePin; app; Fig. 46 (panels 46-1 to 46-3).]

Fig. 45. GuidePin …

4.2

4.2.1

[Surviving terms: Beacon (Figs. 47-48); GuidePin; Kalman filter.]

4.2.2

[Surviving terms: Table 17; app; iPhone 6; 3.5 mm; GuidePin; Beacon; FM (Fig. 49) on a Samsung Galaxy Note 2; Figs. 50-52.]

4.2.3

[Surviving terms: iPhone; participants D2, D3 and D4.]

Table 18
No.   Age
D1    23
D2    23
D3    22
D4    22
D5    28
D6    21
D7    22

4.2.4

a. [Surviving terms: Beacon.]

b. [Surviving terms: iPhone; D2.]

c. [Fig. 53.]

d. [Surviving terms: 3D; T-shirt.]

4.3

[Surviving terms: GuidePin; quotations from participants D6, D7 and D4 (Fig. 54).]

5

5.1

[Surviving terms: GuidePin; iBeacon; app; Beacon.]

5.2

5.2.1

[Surviving terms: hard-iron and soft-iron distortion; iBeacon (Fig. 55); Apple.]

5.2.2

[Surviving terms: Beacon; crowd annotation (Fig. 56).]

5.3

5.3.1

[Surviving terms: hands-free; app.]

5.3.2

[Surviving terms: Beacon; app.]

5.3.3

[Surviving terms: iBeacon; GuidePin; Beacon.]

1. BlindNavi: iBeacon, GuidePin, app …

2. …

3. Grand Prix & Best of Best; ACM CHI SDC award …

4. CVGIP 2016 …

5. …

… (2016). https://goo.gl/OZCXsx

… (2004).

… (2003).

… (1992).

… (2012).

… (2001).

… (2012).

… (2000).

… (2008).

J. Zegarra Flores and R. Farcy, "GPS and IMU (inertial measurement unit) as a navigation system for the visually impaired in cities," J. Assist. Technol., vol. 7, no. 1, pp. 47-56, 2013.

M. Pielot, B. Poppinga, and S. Boll, "PocketNavigator: Vibro-tactile waypoint navigation for everyday mobile devices," in Proc. MobileHCI 2010, 2010, pp. 423-426.

S. Azenkot, S. Prasain, A. Borning, E. Fortuna, R. E. Ladner, and J. O. Wobbrock, "Enhancing Independence and Safety for Blind and Deaf-Blind Public Transit Riders," in Proc. 2011 Annual Conference on Human Factors in Computing Systems (CHI '11), 2011, pp. 3247-3256.

F. Gaunet and X. Briffault, "Exploring the Functional Specifications of a Localized Wayfinding Verbal Aid for Blind Pedestrians: Simple and Structured Urban Areas," Human-Computer Interaction, vol. 20, pp. 267-314, 2005.

BlindSquare Inc., "Press," 2015. Retrieved August 21, 2015, from http://blindsquare.com/press/.

M. Hirose and T. Amemiya, "Wearable Finger-Braille Interface for Navigation of Deaf-Blind in Ubiquitous Barrier-Free Space," in Proc. 10th International Conference on Human-Computer Interaction, vol. 4, pp. 1417-1421, Crete, Greece, June 2003.

H. Nicolau, J. Jorge, and T. Guerreiro, "Blobby: how to guide a blind person," in Proc. 27th International Conference Extended Abstracts on Human Factors in Computing Systems, 2009, pp. 3601-3606.

Wearable Finger-Braille Interface for Navigation of Deaf-Blind in Ubiquitous Barrier-Free Space. http://yehnan.blogspot.tw/2012/02/arduinoled.html

Apple Inc., "iBeacons Bible 1.0," 2013. Retrieved August 21, 2015, from https://meetingofideas.files.wordpress.com/2013/12/ibeacons-bible-1-0.pdf.

W. H. Jacobson, The Art and Science of Teaching Orientation and Mobility to Persons with Visual Impairments. New York, NY: American Foundation for the Blind, 1993.

… Cities Unlocked. http://innovation.mubaloo.com/news/microsoft-use-beacons-unlock-cities-blind/

H. Ye, M. Malu, U. Oh, and L. Findlater, "Current and future mobile and wearable device use by people with visual impairments," in Proc. 32nd Annual ACM Conference on Human Factors in Computing Systems (CHI '14), 2014, pp. 3123-3132.

GLIMNAVI: AN OUTDOOR NAVIGATION SERVICE FOR THE VISUALLY IMPAIRED SMARTPHONE USERS

I-Fang Wang¹, Hsuan-Eng Chen², Yi-Ying Lin¹, Chien-Hsing Chen², Neng-Hao Yu¹, Hsien-Hui Tang²

¹National Chengchi University, Taiwan
E-mail: 103753017@nccu.edu.tw, jonesyu@nccu.edu.tw

²National Taiwan University of Science and Technology, Taiwan
E-mail: drhhtang@mail.ntust.edu.tw

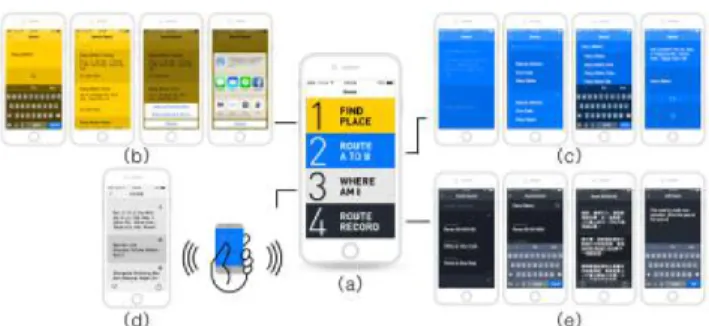

Fig. 1. GlimNavi is composed of two parts: 1) beacons provide micro-location information, alerting the user to corners and stores; 2) a smartphone app functions as a hub that translates micro-location information into route information.

ABSTRACT

Today, we can freely travel to unfamiliar places and enjoy the convenience of navigation features on mobile devices. However, most current apps are presented via visually oriented interfaces, depriving visually impaired smartphone users of the benefits of this technology. Statistics show that the visually impaired need to go out as frequently as sighted people, yet this tremendous demand for independence and mobility remains unmet. Therefore, we present a new system called GlimNavi that integrates knowledge obtained from O&M training with the micro-location information of iBeacon to provide a point-to-point outdoor navigation system for the visually impaired. We describe our iterative design process, including lessons learned through qualitative research methods (contextual inquiry, shadowing, and interviews with experts), and discuss our findings from deploying the app on test routes. With this system, the visually impaired are able to move about in the public domain independently with greater ease and confidence.

phone; Mobile app; Accessibility; Orientation and Mobility; User Experience; Micro-location

1. INTRODUCTION

According to the WHO (2014), there are approximately 285 million visually impaired people worldwide. Most of them go out in public independently, whether by necessity or by choice. According to a survey by the Department of Statistics in Taiwan, as many as 58.09% of the visually impaired population go out daily, so there is clear demand for a navigation app designed for them. Traditionally, when the visually impaired go out independently, they carry a white cane to maintain balance and to perceive their surroundings, for example to avoid obstacles on the street. Some rely on guide tiles to make sure they are on a safe path, and some have a guide dog to lead the way to familiar places. However, these existing aids solve only a small part of their outing needs. To our knowledge, no complete solution takes into account the entire process of going out.

In the course of this study, we divided the general process by which the visually impaired prepare for and engage in an outing into three stages. The first is the preparation stage, in which they search for information about their destination, obtaining addresses and contact information either via the Internet or directly from others. As they acquire information about their route, they tend to go through it mentally to familiarize themselves with the path they must take.

The second stage is actually going out on the streets. In order to move within the public domain independently, the visually impaired must first acquire the fundamental skills of Orientation and Mobility (O&M). The success of this stage is based upon their O&M training, the visually impaired are

course a totally different process from that which the majority uses that is dependent upon visual cues.

The third stage is post-journey. If a destination is a place they will visit frequently, the visually impaired may need to ask for additional O&M training. This service provides an instructor who leads them through the entire route so they can memorize all the details needed for tracking cues. If it is a one-time destination, they can only ask relatives or friends for help. We examine the process of the entire journey through qualitative studies and identify an unsolved problem to address in the later design.

Nowadays, the smartphone has become a daily necessity, and not only for sighted people: a growing number of visually impaired people also use smartphones. With screen readers such as VoiceOver on iOS and TalkBack on Android, the visually impaired can access content and use a smartphone without sight. However, they find existing navigation apps frustrating for two reasons. First, visually oriented interfaces designed for sighted people give the visually impaired a poor interaction flow. Second, the apps lack finer location information, such as a particular side of a crossroad, sidewalks, and driveway entrances. GPS positioning alone cannot provide such information precisely.

For the first point, we conducted qualitative research to learn what problems the visually impaired encounter when using apps and how they manage to use smartphones smoothly. For the second point, we note that iBeacon technology has gradually been adopted in shops, malls, and similar venues. Unlike GPS, which is used for car navigation, iBeacon provides micro-location information that can distinguish the different corners of a crosswalk. Based on our research, a well-planned deployment of iBeacons can turn a 2D map into a linear point-to-point route, which can lower the cognitive load for the visually impaired.
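A common way to turn an iBeacon's RSSI into a rough range is the log-distance path-loss model, d = 10^((P1m - RSSI) / (10n)), where P1m is the expected RSSI at 1 m and n is the path-loss exponent. The sketch below is an assumption, not GlimNavi's method. The values -59 dBm and n = 2.0 are placeholders that would need calibration per beacon and site, and the function name is hypothetical.

```python
from typing import Optional


def estimate_distance(rssi: int, measured_power: int = -59,
                      path_loss_exponent: float = 2.0) -> Optional[float]:
    """Rough distance in metres from the log-distance path-loss model.

    measured_power: expected RSSI at 1 m (placeholder assumption).
    path_loss_exponent: 2.0 corresponds to free space (placeholder assumption).
    """
    if rssi == 0:
        # Core Location reports an RSSI of 0 when the signal strength could not be determined.
        return None
    return 10 ** ((measured_power - rssi) / (10 * path_loss_exponent))


if __name__ == "__main__":
    for reading in (-59, -69, -79, 0):
        print(reading, estimate_distance(reading))
```

Because RSSI fluctuates strongly outdoors, a raw estimate like this would normally be smoothed (for example with the Kalman filter described in the report) or reduced to coarse proximity zones before deciding which street corner the user is at.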

To summarize, our research questions are: 1) how to provide a simple and friendly user interface for the visually impaired throughout the whole process of going out; 2) how to use iBeacons properly for outdoor navigation by the visually impaired; and 3) what rules to follow when deploying iBeacons, and how to provide useful and clear navigation messages while users are on the road.

In this paper, we present an iterative design process with three in-depth studies and tests. Overall, the contributions of this paper are: 1) we present the needs, challenges, and strategies of the visually impaired when going out, based on three qualitative studies; 2) we design a service called GlimNavi, composed of a navigation app and iBeacons, to provide usable on-road route information using micro-location information.

2. RELATED WORK

2.1. Wayfinding information for the Visually Impaired

According to relevant studies, with proper training the visually impaired can build a mental map in almost the same way as sighted people. However, abstract concepts such as numbers, measures of distance, or colors may be harder for them to understand [1]. Research also shows that the visually impaired are best served by itinerary descriptions as directional guides, because such descriptions are part of their O&M training. Gaunet [2] explains that in given scenarios the visually impaired need different kinds of information from sighted people, e.g.: 1) straight road sections; 2) intersection areas; 3) crossing roads; 4) walking in progress, with landmarks and special environmental clues. Gaunet also recommends that a navigation system provide guidance information within 5-10 meters. Both well-timed notifications and continuous reminders are necessary. Unlike sighted people, the visually impaired rely on advance planning when going out [3]. While walking on the street, their hands, ears, and attention are occupied, and they must concentrate intently. As a result, both the user interface and the functional design should be simple so that they do not distract users.
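The timing requirement above can be illustrated with a small trigger that speaks once when the next point comes within range. It re-arms only after the user has moved clearly away, so fluctuating distance estimates do not cause repeated announcements. The 10 m threshold takes the upper end of the 5-10 m range cited above. The 15 m re-arm distance and the class name are hypothetical, and this is not GlimNavi's documented logic.

```python
from dataclasses import dataclass


@dataclass
class AnnouncementTrigger:
    """Fire one announcement when a point comes within enter_m; re-arm beyond exit_m."""
    enter_m: float = 10.0  # upper end of the 5-10 m range cited above
    exit_m: float = 15.0   # hypothetical re-arm distance (hysteresis against RSSI jitter)
    armed: bool = True

    def __post_init__(self) -> None:
        if self.enter_m >= self.exit_m:
            raise ValueError("exit_m must be larger than enter_m")

    def update(self, distance_m: float) -> bool:
        """Return True exactly when an announcement should be spoken."""
        if self.armed and distance_m <= self.enter_m:
            self.armed = False
            return True
        if not self.armed and distance_m > self.exit_m:
            self.armed = True  # the user has left the area; allow the next announcement
        return False


if __name__ == "__main__":
    trigger = AnnouncementTrigger()
    print([trigger.update(d) for d in [20, 12, 9.5, 8, 11, 9, 16, 9]])
```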

2.2. Existing Technologies for Navigation

Navigation notifications en route fall into two types: voice and tactile. For voice notification, ISAS [3] uses open-ear headphones to present spatial POIs along the route, using an intersection-based rendering method instead of a distance-based one. In this way, visually impaired people can focus on one block at a time, reducing confusion and fatigue. Trekker is a GPS-based handheld device that verbally announces the names of streets, intersections, and landmarks as the user walks. Its large, distinctive buttons let visually impaired users easily get detailed descriptions and step-by-step instructions from start to end. For tactile notification, GoBraille [4] combines Braille devices and smartphones to provide public transportation information to the visually impaired. PocketNavigator [5] uses combinations of vibration lengths to tell the user which way to go, even when the phone is in a pocket. NavRadar [6] reduces the journey to only two directions, the present direction and the desired direction, indicated by one and two vibrations respectively. Inside buildings, Listen2dRoom [7] uses image recognition, and INSIGHT [8] provides navigation using Bluetooth and RFID technology. Recently, iBeacon has been widely used for indoor positioning and micro-location services. Compared with the aforementioned technologies, iBeacon can provide more precise location information with less effort.

2.3. Location-based Wayfinder Service

BlindSquare (http://blindsquare.com/indoor/) is a dedicated GPS app for the visually impaired. Using Foursquare and OpenStreetMap data, it describes the environment and announces points of interest and street intersections to the user en route. In May 2015, BlindSquare released a BPS (Beacon Positioning System) version that provides indoor navigation with iBeacons installed inside buildings. When GPS fails, BPS keeps visually impaired users aware of the surrounding landmarks. The ILSI project [9] chose a shopping mall in Helsinki as a test bed to evaluate the feasibility of the BlindSquare app with iBeacons. It used multi-sensory landmarks as an important on-road feature and proposed four levels of information mediated by iBeacons for wayfinding. We extend this idea to outdoor navigation, a general need of the visually impaired. We also attend to the entire outing experience, from the preparation stage to the post-journey stage.

3. PILOT STUDY

To gain insight into the challenges and issues faced by the visually impaired, we conducted three qualitative research procedures: contextual inquiry, shadowing, and an expert interview. These revealed their needs regarding mobile navigation and gave us a comprehensive understanding of their smartphone usage patterns. The following are notable facts and problems found with each method:

3.1. Contextual Inquiry

We conducted 30-minute interviews with 10 visually impaired informants, including 6 smartphone users. Interviewees were categorized according to 5 factors: 1) visual ability, 2) congenital or acquired cause, 3) age, 4) type of mobile phone, and 5) acceptance of technology. Each interviewee shared their experience of using smartphones and navigation-related apps, as well as their outing habits. In general, the congenitally blind, who often have better O&M skills, are more independent than those who became blind later in life. They are also much more willing to go to unfamiliar places on their own. Acquired blindness, usually caused by disease or accidents, forces these individuals to rebuild their lives without sight. They tend to feel insecure traveling to unfamiliar areas unaccompanied, so their mobility is more likely to be highly constrained. Regarding factors 3 to 5, older visually impaired individuals use smartphones less actively. Average users use their smartphones only for basic functions such as making phone calls, browsing the web, and chatting with friends. Active users are the

ing smartphones, eager to try or share new technological services. Interestingly, these informants share many habits and problems.

3.1.1. VoiceOver Usage Pattern

All 6 informants who use smartphones use VoiceOver, an iOS gesture-based screen reader. With VoiceOver enabled, a cursor appears on the screen, and users swipe or drag to move it from one object to another. The handset verbally tells the user the properties of the object under the cursor (e.g., button, title, text field) and which actions are available (number of taps, swipe directions, number of fingers involved, etc.). Each gesture has its own advantages. A swipe is more effective when a user needs to browse quickly between options or go through the entire screen. Dragging the cursor, with the finger kept in contact with the screen, is more suitable for exploration. VoiceOver thus gives rise to a distinctive smartphone usage pattern among the visually impaired.

3.1.2. Outing Habits

The visually impaired always go out with a specific purpose, for example going to work or class, getting food, or grocery shopping. Because they must prepare extensively before going out, any abrupt change to their well-planned daily schedule is unwelcome. They prefer not to go to unfamiliar places unaccompanied. If it is unavoidable, they carry the address and phone number of the destination in case they need to ask sighted people for help.

3.1.3. Search Process

The informants need to prepare in detail before an outing to an unfamiliar location. Since text input on mobile devices is less convenient than a physical keyboard, most of them prefer to search for information on a PC. Voice input is not difficult, but its word recognition is sometimes inaccurate, forcing users to correct the text by typing. In addition, voice input is inappropriate in certain contexts and occasions, so we kept keyboard input in our later version. In the search process, all websites and apps are designed for sighted users, and information is displayed in a way that is mainly accessible by sight, which greatly hinders visually impaired users. Sighted users can take in a large amount of information at a glance and quickly find what they seek. With such an interface, the visually impaired can locate information only slowly and painstakingly, often spending a great deal of time filtering out irrelevant messages before

hon-switch between different hierarchies, applications, or even devices. Such a desultory information search process results in a poor navigation experience for the visually impaired.

3.1.4. Single Visit to Unfamiliar Place

If a trip to somewhere unfamiliar is a one-time visit, our informants do not make the effort to memorize route details. Before setting off, they need to know only the means of transportation and roughly which roads or streets they will travel on. If there is any problem, they ask people along the way or call someone for help. Since the visually impaired can easily get assistance inside metro stations or on buses, the unsolved problem is navigating the walking segments between transit points.

3.1.5. Multiple Visits to Unfamiliar Place

If our informants need to begin visiting a new place regularly, for example after moving to a new apartment, getting a new job, or attending a new class, they spend a great deal of time and effort memorizing route details. To find out which cues they use to recognize streets and neighborhoods, we asked them to describe a familiar route to another visually impaired friend. Interestingly, they seldom mentioned addresses and street numbers. Their descriptions instead focused on other cues, such as stairs, walls, the smell of a bakery, and the sound of a grocery store's automatic doorbell. Sighted people probably do not even notice such details while walking, yet they are crucial landmarks for our informants. Perceived through the auditory, tactile, and olfactory senses, these cues tell them where they are and which way to go. Unlike sighted people, who memorize a 2D map, the visually impaired prefer linear point-to-point route information, which is easy to follow and lowers cognitive load. For the first few visits to a new location, they may request an O&M service in which a professional instructor guides them. Since there are often too many details to remember, they usually have to travel a route several times to memorize it. To sum up Sections 3.1.4 and 3.1.5, addresses and streets serve merely to recognize areas and general headings, while multiple non-visual sensory cues are used to identify precise directions and one's exact location.
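A linear point-to-point route can be represented as an ordered list of beacon IDs in which progress only moves forward. The sketch below is a hypothetical illustration: the names and the skip-ahead rule are assumptions, not GlimNavi's implementation. Repeated detections of beacons already passed are ignored, and if a beacon is missed, detecting a later one advances the route past it.

```python
from typing import Mapping, Optional, Sequence


class LinearRoute:
    """A route stored as an ordered list of beacon IDs; progress only moves forward."""

    def __init__(self, beacon_ids: Sequence[str], messages: Mapping[str, str]):
        self.beacon_ids = list(beacon_ids)
        self.messages = dict(messages)
        self.next_index = 0  # position of the next beacon not yet reached

    def on_beacon(self, beacon_id: str) -> Optional[str]:
        """Return the message for a newly reached beacon, or None for repeats and strays."""
        remaining = self.beacon_ids[self.next_index:]
        if beacon_id not in remaining:
            return None  # already passed, or not part of this route
        # Jump past any beacons that were missed so progress never goes backwards.
        self.next_index += remaining.index(beacon_id) + 1
        return self.messages.get(beacon_id, "")

    @property
    def finished(self) -> bool:
        return self.next_index == len(self.beacon_ids)


if __name__ == "__main__":
    route = LinearRoute(["01", "02", "03"], {"01": "…", "02": "…", "03": "…"})
    for seen in ["01", "01", "03"]:
        print(seen, route.on_beacon(seen), route.finished)
```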

3.2. Shadowing

After the interviews, we followed 5 of our interviewees on the streets to see exactly what they were dealing with. Our findings are as follows.

3.2.1. Transportation Preferences

methods. Some prefer Taipei's MRT (Mass Rapid Transit), a light-rail and subway system serving much of Taipei, over public buses because they can always get assistance from MRT station staff. Others prefer buses, since bus stops are more numerous and often closer to their destinations, which can also mean less transfer time or a shorter walk. The final decision is a trade-off among several factors.

3.2.2. Other Outing Habits

While traveling in unfamiliar areas, our informants ask passers-by for directions to make sure they are heading the right way. MRT station exits and bus stops are often used as meeting points. They also avoid going out late at night, because identifying directions is difficult when the surroundings are relatively quiet: a normally busy thoroughfare may be unrecognizable without traffic.

3.2.3. Crosswalk Intersections

Crossing an intersection challenges the auditory sense and requires more advanced O&M skills. However, it is a must-learn scenario if the visually impaired are to move about independently. To cross, one must know where to start, when to cross, and how much time is available. First, the crosswalk must be located by listening to traffic alternately stopping and moving, which can be identified from engine noise. This is easier because crosswalks in Taiwan are often adjacent to the areas at intersections reserved for motorcyclists waiting at traffic lights. Next, listening to the stream of traffic tells a visually impaired pedestrian whether it is flowing parallel or perpendicular to their direction. Once they find the crosswalk, they must wait, listening and estimating the crossing time as traffic goes through one full signal cycle. In reality, achieving this without visual cues is considerably difficult. Not every visually impaired person can choose the correct heading with 100% accuracy and cross perfectly, and there is a chance of walking into traffic, which is extremely dangerous. Therefore, the safest way to cross is to seek assistance and follow someone walking in the same direction.

3.2.4. Getting Lost

Our informants get lost quite often, even in familiar places or on known routes, because even a small distraction can cause them to lose their heading. When that happens, their first reaction is to confirm their current location and then return to the last point of which they were certain. Because they habitually confirm directions along the way, the point at which they became lost is usually not far from their original route. This method also has the advantage of not having to adopt a new route. Only when they realize that they are far from their original route, or already comparatively close to their destination, do they try to work out and embark upon a new route.

3.2.5. Smartphone interactions while walking

Most of our informants keep their smartphone in a coat or pants pocket or in a bag while walking. Their white cane already occupies one hand, and the other hand must stay free to deal with sudden incidents or obstacles. If they must use their phone, they stop walking to do so; they use it while walking only when they need it to help find the way. All our informants expressed a preference for using headphones. Some use bone-conduction headphones or wear an earbud in only one ear, keeping the other ear entirely free to listen to their surroundings.

3.3. Expert Interview

We also interviewed an O&M instructor with 9 years of experience, who showed us how a professional trains a visually impaired person to walk safely and effectively without sight. She explained the skills required for traveling alone and how visually impaired people filter information while they walk. As mentioned previously, such O&M service is provided only for learning a new daily route or living area. First, the instructor carries out her own on-site survey and identifies the non-visual sensory cues that can act as mileposts along the route. The same route is perceived somewhat differently by each individual, depending on their sensory abilities; nonetheless, some cues are so obvious that everyone ought to notice them, and these are the cues the instructor relies on. The instructor verbally describes the route to the trainee so that they can form a rough concept of it even before the walking session starts. However, with so many details to remember, both the instructor and our interviewees confirmed that an unfamiliar route may need to be walked two to three times before it is fully memorized.

4. THE DESIGN OF GLIMNAVI SERVICE

From the interviews with our study participants, we categorized all the problems faced by potential users into 8 distinct scenarios and found that the “outdoor walking” scenario is where help is most needed. So we narrowed our focus to the

Fig. 2. (a) GlimNavi home screen: a table view structure makes the user more focused on their current task. (b) Feature 1: Search for Phone Numbers and Addresses. (c) Feature 2: Route A to B. (d) Feature 3: Where Am I? (Current location) (e) Feature 4: Route Record.

addresses, phone numbers, and modes of transportation to the destination are essential before setting off.

4.1. The Design of the App

The four features of GlimNavi are therefore: 1) Find Place, 2) Route A to B, 3) Where Am I?, and 4) Route Record. This mirrors the process our visually impaired informants follow when visiting an unfamiliar place. Based on these explicit requirements and on the principles of designing apps for the visually impaired, we developed the structure of each screen and the detailed interactions that connect the entire flow. The following is a point-by-point description of the design context of each feature.

Home Screen. The home screen plays an important role when users first engage with our app. When designing the GlimNavi home screen, we chose a table view structure as the primary interface because it is easy to navigate with VoiceOver and finger-dragging operation. In our later test, the table view structure did encourage users to focus on their current task. In addition, all four items are designed in high-contrast colors so that people with low vision can quickly recognize the function they need.

Feature 1: Find Place. To test what kind of information is necessary for traveling to an unfamiliar place, we asked our study participants to find a means of transportation to a coffee shop they did not know. They usually started with a keyword search. The problem is that they must spend much time filtering out a large volume of irrelevant information on the search result page before obtaining the address and phone number they seek. After they finally obtain that information, they must copy the address into a map application. Unfortunately, VoiceOver’s copy

type those words into the text field of the map application. If the address is too long to remember easily, they might need to switch back and forth between the browser and the map app several times. In addition, keyboard input with VoiceOver is rather slow, and the Voice Input function is not always accurate. Either way, it is a tedious and frustrating task. This explains why a well-planned daily itinerary is so valuable to users, given the work required to produce it, and why they resist changing or revising that plan. Our design therefore aims to simplify this complex procedure and to remove or reduce the need to switch between apps and screens, providing a seamless in-app experience that does not interrupt the user flow. On the search results screen, double-tapping the phone number initiates a direct call, while double-tapping the address takes the user straight to Feature 2: Route A to B.

Feature 2: Route A to B is the most complicated feature of GlimNavi. We encountered many information display problems when designing its task flow. The search results for modes of transportation contain detailed information at several levels, and the challenge is how to present all of it on the screen. Our final solution is a time-sequential usage pattern that provides the right information at the appropriate time by separating it onto different screens. For example, the information for four modes of transportation is distributed among three hierarchies. At the first level, users naturally want to choose one of the available options, so the design should help them browse quickly between options; at this point they only need to know which kinds of vehicles are involved and how long each mode takes. Once that decision has been made, users are led to the second level, where they want more detailed information about their choice, so this screen provides an itinerary outline with step-by-step route details. While in transit, users can engage the Navigation Mode. At this stage, a user just needs to follow the instructions provided and be guided without looking at the screen. GlimNavi therefore shows only the instruction for the user’s current location, which is essentially a description of the current surroundings that helps the user locate themselves and identify which direction to go. This navigation content strongly differentiates GlimNavi from other navigation services.
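The three-level presentation described above can be illustrated with a minimal Python sketch. It is not GlimNavi’s implementation (the paper does not describe the app’s code); the class names `Step`, `RouteOption`, and `NavigationMode` and all example data are hypothetical. Level 1 exposes only the vehicles and travel time, level 2 the step-by-step itinerary, and level 3 only the instruction for the current step.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    instruction: str  # what to do at this point, e.g. "Walk to Exit 2"


@dataclass
class RouteOption:
    vehicles: List[str]   # kinds of vehicles involved, e.g. ["MRT", "Bus"]
    minutes: int          # total travel time for this mode
    steps: List[Step] = field(default_factory=list)

    def summary(self) -> str:
        # Level 1: only vehicles and time, so options can be compared quickly.
        return f"{' then '.join(self.vehicles)}, {self.minutes} minutes"

    def itinerary(self) -> List[str]:
        # Level 2: step-by-step outline of the chosen option.
        return [f"Step {i}: {s.instruction}" for i, s in enumerate(self.steps, start=1)]


class NavigationMode:
    """Level 3: expose only the instruction for the user's current step."""

    def __init__(self, option: RouteOption):
        self.option = option
        self.index = 0

    def current(self) -> str:
        return self.option.steps[self.index].instruction

    def advance(self) -> bool:
        # Move to the next step; returns False once the last step is reached.
        if self.index + 1 < len(self.option.steps):
            self.index += 1
            return True
        return False
```

Keeping each level as a separate method mirrors the idea that each screen shows only what the user needs at that moment; in Navigation Mode, a screen reader would read only `current()`.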

Feature 3: Where Am I? It is possible to get lost even on a familiar route, and this feature is designed for that scenario: whenever users feel uncertain or lost, they can simply shake the phone. GlimNavi then provides not only the current address but also the location of the nearest subway station or bus stop. In addition, users can share the address with others if they need further help.
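The paper does not specify how the nearest station or stop is found. One simple approach, assumed here purely for illustration, is to compute great-circle (haversine) distances from the current GPS position to a locally stored list of stops. The stop format `(name, latitude, longitude)`, the function names, and the example stops are hypothetical.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_stop(lat: float, lon: float,
                 stops: List[Tuple[str, float, float]]) -> Tuple[str, float]:
    """Return (name, distance in meters) of the stop closest to the user."""
    best = min(stops, key=lambda s: haversine_m(lat, lon, s[1], s[2]))
    return best[0], haversine_m(lat, lon, best[1], best[2])


# Hypothetical usage: the first stop is 0.001 degrees of latitude away.
name, dist = nearest_stop(25.0, 121.5, [("Stop A", 25.001, 121.5),
                                        ("Stop B", 25.05, 121.55)])
# name == "Stop A", dist is about 111 m
```

A linear scan is adequate for a small neighborhood list; a real deployment with many stops would more likely query a transit data service or a spatial index.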

Feature 4: Route Record. The app automatically records the route taken by a user. A special feature of this function is that it lets users record their memories of the crucial cues that allowed them to find their own way, such as a memorable sound or smell that told them they were on the correct path. The next time the user passes the same spot, a customized navigation message written by the user reminds them of that cue. This feature also allows users to share these cues and experiences with other visually impaired users and friends.
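As a hedged sketch of how user-written cues might be attached to recorded locations, the following assumes each spot on a route is identified by a beacon ID (an assumption; the paper does not say how locations are keyed). `RouteRecord` and its methods are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class RouteRecord:
    # Ordered list of beacon IDs passed (hypothetical identifiers).
    visited: List[str] = field(default_factory=list)
    # User-written cue notes, keyed by beacon ID.
    cues: Dict[str, str] = field(default_factory=dict)

    def log_visit(self, beacon_id: str) -> Optional[str]:
        """Record that the user passed this spot; return their cue if one exists."""
        self.visited.append(beacon_id)
        return self.cues.get(beacon_id)

    def add_cue(self, beacon_id: str, note: str) -> None:
        """Attach or replace the user's own reminder for this spot."""
        self.cues[beacon_id] = note

    def export_cues(self) -> Dict[str, str]:
        """Return a copy of the cues, e.g. for sharing with other users."""
        return dict(self.cues)
```

Exporting a copy rather than the internal dictionary keeps a shared set of cues from being modified by the recipient.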

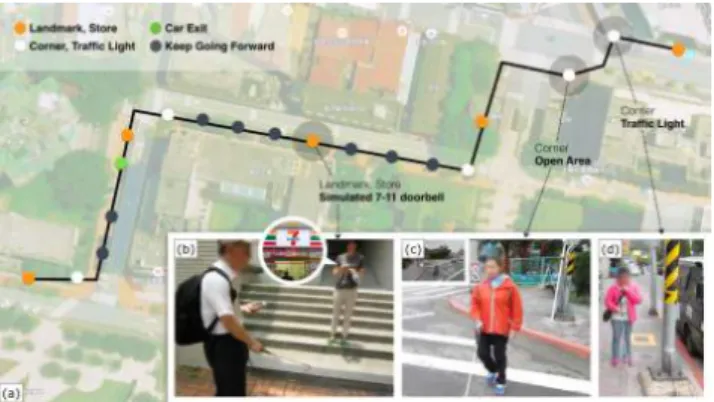

4.2. Rules for Placing Beacons

Based on the results of our pilot study, we concluded that four kinds of areas need Beacons: 1) Fixed-distance markers on straight roads: a “continue straight” message is delivered every 10 meters to enhance confidence and safety while traveling. 2) Crossroads and reference points: to help visually impaired people cross the street, GlimNavi guides users to a reference point, such as a traffic light, so that they can align their orientation with the crosswalk. 3) Dangerous places, such as lane entrances. 4) Stores and landmarks with multi-sensory clues: lacking vision, VI people recognize roads by auditory, olfactory, or tactile clues, such as car noise, a doorbell, or the smell of food, that they sense during the journey. GlimNavi informs users of specific landmarks and lets VI users build their own mental maps. In addition, because Bluetooth signals are vulnerable to interference, each iBeacon should be calibrated to ensure that users receive every corresponding message.
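A minimal sketch of the fixed-distance rule, assuming a straight segment whose start already carries a crossroad or landmark beacon: it lists the offsets at which “continue straight” beacons would be placed every 10 meters. The `BeaconType` enumeration simply names the four kinds of areas above; both it and `fixed_distance_markers` are illustrative, not part of GlimNavi, and the interval is kept as a parameter rather than hard-coded.

```python
from enum import Enum
from typing import List


class BeaconType(Enum):
    FIXED_DISTANCE = "fixed-distance marker on a straight road"
    CROSSROAD_REFERENCE = "crossroad or reference point"
    DANGER = "dangerous place, e.g. a lane entrance"
    LANDMARK = "store or landmark with multi-sensory clues"


def fixed_distance_markers(segment_length_m: float, interval_m: float = 10.0) -> List[float]:
    """Offsets (meters from the segment start) for 'continue straight' beacons.

    A marker is placed every interval_m meters, skipping the start point
    (assumed to have its own beacon) and never going past the segment end.
    """
    if interval_m <= 0:
        raise ValueError("interval_m must be positive")
    positions: List[float] = []
    k = 1
    while k * interval_m <= segment_length_m:
        positions.append(k * interval_m)
        k += 1
    return positions
```

For a 35-meter straight segment, the sketch yields markers at 10, 20, and 30 meters; each would still need on-site calibration because of Bluetooth interference.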

4.3. Navigation Message

Based on the insights from the pilot study, we derived the following essential rules for navigation messages. The user receives a long vibration upon entering a beacon’s proximity range of 1-3 meters, followed by the voice navigation message after a 1-second interval. The navigation content incorporates O&M training experience to provide the tracking information users need most. O’clock positions are used to guide users in open spaces, reducing confusion and the chance of getting lost. Each navigation description includes three parts: 1) The action to take, such as making a turn, keeping straight, or looking for a reference point that helps users cross the street. 2) The environmental description, including landmarks and stores and the multi-sensory clues associated with those places; for example, car noise, a doorbell, or the smell of food can be the key for visually impaired persons to memorize a specific landmark. 3) The next step to take, which gives users time to prepare for the coming action after they have completed the current one. A complete navigation message reads as follows: “Please confirm the traffic light is on your 3 o’clock position, then cross the street. Turn left after you cross the street.”
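The message rules above can be sketched in Python as follows. This is an illustration under stated assumptions, not the app’s code: the target’s bearing relative to the user’s facing direction is assumed to be known (for example, from the phone’s compass), `TRIGGER_RANGE_M` takes the upper end of the 1-3 meter range, and all function names are hypothetical. Each o’clock hour spans 30 degrees, so a bearing of 90 degrees to the right maps to 3 o’clock.

```python
from typing import List, Tuple

VIBRATION_TO_VOICE_DELAY_S = 1.0  # voice starts 1 s after the vibration (from the text)
TRIGGER_RANGE_M = 3.0             # assumed: upper end of the 1-3 m proximity range


def clock_position(relative_bearing_deg: float) -> int:
    """Map a clockwise bearing relative to the user's facing direction to an
    o'clock position: 0 deg -> 12, 90 deg -> 3, 270 deg -> 9."""
    hour = round((relative_bearing_deg % 360) / 30) % 12
    return 12 if hour == 0 else hour


def compose_message(action: str, environment: str, next_step: str) -> str:
    """Join the three message parts in order, skipping any that are empty."""
    return " ".join(part for part in (action, environment, next_step) if part)


def on_beacon_range(distance_m: float, message: str) -> List[Tuple[float, str]]:
    """Timeline of (seconds after entering range, event) for one beacon."""
    if distance_m > TRIGGER_RANGE_M:
        return []  # not close enough yet: no feedback
    return [(0.0, "long_vibration"),
            (VIBRATION_TO_VOICE_DELAY_S, f"speak: {message}")]
```

Vibrating before speaking matches the rule that the user should notice a message is coming before the voice starts; how distance is estimated from the Bluetooth signal is left open here.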

Fig. 3. The test route inside the campus includes 19 iBeacon landmarks (a) and covers the four kinds of areas described in the previous section, such as stores and landmarks with multi-sensory clues (b) and crossroads and reference points (c)(d).

5. USER STUDY

After Study 1, we redesigned the GlimNavi app and set up a test route inside the campus. For safety reasons, we simulated real environmental conditions in this field setting. The test route contains the four kinds of areas described in the previous section (Figure 3a). Because there are no stores or landmarks with multi-sensory clues on the chosen campus route, we had a tester play a doorbell sound to emulate a convenience store (Figure 3b). The whole route includes 19 iBeacon landmarks (Figure 3a). Whenever users entered the proximity range of an iBeacon, they received a long vibration, followed by the voice message after a 1-second interval. To solve the crosswalk-finding problem, we redesigned the navigation message at each corner and crosswalk. The message asks the visually impaired user to locate a nearby reference point and then walk straight to cross the street. The reference point can be a traffic light located at one end of the crosswalk; once users find it, they can be sure they are in position to cross the street. We invited participants with different levels of involvement in technology in the hope of obtaining more in-depth feedback.

5.1. Study Design

The testing process consists of three parts that follow the real-life process of going out: 1) Interface usability study (preparation at home). To evaluate the usability of the app interface, users were asked to use GlimNavi to search for a specific destination and to use the route planning function. 2) On-road navigation testing (on the road). In this part, we wanted to know whether the messages delivered by GlimNavi helped, and how users fared when looking for a reference point to cross the road. 3) In-depth interviews (after going home). Upon arriving at the destination, users were to use the history function to

5.2. Participants

We invited six visually impaired smartphone users. All participants were familiar with the iPhone and its VoiceOver accessibility function and had the independent orientation and mobility skills needed to go out on their own. Three of them were experts with a good understanding of science and technology.

5.3. Lessons Learned

In general, all participants were able to use GlimNavi’s search and route planning functions, and they gave the following useful advice:

Fixed-distance marker on straight road

Every user liked this kind of reminder, because they sometimes do not know how far they have walked during the trip. Some suggested that the marker distance in meters should be user-definable so that the reminders do not bother users too much.

Finding a reference point to cross streets

Although a reference point can help users find the crosswalk, the message might confuse them. For example: “Please confirm the traffic light is on your 3 o’clock position, then cross the street. Turn left after you cross the street.” Some participants lost their original heading after finding the reference point and treated anything on their right-hand side as the 3 o’clock position. Consequently, only one user crossed the street correctly. To address this problem, the guiding approach should be redesigned or supplemented with additional aids.

Vibration feedback

According to the interviews, blind smartphone users often do not notice when a voice message has begun to play, because existing map services do not vibrate beforehand, which leads to a high chance of hearing incomplete messages. Many of our participants said that vibrating before a voice message is a simple but considerate design that effectively solves the problem of missed messages.

Stores and landmarks with multi-sensory clues

All participants noticed the doorbell sound played by our tester and felt certain and confident. However, they all knew the convenience store was not real because they had neither stepped on a carpet nor felt the air conditioning. This shows that different people may perceive or recognize the same place in different ways. Therefore, as long as GlimNavi announces that a specific landmark is coming up, visually impaired users can form their own associations with that place and prepare for it.

Replay function

During the test, the single-click replay function was used very frequently when participants encountered noisy road conditions or long messages. Users replayed messages 10 times on average, and 4 times in a specific area. All the

Other feedback

All participants found the trailing messages helpful for telling them which side of the road is relatively safe. However, none of the 6 participants used the Where Am I function, because they were walking within an area covered by beacons. The participants reported that they would use this function when they get lost or do not receive a beacon message. Therefore, GlimNavi should use GPS to report the current location when no beacon is nearby.
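The participants’ suggestion of falling back to GPS when no beacon is nearby could be expressed as a simple selection rule. The sketch below is hypothetical: `Fix`, `where_am_i`, and the 10-second freshness threshold for a beacon reading are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fix:
    source: str       # "beacon" or "gps"
    description: str  # address or landmark description to read aloud


def where_am_i(beacon_fix: Optional[Fix], beacon_age_s: float,
               gps_fix: Optional[Fix], max_beacon_age_s: float = 10.0) -> Optional[Fix]:
    """Prefer a recent beacon-based location; otherwise fall back to GPS.

    Returns None only when neither source is available.
    """
    if beacon_fix is not None and beacon_age_s <= max_beacon_age_s:
        return beacon_fix
    return gps_fix
```

Beacon readings are preferred because they carry the curated, O&M-style descriptions, while GPS only supplies an approximate address.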

6. LIMITATION AND FUTURE WORK

We do not expect visually impaired users to use our service to go out without training. Instead, users should have basic O&M skills and on-road experience, because our navigation content builds on O&M training principles to provide the tracking information they need most to stay safe on the road. This is a basic requirement for our users and should not be eliminated in the future. While walking on the street, the visually impaired participants also reported two unsolved needs: 1) knowing when it is safe to cross at a crosswalk, and 2) precise indication of obstacles on the road, especially parked motorcycles and plant pots. Not every traffic light has audible signals indicating the green light for each direction; in such cases, IoT technology could be used to broadcast the signal state to the smartphone. Adding a sonar sensor to the white cane could also provide alerts about surrounding obstacles. We envision these as future work.

7. CONCLUSION

Going out independently is a general need of visually impaired people, yet it has not been fully addressed by previous work. We focused on the entire outing journey and divided the outing experience into three stages: pre-journey, on-road, and post-journey. Three qualitative studies were used to identify the proper intervention of technologies. We designed a mobile app that contains the essential functions for going out. Its user interface has a clear structure and an easy-to-navigate interaction flow. We use iBeacons to provide micro-location information; they can be attached at store entrances, crossroads, corners, and dangerous places such as lane entrances. The navigation messages are designed according to O&M principles to provide clear information that helps visually impaired users track the route. In summary, by using a smartphone with micro-location technology, visually impaired people can arrive at their destination properly and safely, and their ability and willingness to function independently in the public domain are enhanced. Our vision is to build a VI-friendly city. Although more effort is needed to improve our system and deploy it in real spaces, we look forward to making it happen in the future.

This study was supported by the National Science Council, Taiwan, under grant MOST-104-2218-E-004-002.

REFERENCES

[1] Zaira Cattaneo, Tomaso Vecchi, Cesare Cornoldi, Irene Mammarella, Daniela Bonino, Emiliano Ricciardi, and Pietro Pietrini, “Imagery and spatial processes in blind-ness and visual impairment,” 2008.

[2] Florence Gaunet and Xavier Briffault, “Exploring the Functional Specifications of a Localized Wayfinding Ver-bal Aid for Blind Pedestrians: Simple and Structured Ur-ban Areas,” HumanComputer Interaction, vol. 20, no. 3, pp. 267–314, 2005.

[3] Hanlu Ye, Meethu Malu, Uran Oh, and Leah Findlater, “Current and future mobile and wearable device use by people with visual impairments,” Proceedings of the 32nd annual ACM conference on Human factors in computing systems - CHI ’14, pp. 3123–3132, 2014.

[4] Shiri Azenkot, Sanjana Prasain, Alan Borning, Emily Fortuna, Richard E. Ladner, and Jacob O. Wobbrock, “Enhancing Independence and Safety for Blind and Deaf-Blind Public Transit Riders,” Proceedings of the 2011 an-nual conference on Human factors in computing systems - CHI ’11, pp. 3247–3256, 2011.

[5] Martin Pielot, Benjamin Poppinga, and Susanne Boll, “PocketNavigator: Vibro-tactile waypoint navigation for everyday mobile devices.,” in MobileHCI 2010, 2010, pp. 423–426.

[6] Sonja R¨umelin, Enrico Rukzio, and Robert Hardy, “Navi-radar: A novel tactile information display for pedestrian navigation,” in Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA, 2011, UIST ’11, pp. 293–302, ACM.

[7] M Jeon and N Nazneen, “Listen2dRoom: helping blind individuals understand room layouts,” CHI’12 Extended . . . , pp. 1577–1582, 2012.

[8] Aura Ganz, Siddhesh Rajan Gandhi, Carole Wilson, and Gary Mullett, “INSIGHT: RFID and Bluetooth enabled automated space for the blind and visually impaired,” in 2010 Annual International Conference of the IEEE En-gineering in Medicine and Biology Society, EMBC’10, 2010, pp. 331–334.

[9] Merja Saarela, “Solving way-finding challenges of a visually impaired person in a shopping mall by strengthening landmarks recognisabil-ity with iBeacons,” http://www.hamk.fi/ english/collaboration-and-research/ smart-services/matec/Documents/