Verifying Fuzzy Domain Theories Using ...

Hahn-Ming Lee*, Jyh-Ming Chen, and En-Chieh Chang

Department of Electronic Engineering
National Taiwan Institute of Technology, Taipei, Taiwan

E-mail: hmlee@et.ntit.edu.tw

Abstract

In this paper, we propose a fuzzy neural network model that processes trapezoid fuzzy inputs. We call it the Knowledge-Based Neural Network with Trapezoid Fuzzy Set (KBNN/TFS). Besides revising fuzzy rules, the model can verify and generate them. Fuzzy information is represented with LR-type fuzzy intervals. Imperfect domain theories can be translated directly into the KBNN/TFS structure and then revised by neural learning. We also propose a consistency checking algorithm that verifies both the initial knowledge and the revised fuzzy rules. The algorithm finds redundant, conflicting and subsumed rules in a fuzzy rule base. We demonstrate the model on the Knowledge Base Evaluator (KBE). The results show that the algorithm detects the inconsistencies in KBNN/TFS. After the inconsistencies are removed and a rule insertion mechanism is applied, the final learning error is much lower. In addition, the resulting fuzzy rule base is consistent.

1. Introduction

In this paper, we extend our previous work, KBFNN [9, 10], so that it can process trapezoid fuzzy inputs. We name the new model the Knowledge-Based Neural Network with Trapezoid Fuzzy Set, or KBNN/TFS for short.

Besides revising fuzzy rules, the new model can verify and generate them. In a symbolic rule base, rule verification can be done by pattern matching between the premises and the goal clauses [1,11,13]. Knowledge-based neural networks with symbolic inputs, such as [3,4,6], often rely on weight-vector clustering and heuristics to avoid generating inconsistent rules. In [6], redundancy is checked by testing whether each antecedent of a rule can be removed from it. In [3], weight vectors are clustered so that redundant rules are not generated.

In addition, [3] uses a consistent-shift algorithm to detect inconsistent connections in the neural network. In [4], three KT heuristics remove the inconsistencies from the neural network. For fuzzy rule verification, we propose a fuzzy rule clustering method. It finds the inconsistencies in a fuzzy rule base, namely redundant, conflicting and subsumed rules.

Rule generation translates the KBNN/TFS structure and its fuzzy weights into fuzzy rules with certainty factors.

* Correspondence to this author.

2. A Knowledge-Based Neural Network with Trapezoid Fuzzy Set

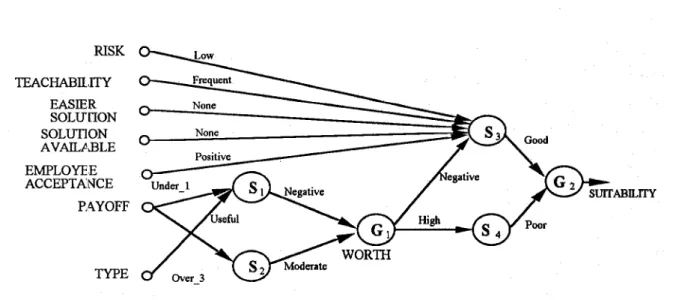

In KBNN/TFS, fuzzy rules are formed by S-neurons, G-neurons, and fuzzy weights. S-neurons compute the firing degree of fuzzy rules, and G-neurons derive the conclusions. Each input connection of an S-neuron represents one condition of a fuzzy rule. A rule's premise is therefore made up of all the input connections of one S-neuron. Each conclusion variable is represented by a G-neuron. For example, assume the existing fuzzy rules are as follows:

Rule 1: If PAYOFF is Under-1 and TYPE is Useful, then WORTH is Negative.

Rule 2: If PAYOFF is Over-3, then WORTH is Moderate.

Rule 3: If WORTH is Negative and EMPLOYEE ACCEPTANCE is Positive and SOLUTION AVAILABLE is None and EASIER SOLUTION is None and TEACHABILITY is Frequent and RISK is Low, then SUITABILITY is Good.

Rule 4: If WORTH is High, then SUITABILITY is Poor.

Fig. 1 shows the initial structure of KBNN/TFS generated from the fuzzy rules above. PAYOFF, TYPE, EMPLOYEE ACCEPTANCE, SOLUTION AVAILABLE, EASIER SOLUTION, TEACHABILITY, and RISK are input variables. SUITABILITY is the output, and WORTH is a hidden (intermediate) conclusion. The variables in these rules take linguistic fuzzy terms such as Useful, Difficult and High. These terms are used to initialize the connection weights of KBNN/TFS. For the learning algorithm, readers may refer to [10].
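The wiring described above can be sketched in a few lines of Python. This is a minimal sketch under our own assumptions. Each rule is stored as (variable, linguistic term) pairs, and the names `Rule` and `build_structure` are hypothetical. Only the topology is modeled: one S-neuron per rule and one G-neuron per conclusion variable. The fuzzy weights and the learning algorithm of [10] are left out. Applied to Rules 1 to 4, the sketch recovers the variable roles stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule record: (variable, term) premise pairs and one conclusion."""
    name: str
    premise: tuple      # ((variable, term), ...)
    conclusion: tuple   # (variable, term)


# Rules 1-4 from the text above.
RULES = [
    Rule("Rule 1", (("PAYOFF", "Under-1"), ("TYPE", "Useful")), ("WORTH", "Negative")),
    Rule("Rule 2", (("PAYOFF", "Over-3"),), ("WORTH", "Moderate")),
    Rule("Rule 3", (("WORTH", "Negative"), ("EMPLOYEE ACCEPTANCE", "Positive"),
                    ("SOLUTION AVAILABLE", "None"), ("EASIER SOLUTION", "None"),
                    ("TEACHABILITY", "Frequent"), ("RISK", "Low")),
         ("SUITABILITY", "Good")),
    Rule("Rule 4", (("WORTH", "High"),), ("SUITABILITY", "Poor")),
]


def build_structure(rules):
    """Hypothetical helper: topology only, no fuzzy weights or learning."""
    # Each S-neuron's input connections are the conditions of one rule's premise.
    s_neurons = {r.name: [var for var, _ in r.premise] for r in rules}
    # Each G-neuron collects the S-neurons of all rules that conclude its variable.
    g_neurons = {}
    for r in rules:
        g_neurons.setdefault(r.conclusion[0], []).append(r.name)
    premise_vars = {var for r in rules for var, _ in r.premise}
    conclusion_vars = set(g_neurons)
    roles = {
        "input": premise_vars - conclusion_vars,    # never concluded by any rule
        "hidden": premise_vars & conclusion_vars,   # concluded, then reused as a condition
        "output": conclusion_vars - premise_vars,   # concluded only
    }
    return s_neurons, g_neurons, roles


s_neurons, g_neurons, roles = build_structure(RULES)
print(sorted(roles["hidden"]), sorted(roles["output"]))  # ['WORTH'] ['SUITABILITY']
print(g_neurons["WORTH"])  # ['Rule 1', 'Rule 2']
```

A variable's role comes entirely from where it appears in the rules. WORTH is classified as hidden because it is both concluded (Rules 1 and 2) and used as a condition (Rules 3 and 4).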

3. Fuzzy rule verification and refinement

Before a knowledge base can produce satisfactory results, the initial knowledge must go through a number of refinement steps [7,12]. A knowledge-based neural network with learning ability is well suited to this task [2].

Combining the initial knowledge with neural learning from empirical data can greatly improve the performance of the knowledge base [3,5,14].

3.1 Rule verification

We propose a checking method to make sure the extracted rules are consistent. In [13], rule verification for symbolic rules is described as removing inconsistencies and incompleteness from the rule base. The inconsistencies may include:

• conflicting rules: two rules have the same premise but contradictory conclusions.

• redundant rules: two rules have the same premise and the same conclusion.

• subsumed rules: two rules both fire on particular inputs, but one has more conditions than the other. The rule with more conditions is said to be subsumed by the other.

Incompleteness in the rule base consists of:

• missing rules: in some cases no rule gives the desired result, which means that rules covering these cases are missing.

These definitions differ slightly from those used in other papers. Some papers, for example, also discuss circular rules. KBNN/TFS has a feed-forward structure with no links from output nodes back to input nodes, so circular rules cannot arise in it.
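For crisp symbolic rules, the three inconsistency types can be checked directly by comparing premises as sets of conditions. The sketch below is illustrative only. The helper names and the example rules A, B and C are hypothetical. It follows the definitions above literally, so the subsumption test compares premises only. It is not the fuzzy clustering method that KBNN/TFS uses for fuzzy premises.

```python
from itertools import combinations


def classify_pair(p1, c1, p2, c2):
    """Classify two crisp rules by the definitions above (hypothetical helper).

    p1, p2: frozensets of (variable, term) conditions; c1, c2: (variable, term).
    """
    if p1 == p2:
        if c1 == c2:
            return "redundant"
        if c1[0] == c2[0]:          # same conclusion variable, different term
            return "conflict"
        return None
    if p1 < p2 or p2 < p1:          # one premise is a proper subset of the other
        return "subsumed"
    return None


def find_inconsistencies(rules):
    """rules: list of (name, premise, conclusion); returns (kind, name_a, name_b).

    For "subsumed", name_a is the rule with more conditions (the subsumed one).
    """
    found = []
    for (n1, p1, c1), (n2, p2, c2) in combinations(rules, 2):
        kind = classify_pair(p1, c1, p2, c2)
        if kind == "subsumed" and len(p1) < len(p2):
            n1, n2 = n2, n1
        if kind:
            found.append((kind, n1, n2))
    return found


# Hypothetical rules, for illustration only.
example = [
    ("A", frozenset({("PAYOFF", "Over-3")}), ("WORTH", "Moderate")),
    ("B", frozenset({("PAYOFF", "Over-3")}), ("WORTH", "High")),
    ("C", frozenset({("PAYOFF", "Over-3"), ("TYPE", "Useful")}), ("WORTH", "Moderate")),
]
print(find_inconsistencies(example))
# [('conflict', 'A', 'B'), ('subsumed', 'C', 'A'), ('subsumed', 'C', 'B')]
```

Exact set comparison works only because crisp conditions either match or do not. With fuzzy premises, two conditions can be close without being identical, which is why a similarity-based procedure is needed instead.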

3.2 Consistency checking

To check a fuzzy rule base for inconsistencies, we examine the similarities between rule premises. The clustering procedure is as follows:

1. Set rule R1 as the center of rule cluster GC1.

2. For each rule R, find the group whose center GCj is closest to R. That is, S(R, GCj) is the largest of S(R, GCi), i = 1, 2, ..., Ng, where Ng is the number of groups.

3. If S(R, GCj) is greater than a predefined threshold, R belongs to group j. Otherwise, form a new group for R and go to step 2.

4. Add R to group j.

5. If dim(R) = dim(GCj), i.e., the two rules have the same attributes, suppose GCj and R contain n attributes. Each can be written as a list of attribute-property pairs. For R,

$$R = \{(att_1, prop_1), (att_2, prop_2), \ldots, (att_n, prop_n)\}$$

where att_n is the nth attribute of R and prop_n is its property, represented as an LR-type fuzzy number. As noted above, only the premises of rules are used when computing the similarity between rules. In this case, GCj needs to be adjusted. The new center of the rule cluster is

where Nr is the number of rules in rule cluster j.

6. If dim(R) < dim(GCj), assign R as the new center of group j.

7. If any rules do not yet belong to a group, go to step 2.
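The clustering procedure can be sketched as follows. The text does not specify several pieces here, so they are our own assumptions:

• the similarity measure `similarity`, which uses an L1 distance between LR parameter tuples, averaged over the union of attributes;
• the running-mean update used in step 5;
• the 4-tuple layout of LR fuzzy numbers;
• the example values.

The "go to step 2" loop is written as a single pass over the rules, which assigns every rule to a group. Rules that end up in the same cluster are the candidates to inspect for redundancy, conflict or subsumption.

```python
def lr_distance(p, q):
    """L1 distance between two LR fuzzy numbers stored as 4-tuples (assumed layout)."""
    return sum(abs(a - b) for a, b in zip(p, q))


def similarity(r, center):
    """Hypothetical premise similarity S(R, GC) in [0, 1].

    Shared attributes score 1 / (1 + distance); attributes present in only one
    premise score 0; the total is averaged over the union of attributes.
    """
    attrs = set(r) | set(center)
    shared = set(r) & set(center)
    return sum(1.0 / (1.0 + lr_distance(r[a], center[a])) for a in shared) / len(attrs)


def cluster_rules(rules, threshold):
    """rules: list of (name, premise) with premise = {attribute: LR tuple}."""
    clusters = []  # each cluster: {"center": premise dict, "members": [rule names]}
    for name, premise in rules:
        # Steps 1-2: find the most similar existing center (none for the first rule).
        best, best_s = None, -1.0
        for c in clusters:
            s = similarity(premise, c["center"])
            if s > best_s:
                best, best_s = c, s
        # Step 3: open a new group unless the best similarity exceeds the threshold.
        if best is None or best_s <= threshold:
            clusters.append({"center": dict(premise), "members": [name]})
            continue
        # Step 4: add R to group j.
        best["members"].append(name)
        n = len(best["members"])  # Nr, counting R
        if set(premise) == set(best["center"]):
            # Step 5 (assumed update): running mean of the LR parameters.
            best["center"] = {
                a: tuple(((n - 1) * g + x) / n for g, x in zip(best["center"][a], premise[a]))
                for a in premise
            }
        elif len(premise) < len(best["center"]):
            # Step 6: a rule with fewer attributes becomes the new center.
            best["center"] = dict(premise)
    return clusters


# Hypothetical LR values, for illustration only.
rules = [
    ("R1", {"PAYOFF": (0.8, 0.9, 0.1, 0.1)}),
    ("R2", {"PAYOFF": (0.8, 0.9, 0.1, 0.1)}),
    ("R3", {"PAYOFF": (0.1, 0.2, 0.05, 0.05), "TYPE": (0.7, 0.8, 0.1, 0.1)}),
    ("R4", {"PAYOFF": (0.1, 0.2, 0.05, 0.05)}),
]
for c in cluster_rules(rules, threshold=0.4):
    print(c["members"], c["center"])
```

In this example, R1 and R2 have identical premises and land in one cluster, so they are candidates for the redundancy or conflict check. R4 joins R3's cluster and replaces it as the center (step 6) because it has fewer attributes, which matches the subsumption pattern.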

3.3 Rule insertion

Clustering the rules and removing inconsistencies can help neural learning converge. However, if the network lacks the nodes and connections needed to represent the rule base, its performance may get stuck. This corresponds to missing rules in a symbolic rule base. To overcome this, we apply an insertion mechanism whenever performance gets stuck.

Intuitively, rule deletion and rule insertion can be combined. When inconsistent rules are found, we set the values of their premises to unknown instead of deleting them. The value of unknown is (0.5, 0.5, 0, 0). This lets the neural network learn new rules. If there is no inconsistency, or performance is still poor after this rule insertion, a more analytic method can be applied. The idea is to insert rules that cover the data for which the network cannot derive the desired outputs. The inserted rules therefore include the attributes and output concepts of the data that produce large errors. The fuzzy weights (values) of these new rules are set to unknown, and the revision algorithm learns them empirically.
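The two insertion strategies can be sketched as operations on a rule table. This is a minimal sketch that assumes rules are stored as {name: (premise, conclusion)}, with LR tuples as values. Only the unknown value (0.5, 0.5, 0, 0) comes from the text. The helper names, the error tolerance `tol`, and the LR values for High and Poor are hypothetical. The first function mirrors the kind of revision reported in the experiments, where the premise of an inconsistent rule becomes unknown. The second adds one rule with unknown weights for each distinct high-error pattern.

```python
UNKNOWN = (0.5, 0.5, 0.0, 0.0)  # value of "unknown" given in the text


def neutralize_inconsistent(rules, flagged):
    """Hypothetical helper: set the premise values of flagged rules to UNKNOWN.

    rules: {name: (premise, conclusion)}, premise = {attribute: LR tuple},
    conclusion = (variable, LR tuple). The input dict is not modified.
    """
    out = {}
    for name, (premise, conclusion) in rules.items():
        if name in flagged:
            premise = {attr: UNKNOWN for attr in premise}
        out[name] = (dict(premise), conclusion)
    return out


def insert_rules_for_errors(rules, samples, errors, tol):
    """Hypothetical helper: add one rule per distinct high-error pattern.

    samples: list of (attribute names, output variable); errors: per-sample error.
    Every fuzzy weight of an inserted rule starts at UNKNOWN for revision to learn.
    """
    out = dict(rules)
    seen = set()
    for (attrs, output_var), err in zip(samples, errors):
        key = (frozenset(attrs), output_var)
        if err <= tol or key in seen:
            continue
        seen.add(key)
        out[f"Inserted {len(seen)}"] = ({a: UNKNOWN for a in attrs}, (output_var, UNKNOWN))
    return out


# Hypothetical LR values for "High" and "Poor", for illustration only.
HIGH, POOR = (0.7, 0.8, 0.1, 0.1), (0.0, 0.2, 0.0, 0.1)
rules = {"Rule 4": ({"WORTH": HIGH}, ("SUITABILITY", POOR))}
revised = neutralize_inconsistent(rules, {"Rule 4"})
print(revised["Rule 4"])  # ({'WORTH': (0.5, 0.5, 0.0, 0.0)}, ('SUITABILITY', (0.0, 0.2, 0.0, 0.1)))

grown = insert_rules_for_errors(
    revised,
    samples=[(("PAYOFF", "TYPE"), "WORTH"), (("RISK",), "SUITABILITY")],
    errors=[0.2, 0.01],
    tol=0.05,
)
print(sorted(grown))  # ['Inserted 1', 'Rule 4']
```

Setting values to unknown rather than deleting rules keeps the rule's nodes and connections in the network. Gradient-based revision can then move the weights to new values.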

4. Experimental results

To evaluate the proposed model, we implement the Knowledge Base Evaluator (KBE) [8] in KBNN/TFS. KBE is an expert system that evaluates whether an expert system is suitable for a company.

Eight attributes, PAYOFF, PERCENT SOLUTION, TYPE, EMPLOYEE ACCEPTANCE, SOLUTION AVAILABLE, EASIER SOLUTION, TEACHABILITY, and RISK, are used to determine the output, SUITABILITY. An intermediate concept, WORTH, which is derived from the first three factors shown above, is employed. The imperfect KBE rules are used to initialize KBNN/TFS. A set of 200 training instances generated from the correct KBE rules is used to revise the imperfect rules. The learning rate for this example is 0.03.

RISK TEACHABILfITY

EASIER SOLTT[ION SOLUTION AVAILM3LE EMPLOYEE ACCEPTAINCE

P,i$YOFF

TYPE

Fig. 1. An initial structure of a KBNN/TFS example.

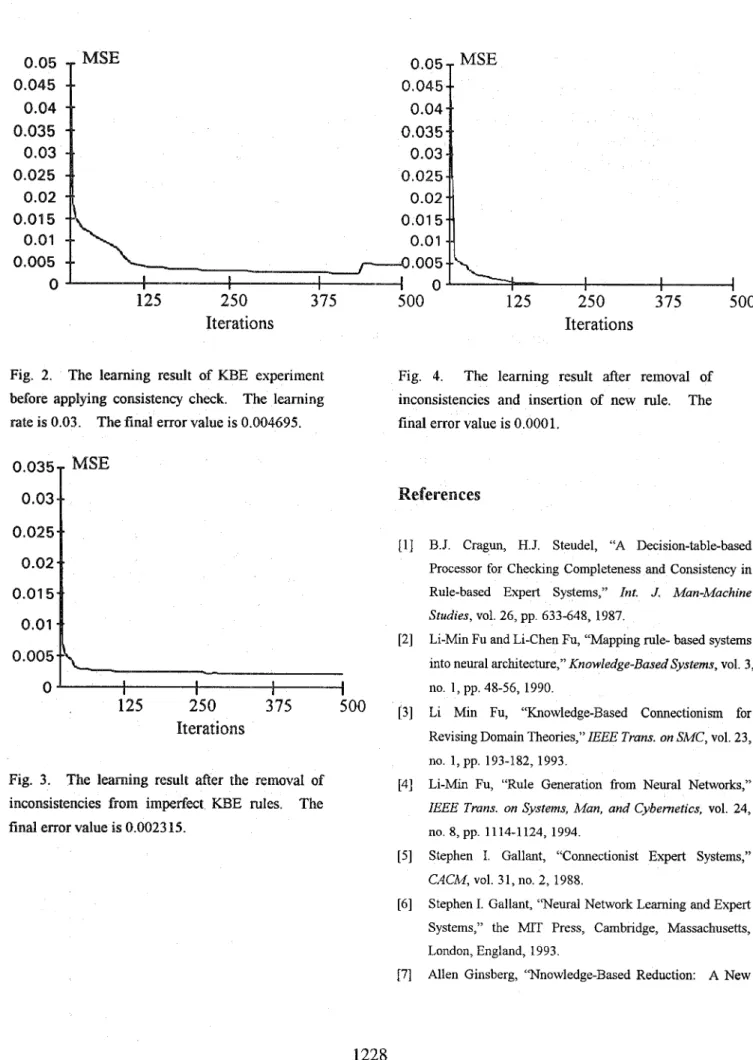

Fig. 2 shows the learning result before we apply the consistency check. The learning stops at 500 epochs, the upper limit we set, and the final error value is 0.00495.

By the gradient (descent algorithm, the average mean square error is minimized but the system gets stuck at local minimum. The inconsistencies in rule base may hinder the correct learning.

The next trial1 we apply the consistency check on imperfect KBE rules. These inconsistencies are detected by our checking algorithm. After detecting the inconsistencies, wc remove the inconsistent rules and then reconstruct and reltrain KBNN/TFS again. The result is shown in Fig. 3. Compared with the previous result, the error value, 0.002315, is lower and the convergent speed is faster.

By setting the premise of the inconsistent rule to the value unknown, the new rule is:

Finally, we apply the rule insertion method.

If

WORTH

is unknown then SUITABILITY is l1300r.The learning result is shown in Fig. 4 which is much

It is constructed by S-neurons and G-neurons.

better than the previous two results. The error is lower than 0.0001 and the learnihg stops by 500 epochs. This experiment shows the workings of the consistency checking algorithm and the rule insertion method.

5. Conclusion

In this paper, we extended our previous work, the Knowledge-Based Fuzzy Neural Network (KBFNN), so that it can process trapezoid fuzzy inputs. We also proposed a method for checking the inconsistencies in neural networks. To revise domain theories, the imperfect domain theories are first translated into the KBNN/TFS structure. The consistency check is then applied to the generated KBNN/TFS. Inconsistencies in the neural network are harmful to learning. Our experiments show that removing inconsistent rules and inserting missing rules can speed up the convergence of neural learning.

Fig. 2. The learning result before applying the consistency check (x-axis: iterations). The learning rate is 0.03. The final error value is 0.004695.

Fig. 3. The learning result after the removal of inconsistencies from the imperfect KBE rules (x-axis: iterations). The final error value is 0.002315.

Fig. 4. The learning result after the removal of inconsistencies and the insertion of a new rule (x-axis: iterations). The final error value is 0.0001.

References

[1] B. J. Cragun and H. J. Steudel, “A Decision-Table-Based Processor for Checking Completeness and Consistency in Rule-Based Expert Systems,” Int. J. Man-Machine Studies, vol. 26, pp. 633-648, 1987.

[2] Li-Min Fu and Li-Chen Fu, “Mapping Rule-Based Systems into Neural Architecture,” Knowledge-Based Systems, vol. 3, no. 1, pp. 48-56, 1990.

[3] Li-Min Fu, “Knowledge-Based Connectionism for Revising Domain Theories,” IEEE Trans. on Systems, Man, and Cybernetics, vol. 23, no. 1, pp. 173-182, 1993.

[4] Li-Min Fu, “Rule Generation from Neural Networks,” IEEE Trans. on Systems, Man, and Cybernetics, vol. 24, no. 8, pp. 1114-1124, 1994.

[5] Stephen I. Gallant, “Connectionist Expert Systems,” CACM, vol. 31, no. 2, 1988.

[6] Stephen I. Gallant, Neural Network Learning and Expert Systems, The MIT Press, Cambridge, Massachusetts, and London, England, 1993.

[7] Allen Ginsberg, “Knowledge-Based Reduction: A New Approach to Checking Knowledge Bases for Inconsistency & Redundancy,” Seventh National Conference on AI, pp. 120-125, August 1988.

[8] R. Keller, Expert System Technology: Development & Application, Yourdon Press, Englewood Cliffs, NJ, 1987.

[9] H. M. Lee and B. H. Lu, “A Fuzzy Rule-Based Neural Network Model for Revising Approximate Domain Knowledge,” IEEE International Conference on Systems, Man and Cybernetics, pp. 634-639, 1994.

[10] H. M. Lee, B. H. Lu, and F. T. Lin, “A Fuzzy Neural Network Model for Revising Imperfect Fuzzy Rules,” to appear in Fuzzy Sets and Systems, 1995.

[11] T. A. Nguyen, T. J. Laffey, and D. Pecora, “Knowledge Base Verification,” AI Magazine, vol. 8, no. 2, pp. 69-75, 1987.

[12] Dirk Ourston and Raymond J. Mooney, “Changing the Rules: A Comprehensive Approach to Theory Refinement,” AAAI-90, pp. 815-820.

[13] Motoi Suwa, A. Carlisle Scott, and Edward H. Shortliffe, “An Approach to Verifying Completeness and Consistency in a Rule-Based Expert System,” AI Magazine, vol. 3, no. 4, pp. 16-21, 1982.

[14] Geoffrey G. Towell and Jude W. Shavlik, “Extracting Refined Rules from Knowledge-Based Neural Networks,” Machine Learning, vol. 13, pp. 71-101, 1993.