Using a Full Counterpropagation Neural Network for Image Watermarking


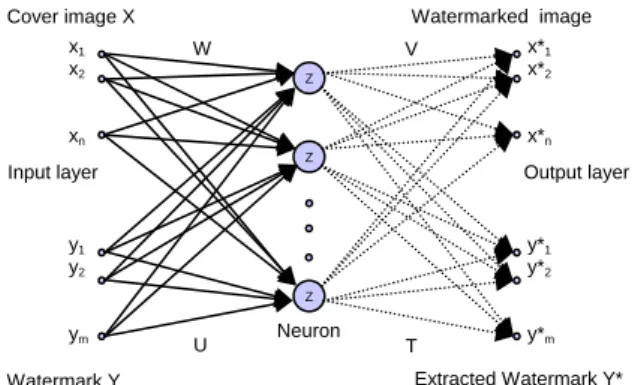

Int. Computer Symposium, Dec. 15-17, 2004, Taipei, Taiwan.

In this paper we propose a specific full counterpropagation neural network (FCNN) for watermarking. Unlike traditional methods, it embeds the watermark in the synapses of the FCNN rather than in the cover image. Hence the quality of the watermarked image is almost the same as that of the original cover image. In addition, because the watermark is stored in the synapses, the quality of the extracted watermark image does not degrade after most attacks. [1] In our method, the FCNN replaces the complex embedding and corresponding extraction procedures of traditional methods, and the experimental results show that FCNN-based watermarking does not need them. Furthermore, the proposed method achieves robustness, imperceptibility and authenticity in watermarking. The following sections discuss the application of the FCNN to watermarking. Section 2 introduces the FCNN, the embedding algorithm and the extraction method. Section 3 summarizes the experimental results, and conclusions are given in Section 4.

2. Full Counterpropagation network for watermarking

In this paper, a novel full counterpropagation neural network (FCNN) is proposed for image watermarking. The full counterpropagation neural network is a supervised-learning network capable of bidirectional mapping. Figure 1 shows the conceptual diagram of the FCNN.

Figure 1. The conceptual diagram of the FCNN (cover image X and watermark, FCNN, watermarked image, attacks, extracted watermark).

Traditional watermarking methods require complex embedding and corresponding extraction procedures. The proposed watermarking method instead integrates the embedding and extraction procedures into a single full counterpropagation neural network. To give the method the capability of both embedding and extracting watermarks, the cover image and the watermark image are presented to the FCNN simultaneously during the embedding process. After the network has been trained, it generates the watermarked image. The same FCNN is then used to extract the corresponding watermark from the watermarked image, whether or not the image has been attacked.

The FCNN is designed to learn bidirectional mappings. Through supervised training, the FCNN adaptively constructs a lookup table approximating the mapping between the presented input/output training pair: the cover image X and the watermark image Y. Once trained, the FCNN can extract the corresponding watermark Y* whenever the watermarked image X* is known. Figure 2 shows the architecture of the proposed FCNN.

Figure 2. The architecture of the full counterpropagation neural network for watermarking (input layer: cover image X = x_1 ... x_n and watermark Y = y_1 ... y_m; neurons Z; output layer: x*_1 ... x*_n and extracted watermark Y* = y*_1 ... y*_m; weights W, U, V, T).

The two-dimensional cover image X and watermark image Y can be written in vector form as

$$X = \{x_1, x_2, \ldots, x_n\} \tag{1}$$

$$Y = \{y_1, y_2, \ldots, y_m\} \tag{2}$$

where n is the number of pixels of the cover image X and m is the number of pixels of the watermark image Y. The input vectors X and Y are connected to neuron $Z_i$ with weights W and U, respectively:

$$W = \{w_{1,1}, w_{1,2}, w_{1,3}, \ldots, w_{n,i}\} \tag{3}$$

$$U = \{u_{1,1}, u_{1,2}, u_{1,3}, \ldots, u_{m,i}\} \tag{4}$$

where $w_{j,i}$ denotes the weight between the i-th neuron and input $x_j$. Similarly, $u_{j,i}$ denotes the weight between the i-th neuron and input $y_j$. Accordingly, the total input of the i-th neuron is defined as

$$Z_i = \sum_{j=1}^{n} \left(x_j - w_{j,i}\right)^2 + \sum_{j=1}^{m} \left(y_j - u_{j,i}\right)^2 \tag{5}$$

which represents the distance between the input (X, Y) pair and the i-th neuron. The activation function of each neuron is given by

$$\Gamma_i = \begin{cases} 1 & \text{if } Z_i \text{ is the smallest over all } i \\ 0 & \text{otherwise} \end{cases} \tag{6}$$

Therefore, the j-th outputs of X* and Y*, summed over all neurons i, can be obtained as

$$x^*_j = \sum_{i} \Gamma_i\, v_{j,i} \tag{7}$$

and

$$y^*_j = \sum_{i} \Gamma_i\, t_{j,i} \tag{8}$$
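As a minimal sketch in pure Python (an illustration, not the authors' code), Eqs. (5)-(8) amount to a nearest-neuron lookup. The function names and the neuron-major storage, where `W[i][j]` holds $w_{j,i}$ (and likewise for U, V and T), are assumptions for illustration; ties in Eq. (6) are broken by taking the lowest neuron index, which the text does not specify.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def neuron_distances(x: List[float], y: List[float], W: Matrix, U: Matrix) -> List[float]:
    """Eq. (5): Z_i = sum_j (x_j - w_{j,i})^2 + sum_j (y_j - u_{j,i})^2.

    W[i] and U[i] hold the input weights of neuron i (neuron-major storage).
    """
    return [
        sum((xj - wj) ** 2 for xj, wj in zip(x, W[i]))
        + sum((yj - uj) ** 2 for yj, uj in zip(y, U[i]))
        for i in range(len(W))
    ]


def winner_take_all(Z: List[float]) -> List[int]:
    """Eq. (6): Gamma_i = 1 for the neuron with the smallest Z_i, else 0.

    Ties go to the lowest index (an assumption; the paper does not say).
    """
    best = min(range(len(Z)), key=Z.__getitem__)
    return [1 if i == best else 0 for i in range(len(Z))]


def network_outputs(gamma: List[int], V: Matrix, T: Matrix) -> Tuple[List[float], List[float]]:
    """Eqs. (7)-(8): x*_j = sum_i Gamma_i v_{j,i} and y*_j = sum_i Gamma_i t_{j,i}."""
    n, m = len(V[0]), len(T[0])
    x_star = [sum(g * V[i][j] for i, g in enumerate(gamma)) for j in range(n)]
    y_star = [sum(g * T[i][j] for i, g in enumerate(gamma)) for j in range(m)]
    return x_star, y_star
```

Because Γ is one-hot, Eqs. (7)-(8) simply copy the output weights of the winning neuron, which is why the trained network behaves like a lookup table.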

where $v_{j,i}$ denotes the weight between the i-th neuron and output $x^*_j$. Similarly, $t_{j,i}$ denotes the weight between the i-th neuron and output $y^*_j$. The synaptic weights can be written in vector form as

$$V = \{v_{1,1}, v_{1,2}, v_{1,3}, \ldots, v_{n,i}\} \tag{9}$$

and

$$T = \{t_{1,1}, t_{1,2}, t_{1,3}, \ldots, t_{m,i}\} \tag{10}$$

where n and m are the numbers of pixels of the cover image X and the watermark image Y, respectively. The output errors of the FCNN are calculated by

$$E_c = \sum_{i=1}^{n} \left| x^*_i - d_i \right| \tag{11}$$

$$E_m = \sum_{j=1}^{m} \left| y^*_j - d_j \right| \tag{12}$$

where $d_i$ denotes the i-th pixel value of the desired watermarked image (the input cover image) and $d_j$ denotes the j-th pixel value of the desired watermark image (the input watermark image). If the output errors are less than a predefined threshold, the network has converged. Otherwise, the input weight vectors W and U associated with the winning neuron ($\Gamma_i = 1$) are updated by

$$w_{j,i}(k+1) = [1 - \alpha(k)]\, w_{j,i}(k) + \alpha(k)\, x_j, \quad i = \arg(\Gamma_i = 1),\; j = 1, 2, \ldots, n \tag{13}$$

$$u_{j,i}(k+1) = [1 - \alpha(k)]\, u_{j,i}(k) + \alpha(k)\, y_j, \quad i = \arg(\Gamma_i = 1),\; j = 1, 2, \ldots, m \tag{14}$$

where $\alpha(k)$ is the learning rate of the input layer. The learning rate $\alpha(k)$ is a suitably decreasing function of the learning time k, specified as

$$\alpha(k) = \alpha(0) \exp\!\left(-\frac{k}{k_0}\right) \tag{15}$$

where $\alpha(0)$ is the initial learning rate and $k_0$ is a positive constant. Similarly, the output weight vectors V and T associated with the winning neuron are updated by

$$v_{j,i}(k+1) = v_{j,i}(k) + \beta(k)\,\left[x_j - v_{j,i}(k)\right]\Gamma_i, \quad j = 1, 2, \ldots, n \tag{16}$$

$$t_{j,i}(k+1) = t_{j,i}(k) + \beta(k)\,\left[y_j - t_{j,i}(k)\right]\Gamma_i, \quad j = 1, 2, \ldots, m \tag{17}$$

where $\beta(k)$ is the learning rate of the output layer. After the FCNN has converged, the watermarked image is obtained as

$$X^* = \{x^*_1, x^*_2, \ldots, x^*_n\} \tag{18}$$

After the watermarked image is obtained, the same FCNN is used to extract the corresponding watermark from the watermarked image, whether or not it has been attacked. The extracted watermark image is obtained as

$$Y^* = \{y^*_1, y^*_2, \ldots, y^*_m\} \tag{19}$$

2.1 Embedding algorithm

The watermark embedding approach is summarized as follows:

Input: the cover image X and the watermark Y
Output: the watermarked image X*

Step 1. Arbitrarily assign the initial weights U, V, W and T.
Step 2. Use Eq. (5) and Eq. (6) to calculate the output of each neuron.
Step 3. Use Eq. (7) and Eq. (8) to calculate the network outputs.
Step 4. Use Eq. (11) and Eq. (12) to calculate the output errors of the FCNN. If $E_c$ and $E_m$ are both less than a predefined threshold value, the network has converged; use Eq. (7) and Eq. (18) to obtain the watermarked image X*. Otherwise, go to the next step.
Step 5. Use Eq. (13) and Eq. (14) to update the weight vectors W and U, and update the learning rate $\alpha(k)$ by Eq. (15).
Step 6. Use Eq. (16) and Eq. (17) to update the weight vectors V and T. Then go to Step 2.

2.2 Extracting algorithm

To extract the watermark from the FCNN trained as described in Section 2.1, the watermark extracting approach is summarized as follows:

Input: the watermarked image X*
Output: the extracted watermark Y*

Step 1. Use Eq. (5) and Eq. (6) to calculate the neuron outputs.
Step 2. Use Eq. (8) and Eq. (19) to obtain the extracted watermark image Y*.

3. Experimental results

To show that the proposed FCNN performs well for watermarking, four experiments were conducted to demonstrate the robustness, imperceptibility and authenticity achieved by the method. The cover images are Jet and Couple, each of size 256 × 256. The watermark image is the school badge of NYUST, of size 32 × 32. Figures 3(a)-(c) show the cover images and the watermark image, respectively. To evaluate the robustness of the conventional LSB method [2] and the proposed FCNN method, seven types of attacks were applied to the watermarked images: a 3 × 3 averaging filter, cropping the top-left 1/4 of the watermarked image, a 3 × 3 Laplacian mask, JPEG compression, rotation by 90°, a 2 × 2 mosaic and Gaussian noise.
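The embedding (Section 2.1) and extraction (Section 2.2) procedures can be sketched in pure Python as follows. This is a hypothetical illustration, not the authors' implementation, and it rests on several assumptions: pixel values are scaled to [0, 1]; initial weights are drawn uniformly from [0, 1), since the text only says they are assigned arbitrarily; the errors of Eqs. (11)-(12) are sums of absolute differences; β is held at its initial value because no schedule for β(k) is given; and, because Y is unknown at extraction time, only the X-part of Eq. (5) is used when extracting. The defaults mirror the settings reported in Section 3.2 (32 neurons, α(0) = 0.95, β(0) = 0.7, k_0 = 10, threshold 1).

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def _argmin(values: List[float]) -> int:
    """Index of the smallest value, i.e. the winning neuron of Eq. (6)."""
    return min(range(len(values)), key=values.__getitem__)


def _sq_dist(a: List[float], b: List[float]) -> float:
    """Squared Euclidean distance, one sum of Eq. (5)."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def embed(x: List[float], y: List[float], num_neurons: int = 32,
          alpha0: float = 0.95, beta0: float = 0.7, k0: float = 10.0,
          threshold: float = 1.0, max_epochs: int = 1000, seed: int = 0):
    """Train on one (cover X, watermark Y) pair.

    Returns ((W, U, V, T), X*, number of update iterations performed).
    """
    rng = random.Random(seed)
    n, m = len(x), len(y)
    # Step 1: arbitrary initial weights, neuron-major (W[i][j] holds w_{j,i}).
    W = [[rng.random() for _ in range(n)] for _ in range(num_neurons)]
    U = [[rng.random() for _ in range(m)] for _ in range(num_neurons)]
    V = [[rng.random() for _ in range(n)] for _ in range(num_neurons)]
    T = [[rng.random() for _ in range(m)] for _ in range(num_neurons)]
    for k in range(max_epochs):
        # Step 2: Eqs. (5)-(6), winner-take-all on the joint (X, Y) distance.
        win = _argmin([_sq_dist(x, W[i]) + _sq_dist(y, U[i]) for i in range(num_neurons)])
        # Step 3: Eqs. (7)-(8); a one-hot Gamma selects the winner's V and T rows.
        x_star, y_star = V[win], T[win]
        # Step 4: Eqs. (11)-(12), assumed to be sums of absolute errors.
        e_c = sum(abs(a - d) for a, d in zip(x_star, x))
        e_m = sum(abs(a - d) for a, d in zip(y_star, y))
        if e_c < threshold and e_m < threshold:
            return (W, U, V, T), list(x_star), k
        # Step 5: Eqs. (13)-(15), pull the winner's input weights toward (X, Y).
        alpha = alpha0 * math.exp(-k / k0)
        W[win] = [(1 - alpha) * w + alpha * xj for w, xj in zip(W[win], x)]
        U[win] = [(1 - alpha) * u + alpha * yj for u, yj in zip(U[win], y)]
        # Step 6: Eqs. (16)-(17), with beta(k) held at beta0 (assumption).
        V[win] = [v + beta0 * (xj - v) for v, xj in zip(V[win], x)]
        T[win] = [t + beta0 * (yj - t) for t, yj in zip(T[win], y)]
    raise RuntimeError("FCNN did not converge within max_epochs")


def extract(x_marked: List[float], net: Tuple[Matrix, Matrix, Matrix, Matrix]) -> List[float]:
    """Section 2.2: find the neuron closest to X* (X-part of Eq. (5) only) and return its T row."""
    W, _, _, T = net
    win = _argmin([_sq_dist(x_marked, W[i]) for i in range(len(W))])
    return list(T[win])
```

Because only the winning neuron is updated, the trained network stores X* and Y in that neuron's V and T rows; extraction returns the same T row as long as the (possibly attacked) image stays closest to that neuron's W weights.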

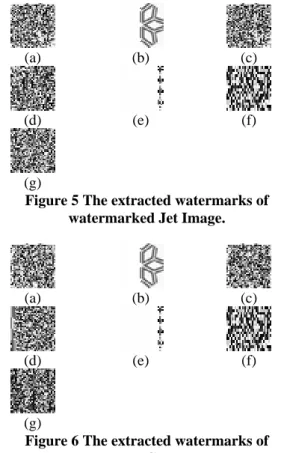

Figure 3. Cover images and watermark image: (a) cover Jet image, (b) cover Couple image, (c) watermark.

The peak signal-to-noise ratio (PSNR) was used to evaluate the quality of the watermarked images and the extracted watermarks. It is defined as

$$\mathrm{PSNR}\,(\mathrm{dB}) = 10 \log_{10} \frac{X_{peak}^2}{\sigma_e^2} \tag{20}$$

where $\sigma_e^2$ is defined as

$$\sigma_e^2 = \left(\frac{1}{MN}\right) \sum_{i=1}^{M} \sum_{j=1}^{N} \left(X_{ij} - Z_{ij}\right)^2 \tag{21}$$

where M × N is the size of the cover image, $X_{ij}$ is the gray level of the (i, j)-th pixel of the cover image, and $Z_{ij}$ is the gray level of the (i, j)-th pixel of the watermarked image. $X_{peak}^2$ denotes the squared peak value of the cover image. The higher the PSNR, the more similar the watermarked image is to the cover image.

3.1 Experiment 1: Robustness test for the LSB method

The watermark is a 32 × 32 gray-level image, i.e. 1024 pixels of 8 bits each, so the LSB method [2], which stores one bit per cover pixel, requires 8192 pixels of the cover image to embed it. Figures 4(a) and 4(b) show the watermarked Jet and Couple images, respectively. To evaluate the robustness of the LSB method, seven kinds of attacks were applied to the watermarked images. The PSNR values of the images marked by the LSB method are shown in Table 1.

Figure 4. Watermarked images: (a) watermarked Jet image, (b) watermarked Couple image.

Table 1. PSNR of watermarked images by the LSB method

| Image | PSNR |
|---|---|
| Jet | 65.43 dB |
| Couple | 65.43 dB |

Figures 5(a)-(g) show the watermarks extracted from the marked Jet image attacked by (a) a 3 × 3 averaging filter, (b) cropping the top-left 1/4, (c) a 3 × 3 Laplacian mask, (d) JPEG compression, (e) rotation by 90°, (f) a 2 × 2 mosaic and (g) Gaussian noise. Figures 6(a)-(g) show the watermarks extracted from the marked Couple image under the same attacks. The LSB method clearly cannot extract the complete watermark; the results are messy patterns. In other words, the hidden watermark has been destroyed by the attacks. Table 2 shows the PSNR values of the watermarks extracted from the marked images under the seven types of attacks; they range from 5.41 dB to 12.9 dB. These low PSNR values indicate that the LSB method is unable to resist any of the seven attacks: the 3 × 3 averaging filter, cropping of the top-left 1/4, the 3 × 3 Laplacian mask, JPEG compression, rotation by 90°, the 2 × 2 mosaic and Gaussian noise.

Figure 5. The extracted watermarks of the watermarked Jet image, panels (a)-(g).

Figure 6. The extracted watermarks of the watermarked Couple image, panels (a)-(g).
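The PSNR of Eqs. (20)-(21) and the 8192-pixel count of the LSB baseline can be checked with a short sketch. The `lsb_embed` and `lsb_extract` helpers are hypothetical: they implement plain one-bit-per-pixel LSB substitution on 8-bit gray levels in scan order, which may differ in detail from the scheme of [2]. By default `psnr` takes $X_{peak}$ as the largest gray level of the cover image, following the text; passing `peak=255` gives the common 8-bit convention instead.

```python
import math
from typing import List, Optional, Tuple


def psnr(cover: List[List[int]], marked: List[List[int]], peak: Optional[float] = None) -> float:
    """Eqs. (20)-(21): PSNR in dB between two M x N gray-level images (lists of rows)."""
    M, N = len(cover), len(cover[0])
    mse = sum((cover[i][j] - marked[i][j]) ** 2 for i in range(M) for j in range(N)) / (M * N)
    if mse == 0:
        return math.inf  # identical images
    if peak is None:
        peak = max(max(row) for row in cover)  # peak value of the cover image
    return 10 * math.log10(peak ** 2 / mse)


def lsb_embed(cover_pixels: List[int], watermark_pixels: List[int]) -> Tuple[List[int], int]:
    """Replace the LSB of one cover pixel per watermark bit (8 bits per watermark pixel, MSB first).

    Returns the marked pixels and the number of cover pixels used.
    """
    bits = [(p >> b) & 1 for p in watermark_pixels for b in range(7, -1, -1)]
    if len(bits) > len(cover_pixels):
        raise ValueError("cover image too small for the watermark")
    marked = list(cover_pixels)
    for idx, bit in enumerate(bits):
        marked[idx] = (marked[idx] & ~1) | bit  # clear the LSB, then write the bit
    return marked, len(bits)


def lsb_extract(marked_pixels: List[int], num_watermark_pixels: int) -> List[int]:
    """Rebuild each 8-bit watermark pixel from the LSBs of 8 consecutive marked pixels."""
    out = []
    for k in range(num_watermark_pixels):
        value = 0
        for pixel in marked_pixels[8 * k: 8 * k + 8]:
            value = (value << 1) | (pixel & 1)
        out.append(value)
    return out
```

With a 256 × 256 cover and a 32 × 32 watermark, `lsb_embed` touches exactly 32 × 32 × 8 = 8192 cover pixels, each changed by at most one gray level.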

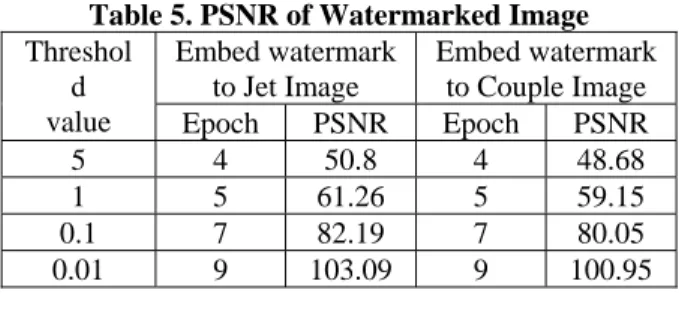

Table 2. PSNR of watermarks extracted from the watermarked Jet and Couple images by the LSB method

| Attack | PSNR of watermark extracted from the marked Jet image | PSNR of watermark extracted from the marked Couple image |
|---|---|---|
| Blurred | 7.22 dB | 7.05 dB |
| Cropped | 12.9 dB | 12.9 dB |
| Sharpened | 8.76 dB | 8.12 dB |
| JPEG compressed | 6.48 dB | 6.92 dB |
| Rotated | 8.74 dB | 8.74 dB |
| Mosaic | 5.41 dB | 6 dB |
| Gaussian noise | 6.83 dB | 6.16 dB |

3.2 Experiment 2: Robustness test for the proposed method

In this evaluation, the initial learning rates α and β were set to 0.95 and 0.7, respectively. The number of neurons is 32, and the constant $k_0$ was set to 10. A threshold value of 1 was used to terminate training. Figures 7(a) and 7(b) show the watermarked Jet and Couple images, respectively. The PSNR values of the images watermarked by the FCNN method are shown in Table 3. The high PSNR values indicate that the watermarked images are very similar to the cover images.

Figure 7. Watermarked images: (a) watermarked Jet image, (b) watermarked Couple image.

Table 3. PSNR of watermarked images by the proposed FCNN

| Image | PSNR |
|---|---|
| Jet | 61.28 dB |
| Couple | 59.14 dB |

Figures 8(a)-(g) show the watermarks extracted from the watermarked Jet image attacked by (a) a 3 × 3 averaging filter, (b) cropping the top-left 1/4, (c) a 3 × 3 Laplacian mask, (d) JPEG compression, (e) rotation by 90°, (f) a 2 × 2 mosaic and (g) Gaussian noise. Figures 9(a)-(g) show the watermarks extracted from the marked Couple image under the same attacks as in the previous experiment. All the watermarks were completely extracted by the FCNN, which demonstrates the robustness of our method.

Figure 8. The extracted watermarks of the watermarked Jet image, panels (a)-(g).

Figure 9. The extracted watermarks of the watermarked Couple image, panels (a)-(g).

Table 4 shows the PSNR values of the extracted watermarks after the various attacks. They indicate that the proposed method is robust against all seven attacks: the 3 × 3 averaging filter, cropping of the top-left 1/4, the 3 × 3 Laplacian mask, JPEG compression, rotation by 90°, the 2 × 2 mosaic and Gaussian noise.

Table 4. PSNR of watermarks extracted from the watermarked Jet and Couple images by the proposed FCNN

| Attack | PSNR of watermark extracted from the marked Jet image | PSNR of watermark extracted from the marked Couple image |
|---|---|---|
| Blurred | 50.13 dB | 50.06 dB |
| Cropped | 50.13 dB | 50.06 dB |
| Sharpened | 50.13 dB | 50.06 dB |
| JPEG compressed | 50.13 dB | 50.06 dB |
| Rotated | 50.13 dB | 50.06 dB |
| Mosaic | 50.13 dB | 50.06 dB |
| Gaussian noise | 50.13 dB | 50.06 dB |

3.3 Experiment 3: Imperceptibility of the proposed method

The threshold value is the major parameter affecting the quality of the watermarked image. Table 5 shows the PSNR values and the number of training epochs of the FCNN for different threshold values. A smaller threshold yields a higher PSNR; in other words, a small threshold produces a watermarked image that is more similar to the cover image. However, a smaller threshold also requires more training epochs. The threshold value was selected to offer the best trade-off between imperceptibility and training time.

Table 5. PSNR of watermarked images for different threshold values

| Threshold value | Jet: epochs | Jet: PSNR (dB) | Couple: epochs | Couple: PSNR (dB) |
|---|---|---|---|---|
| 5 | 4 | 50.8 | 4 | 48.68 |
| 1 | 5 | 61.26 | 5 | 59.15 |
| 0.1 | 7 | 82.19 | 7 | 80.05 |
| 0.01 | 9 | 103.09 | 9 | 100.95 |

3.4 Experiment 4: Authenticity test

Raw cover images were used to test the authenticity of the proposed FCNN. Figures 10(a) and 10(b) are the non-watermarked Baboon and Girl images. Figures 11(a) and 11(b) show the outputs extracted from these images, which were never watermarked. As the results show, no watermark can be extracted from the raw cover images. Hence the proposed method extracts the corresponding watermark from marked images, but not from unmarked images.

Figure 10. Non-training cover images: (a) Baboon, (b) Girl.

Figure 11. The extracted watermarks of Fig. 10(a) and Fig. 10(b).

4. Conclusions

In this paper, a specifically designed full counterpropagation neural network has been presented for digital image watermarking. Unlike traditional methods, the watermark is embedded in the synapses of the FCNN instead of the cover image. The quality of the watermarked image was almost the same as that of the original cover image. In addition, because the watermark is stored in the synapses, most of the attacks could not degrade the quality of the extracted watermark image. This shows that the proposed FCNN can resist various attacks. Moreover, the watermark embedding and extracting procedures are integrated into the proposed FCNN, which simplifies the traditional procedures. The experimental results show that our method achieves robustness, imperceptibility and authenticity in digital watermarking.

Acknowledgment

This work was supported by the National Science Council, Taiwan, R.O.C., under grant no. NSC 922213-E-224-041.

References

[1] Fredric M. Ham and Ivica Kostanic, Principles of Neurocomputing for Science & Engineering, McGraw-Hill, Singapore, 2001.
[2] R. G. van Schyndel, A. Z. Tirkel and C. F. Osborne, "A digital watermark," Proceedings of the IEEE International Conference on Image Processing, vol. 2, pp. 86-92, Nov. 1994.
[3] Ren-Junn Hwang, Chuan-Ho Kao and Rong-Chi Chang, "Watermark in color image," Proceedings of the First International Symposium on Cyber Worlds, pp. 225-229, Nov. 2002.
[4] N. Ahmidi and R. Safabakhsh, "A Novel DCT-based Approach for Secure Color Image Watermarking," Proceedings of ITCC 2004 International Conference on Information Technology: Coding and Computing, vol. 2, pp. 709-713, Apr. 2004.
[5] Fengsen Deng and Bingxi Wang, "A Novel Technique for Robust Image Watermarking in the DCT Domain," Proceedings of the 2003 International Conference on Neural Networks and Signal Processing, vol. 2, pp. 1525-1528, Dec. 2003.
[6] K. J. Davis and K. Najarian, "Maximizing Strength of Digital Watermarks Using Neural Networks," Proceedings of the International Joint Conference on Neural Networks, vol. 4, pp. 2893-2898, July 2001.
[7] Shi-chun Mei, Ren-hong Li, H. Dang and Yun-kuan Wang, "Decision of image watermarking strength based on artificial neural-networks," Proceedings of the 9th International Conference on Neural Information Processing, vol. 5, pp. 2430-2434, Nov. 2002.
[8] Zhang Zhi-Ming, Li Rong-Yan and Wang Lei, "Adaptive Watermark Scheme with RBF Neural Networks," Proceedings of the 2003 International Conference on Neural Networks and Signal Processing, vol. 2, pp. 1517-1520, Dec. 2003.
