A Framework for Traditional Chinese Semantic Web of the Law

Yung Chang Chi

A,Be-mail:charles.y.c.chi@gmail.com

Hei Chia Wang

Ae-mail:hcwang@mail.ncku.edu.tw

A. Department of Industrial and Information Management and Institute of Information Management,

National Cheng Kung University, Tainan City, Taiwan ROC

Ying Maw Teng

Be-mail: morris@isu.edu.tw

B. Department of International Business, I-Shou University,

Kaohsiung City, Taiwan ROC

Abstract - This paper aims to propose a semantic web framework based on ontology in criminal cases by using PATExpert structure, and the structure will be modified to be an ontological method deriving out of knowledge and analysis of legal documents, and the modified structure will be further employed to analyze criminal cases in Taiwan judicial decisions. By comparing the relevant criminal laws with judicial judgments, we can characterize the specific types of criminal cases and models in two different databases, while enhancing the feasible construction of the criminal precedents with the help of semantic network. An emerging research topic centers in law mining that consists of law retrieval, law categorization, and law clustering.

Keywords: PATExpert; criminal case; issue; criminal judgment; content analysis; Ontology; semantic web.

I. INTRODUCTION

The European project PATExpert, (Advanced Patent Document Processing Techniques), coordinated by Barcelona Media (BM), has successfully accomplished the objectives after the pre-established 30 months (from February 2006 to July 2008). PATExpert has a global objective to change the present textual processing of patents to semantic processing (treating the patents as multimedia knowledge objects).

WordNet was created in the Cognitive Science Laboratory of Princeton University under the direction of psychology professor George Armitage Miller starting in 1985. WordNet is similar to a dictionary on the surface, because it combines words together based on their literal meaning. But there are some significant differences. First of all, WordNet is not just a form of words, but a letter of the string and the specific feeling of the word. As a result, words closely adjacent to each other in the network are semantically disambiguated. Second, WordNet annotates the semantic relations between words, and the grouping of words in the dictionary does not correspond to any explicit pattern other than literal meaning.

The relational database depends on the architecture. Knowledge base relies on ontology to build structure. Relational databases are limited to a relationship - foreign key. Semantic Web provides multidimensional relationships, such as inheritance, part, association, and many other types, including logical relationships and constraints. An important note is that the language and instance used to form the

structure itself may be the same as the language in the knowledge base, but in the relational database it is completely different.

The criminal cases can be retrieved through a cluster-based approach. The distributed information retrieval of criminal cases can be done by generating a ranking list of CORI (Collecting Retrieval Reasoning Network) or KL (Kernighan-Lin) algorithm. Criminal cases classification can be done automatically by using k-nearest neighbor classifier and Bayesian classifier, classifiers established by back propagation networks, various machine learning algorithms, or k-nearest neighbors based on legal semantic structures. The clustering algorithm can also be used to form a thematic map for criminal cases to analyze and summarize the results and to create a system interface for retrieving legal documents.

Content analysis is the localization of communication research and may be one of the most important research techniques in the social sciences. In view of someone - group or culture - a sense of belonging to them, it tries to analyze the data in a particular situation. Communications, messages and symbols differ from observable events, things, attributes, or people, and they inform themselves of something outside; they reveal some of the attributes of their distant producers or operators, and to their sender, their Recipients and their exchange-embedded institutions have cognitive consequences. Content analysis is a research technique for replicable and effective inference from text to its environment. As a technology, content analysis involves specialized procedures. It provides new insights, increases the researcher's understanding of a particular phenomenon, or tells the actual action. Content analysis is a scientific tool.

Unlike criminal judgments, criminal cases documents can be exploited by word mining techniques because the judicial judgment is a legal document. As judicial judgments are translated into a model by content analysis, readers can easily read newspapers and understand the material facts, relevant issues, ruling of law and judgment.

This study proposes to strengthen the legal information network and criminal case framework, the rest of which is structured as follows. The second part introduces the research background and sets out the objectives. In the third part, we will describe our research methods. The conclusions of this paper reveal the expected results and future suggestions.

II. RESEARCH BACKGROUND AND OBJECTIVE The framework suggests that, through legal documents mining and criminal judgment analysis, a new criminal behavior and its similarity with other criminal acts can be found and the possibility of potential criminal behavior can be predicted. While building on a law semantic Web, the ontology and rules engine can be taken into account.

This framework of law database is based on the criminal law of Taiwan. The judgements are retrieved from the judicial judgments in Taiwan.

The purposes of this study is to construct the Semantic Web of law information, and to render useful reference regarding criminal issues, rulings, and precedents (stare decisis) in different layers of courts and implicate criminal judgment trends. With the help of the information, lawyers and judges can render reasonable and accurate decisions.

Lack of legal background makes laymen or ordinary readers difficult to fully grasp the gist of arguments and judgments made by attorneys and judges. By employing content analysis and the big data concepts, ordinary people can easily apply he content analysis technology from the semantic network to better understand the complex litigation in criminal world.

III. RESEARCH METHOD A. Criminal cases documents analysis

Based on the collected legal documents and natural language processing (NLP), this study uses content analysis methods to find out the concepts and relationships of relevant legal documents while extracting the subject-object (SAO) structure in criminal cases.

NLP is a text mining technique that can conduct syntactic analysis of natural language; NLP tools include the Stanford parser (Stanford 2013), Minipar (Lin 2003), and Knowledgist TM2.5. NLP tools will be used for building a set of SAO structures from the collected legal documents.

The analysis of legal documents for specific keywords can retrieve a list of the documents where the keywords were found. Then, the framework will use data and text mining technology to design a specific algorithm (still in progress) in order to analyze the legal documents.

B. Criminal judgements content analysis

The most obvious source of data for content analysis is the text of regular attribution: oral discourse, written document and visual representation. The text in the criminal judgment is important because it has meaning. Therefore, content analysis techniques must analyze the text of criminal cases to implement offensive and defensive strategies as claim, answer or reply in criminal cases.

Criminal judgements of different cases can be mined by employing text-mining techniques since judicial judgements are legal documents published by courts. The judgements can be divided into patterns by content analysis, and readers can easily access to them and understand the material facts and issues in dispute.

The analysis of criminal cases (precedents) is carried out by content analysis techniques for specific design algorithm (still in progress) for the purpose of searching for legal rulings of specific keywords, and briefing them to the list of legal documents. Leading precedents, together with up-to-date criminal cases can be simultaneously retrieved and compiled in order.

Content analyses commonly contain six steps that define the technique procedurally, as follows:

Design. Design is a conceptual phase during which

analysts (i) define their context, what they wish to know and are unable to observe directly; (ii)explore the criminal source of relevant information that either are or may become available; and (iii)adopt a criminal analytical construction that formalizes the knowledge available about the information-context relationship thereby justifying the inferential step involved in going from one to the other.

Unitizing. While the process of select representative

samples is not indigenous to content analysis, there is the need to (1) undo the statistical biases inherent in much of the symbolic material analyzed and (2) ensure that the often conditional hierarchy of chosen sampling cases become representative of the symbolic phenomena under investigation.

Coding. Coding is the step of describing the recording

criminal judgement or classifying them in terms of the categories of the analytical constructs chosen. This step replicates an elementary notion of meaning and can be accomplished either by explicit instructions to trained human coders or by computer coding. The two evaluative criteria, reliability as measured by inter coder agreement and relevance or meaningfulness, are often at odds.

Drawing inferences. Drawing inferences is the most

important phase in a content analysis. It applies the stable knowledge about how the variable accounts of coded criminal information are related to the phenomena the researcher wants to know about.

Validation. Validation is the desideratum of any

research effort. However, validation of content analysis results is limited by the intention of the technique to infer what cannot be observed directly and for which validation evidence is not readily available.

The content analysis searches the criminal judgements for specific keywords and returns a list of the documents as above criminal documents by introducing the content analysis technology into specific design algorithm (still in progress) in order to analyze the criminal cases/precedents. It also finds the nearest criminal judgements/precedents. C. Constructing Semantic Web

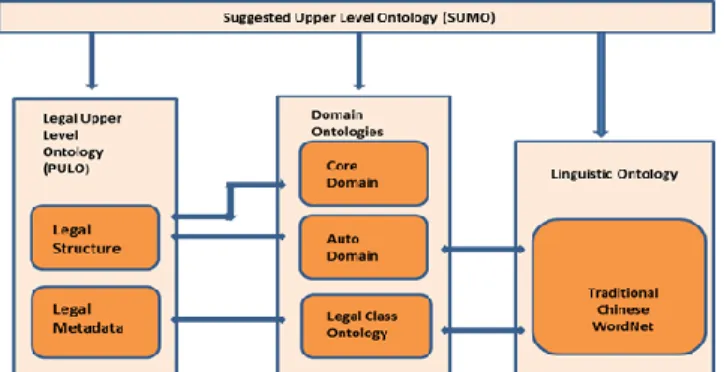

Our proposed semantic web in Figure 5 is a program that comprises the following parts:

1. The first part searches legal files by specific keywords and presents a list of recommended documents that fit the keyword. The program will then utilize data and text-mining techniques to design a specified algorithm to analyze legal documents while locating the most similar criminal cases, rulings, dicta, dissenting or concurring opinions and leading

precedents. The result of building part 1 of the reasoning knowledge base is shown in Fig.1.

2. Next, the semantic web searches criminal cases by specific keywords, and introduces content analysis technology into the specified design algorithm, coupled with the criminal case/precedent analysis. Suggestive lists of judicial criminal judgments/documents for the both parties (plaintiff and defendant) can be relatively found. The results of Section 2 of the Knowledge Base building the rules engine are shown in Figure 3.

3. Reasoners: Reasoning semantic web. Inference creates a logical addition that provides classification and implementation. The class is filled with a class structure that allows concepts and relationships to be properly associated with others, such as people being creatures, fathers are parents, married is a relationship, or married is a symmetrical relationship. The implementation provides the same, for example, John H is the same as JH. There are several types of reasoners who offer various reasoning. Reasoners usually insert other tools and frameworks. Reasoners use various statements to create logically valid auxiliary statements.

4. The Semantic Web component. The Semantic Web component of the rules engine supports reasoning, usually reasoning beyond the content can be derived from the logic description. The engine adds a strong dimension to the knowledge structure. Rules allow the combination of ontology and other large logical tasks, including programming methods as counting and string search.

So far, the whole method is purely theoretical. However, in the patent literature analysis, we successfully use WordNet to cluster similar words of words and combine them with lower-level dimension methods and similar semantic terms. In the field of data mining, WordNet semantic Web system can identify keywords, similar words, features and sparse matrix to prevent the loss of legal search of criminal cases, criminal offenses, plaintiff’s allegations and defendant’s answers. WordNet can also save storage memory while improving the accuracy of text mining in terms of the normal, literal and professional meaning of keywords, and improving the speed of research retrieval.

speed of patent research retrieval.

Figure 1 Part 1 the legal documents result constructs knowledge repository for the Reasoners module

D. The Framework for Semantic web of the Law

The top of the frame indicated in Figure 2 is to use PATExpert, ontology module and W3C standard RDF.

Legal information has been digested and transformed in this process. The framework builds the patent ontology library of the semantic Web as shown in Figure 3.

As a reasoning module, legal document analysis process includes SAO structure extraction (NLP) and criminal case feature measurement to find out legal concepts and relationships. At this stage, the study yields to some results based on previous findings of criminal cases.

Another part in Figure 3 is the rule engine module that represents the content analysis process. This process involves the execution of criminal case. The framework of the process is designed as an algorithm. Then, the research will build on knowledge and technical logic to support the semantic web.

Figure 2 PATExpert Ontology Modules

Figure 3 Part 2 Content analysis result constructs knowledge repository for the Rules engines

As to modifying the PATExpert, we hope that the

modifying PATExpert has a global objective to change the present textual processing of legal processing to semantic processing (treating legal process as multimedia knowledge objects). The patent document is one of the legal documents that have same or similar structures with the criminal judgments, the difference is that the patent describes an object or technique, while the criminal law denotes the impact of an overt act, general or specific intent and its responsibility and punishment, so that some PATExpert modules are modified to apply to the laws to facts, aiming to develop a pattern of legal ontology. Following are the modifying PATExpert module for the legal documents of criminal cases: semantic recorder Judicial Judge-ments

Figure 4 Modify PATExpert Ontology Modules for legal documents of criminal cases.

The Traditional Chinese WordNet is based on LOPE Lab at the Institute of Linguistics, National Taiwan University. The purpose of the Traditional Chinese WordNet is to provide a complete sense of Chinese meaning and a lexical semantic relation knowledge base. The distinction and expression of the literal meaning address on the perfect lexical semantics theory and the ontology architecture. In the lexical theory and cognitive research, this detailed analysis of the vocabulary knowledge base system will become the basic reference for linguistic research. In practical applications, this database is expected to be an indispensable base architecture for Chinese language processing and knowledge engineering. Since 2003, the plan has accumulated more than ten years of research results, the meaning of the definition and the meaning of knowledge expression has made many corrections for the generic meaning of words. In 2006, the network search interface of the Chinese word network was officially used for retrieval in the Institute of Linguistics at the Central Research Institute. The current program website is maintained by the National Taiwan Institute of Linguistics. The dynamic updating of data and the planning of more detailed research are underway. The study is to apply the same WordNet to help readers access to legal documents regarding criminal cases while promoting better understanding of criminal jargons and decisions.

IV. EXPECTED RESULT AND FUTURE WORK In order to enhance a semantic web of legal information and criminal judgements users can try to use a keyword to search, and the semantic web will automatically retrieve the texts, terms, sentences or phrases that correspond to related criminal cases and legal information. The legal challenge is subject to develop a semantic web that can apply AI function.

WordNet has been previously employed to enhance the accuracy of patent searches. By analogy, that WordNet can be also implemented by the same text mining techniques to retrieve more accurate legal meaning of criminal cases and judgments.

The difficulty of research lies on how readers use different analytical methods to analyze different databases, and further to integrate these results with semantic webs. The semantic web must be employed to build more data bases and implement accurate algorithms in different areas.

The most obstacle is how to integrate multiple research math from different databases. The results need to be harmonized, standardized and well communicated, compared and exchanged among them.

In order to construct accurate legal meaning and terms of criminal cases/judgments, this study is aimed to utilize a variety of analytical methods, through the analysis of semantic Web analysis via keywords, phrases, various databases to test whether the same algorithm mechanism can be equivalently applied in the analysis of criminal cases/judgments

The study then turns to employ the Semantic Web impacts functions and Semantic Web components shown in Figure 5 to increase the new data automatically: the right side indicates Rules engine, the left side represents the Reasoner, over the center side is base ontology from the modifying PATExpert ontology modules, and under the center side is language. The final step of Semantic Web will be further developed to be a multi-language Semantic Web that use different languages/databases to retrieve accurate legal meaning/terms in different countries.

Figure 5. Major Semantic Web components References

[1] Y. L. Chen, and Y. T. Chiu, “An IPC-based vector space model for patent retrieval” Information Processing and Management, pp.309-322.47, 2011.

[2] Chinese WordNet http://lope.linguistics.ntu.edu.tw/cwn/download/ [3] C. J. Fall, A. Torcsrari, K. Benzineb, and G. Karetka, “SIGIR Forum”

Automated categorization in the international patent classification, pp.10-25. 37(1), 2003.

[4] V. K. Gupta, and N. B. Pangannaya, Carbon nanotubes; “Bibliometric

analysis of patents”, World Patent Information,

pp.185-189.Vol.22,issue 3, Sep. 2000.

[5] J. Hebeler, M. Fisher, R. Blace and A. Perez-Lopez, “Semantic Web Programming “Wiley Publishing, Inc.2009.

[6] S. H. Huang, C. C. Liu, C. W. Wang, H. R. Ke, and W. P. Yang, “International Computer Symposium” Knowledge annotation and discovery for patent analysis, pp.15-20, 2004.

[7] J.H. Kim, and K.S. Choi, Patent document categorization based on semantic structural information, “Information processing & Management”, pp.1200-1215.43(5), 2007.

[8] K. Krippendorff, Content analysis In E. Barnouw, G. Gerbner, W. Schramm, T. L. Worth, and L. Gross (Eds.), International encyclopedia of communication New York, NY: Oxford University Press, pp.403-407.Vol. 1, 1989.

[9] K. Krippendorff, “Content Analysis An Introduction to Its Methodology” second Edition, Sage Publications, Inc. 2004. [10] J. B. Kruskal, Multidimensional scaling by optimizing goodness of fit

to a nonmetric hypothesis. Psychometrika, pp.1-27.29(1), 1964. [11] I. S. Kang, S. H. Na, J. Kim, and J. H. Lee, “Information Processing

& Management”Cluster-based patent retrieval, pp.1173-1182.43(5), 2007.

[12] Knowledgist retrieves, analyzes, and organizes information. https://invention-machine.com/ , retrieved: Apr., 2016

[13] L. S Larkey., Some issues in the automatic classification of U.S. patents. In: Working notes for the AAAI-98 workshop on learning for text categorization, pp.87-90, 1998.

[14] L. S. Larkey, Connell, M. E., and Callan, J. Collection selection and results merging with topically organized US patents and TREC data. In Proceedings of ninth international conference on informaiton knowledge and management, pp.282-289, 2000.

[15] L. S. Larkey, A patent search and classification system. In: Proceedings of the fourth ACM conference on digital libraries, pp.79-87, 1999.

[16] Y. Liang, R. Tan, and J. Ma, “Patent Analysis with Text Mining for TRIZ” IEEE ICMIT, pp.1147-1151, 2008.

[17] J. Michel, and B. Bettels, “Patent citation analysis: a closer look at the basic input data from patent search reports”, Scientometrics, pp.185-201. Vol.51. no. 1, 2001. [18] MINIPAR http://webdocs.cs.ualberta.ca/~lindek/minipar.htm , retrieved:Dec., 2015 [19] PATExpert http://cordis.europa.eu/ist/kct/patexpert_synopsis.htm , retrieved: Feb., 2016 [20] PATExpet http://www.barcelonamedia.org/report/the-european- project-patexpert-coordinated-by-bm-finishes-with-fulfilled-objectives-and-success , retrieved: Feb., 2016

[21] U. Schmoch, “International Journal of Technology Management” Evaluation of technological strategies of companies by means of MDS maps., pp.4-5.10(4-5), 1995.

[22] The Stanford Natural Language Processing Group, The Stanford Parser: A statistical parser, http://nlp.stanford.edu/software/lex-parser.shtml

[23] Y. H. Tseng, C. J. Lin, and Y. I. Lin, “Text mining for patent mapanalysis”, Information Processing & Mangement, pp.1216-1247. vol.43, issue 5, Sep. 2007.

[24] A. J.C. Trappey, F. C. Hsu, C V. Trappy,and C. I. Lin,”

Development of a patent document classification and search platform using a back-propagation network”, Expert Systems with

Applications, pp.755-765.31(4), 2006.

[25] WordNet https://wordnet.princeton.edu/, retrieved: May, 2016