A Fuzzy Rule-Based Neural Network Model for Revising Approximate Domain Knowledge

Hahn-Ming Lee, Member, IEEE, and Bing-Hui Lu

Department of Electronic Engineering, National Taiwan Institute of Technology, Taipei, Taiwan

e-mail: hmlee@et.ntit.edu.tw

Abstract

In this paper, a Knowledge-Based Fuzzy Neural Network model, named KBFNN, is proposed. The initial structure of KBFNN is constructed from approximate fuzzy rules, which may be incorrect or incomplete. These approximate fuzzy rules are then revised by neural network learning, and fuzzy rules can be extracted from the revised KBFNN. To construct KBFNN from fuzzy rules, two kinds of fuzzy neurons are proposed: S-neurons and G-neurons.

In addition, the KBFNN is capable of fuzzy inference. To process fuzzy numbers efficiently, LR-type fuzzy numbers are used. As a sample application, a Knowledge-Based Evaluator (KBE) is demonstrated. The experimental results are very encouraging.

1. Introduction

In expert systems research, knowledge acquisition is an important problem [1]. Extracting knowledge from experts and building a knowledge base is difficult. Ginsberg et al. [1] divide knowledge acquisition into two phases. In phase one, knowledge engineers extract an initial rough knowledge base from experts. This knowledge base is sometimes incomplete and inconsistent. In phase two, knowledge base refinement is applied to obtain a high-performance knowledge base. Recently, the use of artificial neural networks for knowledge refinement has become a new research topic [2, 3, 4].

However, linguistic and ambiguous information always exists in practical application problems [5]. Fortunately, fuzzy set theory provides an appropriate method for processing ambiguous and linguistic information [5]. Hence, we intend to build a fuzzy neural network model for revising an imperfect fuzzy rule base.

In this paper, a Knowledge-Based Fuzzy Neural Network model, named KBFNN, is proposed. The initial structure of KBFNN is constructed from approximate fuzzy rules. The KBFNN revises fuzzy rules by neural network learning. In addition, the KBFNN can handle fuzzy inputs and performs fuzzy inference. To reduce the computational load of fuzzy number computation, LR-type fuzzy numbers [6] are used. Also, a fuzzy rule extraction algorithm is used to extract fuzzy rules from a revised KBFNN. To convert fuzzy rules into a network, we propose two kinds of fuzzy neurons: S-neurons and G-neurons. In the revision phase, the gradient descent method is applied. The learning algorithm adjusts the rules' parameters to minimize the output error of KBFNN.

2. The LR-type Fuzzy Number

The LR-type fuzzy number representation was proposed by Dubois and Prade [6]. Its definition can be described as follows:

A fuzzy number $M$ is said to be an LR-type fuzzy number iff

$$\mu_M(x) = \begin{cases} L\left(\dfrac{m - x}{\alpha}\right), & x \le m,\ \alpha > 0 \\ R\left(\dfrac{x - m}{\beta}\right), & x \ge m,\ \beta > 0 \end{cases}$$

where $\mu_M$ is the membership function of fuzzy number $M$. $L$ and $R$ are the reference functions for the left and right sides, respectively. $m$ denotes the mean value of $M$. $\alpha$ and $\beta$ are called the left and right spreads, respectively. Therefore, an LR-type fuzzy number $M$ can be expressed as $(m, \alpha, \beta)_{LR}$. If $\alpha$ and $\beta$ are both zero, the LR-type fuzzy number indicates a crisp value.

In KBFNN, the LR-type fuzzy numbers are used on both fuzzy weight vectors and fuzzy input vectors.
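As an illustration of the definition above, the following minimal sketch evaluates the membership function of an LR-type fuzzy number. It assumes linear reference functions $L(u) = R(u) = \max(0, 1 - u)$, which give triangular fuzzy numbers; the class name `LRFuzzyNumber`, the helper names, and the sample values are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LRFuzzyNumber:
    """LR-type fuzzy number (m, alpha, beta)_LR."""
    m: float      # mean value
    alpha: float  # left spread (>= 0)
    beta: float   # right spread (>= 0)


def linear_reference(u: float) -> float:
    """Assumed reference function L(u) = R(u) = max(0, 1 - u)."""
    return max(0.0, 1.0 - u)


def membership(x: float, M: LRFuzzyNumber) -> float:
    """Membership degree of x in M; a zero spread makes that side crisp."""
    if x == M.m:
        return 1.0
    if x < M.m:
        return linear_reference((M.m - x) / M.alpha) if M.alpha > 0 else 0.0
    return linear_reference((x - M.m) / M.beta) if M.beta > 0 else 0.0


# Hypothetical fuzzy number with asymmetric spreads.
low_risk = LRFuzzyNumber(m=0.2, alpha=0.2, beta=0.3)
for x in (0.2, 0.35, 0.9):
    print(x, membership(x, low_risk))
```

With both spreads set to zero the function returns 1 only at the mean value, which matches the crisp case mentioned in the definition.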


3. The Architecture of KBFNN

As stated above, the initial structure of KBFNN is constructed from approximate fuzzy rules. To translate fuzzy rules into KBFNN, the S-neurons and G-neurons are proposed. The S-neurons deal with the rules' premises, and the G-neurons derive the conclusions of the fuzzy rules. Each input connection of an S-neuron represents one premise of a fuzzy rule. Hence, the premise part of a fuzzy rule is composed of all input connections of an S-neuron. Each conclusion variable of the rules is represented by a G-neuron. For example, assume the approximate fuzzy rules are listed as follows:


Rule 1: If PAYOFF is Under-1 and TYPE is Useful, then WORTH is Negative.

Rule 2: If PAYOFF is Over-3, then WORTH is High.

Rule 3: If TEACHABILITY is Difficult and WORTH is Negative, then SUITABILITY is Poor.

Rule 4: If RISK is Low and WORTH is High, then SUITABILITY is Good.

Fig. 1 shows the initial structure of KBFNN generated by the above fuzzy rules.
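The translation from rules to network structure can be sketched as follows. This is only an illustration of the mapping described above (one S-neuron per rule, one G-neuron per conclusion variable); the data layout and the function name `build_structure` are assumptions, not the authors' implementation.

```python
from collections import defaultdict

# Each rule: (list of (variable, term) premises, (variable, term) conclusion).
rules = [
    ([("PAYOFF", "Under-1"), ("TYPE", "Useful")], ("WORTH", "Negative")),
    ([("PAYOFF", "Over-3")], ("WORTH", "High")),
    ([("TEACHABILITY", "Difficult"), ("WORTH", "Negative")], ("SUITABILITY", "Poor")),
    ([("RISK", "Low"), ("WORTH", "High")], ("SUITABILITY", "Good")),
]


def build_structure(rules):
    """Return (s_neurons, g_neurons, inputs) for a list of fuzzy rules."""
    s_neurons = []                 # one S-neuron per rule premise part
    g_neurons = defaultdict(list)  # one G-neuron per conclusion variable
    for idx, (premises, (out_var, out_term)) in enumerate(rules, start=1):
        s_neurons.append({"rule": idx, "inputs": list(premises)})
        g_neurons[out_var].append({"from_s_neuron": idx, "weight": out_term})
    # Variables that are never concluded by a rule are external inputs.
    concluded = set(g_neurons)
    inputs = sorted({var for prem, _ in rules for var, _ in prem} - concluded)
    return s_neurons, dict(g_neurons), inputs


s_neurons, g_neurons, inputs = build_structure(rules)
print(inputs)
print(sorted(g_neurons))
```

In this sketch WORTH appears both as a G-neuron and as an input connection of the S-neurons for Rules 3 and 4, which reflects the hierarchical structure of the rule base.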

3.1 S-neurons

When an input vector is applied to the input layer, many fuzzy rules will be fired. We use S-neurons to compute the firing degrees of the fuzzy rules. The S-neurons carry out the similarity measure between the fuzzy inputs and the premise weights of the fuzzy rules. In KBFNN, fuzzy numbers are represented in LR-type form. To measure the similarity degree of LR-type fuzzy numbers, a simple formula is proposed.

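Assume we have two fuzzy numbers $\tilde{I}_i = (I_{mi}, I_{\alpha i}, I_{\beta i})$ and $\tilde{F}_i = (F_{mi}, F_{\alpha i}, F_{\beta i})$. The function $\mathrm{Similarity}(\tilde{I}_i, \tilde{F}_i)$ calculates the similarity degree of $\tilde{I}_i$ and $\tilde{F}_i$. It can be expressed as:

$$\mathrm{Similarity}(\tilde{I}_i, \tilde{F}_i) = \mathrm{Maximum}\left(1 - \frac{|I_{mi} - F_{mi}|}{OV(\tilde{I}_i, \tilde{F}_i)},\ 0\right)$$

where the output range of the function Similarity is [0,1]. $|I_{mi} - F_{mi}|$ is the distance between the mean values of $\tilde{I}_i$ and $\tilde{F}_i$. The function $OV(\tilde{I}_i, \tilde{F}_i)$ computes the overlap degree of $\tilde{I}_i$ and $\tilde{F}_i$. It is equal to the sum of the inner spreads of $\tilde{I}_i$ and $\tilde{F}_i$. That is,

$$OV(\tilde{I}_i, \tilde{F}_i) = \begin{cases} I_{\alpha i} + F_{\beta i}, & I_{mi} \ge F_{mi} \\ I_{\beta i} + F_{\alpha i}, & I_{mi} < F_{mi} \end{cases}$$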
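The similarity measure of this section can be sketched in a few lines. The sketch represents a fuzzy number as a plain tuple `(m, alpha, beta)` and reads "inner spreads" as the two spreads that face each other; the handling of a zero overlap (both facing sides crisp) is an assumption, since the text does not discuss that case.

```python
def overlap(I, F):
    """Sum of the inner (facing) spreads of I and F; tuples are (m, alpha, beta)."""
    Im, Ia, Ib = I
    Fm, Fa, Fb = F
    # If I lies to the right of F, the facing spreads are I's left and F's right.
    return Ia + Fb if Im >= Fm else Ib + Fa


def similarity(I, F):
    """Similarity degree in [0, 1] between two LR-type fuzzy numbers."""
    distance = abs(I[0] - F[0])
    ov = overlap(I, F)
    if ov == 0.0:
        # Assumption: with no overlap width, only identical means are similar.
        return 1.0 if distance == 0.0 else 0.0
    return max(1.0 - distance / ov, 0.0)


print(similarity((0.5, 0.1, 0.1), (0.5, 0.1, 0.1)))  # identical fuzzy numbers
print(similarity((0.5, 0.1, 0.1), (0.4, 0.2, 0.2)))  # partially overlapping
print(similarity((0.9, 0.1, 0.1), (0.1, 0.1, 0.1)))  # far apart
```

The measure only needs the three parameters of each fuzzy number, so no membership function has to be integrated or sampled.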

An S-neuron is shown in Fig. 2, where $\tilde{I}_i$ is the input fuzzy variable, $\tilde{F}_i$ is the fuzzy weight, and the output $SO_j$ is the average similarity degree. Using the similarity function, the activation of an S-neuron can be expressed as:

$$SO_j = \frac{1}{l} \sum_{i=1}^{l} \mathrm{Similarity}(\tilde{I}_i, \tilde{F}_i)$$

where $l$ is the number of premises in the rule represented by this S-neuron.
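A minimal sketch of the S-neuron activation, assuming the averaged similarity described above. Fuzzy numbers are tuples `(m, alpha, beta)`; the function names and the treatment of a zero overlap are assumptions made for the example.

```python
def similarity(I, F):
    """Similarity of two (m, alpha, beta) fuzzy numbers, as in Section 3.1."""
    ov = I[1] + F[2] if I[0] >= F[0] else I[2] + F[1]
    d = abs(I[0] - F[0])
    if ov == 0.0:
        return 1.0 if d == 0.0 else 0.0  # assumed handling of crisp values
    return max(1.0 - d / ov, 0.0)


def s_neuron(inputs, weights):
    """Firing degree: average similarity between inputs and premise weights."""
    if len(inputs) != len(weights) or not inputs:
        raise ValueError("need one fuzzy weight per input connection")
    return sum(similarity(I, F) for I, F in zip(inputs, weights)) / len(inputs)


# Hypothetical rule with two premises: the first matches, the second does not.
premise_weights = [(0.2, 0.1, 0.1), (0.8, 0.1, 0.1)]
fuzzy_inputs = [(0.2, 0.1, 0.1), (0.1, 0.1, 0.1)]
print(s_neuron(fuzzy_inputs, premise_weights))
```

Because the activation is an average rather than a minimum, a rule with one mismatched premise still fires partially.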

3.2 G-neurons

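The G-neuron combines the conclusions of fuzzy rules, that is, it carries out the defuzzification operation. The output range of G-neurons is limited to [0,1].

When no rule is fired, the outputs of G-neurons are forced to a default value. We use 0.5 as the default value to represent a linguistically fuzzy condition. A G-neuron is shown in Fig. 3. Assume $\widetilde{GO}_k = (GO_{mk}, GO_{\alpha k}, GO_{\beta k})$. The activation of the G-neuron is expressed as:

$$GO_{mk} = \begin{cases} \dfrac{\sum_{j=1}^{m} SO_j \, CE(\widetilde{CF}_j)}{\sum_{j=1}^{m} SO_j}, & \text{if } \sum_{j=1}^{m} SO_j \neq 0 \\ \text{default value}, & \text{otherwise} \end{cases}$$

where

$$CE(\widetilde{CF}_j) = CF_{mj} + \frac{CF_{\beta j} - CF_{\alpha j}}{3}, \qquad \widetilde{CF}_j = (CF_{mj}, CF_{\alpha j}, CF_{\beta j}).$$

$\widetilde{CF}_1, \ldots, \widetilde{CF}_m$ are the conclusion fuzzy weights of the rules. $SO_1, \ldots, SO_m$ are the outputs of the corresponding S-neurons. $GO_{mk}$ is the output mean value of G-neuron $k$. $GO_{\alpha k}$ and $GO_{\beta k}$ are the left and right spreads of $\widetilde{GO}_k$. Here, $GO_{\alpha k}$ is the distance from the leftmost point of the significant fuzzy rules to $GO_{mk}$. That is,

$$GO_{\alpha k} = GO_{mk} - \mathrm{Minimum}(CF_{mj} - CF_{\alpha j}), \quad \text{for all } SO_j > \text{threshold},\ j = 1, \ldots, m.$$

Similarly, $GO_{\beta k}$ is the distance from the rightmost point of the significant fuzzy rules to $GO_{mk}$. We have

$$GO_{\beta k} = \mathrm{Maximum}(CF_{mj} + CF_{\beta j}) - GO_{mk}, \quad \text{for all } SO_j > \text{threshold},\ j = 1, \ldots, m.$$

Here the significant fuzzy rules are the fuzzy rules whose firing degree is larger than a threshold value (e.g., 0.5).

On the output layer, the crisp output value is equal to the mean value of $\widetilde{GO}_k$. The function $CE(\widetilde{CF}_j)$ computes the centroid of fuzzy number $\widetilde{CF}_j$ [8].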
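The G-neuron computation can be sketched as below, under stated assumptions: the mean output is taken as the firing-degree-weighted average of the conclusion centroids, and the output spreads are set to zero when no rule exceeds the threshold (the text does not cover that case). Fuzzy weights are tuples `(m, alpha, beta)` and all names are hypothetical.

```python
def centroid(cf):
    """Centroid of a triangular fuzzy number (m, alpha, beta)."""
    m, alpha, beta = cf
    return m + (beta - alpha) / 3.0


def g_neuron(so, cf, default=0.5, threshold=0.5):
    """Return (GO_m, GO_alpha, GO_beta) from firing degrees and conclusions."""
    total = sum(so)
    if total == 0.0:
        return default, 0.0, 0.0  # assumption: crisp default when no rule fires
    go_m = sum(s * centroid(c) for s, c in zip(so, cf)) / total
    significant = [c for s, c in zip(so, cf) if s > threshold]
    if not significant:
        return go_m, 0.0, 0.0  # assumption: no spread without significant rules
    left = min(m - a for m, a, _ in significant)
    right = max(m + b for m, _, b in significant)
    return go_m, go_m - left, right - go_m


conclusions = [(0.2, 0.1, 0.1), (0.8, 0.1, 0.1)]  # hypothetical Poor and Good
print(g_neuron([0.0, 0.0], conclusions))  # no rule fired
print(g_neuron([1.0, 0.0], conclusions))  # only the first rule fired
print(g_neuron([0.9, 0.6], conclusions))  # both rules significant
```

When both rules are significant, the output fuzzy number spans from the leftmost to the rightmost point of their conclusions, so conflicting rules yield a wide, uncertain output.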

4. Revision Algorithm

To revise the rule base in KBFNN, a revision algorithm based on the gradient descent method is derived to adjust the fuzzy connection weights. First, we define an error function $E_p$, the error value of learning pattern $p$, in terms of $D_{pk}$ and $GO_{pk}$, the desired output and the network output of the $k$th output node for learning pattern $p$. In this phase, the values of the fuzzy weights on S-neurons and G-neurons are changed to minimize the overall error $E = \sum_p E_p$. At time $t$, the weight change $\Delta W(t)$ is defined as:

$$\Delta W(t) = -\eta \nabla E_p(t) + \alpha \Delta W(t-1)$$

where $\eta$ is the learning rate and $\alpha$ is a constant. $\nabla E_p(t)$ is the gradient of the error with respect to $W(t)$. The term $\alpha \Delta W(t-1)$ is a momentum term, which is added to improve the convergence speed. When no rule is fired, i.e., every $SO_s = 0$, the weight changes are forced to zero.
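The weight update with a momentum term can be sketched as follows. The function name, the default values of the learning rate and momentum constant, and the quadratic toy objective are all assumptions chosen for the example; only the update rule itself comes from the text.

```python
def momentum_step(weight, grad, prev_delta, eta=0.1, alpha=0.5, any_rule_fired=True):
    """One update dW(t) = -eta * grad + alpha * dW(t-1); returns (weight, delta)."""
    if not any_rule_fired:
        delta = 0.0  # the weight change is forced to zero when no rule fires
    else:
        delta = -eta * grad + alpha * prev_delta
    return weight + delta, delta


# Toy objective E(w) = (w - 0.7)^2 / 2, whose gradient is (w - 0.7).
w, delta = 0.0, 0.0
for _ in range(100):
    w, delta = momentum_step(w, grad=w - 0.7, prev_delta=delta)
print(round(w, 6))
```

The previous step `delta` has to be stored per weight, so each of the three parameters of every fuzzy weight carries its own momentum state.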

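As shown in Fig. 2 and Fig. 3, assume $\widetilde{CF}_j = (CF_{mj}, CF_{\alpha j}, CF_{\beta j})$ and $\tilde{F}_i = (F_{mi}, F_{\alpha i}, F_{\beta i})$. The change of $CF_{mj}$ is determined by $\partial E_p / \partial CF_{mj}$, and the changes of $CF_{\alpha j}$ and $CF_{\beta j}$ are obtained similarly from $\partial E_p / \partial CF_{\alpha j}$ and $\partial E_p / \partial CF_{\beta j}$.

Also, the change of $F_{mi}$ is determined by $\partial E_p / \partial F_{mi}$. If $\mathrm{Similarity}(\tilde{I}_i, \tilde{F}_i) \neq 0$, this derivative depends on the sign term

$$\mathrm{sgn}(I_{mi} - F_{mi}) = \begin{cases} 1 & \text{if } I_{mi} \ge F_{mi} \\ -1 & \text{if } I_{mi} < F_{mi} \end{cases}$$

and on $l_i$, the number of connections which are connected to the $i$th S-neuron.

If $\mathrm{Similarity}(\tilde{I}_i, \tilde{F}_i) = 0$,

$$\frac{\partial E_p}{\partial F_{mi}} = 0.$$

Similarly, we can derive $\partial E_p / \partial F_{\alpha i}$ and $\partial E_p / \partial F_{\beta i}$.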
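The explicit derivative formulas are not legible in this copy. The following is a sketch of how they follow from the chain rule, under assumptions that are not confirmed by the source: a squared-error loss, a single crisp output $GO_{mk}$ computed as the firing-degree-weighted average of the conclusion centroids, and an S-neuron $j$ that averages the similarities of its $l$ premises. The symbol $\delta_k$ is introduced here only for brevity.

```latex
% Assumed definitions (a sketch, not recovered from the paper):
%   E_p = (1/2) \sum_k (D_{pk} - GO_{mk})^2
%   GO_{mk} = \sum_j SO_j CE(\widetilde{CF}_j) / \sum_s SO_s
%   CE(\widetilde{CF}_j) = CF_{mj} + (CF_{\beta j} - CF_{\alpha j}) / 3
\begin{align*}
\delta_k &= \frac{\partial E_p}{\partial GO_{mk}} = -(D_{pk} - GO_{mk}) \\[4pt]
\frac{\partial E_p}{\partial CF_{mj}} &= \delta_k \frac{SO_j}{\sum_s SO_s}, \qquad
\frac{\partial E_p}{\partial CF_{\alpha j}} = -\frac{\delta_k}{3} \frac{SO_j}{\sum_s SO_s}, \qquad
\frac{\partial E_p}{\partial CF_{\beta j}} = \frac{\delta_k}{3} \frac{SO_j}{\sum_s SO_s} \\[4pt]
\frac{\partial GO_{mk}}{\partial SO_j} &= \frac{CE(\widetilde{CF}_j) - GO_{mk}}{\sum_s SO_s}, \qquad
\frac{\partial SO_j}{\partial F_{mi}} = \frac{\operatorname{sgn}(I_{mi} - F_{mi})}{l \cdot OV(\tilde{I}_i, \tilde{F}_i)} \\[4pt]
\frac{\partial E_p}{\partial F_{mi}} &= \delta_k \cdot
\frac{CE(\widetilde{CF}_j) - GO_{mk}}{\sum_s SO_s} \cdot
\frac{\operatorname{sgn}(I_{mi} - F_{mi})}{l \cdot OV(\tilde{I}_i, \tilde{F}_i)}
\qquad \text{when } \mathrm{Similarity}(\tilde{I}_i, \tilde{F}_i) \neq 0
\end{align*}
```

Under the same assumptions, the spreads $F_{\alpha i}$ and $F_{\beta i}$ enter only through $OV$, so their derivatives carry the factor $|I_{mi} - F_{mi}| / OV^2$ and are nonzero only for the spread that faces the input.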

In the revision phase, the above weight change formulas are applied. The revision algorithm adjusts the connection weights on S-neurons and G-neurons to minimize the output error of KBFNN. Similarly, the weight change formulas for the fuzzy weights on the hidden layers can also be derived.

5. Fuzzy Rule Extraction

For extracting rules, Towell et al. [3, 4] propose an M-of-N method, and Fu [2] uses heuristic search to generate rules. However, their methods process numerical and symbolic rules, whereas we propose a fuzzy rule extraction algorithm to extract fuzzy rules from a trained KBFNN. In a trained KBFNN, the initial knowledge has been revised, and knowledge can be extracted from the S-neurons and G-neurons. The linguistic terms of the fuzzy rules are defined before KBFNN is initialized. However, the semantic meanings of the rules in a trained KBFNN are not defined. To express uncertainty, many expert systems use a certainty factor [7]. Hence, the revised fuzzy rules can be explained by the predefined linguistic terms together with a certainty factor.

In what follows, a fuzzy rule extraction algorithm is summarized.

Fuzzy Rule Extraction Algorithm:

Stage I. Determine certainty factors of fuzzy weights:

For each fuzzy connection weight

Step 1: Use the Similarity function to calculate the similarity degree between the fuzzy connection weight and each predefined membership function.

Step 2: Select the membership function with the maximum similarity degree. The value of the maximum similarity degree is assigned as the certainty factor.

Step 3: Use the selected membership function with its certainty factor to express the fuzzy weight.

Stage II. Fuzzy rule extraction:

For each S-neuron

Step 1: For each input connection with membership function and certainty factor (CF), generate a premise in the following form:

[input term] is [membership function] (CF)

where the input term is the input variable name or a G-neuron's variable name.

Step 2: Combine the set of generated premises as the rule's premise.

Step 3: For each output connection, generate a conclusion in the following form:

[output term] is [membership function] (CF)

According to the generated premise and conclusion, build a fuzzy rule as:

If [premise 1] and [premise 2] and ... and [premise i]

Then [conclusion]
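The two stages of the extraction algorithm can be sketched as follows. The sketch assumes one shared set of predefined membership functions given as `(m, alpha, beta)` tuples; the term names and values, the trained weights, and the function names `certainty` and `extract_rule` are hypothetical.

```python
def similarity(I, F):
    """Similarity of two (m, alpha, beta) fuzzy numbers, as in Section 3.1."""
    ov = I[1] + F[2] if I[0] >= F[0] else I[2] + F[1]
    d = abs(I[0] - F[0])
    if ov == 0.0:
        return 1.0 if d == 0.0 else 0.0  # assumed handling of crisp values
    return max(1.0 - d / ov, 0.0)


def certainty(weight, terms):
    """Stage I: pick the predefined term most similar to a fuzzy weight."""
    name = max(terms, key=lambda t: similarity(weight, terms[t]))
    return name, similarity(weight, terms[name])


def extract_rule(premise_weights, conclusion, terms):
    """Stage II: build one rule string for a single S-neuron."""
    premises = []
    for var, w in premise_weights.items():
        term, cf = certainty(w, terms)
        premises.append(f"{var} is {term} ({cf:.2f})")
    out_var, out_w = conclusion
    term, cf = certainty(out_w, terms)
    return "If " + " and ".join(premises) + f" Then {out_var} is {term} ({cf:.2f})"


# Hypothetical predefined membership functions and trained fuzzy weights.
terms = {"Low": (0.0, 0.0, 0.5), "Medium": (0.5, 0.5, 0.5), "High": (1.0, 0.5, 0.0)}
trained = {"RISK": (0.1, 0.1, 0.4), "WORTH": (0.9, 0.4, 0.1)}
rule = extract_rule(trained, ("SUITABILITY", (1.0, 0.5, 0.0)), terms)
print(rule)
```

A certainty factor close to 1 indicates that the revised weight still matches a predefined linguistic term, while a low value signals that training moved the weight away from every predefined term.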

6. Experiment: Knowledge-Based Evaluator (KBE)

The KBE is an expert system that evaluates the suitability of applying an expert system to a domain. The original fuzzy rules of KBE are listed in Table 1.

Eight factors are used as inputs: PAYOFF/COST, PERCENT SOLUTION, TYPE, EMPLOYEE ACCEPTANCE, SOLUTION AVAILABLE, EASIER SOLUTION, TEACHABILITY, and RISK. Of these factors, three are used to determine the WORTH, which is a hidden conclusion. On the output layer, the output node represents the final conclusion: it determines the SUITABILITY of KBE. The output range of the output node is [0,1].

For simplicity, we use three membership functions, shown in Fig. 5(a)-(b), to represent the linguistic terms of each factor. We use the original fuzzy rules to generate a training data set. Before revision, the imperfect fuzzy rules are used to initialize KBFNN. Fig. 4 shows the structure of the KBFNN built from the imperfect fuzzy rule base.

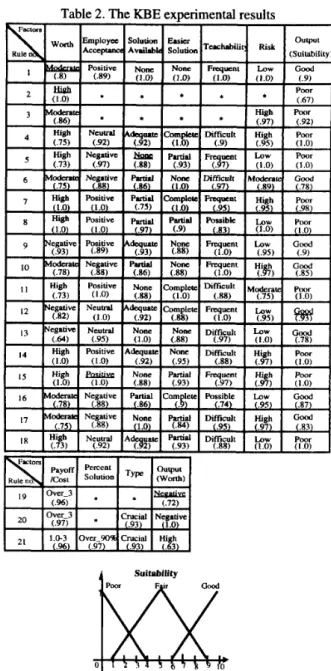

It is a hierarchical rule base. The imperfect fuzzy rules are listed in Table 1, with the incorrect linguistic terms underlined. The KBFNN is revised by applying the revision algorithm, which stops when the overall error falls below 0.01. To show the experimental results, we apply the rule extraction algorithm to the trained KBFNN. Table 2 lists the rule extraction results. A certainty factor is assigned to each membership function of the revised fuzzy rules.

From the experimental results, we find that Rule 5 is revised, and Rule 1 and Rule 12 are close to the original rules. However, Rule 2 and Rule 19 cannot be revised. In fact, Rule 2 and Rule 19 have only one premise, which means that the other inputs are don't-care terms. The combinations of these don't-care terms are infinite, and the training data set is generated at random. Hence, the training data concerning Rule 2 and Rule 19 might not be enough to revise them. On the other hand, many rules do not agree with the original fuzzy rules, but they are consistent with the training set. Because gradient descent is an optimization-based search algorithm, it tries to minimize the overall error for all training patterns. The weight change direction in each learning cycle is determined by the error of the learning patterns.

Hence, the original rules may be changed in the learning phase to minimize the error function. These changed rules can be viewed as newly produced fuzzy rules which are consistent with the training set. In practice, the completely correct fuzzy rules are always unknown.


7. Conclusion

In this paper, we proposed a Knowledge-Based Fuzzy Neural Network model (KBFNN). The KBFNN is constructed from approximate fuzzy rules. By applying the revision algorithm, the approximate fuzzy rules are revised. The fuzzy rules can be extracted from a revised KBFNN.

Also, a fuzzy rule extraction algorithm is proposed. The primitive elements of KBFNN, called S-neurons and G-neurons, are introduced. The S-neurons deal with the premises of the fuzzy rules. The G-neurons compose the conclusions of the fuzzy rules. The experimental results for the Knowledge-Based Evaluator (KBE) are very encouraging. In future work, we intend to apply KBFNN to more practical applications to show its usefulness. Moreover, the use of interval fuzzy numbers instead of triangular fuzzy numbers to extend the ability of KBFNN is also under investigation.

References:

[1] Allen Ginsberg, Sholom M. Weiss, and Peter Politakis, “Automatic Knowledge Base Refinement for Classification Systems,” Artificial Intelligence, vol. 35, pp. 197-226, 1988.

[2] Li Min Fu, “Knowledge-Based Connectionism for Revising Domain Theories,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 23, no. 1, pp. 173-182, 1993.

[3] G. G. Towell, J. W. Shavlik, and M. O. Noordewier, “Refinement of Approximate Domain Theories by Knowledge-Based Neural Networks,” Proc. AAAI-90, Boston, 1990, pp. 861-866.

[4] Geoffrey G. Towell, “Extracting Refined Rules from Knowledge-Based Neural Networks,” Machine Learning, vol. 13, pp. 71-101, 1993.

[5] H.-J. Zimmermann, Fuzzy Set Theory and Its Applications, Kluwer Academic Publishers, 1991.

[6] Didier Dubois and Henri Prade, Fuzzy Sets and Systems: Theory and Applications, Academic Press, 1980.

[7] Didier Dubois and Henri Prade, “Fuzzy Sets in Approximate Reasoning, Part 2: Logical Approaches,” Fuzzy Sets and Systems, vol. 40, pp. 203-244, 1991.

[8] Hahn-Ming Lee and Bing-Hui Lu, “FUZZY BP: A Neural Network Model with Fuzzy Inference,” to appear in the proceedings of 1994 IEEE International Conference on Neural Networks, 1994.

Fig. 1. An initial structure of KBFNN. It is constructed by S-neurons and G-neurons.

Fig. 2. An S-neuron. The S-neuron measures the similarity degrees between input variables and fuzzy connection weights.

Fig. 3. A G-neuron. The G-neuron combines the conclusions of fuzzy rules, which carries out the defuzzification operation.

Fig. 4. A structure of KBFNN built by imperfect KBE fuzzy rule base.

Table 1. The original fuzzy rules of KBE. Columns: Worth, Employee Acceptance, Solution Available, Easier Solution, Teachability, Risk, and Output (Suitability).

Table 2. The KBE experimental results.

Fig. 5(a). The membership functions of KBE.

Fig. 5(b). The membership functions of Suitability.