data as well as scalar input data. The input features of the EID problems include the radio frequency, pulse width, and pulse repetition interval of a received emitter signal. Since the values of these features vary in interval ranges in accordance with a specific radar emitter, the VNN is proposed to process interval-value data in the EID problem. In the training phase, the interval values of the three features are presented to the input nodes of VNN. A new vector-type backpropagation learning algorithm is derived from an error function defined by the VNN’s actual output and the desired output indicating the correct emitter type of the corresponding feature intervals. The algorithm can tune the weights of VNN optimally to approximate the nonlinear mapping between a given training set of feature intervals and the corresponding set of desired emitter types. After training, the VNN can be used to identify the sensed scalar-value features from a real-time received emitter signal. A number of simulations are presented to demonstrate the effectiveness and identification capability of VNN, including the two-EID problem and the multi-EID problem with and without additive noise. The simulated results show that the proposed algorithm can not only accelerate the convergence speed, but also help avoid getting stuck in bad local minima and achieve a higher classification rate.

Index Terms—Convergence, emitter identification (EID), interval value, pulse repetition interval, pulsewidth, radio frequency, supervised learning, vector neural network, vector-type backpropagation.

I. INTRODUCTION

MODERN RADARS have been widely used to detect aircraft, ships, or land vehicles, or they can be used for searching, tracking, guidance, navigation, and weather forecasting [1]. In military operation, radar is an important piece of equipment which is used to guide weaponry [2]. Hence, an electronic support measure (ESM) system such as radar warning receiver (RWR) is needed to intercept, identify, analyze, and locate the existence of emitter signals. The primary function of the RWR is to warn the crew of an immediate threat with enough information to take evasive action. To accomplish this function, a powerful emitter identification (EID) function must be involved in the RWR system. As the signal pulse density increases, further demands will be put on the EID function. Clearly, the EID function must be sophisticated enough to face the complex surroundings [3].

Manuscript received August 3, 2000; revised June 27, 2001. This work was supported by the MOE Program for Promoting Academic Excellence of Universities under Grant 91-E-FA06-4-4, R.O.C.

The authors are with the Department of Electrical and Control Engineering, National Chiao-Tung University, Hsinchu, Taiwan, R.O.C.

Publisher Item Identifier 10.1109/TAP.2002.801387.

a key man to validate and verify the analysis [4]. At present, a histogramming approach is accessed by radio frequency (RF), pulse width (PW), and pulse repetition interval (PRI) of the collected pulse descriptor words (PDWs). This approach is used in a current EID system designed for sorting and comparing tabulated emitter parameters with measured signal parameters. However, these techniques are inefficient and time-consuming for solving EID problems; they often fail to identify signals in a high-signal-density environment, especially in near real time.

For many practical problems, including pattern matching and classification, function approximation, optimization, vector quantization, data clustering and forecasting, neural networks have drawn much attention and been applied successfully in recent years [4]–[7]. Neural networks have a large number of highly interconnected nodes that usually operate in parallel and are configured in regular architectures. The massive parallelism results in the high computation rate of neural networks and makes the real-time processing of large data feasible.

In this paper, the EID problem is considered as a nonlinear mapping problem. The input features, including RF, PW, and PRI, are extracted from PDWs. Since the values of these fea-tures vary in interval ranges in accordance with a specific radar emitter, a vector neural network (VNN) is proposed to process value input data. The VNN can accept either interval-value or scalar-interval-value input and produce scalar output. The pro-posed VNN is used to construct a functional mapping from the space of the interval-value features to the space of emitter types. The input and output of the VNN are related through interval arithmetics. To train the VNN, a suitable learning algorithm should be developed. The training goal is to find a set of optimal weights in the VNN such that the trained VNN can perform the function described by a training set of if-then rules. Most existing learning methods in neural networks are designed for processing numerical data [4], [8]–[10]. Ishibuchi and his col-leagues extended a normal (scalar-type) backpropagation (BP) learning algorithm to the one that can train a feedforward neural network with fuzzy input and fuzzy output [11]. This BP al-gorithm was derived based on an error function defined by the difference of fuzzy actual output and the corresponding non-fuzzy target output through non-fuzzy arithmetics. Similar to their approach, we derive a conventional vector-type backpropaga-tion (CVTBP) algorithm for training the proposed VNN. Al-though, this algorithm can train the VNN for the if-then type training data, it has the problems of slow convergence and bad local minima as a normal scalar-type backpropagation (BP) al-gorithm does. To obtain better learning results and efficiency for the VNN, we further propose a new vector-type

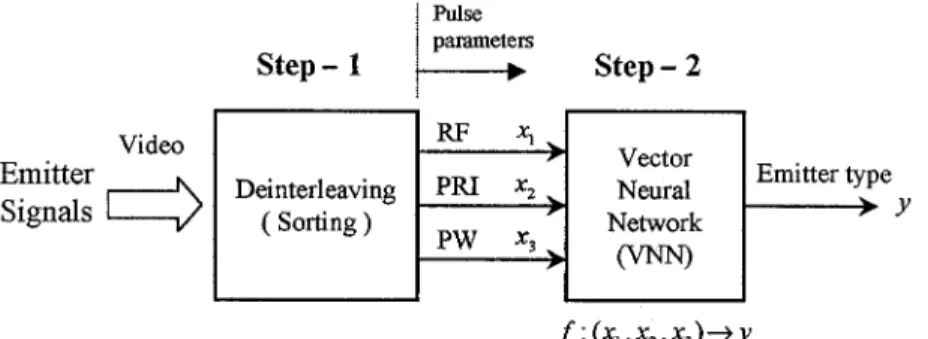

Fig. 1. The flowchart of emitter signal classification.

agation (NVTBP) algorithm derived from a different form of error function. This learning algorithm has a higher convergence rate and is not easily stuck in bad local minima. After training, the VNN can be used to identify the emitter type of the sensed scalar-value features from a real-time received emitter signal. The representation power of the VNN and the effectiveness of the NVTBP learning algorithm are demonstrated on several EID problems, including the two-EID problem and the multi-EID problem with and without additive noise.

The rest of this paper is organized as follows. Section II gives the problem formulation. In Section III, the basic structure of VNN is introduced. Section IV derives an NVTBP algorithm for training the VNN. In Section V, four examples are simulated to demonstrate the identification capability of the proposed VNN with the NVTBP algorithm for emitter signals with and without additive noise. Finally, conclusions are made in Section VI.

II. PROBLEM FORMULATION

In general, the problem of emitter signal classification is performed in a two-step process as illustrated in Fig. 1. The first step is called deinterleaving (or sorting), which sorts received pulse trains into “bins” according to the specific emitter from the composite set of pulse trains received from passive receivers in the RWR system. After deinterleaving, the second step is to infer the emitter type from each bin of received pulses to differentiate one type from another. A PDW is generated from the sorting process. Typical measurements include RF, PW, time of arrival (TOA), and PRI. Thus, a PDW describes a state vector in a multidimensional space. The primary focus of the paper will be on the problem of EID. Emitter parameters and performance are affected by the RF band in which they operate. Likewise, the range of frequency band chosen for a specific emitter is determined by the radar’s mission and specifications. The frequency information is very important for both sorting and jamming. By comparing the frequency of the received pulses, the pulse trains can be sorted out and identified for different radars [2]. When the frequency of the victim radar is known, the jammer can concentrate its energy in the desired frequency range. The parameter PW can be used to provide coarse information on the type of radar. For example, generally speaking, weapon radars have short pulses. Another parameter of interest in electronic warfare (EW) receiver measurements is the PRI. The PRI is the time difference between the leading edges of consecutive transmitted pulses and is the reciprocal of the pulse repetition frequency (PRF). The parameter varies for different radars.

In this paper, the EID problem is considered as a nonlinear mapping problem, the mapping from the space of feature vectors of emitter signals to the space of emitter types. The three parameters, RF, PRI, and PW, are used to form the feature vector in this problem. Such a nonlinear mapping function can be approximated by a suitable neural network [4], [5]. However, these parameters operate in interval ranges for a specific radar emitter; for example, RF ranges from 15.6 to 16.6 GHz, PRI ranges from 809 to 960 μs, and PW ranges from 1.8 to 3.6 μs for some specific emitter type. To endow a neural network with the interval-value processing ability, we propose a VNN that can accept either interval-value or scalar-value input and produce scalar output. In the training phase, the VNN is trained to form a functional mapping from the space of interval-value features to the space of emitter types based on $N$ samples of training pairs $(\mathbf{X}_p, \mathbf{d}_p)$ for the EID problem, where the subscript $p$ indicates the $p$th training pair, $p = 1, 2, \ldots, N$. In each training pair, each component $X_{pi}$ of $\mathbf{X}_p$ is an interval value represented by $[x_{pi}^L, x_{pi}^U]$, and $\mathbf{d}_p$ is an $m$-dimensional vector containing only one 1 to indicate the emitter type among $m$ candidates. Hence, the VNN has three input nodes with each node receiving one feature’s value, and $m$ output nodes with each node representing one emitter type. The input–output relationship of the VNN is denoted by

$$\mathbf{Y}_p = \hat{f}(\mathbf{X}_p) \tag{1}$$

where $\mathbf{Y}_p$ is an $m$-dimensional vector indicating the actual output of the VNN, and $\hat{f}$ represents the approximated function formed by the VNN. More clearly, the VNN is trained to represent the EID mapping problem in the following if-then form:

IF $x_{p1}$ is in $[x_{p1}^L, x_{p1}^U]$ and $\cdots$ and $x_{p3}$ is in $[x_{p3}^L, x_{p3}^U]$

THEN $\mathbf{x}_p$ belongs to $C_k$ (2)

where $\mathbf{x}_p = (x_{p1}, x_{p2}, x_{p3})$ is a scalar-value feature vector and $C_k$ denotes the $k$th emitter type.
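The training pair and the if-then rule (2) can be illustrated with a minimal Python sketch. The names `one_hot` and `rule_fires` and the emitter-type index are hypothetical; the interval values are the example ranges quoted in the text, with PRI and PW taken here to be in microseconds.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (lower limit, upper limit)


def one_hot(type_index: int, num_types: int) -> List[int]:
    """Desired output vector: a single 1 marks the emitter type."""
    if not 0 <= type_index < num_types:
        raise ValueError("type_index out of range")
    return [1 if k == type_index else 0 for k in range(num_types)]


def rule_fires(features: List[float], intervals: List[Interval]) -> bool:
    """IF-part of the rule: every scalar feature lies inside its interval."""
    return all(lo <= x <= hi for x, (lo, hi) in zip(features, intervals))


# One training pair: intervals for RF (GHz), PRI and PW (assumed microseconds).
# Assigning these ranges to type index 0 of 2 is purely illustrative.
x_p = [(15.6, 16.6), (809.0, 960.0), (1.8, 3.6)]
d_p = one_hot(0, 2)
```

A scalar measurement such as `[16.0, 900.0, 2.0]` satisfies the IF-part for this pair, whereas `[17.0, 900.0, 2.0]` does not because its RF value falls outside the RF interval.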

The objective of learning is to obtain an approximated model for the mapping in (1) and (2) such that the error function indicating the difference between the desired output and the actual output of the VNN, taken over all training pairs, is minimized. Two different error functions are used in this paper: one is the common root-mean-square error function, and the other is a new error function introduced in Section IV.

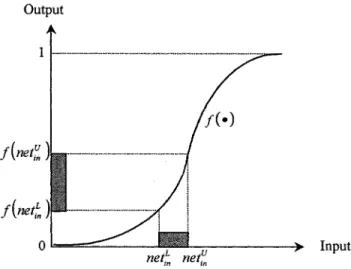

Fig. 2. Interval sigmoid function of each node in the VNN, where $[net^L, net^U]$ and $[f(net^L), f(net^U)]$ are an interval input and an interval output, respectively.

After training, the trained VNN can be used in the functional phase, the so-called testing phase. In this phase, the VNN accepts online a feature vector containing scalar values from the sensors and produces an output vector whose highest-valued element indicates the identified emitter type.
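The decision rule of the testing phase amounts to picking the output node with the largest value. The following is a minimal sketch; the function name `identify` and the type labels are hypothetical.

```python
from typing import Sequence


def identify(output: Sequence[float], type_names: Sequence[str]) -> str:
    """Return the emitter type whose output node has the highest value."""
    if len(output) != len(type_names):
        raise ValueError("one name per output node is required")
    best = max(range(len(output)), key=lambda k: output[k])
    return type_names[best]
```

For example, `identify([0.12, 0.91], ["type 1", "type 2"])` selects the second type. Ties are resolved in favor of the first maximal node, which is a choice made for this sketch only.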

III. STRUCTURE OF VNN

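In this section, we shall introduce the structure and function of the VNN, which can process interval-value as well as scalar-value data. Before doing so, let us review some operations of interval arithmetic that will be used later. Let $A = [a^L, a^U]$ and $B = [b^L, b^U]$ be intervals, where the superscripts $L$ and $U$ represent the lower limit and upper limit, respectively. Then, we have

$$A + B = [a^L, a^U] + [b^L, b^U] = [a^L + b^L,\; a^U + b^U] \tag{4}$$

and

$$k \cdot A = k \cdot [a^L, a^U] = \begin{cases} [k a^L,\; k a^U], & \text{if } k \ge 0 \\ [k a^U,\; k a^L], & \text{if } k < 0 \end{cases} \tag{5}$$

where $k$ is a real number. The activation function of a neuron can also be extended to an interval input–output relation as

$$f(Net) = f([net^L, net^U]) = [f(net^L),\; f(net^U)] \tag{6}$$

where $Net = [net^L, net^U]$ is interval-valued and $f(\cdot)$ is a sigmoid function. The sigmoid function is denoted by $f(net) = 1 / (1 + \exp(-net))$. The interval activation function defined by (6) is illustrated in Fig. 2.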
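The interval operations reviewed above can be written down directly. This is a minimal sketch in plain Python, assuming an interval is stored as a `(lower, upper)` tuple; the function names are hypothetical.

```python
import math
from typing import Tuple

Interval = Tuple[float, float]  # (lower limit, upper limit)


def interval_add(a: Interval, b: Interval) -> Interval:
    """Sum of two intervals: limits are added component-wise."""
    return (a[0] + b[0], a[1] + b[1])


def interval_scale(k: float, a: Interval) -> Interval:
    """Product of a real number and an interval; a negative k swaps the limits."""
    return (k * a[0], k * a[1]) if k >= 0 else (k * a[1], k * a[0])


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def interval_sigmoid(net: Interval) -> Interval:
    """The sigmoid is increasing, so it maps limits to limits."""
    return (sigmoid(net[0]), sigmoid(net[1]))
```

Because the sigmoid is monotonically increasing, applying it to the two limits separately is enough to obtain the exact image of the input interval.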

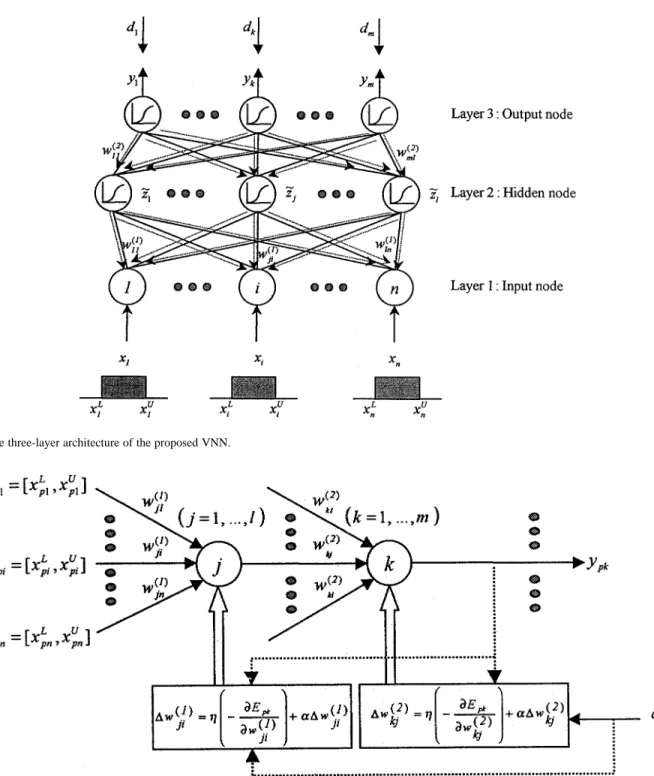

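We shall now describe the function of the VNN using the above interval arithmetic operations. The general structure of VNN is shown in Fig. 3, where the solid lines show the forward propagation of signals, and the dashed lines show the backward propagation of errors. In order to identify any $n$-dimensional interval-value vector, we employ a VNN that has $n$ input nodes, $l$ hidden nodes, and $m$ output nodes. When the interval-value input vector $\mathbf{X}_p = (X_{p1}, \ldots, X_{pn})$, with $X_{pi} = [x_{pi}^L, x_{pi}^U]$, is presented to the input layer, the input–output relation of each node is computed as follows.

Hidden nodes:

$$H_{pj} = [h_{pj}^L, h_{pj}^U] = [f(net_{pj}^L),\; f(net_{pj}^U)] \tag{7}$$

$$net_{pj}^L = \sum_{i:\, w_{ji} \ge 0} w_{ji} x_{pi}^L + \sum_{i:\, w_{ji} < 0} w_{ji} x_{pi}^U + \theta_j \tag{8}$$

$$net_{pj}^U = \sum_{i:\, w_{ji} \ge 0} w_{ji} x_{pi}^U + \sum_{i:\, w_{ji} < 0} w_{ji} x_{pi}^L + \theta_j \tag{9}$$

Output nodes:

$$Y_{pk} = [y_{pk}^L, y_{pk}^U] = [f(net_{pk}^L),\; f(net_{pk}^U)] \tag{10}$$

$$net_{pk}^L = \sum_{j:\, v_{kj} \ge 0} v_{kj} h_{pj}^L + \sum_{j:\, v_{kj} < 0} v_{kj} h_{pj}^U + \phi_k \tag{11}$$

$$net_{pk}^U = \sum_{j:\, v_{kj} \ge 0} v_{kj} h_{pj}^U + \sum_{j:\, v_{kj} < 0} v_{kj} h_{pj}^L + \phi_k \tag{12}$$

where the weights $w_{ji}$, $v_{kj}$ and the biases $\theta_j$, $\phi_k$ are real parameters and the outputs $H_{pj}$ and $Y_{pk}$ are intervals. It is noted that the VNN can also process scalar-value input data by setting $x_{pi}^L = x_{pi}^U = x_{pi}$, where $x_{pi}$ is the scalar-value input. Correspondingly, the VNN can produce scalar output, $y_{pk}^L = y_{pk}^U = y_{pk}$.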
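The forward propagation described above can be sketched in plain Python. This is an illustration under stated assumptions rather than the authors' implementation: the net input limits are formed by the interval arithmetic of Section III (a negative weight swaps the roles of the lower and upper limits), the function names are hypothetical, and the 3-2-2 weights below are arbitrary values chosen only to exercise the code.

```python
import math
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]  # (lower limit, upper limit)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def interval_layer(inputs: Sequence[Interval],
                   weights: Sequence[Sequence[float]],
                   biases: Sequence[float]) -> List[Interval]:
    """One layer with real weights and biases, interval inputs and outputs."""
    outputs = []
    for row, bias in zip(weights, biases):
        net_lo = net_hi = bias
        for w, (lo, hi) in zip(row, inputs):
            if w >= 0:
                net_lo += w * lo
                net_hi += w * hi
            else:  # a negative weight swaps the roles of the two limits
                net_lo += w * hi
                net_hi += w * lo
        outputs.append((sigmoid(net_lo), sigmoid(net_hi)))
    return outputs


def vnn_forward(x, w_hidden, b_hidden, w_out, b_out) -> List[Interval]:
    """Input layer -> hidden layer -> output layer."""
    hidden = interval_layer(x, w_hidden, b_hidden)
    return interval_layer(hidden, w_out, b_out)


# Arbitrary illustrative parameters (3 inputs, 2 hidden nodes, 2 outputs).
W_HIDDEN = [[0.5, -1.0, 0.3], [-0.7, 0.2, 0.8]]
B_HIDDEN = [0.1, -0.2]
W_OUT = [[1.0, -1.5], [-0.4, 0.6]]
B_OUT = [0.0, 0.1]

y_interval = vnn_forward([(0.2, 0.4), (0.1, 0.3), (0.5, 0.9)],
                         W_HIDDEN, B_HIDDEN, W_OUT, B_OUT)
# A scalar input is a degenerate interval with equal limits.
y_scalar = vnn_forward([(0.3, 0.3), (0.2, 0.2), (0.7, 0.7)],
                       W_HIDDEN, B_HIDDEN, W_OUT, B_OUT)
```

With a degenerate (scalar) input, both limits of every output coincide, which is how the same network serves interval-value training data and scalar-value test data. The scalar point above lies inside the input intervals, so its output lies inside the corresponding output intervals.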

IV. SUPERVISED LEARNING ALGORITHMS FOR VNN

In this section, we shall derive an NVTBP learning algorithm for the proposed VNN with interval-value input data. A normal cost function in the CVTBP algorithm, $E_p$, is first considered using the interval output $Y_{pk} = [y_{pk}^L, y_{pk}^U]$ and the corresponding desired output $d_{pk}$ for the $p$th input pattern, as

$$E_p = \sum_{k=1}^{m} E_{pk}, \qquad E_{pk} = \begin{cases} \frac{1}{2} (d_{pk} - y_{pk}^L)^2, & \text{if } d_{pk} = 1 \\ \frac{1}{2} (d_{pk} - y_{pk}^U)^2, & \text{if } d_{pk} = 0 \end{cases} \tag{13}$$

for the case of an interval-value input vector and a crisp desired output, where $m$ is the number of output nodes. However, to enhance the identification power of VNN, we propose an NVTBP algorithm, which uses a different error cost function $E_{pk}$ instead of the squares of the differences between the actual interval output and the corresponding desired output as in (13), where the subscript $pk$ represents the $p$th input pattern and $k$th output node. The new error cost function is defined as

$$E_{pk} = \begin{cases} -\ln y_{pk}^L, & \text{if } d_{pk} = 1 \\ -\ln (1 - y_{pk}^U), & \text{if } d_{pk} = 0 \end{cases} \tag{14}$$

The learning objective is to minimize the error function in (13) and (14). The weight updating rules for the VNN are illustrated in Fig. 4. To show the learning rules, we shall carry out the computation layer by layer along the dashed lines in Fig. 3, and start the derivation from the output nodes.

Fig. 3. The three-layer architecture of the proposed VNN.

Fig. 4. Illustration of backpropagation learning rule for the VNN.

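Layer 3: Using (10)–(12) to calculate $\partial E_{pk} / \partial v_{kj}$ for various values of the weights and desired output, we obtain the results summarized in (15). Here $d_{pk}$ is the desired output, $y_{pk}^L$ and $y_{pk}^U$ are the limits of the $k$th output, $h_{pj}^L$ and $h_{pj}^U$ are the limits of the $j$th hidden-node output for the $p$th pattern, and $v_{kj}$ is the weight from hidden node $j$ to output node $k$.

$$\frac{\partial E_{pk}}{\partial v_{kj}} = \begin{cases} -(d_{pk} - y_{pk}^L)\, h_{pj}^L, & \text{if } d_{pk} = 1 \text{ and } v_{kj} \ge 0 \\ -(d_{pk} - y_{pk}^L)\, h_{pj}^U, & \text{if } d_{pk} = 1 \text{ and } v_{kj} < 0 \\ -(d_{pk} - y_{pk}^U)\, h_{pj}^U, & \text{if } d_{pk} = 0 \text{ and } v_{kj} \ge 0 \\ -(d_{pk} - y_{pk}^U)\, h_{pj}^L, & \text{if } d_{pk} = 0 \text{ and } v_{kj} < 0 \end{cases} \tag{15}$$

Clearly, the value of $\partial E_{pk} / \partial v_{kj}$ is proportional to the amount of $(d_{pk} - y_{pk})$ rather than $(d_{pk} - y_{pk})\, y_{pk} (1 - y_{pk})$ as in the CVTBP learning rule. When the actual output $y_{pk}$ (representing $y_{pk}^L$ or $y_{pk}^U$) approaches the value of 1 or 0, the factor $y_{pk} (1 - y_{pk})$ makes the error signal very small. This implies that an output node can be maximally wrong without producing a strong error signal with which the connection weights could be significantly adjusted. This decelerates the search for a minimum in the error. A detailed description of quantitative analysis can be found in [12].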
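The effect discussed above can be checked numerically. The sketch below compares the two output error signals for a sigmoid output node; it assumes, as the text states, that the conventional rule carries the sigmoid-derivative factor y(1 - y) while the new rule is proportional to (d - y) alone. The function names are hypothetical.

```python
def delta_squared_error(d: float, y: float) -> float:
    """Error signal from the squared error: (d - y) times the sigmoid derivative."""
    return (d - y) * y * (1.0 - y)


def delta_log_error(d: float, y: float) -> float:
    """Error signal without the sigmoid-derivative factor."""
    return d - y


# Desired output 1, actual output moving toward the wrong extreme 0.
for y in (0.5, 0.1, 0.01):
    print(y, delta_squared_error(1.0, y), delta_log_error(1.0, y))
```

As the output approaches 0 while the target is 1, the first signal shrinks toward zero even though the node is almost maximally wrong, whereas the second stays close to 1.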

In summary, the supervised learning algorithm for the VNN is outlined in the following:

NVTBP algorithm:

Consider a 3-layer VNN with $n$ input nodes, $l$ hidden nodes, and $m$ output nodes. The connection weight $w_{ji}$ is from the $i$th node of the input layer to the $j$th node of the hidden layer, and $v_{kj}$ is from the $j$th node of the hidden layer to the $k$th node of the output layer.

Input: A set of training pairs $\{(\mathbf{X}_p, \mathbf{d}_p),\ p = 1, 2, \ldots, N\}$, where the input vectors are in interval values.

1) (Initialization): Choose $\eta > 0$ and $E_{\max}$ (maximum tolerable error). Initialize the weights to small random values. Set $E = 0$ and $p = 1$.

2) (Training loop): Apply the $p$th input pattern to the input layer.

3) (Forward propagation): Propagate the signal forward through the network from the input layer to the output layer. Use (7)–(9) to compute the net input and output of the $j$th hidden node, and use (10)–(12) to compute the net input and output of the $k$th output node.

6) (One epoch looping): Check whether the whole set of training data has been cycled once. If $p < N$, then $p = p + 1$ and go to Step 2; otherwise, go to Step 7.

7) (Total error checking): Check whether the current total error is acceptable; if $E < E_{\max}$, then terminate the training process and output the final weights; otherwise, set $E = 0$, $p = 1$, and initiate the new training epoch by going to Step 2.

END New vector-type algorithm
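The control flow of the algorithm (per-pattern weight updates, error accumulation over one epoch, and termination once the total error is acceptable) can be sketched as follows. This is a generic skeleton under stated assumptions, not the NVTBP update itself: the per-pattern error and gradient are supplied by a caller-provided function, a plain gradient-descent step stands in for the weight update, `max_epochs` is a hypothetical safety cap, and the one-weight problem at the end is a toy stand-in for the EID data.

```python
from typing import Callable, List, Sequence, Tuple

Pattern = Tuple[float, float]  # toy (input, target) pair, not an EID pattern


def train_incremental(
    weights: List[float],
    patterns: Sequence[Pattern],
    loss_and_grad: Callable[[List[float], Pattern], Tuple[float, List[float]]],
    eta: float = 0.1,          # learning rate
    e_max: float = 1e-3,       # maximum tolerable total error
    max_epochs: int = 1000,    # hypothetical safety cap, not part of the algorithm
) -> Tuple[List[float], int, float]:
    total_error = float("inf")
    for epoch in range(1, max_epochs + 1):
        total_error = 0.0                      # reset E at the start of an epoch
        for pattern in patterns:               # cycle once through the training set
            error, grad = loss_and_grad(weights, pattern)
            total_error += error               # accumulate the epoch error
            # Incremental approach: update after every single pattern.
            weights = [w - eta * g for w, g in zip(weights, grad)]
        if total_error < e_max:                # total error checking
            return weights, epoch, total_error
    return weights, max_epochs, total_error


def toy_loss_and_grad(weights: List[float], pattern: Pattern):
    """Squared error of a one-weight linear model, used only to run the loop."""
    x, target = pattern
    residual = weights[0] * x - target
    return 0.5 * residual ** 2, [residual * x]


final_w, epochs, err = train_incremental(
    [0.0], [(1.0, 2.0), (2.0, 4.0)], toy_loss_and_grad)
```

In the toy run the single weight converges toward 2, and the loop stops as soon as the accumulated epoch error drops below `e_max`. For the VNN, `loss_and_grad` would be replaced by the interval forward pass and the layer-by-layer derivatives of the chosen error function.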

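The above algorithm adopts the incremental approach in updating the weights; that is, the weights are updated for each incoming training pattern. Finally, the optimal weights $W^*$ and $V^*$ can be obtained through the training procedure and expressed by

$$W^* = \begin{bmatrix} w_{11}^* & \cdots & w_{1n}^* \\ \vdots & \ddots & \vdots \\ w_{l1}^* & \cdots & w_{ln}^* \end{bmatrix} \tag{19}$$

and

$$V^* = \begin{bmatrix} v_{11}^* & \cdots & v_{1l}^* \\ \vdots & \ddots & \vdots \\ v_{m1}^* & \cdots & v_{ml}^* \end{bmatrix} \tag{20}$$

where $n$, $l$, and $m$ are the numbers of input, hidden, and output nodes, respectively.

V. SIMULATION RESULTS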

In this section, we employ the proposed VNN trained by the NVTBP algorithm to handle the practical EID problems in

if and if and if and if and if and if and if and if and (16)

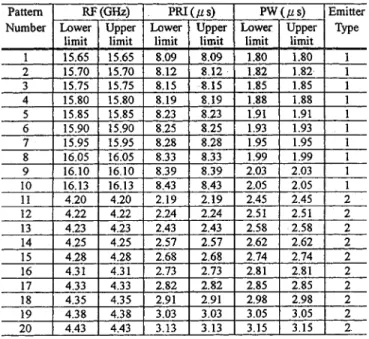

TABLE I

INPUT–OUTPUTTRAININGPAIRS FOR THETWO-EMITTERIDENTIFICATION

PROBLEM INEXPERIMENTS1AND3

TABLE II

PART OF THETESTINGSAMPLES FOR THETWO-EMITTERIDENTIFICATION

PROBLEM INEXPERIMENTS1AND3

real-life; i.e., to map input patterns to their respective emitter types. An input pattern is determined to belong to the th type, if the th output node produces a higher value than all the other output nodes when this input pattern is presented to the VNN. All reference data of the simulated emitters are given by refer-ences [3], [5], and [13]. Before the input patterns are presented to the VNN, the range of each parameter must be normalized over the following bound to increase the network’s learning ability:

RF GHz to GHz

PRI s to s

PW s to s
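A normalization of this kind can be sketched as a min-max mapping of each interval limit onto [0, 1]. Both the min-max form and the bound used below are assumptions made for illustration; the RF bound of 2 to 18 GHz is hypothetical and is not taken from the text.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (lower limit, upper limit)


def normalize_interval(value: Interval, bound: Interval) -> Interval:
    """Map both limits of an interval linearly from the bound onto [0, 1]."""
    lo, hi = bound
    if hi <= lo:
        raise ValueError("bound must have positive width")
    scale = hi - lo
    return ((value[0] - lo) / scale, (value[1] - lo) / scale)


RF_BOUND_GHZ = (2.0, 18.0)  # hypothetical normalization bound
rf_norm = normalize_interval((15.6, 16.6), RF_BOUND_GHZ)
```

Since the mapping is increasing, the lower limit stays below the upper limit after normalization, so the normalized values remain valid intervals for the VNN input.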

Two problems are examined to demonstrate the identification capability of the proposed VNN in this section, the two-EID problem and three-EID problem. The performance is compared to that of the VNN trained by CVTBP algorithm on the same training and testing data.

A. Performance Evaluation Without Measurement Error

In this section, two experiments are performed for clean input data without measurement distortion to demonstrate the identification capability of the VNN trained by the NVTBP algorithm and the CVTBP algorithm, respectively.

TABLE III
INPUT–OUTPUT TRAINING PAIRS FOR THE THREE-EMITTER IDENTIFICATION PROBLEM IN EXPERIMENTS 2 AND 4

TABLE IV
PART OF THE TESTING SAMPLES FOR THE THREE-EMITTER IDENTIFICATION PROBLEM IN EXPERIMENTS 2 AND 4

Experiment 1: For the two-EID problem, we employ a VNN with three input nodes, five hidden nodes, and two output nodes (denoted by 3-5-2 network). We set the learning rate as and momentum constant as in the NVTBP learning algorithm, and , in the CVTBP learning algorithm. The 10 input–output training pairs (five pairs for each type) are listed in Table I. In the training phase, we use these training pairs to train two VNNs using the CVTBP and NVTBP algorithms, respectively, and find a set of optimal weights for each. In the testing phase, 80 testing patterns (40 patterns for each emitter type) are randomly selected from the ranges of emitter parameters and are presented to the trained VNNs for performance testing. Part of these testing patterns is shown in Table II. Once a testing pattern is fed to the trained VNNs, the networks identify its emitter type in near real time. The testing results show that the two trained VNNs achieve high identification rates. However, the VNN trained by the NVTBP algorithm performs better than the VNN trained by the CVTBP algorithm; the former achieves an average identification rate of 99.91% and the latter 96.26%, as listed in the last row of Table V(a).

Experiment 2: In this experiment, a three-EID problem is solved by two 3-8-3 VNNs trained by the NVTBP and CVTBP algorithms, respectively. We first set the learning constant as and momentum constant as in both learning algorithms. The 15 input–output training pairs (five pairs for each type) as listed in Table III are used to train the two VNNs. After training, 120 testing patterns (40 patterns for each emitter type) are presented to the trained VNNs for performance testing. Part of these testing patterns is shown in Table IV. Again, both networks show high identification capability, where the VNN trained by the NVTBP algorithm achieves an average identification rate of 99.84% and the other VNN 91.08%, as listed in the last row of Table V(b).

In the above two experiments, the results show that the two-EID and three-EID problems can be easily handled by the VNN with the derived NVTBP algorithm and the CVTBP algorithm in real time. However, the VNN trained by the NVTBP algorithm has better identification capability than that trained by the CVTBP algorithm. These experiments are performed for clean input data without measurement distortion. The robustness of the proposed scheme in a noisy environment is tested in the following experiments.

B. Performance Evaluation With Measurement Error

In this subsection, two experiments are performed to evaluate the robustness of the VNN under measurement distortion. In these experiments, the measurement distortion is simulated by adding noise. To perform the testing at different levels of added noise, we define the error deviation level (EDL) by

EDL (21)

for emitter type , where is a small randomly generated deviation for the th input pattern.
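Noisy test patterns of this kind can be generated as sketched below. The sketch assumes one particular reading of the EDL, namely that the deviation added to each feature is at most the given percentage of that feature's value, and it draws the deviation from a uniform distribution; both choices are assumptions for illustration and should not be taken as the definition in (21). The function name `add_noise` is hypothetical.

```python
import random
from typing import List, Sequence


def add_noise(pattern: Sequence[float], edl_percent: float,
              rng: random.Random) -> List[float]:
    """Perturb each feature by a random deviation of at most edl_percent of its value."""
    frac = edl_percent / 100.0
    return [x + rng.uniform(-frac, frac) * x for x in pattern]


rng = random.Random(0)                 # fixed seed for a repeatable sketch
clean = [16.0, 900.0, 2.0]             # illustrative RF, PRI, PW values
noisy = add_noise(clean, 5.0, rng)     # 5% error deviation level
```

Sweeping `edl_percent` over a range of values and re-running the identification step on the perturbed patterns gives a robustness curve of the kind the experiments in this subsection report.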

Experiment 3: First, we consider the two-EID problem with the input data corrupted by additive noise. The noisy testing patterns are obtained by adding random noise to each testing pattern used in Experiment 1 to form the noisy testing database. The noisy testing patterns with different EDLs (from 1% to 15%) are presented to the trained 3-5-2 VNNs in Experiment 1 for performance testing. The testing results are shown in Table V(a).

Experiment 4: In this experiment, we consider the three-EID problem with the input data corrupted by additive noise. The noisy testing patterns are obtained by adding random noise to each testing pattern used in Experiment 2 to form the noisy testing database. The noisy testing patterns with different EDLs are presented to the trained 3-8-3 VNNs in Experiment 2 for performance testing. The testing results are shown in Table V(b).

The testing results in Table V indicate that, as expected, the identification ability of the VNNs decreases as the EDL increases in noisy environments. In conclusion, the proposed VNN trained by the NVTBP algorithm not only has higher identification capability, but is also less sensitive to noise than that trained by the CVTBP algorithm.

VI. CONCLUSION

In this paper, a VNN along with a new vector-type backpropagation (NVTBP) learning algorithm was proposed to solve the EID problem. The VNN can learn the teaching patterns in the form of interval-value input and scalar-value output in the training phase, and then operate with scalar-value input and scalar-value output in the testing phase by means of interval arithmetic. The main contribution of this paper is to propose an idea for integrating the processing of interval-value and scalar-value data into a single processing system and to derive an NVTBP learning algorithm for solving the practical EID problem in real time. In fact, the proposed network with the NVTBP learning algorithm can not only solve the learning problem with interval-value data, but also improve on the convergence of the CVTBP algorithm. The simulated results show that the proposed VNN achieves high identification accuracy for the emitter signals. Also, it was demonstrated that the VNN is quite insensitive to the additive error. With the results achieved in this paper, the proposed VNN may be widely applied to military applications (such as reconnaissance and threat reaction) for achieving high power of identification for emitter signals to replace the EID function in the electronics support measures (such as RWR). In this paper, we have shown that the proposed VNN can be used for identifying unambiguous emitters. In future work, we will use extra parameters of emitters such as angle of arrival and amplitude to form a new enlarged input feature vector for handling the problem of multiple ambiguous emitters, i.e., different types of emitters having similar characteristics.

REFERENCES

[1] B. Edde, Radar Principles, Technology, Applications. Englewood Cliffs, NJ: Prentice-Hall, 1993.

[2] B. Y. Tsui, Microwave Receivers With Electronic Warfare Applications. New York: Wiley, 1986.

[3] D. C. Schleher, Introduction to Electronic Warfare. New York: Artech House, 1986.

[4] G. B. Willson, “Radar classification using a neural network,” in Applications of Artificial Neural Networks. Bellingham, WA: SPIE, 1990, vol. 1294, pp. 200–210.

[5] I. Howitt, “Radar warning receiver emitter identification processing utilizing artificial neural networks,” in Applications of Artificial Neural Networks. Bellingham, WA: SPIE, 1990, vol. 1294, pp. 211–216.

[6] K. Mehrotra, C. K. Mohan, and S. Ranka, Elements of Artificial Neural Networks. Cambridge, MA: MIT Press, 1997.

[7] M. H. Hassoun, Fundamentals of Artificial Neural Networks. Cambridge, MA: MIT Press, 1995.

[8] S. K. Pal and S. Mitra, “Multilayer perceptron, fuzzy sets and classification,” IEEE Trans. Neural Networks, vol. 3, pp. 683–696, Feb. 1992.

[9] J. M. Keller and H. Tahani, “Backpropagation neural networks for fuzzy logic,” Inform. Sci., vol. 62, pp. 205–221, 1992.

[10] S. Horikawa, T. Furuhashi, and Y. Uchikawa, “On fuzzy modeling using fuzzy neural networks with the backpropagation algorithm,” IEEE Trans. Neural Networks, vol. 3, pp. 801–806, Sept. 1992.

[11] H. Ishibuchi, R. Fujioka, and H. Tanaka, “Neural networks that learn from fuzzy if-then rules,” IEEE Trans. Fuzzy Syst., vol. 1, pp. 85–97, May 1993.

[12] A. V. Ooyen and B. Nienhuis, “Improving the convergence of the back-propagation algorithm,” Neural Networks, vol. 5, pp. 465–471, 1992.

[13] M. I. Skolnik, Radar Handbook. New York: McGraw-Hill, 1991.

Ching-Sung Shieh received the B.S. and M.S. degrees in electronic engineering from Chung-Yuan Christian University, Taoyuan, Taiwan, R.O.C., in 1984 and 1986, respectively. He received the Ph.D. degree from the National Chiao-Tung University, Hsinchu, Taiwan, R.O.C., in 2001.

Since 1986, he has been with the Chung-Shan Institute of Science and Technology (CSIST), Taiwan, R.O.C., where he is currently an Associate Researcher. His current research interests include radar signal processing, control systems design, neural networks, estimation theory, and applications.

Dr. Shieh was elected an Honorary Member of the society by National Chiao-Tung University, Hsinchu, Taiwan, R.O.C., in 2002.

Chin-Teng Lin (S’88–M’91–SM’99) received the B.S. degree in control engineering from National Chiao-Tung University, Hsinchu, Taiwan, R.O.C., in 1986 and the M.S.E.E. and Ph.D. degrees in electrical engineering from Purdue University, West Lafayette, IN, in 1989 and 1992, respectively.

Since August 1992, he has been with the College of Electrical Engineering and Computer Science, National Chiao-Tung University, Hsinchu, Taiwan, R.O.C., where he is currently a Professor and Chairman of the Electrical and Control Engineering Department. From 1998 to 2000, he served as Deputy Dean of the Research and Development Office, National Chiao-Tung University. His current research interests include fuzzy systems, neural networks, intelligent control, human–machine interface, image processing, pattern recognition, video and audio (speech) processing, and intelligent transportation systems (ITSs). He is the coauthor of Neural Fuzzy Systems—A Neuro-Fuzzy Synergism to Intelligent Systems (New York: Prentice-Hall, 1996), and author of Neural Fuzzy Control Systems with Structure and Parameter Learning (Singapore: World Scientific, 1994). He has published over 60 journal papers in the areas of soft computing, neural networks, and fuzzy systems, including about 40 IEEE TRANSACTIONS papers.

Dr. Lin is a Member of Tau Beta Pi and Eta Kappa Nu. He is also a Member of the IEEE Computer, IEEE Robotics and Automation, and IEEE Systems, Man, and Cybernetics Societies. He was the Executive Council Member of the Chinese Fuzzy System Association (CFSA) from 1995 to 2001. He has been the President of CFSA since 2002, and Supervisor of the Chinese Automation Association since 1998. Since 2000, he has been the Chairman of the IEEE Robotics and Automation Society, Taipei Chapter, and the Associate Editor of the IEEE TRANSACTIONS ON SYSTEMS, MAN, AND CYBERNETICS, IEEE TRANSACTIONS ON FUZZY SYSTEMS, and Automatica. He won the Outstanding Research Award granted by the National Science Council (NSC), Taiwan, R.O.C., in 1997, the Outstanding Electrical Engineering Professor Award granted by the Chinese Institute of Electrical Engineering (CIEE) in 1997, and the Outstanding Engineering Professor Award granted by the Chinese Institute of Engineering (CIE) in 2000. He was also elected to be one of the Ten Outstanding Young Persons in Taiwan, R.O.C., in 2000.