國 立 交 通 大 學

電子工程學系 電子研究所碩士班

碩

士

論

文

IEEE802.16e OFDM 與 OFDMA 通道編

碼技術與數位訊號處理器實現之研究

Research in Channel Coding Techniques and

DSP Implementation for IEEE 802.16e

OFDM and OFDMA

研 究 生:陳勇竹

指導教授:林大衛 博士

IEEE 802.16e OFDM 與 OFDMA 通道編

碼技術與數位訊號處理器實現之研究

Research in Channel Coding Techniques and DSP

Implementation for IEEE 802.16e OFDM and OFDMA

研究生: 陳勇竹

Student: Yung-Chu Chen

指導教授: 林大衛 博士

Advisor: Dr. David W. Lin

國 立 交 通 大 學

電子工程學系

電子研究所碩士班

碩士論文

A Thesis

Submitted to Department of Electronics Engineering & Institute of Electronics College of Electrical and Computer Engineering

National Chiao Tung University in Partial Fulfillment of Requirements

for the Degree of Master of Science

in

Electronics Engineering June 2006

IEEE 802.16e OFDM 與 OFDMA 通道編

碼技術與數位訊號處理器實現之研究

研究生:陳勇竹

指導教授:林大衛 博士

國立交通大學

電子工程學系 電子研究所碩士班

摘要

IEEE802.16 無線通訊標準中,於系統的傳送端訂定了前向誤差改正編碼的機 制,藉此減低通訊頻道中雜訊失真的影響。通道編碼是本論文的重點。本篇論文的前半部份重點在於,實現 IEEE 802.16e OFDM 所訂定的前向誤差改正 編碼系統於數位訊號處理器(DSP)上,並且針對 DSP 平台的特性以及前向誤差改正編 碼的演算法進行程式的改進。在此篇論文中,我們將標準中制訂的四個必備的前向誤 差改正編碼系統,實現在以德州儀器公司所發展的 DSP 為核心的平台上。由於我們 關注的重點在於程式的執行效率,因此簡短地介紹過我們使用的前向誤差改正編碼的 演算法以及 DSP 平台的架構與軟體最佳化技巧後,我們將逐步地闡述如何在 DSP 平 台上最佳化我們的程式。最後,前向誤差改正編碼的編碼器部份,經過改進後,於 DSP 模擬器上,可以到每秒 8013K 位元的處理速度,而解碼器的部份可以達到每秒 769K 位元的處理速度。

本論文後半部份強調 IEEE 802.16e OFDMA 中低密度奇偶校驗碼複雜度的降低。 我們介紹一些分析低密度奇偶校驗碼的工具後,逐步地闡述低密度奇偶校驗碼傳統的 解碼演算法,並且介紹一些降低解碼複雜度的演算法。最後我們在加成性白色高斯通 道下模擬了各種調變與各種解碼演算法,並把模擬之結果與一些數學分析的結果做比 較。模擬的結果顯示這幾個降低複雜度的演算法和傳統的解碼表現相當接近,甚至更 好。若從性能,延遲時間,運算複雜度,延遲時間,及需要的記憶體的角度來看,我 們可以彈性的挑選適當的解碼演算法來使用,以取得之間的平衡。

Research in Channel Coding Techniques and DSP

Implementation for IEEE 802.16e OFDM and OFDMA

Student: Yung-Chu Chen

Advisor: Dr. David W. Lin

Department of Electronics Engineering

& Institute of Electronics

National Chiao Tung University

Abstract

In the IEEE 802.16e wireless communication standard, a Forward Error Correction (FEC) mechanism is presented at the transmitter side to reduce the noisy channel effect. The focus is on the channel coding.

The focus of the fist part of this thesis is DSP implementation of the FEC schemes defined in IEEE 802.16e OFDM standard and modifying FEC algorithms to match the architecture of DSP platform. We have implemented four required FEC schemes defined in the standard on the Texas Instruments (TI) TMS320C6416 digital signal processor (DSP). After a brief review of the algorithms, we describe the DSP hardware architecture and its software optimization techniques. We then explain how we optimize the FEC programs on the DSP platform step by step since the speed performance is our major concern. At the end, the improved FEC encoder can achieve a data processing rate of 8013 kbits/sec and the improved FEC decoder can achieve a processing rate of 769 kbits/sec on the TI C64xx DSP simulator.

The focus of second part is the complexity-reduction for low-density parity-check (LDPC) codes defined in IEEE 802.16e OFDMA. We describe some tools to analyze the LDPC codes. We then explain the conventional decoding algorithm, and some reduced-complexity decoding algorithms. Finally, we simulate the LDPC codes for all kinds of modulation and decoding algorithms in AWGN and compare the simulation results with analytical results. Simulation results show that these reduced-complexity decoding algorithms for LDPC codes achieve a performance very close to that of conventional algorithm, or even better. We can flexibility select the appropriate decoding scheme from performance, computational-complexity, latency, and memory-requirement perspectives.

誌謝

這篇論文能夠順利完成,最要感謝的人是我的指導教授 林大衛 博士。在這 二年的研究生涯中,不論是學業上或生活上,處處感受到老師的用心,尤其是修 改論文時相當的用心。除了豐富的學識和研究,老師親切、認真的待人處事態度, 也是我景仰、學習的目標。 另外要感謝的,是實驗室的吳俊榮學長和洪崑健學長。謝謝你們熱心地幫我 解決了許多通訊方面相關的疑問。 感謝通訊電子與訊號處理實驗室(commlab),提供了充足的軟硬體資源,讓 我在研究中不虞匱乏。感謝 91 級 eras(子瀚)、 hclin(筱晴)、長毛(建統),92 級 klinsman(昱升)、andlight(漢光)、ching(汝芩)、u8811021(思浩)、osban (承毅)、Richard Tung(景中)、gem(盈閔)、buggy(志楹)、enz(瑛姿),93 級 Gauss(鴻志、pay3)、

odom(旻弘)、allenlai(阿蛋)、ssdai(世炘)、stan(崇文少爺、林金龍)、lotus(國偉)、 shiryu(治傑)、蔡蟲(崇諺)、jackyboss(國洋)、Jerome(建志)、jerry(宜寬)等實驗室 成員,平日和我一起唸書,一起討論,也一起打混,讓我的研究生涯充滿歡樂又 有所成長。期待大家畢業之後都能有不錯的發展。 最後,要感謝的是我的家人,他們的支持讓我能夠心無旁騖的從事研究工作。 謝謝所有幫助過我、陪我走過這一段歲月的師長、同儕與家人。謝謝! 誌於 2006.7 風城交大 勇竹

Contents

1 Introduction 1

1.1 Scope of the Work . . . 1

1.2 Organization of This Thesis . . . 2

2 Overview of IEEE 802.16e FEC Specifications 4 2.1 FEC Specifications for WirelessMAN-OFDM [1] . . . 4

2.1.1 Reed-Solomon Code Specification . . . 5

2.1.2 Encoding of the Reed-Solomon Code [5] . . . 6

2.1.3 Convolutional Code Specification . . . 7

2.1.4 Encoding of Punctured Convolutional Code . . . 9

2.1.5 Interleaver . . . 9

2.1.6 Modulation . . . 11

2.2 FEC Specifications for WirelessMAN-OFDMA [7] . . . 11

2.2.1 Overview of LDPC Codes . . . 13

2.2.2 LDPC Codes Specification in IEEE 802.16e OFDMA . . . 15

2.2.4 Modulation . . . 20

2.3 Analysis of LDPC Codes in IEEE 802.16e OFDMA . . . 20

2.3.1 Girth Analysis . . . 20

2.3.2 Density Evolution . . . 23

3 DSP Implementation Environment 25 3.1 The TMS320C6416 DSP Chip . . . 25

3.1.1 TMS320C6416 Features . . . 25

3.1.2 Central Processing Unit Features [18] . . . 27

3.1.3 Cache Memory Architecture Overview [19] . . . 31

3.2 The Quixote Baseboard [20] . . . 34

3.3 TI’s Code Development Environment [21], [22] . . . 35

3.4 Code Development Flow [23] . . . 38

3.4.1 Compiler Optimization Options [23] . . . 40

4 Implementation and Optimization of IEEE 802.16e OFDM Channel Codec on DSP 43 4.1 Decoding of RS Code [5] . . . 43

4.2 Viterbi Decoding of Punctured Convolutional Code . . . 44

4.3 Decoding of Bit-Interleaved Coded Modulation . . . 45

4.4 Profile of the DSP Code . . . 47

5 Decoding Algorithms of LDPC Codes in IEEE 802.16e OFDMA 60

5.1 The Belief Propagation Algorithm [30] . . . 60

5.2 Some Reduced-Complexity Decoding Algorithms [30] . . . 62

5.2.1 BP-Based Algorithm . . . 62

5.2.2 Balanced Belief Propagation Algorithm [31] . . . 63

5.2.3 Normalized BP-Based Algorithm . . . 63

5.2.4 Offset BP-Based Algorithm . . . 64

5.3 Early Termination [33] . . . 65

5.4 Simulation Results and Analysis . . . 66

5.4.1 Determine the Number of Iterations . . . 66

5.4.2 Use of All-Zero Codewords in Simulation . . . 66

5.4.3 Performance of the IEEE 802.16e LDPC Codes under the BP Algorithm 67 5.4.4 Performance of Balanced BP Decoding Algorithm . . . 70

5.4.5 Choose Appropriate Early Termination Parameters . . . 72

5.4.6 Compare Early Termination and Parity Check Termination . . . 75 5.4.7 Performance of Some Reduced-Complexity Decoding Algorithms [30] 76

6 Conclusion and Future Work 83

List of Figures

2.1 Channel coding structure in transmitter (top path) and decoding in receiver

(bottom path). . . 4

2.2 Shortened and punctured Reed-Solomon encoder (from [5]). . . 8

2.3 Convolutional encoder of rate 1/2 (from [1]). . . . 8

2.4 BPSK, QPSK, 16-QAM, and 64-QAM constellations (from [1]). . . 12

2.5 Tanner graph of a parity check matrix (from [7]). . . 15

2.6 Base model of the rate-1/2code (from [2]). . . . 16

2.7 Base model of the rate-2/3, type A code(from [2]). . . . 17

2.8 Base model of the rate-2/3, type B code(from [2]). . . . 17

2.9 Base model of the rate-3/4, type A code(from [2]). . . . 17

2.10 Base model of the rate-3/4, type B code(from [2]). . . . 18

2.11 Base model of the rate-5/6 code(from [2]). . . . 18

2.12 QPSK, 16-QAM, and 64-QAM constellations (from [2]). . . 21

3.1 Block diagram of TMS320C6416 DSP (from [18]). . . 28

3.2 Pipeline phases of TMS320C6416 DSP (from [18]). . . 29

3.4 C64x cache memory architecture (from [19]). . . 34

3.5 Picture of the Quixote board [20]. . . 35

3.6 Block diagram of the Quixote board (from [16]). . . 36

3.7 Code development flow for TI C6000 DSP (from [23]). . . 39

4.1 Trellis diagram example of Viterbi decoder (from [24]). . . 45

4.2 The assembly codes of RS encoding (1/7). . . 49

4.3 The assembly codes of RS encoding (2/7). . . 50

4.4 The assembly codes of RS encoding (3/7). . . 51

4.5 The assembly codes of RS encoding (4/7). . . 52

4.6 The assembly codes of RS encoding (5/7). . . 53

4.7 The assembly codes of RS encoding (6/7). . . 54

4.8 The assembly codes of RS encoding (7/7). . . 55

4.9 The assembly codes of Chien search in RS decoding (1/4). . . 56

4.10 The assembly codes of Chien search in RS decoding (2/4). . . 57

4.11 The assembly codes of Chien search in RS decoding (3/4). . . 58

4.12 The assembly codes of Chien search in RS decoding (4/4). . . 59

5.1 Decoding performance at different iteration numbers. . . 67

5.2 Performance of random data versus all-zero codeword. . . 68

5.3 Performance of the rate-1/2 code, length 576 code. . . 69

5.4 Performance of the rate-1/2 code at different codeword lengths, under QPSK modulation and BP decoding. . . 70

5.5 Performance of different code rates at codeword length 576, under QPSK

modulation and BP decoding. . . 71

5.6 Conventional BP and balanced BP decoding with length 576, rate 1/2 code, and QPSK modulation. . . 72

5.7 Effects of differenct ways of early termination. . . 73

5.8 Distribution of iteration numbers for codes of different lengths. . . 74

5.9 Distribution of iteration numbers at different SNR values. . . 75

5.10 Comparison of the performance of parity check termination and early termi-nation. . . 77

5.11 Comparison of the iteration numbers of parity check termination and early termination. . . 78

5.12 Performance of different decoding algorithms with rate 1 2 and 23A, length 576. 79 5.13 Performance of different decoding algorithms with rate 2 3B and 34A, length 576. 80 5.14 Performance of different decoding algorithms with rate 3 4B and 56, length 576. 81 5.15 Performance of different decoding algorithms with rate 1 2, 23A, and 34B. . . . 82

List of Tables

2.1 Mandatory Channel Coding Schemes for each Modulation Method . . . 5

2.2 The Inner Convolutional Code with Puncturing Configuration . . . 9

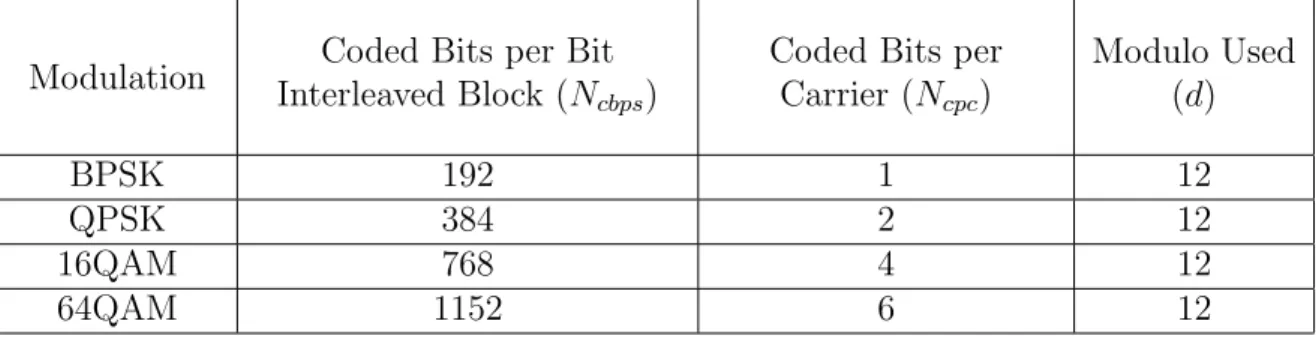

2.3 Bit Interleaved Block Sizes and Modulos . . . 10

2.4 Bit Interleaved Block Sizes and Modulos . . . 19

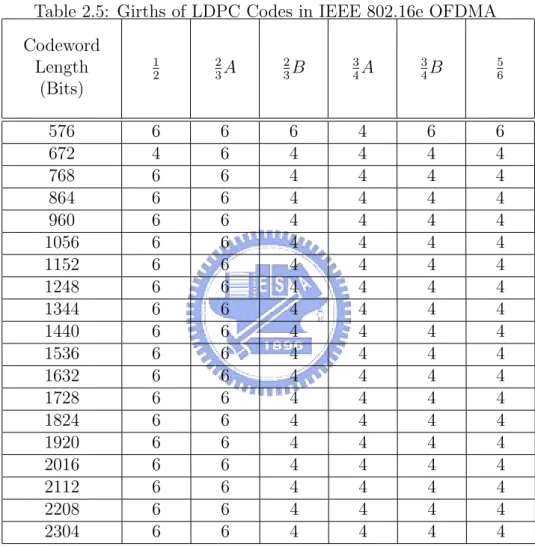

2.5 Girths of LDPC Codes in IEEE 802.16e OFDMA . . . 22

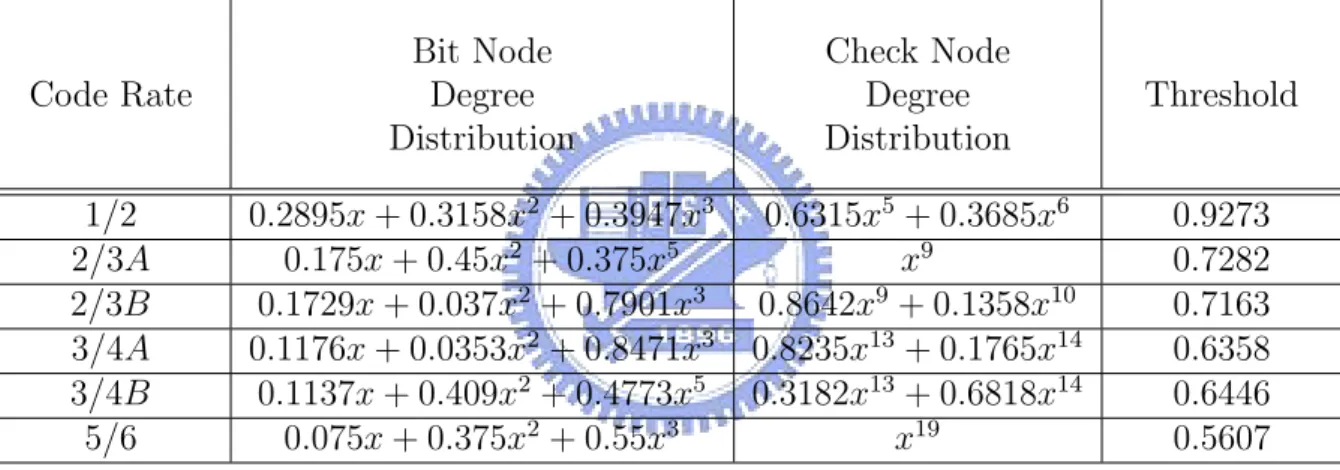

2.6 Degree Distribution and Threshold for Each Code Rate under BPSK Modu-lation, AWGN Channel, and BP Decoding . . . 24

3.1 Execution Stage Length Description for Each Instruction Type (from [18]). . 30

3.2 Functional Units and Operations Performed (from [18]) . . . 32

4.1 Profile of Channel Encoder under Different Coding and Modulation Modes . 48 4.2 Profile of Channel Decoder under Different Coding and Modulation Modes . 48 5.1 Operation Comparison for all Decoding Algorithms . . . 65

5.2 Relation between Eb N o, Girth, and Threshold . . . 71

Chapter 1

Introduction

1.1

Scope of the Work

Digital wireless transmission with multimedia contents is a trend in the next generation of consumer electronics field. Due to this demand high data transmission rate and mobility are needed. Thus the OFDM modulation technique for wireless communication has been the main stream in the recent years. IEEE has completed several standards such as IEEE 802.11 series for LANs (local area networks) and IEEE 802.16 series for MANs (metropolitan area networks) based on OFDM technique. Our study is based on the IEEE 802.16e stan-dard, which specifies the air interface of mobile broadband wireless access systems providing multiple access.

One major problem with wireless communication is that the transmission channel is not noiseless. The transmitted signals are easily interfered and distorted by different types of noise sources such as the crowd traffic, bad weather, the obstacle of buildings, etc. Mul-timedia service contains broad range of contents such as audio, video, still image, and the traditional speech. These services would exhibit untolerable quality if they cannot detect and recover the errors introduced from the noisy channel. To improve the robustness of the wireless communication against the noisy channel condition, the FEC

(forward-error-correcting coding) mechanism is usually a must to overcome the channel errors for almost every commercial communication standard, including the IEEE 802.16e.

This work studies two parts of IEEE802.16e. One is the implementation of the FEC schemes under OFDM on a digital signal processor (DSP). And the other part is mainly the complexity-reduced decoding algorithms for the FEC schemes under OFDMA for future implementation on DSP.

The second part of work is part of a group project that gears at studying and construction (using DSPs) of IEEE802.16e-based transmission system prototype for mobile broadband communication. The intended span of the group project is from August 2005 to July 2008. Our study constitutes part of the first year’s work.

The channel coding scheme in IEEE802.16e for OFDM employs concatenated coding with shortened punctured Reed-Solomon code as outer code and punctured convolutional code as inner code. In addition, bit interleaver and M-ary QAM modulation are used after the concatenated code, whereas the channel coding scheme in IEEE802.16e for OFDMA, we consider the LDPC codes, bit interleaver and M-ary QAM modulation.

1.2

Organization of This Thesis

This thesis is organized as follows.

• Chapter 2 introduces the FEC schemes of IEEE 802.16e and introduces some tools to

analyze the LDPC codes.

• Chapter 3 describes the DSP implementation environment.

• Chapter 4 introduces the DSP implementation and optimization of the OFDM FEC

• Chapter 5 presents some decoding algorithms and simulation results and compares

different decoding algorithms from simulation.

Chapter 2

Overview of IEEE 802.16e FEC

Specifications

2.1

FEC Specifications for WirelessMAN-OFDM [1]

The channel coding scheme used in IEEE 802.16e OFDM, as shown in Fig. 2.1, is a con-catenated code employing the Reed-Solomon (RS) code as the outer code and convolutional code (CC) as the inner code. Input data streams are divided into RS blocks, and then each RS block is encoded by convolutional code. The block-by-block coding makes the whole concatenated code a block-based coding scheme.

The convolutional code is used to “clean up” the channel for the RS code, which in turn corrects the burst errors emerging from the convolutional decoder. In this way, the bit error

Reed−Solomon Encoder Convolutional Encoder

Convolutional Decoder Reed−Solomon Decoder

Interleaver Modulation

De−interleaver Demodulation

Figure 2.1: Channel coding structure in transmitter (top path) and decoding in receiver (bottom path).

Table 2.1: Mandatory Channel Coding Schemes for each Modulation Method Modulation Uncoded Block Size (Bytes) Overall Code Rate Coded Block

Size (Bytes) RS Code CC CodeRate

BPSK 12 1/2 24 (12, 12, 0) 1/2 QPSK 24 1/2 48 (32, 24, 4) 2/3 QPSK 36 3/4 48 (40, 36, 2) 5/6 16QAM 48 1/2 96 (64, 48, 8) 2/3 16QAM 72 3/4 96 (80, 72, 4) 5/6 64QAM 96 2/3 144 (108, 96, 6) 3/4 64QAM 108 3/4 144 (120, 108, 6) 5/6

rate (BER) can decrease exponentially [3]. In addition, between the convolutional coder and the modulator is a bit interleaver, which protects the convolutional code from severe impact of burst errors and increases overall coding performance. This approach has been termed “bit-interleaver coded modulation (BICM)” in the literature [4].

To make the system more flexibly adaptable to the channel condition, there are seven coding-modulation schemes defined in IEEE 802.16e, as shown in Table 2.1. The different coding rates are made by shortening and puncturing the native RS code and with puncturing of the native convolutional code. The shortening and puncturing mechanisms in RS coding create different block sizes and different error-correction capability RS codes through one RS coder. The puncturing mechanism in CC coding can provide variable code rates through one CC coder.

2.1.1

Reed-Solomon Code Specification

The Reed-Solomon code in IEEE802.16e is derived from a systematic RS (N = 255, K = 239,

of data bytes before encoding, and T is number of data bytes which can be corrected. The following polynomials are used for the systematic code:

Field generator polynomial: p(x) = x8+ x4+ x3+ x2+ 1. (2.1)

Code generator polynomial: g(x) = (x + λ0)(x + λ1) · · · (x + λ2T−1), λ = 0x2, = g15x15+ g14x14+ · · · + g1x + g0. (2.2)

This code is then shortened and punctured to enable variable block sizes and variable error-correction capability. The modified RS code is denoted as (N0, K0, T0) and the generator

polynomial for RS code is given by

g(x) = (x + λ0)(x + λ1) · · · (x + λ2T−1). (2.3)

When a block is shortened to K0 data bytes, the first 239 − K0 bytes of the encoder block

are filled with 0s. When a codeword is punctured to permit T0 bytes to be corrected, only

the first 2T0 of the total 16 parity bytes are employed.

2.1.2

Encoding of the Reed-Solomon Code [5]

We use the (64,48,8) RS code to explain the encoding process. Let the information data to the (255,239,8) systematic RS be represented as:

I(x) = I238x238+ I237x237+ · · · + I37x37+ I36x36+ I35x35+ I34x34+ · · · + I1x + I0

= (I238, I237, · · · , I37, I36, I35, I34, · · · , I1, I0). (2.4)

Then the resulting codeword is given by

C(x) = I(x) · x16+ R(x)

where

R(x) = I(x) · x16 mod g(x)

= R15x15+ · · · + R5x5+ R4x4+ · · · + R1x + R0

= (R15, · · · , R5, R4, · · · , R1, R0). (2.6)

When shortened and punctured to (64,48,8), the first 191= (239 − 48) information bytes are assigned 0, i.e., I238 = I237 = · · · = I48 = 0, and the first 16= (2 · 8) bytes of R(x) will be

employed in the codeword. Now the information data of (64,48,8) will be

I0(x) = I47x47+ I46x46+ · · · + I1x + I0

= (I47, I46, · · · , I1, I0), (2.7)

and the codeword will be

C0(x) = I0(x) · x16+ R0(x)

= (I47, I46, · · · , I1, I0, R15, · · · , R1, R0) (2.8)

where

R0(x) = first 16 bytes of (I0(x) · x16 mod g(x))

= R15x15+ · · · + R1x1+ R0x0

= (R15, · · · , R1, R0). (2.9)

A systematic RS encoder is depicted in Fig. 2.2.

2.1.3

Convolutional Code Specification

Each RS block is encoded by a binary convolutional encoder, which has native rate of 1/2, a constraint length equal to 7, and the generator polynomials for the two output bits are 171OCT and 133OCT. The generator is depicted in Fig. 2.3.

first K’ ticks closed last 2T’ ticks open

first K’ ticks down last 2T’ ticks up 14 g g15 15 R 14 R Output g0 g1 g2 R2

I’(x) following by 2T’ zero

R0 R1

Figure 2.2: Shortened and punctured Reed-Solomon encoder (from [5]).

This convolutional code is then punctured to allow different rates, which is known as rate-compatible punctured convolutional coding (RCPC). A single 0x00 tail byte is appended to the end of each RS output data block to initialize the CC encoder’s memory.

2.1.4

Encoding of Punctured Convolutional Code

The convolutional code encoding structure is shown in Fig. 2.3. It consist of one input bit, six memory elements (shift registers), and two output bits generated by first performing the AND operations on the generator polynomial coefficients and the contents of the memory elements padded with the input bit, then performing the operation of XOR on each bits generated by the previous AND operation. Then we do the puncturing. The puncturing patterns and serialization order of the convolutional code in IEEE802.16e are defined in Table 2.2. In this table, “1” means a transmitted bit and “0” denotes a removed bit, whereas X and Y are in reference to Fig. 2.3. Note that the Dfree has been changed from that of the

native convolutional code with rate 1/2, which is equal to 10 [6, Chapter 8].

2.1.5

Interleaver

The encoded data bits are interleaved by a block interleaver with a block size corresponding to the number of coded bits per the specified allocation, Ncbps (see Table 2.3). The

inter-leaver is defined by a two-step permutation. The first ensures that adjacent coded bits are Table 2.2: The Inner Convolutional Code with Puncturing Configuration

Code Rates Rate 1/2 2/3 3/4 5/6 Dfree 10 6 5 4 X 1 10 101 10101 Y 1 11 110 11010 XY X1Y1 X1Y1Y2 X1Y1Y2X3 X1Y1Y2X3Y4X5

Table 2.3: Bit Interleaved Block Sizes and Modulos

Modulation Interleaved Block (NCoded Bits per Bit

cbps)

Coded Bits per Carrier (Ncpc) Modulo Used (d) BPSK 192 1 12 QPSK 384 2 12 16QAM 768 4 12 64QAM 1152 6 12

mapped onto non-adjacent carriers. The second insures that adjacent coded bits are mapped alternately onto less or more significant bits of the constellation, thus avoiding long runs of lowly reliable bits.

Let s = ceil(Ncpc/2), k be the index of the coded bit before the first permutation, m

the index after the first and before the second permutation and j the index after the second permutation, just prior to modulation mapping. The first permutation is defined by

m = (Ncbps

d ) · kmod(d)+ f loor( k

d), k = 0, 1, · · · , Ncbps− 1, (2.10)

and the second permutation by

j = s · f loor(m

s ) + (m + Ncbps− f loor( d · m Ncbps

))mod(s), m = 0, 1, · · · , Ncbps− 1. (2.11)

The de-interleaver, which performs the inverse operation, is also defined by two per-mutations. Let j be the index of the received bit before the first permutation, m be the index after the first and before the second permutation, and k be the index after the second permutation, just prior to delivering the coded bits to the convolutional decoder. The first permutation is defined by m = s · f loor(j s) + (j + f loor( d · j Ncbps ))mod(s), j = 0, 1, · · · , Ncbps− 1, (2.12)

and the second permutation by k = d · m − (Ncbps− 1) · f loor( d · m Ncbps ), m = 0, 1, · · · , Ncbps− 1. (2.13)

2.1.6

Modulation

After bit interleaving, the data bits are entered serially to the constellation mapper. BPSK, QPSK and Gray-mapped 16-QAM are supported, whereas the support of Gray-mapped 64-QAM is optional. The constellations as shown in Fig. 2.4 shall be normalized by multiplying the constellation points with the indicated factor c to achieve equal average power. The constellation-mapped data shall be subsequently modulated onto the allocated data carriers.

2.2

FEC Specifications for WirelessMAN-OFDMA [7]

One of the channel coding scheme used in IEEE802.16e OFDMA is using low-density parity-check (LDPC) code. The input data are first encoded by the LDPC encoder. The encoder output is then interleaved by the bit interleaver described in Section 2.2.3. To make the system more flexibly adaptable to the channel condition, there are three different modulation types which would be depicted in Section 2.2.4.

LDPC codes are a special case of error correcting codes that have recently been receiving a lot of attention because of their very high throughput and very good decoding performance. Inherent parallelism of the message passing decoding algorithm for LDPC codes makes them very suitable for hardware implementation. The LDPC codes can be used in any digital environment that high data rate and good error correction are important.

Gallager [8] proposed LDPC codes in the early 1960s, but his work received no attention until after the invention of turbo codes in 1993, which used the same concept of iterative decoding. In 1996, MacKay and Neal [9], [10] re-discovered LDPC codes. Chung et al. [11]

showed that a rate-1/2 LDPC code with block length of 107 in binary input additive white

Gaussian noise (AWGN) can achieve a threshold of just 0.0045 dB away from Shannon limit. LDPC codes have several advantages over turbo codes: First, the sum-product decoding algorithm for these codes has inherent parallelism which can be harvested to achieve a greater speed of decoding. Second, unlike turbo codes, decoding error is a detectable event which results in a more reliable system. Third, very low complexity decoders, such as the modified minimum-sum algorithm that closely approximate the sum-product in performance, can be designed for these codes.

Since our focus is on wireless communications, we would like to have low-power architec-tures and speed of decoding as it is needed for the IEEE 802.16e standard.

Complexity in iterative decoding has two parts. First, complexity of the computations in each iteration. Second, the number iterations. Both of these are manageable in prac-tice. There is a trade-off between the performance of the decoder, complexity and speed of decoding.

2.2.1

Overview of LDPC Codes

LDPC codes are a class of linear block codes corresponding to a sparse parity check matrix

H. The term “low-density” means that the number of 1s in each row or column of H is

small compared to the block length n. In other words, the density of 1s in the parity check matrix which consists of only 0s and 1s is very low and sparse. Given k information bits, the set of LDPC codewords c in the code space C of length n spans the null space of the parity check matrix H in which cHT = 0.

For a (Wc, Wr) LDPC code, each column of the parity check matrix H has Wc ones and

each row has Wr ones; this is called regular. If degrees per row or column are not constant,

regular ones. But irregularity results in more complex hardware and inefficiency in terms of re-usability of functional units. In the IEEE 802.16e standard irregular codes have been considered to achieve better performance. Code rate R is equal to k/n, which means that

n − k redundant bits have been added to the message so as to correct the errors.

LDPC codes can be represented effectively by a bipartite graph called a Tanner graph [12], [13]. A bi-partite graph is a graph (nodes or vertices are connected by undirected edges) whose nodes may be separated into two classes, and where edges may only be connecting two nodes not residing in the same class. The two classes of nodes in a Tanner graph are bit nodes and check nodes. The Tanner graph of a code is drawn according to the following rule: Check node fj , j = 1, · · · , n − k, is connected to bit node xi, i = 1, · · · , n, whenever

element hji in H (parity check matrix) is a one. Figure. 2.5 shows a Tanner graph made for

a simple parity check matrix H. In this graph each bit node is connected to two check nodes (bit degree = 2) and each check node has a degree of four.

Let dvmax and dcmax denote the maximum variable node and check node degree

respec-tively, and let λi and ρi represent the fraction of edges emanating from variable and check

nodes of degree and d(v) = i and d(c) = i respectively. Then we can define

λ(x) =

dXvmax i=2

λixi−1 (2.14)

as the variable node degree distribution, and

ρ(x) =

dXcmax i=2

ρixi−1 (2.15)

as the check node degree distribution.

Definition: Degree of a node is the number of branches that is connected to that node. Definition: A cycle of length l in a Tanner graph is a path comprised of l edges which

closes back on itself. The Tanner graph in Fig. 2.5 has a cycle of length four which has been shown by dashed lines.

Figure 2.5: Tanner graph of a parity check matrix (from [7]).

Definition: The girth of a Tanner graph is the minimum cycle length of the graph. The

shortest possible cycle in a bi-partite graph is clearly a length-4 cycle.

Short cycles have negative impact on the decoding performance of LDPC codes. Hence we would like to have large girths.

2.2.2

LDPC Codes Specification in IEEE 802.16e OFDMA

The LDPC codes in IEEE802.16e are a systematic linear block code, where k systematic information bits are encoded to n coded bits by adding m = n − k parity bits. The code rate is k/n.

The LDPC codes in IEEE802.16e are defined based on a parity check matrix H of size

m×n that is expanded from a binary base matrix Hb of size mb×nb, where m = z·mb and

n = z·nb. In this standard there are six different base matrices, one for the rate 1/2 code

depicted in Fig. 2.6, two different ones for two rate 2/3 codes, type A in Fig. 2.7 and type B in Fig. 2.8, two different ones for two rate 3/4 codes, type A in Fig. 2.9 and type B in Fig.

Figure 2.6: Base model of the rate-1/2code (from [2]).

2.10, one for the rate 5/6 code depicted in Fig. 2.11. In these base matrices, size nb is an

integer equal to 24 and the expansion factor z is an integer between 24 and 96 . Therefore we can compute the minimal code length as nmin = 24×24 = 576 bits and the maximum

code length as nmax = 24×96 = 2304 bits.

For codes 1

2, 23B, 34A, 34B, and 56, the shift sizes p(f, i, j) for a code size corresponding

to expansion factor zf are derived from p(i, j), which is the element at the i-th row, j-th

column in the base matrices, by scaling p(i, j) proportionally as

p(f, i, j) =

(

p(i, j), p(i, j) ≤ 0,

bp(i,j)zfzo c, p(i, j) > 0. (2.16)

For code 2

3A, the shift sizes p(f, i, j) are derived by using a modulo function as

p(f, i, j) =

(

p(i, j), p(i, j) ≤ 0, mod(p(i, j), zf), p(i, j) > 0.

(2.17)

A base matrix entry p(f, i, j) = −1 indicates a replacement with a z × z all-zero matrix and an entry p(f, i, j) ≥ 0 indicates a replacement with a z×z permutation matrix. The permutation matrix represents a circular right shift of p(f, i, j) positions. This entry p(f, i, j) = 0 indicates a z×z identity matrix.

Figure 2.7: Base model of the rate-2/3, type A code(from [2]).

Figure 2.8: Base model of the rate-2/3, type B code(from [2]).

Figure 2.10: Base model of the rate-3/4, type B code(from [2]).

Table 2.4: Bit Interleaved Block Sizes and Modulos

Modulation Coded Bits perCarrier (N

cpc) Modulo Used (d) QPSK 2 16 16QAM 4 16 64QAM 6 16

2.2.3

Interleaver

The encoded data bits are interleaved by a block interleaver with a block size corresponding to the number of coded bits per the encoded block size, Ncbps (see Table 2.3). The

inter-leaver is defined by a two-step permutation. The first ensures that adjacent coded bits are mapped onto non-adjacent carriers. The second insures that adjacent coded bits are mapped alternately onto less or more significant bits of the constellation, thus avoiding long runs of lowly reliable bits.

Let s = Ncpc/2, k be the index of the coded bit before the first permutation, m the

index after the first and before the second permutation and j the index after the second permutation, just prior to modulation mapping. The first permutation is defined by

m = (Ncbps

d ) · kmod(d)+ f loor( k

d), k = 0, 1, · · · , Ncbps− 1, (2.18)

and the second permutation by

j = s · f loor(m

s ) + (m + Ncbps− f loor( d · m Ncbps

))mod(s), m = 0, 1, · · · , Ncbps− 1. (2.19)

The de-interleaver, which performs the inverse operation, is also defined by two per-mutations. Let j be the index of the received bit before the first permutation, m be the index after the first and before the second permutation, and k be the index after the second

permutation, just prior to delivering the coded bits to the convolutional decoder. The first permutation is defined by m = s · f loor(j s) + (j + f loor( d · j Ncbps ))mod(s), j = 0, 1, · · · , Ncbps− 1, (2.20)

and the second permutation by

k = d · m − (Ncbps− 1) · f loor(

d · m Ncbps

), m = 0, 1, · · · , Ncbps− 1. (2.21)

2.2.4

Modulation

After bit interleaving, the data bits are entered serially to the constellation mapper. QPSK and Gray-mapped 16-QAM are supported, whereas the support of Gray-mapped 64-QAM is optional. The constellations as shown in Fig. 2.12 shall be normalized by multiplying the constellation points with the indicated factor c to achieve equal average power. The constellation-mapped data shall be subsequently modulated onto the allocated data carriers.

2.3

Analysis of LDPC Codes in IEEE 802.16e OFDMA

2.3.1

Girth Analysis

In this section, we compute the girth of LDPC in IEEE 802.16e for all kinds of code rate. Hence we can broadbrush estimate the specific code performance. The result is listed in Table 2.5.

From Table 2.5, we can roughly estimate the performance of code rate 2

3A is a little better

than 2

3B under the same condition of codeword length, modulation, channel, and decoding

algorithm, because of the longer average girth. While code rate 3

4B would perform slightly

better than rate 3

Table 2.5: Girths of LDPC Codes in IEEE 802.16e OFDMA Codeword Length (Bits) 1 2 23A 23B 34A 34B 56 576 6 6 6 4 6 6 672 4 6 4 4 4 4 768 6 6 4 4 4 4 864 6 6 4 4 4 4 960 6 6 4 4 4 4 1056 6 6 4 4 4 4 1152 6 6 4 4 4 4 1248 6 6 4 4 4 4 1344 6 6 4 4 4 4 1440 6 6 4 4 4 4 1536 6 6 4 4 4 4 1632 6 6 4 4 4 4 1728 6 6 4 4 4 4 1824 6 6 4 4 4 4 1920 6 6 4 4 4 4 2016 6 6 4 4 4 4 2112 6 6 4 4 4 4 2208 6 6 4 4 4 4 2304 6 6 4 4 4 4

2.3.2

Density Evolution

For many channels and iterative decoders of interest, LDPC codes exhibit a threshold phe-nomenon [14]: as the block length tends to infinity, an arbitrarily small bit error probability can be achieved if the noise level is smaller than a certain threshold. For a noise level above this threshold, on the other hand, the probability of bit error is larger than a positive constant.

Density evolution provides an efficient way to determine the thresholds of LDPC codes ensemble by tracking the probability density functions (pdf’s) of the message in the Tanner graph of an LDPC code. Since there is no theoretical guideline. for the design of LDPC codes, it is meaningful to optimize the code by density evolution [15].

Without loss of generality, assume that the all-0 codeword is transmitted. Firstly, choose a value for the threshold parameter δ to start density evolution. If the pdf of all bit messages tend to infinity after enough iterations, such a value of δ is within the threshold. Then, increase the value δ until the density evolution cannot succeed, that is, the pdf cannot tend to infinity after enough iterations. The maximum value of δ found is the threshold of the irregular LDPC codes with degree distribution pair (λ, ρ).

In Table 2.6, we list the degree distribution pairs(λ, ρ) and the thresholds of the LDPC codes in IEEE 802.16e OFDMA. Here we assume BPSK modulation, belief propagation (BP) decoding which will be introduced in Chapter 5, and AWGN channel.

From these threshold values, not only 2

3A is larger than 23B but 34B is larger than 34A,

this result is the same as what we broadbrush estimate the specific code performance by using the girth analysis.

Table 2.6: Degree Distribution and Threshold for Each Code Rate under BPSK Modulation, AWGN Channel, and BP Decoding

Code Rate Bit Node Degree Distribution Check Node Degree Distribution Threshold 1/2 0.2895x + 0.3158x2+ 0.3947x3 0.6315x5+ 0.3685x6 0.9273 2/3A 0.175x + 0.45x2+ 0.375x5 x9 0.7282 2/3B 0.1729x + 0.037x2+ 0.7901x3 0.8642x9+ 0.1358x10 0.7163 3/4A 0.1176x + 0.0353x2+ 0.8471x3 0.8235x13+ 0.1765x14 0.6358 3/4B 0.1137x + 0.409x2+ 0.4773x5 0.3182x13+ 0.6818x14 0.6446 5/6 0.075x + 0.375x2+ 0.55x3 x19 0.5607

Chapter 3

DSP Implementation Environment

We conduct a DSP (digital signal processor) implementation for the channel coding scheme of OFDM in our work. In this we employ the Quixote DSP-FPGA baseboard made by Innovative Integration (II), on which the DSP is Texas Instruments’s (TI) TMS320C6416. Because of our purely software implementation on the DSP, discussion in this chapter will mainly focus on the DSP chip and the associated system development environment.

3.1

The TMS320C6416 DSP Chip

The following text is mainly taken from references [16] and [17].

3.1.1

TMS320C6416 Features

The TMS320C64x DSPs are the highest-performance fixed-point DSP generation on the TMS320C6000 DSP platform. The TMS320C64x device is based on the second-generation high-performance, very-long-instruction-word (VLIW) architecture developed by TI. The C6416 device has two high-performance embedded coprocessors, Viterbi Decoder Coproces-sor (VCP) and Turbo Decoder CoprocesCoproces-sor (TCP) that can significantly speed up channel-decoding operations on-chip, but we do not make use of these coprocessors in the present

work.

The C64x core CPU consists of 64 general-purpose 32-bits registers and 8 function units. Features of C6000 devices include:

• The eight functional units include two multipliers and six arithmetic units:

– Execute up to eight instructions per cycle.

– Allow designers to develop highly effective RISC-like code for fast development time.

• Instruction packing:

– Gives code size equivalence for eight instructions executed serially or in parallel. – Reduces code size, program fetches, and power consumption.

• Conditional execution of all instructions:

– Reduces costly branching.

– Increases parallelism for higher sustained performance.

• Efficient code execution on independent functional units:

– Efficient C compiler on DSP benchmark suite.

– Assembly optimizer for fast development and improved parallelization.

• 8/16/32-bit data support, providing efficient memory support for a variety of

applica-tions.

• 40-bit arithmetic options add extra precision for applications requiring it. • Saturation and normalization provide support for key arithmetic operations.

• Field manipulation and instruction extract, set, clear, and bit counting support

com-mon operation found in control and data manipulation applications. The C64x additional features include:

• Each multiplier can perform two 16×16 bits or four 8×8 bits multiplies every clock

cycle.

• Quad 8-bit and dual 16-bit instruction set extensions with data flow support. • Support for non-aligned 32-bit (word) and 64-bit (double word) memory accesses. • Special communication-specific instructions have been added to address common

op-erations in error-correcting codes.

• Bit count and rotate hardware extends support for bit-level algorithms.

3.1.2

Central Processing Unit Features [18]

The block diagram of C6416 DSP is shown in Fig. 3.1. The DSP contains: program fetch unit, instruction dispatch unit, instruction decode unit, two data paths which each has four functional units, 64 32-bit registers, control registers, control logic, and logic for test, emulation, and interrupt logic.

The TMS320C64x DSP pipeline provides flexibility to simplify programming and improve performance. The pipeline can dispatch eight parallel instructions every cycle. The follow-ing two factors provide this flexibility: Control of the pipeline is simplified by eliminatfollow-ing pipeline interlocks, and the other is increasing pipelining to eliminate traditional architec-tural bottlenecks in program fetch, data access, and multiply operations. This provides single cycle throughput.

Figure 3.2: Pipeline phases of TMS320C6416 DSP (from [18]).

The pipeline phases are divided into three stages: fetch, decode, and execute. All in-structions in the C62x/C64x instruction set flow through the fetch, decode, and execute stages of the pipeline. The fetch stage of the pipeline has four phases for all instructions, and the decode stage has two phases for all instructions. The execute stage of the pipeline requires a varying number of phases, depending on the type of instruction. The stages of the C62x/C64x pipeline are shown in Fig. 3.2.

Reference [18] contains detailed information regarding the fetch and decode phases. The pipeline operation of the C62x/C64x instructions can be categorized into seven instruction types. Six of these are shown in Table 3.1, which gives a mapping of operations occurring in each execution phase for the different instruction types. The delay slots associated with each instruction type are listed in the bottom row.

The execution of instructions can be defined in terms of delay slots. A delay slot is a CPU cycle that occurs after the first execution phase (E1) of an instruction. Results from instructions with delay slots are not available until the end of the last delay slot. For example, a multiply instruction has one delay slot, which means that one CPU cycle elapses before the results of the multiply are available for use by a subsequent instruction. However, results are available from other instructions finishing execution during the same CPU cycle in which the multiply is in a delay slot.

four; each functional unit in one data path is almost identical to the corresponding unit in the other data path. The functional units are described in Table 3.2.

Besides being able to perform 32-bit operations, the C64x also contains many 8-bit and 16-bit extensions to the instruction set. For example, the MPYU4 instruction performs four 8×8 unsigned multiplies with a single instruction on a .M unit. The ADD4 instruction performs four 8-bit additions with a single instruction on a .L unit.

The data line in the CPU supports 32-bit operands, long (40-bit) and double word (64-bit) operands. Each functional unit has its own 32-bit write port into a general-purpose register file (see Fig. 3.3). All units ending in 1 (for example, .L1) write to register file A, and all units ending in 2 write to register file B. Each functional unit has two 32-bit read ports for source operands src1 and src2. Four units (.L1, .L2, .S1, and .S2) have an extra 8-bit-wide port for 40-bit long writes, as well as an 8-bit input for 40-bit long reads. Because each unit has its own 32-bit write port, when performing 32-bit operations all eight units can be used in parallel every cycle.

3.1.3

Cache Memory Architecture Overview [19]

The C64x memory architecture consists of a two-level internal cache-based memory archi-tecture plus external memory. Level 1 cache is split into program (L1P) and data (L1D) caches. The C64x memory architecture is shown in Fig. 3.4. On C64x devices, each L1 cache is 16 kB. All caches and data paths are automatically managed by cache controller. Level 1 cache is accessed by the CPU without stalls. Level 2 cache is configurable and can be split into L2 SRAM (addressable on-chip memory) and L2 cache for caching external memory locations. On a C6416 DSP, the size of L2 cache is 1 MB, and the external memory on Quixote baseboard is 32 MB. More detailed introduction to the cache system can be found in [19].

Table 3.2: Functional Units and Operations Performed (from [18]) Function Unit Operations

.L unit (.L1, .L2) 32/40-bit arithmetic and compare operations 32-bit logical operations

Leftmost 1 or 0 counting for 32 bits Normalization count for 32 and 40 bits Byte shifts

Data packing/unpacking 5-bit constant generation

Dual 16-bit arithmetic operations Quad 8-bit arithmetic operations Dual 16-bit min/max operations Quad 8-bit min/max operations .S unit (.S1, .S2) 32-bit arithmetic operations

32/40-bit shifts and 32-bit bit-field operations 32-bit logical operations

Branches

Constant generation

Register transfers to/from control register file (.S2 only) Byte shifts

Data packing/unpacking

Dual 16-bit compare operations Quad 8-bit compare operations Dual 16-bit shift operations

Dual 16-bit saturated arithmetic operations Quad 8-bit saturated arithmetic operations .M unit (.M1, .M2) 16 x 16 multiply operations

16 x 32 multiply operations Quad 8 x 8 multiply operations Dual 16 x 16 multiply operations

Dual 16 x 16 multiply with add/subtract operations Quad 8 x 8 multiply with add operation

Bit expansion

Bit interleaving/de-interleaving Variable shift operations and rotation Galois Field Multiply

.D unit (.D1, .D2) 32-bit add, subtract, linear and circular address calculation Loads and stores with 5-bit constant offset

Loads and stores with 15-bit constant offset (.D2 only) Load and store double words with 5-bit constant Load and store non-aligned words and double words 5-bit constant generation

Figure 3.4: C64x cache memory architecture (from [19]).

3.2

The Quixote Baseboard [20]

The DSP-FPGA embedded card used in our implementation is Innovative Integration’s (II) Quixote baseboard, which is illustrated in Fig. 3.5. Quixote is one of II’s Velocia-family baseboards for various applications requiring high-speed computation. Figure. 3.6 shows a block diagram of the Quixote board. It combines a 600 MHz C6416 32-bit fixed-point DSP with a Virtex-II FPGA, and some system-level peripherals. The FPGAs on our boards are the six-million-gate version. The TI C6416 DSP operating at 600 MHz offers a processing power of 4800 MIPS. Some detailed features of the board are as follows:

• TMS320C6416 processor running at frequency up to 600 MHz. • Onboard 32 MB SDRAM for the DSP chip.

• A 32/64 bits PCI bus host interface with direct host memory access capability for

Figure 3.5: Picture of the Quixote board [20].

3.3

TI’s Code Development Environment [21], [22]

TI provides a useful GUI development interface to DSP users for developing and debug-ging their projects: Code Composer Studio (CCS). The CCS development tools are a key element of the DSP software and development tools from Texas Instruments. The fully integrated development environment includes real-time analysis capabilities, easy to use debugger, C/C++ compiler, assembler, linker, editor, visual project manager, simulators, XDS560 and XDS510 emulation drivers and DSP/BIOS support.

Some of CCS’s fully integrated host tools include:

• Simulators for full devices, CPU only and CPU plus memory for optimal performance. • Integrated visual project manager with source control interface, multi-project support

and the ability to handle thousands of project files.

• Source code debugger common interface for both simulator and emulator targets:

– C/C++/assembly language support. – Simple breakpoints.

– Advanced watch window. – Symbol browser.

• DSP/BIOS host tooling support (configure, real-time analysis and debug). • Data transfer for real time data exchange between host and target.

• Profiler to understand code performance.

CCS also delivers foundation software consisting of:

• DSP/BIOS kernel for the TMS320C6000 DSPs:

– Pre-emptive multi-threading. – Interthread communication. – Interupt Handling.

• TMS320 DSP Algorithm Standard to enable software reuse.

• Chip Support Libraries (CSL) to simplify device configuration. CSL provides

C-program functions to configure and control on-chip peripherals.

• DSP libraries for optimum DSP functionality. The libraries include many C-callable,

assembly-optimized, general-purpose signal-processing and image/video processing rou-tines. These routines are typically used in computationally intensive real-time appli-cations where optimal execution speed is critical.

The DSP Library (DSPLIB) for TMS320C64x includes routines that are organized into seven groups:

• Correlation. • FFT.

• Filtering and convolution. • Math.

• Matrix functions. • Miscellaneous.

3.4

Code Development Flow [23]

The recommended code development flow involves utilizing the C6000 code generation tools to aid in optimization rather than forcing the programmer to code by hand in assembly. These advantages allow the compiler to do all the laborious work of instruction selection, parallelizing, pipelining, and register allocation. These features simplify the maintenance of the code, as everything resides in a C framework that is simple to maintain, support, and upgrade.

The recommended code development flow for the C6000 involves the phases described in Fig. 3.7. The tutorial section of the Programmers Guide [23] focuses on phases 1–2 and the Guide also instructs the programmer when to go to the tuning stage of phase 3. What is learned is the importance of giving the compiler enough information to fully maximize its potential. An added advantage is that this compiler provides direct feedback on the entire program’s high MIPS areas (loops). Based on this feedback, there are some very simple steps the programmer can take to pass complete and better information to the compiler allowing the programmer a quicker start in maximizing compiler performance. The following items list the goal for each phase in the 3-phase software development flow shown in Fig. 3.7.

• Developing C code (phase 1) without any knowledge of the C6000. Use the C6000

profiling tools to identify any inefficient areas that we might have in the C code. To improve the performance of the code, proceed to phase 2.

• Use techniques described in [23] to improve the C code. Use the C6000 profiling tools

to check its performance. If the code is still not as efficient as we would like it to be, proceed to phase 3.

• Extract the time-critical areas from the C code and rewrite the code in linear assembly.

We can use the assembly optimizer to optimize this code.

TI provides high performance C program optimization tools, and they do not suggest the programmer to code by hand in assembly. In this thesis, the development flow is stopped at phase 2. We do not optimize the code by writing linear assembly. Coding the program in high level language keeps the flexibility of porting to other platforms.

3.4.1

Compiler Optimization Options [23]

The compiler supports several options to optimize the code. The compiler options can be used to optimize code size or execution performance. Our primary concern in this work is the execution performance. The easiest way to invoke optimization is to use the cl6x shell program, specifying the -on option on the cl6x command line, where n denotes the level of optimization (0, 1, 2, 3) which controls the type and degree of optimization:

• -o0:

– Performs control-flow-graph simplification. – Allocates variables to registers.

– Eliminates unused code.

– Simplifies expressions and statements. – Expands calls to functions declared inline.

• -o1. Performs all -o0 optimization, and:

– Performs local copy/constant propagation. – Removes unused assignments.

– Eliminates local common expressions.

• -o2. Performs all -o1 optimizations, and:

– Performs software pipelining. – Performs loop optimizations.

– Eliminates global common subexpressions. – Eliminates global unused assignments.

– Converts array references in loops to incremented pointer form. – Performs loop unrolling.

• -o3. Performs all -o2 optimizations, and:

– Removes all functions that are never called.

– Simplifies functions with return values that are never used. – Inline calls to small functions.

– Reorders function declarations so that the attributes of called functions are known when the caller is optimized.

– Propagates arguments into function bodies when all calls pass the same value in the same argument position.

Chapter 4

Implementation and Optimization of

IEEE 802.16e OFDM Channel Codec

on DSP

In this chapter, we discuss the decoding algorithms of the IEEE 8021.16e OFDM channel codec on DSP. Our DSP is a TI TMS320C6416 chip, housed on II’s Quixote baseboard. We base our implementation on modification of the code of Lee [24] for IEEE 802.16a OFDMA to the specifications of IEEE 802.16e OFDM. We present the performance results obtained from the profiler generated by the built-in profiler in TI’s Code Composer Studio (CCS) tool set.

4.1

Decoding of RS Code [5]

The Berlekamp-Massey (BM) algorithm is a common decoding algorithm for RS codes [25]. It includes four steps:

1. Compute the syndrome value.

2. Compute the error location polynomial. 3. Compute the error location.

4. Compute the error value.

Under the unable-to-correct condition (e.g., errors number greater than T0), the received

word will not be dealt with.

The shortening does not affect the RS decoder because the RS code in IEEE802.16e is a systematic code and the initial zero bytes will not affect each step of the decoder. As for the puncturing, the punctured bytes can be viewed as erasures. Thus the decoder we adopt should be able to correct erasures [25].

4.2

Viterbi Decoding of Punctured Convolutional Code

Viterbi algorithm is the most well-known technique for the convolutional decoding process. The operation of Viterbi algorithm can be explained by the trellis diagram, which is provided by the CC encoder structure. The concept of the trellis diagram is based on the state transition diagram. Hence, we can expand the state transition diagram to a trellis diagram. The trellis diagram is consistent with all the features of finite state machine and can be regarded as the time axis expansion of the finite state machine. A simple trellis diagram is shown in Fig. 4.1 as an example. In this trellis diagram, the upper outgoing branch for each state corresponds to an input of 0, whereas the lower outgoing branch corresponds to an input of 1. Each state has two incoming and two outgoing branches. Each information sequence, uniquely encoded into an encoded sequence, corresponds to a unique path in the trellis. Therefore, for a given path through the trellis, we can obtain the corresponding information sequence by reading off the input labels on all the branches that make up the path. The procedure is called “traceback”.

Viterbi algorithm operates by computing the branch metric for each path at each stage of the trellis. The metric is calculated and stored as a partial metric for each branch as the

Figure 4.1: Trellis diagram example of Viterbi decoder (from [24]).

trellis is traversed. Since there are two paths merging at each node, the path with a smaller metric is selected while the other is discarded. This is based on the assumption that the optimum path must contain the sub-optimum survivor path. The survivor path for a given state at time instance n is the sequence of symbols closest to the received sequence up to time

n. For the case of punctured convolutional code, the metrics associated with the punctured

bits are simply disregarded in the metric calculation stage. The overall operation discussed above is the computational core of Viterbi algorithm and is the so-called add-compare-select (ACS) operation.

4.3

Decoding of Bit-Interleaved Coded Modulation

Similar techniques as that discussed in [5] and [27] can be used to demodulate and decode the received signal. The following gives a very brief introduction.

hard-decision is adopted, the metric used in decoding is the Hamming distance, which counts the bit errors, between each trellis path and the hard-limited output of the demodulator to find the path with least errors. The coding gain is worse by 2 to 3 dB compared to soft-decision decoding. Hence, soft-soft-decision is considered in this work.

For optimal soft-decision Viterbi decoding in AWGN channel, the metric should be the Euclidean distance between each trellis path and the soft-output of the demodulator. The problem now is that there is a bit interleaver between the convolutional encoder and the modulator in the transmitter. Therefore, the optimal decoder should be based on the super-trellis combining the convolutional code, the interleaver, and the QAM modulator, but this is too complex to be practical. Moreover, the puncturing mechanism adds further complexity to the super-trellis structure. Thus, we consider a suboptimal decoder based on bit-by-bit metric computation.

Consider 16QAM first. We denote the in-phase bits by bI,1 and bI,2, and the quadrature

bits by bQ,1 and bQ,2, which are the four bits corresponding to the transmitted 16QAM

symbol s. The soft-decision metric for bI,k is evaluated simply from yI[i] as

DI,1 =

−yI(i), |yI(i)| ≤ 2

−2(yI(i) − 1), yI(i) > 2

−2(yI(i) + 1), yI(i) < 2

∼= −yI(i), (4.1)

DI,2 = |yI(i)| − 2. (4.2)

where yI(i) is the real part of the received signal after channel compensation. The

evaluation of DQ,k for the two quadrature bits are the same as the evaluation of DI,1 and

DI,2 with yI(i) replaced by yQ(i), where yQ(i) is the imaginary part of the received signal

after channel compensation.

We also compute the log-likelihood ratio (LLR) of each received LDPC codeword bit by the above method [28].

Similar observations hold for QPSK and 64-QAM constellations. For QPSK, DI = −yI[i], (4.3) DQ = −yQ[i]. (4.4) For 64-QAM, DI,1 =

−yI[i], |yI[i]| ≤ 2

−2(yI[i] − 1), 2 < yI[i] ≤ 4

−3(yI[i] − 2), 4 < yI[i] ≤ 6

−4(yI[i] − 3), yI[i] > 6

−2(yI[i] + 1), −4 ≤ yI[i] < −2

−3(yI[i] + 2), −6 ≤ yI[i] < −4

−4(yI[i] + 3), yI[i] < −6

∼ = −yI[i], (4.5) DI,2 =

2(|yI[i]| − 3), |yI[i]| ≤ 2

−4 + |yI[i]|, 2 < |yI[i]| ≤ 6

2(|yI[i]| − 5), |yI[i]| > 6

∼= −4 + |yI[i]|, (4.6)

DI,3 =

½

−|yI[i]| + 2, |yI[i]| ≤ 4

|yI[i]| − 6, |yI[i]| > 4

¾

= ||yI[i]| − 4| − 2. (4.7)

4.4

Profile of the DSP Code

We mention again that our implementation is based on modification of the code of Lee [24] for IEEE 802.16a OFDMA to the specifications of IEEE 802.16e OFDM. If more detailed steps of optimization and implementation are needed, [24] is the reference.

In this section, we show the optimized profile of our FEC encoder, which concatenates the RS encoder and the convolutional encoder. Table 4.1 shows the code size and the execution speed of the final concatenated encoding program for processing 144 data bytes (which includes data input and output) on DSP, for four of the mandatory coding and modulation modes of IEEE 802.16e OFDM. Here “data input and output included” means the execution time spent on input and output operations using fread() and fwrite() are included. Table 4.2 shows the corresponding information for the concatenated program of decoding 144 data bytes.

Table 4.1: Profile of Channel Encoder under Different Coding and Modulation Modes Modulation RS Code CC Code Rate Code Size (% RS, % CC) Cycles (% RS, % CC) Processing Rate (kbps) QPSK (32,24,4) 2/3 2208 (30,70) 107592 (50,50) 6424 QPSK (40,36,2) 5/6 2544 (33,67) 70851 (25,75) 9755 16QAM (64,48,8) 2/3 2524 (33,67) 80821 (18,82) 8552 16QAM (80,72,4) 5/6 2908 (27,73) 94394 (9,91) 7322 Average 2546 (31,69) 88414 (26,74) 8013

Table 4.2: Profile of Channel Decoder under Different Coding and Modulation Modes Modulation RS Code CC Code Rate Code Size (% RS, % CC) Cycles (% RS, % CC) Processing Rate (kbps) QPSK (32,24,4) 2/3 8608 (68,32) 1063148 (13,87) 650 QPSK (40,36,2) 5/6 8864 (67,33) 889147 (14,86) 777 16QAM (64,48,8) 2/3 8148 (65,35) 903422 (4,96) 765 16QAM (80,72,4) 5/6 8876 (60,40) 782811 (8,92) 883 Average 8624 (65,35) 909532 (8,92) 769

As they stand now, the programs will require multiple DSPs to run in parallel to handle the data rate under a 10 MHz transmission bandwidth. Acknowledgeably, further optimiza-tion of the programs may be possible. In addioptimiza-tion, the C64x is equipped with a Viterbi decoder co-processor [29]. Using this co-processor may be helpful in raising the decod-ing speed. But its use requires study and testdecod-ing of the “enhanced direct memory access (EDMA)” mechanism of the C64x chips, which is bypassed in the present study.

Figure 4.2: The assembly codes of RS encoding (1/7).

4.5

Appendix

This section shows some figures that the assembly codes in RS encoding and the Chien search in RS decoding. In Figs. 4.2, 4.3, 4.4, 4.5, 4.6, 4.7, and 4.8, we show the assembly codes of RS encoding.

In Figs. 4.9, 4.10, 4.11, and 4.12, we show the assembly codes of Chien search in RS encoding.

Chapter 5

Decoding Algorithms of LDPC Codes

in IEEE 802.16e OFDMA

In this chapter, we describe some decoding algorithms for LDPC codes and some simulation results in AWGN channel. The simulation results provide us the information to select ap-propriate decoding scheme, code rate, codeword length, and modulation type according to the system performance requirement, computational complexity, and latency. The material in Section 5.1 and 5.2 is mainly from [30].

5.1

The Belief Propagation Algorithm [30]

Using Tanner graph representation of LDPC codes is attractive, because it not only helps understand their parity-check structure, but, more importantly, also facilitates a powerful decoding approach. The key decoding steps are the local application of Bayes rule at each node and the exchange of the results (messages) with neighboring nodes. At any given iteration, two types of messages are passed: probabilities or beliefs from bit nodes to check nodes, and probabilities or beliefs from check nodes to bit nodes.

Let M(n) denote the set of check nodes connected to bit node n, i.e., the positions of ones in the nth column of H, and let N(m) denote the set of bit nodes that participate in the mth

![Figure 2.6: Base model of the rate-1/2code (from [2]).](https://thumb-ap.123doks.com/thumbv2/9libinfo/7643206.138255/28.892.111.778.173.349/figure-base-model-rate-code.webp)

![Figure 2.7: Base model of the rate-2/3, type A code(from [2]).](https://thumb-ap.123doks.com/thumbv2/9libinfo/7643206.138255/29.892.108.778.188.366/figure-base-model-rate-type-code.webp)

![Figure 2.12: QPSK, 16-QAM, and 64-QAM constellations (from [2]).](https://thumb-ap.123doks.com/thumbv2/9libinfo/7643206.138255/33.892.154.739.366.764/figure-qpsk-qam-qam-constellations.webp)

![Figure 3.1: Block diagram of TMS320C6416 DSP (from [18]).](https://thumb-ap.123doks.com/thumbv2/9libinfo/7643206.138255/40.892.124.758.307.824/figure-block-diagram-tms-c-dsp.webp)

![Figure 3.4: C64x cache memory architecture (from [19]).](https://thumb-ap.123doks.com/thumbv2/9libinfo/7643206.138255/46.892.94.515.133.453/figure-c-x-cache-memory-architecture-from.webp)

![Figure 3.6: Block diagram of the Quixote board (from [16]).](https://thumb-ap.123doks.com/thumbv2/9libinfo/7643206.138255/48.892.138.718.337.804/figure-block-diagram-quixote-board.webp)