Predictive flow control for TCP-friendly end-to-end real-time video on the Internet

Yeali S. Sun (a,*), Fu-Ming Tsou (b,1), Meng Chang Chen (c,2)

(a) Department of Information Management, National Taiwan University, Taipei, Taiwan, ROC

(b) Institute of Communication Engineering, National Taiwan University, Taipei, Taiwan, ROC

(c) Institute of Information Science, Academia Sinica, Taipei, Taiwan, ROC

Received 21 December 2000; revised 1 November 2001; accepted 12 November 2001

Abstract

In order to cope with time-varying conditions in networks with no or limited QoS support, such as the current Internet, schemes have been proposed for real-time applications to dynamically adjust the data sending rates of traffic sources. However, adaptive rate control alone may not be sufficient to prevent or handle network congestion. As most real-time applications are based on the RTP/UDP protocols, the issue of possibly unfair bandwidth sharing between TCP and UDP applications has been raised. In this paper, we propose an application-level control protocol called Real-time Rate and Retransmission Control Protocol Plus, in which several control mechanisms are integrated to maximize the delivery performance of UDP-based real-time continuous media over the Internet while sharing network bandwidth in a friendly manner with TCP connections. We propose to use adaptive filters for network state characterization and inference. Both simulation and implementation results show that recursive least-squares-based adaptive prediction makes good use of past measurements in forecasting future conditions and effectively avoids network congestion. They also show that the scheme achieves reasonably friendly resource sharing with TCP connections.

© 2002 Elsevier Science B.V. All rights reserved.

Keywords: Flow/congestion control; End-to-end real-time video; Prediction; TCP-friendly

1. Introduction

Although there is much ongoing research on end-to-end QoS guarantees for the next-generation Internet [1–4], it will take some time before such networks and services become generally available. In the meantime, there is growing interest and activity in deploying multimedia services, including real-time audio/video clips on the WWW, electronic commerce, IP telephony and Web TV, over the existing Internet. How to maximize the delivery quality of these streaming applications in a best-effort network while sharing bandwidth in a 'friendly' way with non-real-time applications such as TCP has become an important issue.

In this paper, we consider real-time flow and congestion control for stored multimedia applications, specifically MPEG video over the Internet. Past work on real-time stored video transport has focused on how to send variable-bit-rate video streams over a constant-bit-rate communication channel, e.g. Refs. [5–8]. These works all assume that the network supports resource reservation and QoS. Recently, there have been studies on stored variable-bit-rate video over best-effort networks [9–14]. To cope with time-varying network loads, these schemes focus on how a real-time traffic source dynamically adjusts its transmission rate to avoid sending excessive traffic into the network.

Such rate adjustments are all based on feedback information from the receiver(s). They do not consider the effect of the adjustments on other types of traffic in the network, e.g. TCP connections. The differences between these schemes lie mainly in two areas: (a) the characterization of the network state, so that rate adjustment decisions can be made reliably; and (b) the effects of such adjustments on the flow itself and on the efficiency of network bandwidth usage.

Employing adaptive rate control for real-time continuous media transmission may not be sufficient. Congestion control and avoidance have become an important issue in the Internet because of possibly unfair bandwidth sharing caused by the very different natures of the protocols used by real-time and non-real-time traffic. In general, real-time applications are transported using RTP and UDP, whereas most non-real-time traffic is based on TCP. The former implements no congestion control, while the latter does. Research results have shown that this may lead to unfair bandwidth sharing between the two types of traffic. In Refs. [15,16], the authors showed that TCP connections may be starved if most of the bandwidth is taken by UDP/RTP traffic. This is mainly due to the lack of congestion control in UDP and the low update frequency of the sender and receiver reports in RTP. On the other hand, when the network experiences congestion, a TCP sender reduces its transmission window by half (in the Reno version) or even down to one packet (in the Tahoe version) within one round-trip time (RTT). Note that round-trip delays are typically smaller than the interval between RTCP control packets, e.g. 5 s. If a UDP flow cannot react to congestion as responsively as TCP flows, it may keep sending more data and capture an arbitrarily large fraction of the link bandwidth at the expense of other traffic. Therefore, it is essential that UDP-based real-time transport protocols be aligned with TCP congestion control in the presence of network congestion.

www.elsevier.com/locate/comcom

0140-3664/02/$ - see front matter © 2002 Elsevier Science B.V. All rights reserved. PII: S0140-3664(02)00002-6

* Corresponding author. Tel.: +886-2236-30231; fax: +886-2362-1327. E-mail addresses: sunny@im.ntu.edu.tw (Y.S. Sun), fmtsou@eagle.ee.ntu.edu.tw (F.-M. Tsou), mcc@iis.sinica.edu.tw (M.C. Chen).

1 Tel.: +886-2236-35251x554; fax: +886-2236-38247.

2 Tel.: +886-2278-83799x1802; fax: +886-2278-24814.

A main challenge in designing flow/congestion control for UDP-based real-time applications is how to make UDP flows behave as good 'network' citizens, consuming only their fair share of bandwidth at the bottleneck link, as TCP traffic does, given that they have rigid delay and timing requirements. In this paper, we propose an application-level control protocol called Real-Time Rate and Retransmission Control Protocol Plus (R3CP+), in which several control mechanisms are integrated to maximize the delivery performance of UDP-based real-time continuous media over the Internet while letting these flows share network bandwidth with TCP connections in a friendly manner. Note that when congestion occurs, all affected flows immediately experience performance degradation. Two key issues arise. The first is how to minimize this negative effect on real-time video sessions, especially when all senders might be forced to reduce their sending rates to relieve congestion. The second is whether real-time sessions can behave more intelligently to avoid congestion more effectively. Two methods are proposed. The first method treats the receiving buffer as a reserve bank: a video source uses the bandwidth left unused when the network is unloaded or lightly loaded to download additional packets into the receiver's buffer. This packet store helps the receiver cope with supply shortages during congestion. In this paper, a minimal target queue length of the receiving buffer is periodically recalculated according to the network state. The second method is to exercise selective transmission at the sender if, during congestion, the requested sending rate is less than the desired sending rate. In selective transmission mode, the sender transmits only packets of higher significance, e.g. I frames. The protocol also sets the flow control period on the order of one RTT so that UDP sessions and TCP connections act on congestion avoidance and control at the same time scale.

Another important issue in designing a feedback-based congestion control mechanism is how to characterize the network state and complement current state measurements so as to achieve better flow control and avoid congestion. In R3CP+, we show the effectiveness of adaptive filters in network state forecasting. Methods such as moving averages and exponential averages have been commonly used. In these schemes, the weighting factors of current and past information are constant, which limits the system's ability to adapt quickly to network state changes while retaining network stability. Different from Ref. [8], we use an M-step adaptive linear predictor called the recursive-least-squares (RLS) predictor [17] to forecast the network state. In the RLS predictor, the weighting factors are corrected every time a new measurement is taken. In particular, the predictor uses the error between the current measurements and its previous predictions of them to adjust itself. It can respond to network dynamics quickly while remaining stable.

The rest of the paper is organized as follows. In Section 2, we describe the recursive prediction algorithm for network state characterization and forecasting; a least mean-square Kalman filter is used to estimate the packet loss probability and the RTT. In Section 3, the algorithms that compute the desired sending rate and the requested sending rate are presented, and the flow and congestion control schemes for real-time video sessions are described in detail. In Section 4, the performance of the scheme is evaluated via simulation and the results are analyzed. The protocol was also implemented in an MPEG video player/browser running on Windows 95, and some performance data from runs over the Internet are presented. Finally, Section 5 concludes the paper.

2. Characterization and recursive prediction of network state

The characterization and inference of network state is performed by the receiver of a real-time video session and works as follows. Initially, before the sender starts sending data, the receiver probes the network in order to set proper initial values for several system parameters of the session, including the minimal amount of pre-downloaded data and the retransmission time interval for in-time packet recovery. Specifically, during the session setup phase, when the receiver receives an acknowledgment of session establishment from the sender, it sends a number of Network Probe packets to collect RTT and packet loss information between the sender and the receiver. The RTTs are used to compute the minimal amount of data that must be pre-downloaded into the receiver's buffer before playback starts. The goal is to use such preloading to accommodate volatile delay variations and ensure smooth playback during the session. During the data transfer phase, the receiver tries to maintain the data store in the receiving buffer at an adaptive target size. It continuously monitors the packet receiving status and periodically sends flow control packets to the sender to instruct it how fast or slow to send the data. The sender always transmits packets at the rate specified in the flow control packet.

In Ref. [11], we showed that integrating rate control with 'in-time' packet retransmission can significantly improve overall performance. Contrary to the widespread belief that 'retransmission of lost packets is unnecessary for continuous media applications, as late packets are as useless as lost packets', we showed that in best-effort networks a major cause of performance degradation is packet loss, especially under congestion. Therefore, as long as retransmitted packets can arrive at the destination before their deadlines, the overall viewing quality can be greatly improved, in particular for packets carrying important information such as the GOP in an MPEG-2 video stream. The RTT measured during each flow control period is used to adjust the retransmission time interval.

Here, two parameters are used to describe the state of a transmission path: the packet loss probability and the RTT. The packet loss probability is obtained from the packet receiving throughput and the expected amount of data inferred by the receiver. The RTTs are measured through flow control packets. Each parameter is recursively predicted by an adaptive Kalman filter. Based on the forecast and the desired sending rate, the receiver computes the requested sending rate. The design rationale is to let the receiver decide, based on its receiving and playout conditions, at what rate it would like the sender to send the data, so that it can share bandwidth with TCP connections in a 'reasonably friendly' way while maximizing its playback performance.

2.1. Prediction of packet loss probability

The end-to-end adaptive ¯ow control isperformed at discrete time instants. The time interval between two conse-cutive ¯ow control pointsiscalled a ¯ow control period. At the end of each ¯ow control period, the receiver will send a ¯ow control message to the sender specifying a new rate at which the sender should send the packets, i.e. the requested sending rate. Along with it are two other information: the

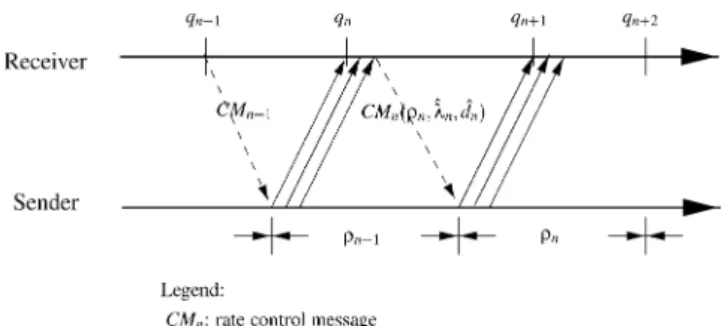

desired sending rate and the estimate of the duration of the ¯ow control period. If the requested sending rate is less than the desired sending rate, selective transmission is performed. Upon receiving a ¯ow control message, the sender immediately adjusts its sending rate to the new requested rate. If network is stable, receiver expects packets sent under the new rate to start to arrive after one RTT (see Fig. 1). The duration of ¯ow control periodsare on the order of one RTT. Thisisto ensure the congestion control of UDP ¯ows is at the same time scale as that in TCP.

The receiver computes the packet loss probability at the end of each flow control period, defined as follows:

$$p_n = 1 - \frac{g_n}{r_{n-1}}, \quad n = 1, 2, \ldots, \tag{1}$$

where $g_n$ is the packet receiving rate measured during the $n$th control period and $r_{n-1}$ is the requested sending rate sent at the $(n-1)$th period. For end-to-end adaptive flow control, if the decision is made solely on the current state, a traffic source may over-react to transient changes in network load. Rapid oscillation of rate adjustment can easily destabilize the end systems as well as the network, which severely affects the loss and delay jitter performance of real-time sessions. Consequently, it is important that the receiver capture both the trend and the transients of the network load and instruct the sender accurately and cautiously. Particular attention should be paid to the detection of congestion: overlooking congestion or increasing the rate unduly may result in bandwidth starvation of TCP connections.
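Eq. (1) is simple enough to state directly in code. The sketch below is illustrative only: the function name `loss_probability` is hypothetical, rates are assumed to be in packets per second, and clamping the result to [0, 1] (a measured receiving rate can briefly exceed the previous requested rate, e.g. through measurement noise) is our assumption rather than part of the protocol as described.

```python
def loss_probability(receiving_rate: float, prev_requested_rate: float) -> float:
    """Eq. (1): p_n = 1 - g_n / r_{n-1}, clamped to [0, 1] (clamping is an assumption)."""
    if prev_requested_rate <= 0:
        raise ValueError("previous requested rate must be positive")
    p = 1.0 - receiving_rate / prev_requested_rate
    return min(1.0, max(0.0, p))


# Example: 100 packets/s were requested for period n-1, 95 packets/s arrived in period n.
print(round(loss_probability(95.0, 100.0), 3))  # 0.05
```

Because $p_n$ is measured against the rate the receiver itself requested, no sender-side loss report is needed to compute it.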

Assume that the measured packet loss probabilities form a random process. A fixed amount of transmission history is taken into account in the estimation of the packet loss probability. Let the size of the memory of the Kalman filter be fixed and denoted as $M_{\mathrm{loss}}$; this is the number of previously measured values recorded. Let $\mathbf{p}(n)$ be a vector random variable defined as follows:

$$\mathbf{p}(n) = \left[p_n,\ p_{n-1},\ \ldots,\ p_{n-M_{\mathrm{loss}}+1}\right]^T. \tag{2}$$

Next, we want to find a weight vector $\mathbf{w}_p(n)$ such that we can predict $\hat{p}_{n+1}$ given the memory $\mathbf{p}(n-1)$ and the newly measured value $p_n$. The error between estimation and measurement is corrected by defining a forward prediction error $a_n$, i.e.

$$a_n = p_n - \hat{p}_n, \tag{3}$$

where $\hat{p}_n$ is our previous estimate. With a new measurement, the estimator is corrected as follows:

$$\mathbf{w}_p(n) = \mathbf{w}_p(n-1) + \mathbf{k}_p(n)\, a_n, \tag{4}$$

where $\mathbf{k}_p(n)$ is the Kalman gain, defined as

$$\mathbf{k}_p(n) = \frac{\theta^{-1}\,\mathbf{P}(n-1)\,\mathbf{p}(n)}{1 + \theta^{-1}\,\mathbf{p}^T(n)\,\mathbf{P}(n-1)\,\mathbf{p}(n)}, \tag{5}$$

and $\mathbf{P}(n)$, the inverse correlation matrix of the filter input, is updated recursively by

$$\mathbf{P}(n-1) = \theta^{-1}\,\mathbf{P}(n-2) - \theta^{-1}\,\mathbf{k}_p(n-1)\,\mathbf{p}^T(n-1)\,\mathbf{P}(n-2), \tag{6}$$

where $\theta$ is the memory factor, whose value is no greater than one but is typically very close to one. Typically, $M_{\mathrm{loss}} \approx 1/(1-\theta)$. The estimate of the $(n+1)$th value is the minimum mean-square estimate of the state $p_{n+1}$ from Eqs. (3) and (4). The estimate of the packet loss probability for the next flow control period is thus

$$\hat{p}_{n+1} = \mathbf{w}_p^T(n)\,\mathbf{p}(n). \tag{7}$$
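A minimal sketch of a standard exponentially weighted RLS one-step predictor in the form of Eqs. (2) to (7), written in plain Python so that it has no dependencies. Several details are our assumptions, not the authors' code: as in the textbook formulation, the error $a_n$ is computed from the history vector that precedes $p_n$; the initialization $\mathbf{w}(0) = [1, 0, \ldots, 0]$ ("next value equals last value") and $\mathbf{P}(0) = \mathbf{I}/\delta$ is hypothetical; and the class and parameter names are ours. The same class could equally serve the RTT predictor of Section 2.2 with memory $M_{\mathrm{dura}}$.

```python
from typing import List


class RLSPredictor:
    """One-step linear predictor adapted by exponentially weighted RLS (sketch).

    memory -- number of past samples in the regressor (M_loss or M_dura)
    theta  -- memory (forgetting) factor, 0 < theta <= 1
    delta  -- small constant; P(0) = I / delta (assumed initialization)
    """

    def __init__(self, memory: int, theta: float = 0.98, delta: float = 0.01) -> None:
        self.m = memory
        self.theta = theta
        # Assumed start: predict "next value = last value" until the filter adapts.
        self.w: List[float] = [1.0] + [0.0] * (memory - 1)
        self.P = [[(1.0 / delta if i == j else 0.0) for j in range(memory)]
                  for i in range(memory)]
        self.history: List[float] = [0.0] * memory  # newest sample first
        self.count = 0

    def predict(self) -> float:
        """Prediction of the next sample from the current history (cf. Eq. (7))."""
        return sum(wi * xi for wi, xi in zip(self.w, self.history))

    def update(self, x_new: float) -> float:
        """Absorb a new measurement and return the prediction for the next period."""
        if self.count >= self.m:  # adapt only once the history is full
            u = self.history
            a = x_new - self.predict()  # a priori prediction error (cf. Eq. (3))
            Pu = [sum(self.P[i][j] * u[j] for j in range(self.m)) for i in range(self.m)]
            denom = self.theta + sum(u[i] * Pu[i] for i in range(self.m))
            k = [v / denom for v in Pu]  # gain vector (cf. Eq. (5))
            self.w = [wi + ki * a for wi, ki in zip(self.w, k)]  # cf. Eq. (4)
            # P(n) = theta^-1 [P(n-1) - k u^T P(n-1)]  (cf. Eq. (6); P is symmetric)
            self.P = [[(self.P[i][j] - k[i] * Pu[j]) / self.theta for j in range(self.m)]
                      for i in range(self.m)]
        self.history = [x_new] + self.history[:-1]
        self.count += 1
        return self.predict()


# Usage: feed one measured loss probability per flow control period.
loss_predictor = RLSPredictor(memory=4, theta=0.95)
for p_n in [0.01, 0.02, 0.01, 0.03, 0.05, 0.08, 0.06]:
    p_next = min(1.0, max(0.0, loss_predictor.update(p_n)))  # clamp: our assumption
```

The gain is written as $\mathbf{P}\mathbf{u}/(\theta + \mathbf{u}^T\mathbf{P}\mathbf{u})$, which is Eq. (5) with numerator and denominator multiplied by $\theta$.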

2.2. Prediction of round-trip time for the flow control period

One of the main problems that causes TCP bandwidth starvation when sharing a bottleneck link with UDP flows is that, when TCP connections react to congestion by throttling their congestion windows, UDP flows regard the newly available bandwidth as excess. In order to be 'TCP-friendly', it is important that the frequency of flow or congestion control of real-time UDP sessions be synchronized with that of TCP. That is, the flow control period should be on the order of one RTT so that UDP and TCP connections act on congestion avoidance and control at the same time scale. The RTT estimate is also used in computing the desired sending rate and the number of packets to skip in selective transmission. The RTT is measured as follows. A field is defined in the RTP header extension to distinguish between packets sent under different flow control periods. When a flow control message is sent, the receiver records its sending time. Upon receiving a flow control message from the receiver, the sender immediately adjusts its packet sending rate and changes the group indication field in the RTP header extension to a new value, marking the group of packets sent under the new rate. When the receiver receives the first packet of a new group, it timestamps the arrival time. The difference between this arrival time and the sending time of the flow control packet gives a new measurement of the RTT between the receiver and the sender.
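The group-indication mechanism amounts to a little receiver-side bookkeeping. In the sketch below, the class and method names are hypothetical; both timestamps are taken from the receiver's own clock, so no clock synchronization with the sender is needed; and we assume at most one outstanding flow control message at a time, which fits a period of about one RTT. Packet reordering across groups is ignored for simplicity.

```python
from typing import Optional


class GroupRttMeter:
    """Receiver-side RTT measurement via the group indication field (sketch)."""

    def __init__(self, initial_group: int = 0) -> None:
        self.current_group = initial_group
        self.fc_sent_at: Optional[float] = None  # send time of the last flow control message

    def on_flow_control_sent(self, now: float) -> None:
        self.fc_sent_at = now

    def on_data_packet(self, group: int, arrival: float) -> Optional[float]:
        """Return a new RTT sample when the first packet of a new group arrives."""
        if group == self.current_group:
            return None
        self.current_group = group
        if self.fc_sent_at is None:
            return None
        rtt = arrival - self.fc_sent_at
        self.fc_sent_at = None  # one sample per flow control message
        return rtt


meter = GroupRttMeter()
meter.on_flow_control_sent(now=10.000)
meter.on_data_packet(group=0, arrival=10.040)           # old group: no sample
sample = meter.on_data_packet(group=1, arrival=10.120)  # first packet of the new group
```

Here `sample` is about 0.12 s; each such sample would then be fed to the RTT predictor.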

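The estimation of the RTT $\hat{d}_n$ follows the same approach as the prediction of the packet loss probability [17]. The vector random variable $\mathbf{d}(n) = \left[d_n,\ d_{n-1},\ \ldots,\ d_{n-M_{\mathrm{dura}}+1}\right]^T$ is used to record the history of RTTs. The parameter $b_n = d_n - \hat{d}_n$ is used to correct the estimation error. The estimate of the RTT is obtained as follows:

$$\hat{d}_{n+1} = \hat{\mathbf{w}}_d^T(n)\,\mathbf{d}(n), \tag{8}$$

where $\hat{\mathbf{w}}_d(n) = \hat{\mathbf{w}}_d(n-1) + \mathbf{k}_d(n)\, b_n$, with the gain $\mathbf{k}_d(n)$ computed as in Eqs. (5) and (6) with $\mathbf{d}(n)$ in place of $\mathbf{p}(n)$.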

3. Adaptive flow control: taking the receiving buffer as a reserve bank

Note that when congestion occurs, all affected flows immediately experience performance degradation. Two key issues arise in the design of a congestion control scheme for real-time video streams. The first is how to minimize this negative effect on the continuous playback performance of a video session, given that fewer packet arrivals are expected during congestion. The second is whether real-time flows can behave more intelligently to avoid congestion more effectively. Two methods are proposed in this paper. The first method is to have the sender transmit, and thus pre-store, more data at the receiver side when the network is unloaded or lightly loaded. When congestion is forecast (i.e. the receiver has inferred such a tendency), the sender holds back by stopping rate increases early to avoid congestion and by applying a more drastic rate decrease to relieve congestion quickly. This differs from the conventional method used in TCP, in which the window size is continuously increased until congestion occurs and reduced afterwards. The idea is to make the receiving buffer a reserve bank. A video source uses the unused bandwidth available in the network to download additional packets into the receiver's buffer whenever allowed. These additional packets help the receiver cope with supply shortages from the sender and maintain continuous and smooth playback. In this paper, a minimal target queue size is periodically recalculated according to the network state.

The second method is to exercise selective transmission at the sender. During a flow control period, if the requested sending rate is less than the desired sending rate, the sender calculates the amount of data it is allowed to transmit and transmits only packets of higher significance, e.g. I frames. Our protocol adopts the principle of application level framing [18] in the fragmentation of the MPEG video stream [19,20]. To play a video stream in real time across the Internet, the stream is pre-parsed to generate a metadata file. The file describes the semantic data structures of the stream and contains information such as stream resolution, nominal frame rate, frame pattern, frame size and frame boundaries. The metadata file is used in selective transmission and in the segmentation and packetization of video frames at the sender. To pick packets to skip, we start with those of B frames, then P frames, and so on. Among packets of the same type, frames are chosen randomly in such a way as to avoid burst removal.
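The skip order described above (B frames first, then P frames, then I frames) can be sketched as follows. The text does not specify how the random choice avoids burst removal; purely for illustration, we assume that alternate frames of a type are taken first, with a random starting phase, before the frames in between. The function name, the `(type, packets)` tuple format and the per-frame packet counts in the example are hypothetical; in the protocol, frame types and sizes would come from the metadata file.

```python
import random
from typing import List, Sequence, Tuple

# Least significant frame type first (our reading of "B type, then P type and so on").
SKIP_ORDER = ("B", "P", "I")


def choose_frames_to_skip(frames: Sequence[Tuple[str, int]], packets_to_skip: float,
                          rng: random.Random) -> List[int]:
    """Return indices of frames to drop so that at least `packets_to_skip` packets are skipped.

    frames -- (frame type, number of packets) per frame, e.g. taken from the metadata file.
    """
    skipped: List[int] = []
    remaining = packets_to_skip
    for ftype in SKIP_ORDER:
        candidates = [i for i, (t, _) in enumerate(frames) if t == ftype]
        # Hypothetical anti-burst rule: alternate frames first, random starting phase,
        # then the frames in between.
        start = rng.randrange(2)
        order = candidates[start::2] + candidates[1 - start::2]
        for i in order:
            if remaining <= 0:
                return sorted(skipped)
            skipped.append(i)
            remaining -= frames[i][1]
    return sorted(skipped)


sizes = {"I": 5, "P": 2, "B": 1}  # hypothetical packets per frame
gop = [(t, sizes[t]) for t in "IBBPBBPBBPBB"]
drop = choose_frames_to_skip(gop, 3, random.Random(7))
```

With a budget of three packets only B frames are dropped, and no two of them are adjacent in the GOP.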

3.1. Receiver's desired sending rate and target queue length

In the following, we present the method that computes the desired sending rate. The idea is to treat the receiving buffer as a reserve bank loaded with just enough data: neither so much that it excessively increases the buffer space requirement, nor so little that it cannot cope with short-term congestion. The buffer queue length aims at a target minimal size at all times in accordance with the predicted network state, i.e. the target queue length. Here, the $n$th flow control point means the time instant at which the $n$th period starts. Let

$\hat{\lambda}_n$: the desired sending rate;

$r_n$: the requested sending rate;

$q^*_n$: the target queue length at the $n$th flow control point;

$q_n$: the number of packets seen at the $n$th flow control point;

$q^v_n$: the virtual queue length at the $n$th flow control point;

$\hat{q}^v_n$: the estimate of the virtual queue length at the $n$th flow control point;

$\mu$: the average packet playback rate;

$\tilde{z}_n$: the number of packets skipped in the $n$th flow control period;

$\tilde{q}^{\mathrm{retx}}_n$: the number of packets retransmitted in the $n$th flow control period.

Two parameters are defined here for controlling the receiving buffer queue length: the target queue length and the virtual queue length. The target queue length determines the desired sending rate, which represents the receiver's wish of how fast or slow the sender should send packets in the next flow control period. This rate may differ from the requested sending rate, i.e. the rate requested by the receiver in the flow control message. When the requested sending rate is less than the desired sending rate, selective transmission is performed at the sender. The sender gives higher transmission priority to more important packets, e.g. packets of I frames and retransmitted packets. As a result, less important frames (packets) are purposely skipped. The virtual queue length is the queue length that would result if both the selected and the skipped packets were sent and received by the receiver.

3.1.1. Target queue length

R3CP+ supports packet retransmission. The key issue for real-time packet retransmission is that the loss of a packet must be detected early enough, at least one RTT before its deadline, for retransmission to be effective. In our previous work [11], a minimal target queue length of the receiving buffer was derived, namely one RTT-equivalent amount of data ($\mu\hat{d}_n$). The simulation results showed that packet retransmission for real-time sessions is feasible and effective. By maintaining only this minimal amount of packets in the receiving buffer, one can achieve fairly good playback performance. The target queue length is given as follows:

$$q^*_n = \mu\,\hat{d}_n + P_{I\_distance}, \tag{9}$$

where $P_{I\_distance}$ is the number of packets in the retransmission window, i.e. the number of packets checked in each retransmission control.
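As a worked example of Eq. (9) with hypothetical values ($\mu$ = 50 packets/s, $\hat{d}_n$ = 0.2 s, $P_{I\_distance}$ = 24 packets), the target is 50 × 0.2 + 24 = 34 packets. The helper names below are ours, and the deadline test simply restates the "at least one RTT before its deadline" condition above; it is a sketch, not the protocol's retransmission logic.

```python
def target_queue_length(mu: float, rtt_hat: float, p_i_distance: float) -> float:
    """Eq. (9): q*_n = mu * d_hat_n + P_I_distance (in packets)."""
    return mu * rtt_hat + p_i_distance


def retransmission_in_time(now: float, deadline: float, rtt_hat: float) -> bool:
    """A lost packet is worth requesting again only if one RTT still fits before its playout deadline."""
    return now + rtt_hat <= deadline


# Hypothetical numbers: 50 packets/s playback, 200 ms predicted RTT,
# 24 packets in the retransmission window.
q_target = target_queue_length(50.0, 0.2, 24.0)  # 34 packets
```

The $\mu\hat{d}_n$ term is what keeps one RTT of playback buffered, so that a loss detected at the head of the window can still be repaired in time.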

3.1.2. Virtual queue length

When the requested sending rate is less than the desired sending rate, selective transmission is performed. The number of packets to skip, $\tilde{z}_n$, is given as follows:

$$\tilde{z}_n = (\hat{\lambda}_n - r_n)\,\hat{d}_n + \tilde{q}^{\mathrm{retx}}_n. \tag{10}$$

Otherwise, $\tilde{z}_n$ is 0. The virtual queue length is defined as

$$q^v_n = q_n + \tilde{z}_{n-1}. \tag{11}$$
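Eqs. (10) and (11) translate directly into two helpers. One way to read Eq. (10), under our assumption that a period lasts about $\hat{d}_n$: $(\hat{\lambda}_n - r_n)\hat{d}_n$ is the shortfall in packets over one period, and retransmissions consume part of the requested rate, so they add to the packets that must be skipped. The function names are hypothetical.

```python
def packets_to_skip(desired_rate: float, requested_rate: float,
                    rtt_hat: float, retx_packets: float) -> float:
    """Eq. (10): (lambda_hat_n - r_n) * d_hat_n + q~retx_n when r_n < lambda_hat_n, else 0."""
    if requested_rate >= desired_rate:
        return 0.0
    return (desired_rate - requested_rate) * rtt_hat + retx_packets


def virtual_queue_length(queued_packets: float, skipped_prev: float) -> float:
    """Eq. (11): q^v_n = q_n + z~_{n-1}, counting skipped packets as if received."""
    return queued_packets + skipped_prev


# Hypothetical period: desired 60 pkt/s, requested 40 pkt/s, RTT 0.25 s, 3 retransmissions.
z = packets_to_skip(60.0, 40.0, 0.25, 3.0)  # 20 * 0.25 + 3 = 8 packets
```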

To obtain the desired sending rate at the $n$th flow control point, the receiver predicts the number of packets that will be present in the buffer at the $(n+1)$th and $(n+2)$th flow control points. First, we have

$$\hat{q}^v_{n+1} = q^v_n - \mu\,\hat{d}_n + r_{n-1}\,(1-\hat{p}_n)\,\hat{d}_n + \tilde{z}_n. \tag{12}$$

The virtual queue length at the beginning of the $(n+1)$th flow control period equals the virtual queue length at the $n$th period, less the number of packets removed (at the rate $\mu$), plus the new arrivals during the period and the skipped packets, if any. In the equation, the sender's sending rate is assumed to be the previous requested sending rate. Similarly, we have

$$\hat{q}^v_{n+2} = \hat{q}^v_{n+1} - \mu\,\hat{d}_n + \hat{\lambda}_n\,(1-\hat{p}_n)\,\hat{d}_n. \tag{13}$$

Here the sending rate is the desired sending rate to be computed.

We wish that at the beginning of the $(n+2)$th flow control period the virtual queue length at least meets the target queue length, i.e. $\hat{q}^v_{n+2} = q^*_{n+2}$. Approximating $\hat{d}_{n+2}$ by $\hat{d}_n$ in Eq. (9) and combining Eqs. (12) and (13), the desired sending rate is obtained as follows:

$$\hat{\lambda}_n = \frac{P_{I\_distance} + 3\mu\,\hat{d}_n - q^v_n - \tilde{z}_n}{(1-\hat{p}_n)\,\hat{d}_n} - r_{n-1}. \tag{14}$$
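A quick numerical check that Eq. (14) drives the two-step prediction of Eqs. (12) and (13) onto the target $\mu\hat{d}_n + P_{I\_distance}$. All values and function names are hypothetical. Note that the raw formula can return a negative rate when the buffer is already above target; a practical implementation would presumably clamp the result, which the equations above do not state.

```python
def predict_vq_next(q_virtual, mu, rtt_hat, r_prev, loss_hat, skipped):
    """Eq. (12): virtual queue at the (n+1)th flow control point."""
    return q_virtual - mu * rtt_hat + r_prev * (1.0 - loss_hat) * rtt_hat + skipped


def predict_vq_after(q_next, mu, rtt_hat, rate, loss_hat):
    """Eq. (13): virtual queue at the (n+2)th point if the sender uses `rate`."""
    return q_next - mu * rtt_hat + rate * (1.0 - loss_hat) * rtt_hat


def desired_rate(p_i_distance, mu, rtt_hat, q_virtual, skipped, loss_hat, r_prev):
    """Eq. (14): rate that makes the predicted virtual queue hit the target."""
    numerator = p_i_distance + 3.0 * mu * rtt_hat - q_virtual - skipped
    return numerator / ((1.0 - loss_hat) * rtt_hat) - r_prev


# Hypothetical state: 50 pkt/s playback, 0.2 s RTT, 24-packet retransmission window,
# virtual queue of 20 packets, 4 packets skipped, 10% predicted loss, r_{n-1} = 45 pkt/s.
mu, rtt, p_i = 50.0, 0.2, 24.0
lam = desired_rate(p_i, mu, rtt, q_virtual=20.0, skipped=4.0, loss_hat=0.1, r_prev=45.0)
q1 = predict_vq_next(20.0, mu, rtt, r_prev=45.0, loss_hat=0.1, skipped=4.0)
q2 = predict_vq_after(q1, mu, rtt, rate=lam, loss_hat=0.1)
print(round(lam, 2), round(q2, 6))  # 121.67 34.0
```

The factor 3 in the numerator collects two periods of playback drain, $2\mu\hat{d}_n$, plus the $\mu\hat{d}_n$ term of the target.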

3.2. Requested sending rate: storing extra data during the unloaded state and avoiding congestion otherwise

Table 1

Determination of the requested sending rate (rows: current state; columns: forecast state)

| Current state / forecast | UNLOADED | LOADED | CONGESTED |
|---|---|---|---|
| UNLOADED | $\min(\hat{\lambda}_{\max},\ r_{n-1}+\Delta_{inc})$ | $\min(\hat{\lambda}_{\max},\ r_{n-1}+\delta_{inc})$ | $\min(\hat{\lambda}_n,\ r_{n-1}+\delta_{inc})$ |
| LOADED | $\min(\hat{\lambda}_n,\ r_{n-1})$ | $\min(\hat{\lambda}_n,\ r_{n-1})$ | $\min(\hat{\lambda}_n,\ r_{n-1})$ |

The desired sending rate only represents the receiver's wish to the sender to ensure continuous playback of frames. However, over the Internet, packet delivery performance depends on the network load. The requested sending rate is determined by the following algorithm, in which both the history and the forecast of the network condition are taken into account. Strategically, the receiver uses different levels of rate increase and decrease in determining the requested sending rate, depending on the state of the network. The network is assumed to be in one of the following three states:

• 'UNLOADED' if $p_n < p_{low}$;

• 'LOADED' if $p_{low} \le p_n \le p_{high}$;

• 'CONGESTED' if $p_{high} < p_n$.
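The three-state rule can be written as a small classifier. The thresholds `p_low` and `p_high` are not given numerically in the text, so the values in the example are hypothetical, as is the treatment of the boundary cases. We also assume that the same function yields the current state from the measured $p_n$ and the forecast state from the RLS prediction $\hat{p}_{n+1}$.

```python
def classify_state(p: float, p_low: float, p_high: float) -> str:
    """Map a (measured or predicted) loss probability to a network state."""
    if not 0.0 <= p_low <= p_high:
        raise ValueError("expected 0 <= p_low <= p_high")
    if p < p_low:
        return "UNLOADED"
    if p <= p_high:
        return "LOADED"
    return "CONGESTED"


# Current state from the measured p_n, forecast state from the prediction p_hat_{n+1}.
current = classify_state(0.03, p_low=0.02, p_high=0.10)
forecast = classify_state(0.12, p_low=0.02, p_high=0.10)
```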

The scheme is summarized in Table 1. The algorithm for determining the requested sending rate is explained as follows:

Case 1 (The current transmission path is in the UNLOADED state). If the current transmission path is UNLOADED, the flow control strategy is to store as much data as possible in the receiving buffer while the delivery condition is good. In other words, the receiver asks the sender to increase its sending rate, by an amount that depends on the forecast of the future network load.

• If the forecast state is also UNLOADED, the receiver makes a more aggressive attempt to increase the rate, with a larger increment $\Delta_{inc}$. The final requested sending rate is bounded by the peak rate of the session, $\hat{\lambda}_{\max}$.

• If the forecast state is LOADED and the current observation is UNLOADED, the current measurement is considered a sign that the network condition is improving. However, the receiver takes a cautious step, increasing the sending rate by only a smaller amount $\delta_{inc}$, where $\delta_{inc} < \Delta_{inc}$.

• If the forecast is in the CONGESTED state, the current measurement is considered only a signal of possible congestion relief; more observations are needed. Thus, the receiver takes the minimum of the current receiving throughput and the previous requested rate plus a smaller increment.

Case 2 (The current transmission path is in the LOADED state). If the current measurement indicates that the transmission path is LOADED, the receiver is conservative: the strategy is to maintain the status quo, with no rate increase or decrease. The new requested sending rate is the minimum of the new desired sending rate and the current sending rate (i.e. the previous requested sending rate). This means that if the new desired sending rate is less than the current sending rate, the receiver is not greedy; it only asks the sender to send data at the rate necessary to maintain reasonably smooth playback of the session, even if a large amount of bandwidth may be available. Conversely, if the current sending rate is smaller than the new desired sending rate, the receiver (or the session) uses the current sending rate as the requested rate. The session is thus self-controlled rather than using network bandwidth arbitrarily without regard for others. The goal is to avoid congestion and be fair to all other types of traffic.

• If the forecast state is UNLOADED, the current measurement might reflect transient network behavior, or it could be a sign that the network load is increasing. The strategy is to maintain the status quo.

• If the forecast is also LOADED, the receiver infers that the network condition is stable, and the strategy is to keep the status unchanged.

• If the forecast is CONGESTED, there is a good chance that the network load will get worse. As a result, the receiver simply keeps to the previous requested rate.

Case 3 (The current transmission path is in the CONGESTED state). If the current measurement of the transmission path is in the CONGESTED state, it is taken as a sign of possible network congestion. The design rationale is to start congestion avoidance by reducing the sending rate. The receiver continuously asks the sender to decrease its rate until the situation is relieved. Depending on the forecast of the future state, different levels of multiplicative decrease of the currently measured throughput are applied.

• If the forecast is UNLOADED or LOADED, the receiver takes a small reduction of the rate. Again, the session is self-controlled: only the minimum of the desired sending rate and the reduced throughput is taken.

• If the forecast is CONGESTED, it is taken as an indication that the network will remain congested. The receiver takes a larger rate reduction to relieve congestion.

In all cases, if the new desired sending rate is less than the reduced throughput, the receiver takes only what it needs to maintain the target queue length.
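Table 1 and Cases 1 to 3 can be condensed into one function. The branches for the UNLOADED and LOADED current states transcribe the entries of Table 1. The text gives no numeric values for the multiplicative decreases of Case 3, so `small_cut` and `big_cut` (0.9 and 0.5 here) are purely hypothetical placeholders, as are the parameter names and the example rates.

```python
def requested_rate(current: str, forecast: str, r_prev: float, desired: float,
                   throughput: float, peak: float, big_inc: float, small_inc: float,
                   small_cut: float = 0.9, big_cut: float = 0.5) -> float:
    """Requested sending rate r_n from Table 1 and Cases 1-3 (cut factors are assumptions)."""
    if current == "UNLOADED":
        if forecast == "UNLOADED":
            return min(peak, r_prev + big_inc)     # aggressive increase, capped by the peak rate
        if forecast == "LOADED":
            return min(peak, r_prev + small_inc)   # cautious increase
        return min(desired, r_prev + small_inc)    # possible congestion relief: wait and see
    if current == "LOADED":
        return min(desired, r_prev)                # status quo, never greedy
    # CONGESTED: multiplicative decrease of the measured throughput, larger when
    # congestion is also forecast; never more than the desired rate.
    cut = big_cut if forecast == "CONGESTED" else small_cut
    return min(desired, throughput * cut)


args = dict(r_prev=100.0, desired=80.0, throughput=95.0, peak=150.0,
            big_inc=20.0, small_inc=5.0)
r_next = requested_rate("UNLOADED", "UNLOADED", **args)  # min(150, 100 + 20) = 120
```

Capping every branch by the desired rate (or the peak rate) is what keeps the session from taking more bandwidth than it needs.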

4. Performance evaluation

We have evaluated the proposed prediction-based flow/congestion control protocol both via simulations and via actual Internet experiments. The protocol was implemented in a video player/browser system on top of the RTP/UDP protocols on Windows 95. The simulations focused on detailed analysis of the protocol behavior; some of the Internet experimental results are also presented here. There are several goals: (a) to show the improvement of the proposed scheme in maximizing the real-time playback performance of stored video over the best-effort Internet; (b) to understand how well the scheme reacts to transient network load fluctuation and loss; (c) to demonstrate the effectiveness of the scheme in achieving fairness among real-time traffic while avoiding network congestion; and (d) to demonstrate the effectiveness of the scheme in achieving reasonably fair bandwidth sharing with TCP connections.

Fig. 2. Simulation configuration.

To provide some context, we compare the performance of R3CP+ with that of three other schemes: transmission without rate/congestion control, R3CP [11], and the triple-feedback-based congestion control (TFCC) scheme. In R3CP, the sender transmits data at the desired sending rate. It exhibits the same bandwidth-sharing behavior as typical UDP-based real-time flows, which grab as much bandwidth as they can; it is used solely as a baseline for comparison. TFCC employs loss-based congestion control and the popular additive increase/multiplicative decrease algorithm to control rate adjustment, i.e.:

if p_n < p_low: requested_rate = min(current_throughput + INC, max_rate);

else if p_low ≤ p_n ≤ p_high: requested_rate = current_throughput;

else if p_high < p_n: requested_rate = max(current_throughput / DEC, min_rate).
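For comparison with the R3CP+ rule, here is a runnable version of the TFCC rule as described above: it decides from the current loss measurement alone, with no forecast. We assume `dec` > 1 (the rate is divided by DEC); the parameter names mirror the pseudocode, and the example values are hypothetical.

```python
def tfcc_requested_rate(p: float, throughput: float, p_low: float, p_high: float,
                        inc: float, dec: float, min_rate: float, max_rate: float) -> float:
    """TFCC-style AIMD rate adjustment (sketch of the rule above)."""
    if p < p_low:
        return min(throughput + inc, max_rate)   # additive increase, capped at max_rate
    if p <= p_high:
        return throughput                        # hold the current throughput
    return max(throughput / dec, min_rate)       # multiplicative decrease, floored at min_rate


# Hypothetical parameters: thresholds 2% and 10%, INC = 10 pkt/s, DEC = 2.
r = tfcc_requested_rate(0.2, 100.0, p_low=0.02, p_high=0.10,
                        inc=10.0, dec=2.0, min_rate=20.0, max_rate=300.0)  # 50 pkt/s
```

Because each decision uses a single noisy loss sample, this rule is more prone to oscillation than a predictor-driven one.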

All simulations are performed using ns [21]. The network configuration is shown in Fig. 2. We consider a single 1.5 Mbps congested link shared by a number of TCP connections and UDP/RTP-based video flows. We use an actual video trace (the 'Star Wars' movie [22]) as the video traffic source in the simulation. The first ten-minute section (14,400 frames and 28,120 packets) is used. Some important metadata of the simulated video stream are as follows: the nominal frame rate is 24 frames/s and the frame pattern is IBBPBBPBBPBB; hence, I_distance is 12. For transmissions that do not use any control mechanism, in order to start playback smoothly, the first I frame is transmitted with error recovery; once the first I frame has been successfully received, playback begins immediately. In all experiments using R3CP or R3CP+, the target queue length is set to its minimum value as required by the look-ahead retransmission scheme.

4.1. Playback performance

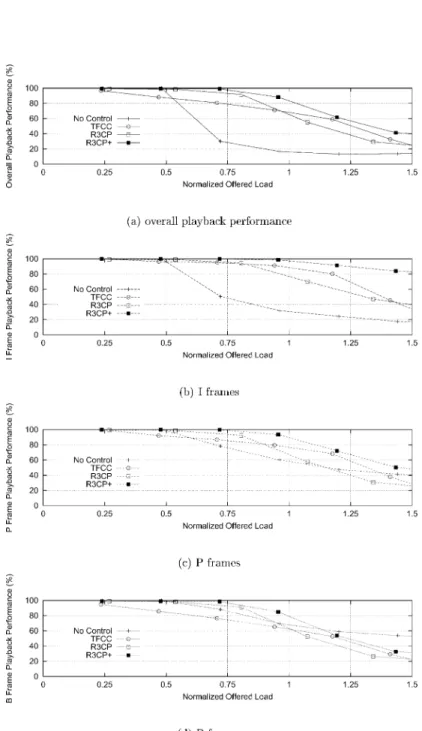

In Fig. 3, we show the overall playback performance and the performance of individual frame types. The playback performance is defined as the percentage of frames that are successfully played back. In these experiments, a frame that is completely received in time is still not played if any of its referenced frames is missing, because of inter-frame dependence. Each video connection has an average rate of 378 Kbps. From the figure, we can see that R3CP+ outperforms the other schemes over the whole range of offered loads. This is mainly due to the benefit of employing congestion control with a prediction mechanism and the in-time recovery of lost packets. R3CP+ uses more information to assess network states and thus better adapts the sender's sending behavior to the actual network conditions. It avoids driving the link into congestion by not increasing the rate too quickly. Notably, the percentage of I frames that are successfully played back remains higher than 80% even where the normalized offered load exceeds 0.75.

Fig. 4. Comparison of the packet sending rate, receiving rate and packet loss probability when the normalized offered load is 0.96. (a) Under R3CP+; (b) under TFCC.

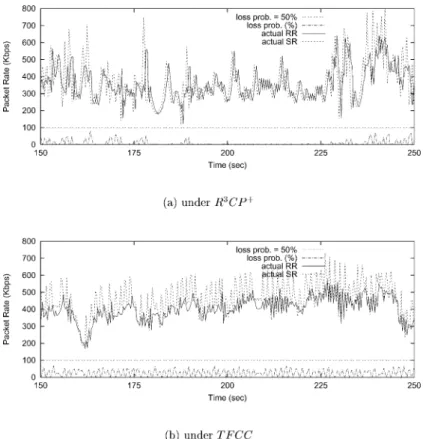

In Fig. 4, we show the histograms of the requested sending rate, receiving throughput and packet loss probability under R3CP+ and TFCC at a normalized offered load of 0.96. Under R3CP+, the packet loss probabilities are much lower than under TFCC. This is again because in R3CP+, rate adjustment is based not only on newly sampled state information but also on its history. This helps avoid unnecessary responses to transient load changes and enhances system stability. Moreover, Fig. 4(a) shows that under the fine-grained rate adjustment algorithm of R3CP+, the receiving throughput stays close to the sending rate. In TFCC, by contrast, the receiving throughput is much lower than the sending rate, which fluctuates wildly throughout the run. This results in heavy packet loss, with loss ratios exceeding 20% at times.
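Basing the rate on history as well as on the newest sample is what the abstract attributes to recursive least squares (RLS) adaptive prediction. This section does not give the filter input, order or forgetting factor used by R3CP+, so the following is only a minimal textbook exponentially weighted RLS one-step predictor (in the style of Haykin [17]) with hypothetical parameters: `order` past samples, forgetting factor `lam`, and initial inverse-correlation scale `p0`. The class name is illustrative, and the code is plain Python to stay dependency-free.

```python
from typing import List


class RLSPredictor:
    """One-step-ahead linear predictor of a scalar series (e.g. a measured
    loss probability) whose tap weights are adapted by exponentially
    weighted recursive least squares. Parameters are hypothetical."""

    def __init__(self, order: int = 3, lam: float = 0.98, p0: float = 1000.0) -> None:
        self.lam = lam                       # forgetting factor, 0 < lam <= 1
        self.w: List[float] = [0.0] * order  # filter tap weights
        # P approximates the inverse of the weighted input correlation matrix.
        self.P = [[p0 if i == j else 0.0 for j in range(order)] for i in range(order)]
        self.u: List[float] = [0.0] * order  # last `order` samples, newest first

    def predict(self) -> float:
        """Forecast of the next sample from the current history."""
        return sum(wi * ui for wi, ui in zip(self.w, self.u))

    def update(self, x: float) -> float:
        """Absorb the newly measured sample x; return the forecast of the next one."""
        n, u, P = len(self.u), self.u, self.P
        pi = [sum(P[i][j] * u[j] for j in range(n)) for i in range(n)]  # P u
        denom = self.lam + sum(u[i] * pi[i] for i in range(n))         # lam + u^T P u
        k = [p / denom for p in pi]                                     # gain vector
        e = x - self.predict()                                          # a priori error
        self.w = [wi + ki * e for wi, ki in zip(self.w, k)]
        self.P = [[(P[i][j] - k[i] * pi[j]) / self.lam for j in range(n)]
                  for i in range(n)]
        self.u = [x] + u[:-1]                                           # shift in x
        return self.predict()
```

A forgetting factor closer to 1 averages over a longer history (smoother but slower to follow a real change); a smaller one tracks load changes faster at the cost of more sensitivity to transients.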

Fig. 5. Comparison of the percentage of broken frames.

Fig. 6. Comparison of the total percentage of frames that are completely received but discarded due to the absence of the referenced frames. (a) Overall frames; (b) P frames; (c) B frames.

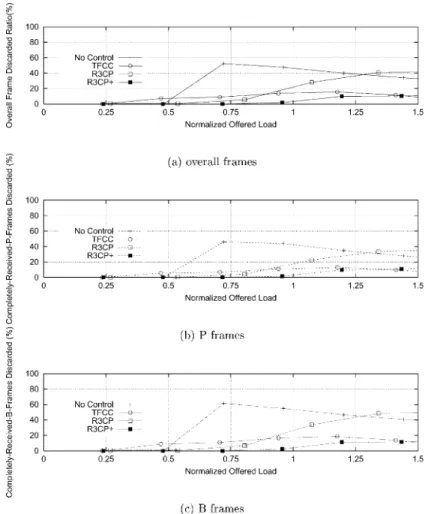

Next, we compare the frames received with the frames that can be successfully played out. Received frames fall into four categories: broken frames, late frames (complete but received after their playback deadline), orphan frames (complete and in time but missing a referenced frame) and playable frames. Because most frames are segmented into several UDP packets, a frame is said to be broken if any of its constituent packets is lost. Broken frames are not playable. In Fig. 5, we compare the percentage of broken frames under the different control mechanisms. R3CP+ has the best performance. Runs without any additional transmission control suffered severely from random packet loss. Under R3CP+, congestion control and selective transmission let the real-time session focus its packet delivery on the most important frames given the limited bandwidth available to it, thus achieving the best performance.
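The four frame categories can be made precise with a small classifier. The dependency model used here is a simplifying assumption about MPEG-style streams, not taken from this paper: a P frame references the previous I or P frame, a B frame references the previous and next I or P frames, and a reference counts as present only if it is itself playable. The names `Frame` and `classify` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Frame:
    ftype: str      # 'I', 'P' or 'B'
    complete: bool  # every constituent UDP packet arrived
    on_time: bool   # arrived before its playback deadline


def _own_status(f: Frame) -> Optional[str]:
    """Status decided by the frame alone, or None if it may still be playable."""
    if not f.complete:
        return "broken"
    if not f.on_time:
        return "late"
    return None


def classify(frames: List[Frame]) -> List[str]:
    """Label frames (in display order) as broken, late, orphan or playable."""
    labels = [""] * len(frames)
    anchors = [i for i, f in enumerate(frames) if f.ftype != "B"]

    # Anchors first, in order, so that a lost anchor orphans the P-frame chain.
    prev_ok = False  # no usable anchor seen yet
    for i in anchors:
        f = frames[i]
        status = _own_status(f)
        if status is None:
            status = "playable" if f.ftype == "I" or prev_ok else "orphan"
        labels[i] = status
        prev_ok = status == "playable"

    # B frames need both surrounding anchors to be playable.
    for i, f in enumerate(frames):
        if f.ftype != "B":
            continue
        status = _own_status(f)
        if status is None:
            prev = max((a for a in anchors if a < i), default=None)
            nxt = min((a for a in anchors if a > i), default=None)
            refs_ok = all(a is not None and labels[a] == "playable" for a in (prev, nxt))
            status = "playable" if refs_ok else "orphan"
        labels[i] = status
    return labels
```

For instance, losing one packet of the first P frame in IBBPBBP leaves only the I frame playable: the P frame is broken, and every later frame in that window becomes an orphan. This is why protecting anchor frames pays off disproportionately.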

Fig. 6 shows the total percentage of frames that are completely received on schedule but cannot be played because their referenced frames were lost. By employing selective retransmission of lost packets, especially those of the important I frames, both R3CP+ and TFCC with R3CP achieve better overall playback performance and bandwidth utilization.
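Selective transmission, named above as one reason R3CP+ produces fewer broken frames, can be illustrated by a simple priority rule. This section does not specify how R3CP+ chooses frames, so the strict I > P > B ordering, the per-window packet budget and the function name `select_frames` are assumptions for illustration only.

```python
from typing import List, Tuple

# (frame_id, frame_type, size_in_packets); frames assumed listed in decoding order.
FrameInfo = Tuple[int, str, int]


def select_frames(frames: List[FrameInfo], budget_packets: int) -> List[int]:
    """Greedy selective transmission under a packet budget (hypothetical rule):
    send all I frames, then P frames, then B frames; once a frame does not fit,
    drop it and every less important frame, so bandwidth is not spent on frames
    whose references may be missing."""
    chosen: List[int] = []
    remaining = budget_packets
    for ftype in ("I", "P", "B"):
        for fid, t, size in frames:
            if t != ftype:
                continue
            if size > remaining:
                return sorted(chosen)  # budget exhausted
            chosen.append(fid)
            remaining -= size
    return sorted(chosen)
```

Taking P frames in decoding order keeps the P-frame chain intact up to the point where the budget runs out, so every selected B frame in the example below still has both anchors.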

4.2. Responsiveness and stability

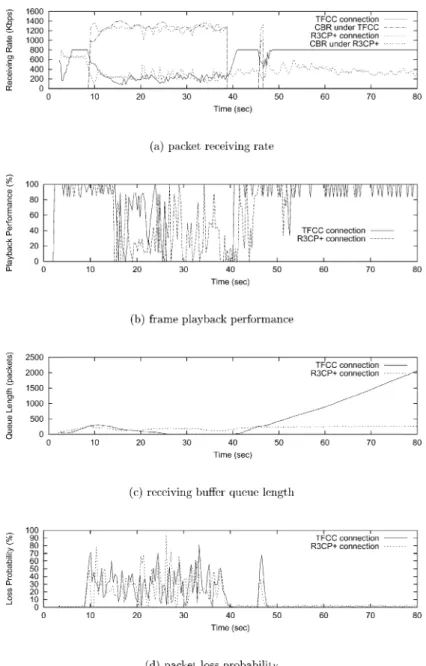

Fig. 7. Responsiveness to transient and long-lasting congestion events. (a) Packet receiving rate; (b) frame playback performance; (c) receiving buffer queue length; (d) packet loss probability.

In this section, we show the effectiveness of the proposed rate/congestion control scheme in handling long-lasting as well as transient congestion events. In the experiments, two background traffic sources are generated: a constant-bit-rate UDP flow that sends fixed-size packets (1000 bytes) at a rate of 1.454 Mbps from time 8.5 to time 38.5, and a burst of fixed-size UDP packets (1000 bytes) injected into the congested link at a rate of 1.454 Mbps from time 45.4 to time 46.4. We compare the usage of various resources by a real-time flow under R3CP+ or TFCC. Playback of the video stream starts at time 2. Fig. 7 shows the histograms of the packet receiving rate and the corresponding frame playback performance, receiving buffer queue length and measured packet loss probability.
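Some quick arithmetic shows how severe the long-lasting event is. Assuming the stated rates are raw bit rates with 1 Mbps = 10^6 bit/s and ignoring header overhead, a 1.454 Mbps stream of 1000-byte packets emits one packet about every 5.5 ms and leaves only about 46 Kbps of the 1.5 Mbps bottleneck idle, far less than the roughly 380 Kbps that R3CP+ settles at once congestion ends. The function names are illustrative.

```python
def packet_interval_ms(rate_mbps: float, packet_bytes: int) -> float:
    """Spacing between consecutive packets of a constant-bit-rate source."""
    return packet_bytes * 8 / (rate_mbps * 1e6) * 1e3


def leftover_kbps(link_mbps: float, background_mbps: float) -> float:
    """Bottleneck capacity not consumed by the background source."""
    return (link_mbps - background_mbps) * 1e3


print(f"{packet_interval_ms(1.454, 1000):.3f} ms between background packets")
print(f"{leftover_kbps(1.5, 1.454):.1f} Kbps left on the bottleneck")
```

So during the congestion interval a video flow can only obtain its share by competing with the CBR source at the bottleneck queue, which is the situation in which TFCC loses out.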

First, the flow using R3CP+ converges to a steady state faster than the flow under TFCC. Notice that under TFCC, the flow keeps grabbing available bandwidth until it reaches its upper bound, which is set to 800 Kbps. At time 8, congestion occurs. The adaptive congestion control mechanism in R3CP+ is able to compete with the background UDP traffic during the congestion period and keeps enough packets in the receiving buffer to ensure continuous playback of video frames. TFCC, on the other hand, fails to contend for bandwidth with the aggressive UDP traffic: from time 20 to time 40, no frames are played under TFCC. In terms of playback performance (Fig. 7(b)), R3CP+ performs much better than TFCC. When congestion ends at time 38, both R3CP+ and TFCC pick up bandwidth rapidly. Note that R3CP+ converges to the rate it needs (around 380 Kbps), while TFCC hoards bandwidth, resulting in continuous growth of the buffer queue length.

Fig. 8. Bandwidth sharing between R3CP+ flows.

4.3. Fairness with real-time sessions

In Fig. 8, we study how real-time flows employing the R3CP+ control mechanisms share link bandwidth. The first R3CP+ flow, shown as the dark line, starts at time 0.33. A second R3CP+ flow, shown as the dashed line, joins at time 100.19. A background UDP flow sending at 909.09 Kbps is active throughout, and the link capacity is 1.5 Mbps. After the second flow enters the congested link, the first flow detects the change and reduces its rate; within a very short time, the two flows reach almost the same throughput and share the bandwidth left over by the background flow equally.
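As a quick check on what equal sharing means in this setup: the background flow leaves 1500 - 909.09 = 590.91 Kbps, or about 295 Kbps per flow when two R3CP+ flows compete (assuming 1.5 Mbps = 1500 Kbps and ignoring header overhead). To quantify "almost the same throughput", one standard option, which this paper does not itself use, is Jain's fairness index: it equals 1 for perfectly equal throughputs and 1/n when one of n flows gets everything. Function names are illustrative.

```python
from typing import Sequence


def residual_fair_share_kbps(link_kbps: float, background_kbps: float, n_flows: int) -> float:
    """Equal share of the capacity left over by a fixed-rate background flow."""
    return (link_kbps - background_kbps) / n_flows


def jain_index(throughputs: Sequence[float]) -> float:
    """Jain's fairness index (sum x)^2 / (n * sum x^2); requires some x > 0."""
    n = len(throughputs)
    total = sum(throughputs)
    squares = sum(x * x for x in throughputs)
    return total * total / (n * squares)
```

Applied to measured per-flow throughputs after time 100.19, an index close to 1 would confirm the equal sharing visible in Fig. 8.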

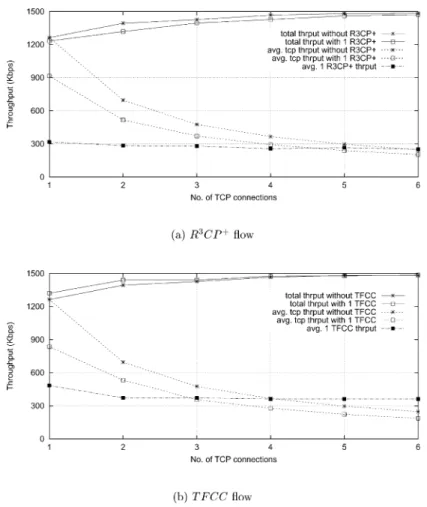

4.4. Fairness with TCP traffic

In this section, we study the bandwidth sharing between R3CP+ flows and TCP connections. A definition of TCP `friendliness' has been widely adopted in the congestion control of real-time applications [12–15]. In essence, if a UDP connection shares a bottleneck link with TCP connections, the UDP connection should receive the same share of bandwidth (i.e. achieve the same throughput) as a TCP connection. One concern with this approach is that real-time applications have more stringent performance requirements than non-real-time applications (which mainly use TCP). While equal sharing of the bandwidth over a bottleneck link might sound fair at first glance, we believe that relative fairness between UDP and TCP traffic is only achievable if a congestion control algorithm for real-time applications can be shown to be `reasonably fair' to TCP. This philosophy is more practical for meeting the performance objectives of both types of applications as well as for maximizing link utilization. Nevertheless, one still needs to be careful about how much more bandwidth a real-time connection should be given, which is still an open research issue [23]. In the following, we show that the proposed real-time flow/congestion control scheme allows a real-time connection to obtain only a slightly larger amount of resources than TCP during congestion.
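One common way to make "the same throughput as a TCP connection" concrete is to compare against a model of TCP throughput. The sketch below uses the well-known simplified steady-state model of Mathis et al., rate ≈ (MSS/RTT)·sqrt(3/(2p)); it illustrates the strict friendliness criterion discussed above and is not part of R3CP+. It ignores timeouts and receiver-window limits, and the MSS and path values in the check are hypothetical.

```python
import math


def tcp_friendly_rate_bps(mss_bytes: int, rtt_s: float, loss_prob: float) -> float:
    """Simplified steady-state TCP throughput: (MSS/RTT) * sqrt(3 / (2p)).
    Ignores retransmission timeouts and receiver-window limits."""
    if not 0.0 < loss_prob <= 1.0:
        raise ValueError("loss_prob must be in (0, 1]")
    if rtt_s <= 0.0:
        raise ValueError("rtt_s must be positive")
    return mss_bytes * 8 / rtt_s * math.sqrt(3.0 / (2.0 * loss_prob))


# Hypothetical path: 1000-byte segments, 100 ms RTT, 1% loss -> about 980 Kbps.
print(round(tcp_friendly_rate_bps(1000, 0.1, 0.01)))
```

Under this model the fair rate scales as 1/RTT and 1/sqrt(p), so a strictly TCP-friendly real-time flow on a long, lossy path may receive far less than its playback rate; this is the tension behind the `reasonably fair' position taken here.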

Let us look at the average throughput a TCP connection can obtain when multiple TCP connections transmit over a shared link. In Fig. 9(a), one can see that the average throughput keeps declining as more TCP connections join. Now consider the addition of an R3CP+ connection. When there is no congestion, the R3CP+ connection is very self-controlled: it adjusts its rate without increasing it aggressively and causing congestion. It takes only what it needs and shares bandwidth with TCP connections in a consistent and friendly manner. When more TCP connections are added to the link, the network becomes congested, and the R3CP+ connection executes its congestion control and reduces its rate. There is no `TCP bandwidth starvation'. The average throughput of the TCP connections is only slightly lower than in the case without the R3CP+ connection; the reduction is mainly due to competition among the TCP connections themselves, which occurs with or without the R3CP+ connection. In Fig. 9(b), we show the results of the same experiments using TFCC. The connection using TFCC obtains more bandwidth than the connection using R3CP+; hence, the average throughput of TCP connections is greater alongside the R3CP+ connection than alongside the TFCC connection.

4.5. Internet experiment results

Here, we present some performance results from experiments conducted over the Internet. Three different Internet paths are tested, each with different transmission characteristics. A video server is located at each of the three sites (bigzoo.g1.ntu.edu.tw, fanga.iim.nctu.edu.tw and deepblue2.stanford.edu). The client station is located at the computer center of National Taiwan University (140.112.3.120). Table 2 gives a snapshot of the RTT and loss probability statistics for the three sets of experiments. In Table 3, we compare the frame playback performance of the runs under R3CP+, R3CP and transmission without any control. Detailed results are reported in Ref. [24].

Table 2. Statistics of the round-trip delay and loss probability

| Source | Avg. RTT (ms) | Loss prob. |
|---|---|---|
| NTU | 10.205 | 0.1267 |
| NCTU | 32.378 | 0.0522 |
| Stanford Univ. | 258.891 | 0.00933 |

Table 3. Comparison of frame playback performance

| Source | R3CP+ (%) | R3CP (%) | No control (%) |
|---|---|---|---|
| NTU | 91.25 | 90.63 | 9.55 |
| NCTU | 90.24 | 93.36 | 8.27 |

5. Conclusions

In this paper, we have presented a prediction-based flow/congestion control scheme called R3CP+ for real-time stored packet video transfer over the best-effort Internet. The protocol combines several control mechanisms. First, an adaptive filter is used to predict the network state based on the current measurement as well as the history. The objective is to capture both the `trend' and the `transiency' of the network load behavior. With the forecast, the receiver calculates its desired sending rate by taking into account the occupancy of the receiving buffer. In this way, we ensure that the receiver is self-controlled and does not ask the sender to send more data than it needs to assure continuous playback of frames. Finally, depending on its assessment of the network condition, the receiver determines the requested sending rate differently.
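The receiver-side logic summarized above, a buffer-occupancy based desired rate followed by a requested rate that depends on the assessed network state, could look roughly like the following. This section does not give R3CP+'s actual formulas, so both functions, the loss thresholds, the back-off factor and the `horizon_s` parameter are hypothetical; the predicted loss input would come from a forecaster such as an RLS filter.

```python
def desired_rate(playback_pps: float, queue_len: float, target_queue: float,
                 horizon_s: float) -> float:
    """Hypothetical buffer-occupancy rule: match the playback consumption rate,
    plus (or minus) what is needed to bring the receive queue back to its
    target within horizon_s seconds. Never negative."""
    correction = (target_queue - queue_len) / horizon_s
    return max(0.0, playback_pps + correction)


def requested_rate(desired: float, predicted_loss: float, current_throughput: float,
                   p_low: float = 0.01, p_high: float = 0.05,
                   backoff: float = 0.8) -> float:
    """Hypothetical state-dependent request; never asks for more than desired."""
    if predicted_loss < p_low:      # unloaded: ask for what playback needs
        return desired
    if predicted_loss < p_high:     # loaded: do not exceed what currently gets through
        return min(desired, current_throughput)
    return min(desired, backoff * current_throughput)  # congested: back off
```

Capping every branch at `desired` is what keeps such a receiver self-controlled: even on an idle path it never asks for more than playback requires, unlike the TFCC flow in Section 4.2 that climbs to its 800 Kbps bound.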

We have studied and compared the performance of the proposed R3CP+ protocol with other schemes both via simulation and through an actual implementation. The simulation results show that R3CP+ outperforms schemes such as the popular linear-increase/multiplicative-decrease algorithm over the entire range of offered loads studied. This is due to the use of an adaptive filter for network state forecasting and assessment, together with finer control of rate adjustment. As a result, R3CP+ not only adapts the packet sending rate better to the actual conditions but also avoids unnecessary responses to transient load changes, which helps maintain system stability. The combined use of a buffer-occupancy based desired sending rate, in-time packet retransmission and selective transmission also significantly improves playback performance.

We have also studied resource sharing among R3CP+ flows as well as with TCP connections. The results show that because each R3CP+ flow is congestion-conscious when it performs rate adaptation, R3CP+ flows achieve fair bandwidth sharing at a bottleneck link. Moreover, an R3CP+ flow also shares bandwidth with TCP connections in a reasonably friendly fashion. By reasonably friendly, we mean that during congestion the real-time connection takes only slightly more bandwidth than a TCP connection does. The throughput reduction for TCP connections is mainly due to competition among the TCP connections themselves, with or without the R3CP+ connection. Therefore, there is no TCP bandwidth starvation when real-time connections use the flow/congestion control mechanisms of R3CP+.

References

[1] R. Braden, L. Zhang, S. Berson, S. Herzog, S. Jamin, Resource ReSerVation Protocol (RSVP) -- Version 1 functional specification, RFC 2205, September 1997.

[2] J. Wroclawski, The use of RSVP with IETF integrated services, RFC 2210, September 1997.

[3] J. Wroclawski, Specification of the controlled-load network element service, RFC 2211, September 1997.

[4] S. Shenker, C. Partridge, R. Guerin, Specification of guaranteed quality of service, RFC 2212, September 1997.

[5] S. Chong, S-Q. Li, J. Ghosh, Predictive dynamic bandwidth allocation for efficient transport of real-time VBR video over ATM, IEEE JSAC January (1995).

[6] J.M. McManus, K.W. Ross, Video-on-demand over ATM: constant-rate transmission and transport, IEEE JSAC August (1996).

[7] M. Grossglauser, S. Keshav, D.N.C. Tse, RCBR: a simple and efficient service for multiple time-scale traffic, IEEE/ACM Transactions on Networking December (1997).

[8] A.M. Adas, Using adaptive linear prediction to support real-time VBR video under RCBR network service model, IEEE/ACM Transactions on Networking October (1998).

[9] Z. Chen, S. Tan, R. Campbell, Y. Li, Real time video and audio in the World Wide Web, Fourth International World Wide Web Conference, 1995.

[10] C. Papadopoulos, G.M. Parulkar, Retransmission-based error control for continuous media applications, Proceedings of NOSSDAV'96, 1996.

[11] Y. Sun, F.M. Tsou, M.C. Chen, A buffer-occupancy based adaptive flow control scheme with packet retransmission for stored video transport over Internet, IEICE Transactions on Communications November (1998).

[12] D. Sisalem, H. Schulzrinne, The loss-delay based adjustment algorithm: a TCP-friendly adaptation scheme, Proceedings of NOSSDAV'98, July 1998.

[13] R. Rejaie, M. Handley, D. Estrin, RAP: an end-to-end rate-based congestion control mechanism for realtime streams in the Internet, INFOCOM'99, 1999.

[14] J. Padhye, J. Kurose, D. Towsley, R. Koodli, A TCP-friendly rate adjustment protocol for continuous media flows over best effort networks, ACM Sigmetrics'99, May 1999.

[15] S. Floyd, K. Fall, Router mechanisms to support end-to-end congestion control, Technical Report, February 1997.

[16] D. Sisalem, Fairness of adaptive multimedia applications, ICC'98, 1998.

[17] S. Haykin, Adaptive Filter Theory, Prentice-Hall, Englewood Cliffs, 1986.

[18] D.D. Clark, D. Tennenhouse, Architectural considerations for a new generation of protocols, ACM SIGCOMM'90, September 1990.

[19] ISO/IEC International Standard 11172, Coding of Moving Pictures and Associated Audio for Digital Storage Media up to about 1.5 Mbits/s, November 1993.

[20] ISO/IEC International Standard 13818, Generic Coding of Moving Pictures and Associated Audio Information, November 1994.

[21] UCB/LBNL/VINT, Network Simulator ns (version 2), http://www-mash.cs.berkeley.edu/ns/.

[22] M.W. Garrett, A. Fernandez, MPEG-1 Video Trace, Bellcore, ftp://thumper.bellcore.com/pub/vbr.video.trace/MPEG.data, 1992.

[23] F. Kelly, Charging and rate control for elastic traffic, European Transactions on Telecommunications 8 (1997).

[24] I-K. Tsay, An implementation and performance evaluation of end-to-end joint flow/congestion control scheme for real-time stored packet video over Internet, Master Thesis, June 1999.