National Chiao Tung University

Institute of Computer Science and Information Engineering

Doctoral Dissertation

Generic Interface Bridge between HCI and Applications

Student: Shih-Jung Peng

Advisor: Prof. Deng-Jyi Chen

Generic Interface Bridge between HCI and Applications

Student: Shih-Jung Peng

Advisor: Dr. Deng-Jyi Chen

A Dissertation Submitted to the Institute of Computer Science and Information Engineering, College of Computer Science, National Chiao Tung University, in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Computer Science and Information Science

May 2009

Hsinchu, Taiwan, Republic of China

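Generic Interface Bridge between HCI and Applications

Student: Shih-Jung Peng Advisor: Dr. Deng-Jyi Chen

Institute of Computer Science and Information Engineering, National Chiao Tung University

Chinese Abstract

In a windowing environment, the traditional way to give an already-developed application interface control capability, so that it can be operated through an interface device, is to write the control functions of the interface control system directly into the application at design time, using function calls and modularization, and to package them in a single application. This approach has several problems. (1) The developer needs extensive background knowledge of both the control interface and the programming language to design an application with HCI control functions. (2) To add HCI control functions to an existing application, the designer must have its source code; without the source code, it is very hard to add or modify interface control functions. (3) Even with the source code, the designer must re-analyze the principles, architecture, and techniques of the application before a suitable interface control program can be written. Such a development process is inflexible and inefficient, and it wastes considerable manpower and time. For these reasons, this dissertation applies software engineering methodology to develop a visual interface platform with a generic architecture that serves as a bridge between interface control devices and applications. Through this bridge, the user only makes simple descriptive settings for the control components of the application and writes no code, yet can connect an interface control device to a windows application, easily turning a windows application that had no interface control capability into one with HCI control functions.

The research on this visual generic interface system has two parts. First, we propose the concept of a visual generic interface architecture: the visual generic bridge interface connects ordinary interface controls with windows applications and gives the user simple interface operations and command settings. The user can define controllable square objects at arbitrary coordinates in the window and give each square object a name. Once a square object is set at the corresponding position over the windows application, a control command is sent by speech to the Generic Interface Bridge (GIB); the GIB parses the input command and then simulates mouse or keyboard operations on that square object, so that the windows application is operated by speech. To increase flexibility and extensibility, we use macro commands to define and combine control commands, so that a single macro command can execute several speech commands in sequence. This speeds up command entry and avoids the situation in which an overly long command lets noise enter during input and degrade recognition. Second, we want to use a PDA phone to operate multimedia windows applications on a remote PC or digital TV over a network. Giving a phone this capability, however, normally requires writing complex programs in both the phone and the multimedia application. We therefore adopt the proposed visual generic interface method and combine it with a program parser (Parser Generator) that we developed to parse multimedia applications on a specific device. Through operation of the generic bridge interface and descriptive settings of the application components, a developer can automatically and quickly generate, on the phone, an interface environment that controls the multimedia application, without writing any phone interface control program, and thereby control the multimedia application remotely from the phone.

To show the applicability and feasibility of the proposed method, the demonstration examples take windows applications that originally had no speech or remote control functions and, without any code being written, combine them simply, quickly, and efficiently with our generic bridge interface. Control commands are entered by speech or PDA phone and parsed by the bridge interface system, so that the windows application is controlled easily.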

Generic Interface Bridge between HCI and Applications

Student: Shih-Jung Peng Advisor: Prof. Deng-Jyi Chen

Institute of Computer Science and Information Engineering

National Chiao-Tung University

Abstract

In a Windows environment, the traditional way to give an existing window application Human Computer Interaction (HCI) control ability is to write the control procedures directly into the application, using low-level design methods to package them into a single application system. With this approach, the designer needs considerable knowledge of application system design and programming to devise an application with HCI control functions. Moreover, once the design is complete, it is difficult to revise or add system functions without the source code.

Three major problems arise when HCI control procedures are developed this way. First, system designers need extensive knowledge of HCI design and programming languages to design an application with HCI functions. Second, to add interaction ability to an application that lacks it, we need the source code of that application, because the program is hard to modify without it. Third, even with the source code, we must re-analyze the entire structure of the application to write a suitable control program. These tasks burden the designer and make development inflexible and inefficient. In this thesis we apply software engineering methodology to develop a visual generic interface bridge (GIB) system. With the GIB system, designers can give an application speech or remote HCI control functions more easily and efficiently: they only define some parameters of objects in the application environment, and users are not required to write any program code.

The research on the GIB system consists of two parts: “Integration of GIB and HCI” and “GIB-based Application Interface (GAI) generation”. In the first part, we propose the concept of a GIB system that connects HCI and applications. The GIB provides a visual operating interface in which designers draw controllable square objects at the corresponding positions on the window and name each square object. Speech commands then trigger the mouse and keyboard actions associated with a square object, so the application can be controlled by speech. To make operation more flexible and extensible, macro commands are used to define and combine control commands. One macro command may combine several control commands, which avoids the noise effects that long spoken commands invite and makes application control more flexible. In the second part, the GAI system, we use a PDA cellular phone to control application programs on the PC. This is normally not simple, because many complicated procedures must be written in and between these systems. We therefore combine the proposed GIB concept with a program parser, so that the PDA can connect with HCI and multimedia applications and control them through simple interface operations and settings of the application (AP) environment.

To demonstrate the feasibility and suitability of the proposed method, we implement a GIB system that serves as the bridge between the HCI interface and window applications. In the examples, we connect a generic visual interface to application programs in a simple, fast, and effective manner. Speech or remote-control HCI ability is added through the GIB system without writing any program code.

Acknowledgement

Foremost, I would like to sincerely thank my advisor, Professor Deng-Jyi Chen, who provided not only creative suggestions and discussions throughout my studies but also immense assistance, support, patience, and inspiration.

Particular thanks go to Chien-Chao Tseng (Prof. of NCTU) for his tremendous assistance and support. Special thanks also go to Shih-Kun Huang (associate Prof. of NCTU), Yeh-Ching Chung (Prof. of NTHU), Koun-Tem Sun (Prof. of NUTN), Wu-Yuin Hwang (associate Prof. of NCU), and Chorng-Shiuh Koong (assistant Prof. of NTHU), who served as members of the oral examination committee for my doctoral dissertation. Their many valuable suggestions helped me expand the research to a broader scope and to more important applications than I had initially started with.

I am grateful to my friends Chin-Eng Ong and Jan Karel Ruzicka, Masters of Science in Computer Science and Information Engineering, for their cooperation and assistance, which made this dissertation more applicable and complete. Thanks to all of my friends who have helped me in my life, research, and studies.

I owe many thanks to my wife, Pei-Ling Chen, my children, Yi-Ping and Yi-Han, and my parents and parents-in-law for their love, patience, and support, and for always standing by me. Finally, I would like to dedicate this dissertation to my family.

Table of Contents

Chinese Abstract

Abstract

Acknowledgement

List of Figures

List of Tables

1. Introduction

1.1 Introduction

1.2 Motivation and Goals

1.3 Organization of this dissertation

2. Related Work

2.1 Touch panel interface

2.2 Speech recognition engine

2.3 Script language

2.4 Compile grammar definition

2.5 Macro command

2.6 OS’s API

3. Integration GIB with HCI

3.1 Run Application in GIB (RAGIB) Windows

3.1.1 Input module

3.1.1.1 Speech command

3.1.1.2 Composed command

3.1.1.3 Macro command registration

3.1.1.4 Command translation flow

3.1.2 Kernel module

3.1.2.1 Lexical translator

3.1.2.2 Syntax analyzer

3.1.2.3 Command analyzer flow

3.1.3 Output module

3.1.4 Identifiers

3.1.4.1 Command class

3.1.4.2 Command parameter

3.1.4.3 Connector

3.1.4.4 Terminator

3.1.4.5 Operators

3.1.4.6 Constant parameters

3.1.4.7 Selection commands

3.1.4.8 Setting commands

3.1.4.9 Mouse action commands

3.1.4.10 Keyboard action commands

3.1.4.11 System action commands

3.1.5 Process description

3.1.6 Limitation

3.2 Separate Application and GIB (SAGIB) Windows

3.2.1 Identifiers

3.2.1.1 Command class

3.2.1.2 Mouse position control

3.2.1.3 Mouse action control

3.2.1.4 Keyboard control

3.2.1.5 File execution control

3.2.2 Process description

4. GIB-based Application Interface (GAI) Generation

4.1 Interface

4.2 Procedure

4.3 Algorithm

4.3.1 Algorithm of application interface loader

4.3.2 Algorithm of control interface generator

5. Demonstration

5.1 Integration of GIB and speech recognizer

5.1.1 Running an Application

5.1.2 Registering an application

5.1.3 Registering a square object

5.1.4 Registering grids

5.1.5 Registering a stage

5.1.6 Registering a macro command

5.1.7 Examples of application “Sol”

5.2 Separate GIB and application windows

5.2.1 Application running

5.2.2 Setting spring distance of mouse cursor

5.2.3 Jumping and moving control of mouse cursor

5.2.5 Example of application “Word”

Example of document reading

5.3 GAI generation

5.3.1 Example of application “VCard”

6. Conclusions and Future Work

6.1 Conclusion

6.2 Future work

Reference

Appendix

A. BNF of control commands

B. Partial syntax tree of control commands

Vita

Publications

[1] Referred journal paper

[2] Referred conference paper

List of Figures

Figure 1-1 General developing method of speech control

Figure 1-2 OS integration approach

Figure 1-3 Architecture of GIB control system

Figure 1-4 General developing method of handheld device control

Figure 1-5 Rajicon System Architecture

Figure 1-6 PUC System Architecture

Figure 1-7 Sample group decision tree for a shelf stereo

Figure 1-8 Architecture of proposed handheld device control system

Figure 2-1 Touch panel interface

Figure 2-2 Click-through buttons

Figure 2-3 Recognition training steps of MS speech recognizer V5.1

Figure 2-4 Speech command input format

Figure 2-5 Script language syntax tree of command “DRAGSQUARE”

Figure 2-6 Microsoft Speech SDK

Figure 2-7 Microsoft grammar compilers

Figure 3-1 System architecture of GIB

Figure 3-2 Run application under GIB system

Figure 3-3 Format of file GRAMMAR.XML

Figure 3-4 Format of composed commands

Figure 3-5 Macro command registration

Figure 3-6 Translation flow of speech command

Figure 3-7 Command processing flow

Figure 3-8 Lexical translating

Figure 3-9 Grammatical rules in file “GRAMMAR.XML”

Figure 3-10 Grammatical rule flow in file “GRAMMAR.XML”

Figure 3-11 Event delegation

Figure 3-12 File hierarchical organization

Figure 3-13 Operating flow chart of GIB system

Figure 3-14 Rule composition flow

Figure 3-15 Mouse action environments

Figure 3-16 Operating flow chart of modified GIB system

Figure 4-1 Framework of traditional cellular phone interface system

Figure 4-2 Framework of proposed cellular phone control interface system

Figure 4-3 Sample of specification file of AP “VCard”

Figure 4-4 Cellular phone interface modules

Figure 4-5 Cellular phone interface generated procedures

Figure 5-2 Operation steps of registering an application

Figure 5-3 Operation steps of registering a square

Figure 5-4 Operation steps of registering grids

Figure 5-5 Operation steps of registering a stage

Figure 5-6 Operation steps of registering a macro command

Figure 5-7 Defined control objects of application “Sol”

Figure 5-8 Operation in application “Sol”

Figure 5-9 Set command stream “OPEN MY DOCUMENT” in macro designed folder

Figure 5-10 Mouse cursor one-step moving distance setting

Figure 5-11 Mouse cursor one-step moving control

Figure 5-12 Mouse cursor jumping control

Figure 5-13 Mouse cursor moving control

Figure 5-14 Executing steps of application “Media Player”

Figure 5-15 Writing English or Chinese alphabet with speech commands

Figure 5-16 Using speech command to help user reading document

Figure 5-17 Executing application program “VCard”

Figure 5-18 Loading and running Java AP on the system

Figure 5-19 Flow of generating control table of AP “VCard”

List of Tables

Table 1-1 Remote control functions with cellular phone keypad mapping

Table 5-1 Sample macro command of control application “Sol”

1. Introduction

1.1 Introduction

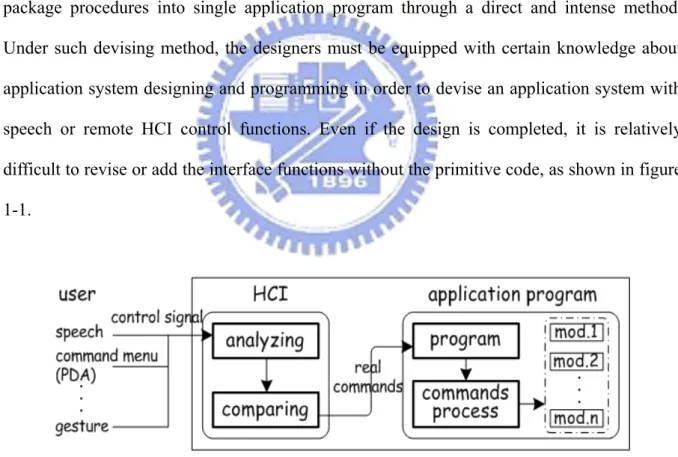

In a typical window-based interface system, users control application programs that contain HCI (Human Computer Interaction) control functions directly and conveniently, by speech or through a handheld device such as a PDA or cellular phone. The traditional method of giving a window application speech or wireless remote HCI control ability is to integrate the HCI control functions and the API procedures of the speech or handheld device directly into the application, using low-level design methods to package the procedures tightly into a single application program. With this method, designers need considerable knowledge of application system design and programming to devise an application with speech or remote HCI control functions. Even after the design is completed, it is difficult to revise or add interface functions without the source code, as shown in figure 1-1.

Figure 1-1 General developing method of speech control

Three major problems exist in developing speech or remote HCI control procedures this way. First, system designers need extensive knowledge of control interface design and programming languages to design an application with speech or remote HCI control functions. Second, to add speech or remote HCI control functions to an application program that lacks interface control ability, we must obtain the source code of that program, because the design is hard to carry out without it. Third, even if the code is available, we must re-analyze the entire structure of the application to write a suitable interface control program. These tasks burden the designer and make development inflexible and inefficient.

To overcome these issues, in this thesis we apply software engineering methodology to develop a visual generic interface bridge (GIB) system, which we present in two parts: first, “Integration of GIB and Speech HCI,” and second, “GIB-based Application Interface (GAI) generation,” in which a wireless handheld device is taken as an example. With the GIB system, designers give an application speech or wireless remote HCI control functions more easily and efficiently; the only requirement is to define some parameters of square objects in the application environment, and users do not need to write any program code.

In part one, we note that most voice-controlled robots use the traditional development method to design their voice control interface. One example is AT&T’s Speech-Actuated Manipulator (SAM) [1], in which voice commands given over the telephone are interpreted and then carried out as the corresponding actions. With such a tightly coupled design, any modification of a speech control function requires acquiring and understanding the source code of the application system, writing the control functions with low-level design methods, and recompiling the entire program. This is inefficient, and without the original source code of the application it is hard to design, add, or revise control functions.

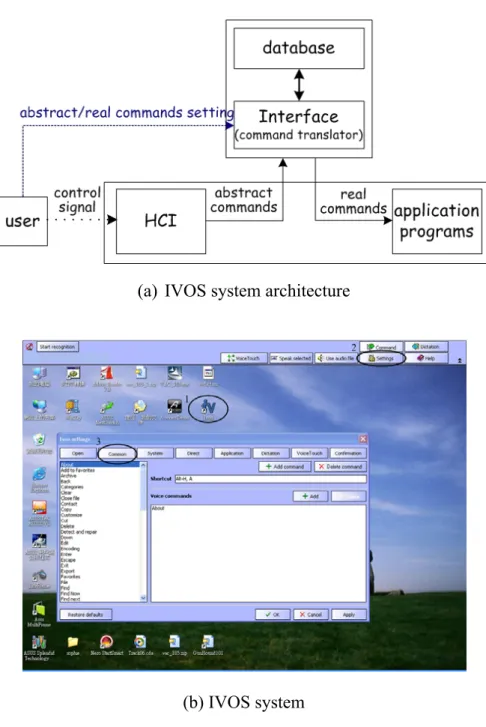

(a) IVOS system architecture

(b) IVOS system

Figure 1-2 OS integration approach

An OS integration approach lets one speech recognizer interact with a domain of applications; examples are Vspeech 4.0 [2], Voxx 5.0 [3] and IVOS [4]. It relies on the appearance of the windows interface, designs a fixed set of spoken operation functions, and establishes the names of the corresponding operation instructions. Different instruction names may control the same operating function; for example, “open file” and “file open” both map to <Alt>-F. The designed speech command definitions are then used to control the application system with speech commands, as shown in figure 1-2.
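The fixed mapping used by this kind of OS integration approach can be pictured with a minimal sketch. The table contents beyond the two phrases quoted above, and the function name `lookup_hotkey`, are assumptions made only for illustration; they are not taken from Vspeech, Voxx, or IVOS.

```python
# Hypothetical fixed table: several spoken phrases share one hot-key chord.
# Only the two phrases below come from the text; the structure is assumed.
HOTKEYS = {
    "open file": ("Alt", "F"),
    "file open": ("Alt", "F"),
}


def lookup_hotkey(phrase):
    """Return the key chord for a recognized phrase, or None if undefined."""
    # Normalize case and whitespace so recognizer output matches the table.
    normalized = " ".join(phrase.lower().split())
    return HOTKEYS.get(normalized)
```

A phrase that is not in the table simply has no effect, and nothing in such a table can describe a mouse action, which is the limitation discussed next.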

However, this method of developing a speech control system has two problems. First, it leaves no flexibility for future modification of the control functions. The control functions are fixed in the HCI system and cannot be added to or revised. If a function needed to control an application was not defined, we must obtain the source code of the application and redesign it, which increases the difficulty and the workload. Second, it can only control application functions through hot-key commands. Because the system defines control functions only for the keyboard, only keyboard actions can be controlled. Many applications also have functions driven by mouse actions, and this approach cannot fully handle them.

For the reasons stated above, we develop a visual generic interface bridge (GIB) system between speech HCI and window-based application programs. The GIB provides a visual operating interface in which designers draw recognizable square objects at the corresponding positions on the window and name each square object. Speech commands can then control the mouse and keyboard actions at the position of a square object. To make operation of the application more flexible and extensible, we use macro commands to define and combine control commands. One macro command may combine several control commands; together with grammar analysis, this avoids the noise effects of long spoken commands and makes application control more flexible.

In this way, any application program that previously had no speech HCI control ability can be controlled with speech commands after simple square objects and sets of macro commands are defined, without writing any program code, as shown in figure 1-3.

Figure 1-3 Architecture of GIB control system
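The idea of named square objects and macro commands can be illustrated with a small sketch. It is not the GIB implementation: the class names, the "action target" command format, and the choice of the square center as the action position are all assumptions made for illustration, and the actual command grammar of the system is defined in later chapters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SquareObject:
    """A named rectangular region drawn over the application window (assumed layout)."""
    name: str
    x: int
    y: int
    width: int
    height: int

    def center(self):
        # Assumption: actions are aimed at the center of the square.
        return (self.x + self.width // 2, self.y + self.height // 2)


class Bridge:
    """Hypothetical registry of square objects and macro commands."""

    def __init__(self):
        self.squares = {}
        self.macros = {}

    def register_square(self, square):
        self.squares[square.name] = square

    def register_macro(self, name, commands):
        self.macros[name] = list(commands)

    def expand(self, command):
        """Expand a macro into its control commands; other commands pass through.

        Macros may refer to other macros; cyclic definitions are not detected here.
        """
        if command in self.macros:
            steps = []
            for sub in self.macros[command]:
                steps.extend(self.expand(sub))
            return steps
        return [command]

    def resolve(self, command):
        """Turn one spoken command into a list of (action, x, y) tuples."""
        actions = []
        for step in self.expand(command):
            # Assumed command format: "<action> <square name>".
            action, _, target = step.partition(" ")
            cx, cy = self.squares[target].center()
            actions.append((action, cx, cy))
        return actions
```

With two squares named "deck" and "pile" and a macro "deal" defined as the commands "click deck" and "doubleclick pile", a single short utterance resolves to two positioned mouse actions, which is how a macro keeps spoken commands short.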

In part two, we turn to remote control methods, which have been discussed in recent years. In 2001, it was proposed that a cellular phone be used to control remote HCI running on the PC. A cellular phone is a mobile device with a small screen that cannot show the same GUI (Graphical User Interface) as the PC screen. Therefore we must analyze the GUI and develop function programs for the cellular phone according to how it is used to interact remotely with the GUI on the PC. Meanwhile, as techniques advance and household communication equipment gains functions, the styles of operation and interaction between people and devices have become more complex. Most multimedia content can run and be displayed on many kinds of platforms but has no remote control ability originally. People may therefore expect that using a simple instrument, such as a cellular phone or PDA, to remotely control a multimedia application running on the PC or digital TV would make control more vivid and interesting. However, because control instruments, display devices, and methods vary, it is not easy to give a specified device remote control ability. To achieve this, we must repeat the following design process for every application system in turn: (1) write control command protocols and HTTP wireless protocols into the applications running on the PC; (2) write the interface program and wireless control protocols into the cellular phone, as shown in figure 1-4. This design process is difficult by nature, and many complex procedures must be written even to add or modify a single control function. In addition, if we lack the original source code of the application system, it becomes impossible to give the specified device remote control ability. Such repeated design procedures make developing application systems costly, time-consuming, inflexible, and inefficient.

Figure 1-4 General developing method of handheld device control

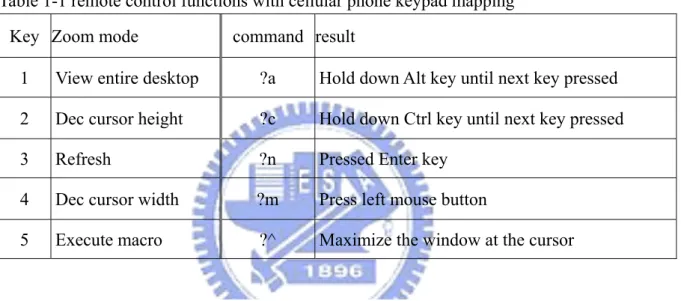

To address these problems, the Rajicon system [31] used a cellular phone to control a remote PC, as shown in figure 1-5. It works on a specific device, so performing complex operations requires defining a set of interactive rules. The Rajicon system uses a macro command to formulate every operating function; therefore, the more operating functions there are, the more macro commands are required, as described in table 1-1.

Figure 1-5 Rajicon System Architecture

Table 1-1 Remote control functions with cellular phone keypad mapping

| Key | Zoom mode | Command | Result |
| --- | --- | --- | --- |
| 1 | View entire desktop | ?a | Hold down Alt key until next key pressed |
| 2 | Dec cursor height | ?c | Hold down Ctrl key until next key pressed |
| 3 | Refresh | ?n | Press Enter key |
| 4 | Dec cursor width | ?m | Press left mouse button |
| 5 | Execute macro | ?^ | Maximize the window at the cursor |

Commands for controlling the application running on the PC are entered by pressing keys on the cellular phone keypad, so operation becomes complex and disorienting, and the control commands are hard to memorize. Moreover, the system is designed for a specific device; whenever control functions need to be created or modified, the design procedure must be repeated.

In 2002, Jeffrey Nichols and Brad A. Myers published the paper “Generating remote control interfaces for complex appliances” [32]. They proposed the personal universal controller (PUC), which creates a graphical or speech control interface by downloading a functional specification of a household appliance, as shown in figure 1-6.

Figure 1-6 PUC System Architecture

The PUC system analyzes the specification document and uses a decision tree algorithm to create a group tree of the specification, as shown in figure 1-7. It then builds an appropriate control interface according to the structure of this group tree. However, several design factors must be taken into account: (1) a functional specification of the specific equipment must be downloaded before an interface can be built, and writing this specification is not an easy task; (2) designing the control interface is laborious, because the interface design algorithm must consider both the users’ requirements and the presentation of the control device objects.

In [36], the authors observe that a traditional remote control typically lets users activate the functionality of only a single device, and they present qualitative and quantitative results from a study of two promising approaches to creating a remote control without this limitation: end-user programming and machine learning. In end-user programming, users manually assign to graphical “screens” the buttons they believe are sufficient to accomplish their tasks. They then work with a single handheld remote control that can display those screens. Machine learning also uses a single handheld remote to display screens, but it uses the recorded history of a user’s actual remote interactions to infer appropriate groups of buttons for the tasks performed. Several problems arise: (1) End-user programming is too complicated for designing remote control of a device with graphical screens. The user must define the components required for operation on a screen that can control many device interfaces concurrently, and all the control procedures require writing a large amount of code. (2) Machine learning (ML) can record user operations automatically and uses an algorithm to produce the operating components, but because devices differ, it may not completely define each function of a device component. (3) Methods that produce a remote control interface automatically must follow the characteristics of the device and the preferences of the user, and they still require a large amount of code to implement the automatic design algorithm.

In [38], a new widget and interaction technique known as a “Frisbee” was described for interacting with areas of a large display that are difficult or impossible to access directly. It consists of a local “telescope” and a remote “target”. The design meets five design principles: (1) minimizing physical travel, (2) supporting multiple concurrent users, (3) minimizing visual disruption while working, (4) maintaining visual persistence of space, and (5) application independence. However, the design requires writing many procedures for the specific large-scale display device. Moreover, because of hardware specification limits and the difficulty of obtaining relevant information, designing the interface is comparatively difficult and complicated.

Figure 1-8 Architecture of proposed handheld device control system

To address the issues mentioned above, we propose an interface generating system with which the designer can easily deploy a remote control interface program on a cellular phone without writing the phone program. Following the concepts of GIB, we develop a bridge interface for a remote signal control system. With it, the designer specifies control objects of a Java application running on the PC, such as control buttons and labels, and a remote control interface containing these objects is generated on the cellular phone automatically. This simplifies the creation of a control interface and makes development and modification of the control system more flexible. Because the interface generator places the control objects and functions directly on the cellular phone, we can control the Java application running on the PC without writing complex programs for the phone, as shown in figure 1-8.
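As a rough illustration of generating a phone-side control table from a description of control objects, consider the following sketch. The "type: name" line format, the rule that only buttons are bound to keypad keys, and the function name `generate_control_table` are hypothetical; the specification format and generator actually used by the system are described in chapter 4.

```python
def generate_control_table(spec_lines, keys="123456789"):
    """Build a keypad-key -> control-object table from a hypothetical spec.

    Each non-empty line is assumed to look like "button: Next" or
    "label: Title"; lines starting with "#" are comments.
    """
    table = {}
    available = iter(keys)
    for line in spec_lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        kind, _, name = line.partition(":")
        if kind.strip().lower() != "button":
            continue  # assumption: labels are shown only, not bound to keys
        try:
            key = next(available)
        except StopIteration:
            raise ValueError("more buttons than available keypad keys") from None
        table[key] = name.strip()
    return table
```

Given the lines "label: Title", "button: Next", and "button: Previous", the sketch binds key 1 to Next and key 2 to Previous, so the designer describes objects and never writes phone code.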

This thesis applies software engineering methodology and demonstrates the feasibility and serviceability of the approach. We develop a GIB system with the proposed method and use it as the bridge between HCI and window applications, through which the interaction between HCI and applications becomes more vivid and friendly without requiring the user to write any program code.

1.2 Motivation and Goals

In general, to modify HCI (Human Computer Interaction) control functions or add new ones in an application controlled by speech or a handheld device, we must obtain the original source code of the application system or redesign the system. Even with the source code, we may need to spend much time analyzing the entire system and then write and recompile the whole application using low-level design methods; this is difficult and inefficient. The objective of this thesis is to develop a visual GIB platform as a bridge between HCI and applications. With this GIB system as the interaction interface, applications that previously had no such control functions can be operated by speech commands or a PDA device through simple definition of square objects, description of parameters, and setting of macro commands, without writing any program code.

1.3 Organization of this dissertation

Chapter 1 has briefly introduced the problems of current approaches to controlling applications with speech or PDA HCI and has proposed the GIB platform to overcome them. The rest of this dissertation is organized as follows. Chapter 2 introduces work related to the proposed GIB system: the touch panel interface, speech recognition engine, script language, compile grammar definition, macro command, and OS’s API. Chapter 3 proposes the architecture for integrating GIB with speech HCI, which has three parts: input module, kernel module, and output module. It describes in detail how to design this bridge interface and how to define and set the control environment of an application so as to provide an interaction interface between HCI and applications without writing any program code. Chapter 4 applies the GIB concept of chapter 3 and proposes GIB-based Application Interface (GAI) generation, which generates a control interface automatically on the PDA from simple object descriptions in order to control applications on the PC Windows. Chapter 5 gives demonstrations that show how to operate applications through speech commands or a PDA without writing any program under the proposed interface generation. Finally, the last chapter draws conclusions and describes future work.

2. Related Work

Developing the generic interface bridge (GIB) system requires several supporting technologies that allow the system to work in a generic, flexible, simple, and effective manner: touch panel interface, speech recognition engine, script language, compiler grammar, macro command, and OS’s API. These technologies are described briefly in the following sections.

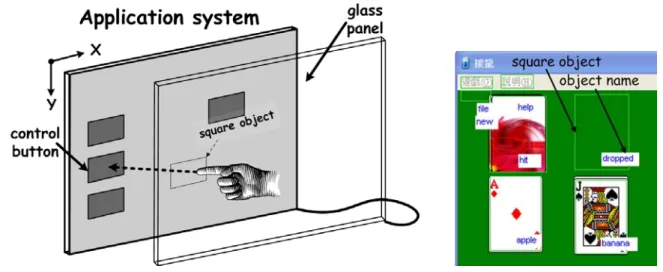

2.1 Touch panel interface

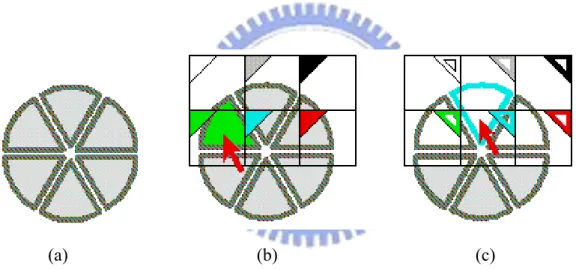

Our visual HCI interface system uses the concept of a touch panel, as shown in figure 2-1. It places a general transparent sheet, like a pane of glass, between the application and the mouse cursor, and the application is executed under this layer. Square objects are drawn and defined over the controlled application on the screen, each with a given name, and mouse cursor actions are directed at these objects. That is, following the concept of the see-through interface [6-8], if a device such as a speech recognizer or PDA can control the square objects drawn and defined at the corresponding positions of the application program on the Windows, it can control the application as well.

Applying concepts of touch panel may yield many widgets in visual interface without requiring new offers of extra screen space and allows the control steps become easier and friendlier to follow. An example in figure 2-2 [6] shows that many simple widgets called click-through buttons were used to change the color of objects below them. The user positions the nearby widget and appoints which object for coloring by clicking through buttons with the cursor over that object, as shown in figure 2-2(b). Furthermore buttons in figure 2-2(c) can change the outline colors of objects.

That means, we can use speech or remote control device to control application through controlling mouse actions including mouse moving, mouse events, click, double click, drag, drop, and keyboard press actions by using touch panel concept


Figure 2-2 Click-through buttons. (a) Six widget objects. (b) Clicking through a green fill-color button. (c) Clicking through a blue outline-color button.

2.2 Speech recognition engine

Pronunciation differs from person to person. Each person must therefore train the speech recognizer before using it as a controller to operate the application, which results in more accurate recognition. In this study, we chose Microsoft Speech Recognizer v5.1 as the recognition engine of our system; the steps for completing speech recognition training are shown in figure 2-3. After a speech command has been input and processed by the speech recognition engine [16-17], if the command is valid, the system recognizes it and passes the recognition result to the system function RecoContext_Recognition( ). The parameters of this function are as follows.

Figure 2-3 Recognition training steps of MS speech recognizer V5.1

Dim WithEvents RecoContext As SpeechLib.SpSharedRecoContext

Public Sub RecoContext_Recognition( _
    ByVal StreamNumber As Integer, _
    ByVal StreamPosition As Object, _
    ByVal RecognitionType As SpeechLib.SpeechRecognitionType, _
    ByVal Result As SpeechLib.ISpeechRecoResult) Handles RecoContext.Recognition

2.3 Script language

For more convenient and efficient control with speech commands, we use a script language [11-15] as the command format. A script language differs from a system programming language in that it supports higher-level programming and more rapid development. Using a script language has two advantages: 1. A script is stored as a plain text file, which users can easily read or edit with any text editor such as “Word” or “Notepad”. 2. It is an excellent tool for developing applications rapidly; a user can edit a simple script file to solve a simple problem with less code and time. Using a script language as the command format therefore makes developing and combining control command strings easier, faster, and more flexible. The speech command input format is shown in figure 2-4: figure 2-4(a) shows a single command, for example “move to apple send,” and figure 2-4(b) shows a compound command, for example “move to file then click by leftclick send”. The script language syntax tree of “dragsquare” is shown in figure 2-5.

Figure 2-4 Speech command input format. (a) Single command input. (b) Compound command input.

Figure 2-5 Script language syntax tree of command “dragsquare”

2.4 Compile grammar definition

Speech SDK 5.1/Microsoft Speech SDK 5.1 Help/grammar compiler/Index/Designing Grammar Rules”. Consequently, we designed the speech recognition rules of our interface system based on the grammar design rules in “Microsoft Speech SDK 5.1 Help,” from the file “test.xml”.

<GRAMMAR>

The GRAMMAR tag is the outermost container for the XML grammar definition.

<DEFINE>

The DEFINE tag is used for declaring a set of string identifiers for numeric values.

<ID>

The ID tag is used for declaring a string identifier for numeric values.

<RULE>

The RULE tag is the core tag for defining which commands are available for recognition.

<RULEREF>

The RULEREF tag is used for importing rules from the same grammar or another grammar. The RULEREF tag is especially useful in reusing component or off-the-shelf rules and grammars.

<L>

The L tag is used for specifying a list of alternative phrases or transitions; exactly one of the items in it should be spoken, not all of them.

<P>

The P tag is used for specifying text to be recognized by the speech recognition engine; everything in it should be spoken.

<O>

The O tag is used for specifying optional text in a command phrase.
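The way these tags nest can be illustrated with a small grammar built programmatically. The following is a minimal sketch in Python (used here only for illustration, not the language of the GIB system). The rule names, the object names, and the attribute names NAME, VAL, ID, TOPLEVEL and LANGID are written as we recall them from the SAPI 5.1 text grammar format and should be treated as assumptions to be checked against the SDK help.

```python
import xml.etree.ElementTree as ET


def build_grammar(object_names):
    """Build a small command grammar from the tags described above."""
    # LANGID "409" (US English) is an assumed value.
    grammar = ET.Element("GRAMMAR", LANGID="409")

    # <DEFINE>/<ID>: a string identifier for a numeric rule id.
    define = ET.SubElement(grammar, "DEFINE")
    ET.SubElement(define, "ID", NAME="RID_MoveTo", VAL="1")

    # Reusable rule: <L> lists alternatives, one of which must be spoken.
    target = ET.SubElement(grammar, "RULE", NAME="target")
    choices = ET.SubElement(target, "L")
    for name in object_names:
        ET.SubElement(choices, "P").text = name

    # Top-level rule for the phrase "move to <target> [send]".
    move = ET.SubElement(grammar, "RULE", NAME="moveTo",
                         ID="RID_MoveTo", TOPLEVEL="ACTIVE")
    ET.SubElement(move, "P").text = "move to"        # required text
    ET.SubElement(move, "RULEREF", NAME="target")    # imported rule
    ET.SubElement(move, "O").text = "send"           # optional text
    return grammar


if __name__ == "__main__":
    print(ET.tostring(build_grammar(["file", "apple"]), encoding="unicode"))
```

A grammar produced this way would still have to pass the grammar compiler before the recognizer could load it.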

To check the composition of a grammar, we can use the function “//Microsoft Speech SDK 5.1/tools/grammar compiler/Build” to verify the correctness of the rules.

If compilation succeeds, the compiler reports that the compile was successful; otherwise, it reports that the compile failed and describes the reasons for the error. Microsoft Speech SDK and the Microsoft Grammar Compiler are shown in figures 2-6 and 2-7.

Figure 2-7 Microsoft Grammar Compilers

2.5 Macro command

In the proposed system, speech is one of the control tools. If a speech command is too long, recognition accuracy suffers because of the speaker’s pronunciation or environmental noise picked up between commands. To make interaction with the GIB (Generic Interface Bridge) system easy, we adopt macro commands, which simplify and combine long or complex commands into a single context-free command. For users, this method makes commands easier and more flexible to input, and it increases recognition accuracy and efficiency. The format of macro commands and their relative complex or compound commands is as follows.

| Macro command | Relative commands |
| --- | --- |
| Clickfile | move to file then click by leftclick ; move mouse cursor to the defined position named “file,” and then left click the mouse |
| Start menu | *g02-@cm |

The connectors “then” and “-” are used as separators between commands.
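The macro mechanism amounts to a table lookup followed by a split on the separators. The following minimal Python sketch (for illustration only) uses a hypothetical in-memory table holding the two example macros; the function names are assumptions, and the real system stores its macros in files rather than in a dictionary.

```python
import re

# Hypothetical macro table holding the two examples from the text.
MACROS = {
    "clickfile": "move to file then click by leftclick",
    "start menu": "*g02-@cm",
}


def expand_macro(spoken):
    """Return the command string registered for a macro, or None if unknown."""
    return MACROS.get(spoken.strip().lower())


def split_commands(command_string):
    """Split a command string on the separators "then" and "-"."""
    parts = re.split(r"\s+then\s+|-", command_string)
    return [part.strip() for part in parts if part.strip()]


if __name__ == "__main__":
    for macro in ("Clickfile", "Start menu"):
        print(macro, "->", split_commands(expand_macro(macro)))
```

Note that this naive split would also cut any operand that itself contains a hyphen, so a real implementation would need a stricter rule for such operands.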

2.6 OS’s API

Upon obtaining abstract commands from HCI, GIB performs lexical translation and syntactic analysis of each command, validates the resulting tokens, and then manipulates the actions of the mouse and keyboard by calling the OS’s API. In the following section, we describe the parameters of the mouse API and the keyboard API.

Mouse API:

<DllImport("user32.dll")> _
Private Shared Sub mouse_event( _
    ByVal dwFlags As mouse_event_flags, _
    ByVal dx As Integer, _
    ByVal dy As Integer, _
    ByVal dwData As Integer, _
    ByVal dwExtraInfo As Integer)
End Sub

dwFlags:

MOUSEEVENTF_ABSOLUTE: Specifies that the dx and dy parameters contain normalized absolute coordinates. If not set, those parameters contain relative data: the change in position since the last reported position.

MOUSEEVENTF_MOVE: Specifies that movement occurred.

MOUSEEVENTF_LEFTDOWN: Specifies that the left button is down.

MOUSEEVENTF_LEFTUP: Specifies that the left button is up.

MOUSEEVENTF_RIGHTDOWN: Specifies that the right button is down.

MOUSEEVENTF_RIGHTUP: Specifies that the right button is up.

MOUSEEVENTF_MIDDLEDOWN: Specifies that the middle button is down.

MOUSEEVENTF_MIDDLEUP: Specifies that the middle button is up.

dx: It specifies the absolute position of the mouse along the x-axis or its amount of motion since the last mouse event was generated, depending on the setting of MOUSEEVENTF_ABSOLUTE.

dy: It specifies the absolute position of the mouse along the y-axis or its amount of motion since the last mouse event was generated, depending on the setting of MOUSEEVENTF_ABSOLUTE.

dwData: If dwFlags contains MOUSEEVENTF_WHEEL, then dwData specifies the amount of wheel movement. A positive value indicates that the wheel was rotated forward, away from the user; a negative value indicates that the wheel was rotated backward, towards the user.

dwExtraInfo: It specifies an additional value associated with the mouse event.
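How these parameters combine for a “move to a position, then left click” action can be sketched as follows. This minimal Python sketch only computes the argument triples and does not call the API, so it runs on any platform. The flag values are those documented for the Win32 mouse_event function; the helper names and the pixel-to-normalized mapping (pixel 0 maps to 0 and the last pixel to 65535) are assumptions made for illustration.

```python
# Flag values as documented for the Win32 mouse_event function.
MOUSEEVENTF_MOVE = 0x0001
MOUSEEVENTF_LEFTDOWN = 0x0002
MOUSEEVENTF_LEFTUP = 0x0004
MOUSEEVENTF_ABSOLUTE = 0x8000


def to_normalized(pixel, extent):
    """Map a pixel index in [0, extent - 1] onto the range 0..65535."""
    return pixel * 65535 // (extent - 1)


def left_click_at(x, y, screen_w, screen_h):
    """Return (dwFlags, dx, dy) triples for 'move to (x, y), then left click'."""
    nx = to_normalized(x, screen_w)
    ny = to_normalized(y, screen_h)
    return [
        (MOUSEEVENTF_MOVE | MOUSEEVENTF_ABSOLUTE, nx, ny),  # absolute move
        (MOUSEEVENTF_LEFTDOWN, 0, 0),                       # press left button
        (MOUSEEVENTF_LEFTUP, 0, 0),                         # release left button
    ]


if __name__ == "__main__":
    for call in left_click_at(512, 384, 1024, 768):
        print(call)
```

Each triple would be passed to mouse_event with dwData and dwExtraInfo set to zero.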

Keyboard API:

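<DllImport("user32.dll")> _
Private Shared Sub keybd_event( _
    ByVal bVk As Byte, _
    ByVal bScan As Byte, _
    ByVal dwFlags As Integer, _
    ByVal dwExtraInfo As Integer)
    ' dwFlags is a 32-bit DWORD, so it is declared As Integer rather than As Long.
End Sub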

bVk: It specifies a virtual-key code defined in MSDN. The code value is from 1 to 254.

bScan: This parameter is not used.

dwFlags: It specifies various aspects of function operation.

3. Integration GIB with HCI

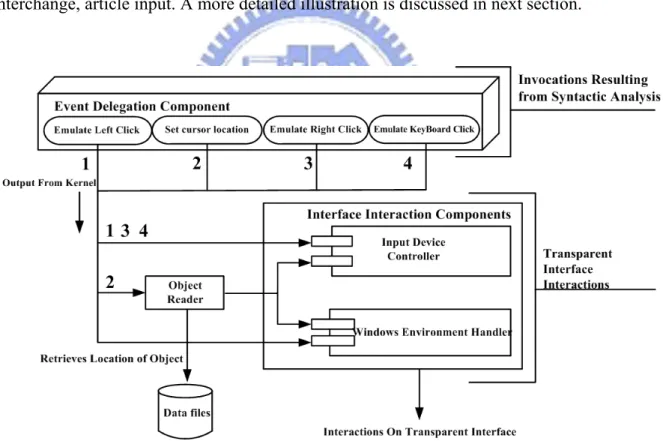

The architecture of the proposed GIB system for bridging HCI and applications is shown in figure 3-1. The GIB system has three parts: the input module, the kernel module, and the output module. The input module receives the control signal from the user. When the control signal is valid, the system translates it into the relative abstract command string of the script language, taken from the command database. The kernel module breaks the abstract command string down into token sets and performs syntactic analysis on each token set. Finally, the output module calls the OS’s API to simulate mouse and keyboard control according to the actual command request.

Figure 3-1 System architecture of GIB

To introduce the advantages of the GIB system conveniently, we classify GIB system architectures into two approaches: 1. Run Application in GIB (RAGIB) Windows, and 2. Separate Application and GIB (SAGIB) Windows, as shown in figure 3-2 (a) and (b).

(a) Run application in GIB Windows

(b) Separate application and GIB Windows

Figure 3-2 Run application under GIB system

In the RAGIB system, the application must run under the GIB system. This implies that, every time we want to control an application under RAGIB, we must select the application and execute it under the proposed GIB system. For more convenient control, we propose the SAGIB system. In SAGIB, the GIB system runs in the background of Windows and does not occupy extra working space on the screen, which makes controlling applications more convenient. That means that, under the SAGIB system, we can control any application executing on Windows by controlling the mouse and keyboard through control signals from the user.

3.1 Run Application in GIB (RAGIB) Windows

3.1.1 Input module

The main functions of the input module are the speech command recognizer, context setting, and command composing. We use the speech recognizer to recognize input speech commands, defined control objects, and environment settings such as object definition, grid setting, macro command setting, and stage registration.

3.1.1.1 Speech command

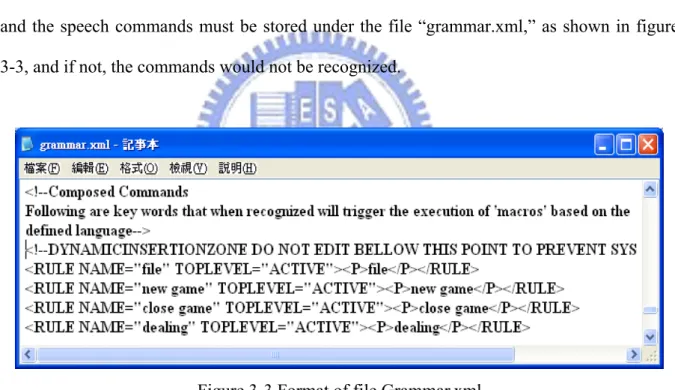

We use Microsoft’s Speech SDK V5.1 as the speech input recognizer of the GIB system. The speech commands must be stored in the file “grammar.xml,” as shown in figure 3-3; otherwise, the commands will not be recognized.

Figure 3-3 Format of file Grammar.xml

3.1.1.2 Composed command

The relative command strings of macro commands are stored in the file “composed command”; the file format is shown in figure 3-4. If the spoken command is valid, the system gets the relative command string from this database.

Figure 3-4 Format of composed commands

3.1.1.3 Macro command registration

To make the system more convenient to use, we developed a GUI through which the user registers macro commands; the command strings are stored automatically in the files “grammar.xml” and “composed command”. The registration interface for macro commands is shown in figure 3-5.

Figure 3-5 Macro command registration

For example, if the user speaks the macro command “open file,” it is compared with the command set in the file “grammar.xml”. If the command is valid, the system gets the relative command string “move to close then click by left click” from the file “composed command” and translates it, as in figure 3-6.

Figure 3-6 Translation flow of Speech command

3.1.2 Kernel module

The main functions of the kernel module are separating the command string into token sets and analyzing their syntax. If a command is valid after analysis, it is executed by calling the OS’s API. The processing flow is shown in figure 3-7.

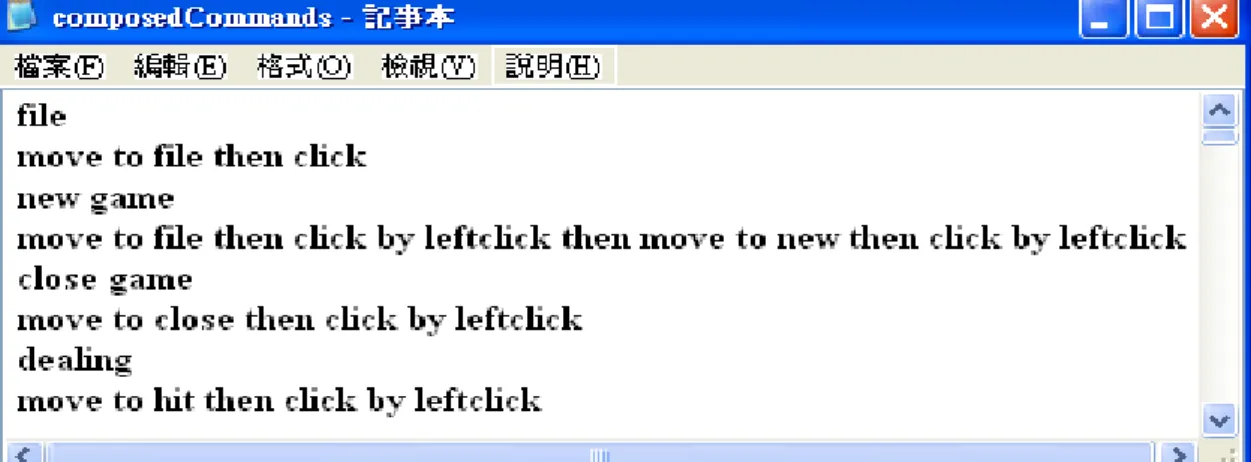

3.1.2.1 Lexical translator

The lexical translator separates the command string into token sets at the separator command “then,” as shown in figure 3-8. Each token set represents a stand-alone command that is sent to the syntax analyzer.

Figure 3-8 Lexical translating
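The splitting step performed by the lexical translator can be sketched in a few lines. This is a minimal Python illustration, not the system’s own code; the function name is an assumption.

```python
def lexical_translate(command_string, separator="then"):
    """Split a command string into token sets, one per stand-alone command."""
    token_sets = []
    current = []
    for word in command_string.split():
        if word.lower() == separator:
            # The separator closes the current token set.
            if current:
                token_sets.append(current)
            current = []
        else:
            current.append(word)
    if current:
        token_sets.append(current)
    return token_sets


if __name__ == "__main__":
    print(lexical_translate("move to close then click by left click"))
```

Each inner list is one token set, handed to the syntax analyzer in order.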

3.1.2.2 Syntax analyzer

The syntax analyzer receives one token set at a time from the lexical translator (section 3.1.2.1) and checks its syntax against the grammatical rules defined in the file “grammar.xml,” as in figure 3-9.

Figure 3-9 Grammatical rules in file “grammar.xml”

3.1.2.3 Command analyzer flow

For example, the command string “move to close then click by left click” obtained from section 3.1.1 is broken by the lexical translator into the token sets “move to close” and “click by left click”. After analysis, these are parsed into target function events, and the corresponding OS’s API is called, as shown in figure 3-10:

1. Move the cursor to the square object named “close,”

2. Perform a left click of the mouse button.
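The mapping from a token set to a target event can be sketched as follows. This minimal Python illustration covers only the two command forms of the example; the table of squares, its coordinates, and the action names are hypothetical.

```python
# Hypothetical table of defined square objects and their screen locations.
SQUARES = {"close": (1000, 10), "file": (20, 30)}
CLICK_TYPES = {"leftclick", "rightclick", "doubleclick"}


def analyze(tokens, squares=SQUARES):
    """Return an (action, argument) pair for one token set, or None if invalid."""
    # Form 1: "move to <square>"
    if len(tokens) == 3 and tokens[:2] == ["move", "to"] and tokens[2] in squares:
        return ("move_cursor", squares[tokens[2]])
    # Form 2: "click by <clicktype>"; accept "left click" as well as "leftclick".
    if len(tokens) >= 3 and tokens[:2] == ["click", "by"]:
        click_type = "".join(tokens[2:])
        if click_type in CLICK_TYPES:
            return ("click", click_type)
    return None


if __name__ == "__main__":
    for token_set in (["move", "to", "close"], ["click", "by", "left", "click"]):
        print(analyze(token_set))
```

The output module would then turn each pair into the corresponding mouse API calls.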

3.1.3 Output module

The main function of this module is to execute the result of the syntax analysis by calling the OS’s API. To make the related mouse and keyboard actions easier to perform, we have written functions that emulate them, as shown in figure 3-11. The mouse control functions are: “mouse-left-click,” “mouse-right-click,” “mouse-left-double-click,” “mouse drag,” “mouse drop,” “mouse spring,” “mouse jumping” to a pre-defined position, “mouse move” with different speeds, “move stop,” and “mouse moving speed”. The keyboard control functions are: single key press (0-9, a-z, and @, #, etc.), compound key (Alt-, Shift-, and Ctrl-), Chinese/English input interchange, and article input. A more detailed illustration is given in the next section.

Figure 3-11 Event delegation

The application program and its information, such as defined squares, grids, locations, macro commands, and parameters, are stored in a hierarchical directory structure, as in figure 3-12.

Figure 3-12 File hierarchical organization

3.1.4 Identifiers

3.1.4.1 Command class

The control commands are classified into sections according to their operation functions: “Selection commands,” “Setting commands,” “Mouse action commands,” “Keyboard action commands,” “File action commands” and “System action commands,” which are described in the following sections.

3.1.4.2 Command parameter

Square:

The “square” object is a graphical rectangle drawn by the user, from its upper-left corner to its lower-right corner, at a fixed desired location. The variable of a square is associated with a location (integer, integer).

Coordinate:

The “coordinate” object is a graphical rectangular grid, which is auto-generated by the system and drawn by the user from the upper-left corner to the lower-right corner at a fixed desired location. The variable of each grid cell in the “coordinate” is associated with a location (integer, integer).

Stage:

The “stage” is a file that stores a group of squares.

Grid:

The “grid” is a file that stores a group of grid squares.

Number:

The “number” operand is an integer and used with commands “coordinate” or “loop.”

Text:

The “text” operand is a text string. It is used with command “sendkey” for emulating typing on the keyboard.

3.1.4.3 Connector

Then:

The connector “then” joins statement x and statement y and delimits one statement from the next.

3.1.4.4 Terminator

Times:

The terminator “times” is used as the end of loop command.

Separator Comma:

3.1.4.5 Operators

To:

The “to” operator is used in conjunction with action or assignment commands. It is used only with the commands “move,” “dragsquare” and “dragcoordinate”; its right operand is “square x” or “coordinate x,” and its left operand is “square x” or “coordinate x”.

By:

The “by” operator is used in conjunction with assignment commands. It is used only with the commands “move,” “drag,” “dragsquare,” “dragcoordinate,” “click,” “clicksquare” and “clickcoordinate”; its right operand is “distance” or “clicktype,” and its left operand is “square x,” “coordinate x,” “direction” or “pattern”.

Loop:

The “loop” operator is used for repeatedly executing the previously spoken commands “number” times. Its left operand is always a command, and its right operand is always a “number”.

3.1.4.6 Constant parameters

clickType:

The types of “clicktype” include: leftclick, rightclick and doubleclick. This “clicktype” type is used for triggering a mouse click.

Distance:

The types of “distance” include: very short, short, normal, long and very long. This distance type is used for restricting the moving “distance” of mouse cursor.

Direction:

southeast and southwest. This “direction” type is used for setting the moving direction of mouse cursor.

Pattern:

The types of geometric moving “pattern” include: triangle, square, pentagon, hexagon, octagon, curves, zigzag, and spiral. This “pattern” type is used for setting the moving manner of mouse cursor.

Speed:

The types of “speed” include: very slow, slow, normal, fast and very fast. This “speed” type is used for restricting the moving speed of mouse cursor.

Boolean:

The types of “boolean” include: true and false. This “boolean” type is used for setting the command “setdrop”.

3.1.4.7 Selection commands

selectApplication:

The “selectApplication” command is used for selecting an application program into the GIB system to execute.

selectStage:

The “selectStage” command is used for selecting a stage file and loading all its defined squares into the executing application.

selectGrid:

The “selectGrid” command is used for selecting a grid file and loading all its defined grids into the running application.

3.1.4.8 Setting commands

setDragSpeed:

The “setDragSpeed” command is used for setting the moving speed of the mouse cursor according to the parameter “speed”.

setDistance:

The “setDistance” command is used for setting the mouse cursor moving distance according to the parameter “distance”.

setDrop:

The “setDrop” command is used for disabling the drop of drag command according to the parameter “boolean”.

showGrid:

The “showGrid” command is used to show or hide defined grids according to the parameter “boolean”.

3.1.4.9 Mouse action commands

Move:

The “Move” command is used for moving the mouse cursor to the specified location, according to the parameters “direction” and “distance”. If “distance” is omitted, the value set via the command “setDistance” is used.

Drag:

The “Drag” command is used for dragging the mouse cursor to the specified location, according to the parameters “direction” and “distance”.

dragSquare:

The “dragSquare” command is used for dragging the specified square x to the specified location square y or coordinate x, according to the parameters “direction,” “distance” and “pattern”.

dragCoordinate:

The “dragCoordinate” command is used for dragging the specified coordinate x moving to the specified location coordinate y or square x, according to the parameters “direction,” “distance” and “pattern”.

Click:

The “Click” command is used for triggering a mouse click under the current mouse position, according to the parameter “clicktype”.

clickSquare:

The “clickSquare” command is used for moving cursor to the specified square location first and then triggering a mouse click under the current position according to the parameter “clicktype”.

clickCoordinate:

The “clickCoordinate” command is used for first moving the cursor to the specified coordinate location and then triggering a mouse click at that position according to the parameter “clicktype”.

3.1.4.10 Keyboard action commands

sendKey:

The “sendKey” command is used for emulating keyboard stroke, and is limited to the combination of number 0 to 9 and character “a” to “z”.

clearText:

The “clearText” command is used for emulating a single keyboard <Backspace> stroke.

3.1.4.11 System action commands

Send:

The “Send” command is a stand-alone command used for sending all the spoken commands to the lexical translator, which analyzes and processes them.

clearConsole:

The “clearConsole” command is a stand-alone command used for clearing all the spoken commands in the input command buffer.

undoPhrase:

The “undoPhrase” command is a stand-alone command used for deleting the preceding spoken command stored in the input command buffer.

storeCursor:

The “storeCursor” command is a stand-alone command used for storing the current cursor position in the position buffer.

recoverCursor:

The “recoverCursor” command is a stand-alone command used for moving the cursor to the position previously stored in the position buffer.

captureIt:

The “captureIt” command is a stand-alone command used for capturing the front-most application window into the GIB interface system.

3.1.5 Process description

The process description of the GIB system is as follows:

Step1. Waiting for control commands input
Step2. Recognizing control commands
Step3. Analyzing whether the inputted commands are valid and defined in the macro composer
    Select Case (inputted command)
        Case (the command is macro)
            Translate inputted commands according to command table
            Store translated command to command buffer, go to Step1
        Case “system action command”
            Examples:
            “Send”; store speech commands to command buffer, then go to Step4
        Case (the command is invalid)
            Discard inputted speech command, and then go to Step1.
    End Select
Step4. Lexical translation:
    Parsing command string to token set according to separator “then”
    Store translated token sets to command string array
Step5. Syntactic analysis:
    pointer = 0
    Do while (commands string array [pointer] <> empty)
        Select case (command string)
            Case “mouse action command”
                Examples:
                “Move to file”; move mouse cursor to defined square object “file”
            Case “keyboard control”
                Examples:
                “SendKey hello”; press keys “hello”
            Case “selection commands”
                Examples:
                “SelectApplication mspaint”; selecting application “mspaint” into system to run
            Case “setting commands”
                Examples:
                “SetDragSpeed slow”; set mouse cursor drag moving speed slow
            Case else
                Commands are un-defined; discard it and clear command buffer
        End select
        pointer = pointer + 1
    End do
Step6. Repeat Step1, until system end.

The corresponding operation flow chart of GIB system is described in figure 3-13.
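The buffering behaviour of Step1 to Step3, in which phrases accumulate until “send” is spoken, can be sketched as follows. This is a minimal Python illustration under stated assumptions: the class and method names are hypothetical, phrases are joined with single spaces, and every phrase that is not a macro is assumed to have already passed recognition.

```python
class CommandBuffer:
    """Collects recognized phrases until the system command "send" arrives."""

    def __init__(self, macros):
        self.macros = macros    # macro name -> relative command string
        self.phrases = []       # input command buffer

    def feed(self, phrase):
        """Process one recognized phrase; return a command string on "send"."""
        key = phrase.strip().lower()
        if key == "send":
            # Hand the buffered commands over to the lexical translator.
            command, self.phrases = " ".join(self.phrases), []
            return command
        if key == "clearconsole":
            self.phrases = []
        elif key == "undophrase":
            if self.phrases:
                self.phrases.pop()
        else:
            # Translate macros; keep other phrases unchanged.
            self.phrases.append(self.macros.get(key, phrase.strip()))
        return None


if __name__ == "__main__":
    buffer = CommandBuffer({"clickfile": "move to file then click by leftclick"})
    for spoken in ("clickfile", "send"):
        result = buffer.feed(spoken)
        if result is not None:
            print(result)
```

The string returned on “send” is what Step4 splits into token sets.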

3.1.6 Limitation

With the “Run Application in GIB Windows” method, an application that has no built-in speech control can easily be controlled via speech commands under the RAGIB system. Even so, this system has some limitations:

1. Application programs must be executed under the proposed RAGIB environment.

2. The input commands are analyzed only after the command “send” has been spoken.

3. The user is unable to move the mouse to an undefined position with speech commands.

4. The label names of defined square objects affect the action control of the mouse cursor.

3.2 Separate Application and GIB (SAGIB) Windows

To solve the problems mentioned in section 3.1, we modify the GIB system and separate the application from the GIB Windows. GIB then runs in the background of Windows, and applications need not be executed under the SAGIB system. A speech command is sent to the SAGIB system as soon as it has been recognized and validated automatically, without having to wait for the speech command “send”. This increases recognition accuracy, because environmental noise can no longer intrude in the interval between the input commands and the command “send,” and it also makes system control more user-friendly.

Figure 3-14 Rule composition flow

To accelerate mouse cursor movement, we virtually divide the Windows screen into a 3×3 grid of big cells and a 10×8 grid of small cells. The center coordinate of each small cell is pre-defined with a name (x, y), x: 1~10 and y: 1~8, such as (2, 4), and the big cells are named “left-up,” “center-left,” “left-down,” “center-up,” “center-axis,” “center-down,” “right-up,” “center-right,” and “right-down.” For more precise mouse cursor movement, we define the whole screen as the working space with wrapped-around pixel reference. The flow of rule composition is shown in figure 3-14, and the mouse control environment is shown in figure 3-15.
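The jump targets implied by these grids can be computed directly from the screen size. The following minimal Python sketch assumes that the origin is the upper-left corner of the screen, that x counts columns from the left and y counts rows from the top, and that the nine names are laid out as their wording suggests; the function names are hypothetical.

```python
# Assumed layout of the nine big cells as (column, row), counted from 1.
BIG_GRID = {
    "left-up": (1, 1), "center-up": (2, 1), "right-up": (3, 1),
    "center-left": (1, 2), "center-axis": (2, 2), "center-right": (3, 2),
    "left-down": (1, 3), "center-down": (2, 3), "right-down": (3, 3),
}


def cell_center(col, row, screen_w, screen_h, cols, rows):
    """Return the pixel at the center of cell (col, row) in a cols x rows grid."""
    if not (1 <= col <= cols and 1 <= row <= rows):
        raise ValueError("grid index out of range")
    x = (2 * col - 1) * screen_w // (2 * cols)
    y = (2 * row - 1) * screen_h // (2 * rows)
    return (x, y)


def small_grid_center(col, row, screen_w, screen_h):
    """Center of small cell (x, y), with x: 1~10 and y: 1~8."""
    return cell_center(col, row, screen_w, screen_h, cols=10, rows=8)


def big_grid_center(name, screen_w, screen_h):
    """Center of a named big cell in the 3 x 3 grid."""
    col, row = BIG_GRID[name]
    return cell_center(col, row, screen_w, screen_h, cols=3, rows=3)


if __name__ == "__main__":
    print(small_grid_center(2, 4, 1000, 800))
    print(big_grid_center("center-axis", 900, 600))
```

On a 1000×800 screen, for example, each small cell is 100×100 pixels, so cell (2, 4) is centered at pixel (150, 350).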

3.2.1 Identifiers

3.2.1.1 Command class

We have classified the speech commands into sections: “mouse position control,” “mouse action control,” “keyboard control” and “file execution control,” as shown in the following.

Command input format:

<command1> - <command2> -..<commandn>

“-”: The connector “-” is used as a separator with statement x and statement y in composed

3.2.1.2 Mouse position control

Mouse position control includes:

1. Store position of current mouse cursor as an object,

2. Set current mouse cursor to a position stored before

*sn ; s: store current mouse cursor position

*gn ; g: get a mouse cursor position stored before and set the current mouse cursor position to it

; n: 01~99, number of the stored (or defined) position in the program
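The store and get commands behave like a small table of numbered slots. The following minimal Python sketch illustrates this; the class name is hypothetical, and the cursor attribute stands in for the real mouse cursor position, which the system would read and set through the OS’s API.

```python
import re


class PositionStore:
    """Numbered slots (01~99) for mouse cursor positions."""

    def __init__(self):
        self.slots = {}
        self.cursor = (0, 0)    # stand-in for the real cursor position

    def execute(self, command):
        """Run "*sNN" or "*gNN"; return True if the command was carried out."""
        match = re.fullmatch(r"\*([sg])(\d{2})", command)
        if not match or match.group(2) == "00":
            return False
        operation, slot = match.group(1), int(match.group(2))
        if operation == "s":
            self.slots[slot] = self.cursor      # store current position
            return True
        if slot not in self.slots:
            return False                        # nothing stored in this slot
        self.cursor = self.slots[slot]          # jump to the stored position
        return True


if __name__ == "__main__":
    store = PositionStore()
    store.cursor = (120, 45)
    store.execute("*s02")
    store.cursor = (0, 0)
    store.execute("*g02")
    print(store.cursor)
```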

3.2.1.3 Mouse action control

Mouse action control includes:

1. Mouse clicking control: control mouse button actions via the commands “click mouse,” “right click,” “double click,” “drag” and “release,”

2. Mouse moving control: make the mouse cursor move continuously at different speeds, or stop, via one command,

3. Mouse spring control: make the mouse cursor spring once in the direction “up,” “down,” “left,” or “right,” over a distance set by system default or by the user,

4. Mouse jumping control: make the mouse cursor jump to default locations pre-defined by the system, such as “right up,” “center down,” or (2, 3).

Mouse clicking control:

Example:

@cm ; click mouse left button once

@dc ; double click mouse left button

Mouse moving control:

@ms ; control mouse cursor moving stop

@mu ; mouse cursor moving up

Mouse spring control:

Example:

@su ; mouse spring up

@v01 ; set Vertical spring distance y1, jumpstepx=|y2-y1|

@v02 ; set Vertical spring distance y2, jumpstepx=|y2-y1|

Mouse jumping control:

Example:

@ru ; mouse jumping to the pre-defined location “right up”

move to 2,5 send ; mouse jumping to the pre-defined object coordinate “2, 5”

3.2.1.4 Keyboard control

Keyboard control includes the following:

1. Single key press control: control single keyboard pressed actions

2. Compound key press control: control compound keyboard pressed action

3. Special key press control: control special command keyboard pressed action

4. Sentence writing control: sentence writing with English or Chinese code

Single key press control:

~<char> ; manipulating single key pressed, <char>: a~z, 0~9

; Ex. “~f” means “f” key pressed, etc.

Compound key press control:

Example:

#a<char> ; manipulating compound keys <Alt>-<char> pressed, <char>: a~z, 0~9

Special key press control: control special command keyboard pressed

#f<num> ; manipulating function key pressed, <num>: 1~12

Sentence writing control: control sentence writing with English or Chinese code

#il ; compound key “Ctrl Space” pressed, “change language”

#ii ; compound key “Ctrl Shift” pressed, “change input”

|<En char string>]<CH char string>:

#ie ; set parameter emode=1, “english code”

#ic ; set parameter emode=0, “chinese code”

3.2.1.5 File execution control

File execution control: executing a file by setting the application file path in a macro.

![file path] ; execute the file stored in the system at path=[file path]

; Ex. “!c:\program files\microsoft office\office11\excel.exe”
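Because every SAGIB command starts with a distinguishing character, the command class of a token can be read from its prefix. The following minimal Python sketch shows such a classifier. The function name and the table are hypothetical, and treating the “#i” commands as sentence writing control is our reading of the examples above.

```python
# Prefix table; "#i" must be tested before the more general "#".
PREFIX_CLASSES = [
    ("*", "mouse position control"),
    ("@", "mouse action control"),
    ("~", "keyboard control"),
    ("#i", "sentence writing control"),
    ("#", "keyboard control"),
    ("|", "sentence writing control"),
    ("!", "file execution control"),
]


def classify(token):
    """Return the command class of one SAGIB token, or None if undefined."""
    for prefix, command_class in PREFIX_CLASSES:
        if token.startswith(prefix):
            return command_class
    return None


if __name__ == "__main__":
    for token in ("*g02", "@cm", "~f", "#aa", "#ie", "hello"):
        print(token, "->", classify(token))
```

A token that matches no prefix corresponds to the undefined-command case, in which the command is discarded.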

3.2.2 Process description

The process description of the SAGIB system is as follows:

Step1. Waiting for speech commands input
Step2. Recognizing control commands
Step3. Analyzing whether the inputted commands are valid and defined in the macro composer
    If (inputted command is invalid) then
        Discard inputted command and clear commands buffer, then go to Step 1.
    Else
        Translate inputted commands according to command table
        Store translated command to command buffer
    End If
Step4. Lexical translation:
    Parsing command string to token set according to separator “-”
    Store translated token sets to command string array
Step5. Syntactic analysis:
    pointer = 0
    Do while (commands string [pointer] <> empty)
        Select case (command string)
            Case “mouse position control”
                Examples:
                “*sn”; store current cursor position to database record n;
                “*gn”; set mouse current cursor to the position n stored before.
            Case “mouse action control”
                Examples:
                “@dc”; double click mouse left button,
                “@mu”; mouse cursor moving up continuously
            Case “keyboard control”
                Examples:
                “~niceday”; press keyboards “niceday” continuously.
                “#aa”; press Alt a simultaneously
            Case “sentence writing control”, commands format is as “|***]###”
                If (variable enmode=0) then
                    write out characters “***” with Chinese mode;
                Else if (enmode=1) then
                    write out characters “###” with English mode
                End if
            Case “file execution control”
            Case else
                Commands are un-defined; discard it and clear command buffer
        End select
        pointer = pointer + 1
    End do
Step6. Repeat Step1, until system end.

The corresponding operation flow chart of modified GIB system is described in figure 3-16.

4. GIB-based Application Interface (GAI) Generation

4.1 interface

In the traditional development of remote control functions for a cellular phone, the designer first had to write a complex MIDlet program for the cellular phone, and then write the JAVA AP statements that control the interaction between the PC and the cellular phone. The whole application had to be redesigned and recompiled whenever functions were added or deleted. This design process was hard, time-consuming, and highly inefficient, as shown in figure 4-1.

Figure 4-1 Framework of traditional cellular phone interface system

Our proposed solution to this problem is to construct an interface generating system with which the designer can easily develop a remote control interface program for the cellular phone without writing any cellular phone program code. Using the cellular phone as a remote controller, users can then interact directly and easily with the JAVA application running on the PC. The framework of the remote control interface system is shown in figure 4-2.