2006 IEEEInternational Conference on Systems, Man,and Cybernetics

October 8-11, 2006, Taipei, Taiwan

Text

Extraction

from Complex

Document Images

Using

the

Multi-plane

Segmentation Technique

Yen-Lin Chen, Student Member, IEEE, and

Bing-Fei

Wu*, Senior Member, IEEEAbstract-This study presents a new method for extracting characters from various real-life complex document images. The

proposed method applies a multi-plane segmentation technique to separate homogeneous objects including text blocks, non-text graphical objects, andbackground textures into individual object planes. It consists of two stages - automatic localized multilevel thresholding, and multi-plane region matching and assembling. Then a text extraction process can beperformed on the resultant planes todetect and extract characters with differentcharacteristics

intherespective planes. Theproposedmethod processes document imagesregionallyandadaptively accordingtotheirrespectivelocal features. This allows preservation of detailed characteristics from

extracted characters, especially small characters with thin strokes,

aswell as gradationalilluminations of characters. This alsopermits background objects with uneven, gradational, and sharp vanations incontrast, illumination, andtexture tobehandledeasilyandwell. Experimental results on real-life complex document images

demonstrate that the proposed method is effective in extracting characters with various illuminations, sizes, and font styles from varioustypesofcomplexdocument

images.'

I. INTRODUCTION

Textual information extraction from document images provides many useful applications in documentanalysis and understanding, such as optical character

recognition,

document retrieval, and compression. To-date, many effective techniques have beendeveloped

forextracting

characters from monochromatic document

images [1].

In recentyears,advances in multimediapublishing

andprinting

technology have led to an increasing number of real-life documents in which

stylistic

textstrings

areprinted

with decorated objects and colorful, variedbackground

components. However, these approaches do not work well for extracting characters from real-life complex document images. Comparedtomonochromatic documentimages, text extraction incomplex document imagesbrings

with it many difficulties associated with the complexity ofbackground

images, variety and shading of character illuminations, superimposing characters with illustrations and

pictures,

aswellasother decoratedbackgroundcomponents.

Since most characters show sharp and distinctive edge features, methods based on edge information

[2]-[4]

have been developed. Such methods utilize an edge detection operatorto extract theedgefeatures of characterobjects, and then use these features to extract characters from document images. Such edge-based methods are capable of extracting characters in different homogeneous illuminations from graphic backgrounds. However, when the characters are adjoined or touched with graphical objects, texture patterns,This workis supported by the National Science Council under GrantNo. NSC94-2213-E-009-066.

*Theauthorsarewith Department ofElectrical and Control Engineering, National Chiao TungUniversity,1001Ta-HsuehRoad, Hsinchu 30050,

Taiwan(e-mail: bWL occnCt1u.edut w)

or backgrounds with sharply varying contours, edge-feature vectors of non-text objects with similar characteristics may also beidentified as text, and thus the characters in extracted textregions are blurred by those non-text objects.

In recent years, several color segmentation-based methods for text extraction from color document images have been proposed [5]-[7]. Thesemethods utilizecolor clustering or quantization approaches for determining the prototype colors of documents so as to facilitate the detection of character objects in these separated color planes. However, most of these methods have difficulties in extracting characterswhich are embedded in complex backgrounds or that touch other graphical objects. This is because the prototype colors are determined in a global view, so that appropriate prototype colors cannot be easily selected for distinguishing characters from those touched graphical objects and complex backgrounds without sufficient contrast. In this study, we propose an effective method for extracting characters from these complex document images, and resolving the above issues associated with the complexity of their backgrounds. The document image is processed by the proposed multi-plane segmentation technique to decompose it into separate object planes. The proposedmulti-plane segmentation technique comprises two stages: automatic localizedhistogram multilevel thresholding, andmulti-planeregion matchingandassemblingprocessing. After the multi-plane segmentation technique has been carried out,homogeneous objects includingtextblocks,other non-text objects, and backgroundtextures are separated into individual object planes. The textextraction process is then performed on the resultant planes to detect and extract characters with different characteristics in the respective planes. The document image is processed regionally and adaptively according to local features by the proposed method. This allows detailed characteristics ofthe extracted characters to be well-preserved, especially the small characters with thin strokes, as well as the gradational illuminations ofcharacters. This also allows for characters adjoined ortouched with graphical objects andbackgrounds with uneven, gradational, and sharp variations in contrast, illumination, and texture to be handled easily and well. Experimental results demonstrate thatthe proposed method is capable of extracting characters from various types of complexdocumentimages.

II. LOCALIZED HISTOGRAMMULTILEVELTHRESHOLDING The multi-plane segmentation, if necessary, begins by applying a color-to-grayscale transformation on the RGB components of a color document image to obtain its illumination component Y After the color transformation is performed, the illumination image Y still retains the texture features of theoriginal color image, as pointed out in [2], and

thus the character strokes in theiroriginal colorarestill well-preserved. Then the obtained illumination image Ywill be divided intonon-overlapping rectangular blockregions with dimension

MHXMv,

asshowninFig. 1(b). Thus the mission is to extract objects with similar characteristics from these rectangular block regions into different sub-block regions to facilitate further analysis in the following stage. Hence, an effective multilevel thresholding technique is needed for automatically determining the suitable number ofthresholds for segmenting the block region into different decomposed object regions. By using the properties of discriminant analysis, we have proposed an automatic multilevel global thresholding technique for image segmentation [8]. This technique automatically determines the suitable number of thresholds, and utilizes afast recursive selection strategy for selecting the optimal thresholds to segment the image into separate objects with similar characteristics in a computationally frugal way. In this study, we utilize this multilevel threshold selection technique and make necessary modifications to adapt it for segmenting block regions into differentobjectswithsimilar characteristics.We utilize the concept of separability measure described in [8] as a criterion ofautomatic segmentation of objects in a given block region, denoted by 91 . This segmentation criterion is denoted by the "separability factor"- ST as in[8], and isdefinedas,

SE = VBC(T)!v91 = 1-

vwc

(T)/vqf

(1)where VBC VWC and

v,,

are between-class variance, within-class variance, and total variance of the gray intensities of pixels in the region SR, andv,,

serves as the normalization factor; and T is the evaluated threshold set composed ofnthresholds,topartitionpixelsintheregion 91 into n±+1 classes. Thesepixelclasses arerepresentedbyCoto'...,

tl}I

I .. Ck= {tk +1X..tk+1}1

Cn {tn+1, .l--,UBased on this efficient discriminant criterion, an automatic multilevel thresholding is applied for recursively segmenting the block region

91

into different objects of homogeneous illuminations, regardless of the number of objects and image complexity ofthe region91.

It can be performed until the SE measure is large enough to show that the appropriate discrepancy among the resultant classes has been obtained. This objective is reached by the scheme that selects the class with the maximal contributionWk

Uk2

(wherewk andok

arethe cumulative probabilityand variance ofthe gray intensities in class Ck, respectively) of the total within-class variancevwc(T),

denoted byCp,

for recursively performing an optimal bi-class partition procedure, as described in [8], until the separability among all classes becomes satisfactory, i.e. the condition where the ST measureapproximates

asufficiently large

value. The classCp

will be divided into two classesCpo

andCpl

by applying the optimal thresholdt*

determined by the localized histogram thresholding procedure as described in [8]. Thus, theST

measure will most rapidly reach the maximal increment to satisfy sufficient separability amongthe resultant classes of pixels. As a result, objects with homogeneous gray illuminations will bewell-separated.

Furthermore, if a region 91 comprises of a set of pixels with homogeneous gray intensity features, consisting of parts of a larger object or background region, then it should not be partitioned and should keep its original components. Thesehomogeneous regions can be determined by evaluating two statistical features: 1) the bi-class ST measure, denoted as

S(F,

which is the SF value obtained by performing the initial bi-class partition procedure on region 91 , i.e. the SF value associated with the determined thresholdt*;

and2) theillumination variance,v,

of pixels in the region 91. Hence, if both theSFb

andv,

features have small values, this reveals that the distribution of the region 91 is concentrated within a compact range, and thus the 91 comprises a set of homogeneous pixels representing a simple object or parts thereof. Therefore, the following homogeneity condition is utilized for determining the situation where both theSfb

andv,

features are small:SFb

<Thho

,and vS <Thhl

(2)

where Thho and Thhl are pre-defined thresholds. Therefore, if the homogeneity condition is satisfied, the region 91 is recognized as a homogeneous region, and does not need to undergo the partition process and hence keeps its pixels of homogeneous objects unchangedto be processed by the next stage. The values ofthe two thresholds

Thho

and ThhI are experimentallychosen as 0.6 and 90, respectively.Then the localized automatic multilevel thresholding processis performedasthefollowing steps:

Step 1: To begin, the illumination image Y with size

Wi,ngXHimg

is divided intorectangular

blockregions

91'j with dimensionMHxMv,

as shown in Fig. 1(b). Here(i,

j)

are the location indices, and i=0,...,N,, andj

=0,...,Nv

, where N,, =([WJmg

/MI,

I

-l) andNv=

(H,,ng

/M,

-1)

, whichrepresent

the numbers ofdivided blockregionsper rowand per column, respectively. Step2: For each block region

91"'

, compute the histogram of pixels in 9 1" , and then determine the illumination variance - V9j' and the bi-class separability measureSE,

initially, there is only one class

,2"i;

let q represent the present amount of classes, and thus set q = 1. If thehomogeneity condition, i.e. Eq. (2), is satisfied,thenskip the localizedthresholdingprocessforthisregion

91"'J

and go to step 7;elseperformthefollowingsteps.Step3: Currently, q classes exist, having been decomposed from

91"j.

Compute the class probabilitywIj,

the class mean u4', andthestandarddeviation S',ofeachexisting classCk'i

of pixels decomposed from 91"', where k denotestheindex of the present classes andn=0,. q-1.Step 4: From all classes Ck", determine the class Cp" which has the maximal contribution

(Wi' k'/

2) of the totalwithin-class variance

v"4

of9"i

", to be partitioned in the next step in order to achieve the maximalincrement of ST. Step 5: PartitionCo"':

{ti'

+1, ...,t>

} into two classesCpjo

:{ti

+1,...,ts1

i},

andC(j

j:{ tsj+l,

...,t,}

, usingthe optimal threshold

t"I

* determined by the bi-classpartitionprocedure. Consequently, the gray intensities of the region

9V'i

arepartitioned intoq+l classes, cI',I ... I(l

. , and then let q =q±+l toupdatetherecord of the currentclassamount.

Step 6: Compute the SE value of all currently obtained classes using Eq. (1), if theobjective condition, SE .ThSF, is satisfied, thenperform the following Step 7; otherwise, go back to Step 3 to conduct further partition process on the obtained classes.

Step 7: Classify the pixels of the block region 91"j into separate sub-block regions, SR

i,,O,

SRi,j,l

..., SRi,j,q-, corresponding to the partitioned classes of gray illumination intensities,GC',

Iq

I',

...,Cq,'l

, respectively, where the notation SRi,j,k represents the k-th SR decomposed from the region 91"j . Then, finish the localized thresholding process on91"j

andgobackto step 2and repeatSteps 2-6 to process the remaining block regions; ifall blockregions

have beenprocessed,gotostep 8.Step 8: Terminate the segmentation process and deliver all obtainedsub-blockregions of the

corresponding region

91.The value oftheseparabilitymeasurethreshold

ThsF

is chosen as 0.92 according to theexperimental

analysis

described in [8] to

yield satisfactory

segmentation

results onthe block regions. With regard to the dimension

MHXMv

of theblockregion, suitable larger values of theparameters MH and Mv shouldbe selected so that allforeground objects

in the images can be clearly segmented. Since atypical

resolution ofthe document images ranges from 200 dpi to 600 dpi for scanning books, advertisements,journals

and magazines, etc,we utilizeM,,

-Mv

=100 (8.5 mm on the300 dpi resolution) in our experiments. The multi-plane region matching andassemblingprocess, aspresented in the followingsection, isthencarriedout toassembleand

classify

them into object planes, denoted as P. All SRs obtained from the localized multilevelthresholding

process arecollected intoahypothetical "Pool", inwhich the

multi-plane

region matching andassemblingprocess is conducted.III. MULTI-PLANEREGION MATCHINGANDASSEMBLING This section describes an algorithm for constructing the object planes from the SRs, generated from the localized multilevel thresholding procedure introduced in the preceding section. Some statistical and spatial features of the adjacent SRs areintroduced into the multi-plane region matching and assembling procedure in order to assemble all SRs of the homogenous text region or object. The proposed multi-plane region matching and assembling process is conducted by

performing the following three phases - the initial plane

selection phase, the matching phase and the plane constructingdecision phase.

Several concepts and definitions are first introduced to facilitate the matching and assembling process of SRs obtained from the previous localized thresholding procedure. An SR may comprise several connected object regions of pixels decomposed from its associated region 9t. Thus the pixelsthat belong to the regions of a certain SR are said to be objectpixelsof this SR, whileotherpixelsinthis SR are non-objectpixels. Thesetof theobject pixelsin one SR isdefined asfollows,

OP(SR

ii)

={g(SRiJ,kx,y)|

The pixel at (x,y) is an object pixel in SRiijk} where g(SRi 1,x,y) is thegrayillumination intensity of the

pixel atlocation (x, y) in SR i,j,k , and the range of x is within

[0,

MH

-1]

and y is within[0,

M,,

-1]

. The concept 4-adjacentreferstothesituationinwhich each SR has four sides thatborder the top, the bottom, the left or theright boundary ofits adjoiningSRs. The SRs which arecomprised ofobjects with homogeneous features are assembled to form an object plane, denoted by P.A.InitialPlane SelectionPhase

In the first processing phase, to improve the speed and accurateness of the final convergence of the multi-plane region matching and assembling process, the mountain clusteringtechnique [9] can be applied todetermine the SRs with the most prominent and representative gray intensity features, and these SRs are selectedas seedsto establisha set of initial planes. The mountain method is a fast, one-pass algorithm, which utilizes the densityof features todetermine the most representative feature points. Here we consider SRs asfeaturepointsinthemountainmethod.

First,given the localized multilevel thresholdingprocess to segment the image into r SRs in the Pool, the mean

pu(SR

Ik)

associated with each of them is also obtained.,u(SR

i} k) is the mean of gray intensities of object pixelscomprised by SR ,j,k andis equivalent to

Atj

obtainedby localized multilevel thresholding process. Then the region dissimilarity measure, denoted by DRM, between two 4-adjacentSRs canbecomputedas,DR4(SRi

j1,k,

SRi'j2

k,)

=U(SR i'Jl1k))u(SRiJ2)|

(4)

The range of the DRM is within

[0,255].

The lower the computedvalueofD.,

thestrongerthesimilarity amongtwo SRs.Therefore, the mountain function at a SR can be computed as,

M,

(SR

i),

jkSEP-oDRM

(SR,SR'j

) (5) VSRi,jiEpooIwhere a is a positive constant. A higher value of the mountain function reflects that SRi,j,k possesses more homogenous SRs in its vicinity. Therefore, it is sensible to

selecta SR

'j'

k with ahigh value of mountain function as arepresentative seed to establish a plane. Let M0 be the maximalvalueof the mountain function values, and

SR,,

be the SRwhose mountain value is M*:MO`

(SR

)= max[MO

(SR jo)]

(6)

Thus,

SRO*

is selected as the seedof thefirstinitial plane. Aftercomputingthemountain functionof each SR in the Pool,the following representative seeded SRs are determined by destroying the mountains. Since the SRs whose gray intensity features close to SR0 also have high mountainvalues, itisnecessary toeliminate the effects of theidentified seeded SRs before determining the follow-up seeded SRs. Toward this purpose, the updating equation of the mountain function, after eliminating the previous

(m-i

)th seeded SR-SRm

i*

X is computedby,m,, (SR 'jk') mA-, (SR 'j') M* (SR, e')e OD(SR lSR, (7)

wheretheparameter ,B determinesthe neighborhood radius thatprovide measurable reductions in the updated mountain function. Thenrecursively performing the discountedprocess of the mountain function given by Eq. (7), new suitable seededSRscanbedeterminedinthesameway,untilthelevel of the current maximal M* falls bellow a certain level compared to the first maximal mountain MO The terminativecriterion ofthisprocedure is defined as,

M*-

*)<g

~~~~~(8)

(MG /MO)

(8

where 6 is a positive constant less 1. Here the parameters are selected as a=5.4, ,B=1.5 and -=0.45 as suggested by Pal and Chakraborty

[10].

As a result, this process converges to the determination of resultant N seeded SRs:{SR,*,

m=0:N-1}, and they are utilized to establish Ninitialplanesforperformingthefollowing matching phase. B.Matching Phase

Inthematching phase,thesimilarityandconnectedness between each currentunclassified SR andall current exiting planesare analyzedtodetermine the best belongingplane. If the best matching plane for a certain unclassified SR is

determined,

then this SR is assembled into that plane and removed from thePool;

otherwise, if there is no matching plane for a certain unclassified SR, then this SR remains in the Pool. Since new planes will be established in the plane constructing phase ofthefollowingrecursions,

the SRswhich cannot find matching planes in the current recursion of the matching phase will be analyzed in subsequent recursions untilthey findtheirbestmatching plane.

Twomeasurements of thecontinuityandsimilaritybetweentwo4-adjacentSRs-the side-match measure, denoted as DSM, and the region dissimilarityDRM, ascomputedbyEq. (4), are employedfor theassemblingprocess. Then both DSM andDRMmeasuresare

considered to determine the match

grade

oftwo4-adjacent

SRs. The matching phaseprocess is basedon

evaluating

the matchgradeamongthe SRs andassemblingthemintoplanes.First, the side-match measure - DSM, which reveals the dissimilarity of the touching boundary between the two 4-adjacent SRs, is described as follows. Suppose that two SRs are 4-adjacent. They mayhave one of two types of touching boundaries: 1) the vertical touching boundary shared by two horizontally adjacent SRs, or 2) the horizontal side shared by two vertically adjacent SRs. Here we take the case of two horizontally adjacent SRs for example, and the case of vertically adjacent SRs can also be similarly derived. For a pair oftwo horizontally adjacent SRs -SR ,ij,

k"

on the left, and SR ,,J2,k,

onthe right, the pixel values on the rightmost side of SRi',jk

I' and the leftmost side of SR "X"2"

can be described as: g(SR'"k',

M1-l,y)

and g(SRkJ_kl,0,y) respectively. The sets of object pixels on the rightmost side and theleftmost side of an SR, denoted by RS(SRisj,k) andLS(SR i,jk ), respectively, are defined as follows,

RS(SR'

ij.k)={yg(SR

)O(jR,

M,+-n1,dy)

g(Sijk,,,-l,y)E-OP(SR I-k),and O<y<M,-1} LS(SR )

=g(SR

o(

(9) 10) g(SR'i k 0,y)eOP(SR j), and

O<y<Mv

-1} To facilitate the following descriptions of the side-match features, the denotations of SR ,,j k and SR' k', are simplified asSR'

and SR , respectively. The vertical touching boundary ofSR'

and SR , denoted asVB(SR',

SRIr),

is represented by a set of side connections formed by pairs of object pixels that are symmetrically connected on their associated rightmost and leftmost sides, andisdefinedasfollows,VB(SR',

SRr)

=(g(SR',

MH-,y),g(SRr,

0,

Y))

(11)

g(SRI,

M-1,y)

ERS(SR'),

andg(SRr

,O,y)

ELS(SRr)}

Also, the number of side connections of the touching boundary, i.e. the amount of connected pixel pairs in VB(SR

'iJii,k

SRi2j12k2J

) Xshould also be considered for the connectedness of the two 4-adjacent SRs, and is denoted byNSC

(SRj,'

JI, ,SRi2 J2 2). Therefore, the side-match measure,DSM,

ofthetwo4-adjacent

SRs canbecomputed

as,DSM

(SRI,SRr)

=I

11

g(SR',

M,

-1,y)-

g(SRr,

0,Y)||

(12)(g(SR,MH-I-X).g(SR

.0J#))

VB(SRl,SRr)Ns,.(SRI,SRr)

If the DSM value oftwo 4-adjacent SRs is sufficiently low, then these two SRs are homogeneous with each other, and shouldbelongtothe sameobject plane P. The range ofDSM values is within

[0,255].

Accordingly,theDSMmeasure canreflect thecontinuity oftwo4-adjacent

SRs,

and theDRM

value,asobtainedbyEq. (4), reveals the similarity between them. Hence the homogeneity and connectedness oftwo 4-adjacent SRs can be measured bydetermining thedominant effect of theDSMand the DRM. Therefore, based on the abovedefinitions, the match grade of two 4-adjacent SRs, denoted by m , is determinedas,

m(SR'

IX,k1ISRi.,~

XRk,

max(DSM (SR k,,

SRij

k,),

DRM (SR"l,kI

I,sRk-,k

)) (13)max(a(SR" i1k1 ) +U(ysrii)ko), 1)

where o(SRi,j,k) is the standard deviation of gray intensities of all object pixels associated with the SR i,j,k andisequivalent to < obtained from localized histogram multilevel thresholding process. Here the denominator term max(o7(SR"j1 k)+ 7(SR'i2k,), 1) in Eq. (13) serves as the normalization factor.

In each recursion ofthe matching phase, each of the unclassified SRs, i.e. SRi,j,k in the Pool, is analyzed by evaluating the match grades of SR

i,j,k

associated with those SRs, denoted by SRi',j',k' which have been grouped into current existing object planes(the subscript q represents that SR i',j',k' belongs to the q-th planeEq)

to seek for the matching planeinto which SRi,j,k canbegrouped.

Inorder to facilitate match grade determination, the set AS(SRiIj.k,p

) isutilized forcontainingthe SRqS inaplanePq

which are4-adjacentto SRisjk iSdefinedby,

AS(SRijk) (14)

=1SRqj j k E

CqI

SRqiiqk

is4-adjacent

tO SRi3j1k Then thematchgrade2R(SR

ik),

which revealshowwellSRi,j,k matches with

Pq

, can be determined by the followingoperation,9t(SR

ik)

)-min

k m(SRij,kS qi'jk)

(15)VSRq'Ik',EAS(SRLJk,Pq)

It must be noted that if none of the SRs in

P,

are 4-adjacent to SRi,jk, i.e.AS(SRJk,7f)=0,

then P is excluded from the consideration formatching

with SRi,jk Thus the plane which has the best match grade associated with SRij,k among allexisting planes,denotedby Cm, canbe determinedby,

9i(sR i,j,k

,P4)min9MGSR

i,j,k,pq

(16)

VPq

The following matchingcondition is then applied to check whether the selected candidate plane (m and SRi,j,k are sufficiently matched, and thus (m can be determined for suitably absorbing SR i,j,k,defined as follows,

95L(SR

i,j,kP

C)

<Thm

(17)where

Th.

is apredefined

threshold which represents the acceptable tolerance of dissimilarity for SR i,j,k to be grouped intoP,.

The matching condition has a moderate effectonthenumberof resultant object planes, and the value choice ofThm

=1.2 is experimentally determined to obtainsufficiently distinct planes and avoid excessive splitting of planes. Accordingly, if SR ,jk and its associated

P"m

satisfythe matching condition, then SRi,j,k is merged intoPm,

andremoved from the Pool. If the matching condition cannotbe satisfied, this reflects that there is no appropriate matching plane for SRi,j,k in this current matching phase recursion. As a result, SRijk should remain in the Pool until its suitable matching plane emerges after more plane constructing phase recursions. Afteradeterminationhas been made for SRi,jkk the matchingprocessis in turn appliedon the subsequent unclassified SRs in the Pool, until all have beenprocessedonceinthecurrentmatching phase recursion. C. PlaneConstructing Decision PhaseAfterperforming the previous matching phaserecursion, ifthere are unclassified SRsremaining, and the Pool is not drained, these unclassified SRs must be analyzed and a determination madeas towhether it is necessarytoestablish a newplaneto assemble SRswith such features into another homogeneous region. Theplane constructing decision phase determines whetherto 1)establish andinitialize a newplane byselecting the unclassified SR "farthest" from all existing planes as an initialseed, or 2) extend one selectedplane by merging one unclassified SR "nearest" to this plane. The decision is made according to theanalysis ofthe following gray intensity and location features. The dissimilarity measure between one unclassified SR, not adjoined to any existing planes in theprevious matching phase recursion, and a certain object plane CP, is determined by the relative-difference of gray intensities between this SR and its nearest

SRqi'ko

among allSRqs

thatbelong

to T, and iscomputedas,

D1

(SRjk,

mm r DRM(SR ijkSRqJk

)(1k8)

VsRq-Pq

max(o0(SRlk"I'

(Sqik,

)where DRM(SRilj,k

,SRqi

jlk) iscomputed byEq.(4). Then the smallest dissimilarity ofgrayintensity between SRi,j,k and theplane beingmostsimilartoit amongallcurrentlyexisting object planes, denoted by(PS,,SR

.j)k,

isdetermined by,DI

(SR

k,jSI(SR

j.k))=mn(D,))

i(19)

q

Then the dissimilarity measure of SR

,jk

and its associatedPSI(SR

ij)is

alsoexpressed

as Ds,(SR

ijk)

torepresent

theleast dissimilarity of gray intensity between this SR and all existing planes. If SRi,j,k has a sufficiently low dissimilarity

D,

with theplaneP1(SRJ.k),

and they are also locatively close to each other, this means that this SR is homogeneous withuSl(SRi.(.k,

, even if it is not currently4-adjacentto

PSI(SR

j-)-To determine this situation, the locative distance betweenanSRand a plane

Pl,

denoted as DE(SR.J-kJp),

is computedby the Euclidean distance between this SR and theclosest SRq among all SRqs associated with the plane

Pq;

and is determined as,DE(SR , GP)

mi(SR

(SR kRSRq

i ) (20)where De(SR

ij*,kSRqI'ij'k')=

V(i

_i,2+(j_)'2)2

If SRij,k and its

PSI(SR

Q.k) are homogeneous in gray illumination and alsolocatively close to each other, i.e. both D,(R SI((SR

k)) and DE(SR p, ,(SR,i)) values aresufficiently small, then SRi,j,k should join the plane

PSI(SR

A)k,

rather than establish a newindependent

plane,

inorder to prevent a text region orhomogeneous object to be split into morethan one plane. Otherwise, if no such planes are found, a newplane should be created to aggregate those SRswithdistinct features.

To ensure that the newplane contains distinct features with currently existing planes, a scheme for selecting the suitable SRastherepresentative seed for constructing a new plane isgivenas follows. Bythis means, this seedSR, which is most dissimilar to any currently existing planes in illuminationintensities, canbeobtained by,

DLI

(SRLI)

= maxDL

(SR

i)jk

(21)VSRE Pool

where DLI (SR

ijilk)

=max(DI

(SRi,jk

)) VPqHence, the determined

SRLI

with the largestDL,

value will be selected as the seed SR to establish a new plane to aggregate those SRs whose features are distinct from other existing planes.By means of the definitions given above, the plane constructing decision isperformed accordingtothefollowing steps:

Step1: First,the SRswhich havesufficientlylow

Ds,

values areselected into thesetSRs5

using thefollowing operation:SRs,

={SR i,j,kE Pool|Ds,

(SR iljk)<Ths,

},

(22)where

Ths,

is a predefined threshold for determining whether the SR is homogeneous with any one of existing planes. If noneofthe SRsare selected bytheabove condition, i.e.SRsI

isempty, thengodirectlytoStep 3for constructing a newplane; otherwise, performthefollowing Step 2. Step 2: The set SRsI now contains the SRs which are homogeneous with other currently existing planes, but are not4-adjacent with them, andthusremainunclassifiedin the previous matching phaserecursion. The SRlocativelynearest to its associatedPI(SR,j,)'

denotedbySR'

, is determined asfollows,DE (SRN,(PS

(sRN)

)= min DE(SRi,j,k

,(pi,(,k

)Si VSRE=-SRs, SI(SR'' (23)

If SRN and its

PS,(sR,V

are sufficiently close to each other, i.e. the conditionDE(SRNj'PS,(SRN,)

<ThL is satisfied, then SRN is decided to be merged withPs,(,R,)

to extend its influential area on nearby SRs, andproceeds

to Step 4. Otherwise, performStep3forconstructinga newplane.Step 3: The

SRLI,

the SR most dissimilar to any currently existing planes, is determined by Eq. (21). ThusSRLI

is employed as aseeded SR toestablish anewplaneqP,,

and thencontinuestoStep 4.Step 4: Finish the plane constructing decision phase, and thenconductthe nextmatchingphaserecursion.

The threshold

Th5,

utilized in Eq. (22) moderately influences the number of resultant planes. IfThs,

is low, then the number ofresultant planes will be increased and a homogeneous region may be broken into more than one plane, althoughits influence on text extraction is not serious. Ifthe value is large however, then the number of planes is reduced, andsomeobjects maybe merged to a certain degree. Reasonably, its value should be tighter than theTh,,

value, which isutilizedinthe pre-match condition to ensure that the determined SRN is sufficiently homogeneous with PSI(SR% and thus SI(SRN) can appropriately absorb homogeneous SRs near the extended influential area benefited from participation with SRN. Therefore, in our experiments, the value ofThs5

is chosen as (3/4).Th,,

. Normally, text-lines or text-blocks usuallyoccupyperceptible area of the image, and thus their width or height should be in appreciable proportion to those of the whole image. Therefore,ThL=

min(N,,,NV

)/4 is used for experiments, where N,,and

NV

are the numbers ofblock regions per row and per column, respectively.D. Overall Process

Based on the three above-mentioned processing phases, the region matching and assembling process begins by applying the initial plane selection phase on allunclassified SRs in the Pool to determine the representative seeded SRs {SRm m m=1:N} for setting up Ninitial planes. Thus, the

matching phasecan then becarried out for the rest ofSRs in the Pool and the initial planes

P,

*, , . Then thematchingphaseand theplane constructing decision phase are recursively performed in turns on the rest of the SRs in the Pool and emergingplanes, until each SR has been classified and associated with a particular plane, and the Pool is eventually cleared.

Consequently, afterthemulti-planeregion matchingand assembling process is completed, each of the homogenous objects with connected and similar features is separated into corresponding object planes. To facilitate further analysis, they are represented as

P,,

C, ...,,L-

where L is the number of theresultant planes obtained. We use Fig. 1 as an example of theproposed

method procedure. The original image in Fig. l(a) consists of three different colored text regions printed on a varying and shaded background. Moreover, the black charactersaresuperimposedonthe white characters. First, as shown in Fig.1(b),

the original imageis transformedinto grayscale,and is divided into blockregions. Figures1(c)-(i)

are seven object planes obtained from Fig. l(a) by performing the multi-plane segmentation technique. As aresult, the homogeneous objects in which all charactersand backgroundtextures are segmentedinto several separate

planes can effectively be analyzed in detail. By observing

these obtained planes,we can seethat threetextregionswith differentcharacteristicsaredistinctly separated. Extraction of

text strings from each binarized plane in which objects are

well separated, and canbe easily performed by ourprevious

proposed projection-based textextraction method [11]. After the text extraction process being conducted on all object

planes, the text lines extracted from these planes are then

collectedintoaresultanttextplane,as shown inFig.

o(j).

(a)Original image. (b) Sectored regions corresponding totheoriginalimage

(d) Object plane (P

(e) Object plane P2 (f)Object plane 3

..d s.^'

m--* ~sitL^*.X As4lt rz.itS

(g) Object plane P4 (h) Object plane (5

(j)Thetextplaneextractedfromall

~~~planesderived fromFig. (a) Fig. 1. Anexampleofthetestimage, "Calibre",processed bytheproposed multi-plane segmentation technique (imagesize:1929x1019)

IV. EXPERIMENTALRiESULTS

Theperformanceof theproposedmethod isevaluated in this section and compared to other existing text extraction methods, namely Jamn and Yu's color-quantization-based

method [6], and Pietikainen and Okun's edge-based method [4]. A set of 46 real-life complex document images was

employed forexperiments on performance evaluation oftext

extraction [12]. These test images are scanned from book

covers, book andmagazine pages, advertisements, and other real-life documents at the resolution of 200 dpi to 300 dpi. They were transformed into gray-scale images, and then

processed by the proposed method. Most are comprised of

characterstrings invariouscolorsorilluminations, font styles

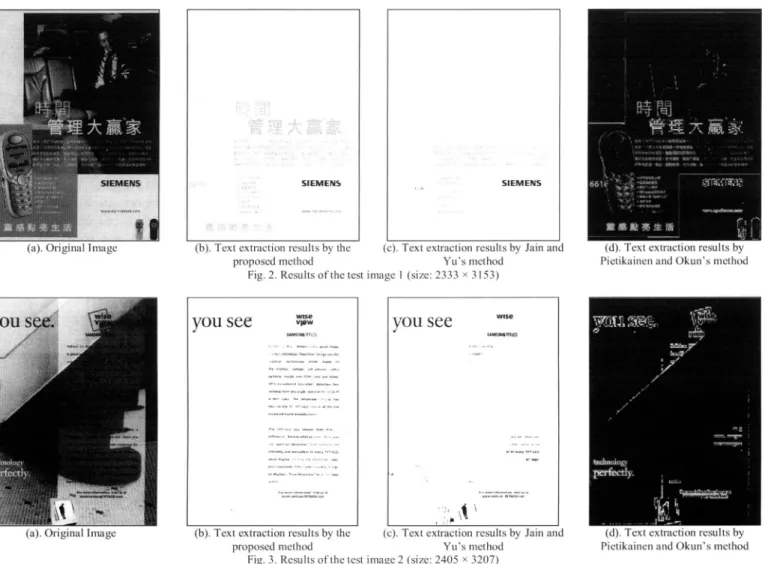

and sizes which areoverlapped with multi-colored, textured, or uneven illuminated backgrounds. Figures 2(a) - 3(a) are

themostrepresentative samples with typical characteristics of complex document images, andmoreresults oftest samples inthe experimentalsetarealso shown in [12].

Figures2-3 show the results oftextextractionproduced

by the proposed method, Jain and Yu's color-quantization-based method [6], and Pietikainen and Okun's edge-based method [4]. Here the extraction results of Pietikainen and Okun's method in Figs 2(d) - 3(d) were converted into

masked images where the black maskwasadoptedtoexhibit

the non-textregion. Figure 2(a) contains background objects with sharp illumination variations across text regions, and someof these alsopossesssimilarcolors and illuminationsto

those characterstouched withthem. Asaresult, the character

illuminations are influenced and have gradational variations

due to the scanning process. After performing the proposed

method, the extractionresultsshown inFig. 2(b) demonstrate that themajority ofthe charactersare successfully segmented

fromthesharply varying backgrounds.AsshowninFig. 2(c), Jain and Yu's method fails to extract the large captions and many characters of the main text region, because many characters are fragmented due to the influence of those background objects in color-quantization process. The edge-based method extracts most characters except some broken

large characters and several missed small characters, as shown in Fig. 2(d); however, several graphical objects with sharply varying contours arealso identified as text,and thus the characters in extracted text regions are blurred. In Fig.

3(a), large portions of the main bodytexts are printed on a

large blacktextured and shadedregion, and thus the contrast

between the characters and this textured region is extremely degraded. As shown in Fig. 3(b), the characters in three different text regions are successfully extracted by the

proposed method. BothJain and Yu'smethodandthe edge-based method fail to extract characters from the textured region with degradedcontrast,asshown inFigs. 3(c)and3(d),

respectively. Therefore, as seen from Figs. 2 - 3, our

proposed method performs significantly better than Jain and Yu's method and the edge-based method in various difficult

cases. By observing the results obtained, while character

strings comprised of variousilluminations, sizes, and styles,

are overlapped with background objects with considerable variations incontrast, illumination, andtexture, nearly all the

textregionsareeffectivelyextractedbytheproposed method.

To quantitatively evaluate text extraction performance,

twomeasures, the recallrateandtheprecisionrate, whichare

commonly used for evaluating performance in information retrieval,areadopted. Theyarerespectively definedas,

,, + _No. of correctly extracted characters AX

recail

rate

No. of actual charactersprecision rate=

No. of correctly extracted characters (25)

No. of extracted character-like components

Wecompute the recall and precision ratesfortextextraction results oftestimages in this study by manually counting the

number of actual characters of the document image, total :70:::Er

., i,,2.= .a.B>.,--X<. 70 --;;

i. i.- ..55-:;j,,,,;,f,fi.i-., .ff.§,i,z,,<-'u,,M-.,2s&>>.100

.,,,-,....,...Efi-;ES'SSES-'-E

iSiE1EU;7jR,,;,;,;,'),,,7.,{,,=,T:h::E

-000t0Xdyt'{=S';-'':i".'W-"''-''''==-"::i'""-"-"'S.ttitEStEtEST;

7: 7.t:f: ?;- t; bES,,:D iL.: tC'd: t47:S ffit: itiSjf ff:0:: it0 itiC'VE t: .t L.E

iES::SXiti)iDiyS)iE0:

(c) Object plane (P0... 1

-1 Li

extracted character-like components, and the correctly extracted characters, respectively. The quantitative evaluation were performed on our test set [12] of 46 complex document images totaling 22791 visible characters. Since these quantitative evaluation criteriaareperformed on the extracted connected-components, the results of Pietikainen and Okun's method [4] is inappropriate for quantitative evaluation using these criteria. Table I depicts the results of quantitative evaluation of Jain and Yu's method [6] and the proposed method. By observing Table 1, wecan see that the proposed method exhibits significantly better text extraction performanceascomparedwith that of Jain and Yu's method.

TableI.Experimentaldata of Jain and Yu'smethod and proposedmethod Method Recall Rate Precision Rate JainandYu'smethod 79.8% 95.2%

Proposedmethod 99.1 % 99.3% REFERENCES

[1] L. 0' Gorman, R. Kasturi, Document image analysis, IEEE Comput. Soc.Press,SilverSpring, MD, 1995.

[2] V. Wu, R. Manmatha, and E.M. Riseman, "Textfinder: an automatic system todetect andrecognize textin images," IEEE Trans. Pattern

Anal. Mach.Intell., vol. 21,no. 11,pp. 1224-1229, 1999.

[3] Y. M. Y. Hasan,and L. J. Karam,"MorphologicalTextExtractionfrom Images,"IEEE Trans. Image Process., vol. 9, no. 11, pp. 1978-1983, 2000.

[4] M.Pietikinenand0.Okun,"Edge-based method for text detection from

complexdocumentimages,"Proc. 6thInt'lConfDoc.Anal. Recognit., pp.286-291,2001.

[5] Y. Zhong, K. Karu, A. K. Jain, "Locating text in complex color images," Pattern Recognit.,vol.28, no.10,pp. 1523-1535, 1995. [6] A. K. Jain, B. Yu, "Automatic text location in images and video

frames," Pattern Recognit.,vol. 31, no. 12, pp. 2055-2076, 1998.

[7] C.Strouthopoulos,N.Papamarkos,A.E.Atsalakis,"Textextractionin

complex color documents,"Pattern Recognit.,vol. 35, pp. 1743-1758, 2002.

[8] B.-F. Wu, Y.-L. Chen, and C.-C.Chiu,"Adiscriminantanalysis based recursive automatic thresholding approach for image

segmentation,"IEICE Trans. Info. Systems,vol. E88-D, no.7, pp.1 716-1723,2005.

[9] R.R. Yagerand D.P. Filev, "Approximate clustering via the mountain

method," IEEETrans.Syst. ManCybern,vol. 24, no. 8, pp. 1279-1284, 1994.

[10]N.R. Pal and D. Chakraborty, "Mountain and subtractive clustering method: improvements and generalization," Int'l.J.Initell.Sy7st.,vol. 15, pp.329-341,2000.

[11]B.-F. Wu, Y.-L. Chen, and C.-C. Chiu, "Multi-layer segmentation methodforcomplexdocumentimages,"Int'l. J. PatternRecognit.Artif.

Intell., Vol.19,No.8,pp.997-1025,2005.

[12]Experimentalresultsofall testimages inourdatabaseareavailableat:

http://140.113.150.97/SMC06 TestDatabase.htm

SIEMENS SIEMENS

(b).Textextraction resultsby the (c).Textextractionresultsby Jain and

proposedmethod Yu'smethod

Fig. 2.Resultsofthe test image I (size: 2333x3153)

you

see

vyou

see

SAMSIH* TFTtCB~~~~~~~~~~M~S00

(b).Textextraction resultsbythe (c).TextextractionresultsbyJamnand

proposedmethod Yu'smethod

Fig.3.Results of thetestimage2(size:2405x 3207)

(d). I ext extractionresultsby

Pietikainenand Okun's method

(a). 1extextractionresultsby