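National Science Council, Executive Yuan: Research Project Final Report

Subproject 5: Applications of Multimedia Framework and Digital Video Watermarking on Internet

(3/3)

Project type: Integrated project
Project number: NSC91-2219-E-009-043-
Duration: August 1, 2002 to July 31, 2003 (ROC years 91 to 92)
Institution: Department of Electronics Engineering, National Chiao Tung University
Principal investigator: 蔣迪豪 (Tihao Chiang)
Project participants: 林耀中 (Yao-Chung Lin), 黃項群 (Hsiang-Chun Huang), 莊孝強 (Hsiao-Chiang Chuang), 王俊能 (Chung-Neng Wang)
Report type: Full report
Handling: This project involves patents or other intellectual property rights; the report is open to public access after 2 years.
Date: October 30, 2003 (ROC year 92)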

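National Science Council, Executive Yuan: Subsidized Research Project

☑ Final report
☐ Interim progress report

Applications of Multimedia Framework and Digital Video Watermarking on Internet (3/3)

Project type: ☐ Individual project ☑ Integrated project
Project number: NSC-91-2219-E-009-043
Duration: August 1, 2002 to July 31, 2003
Principal investigator: 蔣迪豪 (Tihao Chiang), Associate Professor, Department and Institute of Electronics Engineering, National Chiao Tung University
Co-principal investigator:
Project participants: 林耀中 (Yao-Chung Lin), 黃項群 (Hsiang-Chun Huang), 莊孝強 (Hsiao-Chiang Chuang), 王俊能 (Chung-Neng Wang)
Report type (submitted as required by the approved budget list): ☐ Summary report ☑ Full report

This report includes the following required attachments:
☐ One report on an overseas business trip or study visit
☐ One report on a business trip or study visit to Mainland China
☐ One report on attending an international academic conference, with one copy of each paper presented
☐ One overseas research report for an international cooperative research project

Handling: Except for industry-academia cooperative research projects, projects for upgrading industrial technology and personnel training, controlled projects, and the case below, the report may be opened to public access immediately.
☑ Involves patents or other intellectual property rights; open to public access after ☐ one year ☐ two years

Institution: Department and Institute of Electronics Engineering, National Chiao Tung University

Date: October 30, 2003 (ROC year 92)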

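National Science Council, Executive Yuan: Research Project Final Report

Applications of Multimedia Framework and Digital Video Watermarking on Internet (3/3)

Project number: NSC-91-2219-E-009-043

Duration: August 1, 2002 to July 31, 2003

Principal investigator: 蔣迪豪 (Tihao Chiang), Associate Professor, Department of Electronics Engineering, National Chiao Tung University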

Table of Contents

Chinese Abstract

Abstract

1. Background and Objectives

2. Report Content

I. Introduction

II. The Architecture of UMA Multimedia Delivery System

III. System Simulations

IV. Conclusion

V. References

3. Self-Evaluation of Project Results

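Chinese Abstract

Advances in multimedia technology have driven the broad development of applications for information access and data delivery. With so many competing multimedia applications, however, these applications have difficulty interoperating when delivering and accessing data. MPEG-21 therefore defines a framework and rules for universal multimedia access. Through this framework and these rules, the wide range of heterogeneous elements in a multimedia application environment, such as access devices, networks, terminals, natural environments, and user preferences, can connect and interact through negotiation. To support interoperability among video data of different formats, we also adopt a real-time transcoder that converts FGS video bitstreams into a format suitable for the terminal. Furthermore, to improve the noise robustness of FGS video bitstreams in the transmission network, we propose a new FGS coding and decoding technique. Finally, this multimedia framework also provides a means of protecting intellectual property rights. In this way, users can enjoy multimedia information anytime, anywhere, and in any way they wish. In addition, to provide a rigorous simulation environment, we are developing an FGS-video-based test bed for multimedia delivery over networks.

Keywords: MPEG-21, MPEG-4, MPEG-7, Universal Multimedia Access, FGS (Fine Granularity Scalable), digital watermarking, transcoder, multimedia delivery test bed, Digital Item Adaptation (DIA).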

Abstract

MPEG-21 provides a unified solution, Universal Multimedia Access (UMA), for constructing a multimedia content delivery and rights management framework. Based on the concepts of UMA, we build a simplified UMA model on the Internet. In this framework, the source video material is encoded and archived as FGS bitstreams. Video features such as motion vectors are recorded in the format of MPEG-7 descriptors. To support video content in different formats, we create a transcoder that converts the bitstream from the FGS format to an MPEG-4 Simple Profile format that fits the terminal capability. Moreover, a novel FGS coding scheme is created to improve the coding efficiency and robustness of FGS bitstreams in an Internet transmission scenario. Consequently, the multimedia information can be streamed through the networks without the network jitter and significant quality degradation found in current commercial implementations. To provide a stricter evaluation methodology that follows the specified common conditions for scalable coding, we are developing an FGS-based unicast streaming system as a test bed for scalability over the Internet.

Keywords:

MPEG-21, Universal Multimedia Access, MPEG-4, MPEG-7, Digital Watermarking, FGS, Transcoding, RFGS (Robust FGS), SRFGS (Stack Robust FGS), Video streaming test bed, Digital Item Adaptation (DIA).

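1. Background and Objectives

In recent years, multimedia communications have advanced by leaps and bounds, and the Internet has expanded at an astonishing pace. Both international multimedia standards and industrial products have made considerable progress. The Moving Picture Experts Group (MPEG) committee has established several successful audio and video standards, such as MPEG-1, MPEG-2, MPEG-4, and MPEG-7. Not long ago, the MPEG committee started a new standardization activity whose goal is to provide mechanisms for multimedia information delivery and for interchange among the individual standards. This new standard is currently called MPEG-21, and its mission is to define the "multimedia framework", or the "big picture of the multimedia framework", for future electronic information delivery systems. The short-term goal of this activity is to carry out a top-down overview analysis to understand the needs and expectations of consumers, from which a new set of MPEG-21 requirements will be developed. A call for information is to be announced in March 2000, and a technical report is to be completed by July 2000.

Within the MPEG-21 activity, new technology is urgently needed in the area of intellectual property management and protection. The key technology should allow the transacting parties in the multimedia delivery process to easily record the usage history of electronic multimedia information. These parties include the owners of multimedia information, its creators, delivery system operators, holders of distribution rights, purchasers of multimedia information, issuers of unique identification numbers, administrators of intellectual property rights databases, and certification authorities. Digital video watermarking can be applied to achieve this goal. The digital video watermark can embed identification information and must be robust enough to survive the multimedia delivery process without being destroyed.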

2. Report Content

I. Introduction

Recent multimedia technology provides the different players in the multimedia value and delivery chain with an abundance of information and services. Access to information and services can be provided with ubiquitous terminals and networks from almost anywhere at any time. However, no solution so far enables all the heterogeneous communities to interact and interoperate with one another.

MPEG-21 provides a solution named Universal Multimedia Access (UMA) [1]. Its major aim is to describe how the various elements, such as networks, terminals, user preferences, and natural environments, fit together. It defines a multimedia framework that enables transparent and augmented use of multimedia resources across a wide range of networks and devices employed by different communities. Additionally, it allows content adaptation according to terminal capability.

II. The Architecture of UMA Multimedia Delivery System

To achieve UMA, we propose a video server that contains the key modules described in MPEG-21 [1]. In this model, we combine tools from MPEG-4 Fine Granularity Scalability (FGS) [2], MPEG-4 Simple Profile, MPEG-7 [3], digital watermarking techniques [4], and Internet protocols.

A. Efficient FGS-to-Simple Transcoding

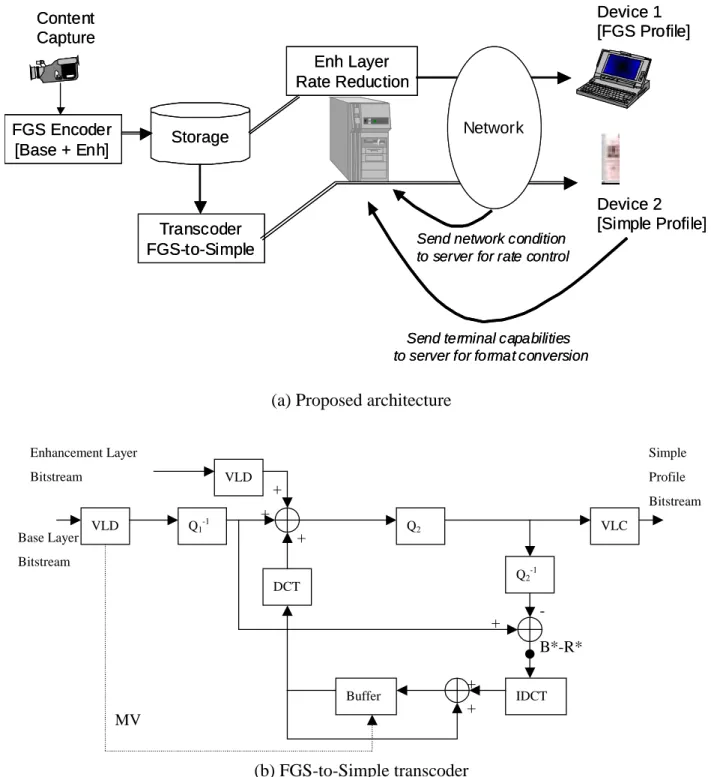

To address content adaptation according to terminal capability, we propose a real-time transcoding system that converts FGS bitstreams into Simple Profile bitstreams. The system comprises five modules, as shown in Figure 1(a).

1) Video Content Capture

The source video is captured from the image acquisition device and saved in a digital form such as the YUV or RGB format.

2) FGS Encoder and Bitstream Archive

Each video signal is encoded as FGS bitstreams and stored in mass storage. When a user issues a request, the server either sends the bitstreams directly to the terminal or passes them through the Transcoder when format conversion is necessary.

3) Real-time Transcoder:

The transcoding depends on the channel conditions, terminal capabilities, and video content features. Assume that the terminals support only the decoding of MPEG-4 Simple Profile bitstreams. After assessing the transmission conditions, the server asks the transcoder to convert the archived FGS bitstreams into Simple Profile bitstreams with the specified formats, sizes, and qualities in an adaptive manner. The real-time FGS-to-Simple transcoder [5] is illustrated in Figure 1(b).

The reference method for FGS-to-Simple transcoding adopts a cascaded architecture that connects an FGS decoder and a Simple Profile encoder. Our primary objective is to simplify this reference architecture and demonstrate the high-quality results of our FGS-to-Simple transcoder.

In the proposed architecture, the motion vectors within the FGS base layer bitstream are reused in the MPEG-4 Simple Profile encoder. Additionally, the transcoding is performed in the DCT domain, which yields a low-complexity transcoder.

4) Channel Monitor:

It accepts feedback information from the terminals and also estimates the channel characteristics, namely the round-trip time, packet loss ratio, bit error rate, and bandwidth. All available information is passed to the server for adapting the content delivery.

Figure 1. The application scenario of the proposed UMA multimedia delivery system that employs the archived FGS bitstreams. (a) Proposed architecture: Content Capture, FGS Encoder, [Base + Enh] Storage, FGS-to-Simple Transcoder, and Enh Layer Rate Reduction feed the Network, which serves Device 1 [FGS Profile] and Device 2 [Simple Profile]; terminal capabilities are sent to the server for format conversion, and network conditions are sent to the server for rate control. (b) Block diagram of the FGS-to-Simple transcoder in (a): the base layer and enhancement layer bitstreams pass through VLD, Q1^-1, IDCT, DCT, Q2, Q2^-1, VLC, and Buffer blocks, with reused motion vectors (MV), to produce the Simple Profile bitstream.

5) Terminals:

Prior to receiving the bitstream, the terminal exchanges its capabilities with the server. As shown in Figure 1(a), terminals with different decoding capabilities, including FGS and Simple Profile, are supported. The terminals then receive and reconstruct the requested video signals.

Thus in our framework, the source video is encoded and archived as FGS bitstreams, which can provide various QoS levels in the manner of SNR scalable video coding schemes [2]. With the FGS bitstreams saved in the FGS Bitstream Archive module, the proposed system can serve heterogeneous terminals through the Internet. Moreover, according to the capabilities of the Internet connection and the terminal devices, the Channel Monitor can adapt the resources delivered to each terminal.
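The DCT-domain path of the Real-time Transcoder (module 3) can be illustrated with a minimal sketch. It assumes a plain uniform quantizer with step size 2 × QP, which is a simplification and not the MPEG-4 quantizer, and it omits motion compensation and the drift-compensation loop of Figure 1(b). The function names `dequantize`, `requantize`, and `transcode_block` are hypothetical.

```python
def dequantize(levels, qp):
    """Reconstruct DCT coefficients from quantized levels (assumed step = 2 * qp)."""
    return [level * 2 * qp for level in levels]


def requantize(coeffs, qp):
    """Quantize DCT coefficients uniformly, rounding half away from zero."""
    step = 2 * qp
    out = []
    for c in coeffs:
        magnitude = int(abs(c) / step + 0.5)
        out.append(magnitude if c >= 0 else -magnitude)
    return out


def transcode_block(base_levels, enh_residual, qp_base, qp_out):
    """Combine base and enhancement data in the DCT domain, then requantize.

    base_levels:  quantized DCT levels decoded from the FGS base layer
    enh_residual: DCT-domain refinement decoded from the enhancement bitplanes
    qp_base:      quantization parameter of the archived base layer
    qp_out:       quantization parameter of the output single-layer stream
    """
    base_coeffs = dequantize(base_levels, qp_base)
    combined = [b + e for b, e in zip(base_coeffs, enh_residual)]
    return requantize(combined, qp_out)
```

Because every step operates on DCT coefficients, no inverse transform or new motion search is needed for this path, which is where the complexity saving over the cascaded decoder-encoder comes from.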

B. FGS Streaming on the Internet

The delivery of multimedia information to mobile devices over wireless channels and/or the Internet is a challenging problem because multimedia transport suffers from bandwidth fluctuation, random errors, burst errors, and packet losses [2]. It is even more challenging to simultaneously stream or multicast video over the Internet or wireless channels under the UMA framework. Compressed video information is lost due to congestion, channel errors, and transport jitter. The temporally predictive nature of most compression technologies causes the undesirable effect of error propagation.

To address broadcast and Internet multicast applications, we propose a novel technique named Stack RFGS (SRFGS) that improves the temporal prediction of RFGS [6]. SRFGS first simplifies the RFGS prediction architecture and then generalizes its prediction concept as follows: the information to be coded can be inter-predicted from the information of the previous time instance at the same layer. With this concept, the RFGS architecture can be extended to multiple layers, which form the stack architecture. While RFGS can be optimized at only one operating point, SRFGS can be optimized at several operating points to serve a much wider bandwidth range with superior performance. With the bitplane coding and leaky prediction used in RFGS, SRFGS retains its fine granularity and error robustness. SRFGS can also support temporal scalability by simply dropping some B-frames in the FGS server. An optimized MB-based alpha adaptation is proposed to further improve the coding efficiency. SRFGS has been proposed to the MPEG committee in [11] and has been ranked as one of the best in the Report on Call for Evidence on Scalable Video Coding [12].
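The temporal scalability obtained by dropping B-frames in the server can be sketched as follows. This is a minimal illustration rather than the server code: the frame-type strings and the function name `drop_b_frames` are assumptions, and the sketch presumes that B-frames are not used as prediction references, so that removing them leaves the remaining frames decodable.

```python
def drop_b_frames(frame_types, keep_every=0):
    """Return the indices of the frames to transmit.

    frame_types: sequence such as ["I", "B", "B", "P", ...]
    keep_every:  0 drops all B-frames; n > 0 keeps every n-th B-frame
    """
    kept = []
    b_seen = 0
    for index, ftype in enumerate(frame_types):
        if ftype == "B":
            b_seen += 1
            if keep_every == 0 or b_seen % keep_every != 0:
                continue  # skip this B-frame
        kept.append(index)
    return kept
```

For a sequence coded as I B B P B B P, dropping all B-frames keeps one frame in three, so the delivered frame rate falls to a third of the original.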

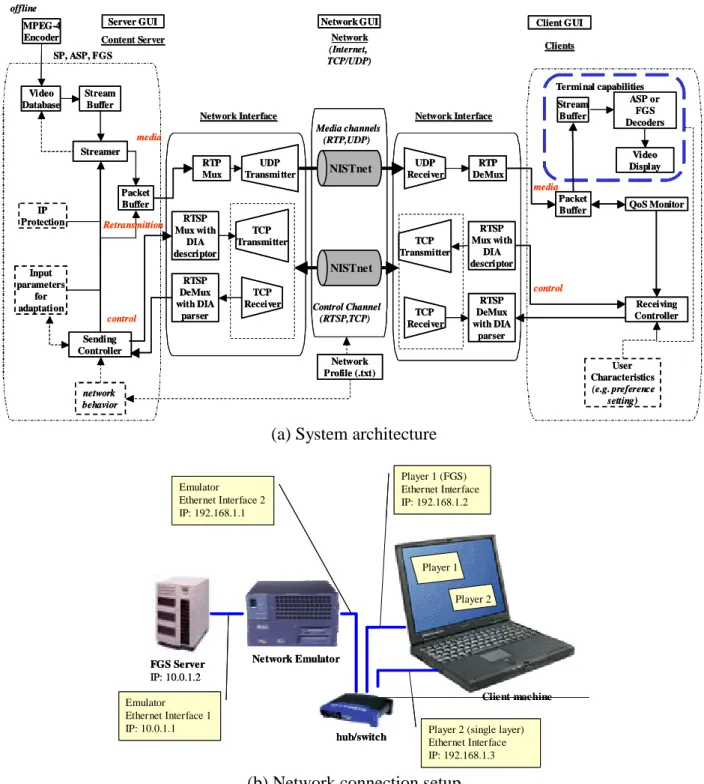

C. FGS-Based Video Streaming Test Bed for MPEG-21 UMA with Digital Item Adaptation

As shown in Figure 2, we are developing an FGS-based unicast video streaming test bed, which is now being considered by the MPEG-4/21 committee as a reference test bed [7], [8]. The proposed system supports the MPEG-21 DIA scheme, which leads to a stricter evaluation methodology that follows the common test conditions for scalable video coding specified by the MPEG committee. It provides easy control of media delivery with reproducible network conditions. To provide the best quality of service for each client, we will propose relevant rate control, error protection, and transmission approaches in the content server, network interface, and clients, respectively.

III. System Simulations

A. Efficient FGS-to-Simple Transcoding

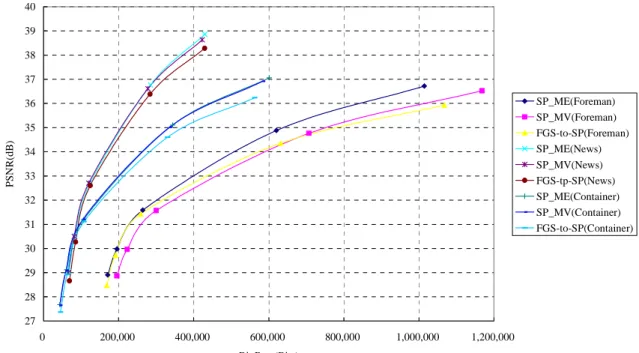

To demonstrate the performance of our proposed UMA multimedia delivery system in Figure 1(a), we build an FGS-to-Simple transcoder in Figure 1(b). In the proposed system, each sequence is pre-encoded and stored in the FGS Bitstream Archive.

Three methods are considered for comparison:

1. A Simple Profile encoder using the original video sequence (SP_ME).

2. A cascaded transcoder using the complete FGS enhancement bitstream and the motion vectors from the base layer bitstream (SP_MV).

3. An efficient transcoder using the complete FGS enhancement video and the motion vectors from the base layer bitstream (FGS-to-SP).

Figure 2. FGS-based video streaming test bed. (a) System architecture: a Content Server (video database, offline MPEG-4 SP/ASP/FGS encoder, IP protection, streamer, sending controller, packet buffer), a Network Interface on each side (RTP mux/demux over UDP for the media channels, RTSP mux/demux with DIA descriptor and parser over TCP for the control channel, retransmission), a NISTnet network emulator driven by a network profile (.txt), and Clients (receiving controller, QoS monitor, stream buffer, ASP or FGS decoders, video display), each with its own GUI. (b) Network connection setup: FGS Server (IP 10.0.1.2); Network Emulator with Ethernet Interface 1 (IP 10.0.1.1) and Ethernet Interface 2 (IP 192.168.1.1); hub/switch; Player 1 (FGS, IP 192.168.1.2) and Player 2 (single layer, IP 192.168.1.3) on the client machine.

The test video sequences, Foreman, News, and Container, are in CIF resolution and YUV format. The first frame is coded as an I-VOP and the others are coded as P-VOPs at 30 Hz. For the FGS encoding, the quantization step size (QP) used in the base layer is set to 10 for I-VOPs and 12 for P-VOPs. The MPEG-4 Simple Profile encoder employs constant quantization, where the set of QP values used is {5, 7, 14, 21, 28}. As shown in Figure 3, our transcoding scheme (FGS-to-SP) has negligible PSNR loss at low and medium bit rates and about 0.5 to 0.9 dB PSNR loss at high bit rates.
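For reference, luminance PSNR for 8-bit video is conventionally defined as PSNR = 10 log10(255² / MSE). The sketch below is a generic implementation of that formula and not the evaluation tool used in this project; the function name `psnr_y` is hypothetical.

```python
import math


def psnr_y(original, decoded, peak=255):
    """PSNR in dB between two equally sized sequences of luminance samples."""
    if len(original) != len(decoded) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((o - d) ** 2 for o, d in zip(original, decoded)) / len(original)
    if mse == 0:
        return math.inf  # identical frames
    return 10 * math.log10(peak ** 2 / mse)
```

On this scale, a loss of 0.5 to 0.9 dB corresponds to roughly a 12% to 23% increase in mean squared error.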

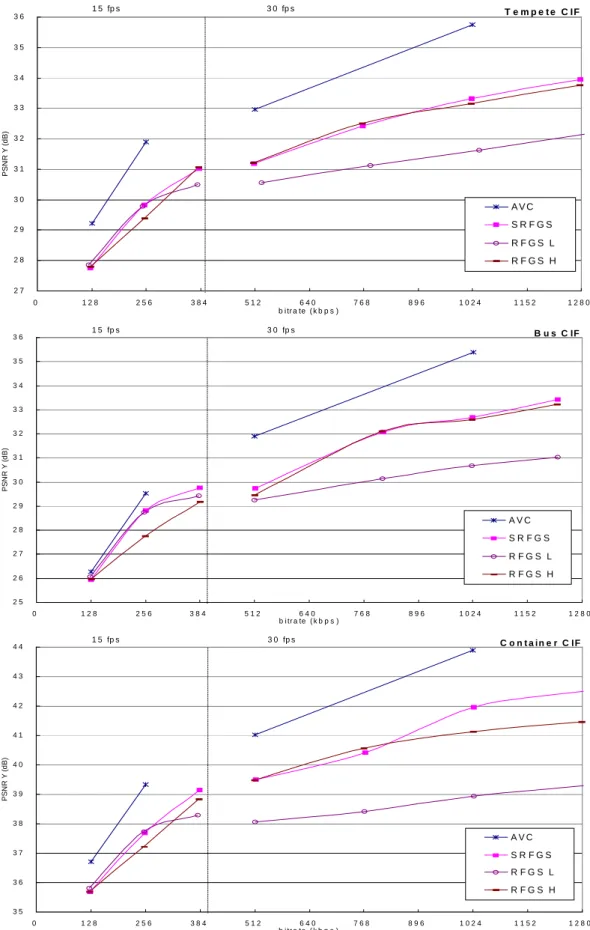

B. FGS Streaming on the Internet

The coding efficiency of Stack Robust FGS (SRFGS) is compared with those of our previous work, RFGS (Robust FGS), and MPEG-4 Part 10 Advanced Video Coding (AVC)/H.264. The test conditions are identical to the testing procedure specified by the MPEG Scalable Video Coding AHG [9]. The sequences Tempete, Bus, and Container, in CIF resolution and YUV format, are tested at four bitrate/frame-rate combinations: 128 kbps/15 fps, 256 kbps/15 fps, 512 kbps/30 fps, and 1024 kbps/30 fps.

For the AVC simulation, the JM42 test model is used with the following encoding parameters. Tools including RD-optimization and CABAC are enabled. Quarter-pixel motion vector accuracy is employed with a search range of 32 pixels. Four reference frames are used for motion compensation. Only the first frame of each test sequence is encoded as an I-VOP. The P-VOP period is 3 at both 15 fps and 30 fps. For RFGS and SRFGS, the base layer is the AVC test model, version JM42. The test conditions for evaluating SRFGS and RFGS are the same as those for AVC, except that the RD-optimization tool is disabled and only one reference frame is used for motion compensation. At 30 fps, the P-VOP period is 6 for the Tempete and Container sequences and 4 for the Bus sequence. At 15 fps, the P-VOP period is 2. The bitplane and entropy coding are identical to those in MPEG-4 FGS. In SRFGS, 2 enhancement layer loops are used for the Tempete and Bus sequences, and 3 enhancement layer loops are used for the Container sequence. A simple frame-level bit allocation with a truncation module is adopted in the streaming server to obtain the optimal quality under the given bandwidth budget.
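The frame-level bit allocation with truncation can be sketched as below. The sketch assumes the simplest possible policy, an equal bit budget per frame with the base layer always sent in full; the actual allocation used in the experiments is not detailed in this report, and the function name `truncate_enhancement` is hypothetical.

```python
def truncate_enhancement(bandwidth_kbps, fps, base_bits, enh_bits):
    """Return the number of enhancement-layer bits to send for each frame.

    bandwidth_kbps: channel budget in kilobits per second (1 kbit = 1000 bits)
    fps:            frame rate in frames per second
    base_bits:      base-layer size of each frame, in bits
    enh_bits:       full enhancement-layer size of each frame, in bits
    """
    budget_per_frame = bandwidth_kbps * 1000 // fps
    sent = []
    for base, enh in zip(base_bits, enh_bits):
        remaining = max(0, budget_per_frame - base)
        sent.append(min(enh, remaining))  # an FGS layer can be cut at any point
    return sent
```

At 512 kbps and 30 fps, for example, each frame receives a budget of 17066 bits, and whatever the base layer leaves unused is filled with the leading part of the enhancement layer.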

The simulation results are shown in Figure 4. Two RFGS results are shown: one with a lower reference bitrate (labeled RFGS_L) and the other with a higher reference bitrate (labeled RFGS_H). SRFGS performs similarly to RFGS_L at low bitrate and gains 1.7 to 3.0 dB at high bitrate, because SRFGS removes the temporal redundancy at high bitrate while RFGS_L does not. Compared with RFGS_H, SRFGS gains 0.4 to 1.0 dB at low bitrate, because RFGS_H suffers more drift error at low bitrate. At high bitrate, SRFGS gains 0.8 dB on a low-motion sequence such as Container and performs similarly on high-motion sequences such as Tempete and Bus, because in high-motion sequences the correlation between successive frames is lower and the improved prediction in SRFGS helps less. At medium bitrate, SRFGS loses at most 0.15 dB relative to RFGS_H, because the increased dynamic range and sign bits of each layer in SRFGS slightly degrade the coding efficiency. These results show that while RFGS can be optimized at only one operating point, SRFGS can be optimized at several operating points to serve a much wider bandwidth range with superior performance. Compared with AVC, SRFGS has a 0.4 to 1.5 dB loss at the base layer, because the MV in SRFGS is derived by considering not only the base layer but also the enhancement layer information. Further, the high-quality prediction image of the B-frames has not been completely received at this bitrate. There is a 0.7 to 2.0 dB loss at low bitrate and a 2.0 to 2.7 dB loss at high bitrate.

IV. Conclusion

We have developed an efficient FGS-to-Simple transcoder that adapts the bit rate and converts the format of the archived FGS bitstream for video streaming applications. For streaming video, we have proposed an FGS-based scheme, within which the FGS-to-Simple transcoder fulfils the need for format conversion. Based on the linearity of the DCT and the reuse of the motion vectors in the archived bitstream, an efficient FGS-to-Simple transcoder is derived from the cascaded one. The performance of the proposed transcoder is almost identical to that of the cascaded one, but with much less complexity. A prototype of the streaming system with rate-shaping transcoding has been developed and tested. The experimental results show that the streaming system with rate control achieves better channel utilization and provides better video quality.

Figure 3. The performance of transcoding with the luminance components of the three video sequences and using various sources of motion vectors and different enhancement information. (Plot of PSNR (dB) versus bit rate (bits) for SP_ME, SP_MV, and FGS-to-SP on the Foreman, News, and Container sequences.)

In addition, we have proposed a novel FGS coding technique named SRFGS. Based on RFGS, SRFGS generalizes its prediction concept and extends its architecture to multiple layers that form the stack architecture. In each layer, the information to be coded can be inter-predicted from the information of the previous time instance at the same layer. The stack concept allows SRFGS to be optimized at several operating points for various applications. With the bitplane coding and leaky prediction used in RFGS, SRFGS retains its fine granularity and error robustness. An optimized MB-based alpha adaptation is proposed to further improve the coding efficiency. The simulation results show that SRFGS has a 0.4 to 3.0 dB improvement over RFGS. Further investigation of the bit allocation among the layers for various video content may provide better coding efficiency.

Finally, we have joined the project to build MPEG-21 Multimedia Test Bed for Resource Delivery. With the test bed, various video streaming schemes over Internet could be evaluated with realistic network conditions.

Figure 4. PSNR versus bitrate comparison between SRFGS, RFGS and AVC coding schemes for the Y component. (Three panels, Tempete CIF, Bus CIF, and Container CIF, each plotting PSNR-Y (dB) against bitrate (kbps) for AVC, SRFGS, RFGS_L, and RFGS_H at 15 fps and 30 fps.)

V. References

[1] A. Vetro, "AHG report on describing terminal capabilities for UMA," MPEG01/m7275, Sydney, Australia, July 2001.

[2] W. Li, "Overview of fine granularity scalability in MPEG-4 video standard," IEEE Trans. Circuits Syst. Video Technol., pp. 301-317, Mar. 2001.

[3] J. Magalhaes and F. Pereira, "Describing user environments for UMA applications," MPEG01/m7312, Sydney, Australia, July 2001.

[4] F. Hartung and M. Kutter, "Multimedia watermarking techniques," Proc. IEEE, vol. 87, no. 7, pp. 1079-1107, July 1999.

[5] Y.-C. Lin, C.-N. Wang, Tihao Chiang, A. Vetro and H.F. Sun, "Efficient FGS to Single layer transcoding," ICCE 2002.

[6] H. C. Huang, C. N. Wang, T. Chiang, "A Robust Fine Granularity Scalability Using Trellis Based Predictive Leak," IEEE Trans. Circuits Syst. Video Technol., vol. 12, pp. 372-385, June 2002.

[7] MPEG Video Group, "Draft Testing Procedures for evidence on scalable coding technology," ISO/IEC JTC1/SC 29/WG 11 N4927, July 2002.

[8] MPEG Requirements Group, "Draft Applications and Requirements for Scalable Coding," ISO/IEC JTC1/SC 29/WG 11 N4984, July 2002.

[9] "Call for evidence on scalable video coding advances," ISO/IEC JTC1/SC29/WG11/N5559, March 2003.

[10] "Streaming video profile: Final Draft Amendment (FDAM 4)," ISO/IEC JTC1/SC29/WG11/N3904, Jan. 2001.

[11] H. C. Huang, W. H. Peng, C. N. Wang, T. Chiang, and H. M. Hang, "Stack Robust Fine Granularity Scalability: Response to Call for Evidence on Scalable Video Coding," ISO/IEC JTC1/SC29/WG11/M9767, July 2003.

[12] "Report on Call for Evidence on Scalable Video Coding (SVC) technology," ISO/IEC JTC1/SC29/WG11/N5701, July 2003.

[13] T. Rusert and M. Wien, "AVC Anchor Sequences for Call for Evidence on Scalable Video Coding Advances," ISO/IEC JTC1/SC29/WG11/M9725, July 2003.

[14] JVT reference software version 4.2,

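3. Self-Evaluation of Project Results

This project aims to develop the technologies needed to apply the multimedia framework on the Internet. The MPEG-21 multimedia framework is one of the topics currently being standardized at the MPEG meetings. It integrates several multimedia standards (MPEG-1, MPEG-2, MPEG-4, MPEG-7), network protocols, and intellectual property management and protection. With the support of the National Science Council, we were able to study these specifications ahead of industry and to develop key technologies within them. As shown in Part 4, Research and Development Results, the project has produced substantial results, including academic publications and patent applications, in line with the original objectives.

Professors 杭學鳴 (Hsueh-Ming Hang) and 王聖智, who collaborated on this project, attended the MPEG standards meetings with travel subsidies from industry cooperation projects, and held a public briefing two weeks after each meeting to give industry the latest information. Several proposals have been submitted, for example ISO/IEC JTC1/SC 29/WG 11 14496-2 M8049: A Robust Fine Granularity Scalability (RFGS) Using Predictive Leak, and ISO/IEC JTC1/SC29/WG11 M9767: Stack Robust Fine Granularity Scalability. Our main current work items at the MPEG meetings are MPEG-4 Part 7 Optimised Reference Software and MPEG-21 Part 12 Multimedia Test Bed for Resource Delivery. (Our technical development and activities at the MPEG meetings were also sponsored by the 李立台揚 Network Research Center (Lee Center) of National Chiao Tung University and by the multimedia standards resource-sharing project.)

In addition, a more direct and valuable contribution to domestic industry and academia is personnel training. Students become familiar with the forward-looking international multimedia standard MPEG-21 while still at school, and after graduation they enter industry and take part directly in developing new products, raising our country's capability in multimedia technology research, development, and integration.

Overall evaluation: This project has produced many results of academic and practical value and has actively participated in the international MPEG standards meetings, bringing domestic research results to the international stage. It has also trained personnel in multimedia technology research, development, and integration. The overall outcome is good.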

4. Research and Development Results

1. Journal papers (1)

FGS optimization:

a. H.-C. Huang, C. N. Wang, and Tihao Chiang, "A robust fine granularity scalability using trellis based predictive leak," IEEE Transactions on Circuits and Systems for Video Technology, Special Issue on Wireless Video, June 2002.

2. International conference papers (5)

FGS optimization:

a. Hsiang-Chun Huang, C.-N. Wang, and Tihao Chiang, "A robust fine granularity scalability using trellis based predictive leak," in Proceedings of ISCAS02, 2002.

b. Chung-Neng Wang et al., "FGS-based video streaming test bed for MPEG-21 universal multimedia access with digital item adaptation," in Proceedings of ISCAS2003, vol. 2, 2003, pp. 364-367.

c. Wen-Hsiao Peng, Chung-Neng Wang, Tihao Chiang, and Hsueh-Ming Hang, "Context-Based Binary Arithmetic Coding With Stochastic Bit Reshuffling For Advanced Fine Granularity Scalability," submitted to ISCAS2004, Oct. 2003.

d. Hsiang-Chun Huang and Tihao Chiang, "Stack Robust Fine Granularity Scalability," submitted to ISCAS2004, Oct. 2003.

Fast transcoding:

a. Y.-C. Lin, C.-N. Wang, Tihao Chiang, A. Vetro, and H.F. Sun, "Efficient FGS to Single Layer Transcoding," in Proceedings of ICCE02, 2002.

3. Domestic conference papers (2)

FGS optimization:

a. Hsiang-Chun Huang, C.-N. Wang, and Tihao Chiang, "MPEG-4 Streaming Video for Wireless Applications," in Proceedings of ICOM01 (Tainan, Taiwan), 2001.

b. Hsiang-Chun Huang, C.-N. Wang, and Tihao Chiang, "MPEG-4 Streaming Video Profile," in Proceedings of Lee Center's Workshop, 2001.

4. Patents (3)

FGS optimization:

a. H.-C. Huang, C.-N. Wang, Tihao Chiang, and H.-M. Hang, "Architecture and Method For Fine Granularity Scalable Video Coding," filed with the U.S. Patent Office (IAM10/229580), August 27, 2002.

b. H.-C. Huang, C.-N. Wang, Tihao Chiang, and H.-M. Hang, "可調整位元流大小的影像編解碼裝置" (Video coding and decoding apparatus with adjustable bitstream size), filed as R.O.C. patent (091134745), November 29, 2002.

Fast transcoding:

a. Y.-C. Lin, C.-N. Wang, Tihao Chiang, A. Vetro, and H.F. Sun, "Video Transcoding of Scalable Multi-Layer Videos to Single Layer Video," filed as U.S. patent, October 2002.

5. Master theses (2)

a. Yao-Chung Lin and Tihao Chiang, "一個漸進精細可調層次式到單層的快速位元流轉碼技術 (An Efficient FGS to Single Layer Transcoding)," Master Thesis, Dept. of Electronics Engineering and Institute of Electronics, 2003.

b. Zihao Liu, Tihao Chiang, and Yuang-Cheh Hsueh, "一個在行動通訊環境下基於 MPEG-7 與 JPEG2000 標準之內容擷取系統 (A Content Retrieval System Based on MPEG-7 Descriptors and JPEG2000 for Mobile Applications)," Master Thesis, Dept. of Computer and Information Science, 2003.

6. MPEG contributions (8)

FGS optimization:

a. H.-C. Huang, C.-N. Wang, and Tihao Chiang, "ISO/IEC JTC1/SC 29/WG 11 14496-2 M6890: Verification Result of PFGS," Jan. 2001 (Pisa).

b. H.-C. Huang, C.-N. Wang, and Tihao Chiang, "ISO/IEC JTC1/SC 29/WG 11 14496-2 M7339: Evidence for improving the existing fine granularity scalability tool," July 2001 (Sydney, Australia).

c. H.-C. Huang, C.-N. Wang, Tihao Chiang, and Hsueh-Ming Hang, "ISO/IEC JTC1/SC 29/WG 11 14496-2 M8049: A Robust Fine Granularity Scalability (RFGS) Using Predictive Leak," May 2002 (Fairfax, VA, USA).

d. H.-C. Huang, C.-N. Wang, Tihao Chiang, and Hsueh-Ming Hang, "ISO/IEC JTC1/SC 29/WG 11 14496-2 M8604: H.26L-based Robust Fine Granularity Scalability (RFGS)," July 2002.

e. C.-N. Wang, Chia-Yang Tsai, Han-Chung Lin, Hsiao-Chiang Chuang, Yao-Chung Lin, Jin-He Chen, Kin Lam Tong, Feng-Chen Chang, Chun-Jen Tsai, Tihao Chiang, Shuh-Ying Lee, and Hsueh-Ming Hang, "ISO/IEC JTC1/SC 29/WG 11 M8887: FGS-Based Video Streaming Test Bed for MPEG-21 Universal Multimedia Access with Digital Item Adaptation," Oct. 2002.

f. C.-N. Wang, Chia-Yang Tsai, Yao-Chung Lin, Han-Chung Lin, Hsiao-Chiang Chuang, Jin-He Chen, Kin Lam Tong, Feng-Chen Chang, Chun-Jen Tsai, Tihao Chiang, Shuh-Ying Lee, and Hsueh-Ming Hang, "ISO/IEC JTC1/SC 29/WG 11 M9182: FGS-Based Video Streaming Test Bed for Media Coding and Testing in Streaming Environments," Dec. 2002.

g. H.-C. Huang, Wen-Hsiao Peng, C.-N. Wang, T. Chiang, and Hsueh-Ming Hang, "ISO/IEC JTC1/SC 29/WG 11 M9767: Stack Robust Fine Granularity Scalability: Response to Call for Evidence on Scalable Video Coding," July 2003.

h. Sam S Tsai, Hsueh-Ming Hang, and Tihao Chiang, "ISO/IEC JTC1/SC 29/WG 11 M9756: Motion Information Scalability for MC-EZBC: Response to Call for Evidence on Scalable Video Coding," July 2003.

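Data Sheet of R&D Results Available for Promotion (1)

☐ Patentable ☑ Available for technology transfer Date: October 30, 2003

NSC-funded project
Project title: Applications of Multimedia Framework and Digital Video Watermarking on Internet (3/3)
Principal investigator: 蔣迪豪 (Tihao Chiang), Associate Professor, Department and Institute of Electronics Engineering, National Chiao Tung University
Project number: NSC-91-2219-E-009-043
Field: Telecommunications (national program)

Technology/creation name: RFGS

Inventors/creators: 黃項群 (Hsiang-Chun Huang), 王俊能 (Chung-Neng Wang), 蔣迪豪 (Tihao Chiang), 杭學鳴 (Hsueh-Ming Hang)

Technical description

Chinese: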

English: We propose a novel FGS coding technique, the Robust FGS (RFGS). RFGS is a flexible framework that incorporates the ideas of leaky prediction and partial prediction. Both techniques are used to provide fast error recovery when part of the bitstream is not available. RFGS provides tools to achieve a balance between coding efficiency, error robustness, and bandwidth adaptation. It covers several well-known techniques, such as MPEG-4 FGS, PFGS, and MC-FGS, as special cases. Because RFGS uses a high-quality reference, it can achieve improved coding efficiency. The adaptive selection of the number of bitplanes used for the reference frame allows three prediction techniques: intra, inter, and partial inter modes. The intra mode is used to remove the drift. The inter mode is used when the bandwidth is high and the packet loss rate is low, and the partial inter mode provides a balance between the intra and inter modes. The enhancement layer information used in the enhancement layer prediction loop is further scaled by a leak factor α, where 0 ≤ α ≤ 1, before it is added to the base layer image to form the high-quality reference image. This leak factor is also used to alleviate the error drift problem.

Our experimental results show that the RFGS framework can improve the coding efficiency by up to 2 dB over the MPEG-4 FGS scheme in terms of average PSNR. The error recovery capability of RFGS is verified by dropping the first P-picture of a GOV in the enhancement layer. The results also demonstrate the tradeoff between coding efficiency and error attenuation, which can be controlled by the value of α. Further optimization of the RFGS parameters is necessary to provide the best balance between coding efficiency and error resilience. The optimal bit allocation or truncation for each frame under a given average bitrate constraint is also an interesting topic for further study.
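The effect of the leak factor α on error drift can be illustrated numerically. The sketch below is a toy scalar model, not the RFGS codec: it assumes that an error introduced in the enhancement-layer part of the reference is multiplied by α each time it passes through the prediction loop, so that after n frames it has decayed to α^n of its initial size. The function name `drift_after` is hypothetical.

```python
def drift_after(initial_error, alpha, frames):
    """Error left in the high-quality reference after each of `frames` predictions."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    error = initial_error
    history = [error]
    for _ in range(frames):
        error *= alpha  # the enhancement contribution is scaled by the leak factor
        history.append(error)
    return history
```

In this model α = 0 removes the error after one frame at the cost of no enhancement-layer prediction, while α = 1 keeps the full prediction gain but never attenuates the error, which is the tradeoff between coding efficiency and error attenuation described above.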

Applicable industries and potential products

1. Surveillance

2. Wireless multimedia communication

3. Video media service

4. Content providers

5. MPEG video related software encoder

6. Wireless multimedia communication

7. Video on demand

Technical features

The Robust FGS (RFGS) is a flexible framework that incorporates the ideas of leaky prediction and partial prediction. Both techniques are used to provide fast error recovery when part of the bitstream is not available. The RFGS provides tools to achieve a balance between coding efficiency, error robustness and bandwidth adaptation.

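Value for promotion and application

Notes:

1. Please complete each R&D result in duplicate: one copy is submitted to the Council together with the final report, and one copy is sent to your institution's R&D promotion unit (e.g., the technology transfer center).

2. If no patent application has yet been filed for this R&D result, do not disclose the main patentable content.

3. If this form is insufficient, please photocopy it for additional use.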

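Data Sheet of R&D Results Available for Promotion (2)

☑ Patentable ☑ Available for technology transfer Date: October 30, 2003

NSC-funded project
Project title: Applications of Multimedia Framework and Digital Video Watermarking on Internet (3/3)
Principal investigator: 蔣迪豪 (Tihao Chiang), Associate Professor, Department and Institute of Electronics Engineering, National Chiao Tung University
Project number: NSC-91-2219-E-009-043
Field: Telecommunications (national program)

Technology/creation name: Stack RFGS

Inventors/creators: 黃項群 (Hsiang-Chun Huang), 蔣迪豪 (Tihao Chiang)

Technical description

Chinese: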

English: The delivery of multimedia information to mobile devices over wireless channels and/or the Internet is a challenging problem because multimedia transport suffers from bandwidth fluctuation, random errors, burst errors, and packet losses. It is even more challenging to simultaneously stream or multicast video over the Internet or wireless channels under the UMA framework. Compressed video information is lost due to congestion, channel errors, and transport jitter. The temporally predictive nature of most compression technologies causes the undesirable effect of error propagation.

To address broadcast and Internet multicast applications, we propose a novel technique named Stack RFGS (SRFGS) that improves the temporal prediction of RFGS. SRFGS first simplifies the RFGS prediction architecture and then generalizes its prediction concept as follows: the information to be coded can be inter-predicted from the information of the previous time instance at the same layer. With this concept, the RFGS architecture can be extended to multiple layers, which form the stack architecture. While RFGS can be optimized at only one operating point, SRFGS can be optimized at several operating points to serve a much wider bandwidth range with superior performance. With the bitplane coding and leaky prediction used in RFGS, SRFGS retains its fine granularity and error robustness. SRFGS can also support temporal scalability by simply dropping some B-frames in the FGS server. An optimized MB-based alpha adaptation is proposed to further improve the coding efficiency. SRFGS has been proposed to the MPEG committee and has been ranked as one of the best in the Report on Call for Evidence on Scalable Video Coding.

Applicable industries and potential products

Same as RFGS.

Technical features

Based on RFGS, SRFGS generalizes its prediction concept and extends its architecture to multiple layers that form the stack architecture. In each layer, the information to be coded can be inter-predicted from the information of the previous time instance at the same layer. The stack concept allows SRFGS to be optimized at several operating points for various applications. With the bitplane coding and leaky prediction used in RFGS, SRFGS retains its fine granularity and error robustness. An optimized MB-based alpha adaptation is proposed to further improve the coding efficiency. The simulation results show that SRFGS has a 0.4 to 3.0 dB improvement over RFGS. Further investigation of the bit allocation among the layers for various video content may provide better coding efficiency.

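Value for promotion and application

Same as RFGS.

Data Sheet of R&D Results Available for Promotion (3)

☐ Patentable ☑ Available for technology transfer Date: October 30, 2003

NSC-funded project
Project title: Applications of Multimedia Framework and Digital Video Watermarking on Internet (3/3)
Principal investigator: 蔣迪豪 (Tihao Chiang), Associate Professor, Department and Institute of Electronics Engineering, National Chiao Tung University
Project number: NSC-91-2219-E-009-043
Field: Telecommunications (national program)

Technology/creation name: Fast FGS-to-Simple Transcoder

Inventors/creators: 林耀中 (Yao-Chung Lin), 王俊能 (Chung-Neng Wang), 蔣迪豪 (Tihao Chiang), A. Vetro, H.F. Sun

Technical description

Chinese:

English: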

A method transcodes a compressed multi-layer video bitstream that includes a base layer bitstream and an enhancement layer bitstream. The base and enhancement layers are first partially decoded, and then the partially decoded signals are combined with a motion compensated signal yielding a combined signal.

The combined signal is quantized into an output signal according to a quantization parameter, and the output signal is variable length encoded as a single layer bitstream. In a preprocessing step, the enhancement layer can be truncated according to rate control constraint, and the same constraints can also be used during the quantization.

Applicable industries and potential products

1. Content providers

2. Video streaming

3. Video broadcasting

4. Video media service

5. Content providers

6. MPEG video related software encoder

Technical features

1. Fast transcoding

2. Retained quality using a rate-shaping method

3. Video delivery with multiple bitstream formats