The implementation and evaluation of a mobile self- and peer-assessment system

Chao-hsiu Chen

*Institute of Education, National Chiao Tung University, Taiwan, 1001 Ta Hsueh Road, Hsinchu 30010, Taiwan

Article info

Article history:

Received 17 December 2008

Received in revised form 6 August 2009

Accepted 13 January 2010

Keywords:

Evaluation of CAL systems

Improving classroom teaching

Teaching/learning strategies

Abstract

Recently, more and more researchers have been exploring uses of mobile technology that support new instructional strategies. Based on research findings related to peer and self assessment, this study developed a Mobile Assessment Participation System (MAPS) using Personal Digital Assistants (PDAs) as the platform. In addition, the study proposes an implementation model of the MAPS that should facilitate the effectiveness of self- and peer-assessment in classrooms. The researcher argues that teachers and students can benefit from MAPS in various regards including more flexible assessment arrangement, more efficient use of time, and more opportunities for student reflection on learning and assessment. Thirty-seven students taking teacher-education courses with the researcher participated in this study, and these students employed the MAPS to conduct two-round assessment activities that would help these students assess both their own and one another’s final projects. Both the students’ valid responses in a survey herein and scores obtained from the assessment activities confirmed the benefits of the MAPS and its implementation model. Yet, the students voiced such concerns as the objectivity of peer-assessment and the difficulty of providing constructive feedback, and the correlation analysis indicated a lack of consistency between teacher-grading and student-grading.

© 2010 Elsevier Ltd. All rights reserved.

1. Introduction

1.1. Self- and peer-assessment

High-quality assessment can facilitate learning, but poor-quality assessment can discourage high-quality learning or even be harmful to students (Novak, Mintzes, & Wandersee, 1999). Except for adherents to traditional norm-referenced assessment, most theorists in the field currently emphasize the importance of finding weaknesses and strengths in student knowledge. Assessment should be ongoing, so that teachers can keep providing useful feedback to students and so that students can learn how to monitor their own performance (Bransford, Brown, & Cocking, 2000; Perkins & Unger, 1999; Shepard, 2001). Researchers have been encouraging teachers to employ self- and peer-assessment that helps students reflect on their own learning and enter into classroom-based collaborative learning. For example, Orsmond, Merry, and Reiling (2000) asked first-year undergraduate biology students to conduct self- and peer-assessment on a poster assignment, and argued that self- and peer-assessment strengthened the association between feedback and learning improvement. Before conducting self- and peer-assessment, teachers and students should engage in meaningful discussion and communication to select explicit criteria about how student performance and student work will be assessed. Thus, students will pay more attention to the processes of undertaking learning activities rather than focus solely on final products or grades. And thus, self- and peer-assessment stresses the importance of understanding student progress at different phases of learning.

The use of peer assessment can be very flexible. Peer assessment can help in assessing the performance of individual students as well as of groups, and can help in formative evaluation as well as summative evaluation. Students can assess their group members or other groups’ members (McLuckie & Topping, 2004). Although many students have less expertise and experience in assessment than teachers have, student assessment need not always be less effective than teacher assessment. Magin (2001) employed statistical techniques to compare peer-assessment results with single-teacher assessment results gathered from two courses, Introductory Clinical & Behavioural Studies and Science & Technology Studies, at a university in the UK. Magin found that the single-teacher assessment and the average of two or three student assessments reached similar levels of reliability. Hence, appropriately implemented peer assessment can attain sufficient reliability, and students can thereby receive more information and feedback to improve their performance than otherwise would be the case. Yet, Gibbs (2006) argued that over-emphasizing the reliability of student marking misses the point and dilutes the benefit of self- and peer-assessment wherein students engage in learning by internalizing academic standards and by making judgments about their own and peer performance in relation to these standards.

Computers & Education

0360-1315/$ - see front matter © 2010 Elsevier Ltd. All rights reserved. doi:10.1016/j.compedu.2010.01.008

* Tel.: +886 3 5712121x58056; fax: +886 3 5738083. E-mail address: chaohsiuchen@mail.nctu.edu.tw

Regarding how to effectively involve students in assessment processes, Bloxham and West (2004), in their study on sports-studies students’ assessment of peer work, suggested that teachers use strategies such as discussing assessment criteria with students, providing scoring rubrics, encouraging feedback-giving, and evaluating the appropriateness of student assessment. Through this kind of systematic participation, students can better understand how their performance and work will be assessed and make revisions based on the feedback. There are various studies on self- and peer-assessment in higher education, and they involve participants from different disciplines including education, health, business, computer science, and the humanities (e.g., Ballantyne, Hughes, & Mylonas, 2002; Falchikov & Goldfinch, 2000; Magin, 2001; Price & O’Donovan, 2006; Prins, Sluijsmans, Kirschner, & Strijbos, 2005; Purchase, 2000). Their findings indicate that explicitly explaining and defining assessment criteria should be a top priority for teachers to conduct meaningful self or peer assessment; therefore, teachers can deliberately involve students in the formation of assessment criteria and scoring rubrics. Co-deciding assessment criteria through meaningful dialogues and feedback-giving can enhance assessment effectiveness and facilitate collaborative learning (Price & O’Donovan, 2006; Prins et al., 2005).

Peer assessment is beneficial for student learning in various ways. It improves students’ ability to relate instructional objectives to assessment activities, to understand assessment criteria and processes, to identify the strengths and weaknesses of their own performance, to improve their understanding of and their confidence in the subject matter at hand, and to improve their future performance (Ballantyne et al., 2002). Nevertheless, there are cautions that teachers should take while conducting peer assessment. For instance, Ashworth, Bannister, and Thorne (1997) found that assessment methods, when conducted in contexts more informal than the contexts of controlled and supervised examinations, created situations in which university students had greater opportunities to plagiarize and to commit other acts of cheating. Ballantyne et al. also reviewed studies addressing such problems of peer assessment as the limited experience, expertise, and confidence of teachers and students relative to this assessment method. Many students doubt whether they themselves have the ability to provide constructive feedback and appropriate marks. Most students consider assessment to be the primary responsibility of teachers, and most teachers and students tend to value outcomes of expert assessment over the outcomes of peer assessment. Many students feel uncomfortable with criticizing others’ performance, and students usually feel peer assessment to be very demanding and time-consuming (Ballantyne et al., 2002; Davies, 2000; Lin, Liu, & Yuan, 2001; Miller, 2003; Topping, Smith, Swanson, & Elliot, 2000; Tsai, Lin, & Yuan, 2002).

Appropriate technology use in assessment processes could alleviate some of the aforementioned shortcomings. For example, Davies (2000) developed a computerized peer-assessment system and implemented this system in an undergraduate module within a school of computing. The students made marks and comments on peers’ reports; some comments even concerned the student assessors’ detection of plagiarism by means of web searches for the references listed in the reports. Nicol and Milligan (2006) stressed that applying technology to the assessment process should focus on how the application can support the assessment process to promote dialogic feedback and to enhance students’ abilities of conceptualizing performance standards and being self-regulated learners. These emphases are the goals the present study strives to achieve. The next section will further discuss how technology may enhance the effectiveness and efficiency of self- and peer-assessment.

1.2. Technology-supported self- and peer-assessment

Although peer assessment can save teachers a good deal of time that they would otherwise spend on grading student work, teachers have to devote considerable time and effort to organizing and managing assessment processes. Integrating technology into assessment processes may resolve potential obstacles to implementing self- and peer-assessment (Topping, 1998). Lin et al. (2001) developed an Internet-based peer-assessment system, and they described several advantages of this system after using it in a computer-science course in Taiwan. For example, to maintain anonymity during assessment processes, teachers may spend much time on encoding. Well-designed computer programs enable students to assess each other anonymously and demand much less time and effort from teachers, and thus, students are more willing to criticize others’ performance. Computer networks enable students to conduct assessment activities anytime and anywhere, and teachers can flexibly log in online to check assessment progress. Also, online assessment can eliminate the time and the cost that teachers and students would otherwise invest in printing out student work. Sung, Chang, Chiou, and Hou (2005) highlighted a web-based self- and peer-assessment system that can do away with conditions restricting various assessment activities to the classroom. The researchers proposed a progressively focused assessment procedure supported by the web-based system, and Taiwanese ninth-grader participants improved their assessment objectivity and the quality of their webpage-design assignments.

Asking each student assessor to evaluate only one student’s performance means that students need less time to complete the assessment process than would be the case if each student assessor had to evaluate the performance of a group of peers. However, every student consequently receives less feedback from peers. Scenarios in which teachers ask their students to evaluate more and more work of peers translate into a situation where each student receives more and more feedback than he or she would receive if only the teachers were evaluating the students’ work. However, asking students to evaluate significant quantities of peer-produced work would take up a significant amount of time and might negatively influence the efficiency and the effectiveness of peer assessment (Sung et al., 2005). Hence, deciding the number of “assessed students” for which each “assessing student” should be responsible is a crucial task. Sung, Lin, Lee, and Chang (2003) and Sung et al. (2005) found that if the students conducted peer assessment several times, the difference between students’ assessment outcomes and the teacher’s assessment outcomes would gradually decrease. This finding indicates that asking students to assess peer performance iteratively could give them a better chance to differentiate superior work from inferior work and to grasp assessment criteria, so that the students would become more competent assessors. Moreover, through giving and receiving feedback in self- and peer-assessment, the students in the aforementioned study enhanced their ability of reflection and improved their work. The web-based assessment system enabled teachers and students to assess and revise student work multiple times with much less time spent than would characterize situations without the technology.

Tsai et al. (2002) developed a web-based assessment system in which Taiwanese pre-service students conduct three cycles of peer assessment and revision of a science-activity design project. The researchers found that those students who gave detailed and constructive comments to their peers benefited more from the assessment processes and had better ideas about how to improve their own work than was the case for other students. The researchers stated that positive effects of peer assessment might not emerge right after assessment activities and that the results of peer assessment might not be an appropriate indicator of student performance. Furthermore, some students reported that they felt such an assessment scheme to be time-consuming and that they would be unwilling to spend extra time assessing others’ work after class. Most students were uncomfortable with criticizing others’ performance in spite of the secure anonymity, and many students provided vague and irrelevant feedback, which was useless for recipients of feedback seeking to improve their performance. Some students even purposely gave peers unjustifiably low scores so that the scorers, themselves, could possess advantageous positions. Tsai et al. suggest that teachers try to ensure that (1) students engage in peer assessment, (2) the teacher and students have an open and explicit discussion about assessment criteria in advance, and (3) the teacher continually monitor assessment processes to prevent students’ inappropriate grading of other students.

Pownell and Bailey (2002) differentiated four main phases of educational computing, and they envisioned the latest trend in educational computing: anyone can access information and communicate with others anytime and anywhere via small-size computers and wireless networks. Because of the characteristics of mobile technology such as ubiquity, smaller size, comparative affordability, and the prevalence of wireless networks, more and more researchers have been exploring supportive application of such handheld computers as mobile phones, laptop computers, PDAs, and graphic calculators to new educational strategies. Arising from these endeavors to conduct mobile-learning studies is the critical issue of how to use handheld computers in innovative ways that enhance assessment (Penuel, Lynn, & Berger, 2007; Penuel & Yarnall, 2005; Shin, Norris, & Soloway, 2007). For instance, students can flexibly conduct project-based learning and self assessment inside and outside classrooms via PDAs or mobile phones; teachers and students can record teaching and learning processes for portfolio assessment; teachers can receive student feedback and can, thus, monitor student understanding through an Interactive Response System (IRS); and students can key in their answers anonymously via handheld remotes and can compare their thoughts with peers’ (Davis, 2003; Draper & Brown, 2004; Penuel et al., 2007; Smodal & Gregory, 2003; Vahey & Crawford, 2002; Wang et al., 2004).

However, not much research has reported findings about how to use mobile technology for self- and peer-assessment, and this issue merits further investigation. Hence, the current study presents a novel self- and peer-assessment system using PDAs as the system platform and proposes an implementation model of this system. In addition, 37 pre-service teachers participated in this study to assess their lesson-plan projects with both the developed system and the proposed implementation model. The researcher who conducted and authored this study collected and analyzed both the students’ scores corresponding to the lesson-plan projects and the students’ responses to the assessment activities.

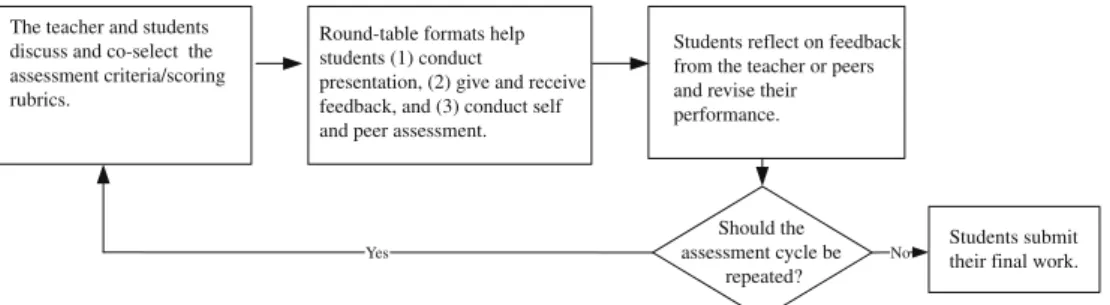

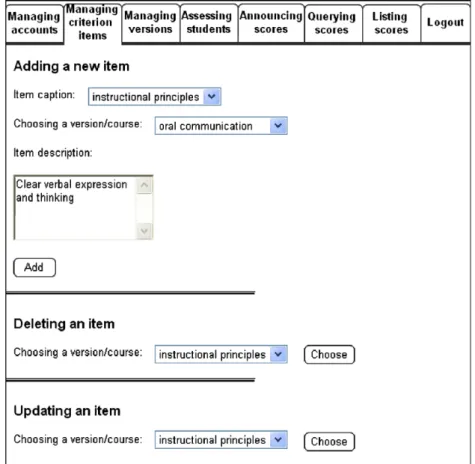

2. The MAPS and its implementation model

This study explored the potential of applying mobile technology to self- and peer-assessment and created a web-based Mobile Assessment Participation System (MAPS) using the PHP programming language and MySQL for system development and database management. The developed MAPS can be used for self or peer assessment, individual- or group-performance assessment, and synchronous or asynchronous assessment. This study proposes a MAPS-implementation model (Fig. 1) for in-class use of the MAPS via PDAs. In this model, the MAPS serves to facilitate assessments of student performance, student projects, or student products. To make assessment criteria explicit, the teacher and the students first discuss and co-select assessment criteria or scoring rubrics so that students can understand in advance how their performance, projects, or products will be assessed. After the discussion, the teacher enters the criteria into the MAPS (Fig. 2) and provides students with detailed scoring rubrics to which the students can refer while working on projects or assignments and while conducting in-class assessments.

As for the assessment activity in the classroom, students divide up into different sessions and, accordingly, take turns presenting their work in a round-table format. Each student carries a PDA with the MAPS. During each presentation session, all presenters sit at tables scattered around the classroom, and those students who are not presenters freely select a table and, there, listen to the presentation, interact with the presenter, raise questions, and provide feedback. After the presentation, students assess their own presentation or anonymously assess the presenter to whom they listened via PDAs (Fig. 3a). During the presentations and the assessments, the teacher may monitor their progress or walk around to participate in the assessment activity.

It is important to try to prevent the possible negative effect that those students who receive low scores from peers become deliberately and unjustifiably harsh assessors. The following steps can help in this matter. Only after all students finish the presentation-assessment project does the teacher announce the assessment results. For every assessment item, individual students receive their own self-assessment scores and the average scores given by their peer assessors so that students can better evaluate their own performance (Fig. 3b). Afterward, the teacher encourages all students to undertake two tasks: (1) reflect on the difference between scores of their own self-assessment and average scores given by peers and (2) revise their own performance according to the feedback received from the presentation-assessment project. Considering factors such as students’ presentation performance and available time for further assessment, the teacher decides whether to repeat the assessment cycle or to ask students to submit their final work directly.
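The score report described above (each student's own self-assessment score shown next to the average of the peer scores, item by item) can be illustrated with a minimal Python sketch. The MAPS itself was built with PHP and MySQL and its code is not reproduced in this article, so the record layout `(assessor, presenter, criterion, score)`, the function name `summarize_scores`, and the sample data are hypothetical and serve only to make the aggregation step concrete.

```python
from collections import defaultdict
from statistics import mean


def summarize_scores(records, student):
    """Return {criterion: (self_score, peer_average)} for one presenter.

    `records` is an iterable of (assessor, presenter, criterion, score)
    tuples. This layout is an assumption, not the MAPS database schema.
    """
    self_scores = {}
    peer_scores = defaultdict(list)
    for assessor, presenter, criterion, score in records:
        if presenter != student:
            continue  # the record concerns a different presenter
        if assessor == student:
            self_scores[criterion] = score  # self-assessment
        else:
            peer_scores[criterion].append(score)  # peer assessment

    summary = {}
    for criterion in sorted(set(self_scores) | set(peer_scores)):
        peers = peer_scores.get(criterion)
        peer_average = round(mean(peers), 2) if peers else None
        summary[criterion] = (self_scores.get(criterion), peer_average)
    return summary


# Hypothetical sample data: one self-assessment and two peer assessments.
records = [
    ("amy", "amy", "Oral communication", 7),
    ("ben", "amy", "Oral communication", 8),
    ("cat", "amy", "Oral communication", 6),
    ("amy", "ben", "Oral communication", 9),
]
report = summarize_scores(records, "amy")
```

Reporting only the peer average, rather than individual peer scores, matches the anonymity of the procedure: a student sees how the group judged each item but not who gave which score.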

[Fig. 1. The MAPS-implementation model (flowchart): (1) the teacher and students discuss and co-select the assessment criteria/scoring rubrics; (2) round-table formats help students conduct presentations, give and receive feedback, and conduct self and peer assessment; (3) students reflect on feedback from the teacher or peers and revise their performance; (4) decision: should the assessment cycle be repeated? Yes: the cycle is repeated. No: students submit their final work.]

The researcher who conducted and authored the current study argues that, with the assistance of mobile technology, this MAPS-implementation model has the following advantages over asynchronous online assessment or traditional classroom-presentation assessment:

(1) Students can conduct self- and peer-assessment in-class without concerns about Internet connectivity and equipment accessibility at home. They need not spend extra time browsing others’ work, giving feedback, and grading self and peer performance after class. However, because the MAPS is web-based, teachers can ask students to asynchronously interact with each other or to undertake online assessment if advisable.

(2) The round-table format of the assessment activity enables teachers to adjust the number of presenters in each session and the time allocated for presentation according to class size and to the availability of time for assessment.

(3) Students who are not presenters can choose a presentation in which they are interested. Rather than require student assessors to sit in rows to listen to all student presenters’ presentations, the round-table format encourages assessors to interact with presenters directly and instantly. However, to avoid privileging certain popular students, the teacher should encourage students not to choose a presentation based on the presenter’s popularity.

Fig. 2. Assessment-criterion input and management (teachers’ version).

(4) Students have more opportunities to be familiar with the assessment criteria and to continuously reflect on their own performance and learning during the self- and peer-assessment processes. Moreover, the teacher can decide whether to repeat the assessment cycle on the basis of factors such as level of students’ assessment competence, level of students’ metacognitive skills, and the availability of class time for assessment.

3. Methods

To verify the applicability of the MAPS and its implementation model, the researcher obtained consent from 37 students to participate in this study. The students were from two teacher-education courses offered by the researcher in two successive semesters. There were 14 undergraduate students and 23 graduate students. Of the 37 students, 12 students were male. Twenty-five students had started their first year in the teacher-education program. Each student was required to finish a final project for which he or she would create a lesson plan, and all the students followed the same protocol to undertake the self- and peer-assessment activities. Two weeks before the final presentation, the researcher announced the rubrics for assessing the final projects. Table 1 lists the rubrics that the researcher explained to and discussed with the students to ensure their understanding of and agreement to all assessment items.

None of the participants had experience in using PDAs, and they spent about 15 min learning how to operate the MAPS before the assessment activity. During the assessment processes, except for three students who encountered difficulty in connecting to the Internet, no student reported difficulties in the MAPS operation. The researcher resolved the problem of Internet connection by instantly giving the students other available PDAs. On the basis of the assessment model described in the previous section, the researcher divided all students into different presentation sessions, and there were five or six presenters sitting at the presentation tables at each session. Those students who were not presenters freely chose a table where they would listen to the presentation and interact with the presenter. After a given 10-min presentation, the listeners would assess, via PDAs, each presenter to whom they had just listened and then would switch to other tables. Each student presented to two audience groups, and the students assessed their own performance (i.e., they self-assessed) after the presentation. After the completion of all the student presentations, the researcher provided each student with his or her own self-assessment scores and his or her own average scores of peer-grading on all the assessment items.
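One way to obtain sessions of five or six presenters from a class of 37 is sketched below in Python. The article does not report how the sessions were actually formed or how many there were, so the balancing rule, the function name `split_into_sessions`, and the student labels are assumptions made for illustration only.

```python
import math


def split_into_sessions(students, max_presenters=6):
    """Split a class list into presentation sessions of near-equal size.

    Uses the smallest number of sessions that keeps every session at or
    below `max_presenters`, then spreads students as evenly as possible.
    This rule is an assumption; the article does not describe one.
    """
    n = len(students)
    if n == 0:
        return []
    n_sessions = math.ceil(n / max_presenters)
    base, extra = divmod(n, n_sessions)
    sessions, start = [], 0
    for i in range(n_sessions):
        size = base + (1 if i < extra else 0)  # first `extra` sessions get one more
        sessions.append(students[start:start + size])
        start += size
    return sessions


# Hypothetical labels for the 37 participants.
students = [f"S{i:02d}" for i in range(1, 38)]
sessions = split_into_sessions(students)
sizes = [len(session) for session in sessions]
```

Under this rule, 37 students yield sessions that contain only five or six presenters each, which is consistent with the session sizes reported above.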

The researcher encouraged the students to share their opinions with one another. Moreover, the researcher explicitly identified to the students three important points: (1) the twofold purpose of the assessment processes (peer–peer interaction and reflection on others’ performance as well as on one’s own performance), (2) the main purpose of the scores (assistance in self-evaluating the strengths and the weaknesses of one’s own performance), and (3) the scores’ limited function in the course (the scores’ non-influence on final projects’ grades). After the first assessment activity, the students revised their work and, the following week, conducted a second-round assessment. After the second assessment activity, the students revised their work again and then submitted their final projects. The students anonymously filled out questionnaires including close- and open-ended questions to report how they felt about the MAPS and the assessment processes. Thirty-four students provided valid responses in the questionnaires, and the scores of 30 students were valid for statistical analysis.

4. Results

4.1. Students’ opinions about the assessment activity

After using the MAPS to conduct the self- and peer-assessment, the students filled out a questionnaire to report their attitudes toward the MAPS and its application. Table 2 lists the percentages of the students’ agreement levels on the close-ended questions. The students’ responses to the first four statements indicate that most students approved of the efficiency and the convenience of the MAPS and the benefits of its implementation model. The students’ answers to the open-ended questions suggest that MAPS-based student-performance assessments are more portable, more time-efficient, more conducive to peer–peer interaction, and more conducive to focused reflection than are other types of assessments. These advantages are further explained as follows:

(1) Portability and time-efficiency: To grade presenters, the students could easily carry PDAs around, obviating the need for handwriting. Students’ responses in these regards include “Owing to its [the PDAs’] portability, it’s possible to evaluate the presentation right at that moment so the assessment may be more accurate,” “It feels great to carry a machine around to grade people without having to write,” “We can get the scores right away,” and “With the PDA, I feel more comfortable with grading people anonymously; people might stare at my handwriting if we used paper-and-pencil.”

Table 1

Rubrics for assessing the final project.

| Criterion | 1–4 Points | 5–7 Points | 8–10 Points |
| --- | --- | --- | --- |
| Oral communication | Is unclear, confused, and pointless | Is clear and straightforward but short of highlights | Is clear and smooth, and main points are logically stressed |
| Ways of presentation | Are boring, disorganized, or unappealing | Are acceptable and able to convey some points of the design | Are excellent and able to inspire the audience to reflect on the design |
| Instructional sequence | Is arranged randomly without consideration of various factors | Is arranged on the basis of such concerns as availability of time and media | Is appropriately and systematically arranged on the basis of various fully considered factors |
| Instructional strategies | Are employed simply for fun or for fitting in time slots | Are employed mainly for covering instructional content | Are employed appropriately and consistently with instructional content so that students are highly motivated and engaged in higher-order thinking |
| Assessment | Is ignored or inconsistent with instructional objectives and activities | Is somewhat consistent with instructional objectives but focused on ranking | Is seamlessly consistent with instructional objectives and activities, and the teacher and students can obtain abundant information about learning outcomes |

(2) More peer–peer interaction: The students could freely choose a presentation to listen to and could easily discuss matters with the presenters. A student wrote, “I could get more ideas and inspiration from others [than from the instructor] because they seemed to understand me better than the instructor. There was more interaction. I could also receive feedback from those classmates whose majors were different from mine.” Another response was, “This assessment method allowed us to carefully listen to the lesson plans that we were interested in. It was very different from the traditional whole-class presentation. This method made me dare to raise questions.”

(3) Focused reflection: The students were exposed to multiple perspectives, and could compare and reflect on various performances and opinions. The responses include “I had a chance to compare others’ opinions with mine, and I had to compare my performance with others to make the appropriate assessment” and “I watched others’ presentations and then revised and improved my project.” One student commented, “During the assessment processes, we critiqued and learned from others. To improve their own work, the assessors could learn from the presenters’ ideas. People being assessed could also receive instant feedback to improve their own work. Because we had to assess others, we listened carefully to others’ presentations and we were highly motivated.”

As Table 2 shows, most students reported that they would fairly assess peers. Only 2.94% of the students agreed with the statement that they would worry about receiving unfair low scores from peers. None of the students agreed with the statement that they indeed got biased low scores, but 64.71% of the students chose “neutral” on this statement. Apparently, most students believed that they would make fair judgments, but at the same time, nearly two-thirds of the students were not totally convinced of the fairness of peer grading. Privately receiving their own scores without knowing others’ scores could lead students to issue relatively conservative responses that would function, in a sense, as preemptive retaliation for anticipated (but not yet confirmed) conservative responses from peers. About one-third of the students considered the instructor’s comments and grading more professional. By answering the open-ended questions, the students also addressed their own concerns. Three students reported that they doubted the objectivity of peer assessment, and five students mentioned the difficulty of providing constructive feedback. These five students reported that their classmates, having diverse majors, might require knowledge different from that needed to assess others’ presentations satisfactorily. A student expressed this possible problem:

Very few classmates gave me suggestions and, mostly, they listened to me quietly. I didn’t know how to revise my lesson plan. Similarly, I could only give presenters some simple suggestions such as the benefits of additional worksheets. I couldn’t provide insightful and professional opinions to help my peers improve their lesson plans

During the assessment activities, the instructor-researcher was busy monitoring the processes, so the students received neither feedback nor grading results from the instructor until the students submitted their final projects. Hence, three students suggested that the instructor participate in the assessment activities. Other students reported concerns regarding, for example, the stability of the wireless network, the cost of PDAs, and the noise of multiple presentations in one space.

4.2. Scores of peer and self assessment

Because the instructor did not participate in the two-round assessment, only the scores on students’ final projects could be used for the correlation analysis. So as not to be influenced by the student grading, the instructor deliberately made her judgment on the basis solely of the quality of the submitted final reports, without referring to student-grading results. Students’ scores for “instructional sequence,” “instructional strategies,” and “assessment” given by the instructor, students themselves, and peers were used for the correlation analysis. However, there was no significant correlation between the instructor grading and self grading or between the instructor grading and peer grading. The fact that nearly half of the students (47.06%) belonged to the neutral agreement level regarding willingness to undertake post-assessment revision of work could in part explain why the instructor’s grading of the students’ final reports did not significantly correlate to the students’ self- and peer-grading results in the two assessment activities.

Table 2

Percentages of students’ agreement levels on survey items (n = 34).

| Item statement | Strongly disagree (%) | Disagree (%) | Neutral (%) | Agree (%) | Strongly agree (%) |
| --- | --- | --- | --- | --- | --- |
| PDAs were more efficient for peer- and self-evaluation than paper and pencils would be | 0.00 | 11.76 | 17.65 | 47.06 | 23.53 |
| The operation of PDA evaluations was easy and convenient | 0.00 | 0.00 | 23.53 | 11.76 | 64.71 |
| Making explicit rubrics helped me understand how to undertake the final project | 0.00 | 2.94 | 2.94 | 73.53 | 20.59 |
| This evaluation process enabled me to obtain work-revision suggestions that were more concrete than those in a whole-class presentation | 0.00 | 0.00 | 14.71 | 67.65 | 17.65 |
| I tried to grade my peers appropriately and not to be picky or hypocritical | 0.00 | 5.88 | 8.82 | 70.59 | 14.71 |
| I think the instructor’s comments and grading would be more professional than my classmates’ | 0.00 | 20.59 | 44.12 | 32.35 | 2.94 |
| I tended to avoid trying to revise my work although I received feedback from others | 5.88 | 47.06 | 47.06 | 0.00 | 0.00 |
| I preferred listening to every classmate’s presentation one by one | 5.88 | 47.06 | 38.24 | 8.82 | 0.00 |
| I worried that my classmates would give me low grades intentionally | 17.65 | 47.06 | 32.35 | 2.94 | 0.00 |
| I felt that I got biased low scores from peers | 5.88 | 29.41 | 64.71 | 0.00 | 0.00 |

Table 3

The correlation coefficient between peer- and self-assessment scores on each criterion.

| | Oral communication | Ways of presentation | Instructional sequence | Instructional strategies | Assessment |
| --- | --- | --- | --- | --- | --- |
| 1st round | .461* | .217 | .393* | .085 | .216 |
| 2nd round | .064 | .103 | .248 | .144 | .163 |

Regarding the consistency between scores of self assessment and scores of peer assessment, only scores for “manner of presentation” and “instructional strategies” in the first-round assessment show significant positive correlation (Table 3).
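For readers who want to run a comparable consistency check on their own class data, the following is a minimal sketch of a Pearson correlation between self- and peer-assessment scores on one criterion, together with the t statistic commonly used to test whether the correlation differs from zero. The source does not state which correlation coefficient or software was used, so the choice of Pearson's r is an assumption, and the scores below are hypothetical values, not data from this study.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need two equal-length lists with at least 3 scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


def t_for_r(r, n):
    """t statistic (df = n - 2) for testing a correlation against zero; needs |r| < 1."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical scores for one criterion (NOT data from the study).
self_scores = [7, 6, 8, 7, 9, 6, 8, 7]
peer_scores = [7.2, 6.8, 7.5, 7.0, 8.1, 6.5, 7.9, 7.4]

r = pearson_r(self_scores, peer_scores)
print(f"r = {r:.3f}, t = {t_for_r(r, len(self_scores)):.3f}")
```

The resulting t value would then be compared with the critical value for n - 2 degrees of freedom at the chosen significance level.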

Except for the self-assessment of oral communication, the paired t-tests of 30 students’ self- and peer-assessment mean scores indicate that, in general, the students thought that their own performance and their peers’ performances had significantly improved on all assessment items in the second-round assessment (Table 4).
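The paired t-test used here compares each student's round-1 score with the same student's round-2 score. A minimal sketch of the computation follows; the function name and the sample scores are hypothetical and serve only to illustrate the formula t = mean(d) / (sd(d) / sqrt(n)), where d is the per-student difference between rounds.

```python
import math


def paired_t(round1, round2):
    """Paired-samples t statistic for round2 minus round1 (df = n - 1)."""
    if len(round1) != len(round2) or len(round1) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [after - before for before, after in zip(round1, round2)]
    n = len(diffs)
    mean_diff = sum(diffs) / n
    # Sample standard deviation of the differences (n - 1 in the denominator).
    sd_diff = math.sqrt(sum((d - mean_diff) ** 2 for d in diffs) / (n - 1))
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    return mean_diff / (sd_diff / math.sqrt(n))


# Hypothetical scores for four students on one criterion (NOT study data).
first_round = [6, 7, 5, 8]
second_round = [7, 9, 7, 9]

print(f"t = {paired_t(first_round, second_round):.3f}")
```

A positive t indicates higher scores in the second round. Whether it is significant depends on the critical value for n - 1 degrees of freedom.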

5. Discussion and conclusions

This article describes a web-based self- and peer-assessment system and its implementation model. Focusing on how to appropriately integrate mobile technology with assessment, this study strives to tackle issues and concerns with self- and peer-assessment, which have been documented in relevant research (e.g., Ballantyne et al., 2002; Lin et al., 2001; Miller, 2003; Topping et al., 2000; Tsai et al., 2002). Combining mobile technology with the concept of round-table presentations, the MAPS and its implementation model are intended to help the teacher arrange self- and peer-assessment more flexibly and to make students more attentive to presentation, interaction, and feedback in the assessment process. Throughout the whole assessment cycle, students self-evaluate and self-monitor their own learning and performance according to explicit assessment criteria. Furthermore, this approach serves to improve students’ work and students’ understanding insofar as students, by assessing a peer and by providing him or her feedback, reflect on the strengths and the weaknesses of their own performance as well as of other students’ performances.

The results of this study confirm these envisioned benefits. The students’ responses to the survey questions reveal that most students held positive attitudes toward the MAPS and its implementation model. They agreed that this assessment method was efficient, convenient, and time-saving, and that the assessment procedures fostered interaction and reflection. Additionally, the paired t-tests of the assessment scores point to the students’ general belief that the self and peer performances improved in the second-round assessment.

Similar to the findings of Tsai et al. (2002), the correlation analysis in the present study reveals inconsistency between the instructor’s and the students’ grading; however, the students’ tendency to revise their work after receiving feedback from peers could have caused this inconsistency. In this regard, future studies may require teachers to participate in each assessment activity and encourage students to substantially reflect on the related experiences and to revise their work after the assessment. The researcher who conducted and authored the present study suggests that advancing the students’ understanding and performance be the main purpose of employing self- and peer-assessment (Gibbs, 2006; Orsmond et al., 2000). The MAPS and its implementation model provide a good means for teachers and students to conduct self- and peer-assessment activities that enhance teaching and learning through active engagement in idea-sharing, feedback-giving, and self-reflection.

To avoid ineffective results and students’ resistance to self- and peer-assessment, teachers in the pre-assessment stage can encourage students to appreciate different viewpoints, to actively share opinions with peers, and to fairly assess peers. Teachers also need to pay attention to both equipment stability and space arrangement so that students are not bothered by technology malfunctions or noise from other presentations. Teachers can also participate in the assessment activities to demonstrate how to be a good assessor. Thus, applying mobile technology to self- and peer-assessment can provide teachers and students with a valuable alternative in the realm of performance assessment.

Acknowledgement

This study was supported by the National Science Council of the Republic of China under Contract No. NSC 96-2520-S-009-008.

References

Ashworth, P., Bannister, P., & Thorne, P. (1997). Guilty in whose eyes? University students’ perceptions of cheating and plagiarism in academic work and assessment. Studies in Higher Education, 22(2), 187–203.

Ballantyne, R., Hughes, K., & Mylonas, A. (2002). Developing procedures for implementing peer assessment in large classes using an action research process. Assessment & Evaluation in Higher Education, 27(5), 427–441.

Bloxham, S., & West, A. (2004). Understanding the rules of the game: Marking peer assessment as a medium for developing students’ conceptions of assessment. Assessment & Evaluation in Higher Education, 29(6), 721–733.

Bransford, J. B., Brown, A. L., & Cocking, R. (2000). How people learn: Brain, mind, experience, and school (expanded ed.). Washington, DC: National Academy Press.

Davies, P. (2000). Computerized peer assessment. Innovations in Education and Training International, 37(4), 346–355.

Davis, S. (2003). Observations in classrooms using a network of handheld devices. Journal of Computer Assisted Learning, 19, 298–307.

Draper, S. W., & Brown, M. I. (2004). Increasing interactivity in lectures using an electronic voting system. Journal of Computer Assisted Learning, 20, 81–94.

Table 4

Mean self- and peer-assessment scores in round 1 and round 2 and paired t-tests (n = 30).

| Criterion | Self: round 1 mean | Self: round 1 s.d. | Self: round 2 mean | Self: round 2 s.d. | Self: paired t | Peer: round 1 mean | Peer: round 1 s.d. | Peer: round 2 mean | Peer: round 2 s.d. | Peer: paired t |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Oral communication | 7.000 | 1.313 | 7.133 | 1.074 | .571 | 7.242 | .796 | 7.681 | .600 | 2.443* |
| Ways of presentation | 6.533 | 1.655 | 7.333 | 1.269 | 2.283* | 6.991 | .956 | 7.573 | .557 | 2.887** |
| Instructional sequence | 7.200 | 1.349 | 7.800 | 1.157 | 2.382* | 7.055 | .791 | 7.730 | .496 | 4.452** |
| Instructional strategies | 7.033 | 1.402 | 7.667 | .994 | 2.433* | 6.772 | 1.067 | 7.722 | .445 | 5.036** |
| Assessment | 6.333 | 1.605 | 7.633 | 1.066 | 3.949** | 6.699 | 1.151 | 7.701 | .492 | 5.295** |

*p < .05. **p < .01.

Falchikov, N., & Goldfinch, J. (2000). Student peer assessment in higher education: A meta-analysis comparing peer and teacher marks. Review of Educational Research, 70(3), 287–322.

Gibbs, G. (2006). How assessment frames student learning. In C. Bryan & K. Clegg (Eds.), Innovative assessment in higher education (pp. 23–36). London: Routledge.

Lin, S. S. J., Liu, E. Z. F., & Yuan, S. M. (2001). Web-based peer assessment: Feedback for students with various thinking-styles. Journal of Computer Assisted Learning, 17, 420–432.

Magin, D. J. (2001). A novel technique for comparing the reliability of multiple peer assessments with that of single teacher assessments of group process work. Assessment & Evaluation in Higher Education, 26(2), 139–152.

McLuckie, J., & Topping, K. J. (2004). Transferable skills for online peer learning. Assessment & Evaluation in Higher Education, 29, 563–584.

Miller, P. J. (2003). The effect of scoring criteria specificity on peer and self-assessment. Assessment & Evaluation in Higher Education, 28(4), 383–394.

Nicol, D., & Milligan, C. (2006). Rethinking technology-supported assessment practices in relation to the seven principles of good feedback practice. In C. Bryan & K. Clegg (Eds.), Innovative assessment in higher education (pp. 64–77). London: Routledge.

Novak, J. D., Mintzes, J. J., & Wandersee, J. H. (1999). Learning, teaching, and assessment: A human constructivist perspective. In J. J. Mintzes, J. H. Wandersee, & J. D. Novak (Eds.), Assessing science understanding: A human constructivist view (pp. 1–13). San Diego, CA: Academic Press.

Orsmond, P., Merry, S., & Reiling, K. (2000). The use of student derived marking criteria in peer and self-assessment. Assessment & Evaluation in Higher Education, 25(1), 23–38.

Penuel, W. R., & Yarnall, L. (2005). Designing handheld software to support classroom assessment: An analysis of conditions for teacher adoption. Journal of Technology, Learning, and Assessment, 3(5), 45. <http://www.jtla.org>.

Penuel, W. R., Lynn, E., & Berger, L. (2007). Classroom assessment with handheld computers. In M. van‘t Hooft & K. Swan (Eds.), Ubiquitous computing in education (pp. 103–125). Mahwah, NJ: Lawrence Erlbaum.

Perkins, D. N., & Unger, C. (1999). Teaching and learning for understanding. In C. M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. 2, pp. 91–114). Mahwah, NJ: Lawrence Erlbaum.

Pownell, D., & Bailey, G. D. (2002). Are you ready for handhelds? Learning and Leading with Technology, 30(2), 50–55.

Price, M., & O’Donovan, B. (2006). Improving performance through enhancing student understanding of criteria and feedback. In C. Bryan & K. Clegg (Eds.), Innovative assessment in higher education (pp. 100–109). London: Routledge.

Prins, F. J., Sluijsmans, D. M. A., Kirschner, P. A., & Strijbos, J.-W. (2005). Formative peer assessment in a CSCL environment: A case study. Assessment & Evaluation in Higher Education, 30(4), 417–444.

Purchase, H. C. (2000). Learning about interface design through peer assessment. Assessment & Evaluation in Higher Education, 25(4), 341–352.

Shepard, L. A. (2001). The role of classroom assessment in teaching and learning. In V. Richardson (Ed.), Handbook of research on teaching (pp. 1066–1101). Washington, DC: American Educational Research Association.

Shin, N., Norris, C., & Soloway, E. (2007). Findings from early research on one-to-one handheld use in K-12 education. In M. van‘t Hooft & K. Swan (Eds.), Ubiquitous computing in education (pp. 19–39). Mahwah, NJ: Lawrence Erlbaum.

Smodal, O., & Gregory, J. (2003). Personal digital assistants in medical education and practice. Journal of Computer Assisted Learning, 19, 320–329.

Sung, Y.-T., Chang, K.-E., Chiou, S.-K., & Hou, H.-T. (2005). The design and application of a web-based self and peer-assessment system. Computers & Education, 45, 187–202.

Sung, Y.-T., Lin, C.-S., Lee, C.-L., & Chang, K.-E. (2003). Evaluating proposals for experiments: An application of web-based self-assessment and peer-assessment. Teaching of Psychology, 30(4), 331–334.

Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249–276.

Topping, K. J., Smith, E. F., Swanson, I., & Elliot, A. (2000). Formative peer assessment of academic writing between postgraduate students. Assessment & Evaluation in Higher Education, 25(2), 149–169.

Tsai, C.-C., Lin, S. S. J., & Yuan, S.-M. (2002). Developing science activities through a networked peer assessment system. Computers & Education, 38, 241–252.

Vahey, P., & Crawford, V. (2002). Palm education pioneers program: Final evaluation report [Electronic Version]. In SRI International. http://www.palmgrants.sri.com. Retrieved March 14, 2007.

Wang, H.-Y., Liu, T.-C., Chou, C.-Y., Liang, J.-K., Chan, T.-W., & Yang, J.-C. (2004). A framework of three learning activity levels for enhancing the usability and feasibility of wireless learning environments. Journal of Educational Computing Research, 30(4), 331–351.