2006 IEEE International Conference on Systems, Man, and Cybernetics

October 8-11, 2006, Taipei, Taiwan

Multi-objects Tracking System Using Adaptive Background Reconstruction Technique and Its Application to Traffic Parameters Extraction

Chin-Teng Lin, Fellow, IEEE, Yu-Chen Huang, Ting-Wei Mei, Her-Chang Pu, and Chao-Ting Hong

Abstract-In this paper, we present a real-time multi-object tracking system that can detect various types of moving objects in image sequences of traffic video obtained from a stationary video camera. The adaptive background reconstruction technique effectively handles environmental changes and yields good object extraction results. In addition, we introduce a robust region- and feature-based tracking algorithm that uses a rich set of features to track objects correctly and continuously. After objects are tracked successfully, we analyze their properties and recognize their behavior to extract useful traffic parameters. Based on the proposed algorithms, we implemented a tracking system that includes object classification and accident prediction functions. Experiments were conducted on real-life traffic video of an intersection and on test datasets from other surveillance research. The results show that the proposed algorithms achieve robust segmentation of moving objects and successful tracking through object occlusion and splitting events. The implemented system also extracted useful traffic parameters.

I. INTRODUCTION

A tracking system is composed of three main modules: object extraction, object tracking, and behavior analysis. Foreground segmentation is the first step of object extraction; it detects regions corresponding to moving objects such as vehicles and pedestrians, so that the object tracking and behavior analysis modules only need to focus on those regions. There are three conventional approaches to foreground segmentation: optical flow, temporal differencing, and background subtraction. Besides these basic methods, there are other or combined methods for foreground segmentation. A key process is to recover and update background images from continuous image sequences automatically. Friedman et al. [1] used a mixture of three Gaussians for each pixel to represent the foreground, background, and shadows with an incremental version of the EM (expectation maximization) method. Li et al. [2] proposed a Bayesian framework that incorporated spectral, spatial, and temporal features to characterize the background appearance.

This work was supported by the Ministry of Economic Affairs, Taiwan, R.O.C., under Grant 95-EC-17-A-02-SI-032.

C.-T. Lin is with the Department of Electrical and Control Engineering/Department of Computer Science, National Chiao-Tung University, Hsin-Chu, Taiwan, R.O.C., and also with the Brain Research Center, University System of Taiwan, Taiwan, R.O.C. (phone: +886-3-5712121 ext 31228; fax: +886-3-5729880; e-mail: ctlin@mail.nctu.edu.tw).

Y.-C. Huang, T.-W. Mei, and C.-T. Hong are with the Department of Electrical and Control Engineering, National Chiao-Tung University, Hsin-Chu, Taiwan, R.O.C. (e-mail: vette(dis515hinetnet twmei.ece93ginctu.edu.tw).

H.-C. Pu is with the Department of Electrical and Control Engineering, National Chiao-Tung University, Hsin-Chu, Taiwan, R.O.C. (e-mail: lcpu ce872Xa nctu.dLt.W).

Besides foreground segmentation, object tracking is another key module of surveillance systems. Hu et al. [3] classified tracking algorithms into four major categories: region-based tracking algorithms [4], active-contour-based tracking algorithms, feature-based tracking algorithms [5], and model-based tracking algorithms [6]. McKenna et al. [7] proposed a tracking algorithm at three levels of abstraction (regions, people, and groups) in indoor and outdoor environments. Cucchiara et al. [8] presented a multilevel tracking scheme for monitoring traffic. Veeraraghavan et al. [9] used a multilevel tracking approach with a Kalman filter for tracking vehicles and pedestrians at intersections.

Understanding objects' behavior and extracting useful traffic parameters are the main tasks once the moving objects have been tracked successfully through the image sequences. Jung et al. [10] proposed a traffic flow extraction method based on the velocity and trajectory of moving vehicles; they estimated traffic parameters such as the vehicle count and the average speed and extracted the traffic flows. Remagnino et al. [11] presented an event-based visual surveillance system for monitoring vehicles and pedestrians. Trivedi et al. [12] described a novel architecture for developing distributed video networks for incident detection and management; the networks used both rectilinear and omni-directional cameras. Kamijo et al. [13] developed an accident detection method based on tracking results that can be generally adapted to intersections. Hu et al. [14] proposed a probabilistic model for predicting traffic accidents using 3-D model-based vehicle tracking.

II. SYSTEM OVERVIEW

A. System Flowchart

First, the foreground segmentation module uses the raw surveillance video data directly as input. This sub-system also updates the background image and applies a segmentation algorithm to extract the foreground image. Next, individual objects are extracted from the foreground image. At the same … with extracted objects. The main task of the third sub-system is to track objects: the tracking algorithm feeds significant object features into an analysis process to find the optimal matching between previous objects and current objects. After moving objects are tracked successfully in this sub-system, consistent labels are assigned to the correct objects. Finally, object behavior is analyzed and recognized, and useful traffic parameters are extracted and shown in the user interface. The diagram of the global system is shown in Fig. 1.

Fig. 1. Diagram of tracking system
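To make the data flow of Fig. 1 concrete, the following Python sketch chains the four sub-systems for a single frame. It is a minimal illustration under stated assumptions, not the authors' implementation: the `TrackedObject` record, its field names, and the `process_frame` interface are hypothetical, and each stage is passed in as a callable so any concrete segmentation, extraction, tracking, or analysis routine can be plugged in.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class TrackedObject:
    """Hypothetical per-object record holding features named in Section II-C."""
    label: int
    bbox: Tuple[int, int, int, int]            # (x, y, width, height) in pixels
    velocity: Tuple[float, float] = (0.0, 0.0)
    life: int = 0
    children: List["TrackedObject"] = field(default_factory=list)
    trajectory: List[Tuple[float, float]] = field(default_factory=list)


def process_frame(frame, previous: List[TrackedObject],
                  segment: Callable, extract: Callable,
                  track: Callable, analyze: Callable):
    """Push one frame through the four sub-systems of Fig. 1, in order."""
    foreground = segment(frame)          # foreground segmentation + background update
    current = extract(foreground)        # connected components -> current objects list
    tracked = track(previous, current)   # match previous/current objects, keep labels
    parameters = analyze(tracked)        # behavior analysis -> traffic parameters
    return tracked, parameters
```

Keeping the stages as plain callables mirrors the modular structure of Fig. 1: each sub-system only sees the output of the one before it.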

1) Foreground Segmentation: First, the raw data of surveillance video obtained from stationary video cameras is input to this module. The main processes of this sub-system are foreground segmentation and background reconstruction. Because the video camera is fixed and the background can be regarded as a stationary image, background subtraction is the simplest way to segment moving objects. In addition, the results of frame differencing and the conditions of previous objects are used to make the segmentation more reliable.
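The paper does not give the exact rule for combining background subtraction with frame differencing and previous object conditions, so the following Python sketch uses one hypothetical combination: a pixel is foreground if it differs from the background by more than `th_fg` and it is either moving (frame difference above `th_diff`) or was covered by a previously tracked object. Frames are plain 2-D lists of gray levels; the function and parameter names are illustrative.

```python
def foreground_mask(frame, background, prev_frame, prev_objects, th_fg, th_diff):
    """Return a boolean foreground mask (hypothetical combination rule).

    frame, background, prev_frame: 2-D lists of gray levels with equal shape.
    prev_objects: 2-D list of booleans marking pixels covered by objects
    tracked in the previous frame.
    """
    mask = []
    for y, row in enumerate(frame):
        mask_row = []
        for x, value in enumerate(row):
            bg_diff = abs(value - background[y][x])      # background subtraction
            frame_diff = abs(value - prev_frame[y][x])   # temporal (frame) differencing
            # Keep a pixel only if it differs from the background AND it is
            # either moving now or belonged to a previously tracked object.
            is_fg = bg_diff > th_fg and (frame_diff > th_diff or prev_objects[y][x])
            mask_row.append(is_fg)
        mask.append(mask_row)
    return mask
```

The previous-object term lets a vehicle that pauses briefly stay in the foreground even though its frame difference drops to zero.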

2) Background Initialization: Before segmenting the foreground from the image sequences, the system needs to construct an initial background image for further processing. The basic idea for finding a background pixel is that the background has a high probability of appearing: over a continuous duration of surveillance video, the level at which each pixel appears most frequently is almost always its background level. We use a simpler method: if a pixel's value stays within a criterion for several consecutive frames, the probability of this value appearing is locally high, that is, the value is locally stable. This method can build an initial background image even when there are moving objects in the camera's view during initialization.
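The stability rule above can be sketched in Python as a per-pixel run-length counter. The names `criterion` and `stable_frames` are hypothetical stand-ins for the unspecified criterion and number of consecutive frames; a pixel is accepted as background once its value changes by at most `criterion` for `stable_frames` consecutive frame-to-frame transitions.

```python
def init_background(frames, criterion, stable_frames):
    """Build an initial background from a list of gray-level frames (2-D lists).

    Pixels that never become stable during the initialization period stay None.
    """
    h, w = len(frames[0]), len(frames[0][0])
    background = [[None] * w for _ in range(h)]
    run_length = [[0] * w for _ in range(h)]    # consecutive stable transitions per pixel
    for prev, cur in zip(frames, frames[1:]):
        for y in range(h):
            for x in range(w):
                if background[y][x] is not None:
                    continue                    # already initialized
                if abs(cur[y][x] - prev[y][x]) <= criterion:
                    run_length[y][x] += 1
                    if run_length[y][x] >= stable_frames:
                        background[y][x] = cur[y][x]
                else:
                    run_length[y][x] = 0        # value jumped: restart the count
    return background
```

A passing vehicle resets the counter of the pixels it covers, so those pixels pick up the road level only after the vehicle has left.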

3) Adaptive Background Updating: We introduce an adaptive threshold for foreground segmentation. The adaptive threshold includes two parts: a basic value and an adaptive value. The threshold is produced by (1):

$$Th_{FG} = Value_{basic} + 1.5 \times Peak_{local} + STDEV_{local} \tag{1}$$

The two statistics, $Peak_{local}$ and $STDEV_{local}$, are calculated within the specific scope shown in Fig. 2. This adaptive threshold helps the background updating algorithm cope with environmental changes and noise effects.
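As a sketch of (1), the following Python function computes $Th_{FG}$ from a histogram of background-subtraction differences. Two points are assumptions, since the paper only shows the scope graphically in Fig. 2: the local calculating scope is passed in as a half-open range `(lo, hi)` of difference levels, and $Peak_{local}$ is taken to be the difference level with the highest count in that range (not the count itself).

```python
import math


def adaptive_threshold(histogram, scope, value_basic):
    """Equation (1): Th_FG = Value_basic + 1.5 * Peak_local + STDEV_local.

    histogram[d] = number of pixels whose background-subtraction difference is d.
    scope = (lo, hi): hypothetical half-open range used as the local scope.
    """
    lo, hi = scope
    levels = range(lo, hi)
    total = sum(histogram[d] for d in levels)
    if total == 0:
        return float(value_basic)                    # no statistics: basic value only
    peak = max(levels, key=lambda d: histogram[d])   # Peak_local (first level on ties)
    mean = sum(d * histogram[d] for d in levels) / total
    variance = sum(histogram[d] * (d - mean) ** 2 for d in levels) / total
    stdev = math.sqrt(variance)                      # STDEV_local
    return value_basic + 1.5 * peak + stdev
```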

Fig. 2. Histogram of background subtraction image, with the local calculating scope marked

In outdoor environments, several situations easily result in wrong segmentation, including waving tree leaves and gradual light variation. Sudden light changes also occur when clouds cover the sun for a while or the sun emerges from the clouds. We propose an adaptive background updating framework to cope with these unfavorable situations. First, we introduce a statistic index calculated by (2):

$$Index = Mean_{local} + 3 \times STDEV_{local} \tag{2}$$

According to this index, we adaptively adjust the frequency of updating the current background image. The updating frequency is divided into several phases, and the background updating speed increases or decreases with the updating frequency. These phases and their relevant parameters are listed in Table I.

TABLE I
PHASES OF ADAPTIVE BACKGROUND UPDATING

Phase          Condition            Sampling rate      Freq (frames)
Normal         Index < 12           1/30               30
Middle I       12 < Index < 18      1/24               24
Middle II      18 < Index < 24      1/16               16
Heavy I        24 < Index < 30      1/8                8
Heavy II       30 < Index < 36      1/4                4
Extra Heavy    36 < Index           Directly update    N/A
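The phase selection of Table I can be sketched in Python as a lookup on the index from (2). Two assumptions: Table I leaves the exact boundary values (12, 18, ...) unassigned, so a boundary value is placed in the higher phase here, and the Freq column is read as "update the background once every N frames". The names `PHASES`, `select_phase`, and `should_update` are hypothetical.

```python
# Table I as (exclusive upper bound on Index, phase name, frames between updates).
PHASES = [
    (12, "Normal", 30),
    (18, "Middle I", 24),
    (24, "Middle II", 16),
    (30, "Heavy I", 8),
    (36, "Heavy II", 4),
]


def update_index(mean_local, stdev_local):
    """Equation (2): Index = Mean_local + 3 * STDEV_local."""
    return mean_local + 3 * stdev_local


def select_phase(index):
    """Map an Index value to (phase, update period); None means update directly."""
    for upper, name, period in PHASES:
        if index < upper:
            return name, period
    return "Extra Heavy", None


def should_update(frame_no, period):
    """True when the background should be refreshed at this frame."""
    return period is None or frame_no % period == 0
```

For example, `select_phase(update_index(10, 3))` evaluates Index = 19 and returns `("Middle II", 16)`.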

B. Objects Extraction

In this sub-system, we use the connected components algorithm to extract each object and assign it a specific label so that the system can distinguish different objects. Before applying the connected components algorithm, we apply morphological operations to improve the robustness of object extraction. The result of this algorithm is the labeled objects image. We then build a current objects list with basic features, such as position, size, and color, from the labeled objects image. A spatial filter removes ghost objects and objects at the image boundaries.
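A minimal Python sketch of the labeling step uses breadth-first flood fill with 4-connectivity. It leaves out the morphological preprocessing and the color feature (the input is only a binary mask), and it uses a hypothetical spatial filter that drops components touching the image border or smaller than `min_area`; the actual filter in the system may differ.

```python
from collections import deque


def label_objects(mask, min_area=1):
    """4-connected component labeling of a binary mask (2-D list of 0/1 or bools).

    Returns (labels, objects): the labeled objects image and a current-objects
    list with basic features for each component that passes the filter.
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    objects = []
    next_label = 1
    for sy in range(h):
        for sx in range(w):
            if not mask[sy][sx] or labels[sy][sx]:
                continue
            labels[sy][sx] = next_label
            queue, pixels = deque([(sy, sx)]), []
            while queue:                                  # breadth-first flood fill
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
            on_border = x0 == 0 or y0 == 0 or x1 == w - 1 or y1 == h - 1
            if len(pixels) >= min_area and not on_border:  # hypothetical spatial filter
                objects.append({
                    "label": next_label,
                    "bbox": (x0, y0, x1 - x0 + 1, y1 - y0 + 1),  # (x, y, width, height)
                    "area": len(pixels),
                    "center": (sum(xs) / len(xs), sum(ys) / len(ys)),
                })
            next_label += 1
    return labels, objects
```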

C. Objects Tracking

This sub-system is the main process of the entire system because it performs the object tracking function. Its inputs are three lists: the current objects list, the previous objects list, and the overlap relation list. This sub-system analyzes the relations between current objects and previous objects and obtains other object properties, such as velocity, life, and trajectory. The tracking process is shown in Fig. 3.

Fig. 3. Diagram of objects tracking

1) Region Matching: We use the bounding box size and central position as input data, together with a simple method, to calculate the size of the overlap area. We then calculate the ratio of the overlap area to the smaller area of the two objects by (3):

$$Ratio_{overlap} = \frac{Area_{overlap}}{\min(Area_{current\ obj}, Area_{previous\ obj})} \tag{3}$$

If the overlap ratio is larger than a preset threshold, a relation between this current object and the previous object is established. The resulting overlap relation list is an important reference for the objects tracking sub-system.

2) Matching Analysis: First, a relation-simplifying process limits the relations to three categories: 1-to-1 matching, splitting, and merging. In other words, some relations must be removed so that no object is involved in merging and splitting at the same time. Instead of evaluating all possible removal combinations, we use a cost function to find one optimal relation to remove in each evaluation cycle, and we repeat this process recursively until no violation remains. The equations for the evaluation are shown in (4) and (5):

$$RatioDiff = \frac{|Area_{curr} - Area_{pre}|}{\max(Area_{curr}, Area_{pre})} \tag{4}$$

$$Cost = \frac{RatioDiff}{Ratio_{overlap}} \tag{5}$$

After the overlap relation list is simplified, the matching analysis can be separated into three processes. The first is 1-to-1 matching, which is quite simple: we only apply a matching equation to confirm the match, and the remaining work is to update the object's features: label, type, status, velocity, life, child objects, and trajectory.

3) Objects Merging: When multiple previous objects are associated with one current object according to the overlap relation list, an objects merging event has occurred. During objects merging, the main work is to reconstruct the parent-children association. We use the children list property to represent the objects that were merged into the parent object, and the objects in the children list keep their own properties. If a previous object already has children, we append only its children to the current object's children list. After all objects or their children are appended, the current object becomes a group of the merged objects, acting as a parent object. The diagram is shown in Fig. 4.

Fig. 4. Diagram of objects merging
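The region matching step (3) and the relation simplification of (4) and (5) can be sketched in Python as follows. Boxes are `(x, y, width, height)` tuples keyed by object label. Two assumptions fill gaps in the text: a relation counts as a violation when its previous object is related to more than one current object *and* its current object is related to more than one previous object, and the relation with the *highest* cost is the one removed in each cycle. The recursion is written as an equivalent loop.

```python
from collections import Counter


def area(box):
    return box[2] * box[3]                          # box = (x, y, width, height)


def overlap_ratio(a, b):
    """Equation (3): overlap area divided by the smaller of the two box areas."""
    ow = min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0])
    oh = min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1])
    if ow <= 0 or oh <= 0:
        return 0.0
    return (ow * oh) / min(area(a), area(b))


def build_relations(previous, current, threshold):
    """Overlap relation list: {(prev_label, cur_label): ratio} above the threshold."""
    relations = {}
    for p, p_box in previous.items():
        for c, c_box in current.items():
            ratio = overlap_ratio(p_box, c_box)
            if ratio > threshold:
                relations[(p, c)] = ratio
    return relations


def relation_cost(p_box, c_box, ratio):
    a_p, a_c = area(p_box), area(c_box)
    ratio_diff = abs(a_c - a_p) / max(a_c, a_p)     # equation (4)
    return ratio_diff / ratio                       # equation (5)


def simplify_relations(relations, previous, current):
    """Remove one relation per cycle until none is part of a split and a merge at once."""
    relations = dict(relations)
    while True:
        deg_p = Counter(p for p, _ in relations)
        deg_c = Counter(c for _, c in relations)
        violating = [pc for pc in relations if deg_p[pc[0]] > 1 and deg_c[pc[1]] > 1]
        if not violating:
            return relations
        # Assumption: the highest-cost violating relation is removed.
        worst = max(violating,
                    key=lambda pc: relation_cost(previous[pc[0]], current[pc[1]], relations[pc]))
        del relations[worst]
```

After simplification, each remaining relation belongs to exactly one of the three categories: a 1-to-1 match, a split, or a merge.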

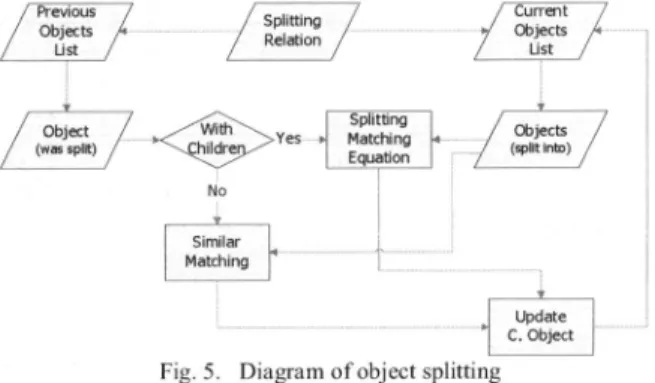
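The parent-children reconstruction in the objects merging step can be sketched with a minimal Python record. The `Obj` class and `merge_into` function are hypothetical names; the sketch only shows the children-list bookkeeping described in the text, not the feature updates of the merged group.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Obj:
    """Hypothetical minimal object record: a label plus its children list."""
    label: int
    children: List["Obj"] = field(default_factory=list)


def merge_into(current: Obj, merged_previous: List[Obj]) -> Obj:
    """Rebuild the parent-children association for a merging event."""
    for prev in merged_previous:
        if prev.children:
            current.children.extend(prev.children)  # a group: append only its children
        else:
            current.children.append(prev)           # a single object becomes a child
    return current                                  # current object now acts as the parent
```

Because groups contribute their children rather than themselves, the children list stays flat no matter how many merges occur in a row. This is what later lets a split be resolved against the original individual objects.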

4) Object Splitting: Object splitting happens when a single previous object is associated with multiple current objects. Splitting events often result from occlusion, and an objects merging event is one kind of occlusion. Our algorithm handles these occlusions through the children list property. If the previous object has no child objects, the splitting results from implicit occlusion. The process is shown in Fig. 5.

Fig. 5. Diagram of object splitting

We first check the children of the previous object. If it has none, a similarity matching process assigns the label of the previous object to the most similar of the current objects, and the other current objects remain unmatched. If the object has children, we apply the matching algorithm to find the optimal matching between the children of the previous object and the current objects. The algorithm is based on the objects' position, size, and color: three preset thresholds filter the matching candidates in advance, and the position feature is then used to find the best match. After a successful match, we update the matched objects' properties.
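The children case of object splitting can be sketched in Python as below. The candidate filter follows the text: thresholds on position, size, and color are applied first, and position then picks the best candidate. The assignment itself is a greedy nearest-first stand-in, not necessarily the optimal matching used in the system. Objects are dicts with hypothetical keys `pos`, `size` (area), and `color` (mean gray level).

```python
import math


def match_children(children, current, th_pos, th_size, th_color):
    """Greedily assign each child of a split previous object to a current object.

    Children carry a "label"; current objects carry a temporary "id".
    Candidates must pass all three thresholds; the nearest by position wins.
    """
    assignments, used = {}, set()
    for child in children:
        best_id, best_dist = None, math.inf
        for cur in current:
            if cur["id"] in used:
                continue
            dist = math.dist(child["pos"], cur["pos"])
            size_diff = abs(child["size"] - cur["size"]) / max(child["size"], cur["size"])
            color_diff = abs(child["color"] - cur["color"])
            if dist > th_pos or size_diff > th_size or color_diff > th_color:
                continue                            # filtered out in advance
            if dist < best_dist:
                best_id, best_dist = cur["id"], dist
        if best_id is not None:
            assignments[child["label"]] = best_id   # child keeps its own label
            used.add(best_id)
    return assignments
```

Current objects left out of `assignments` stay unmatched and fall through to the other matching processes.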

5) Other Matching Processes: After the three previous matching processes, some objects may remain unmatched. The main causes are new objects appearing or old objects leaving the FoV. Besides these two main causes, a previous object is sometimes unmatched because of occlusion by the background or a long movement between frames. Possible reasons for an unmatched current object are splitting from an implicit occlusion, reappearing after background occlusion, or exceeding the thresholds during the previous matching.

Our algorithm uses two additional processes to deal with these situations. The first is extended scope matching: we search for unmatched current objects inside a preset scope around each unmatched previous object and use a matching function to match them. The second is the isolated objects process: unmatched previous objects keep all their properties except life and position, their life feature is decreased, and their position is updated with their estimated position. Finally, unmatched current objects are regarded as new objects and assigned new labels.
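These two processes and the final labeling step can be sketched in Python as follows. The sketch assumes three things: the matching function is simply "nearest current object within `scope` pixels of the previous object", the estimated position is the previous position plus one frame of velocity (constant-velocity model), and life decreases by one per frame. All names are hypothetical.

```python
import math


def resolve_unmatched(prev_objs, cur_objs, scope, next_label):
    """Extended scope matching, isolated objects process, and new labels.

    prev_objs: dicts with "label", "pos", "vel", "life" (mutated in place).
    cur_objs: dicts with a temporary "id" and "pos".
    Returns ({current id: assigned label}, next free label).
    """
    assigned = {}
    remaining = list(cur_objs)
    for prev in prev_objs:
        # Extended scope matching: nearest unmatched current object within `scope`.
        in_scope = [c for c in remaining if math.dist(prev["pos"], c["pos"]) <= scope]
        if in_scope:
            best = min(in_scope, key=lambda c: math.dist(prev["pos"], c["pos"]))
            assigned[best["id"]] = prev["label"]
            remaining.remove(best)
        else:
            # Isolated object: keep all properties except life and position.
            prev["life"] -= 1
            prev["pos"] = (prev["pos"][0] + prev["vel"][0], prev["pos"][1] + prev["vel"][1])
    for cur in remaining:                          # still unmatched: treat as new objects
        assigned[cur["id"]] = next_label
        next_label += 1
    return assigned, next_label
```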

6) Object's Life: To assist the tracking framework, our algorithm exploits temporal information through a life feature, which defines the status of each object. A threshold on the life feature helps filter out ghost objects, which usually appear and disappear very quickly. This threshold also defines the dead phase, which filters out objects that cannot be tracked for a period of time. A separate appearing threshold confirms that an object is valid, and the behavior analysis module ignores invalid objects. We also designed a second life feature for stopped objects, which records how long an object has been stopped consecutively. If this feature exceeds a preset threshold, the object becomes dead and its region is copied into the background.
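The life features can be sketched as a small per-object state machine in Python. The threshold names (`th_appear`, `th_dead`, `th_stop`, `max_life`), the unit increments, and the status strings are all hypothetical; the paper states which thresholds exist but not their values or update steps.

```python
from dataclasses import dataclass


@dataclass
class LifeState:
    life: int = 0
    stopped: int = 0           # consecutive frames the object has been stopped
    status: str = "candidate"  # candidate -> valid -> dead / dead_to_background


def update_life(state, matched, moving, th_appear, th_dead, th_stop, max_life):
    """Update the life features of one object for one frame and return its status."""
    state.life = min(state.life + 1, max_life) if matched else state.life - 1
    state.stopped = state.stopped + 1 if (matched and not moving) else 0
    if state.life <= th_dead:
        state.status = "dead"                  # ghost, or object lost for too long
    elif state.stopped > th_stop:
        state.status = "dead_to_background"    # stopped too long: copy region into background
    elif state.status == "candidate" and state.life >= th_appear:
        state.status = "valid"                 # confirmed; behavior analysis may use it
    return state.status
```

A ghost object that is matched once and then vanishes reaches `th_dead` almost immediately. A confirmed vehicle with a long life survives several missed frames before it enters the dead phase.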

D. Behavior Analysis

The main purpose of this sub-system is to analyze the behavior of moving objects in order to extract traffic parameters. We introduce several modules that process high-level object features and output real-time information related to traffic parameters, such as object classification and accident prediction.

1) Camera Calibration and Object Classification: We introduce a simple method for camera calibration, with the equations shown in (6). It compensates for the dimensional distortion caused by different locations in the camera view.

$$\begin{bmatrix} X_w \\ Y_w \end{bmatrix} = M_{calibration} \begin{bmatrix} X_i \\ Y_i \\ 1 \end{bmatrix}, \qquad M_{calibration} = \begin{bmatrix} p_{11} & p_{12} & p_{13} \\ p_{21} & p_{22} & p_{23} \end{bmatrix} \tag{6}$$

Before applying camera calibration to our system, we need some data to calculate the calibration matrix M in advance; we use the dimensions and locations of some moving objects for this purpose. In addition, to simplify the computation, we normalize properties such as width, height, and velocity to their calibrated values at the central position of the image. We then use the calibrated features to classify moving objects into large cars, cars, motorcycles, and people.
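A minimal Python sketch of applying (6) and classifying by calibrated size follows. Several assumptions apply. M is taken as already estimated and given as two rows of three coefficients. An object's calibrated width and height come from mapping two opposite bounding-box corners. The class is chosen by comparing calibrated area against the hypothetical limits in `CLASS_LIMITS`, since the paper does not give its classification thresholds.

```python
def calibrate(m, x_i, y_i):
    """Equation (6): [X_w, Y_w]^T = M_calibration [x_i, y_i, 1]^T."""
    (p11, p12, p13), (p21, p22, p23) = m
    return p11 * x_i + p12 * y_i + p13, p21 * x_i + p22 * y_i + p23


def calibrated_size(m, bbox):
    """Map two opposite bounding-box corners and return calibrated (width, height)."""
    x, y, w, h = bbox
    x0, y0 = calibrate(m, x, y)
    x1, y1 = calibrate(m, x + w, y + h)
    return abs(x1 - x0), abs(y1 - y0)


# Hypothetical area limits in calibrated units, checked in increasing order.
CLASS_LIMITS = [(20.0, "people"), (60.0, "motorcycle"), (300.0, "car")]


def classify(m, bbox, limits=CLASS_LIMITS):
    """Assign one of the four categories from the calibrated bounding-box area."""
    width, height = calibrated_size(m, bbox)
    area = width * height
    for limit, name in limits:
        if area < limit:
            return name
    return "large car"
```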

2) Accident Prediction: We also present a prediction module for traffic accidents. We analyze the trajectories of pairs of objects and classify them into four types: both objects stopped, only one object moving, same moving direction, and different moving directions. The classification is given in (7); the prediction module only processes the latter three types.

$$Type = \begin{cases} 0\ \text{(Both stopped)}, & \text{if } V_1 = 0 \cap V_2 = 0 \\ \text{I (Only one moving)}, & \text{if } (V_1 \neq 0 \cap V_2 = 0) \cup (V_1 = 0 \cap V_2 \neq 0) \\ \text{II (Same dir.)}, & \text{if } V_1 \neq 0 \cap V_2 \neq 0 \cap V_{x1} V_{y2} - V_{y1} V_{x2} = 0 \\ \text{III (Different dir.)}, & \text{otherwise} \end{cases} \tag{7}$$

First, for type I, the closest position is calculated, and the distance between the two objects at that position is compared with a preset threshold to predict whether an accident will occur. Second, for the same-direction type, we only consider the case in which the trajectories of the two objects lie almost on the same line, which is checked with a threshold; the occurrence and position of an accident are then predicted. Third, if the two objects move in different directions, we use (8) to obtain the times at which the objects reach the crossing point of their trajectories, where $(P_{x1}, P_{y1})$, $(V_{x1}, V_{y1})$ and $(P_{x2}, P_{y2})$, $(V_{x2}, V_{y2})$ are the positions and velocities of the two objects. We then predict whether an accident will occur by (9) and obtain its position.

$$A = V_{x2} V_{y1} - V_{x1} V_{y2}, \qquad T_1 = \frac{V_{x2}(P_{y2} - P_{y1}) - V_{y2}(P_{x2} - P_{x1})}{A}, \qquad T_2 = \frac{V_{x1}(P_{y2} - P_{y1}) - V_{y1}(P_{x2} - P_{x1})}{A} \tag{8}$$

$$Accident = \begin{cases} \text{yes}, & \text{if } T_1 > 0 \cap T_2 > 0 \cap |T_1 - T_2| \text{ is within the bound set by } Dist_{th} \\ \text{no}, & \text{otherwise} \end{cases} \qquad P_{accident} = \begin{bmatrix} T_1 V_{x1} + P_{x1} \\ T_1 V_{y1} + P_{y1} \end{bmatrix} \tag{9}$$

III. EXPERIMENTAL RESULTS

We implemented the tracking system on a PC with an Intel P4 2.4 GHz CPU and 512 MB RAM. The inputs are image sequences with a resolution of 320 × 240 pixels. The datasets were either captured with a DV camera or taken from test samples used in other research.

A. Adaptive Background Updating

We use the PETS (Performance Evaluation of Tracking and Surveillance) datasets to evaluate the adaptive background updating algorithm. The video is the PETS2001 [15] dataset 2 training video, chosen for its significant lighting variation. For comparison with our proposed algorithm, we also performed tracking on this video using a fixed background updating method at two different updating frequencies.

The experimental results are shown in Figs. 6 and 7, focusing on the bottom-right corner of each image. Because the car entered the camera's FoV slowly, some pixels of its region had been absorbed into the background. This problem becomes worse when the updating frequency is increased, and the resulting wrong segmentation is visible in Fig. 7(c). This shows that, under stable environmental conditions, a fast updating frequency causes wrong segmentation more easily than a slow one.

Fig. 6. Background images of frame 2234 (a: adaptive B/G updating; b: B/G updated every 30 frames; c: B/G updated every 10 frames)

Fig. 10. Occlusion and splitting of multiple objects (frames #1270, #1294, #1340)

Fig. 7. Result images of frame 2234 ((a), (b), (c))

Next, we demonstrated the situation of significant lighting

variation and this situation occurred in the later frames of this same video.InFig. 8(b),itoccurred wrongsegmentation but the other two method updated background fast enough to cope with lighting changes.

Fig. 8. Result images of frame 3300

C. Stopped Objects

There are often stopped objects or the moving background occurred during the tracking or monitoring. We propose a

featuretocopewiththesespecial situations andcanpresetthe

threshold of this feature to let stopped objects or the ghost

foreground be updated to background image. We

demonstratedtrackingresults ofstopped objects inFig 11.In

Fig. 1 1,there was acar(No.2)parked on campus originally and it intendedtoleave. Anothercar(No.3) intendedtopark

onthis campus.We canfindaghostobject(No.8) occurred in frame #1420 because the car (No.2) was regarded as a region of the background previously. In frame #1640, the system duplicated the region of ghost object into the background image when the feature was larger than the threshold. In

frame #1750, thecar(No.3)which hadparkedoncampuswas

alsoregardasthebackground.

_~~~~0_ _

InFig. 9(c),italso occurredsome wrong segmentations and the slowonestilltracked the wrong objects. These results prove thatour

proposed updating algorithm presents good balance and better performance among the normal environmentalconditions, gradual

change situations and rapid change situations.

: _ _~~~~~~~8

#900 #1100 #1300

(a) (b) (c)

Fig. 9. Background images of frame3392

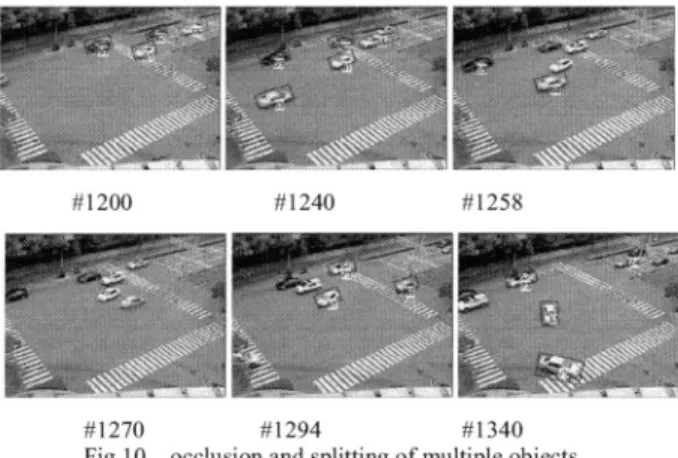

B. Occlusion and Splitting ofMultiple Objects

Ourproposed tracking algorithmcanhandle the occlusion and splitting eventsrobustly eventhrough there arevarious objectsor morethantwoobjects included the occlusion group. Thereare an occlusion offour vehicles occurredinFig. 10. No. 65 is anobject withanimplicit occlusion so oursystem

treats it as a single object. During frame #1258 to #1270, thosevehicles merged together. No. 73 was created as a new object becauseitsplitfrom anobjectwithimplicit occlusion. Then No. 68 and No. 61 vehicle had split from the occlusion group and was tracked with correct label. No. 62 didn't split fromtheocclusion group because it still merged with another objectwhen it left the camera's FoV.

#1420 #1580 #1580(B/G)

#1640 # I640(B/G) #1750(B/G)Fig.

1. Tracking results of stopped objects

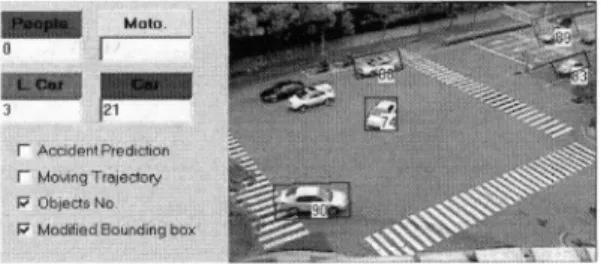

D. Behavior Analysis

We implement a simple but effective method to classify moving objects into four categories: people, motorcycles, cars, and large cars. This method requires correct camera calibration information.

Fig. 12 shows experimental results of object classification. Four counters display the accumulated number of moving objects in each category, and the bounding boxes of objects are drawn directly in category-specific colors.

Next, we use the estimated trajectories of the objects to predict traffic accidents. The results are shown in Fig. 13. When our system predicts an accident, an accident box and the two predicted trajectories are drawn in the tracking result images.

Because no real accident occurred in frame #128, the accident alarm disappears shortly afterwards in later frames.
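The trajectory-based prediction behind these results, (7) to (9) in Section II-D, can be sketched in Python as follows. The type II (same direction) case is omitted. The type I closest position is computed by projecting the stopped object onto the future path of the moving one, an assumed formulation. The single time-gap threshold `max_time_gap` is a hypothetical stand-in for the $Dist_{th}$-based bound in (9). Positions and velocities are `(x, y)` tuples in pixels and pixels per frame.

```python
import math


def relation_type(v1, v2):
    """Equation (7): 0 both stopped, 1 only one moving, 2 same direction, 3 different."""
    moving1, moving2 = v1 != (0, 0), v2 != (0, 0)
    if not moving1 and not moving2:
        return 0
    if moving1 != moving2:
        return 1
    if v1[0] * v2[1] - v1[1] * v2[0] == 0:        # parallel velocity vectors
        return 2
    return 3


def closest_distance(p_moving, v, p_static):
    """Type I: distance from a stopped object to the closest future point of the mover."""
    vv = v[0] ** 2 + v[1] ** 2
    t = max(0.0, ((p_static[0] - p_moving[0]) * v[0] + (p_static[1] - p_moving[1]) * v[1]) / vv)
    return math.hypot(p_static[0] - (p_moving[0] + t * v[0]),
                      p_static[1] - (p_moving[1] + t * v[1]))


def crossing_times(p1, v1, p2, v2):
    """Equation (8): times T1, T2 at which each object reaches the trajectory crossing."""
    a = v2[0] * v1[1] - v1[0] * v2[1]
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (v2[0] * dy - v2[1] * dx) / a
    t2 = (v1[0] * dy - v1[1] * dx) / a
    return t1, t2


def predict_crossing(p1, v1, p2, v2, max_time_gap):
    """Equation (9) for type III: the predicted accident position, or None."""
    t1, t2 = crossing_times(p1, v1, p2, v2)
    if t1 > 0 and t2 > 0 and abs(t1 - t2) < max_time_gap:
        return (t1 * v1[0] + p1[0], t1 * v1[1] + p1[1])
    return None
```

For example, an object at (0, 0) moving right at 1 pixel/frame and an object at (5, -5) moving down the image at 1 pixel/frame both reach (5, 0) after 5 frames, so `predict_crossing` reports an accident there.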

Fig. 13. Experimental results of accident prediction ((a) frame #126; (b) frame #128)

IV. CONCLUSIONS

Based on the proposed algorithms, we implemented a tracking system that includes object classification and accident prediction functions. Experiments were conducted on real-life traffic video of an intersection and on datasets from other surveillance research. We demonstrated robust object segmentation and successful tracking of various objects through occlusion and splitting events, and the system also extracted useful traffic parameters. In addition, the properties of moving objects and the results of behavior analysis are valuable for monitoring applications and other surveillance systems.

REFERENCES

[1] N. Friedman and S. Russell, "Image segmentation in video sequences: a probabilistic approach," in Proc. 13th Conference Uncertainty in Artificial

[2] L. Li, W. Huang, I. Y. H. Gu, and Q. Tian, "Statistical modeling of complex backgrounds for foreground object detection," IEEE Transactions on Image Processing, vol. 13, no. 11, pp. 1459-1472, Nov. 2004.

[3] W. Hu, T. Tan, L. Wang, and S. Maybank, "A survey on visual surveillance of object motion and behaviors," IEEE Transactions on Systems, Man, and Cybernetics-Part C: Applications and Reviews, vol. 34, no. 3, pp. 334-352, Aug. 2004.

[4] S. Gupte, O. Masoud, R. F. K. Martin, and N. P. Papanikolopoulos, "Detection and classification of vehicles," IEEE Transactions on Intelligent Transportation Systems, vol. 3, no. 1, pp. 37-47, Mar. 2002.

[5] A. Chachich, A. Pau, A. Barber, K. Kennedy, E. Olejniczak, J. Hackney, Q. Sun, and E. Mireles, "Traffic sensor using a color vision method," in Proc. of SPIE: Transportation Sensors and Controls: Collision Avoidance, Traffic Management, and ITS, vol. 2902, pp. 156-165, 1996.

[6] T. N. Tan, G. D. Sullivan, and K. D. Baker, "Model-based localization and recognition of road vehicles," International Journal of Computer Vision, vol. 27, no. 1, pp. 5-25, 1998.

[7] S. McKenna, S. Jabri, Z. Duric, A. Rosenfeld, and H. Wechsler, "Tracking groups of people," Computer Vision and Image Understanding, vol. 80, no. 1, pp. 42-56, 2000.

[8] R. Cucchiara, P. Mello, and M. Piccardi, "Image analysis and rule-based reasoning for a traffic monitoring system," IEEE Transactions on Intelligent Transportation Systems, vol. 1, no. 2, pp. 119-130, June 2000.

[9] H. Veeraraghavan, O. Masoud, and N. P. Papanikolopoulos, "Computer vision algorithms for intersection monitoring," IEEE Transactions on Intelligent Transportation Systems, vol. 4, no. 2, pp. 78-89, June 2003.

[10] Y. K. Jung and Y. S. Ho, "Traffic parameter extraction using video-based vehicle tracking," in Proc. of 1999 IEEE/IEEJ/JSAI International Conference on Intelligent Transportation Systems, 1999, pp. 764-769.

[11] P. Remagnino, T. Tan, and K. Baker, "Multi-agent visual surveillance of dynamic scenes," Image and Vision Computing, vol. 16, no. 8, pp. 529-532, 1998.

[12] M. M. Trivedi, I. Mikic, and G. Kogut, "Distributed video networks for incident detection and management," in Proc. of 2000 IEEE on Intelligent Transportation Systems, 2000, pp. 155-160.

[13] S. Kamijo, Y. Matsushita, K. Ikeuchi, and M. Sakauchi, "Traffic monitoring and accident detection at intersections," IEEE Transactions on Intelligent Transportation Systems, vol. 1, no. 2, pp. 108-118, June 2000.

[14] W. Hu, X. Xiao, D. Xie, T. Tan, and S. Maybank, "Traffic accident prediction using 3-D model-based vehicle tracking," IEEE Transactions on Vehicular Technology, vol. 53, no. 3, pp. 677-694, May 2004.

[15] ftp://pets2001.cs.rdg.ac.uk.