National Chiao Tung University

Institute of Electrical and Control Engineering

Doctoral Dissertation

Neural Fuzzy System Embedded with Particle Swarm Optimizer and Its Applications

Student: Miin-Tsair Su

Advisor: Prof. Chin-Teng Lin


A Dissertation

Submitted to the Institute of Electrical and Control Engineering

College of Electrical and Computer Engineering

National Chiao Tung University

in Partial Fulfillment of the Requirements

for the Degree of

Doctor of Philosophy

in

Electrical and Control Engineering

February 2012

Hsinchu, Taiwan, Republic of China

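Neural Fuzzy System Embedded with Particle Swarm Optimizer and Its Applications

Student: Miin-Tsair Su

Advisor: Dr. Chin-Teng Lin

Doctoral Program, Institute of Electrical and Control Engineering, National Chiao Tung University

Chinese Abstract

The evolutionary neural fuzzy system proposed in this dissertation is a functional-link-based neuro-fuzzy network (FLNFN) embedded with particle-swarm-based learning algorithms. This neuro-fuzzy network uses a functional link neural network as the consequent part of the fuzzy rules. Because the consequent part uses a nonlinear functional expansion to form arbitrarily complex decision boundaries, this local property of the consequent part in the FLNFN model allows a nonlinear combination of the input variables to approximate the target output more effectively. The dissertation consists of three major parts. In the first part, we propose an efficient immune-based particle swarm optimization (IPSO) learning method to solve the skin color detection problem. The proposed IPSO algorithm combines the immune algorithm (IA) and particle swarm optimization (PSO) to perform parameter learning. In the second part, another hybrid parameter learning algorithm, called bacterial foraging particle swarm optimization (BFPSO), is introduced for classification applications. The BFPSO algorithm performs local search through the chemotactic movement of BFO, while the global search over the entire search space is accomplished by PSO. In this way, it achieves the best balance between global exploration and local exploitation. In the third part, unlike the preceding hybrid approaches, we introduce a distance-based mutation operator to increase the population diversity of the swarm. This algorithm consists of structure learning and parameter learning. Structure learning uses an entropy measure to determine the required number of fuzzy rules. Parameter learning uses particle swarm optimization embedded with the distance-based mutation operator (DMPSO) to adjust the shapes of the membership functions and the corresponding weights of the consequent part. Finally, the PSO-based learning algorithms proposed in this dissertation are applied to various classification and control problems.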

Neural Fuzzy System Embedded with

Particle Swarm Optimizer and Its Applications

Student: Miin-Tsair Su

Advisor: Dr. Chin-Teng Lin

Institute of Electrical and Control Engineering

National Chiao Tung University

ABSTRACT

This dissertation proposes an evolutionary neural fuzzy system, designed using the functional-link-based neuro-fuzzy network (FLNFN) model embedded with PSO-based learning algorithms. The FLNFN model uses a functional link neural network as the consequent part of the fuzzy rules. The consequent part uses a nonlinear functional expansion to form arbitrarily complex decision boundaries. Thus, the local properties of the consequent part in the FLNFN model enable a nonlinear combination of input variables to approximate the target output more effectively. This dissertation consists of three major parts. In the first part, an efficient immune-based particle swarm optimization (IPSO) learning method is presented to solve the skin color detection problem. The proposed IPSO algorithm combines the immune algorithm (IA) and particle swarm optimization (PSO) to perform parameter learning. In the second part, another hybrid parameter learning algorithm, called bacterial foraging particle swarm optimization (BFPSO), is introduced for classification applications. The proposed BFPSO algorithm performs local search through the chemotactic movement of bacterial foraging optimization (BFO), whereas the global search over the entire search space is accomplished by a PSO operator. In this way, it balances exploration and exploitation. In the third part, instead of using hybrid techniques, a distance-based mutation operator is introduced to improve the population diversity. The learning algorithm consists of structure learning and parameter learning. The structure learning uses an entropy measure to determine the number of fuzzy rules. The parameter learning, based on distance-based mutation particle swarm optimization (DMPSO), adjusts the shape of the membership functions and the corresponding weights of the consequent part. Finally, the proposed PSO-based learning algorithms are applied to various classification and control problems. The results demonstrate the effectiveness of the proposed methods.

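Acknowledgement

There are too many people I need to thank along the road to my doctoral degree, above all my dissertation advisor, Dr. Chin-Teng Lin (林進燈). I thank him for the inspiration, encouragement, and companionship he gave me in academic research. Under his rich knowledge, diligent teaching, and rigorous supervision, I learned a great deal of valuable knowledge as well as the attitude one should have when handling matters. I would like to express my sincere gratitude and respect to him.

I thank the oral defense committee members, Prof. 陳文良, Prof. 楊谷洋, Prof. 張志永, Prof. 林正堅, and Assoc. Prof. 陳金聖, for taking time out of their busy schedules to take part in the oral defense and for their valuable suggestions and corrections, which made this dissertation more complete.

Pursuing a doctorate is truly a long and arduous journey. At times I could see no hope and even had to keep struggling in despair, not knowing when the light would appear, especially when submitted papers were rejected and graduation seemed out of reach. Although the future is also full of challenges, I hope this real experience has taught me that, even in difficult circumstances, I must keep striving, because only by striving is there hope.

During my research, I also especially thank my former doctoral classmate, Assistant Professor 陳政宏, now graduated, for his companionship all along the way. Our discussions and his suggestions on research often gave me new inspiration and broadened my thinking.

I also thank all my colleagues in the Information Media Laboratory at NCTU, especially 肇廷, 東霖, 勝智, 鎮宇, 哲銓, 耿維, …. Through constant mutual encouragement and in-depth discussion of research content and methods, my doctoral research went more smoothly; their diligence and hard work in particular set the standard I learned from.

I also thank my master's advisors, Dr. 陳文良 and the late Prof. 葉 莒, for their careful guidance, from which I benefited greatly in research and in conducting myself. Their teaching by word and example has deeply influenced me.

I thank 東捷科技股份有限公司 and 帆宣系統科技股份有限公司, where I worked during my doctoral studies, for providing a good working environment that allowed me to balance work and study and to complete my doctorate. I also thank Mr. 徐清朗 of 徐氏數學 and his wife, Mrs. 徐陳雪美, who provided me with excellent part-time work during the winter and summer vacations of my undergraduate and graduate years, so that I could concentrate on my coursework during the school terms and complete my bachelor's and master's degrees.

I thank my father and my late mother for raising us brothers and giving us a good education. I also thank my father-in-law and mother-in-law for their positive and affirming attitude toward my pursuing a doctorate while working, and for their support and encouragement, which let me concentrate on my research and complete the degree.

Over the years of my doctoral studies, my wife 怡君 often accompanied me to the library on weekends and holidays to study, look up references, and write, and she shouldered many household duties so that I could devote myself wholeheartedly to my dissertation research. I can never repay her.

Because space is limited, I cannot thank individually the many family members and relatives who cared for me, the teachers who taught me, the colleagues who helped me, and the friends who encouraged me. I express my heartfelt thanks to all of you here.

Finally, I dedicate this dissertation to my late mother, Mrs. 蘇黃惠美, to comfort her spirit in heaven.

閔財 (Miin-Tsair)

Information Media Laboratory, NCTU

July 10, 2012 (ROC year 101)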

Table of Contents

Chinese Abstract ... i

English Abstract ... iii

Acknowledgement ...v

Table of Contents ... vii

List of Tables... ix

List of Figures ...x

Chapter 1 Introduction ...1

1.1 Motivation...1 1.2 Literature Survey ...6 1.3 Organization of Dissertation ... 11Chapter 2 Structure of the Functional-Link-Based Neuro-Fuzzy Network

...14

Chapter 3 Immune Algorithm Embedded with Particle Swarm Optimizer

for Neuro-Fuzzy Classifier and Its Applications ...19

3.1 Basic Concepts of the Artificial Immune System ...20

3.2 Clonal Selection Theory ...21

3.3 The Efficient Immune-Based PSO Learning Algorithm...22

3.3.1 Code fuzzy rule into antibody...23

3.3.2 Determine the initial parameters by self-clustering algorithm ...25

3.3.3 Produce initial population ...25

3.3.4 Calculate affinity values ...26

3.3.5 Production of sub-antibodies ...26

3.3.6 Mutation of sub-antibodies based on PSO...27

3.3.7 Promotion and suppression of antibodies ...28

3.3.8 Elitism selection...30

3.4 Skin Color Detection...30

3.5 Concluding Remarks...34

Chapter 4 An Evolutionary Neural Fuzzy Classifier Using Bacterial

Foraging Oriented by Particle Swarm Optimization Strategy...37

4.1 Basic Concepts of Bacterial Foraging Optimization ...38

4.1.1 Chemotaxis ...38

4.1.2 Swarming ...39

4.1.3 Reproduction...40

4.1.4 Elimination-and-Dispersal ...40

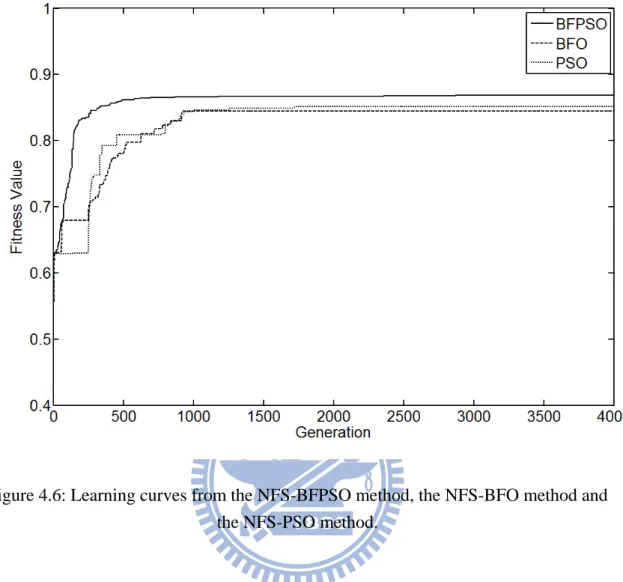

4.3 Illustrative Examples ...44

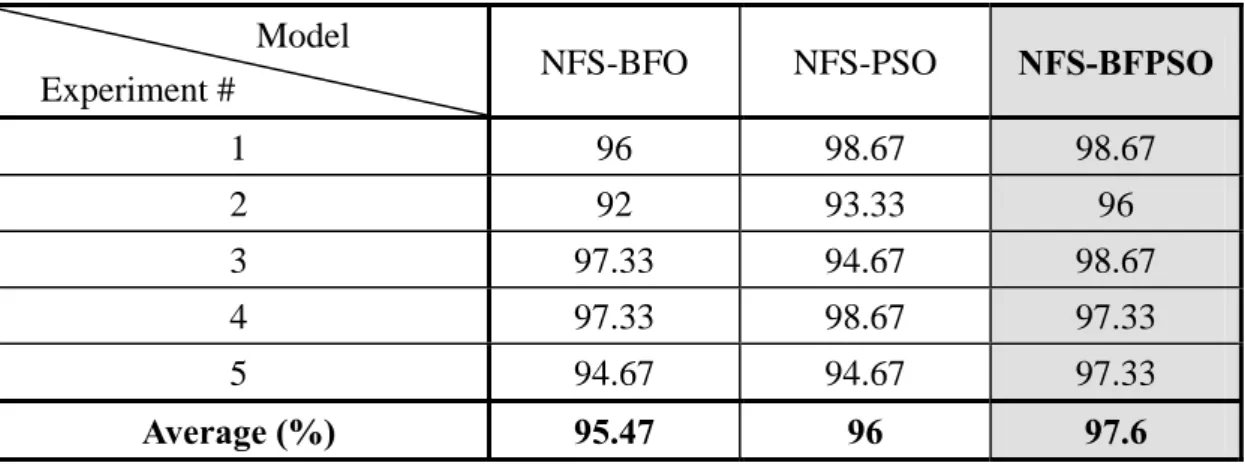

Example 1: Iris Data Classification ...45

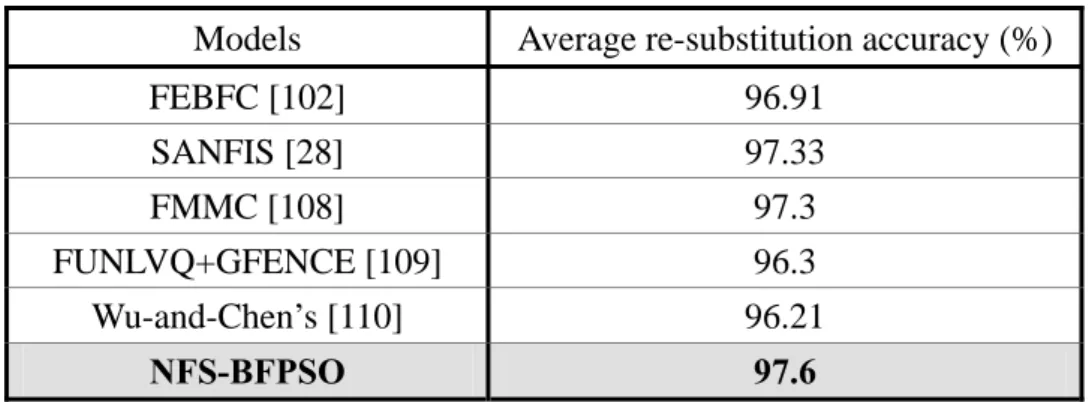

Example 2: Wisconsin Breast Cancer Diagnostic Data Classification ...52

Example 3: Skin Color Detection ...55

4.4 Concluding Remarks...57

Chapter 5 Nonlinear System Control Using Functional-Link-Based

Neuro-Fuzzy Network Model Embedded with Modified Particle Swarm

Optimizer ...59

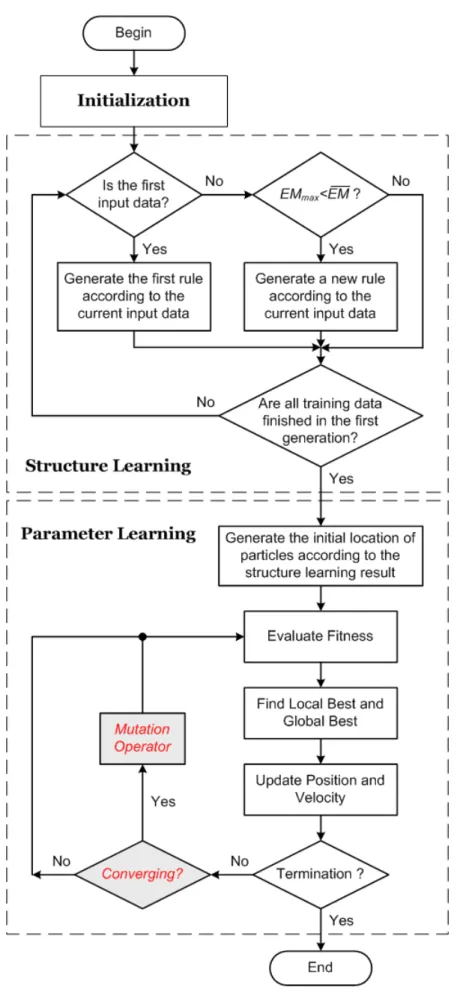

5.1 Learning Scheme for the FLNFN Model...60

5.2 Structure Learning Phase ...62

5.3 Parameter Learning Phase...64

5.4 Illustrative Examples ...67

Example 1: Multi-Input Multi-Output Plant Control...67

Example 2: Control of Backing Up the Truck ...70

Example 3: Control of Water Bath Temperature System...75

5.5 Concluding Remarks...81

Chapter 6 Comparisons and Discussions...83

6.1 Comparisons ...83

6.1.1 Skin Color Detection Using DMPSO ...83

6.1.2 Skin Color Detection Results Comparison with Different Approaches ...88

6.2 Discussions ...89

Chapter 7 Conclusions and Future Works ...91

Bibliography ...95

List of Tables

Table 3.1: The accuracy rate with different generations (%)

Table 3.2: Performance comparison with various existing models from the CIT database (Training data: 6000; Generations: 2000)

Table 4.1: Classification accuracy using various methods for the iris data.

Table 4.2: Average re-substitution accuracy comparison of various models for the iris data classification problem.

Table 4.3: Classification accuracy for the Wisconsin breast cancer diagnostic data.

Table 4.4: Average accuracy comparison of various models for Wisconsin breast cancer diagnostic data.

Table 4.5: Performance comparison with various existing models from the CIT database

Table 5.1: Performance comparison of the FLNFN-DMPSO, FLNFN-PSO, CNFC-ISEL, SEFC and MFS-SE controllers for the MIMO plant.

Table 5.2: Performance comparison of various controllers for the control of backing up the truck.

Table 5.3: Performance comparison of various controllers for the water bath temperature control system.

Table 6.1: Performance comparison with PSO and DMPSO methods from the CIT database (Training data: 6000; Generations: 2000)

Table 6.2: Performance comparison with various existing models from the CIT database (Training data: 6000; Generations: 2000)

Table 6.3: The roles of IA, BFO and PSO in the proposed learning algorithm.

List of Figures

Figure 1.1: The taxonomy of global optimization algorithms. ...5

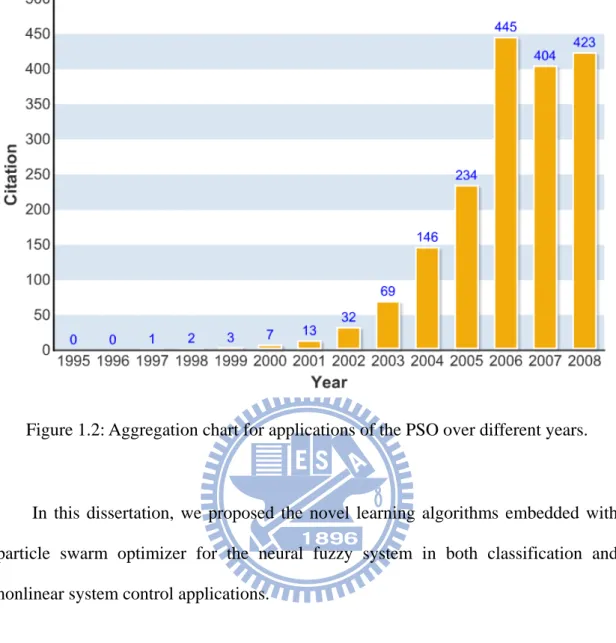

Figure 1.2: Aggregation chart for applications of the PSO over different years. ...6

Figure 1.3: Taxonomy of PSO. ...7

Figure 1.4: The variations of PSO. ...9

Figure 2.1: Structure of the selected neuro-fuzzy system model...16

Figure 3.1: The clonal selection principle...22

Figure 3.2: Flowchart of the proposed IPSO algorithm...24

Figure 3.3: Coding a neuro-fuzzy classifier into an antibody in the IPSO method. ....25

Figure 3.4: The flowchart of the mutation step...28

Figure 3.5: The coding of antibody population...29

Figure 3.6: Flowchart of the skin color detection system. ...31

Figure 3.7: The accuracy rate with different generations. ...32

Figure 3.8: The learning curves of the three methods using the CIT database...33

Figure 3.9: Original color images from CIT facial database. ...35

Figure 3.10: Results of skin color detection with 3 dimension input (Y, Cb and Cr)..36

Figure 4.1: Flowchart of proposed BFPSO method...44

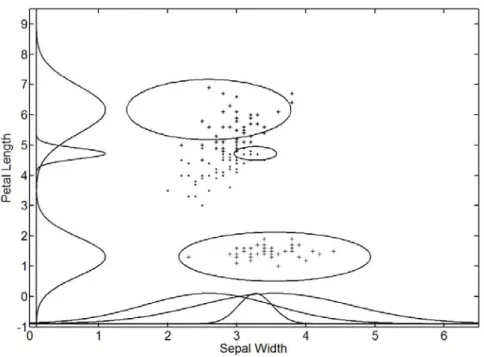

Figure 4.2: Iris data: iris sestosa (), iris versiolor (), and iris virginica (). ...47

Figure 4.3: The distribution of input training patterns and final assignment of three rules...50

Figure 4.4: Learning curves of the NFS-BFPSO method, the NFS-BFO method, and the NFS-PSO method...51

Figure 4.5: Input membership functions for breast cancer classification. ...53

Figure 4.6: Learning curves from the NFS-BFPSO method, the NFS-BFO method and the NFS-PSO method...54

Figure 4.7: The learning curves of the three methods using the CIT database...56

Figure 4.8: Original face images from CIT database...57

Figure 4.9: Results of skin color detection with 3 dimension input (Y, Cb, Cr)...57

Figure 5.1: Flowchart of the proposed learning scheme for the FLNFN model...61

Figure 5.2: Learning curves of best performance of the FLNFN-DMPSO, CNFC-ISEL, SEFC and MFS-SE in MIMO plant control. ...68

Figure 5.3: Desired (solid line) and model (dotted line) output generated by FLNFN-DMPSO in MIMO plant control. ...69

Figure 5.4: Errors of proposed FLNFN-DMPSO in MIMO plant control. ...69

Figure 5.5: Diagram of simulated truck and loading zone...71 Figure 5.6: Learning curves of best performance of the FLNFN-DMPSO,

CNFC-ISEL, SEFC and MFS-SE in control of backing up the truck. ...72

Figure 5.7: Trajectories of truck, starting at four initial positions under the control of the FLNFN-DMPSO after learning using training trajectories...74

Figure 5.8: Conventional training scheme. ...76

Figure 5.9: The regulation performance of the FLNFN-DMPSO controller for the water bath system...79

Figure 5.10: The behavior of the FLNFN-DMPSO controller under impulse noise for the water bath system...80

Figure 5.11: The behavior of the FLNFN-DMPSO controller when a change occurs in the water bath system dynamics. ...80

Figure 5.12: The tracking performance of the FLNFN-DMPSO controller for the water bath system...81

Figure 6.1: The learning curves of PSO and DMPSO methods using the CIT database. ...84

Figure 6.2: Original face images from CIT repository...85

Figure 6.3: Fitness maps generated by a well-trained FLNFN-DMPSO...86

Figure 6.4: Masks generated by a well-trained skin color classifier...87

Chapter 1

Introduction

1.1 Motivation

Fuzzy systems and neural networks have attracted the growing interest of

researchers in various scientific and engineering areas. The number and variety of applications of fuzzy systems and neural networks [1-6] have been increasing, ranging

from consumer products and industrial process control to medical instrumentation, information systems, and decision analysis.

Fuzzy systems are structured numerical estimators. They start from highly formalized insights about the structure of categories found in the real world and then

articulate fuzzy IF-THEN rules as a kind of expert knowledge. Fuzzy systems combine fuzzy sets with fuzzy rules to produce overall complex nonlinear behavior.

Neural networks, on the other hand, are trainable dynamical systems whose learning, noise-tolerance, and generalization abilities grow out of their connectionist structures,

their dynamics, and their distributed data representation. Neural networks have a large number of highly interconnected processing elements (nodes) which demonstrate the

ability to learn and generalize from training patterns or data; these simple processing elements also collectively produce complex nonlinear behavior.

The performance of fuzzy systems critically depends on the input and output membership functions, the fuzzy rules, and the fuzzy inference mechanism. On the other hand, the performance of neural networks depends on the computational function of the neurons in the network and on the structure and topology of the network. The advantages and disadvantages of fuzzy systems and neural networks are summarized as follows [7]:

The advantages of fuzzy systems are:

• capacity to represent the inherent uncertainties of human knowledge with linguistic variables;

• simple interaction between the domain expert and the engineer designing the system;

• easy interpretation of the results, because of the natural representation of the rules;

• easy extension of the knowledge base through the addition of new rules;

• robustness with respect to possible disturbances in the system.

The disadvantages of fuzzy systems are:

• inability to generalize: the system only answers what is written in its rule base;

• lack of robustness with respect to topological changes of the system, since such changes would demand alterations in the rule base;

• dependence on the existence of an expert to determine the logical inference rules.

The advantages of neural networks are:

• learning capacity;

• generalization capacity;

• robustness with respect to disturbances.

The disadvantages of neural networks are:

• difficulty in interpreting the functionality;

• difficulty in determining the number of layers and the number of neurons.

Hybrid neuro-fuzzy systems [8-34] possess the advantages of both neural networks (e.g., learning abilities, optimization abilities, and connectionist structures) and fuzzy systems (e.g., humanlike IF-THEN rule thinking and ease of incorporating expert knowledge). In this way, we can bring the low-level learning and computational power of neural networks into fuzzy systems, and the high-level humanlike IF-THEN rule thinking and reasoning of fuzzy systems into neural networks.

There are several different ways to develop hybrid neuro-fuzzy systems; because this is a recent research subject, each researcher has defined his or her own particular models. These models are similar in essence, but they present basic differences. The most popular neuro-fuzzy architectures include: 1) Fuzzy Adaptive Learning Control Network [8][20][21][29][35]; 2) Adaptive-Network-Based Fuzzy Inference System [24]; 3) Self-Constructing Neural Fuzzy Inference Network [25]; and 4) Functional-Link-Based Neuro-Fuzzy Network [32][33]. The advantages of combining neural networks and fuzzy inference systems are obvious [8][34-36]. The fusion of artificial neural networks and fuzzy inference systems has attracted the growing interest of researchers in various scientific and engineering areas because of the growing need for adaptive intelligent systems to solve real-world problems [8][9][19][20][24][25][30][33-38].

No matter which neuro-fuzzy architecture is chosen, training the parameters is the main problem in designing a neuro-fuzzy system. Backpropagation (BP) [20][24][25][32][35][38][39] training is commonly adopted to solve this problem. It is a powerful training technique that can be applied to networks with a feedforward structure. However, because BP training uses the steepest descent approach to minimize the error function, the algorithm may quickly converge to a local minimum and never find the global solution. This disadvantage leads to suboptimal performance, even for a favorable neuro-fuzzy system topology. Therefore, techniques that can train the system parameters and find the global solution while optimizing the overall structure are required.
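The local-minimum behavior of steepest descent can be seen on a toy problem. The following is a minimal sketch, not taken from the dissertation: the one-dimensional function `f`, the learning rate, and the starting points are all illustrative assumptions. Plain gradient descent ends in a different minimum depending on where it starts, and only one of the two is the global minimum.

```python
# Illustrative sketch: steepest descent on a 1-D function with two minima.
# The function, learning rate, and start points are assumptions chosen for
# demonstration; they do not come from the dissertation.

def f(x):
    """Toy error function with a global minimum near x = -1.30
    and a local minimum near x = 1.13."""
    return x ** 4 - 3.0 * x ** 2 + x


def grad_f(x):
    """Analytic derivative of f."""
    return 4.0 * x ** 3 - 6.0 * x + 1.0


def gradient_descent(x0, lr=0.01, steps=2000):
    """Plain steepest descent: repeatedly step against the gradient."""
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x


if __name__ == "__main__":
    for start in (2.0, -2.0):
        x_end = gradient_descent(start)
        print("start=%5.1f  end=%8.4f  f(end)=%8.4f" % (start, x_end, f(x_end)))
```

Starting from the right-hand side, the search settles in the shallower minimum and has no mechanism for leaving it, which is the motivation for the population-based global search methods discussed next.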

Figure 1.1 sketches a rough taxonomy of global optimization methods [40]. Generally, optimization algorithms can be divided into two basic classes: deterministic and probabilistic algorithms. Deterministic algorithms are most often used if a clear relation between the characteristics of the possible solutions and their utility for a given problem exists. Then, the search space can be explored efficiently using, for example, a divide-and-conquer scheme. If the relation between a solution candidate and its “fitness” is not so obvious or is too complicated, or the dimensionality of the search space is very high, it becomes harder to solve a problem deterministically. Trying to do so would possibly result in an exhaustive enumeration of the search space, which is not feasible even for relatively small problems. Then, probabilistic algorithms come into play.

An especially relevant family of probabilistic algorithms is the Monte Carlo-based approaches. They trade in guaranteed correctness of the solution for a

shorter runtime. This does not mean that the results obtained using them are incorrect - they may just not be the global optima. An important class of probabilistic Monte

Carlo metaheuristics is evolutionary computation (EC). It encompasses all algorithms that are based on a set of multiple solution candidates (called population) which are

iteratively refined. This field of optimization is also a class of soft computing as well as a part of the artificial intelligence area. Some of its most important members are

evolutionary algorithms (EAs) and swarm intelligence (SI).

Particle swarm optimization (PSO), developed by Kennedy and Eberhart in 1995 [41-43], is a relatively new technique. Although PSO shares many similarities with evolutionary computation techniques, the standard PSO does not use evolution

operators such as crossover and mutation. PSO emulates the swarm behavior of insects, animals herding, birds flocking, and fish schooling where these swarms

search for food in a collaborative manner. Each member in the swarm adapts its search patterns by learning from its own experience and other members’ experiences.

Deterministic: State Space Search; Branch and Bound; Algebraic Geometry.

Probabilistic (Monte Carlo Algorithms): (Stochastic) Hill Climbing; Random Optimization; Simulated Annealing (SA); Tabu Search (TS); Parallel Tempering; Stochastic Tunneling; Direct Monte Carlo Sampling; Evolutionary Computation (EC).

Evolutionary Computation (EC): Evolutionary Algorithms (EA); Swarm Intelligence (SI); Memetic Algorithms; Harmonic Search (HS).

Evolutionary Algorithms (EA): Genetic Algorithms (GA); Learning Classifier System (LCS); Evolutionary Programming; Evolutionary Strategy (ES); Genetic Programming (GP), comprising Standard Genetic Programming, Linear Genetic Programming, and Grammar Guided Genetic Programming; Differential Evolution (DE).

Swarm Intelligence (SI): Ant Colony Optimization (ACO); Particle Swarm Optimization (PSO).

Overlapping areas marked in the figure: Soft Computing; Artificial Intelligence (AI); Computational Intelligence (CI).

Figure 1.1: The taxonomy of global optimization algorithms.

During the past several years, PSO has been successfully applied to a diverse set of optimization problems, such as multidimensional optimization problems [44], multi-objective optimization problems [45-47], classification problems [48][49], and feedforward neural network design [39][50-53]. An aggregation chart of the applications of PSO over different years is shown in Figure 1.2.

Figure 1.2: Aggregation chart for applications of the PSO over different years.

In this dissertation, we propose novel learning algorithms embedded with a particle swarm optimizer for the neural fuzzy system, in both classification and nonlinear system control applications.

1.2 Literature Survey

The underlying motivation for the development of the PSO algorithm is the social behavior of animals, such as bird flocking, fish schooling, and swarm theory. PSO simulates this social behavior as a flock of birds searching for food in an area. Each bird flies according to self-cognition and social information. Self-cognition is the generalization produced by past experience. The social information is the message shared within the flock, which gives each bird access to the knowledge of the others. A taxonomy of PSO is shown in Figure 1.3 [54].

In PSO, a member in the swarm, called a particle, represents a potential solution

which is a point in the search space. The global optimum is regarded as the location of food. Each particle has a fitness value and a velocity to adjust its flying direction

according to the best experiences of the swarm to search for the global optimum in the solution space [55].

In the original PSO algorithm, the particles are manipulated according to the following equations:

$$v_{id}^{t} = v_{id}^{t-1} + c_1 r_1 \left(p_{id}^{t-1} - x_{id}^{t-1}\right) + c_2 r_2 \left(p_{gd}^{t-1} - x_{id}^{t-1}\right) \tag{1.1}$$

$$x_{id}^{t} = x_{id}^{t-1} + v_{id}^{t} \tag{1.2}$$

Here $x_{id}^{t}$ and $v_{id}^{t}$ are the $d$th dimensional components of the position and velocity of the $i$th particle at time step $t$; $p_{id}^{t}$ is the $d$th component of the best (fitness) position the $i$th particle has achieved by time step $t$; and $p_{gd}^{t}$ is the $d$th component of the global best position achieved in the population by time step $t$. The constants $c_1$ and $c_2$ are known as the “cognition” and “social” factors, respectively, as they control the relative strengths of the individual behavior of each particle and the collective behavior of all particles. Finally, $r_1$ and $r_2$ are two different random numbers in the range of 0 to 1 and are used to enhance the exploratory nature of the PSO.
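The update rules in Eqs. (1.1) and (1.2) can be sketched in a few lines of code. The following is a minimal illustration under stated assumptions, not the dissertation's implementation: the function name `pso_minimize`, the swarm size, the number of steps, the test function `sphere`, and in particular the velocity clamp `v_max` are all hypothetical choices. The clamp is not part of Eqs. (1.1) and (1.2); it is added here only to keep the velocities of this inertia-free form bounded.

```python
import random


def sphere(x):
    """Illustrative fitness function (to be minimized); not from the source."""
    return sum(xi * xi for xi in x)


def pso_minimize(f, dim, bounds, n_particles=20, n_steps=200,
                 c1=2.0, c2=2.0, v_max=None, seed=0):
    """Sketch of the original gbest PSO, Eqs. (1.1)-(1.2), for minimization.

    Returns the best position, its fitness, and the best fitness per step.
    """
    rng = random.Random(seed)
    lo, hi = bounds
    if v_max is None:
        v_max = 0.2 * (hi - lo)  # assumption: clamp, not part of Eq. (1.1)

    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    p = [xi[:] for xi in x]            # personal best positions p_i
    p_val = [f(xi) for xi in x]        # personal best fitness values
    g = min(range(n_particles), key=lambda i: p_val[i])
    g_pos, g_val = p[g][:], p_val[g]   # global best p_g

    history = []
    for _ in range(n_steps):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Eq. (1.1): cognition term plus social term
                v[i][d] = (v[i][d]
                           + c1 * r1 * (p[i][d] - x[i][d])
                           + c2 * r2 * (g_pos[d] - x[i][d]))
                v[i][d] = max(-v_max, min(v_max, v[i][d]))
                # Eq. (1.2): move the particle with its new velocity
                x[i][d] = x[i][d] + v[i][d]
            val = f(x[i])
            if val < p_val[i]:
                p[i], p_val[i] = x[i][:], val
                if val < g_val:        # global best updated immediately
                    g_pos, g_val = x[i][:], val
        history.append(g_val)
    return g_pos, g_val, history


if __name__ == "__main__":
    best_pos, best_val, _ = pso_minimize(sphere, dim=2, bounds=(-5.0, 5.0))
    print(best_pos, best_val)
```

The best fitness recorded in `history` can never increase from one step to the next, because the global best is replaced only by a strictly better position.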

The two main models of the PSO algorithm, called gbest (global best) and lbest (local best), differ in the way they define the particle neighborhood. Kennedy and Poli [43][56] showed that the gbest model has a high convergence speed but a higher chance of getting stuck in local optima. In contrast, the lbest model is less likely to become trapped in local optima but converges more slowly than gbest.
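The difference between the two neighborhood definitions can be illustrated with a short sketch. It assumes a ring topology for lbest, which is one common choice and is not specified in the text; the function names and the neighborhood radius `k` are hypothetical. Fitness is minimized.

```python
def global_best(pbest_values):
    """gbest model: every particle is attracted to the single best
    personal-best in the whole swarm (minimization)."""
    return min(range(len(pbest_values)), key=lambda j: pbest_values[j])


def ring_neighborhood_best(pbest_values, k=1):
    """lbest model on a ring (an assumed topology): particle i only sees
    itself and its k neighbors on each side, with wrap-around indices."""
    n = len(pbest_values)
    best = []
    for i in range(n):
        neighbors = [(i + offset) % n for offset in range(-k, k + 1)]
        best.append(min(neighbors, key=lambda j: pbest_values[j]))
    return best


if __name__ == "__main__":
    values = [5.0, 1.0, 4.0, 3.0, 0.5, 6.0]
    print(global_best(values))             # index of the swarm-wide best
    print(ring_neighborhood_best(values))  # one attractor index per particle
```

With lbest, particles 0 to 2 are drawn toward particle 1 while particles 3 to 5 are drawn toward particle 4, so information about the swarm-wide best spreads gradually around the ring. This is consistent with the slower convergence and lower risk of premature convergence described above.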

Many researchers have worked on improving the performance of PSO in various ways, thereby deriving many interesting variants, as shown in Figure 1.4 [54].

Variants listed in the figure, with year: A Parallel Vector-Based PSO (2005); Active target PSO (2008); Adaptive Dissipative PSO (2007); Adaptive Mutation PSO (2008); Adaptive PSO (2002); Adaptive PSO Guided by acceleration information (2006); Angle Modulated PSO (2005); Area Extension PSO (2007); Attractive-Repulsive PSO (2002); Basic PSO (1995); Behavior of distance PSO (2007); Best rotation PSO (2007); Binary PSO (1997); Chaos PSO (2006); Combinatorial PSO (2007); Comprehensive Learning PSO (2006); Constrained Optimization Via PSO (2007); Cooperative Co-evolutionary PSO (2008); Cultural Based PSO (2005); Discrete PSO (1997); Dissipative PSO (2002); Divided Range PSO (2004); Double-structure coding Binary PSO (2007); Dual Layered PSO (2007); Dynamic & Adjustable PSO (2007); Dynamic Double PSO (2004); Dynamic Neighborhood PSO (2003); Estimation of Distribution PSO (2007); Evolutionary Iteration PSO (2007); Evolutionary Programming PSO (2007); Evolutionary PSO (2002); Exploring Extended PSO (2005); Extended PSO (2005); Fast PSO (2007); Fully informed PSO (2004); Fuzzy PSO (2001); Genetic Binary PSO (2006); Genetic PSO (2006); Geometric PSO (2008); Greedy PSO (2007); Gregarious PSO (2006); Heuristic PSO (2007); Hierarchical Recursive-based PSO (2005); Hybrid Discrete PSO (2006); Hybrid Gradient PSO (2004); Hybrid Taguchi PSO (2006); Immune PSO (2008); Improved PSO (2006); Interactive PSO (2005); Map-Reduce PSO (2007); Modified Binary PSO (2007); Modified GPSO (2008); Nbest PSO (2002); Neural PSO (2005); New PSO (2006); Niche PSO (2002); Novel Hybrid PSO (2007); Novel PSO (2008); Optimized PSO (2006); Orthogonal PSO (2008); Parallel Asynchronous PSO (2006); Perturbation PSO (2005); Predator Prey PSO (2007); PSO with Craziness and Hill Climbing (2006); PSO with Passive Congregation (2004); Pursuit-Escape PSO (2008); Quadratic Interpolation PSO (2007); Quantum Delta PSO (2004); Quantum PSO (2004); Quantum-Inspired PSO (2004); Restricted Velocity PSO (2006); Self-adaptive velocity PSO (2008); Simulated Annealing PSO (2004); Spatial Extension PSO (2002); Special Extension PSO (2006); Species Based PSO (2004); Sub-Swarms PSO (2007); Trained PSO (2007); Two-dimensional Otsu PSO (2007); Two-Swarm PSO (2006); Unconstrained PSO (2006); Variable Neighborhood PSO (2006); Vector Limited PSO (2008); Velocity Limited PSO (2006); Velocity Mutation PSO (2008); Vertical PSO (2007); ...

Augmented Lagrangian PSO (2006); Cooperative Multiple PSO (2007); Escape Velocity PSO (2006); Gaussian PSO (2003); Hybrid Recursive PSO (2007); New PSO (2005); Principal Component PSO (2005); Self-Organization PSO (2006); Unified PSO (2004).

Category labels in the figure: Continuous; Binary; Discrete.

Figure 1.4: The variations of PSO.

One of the variants introduces a parameter called inertia weight (w) into the

original PSO algorithms [56-58], and Eq. (1.1) can be rewritten as follows:

$$v_{id}^{t} = w\, v_{id}^{t-1} + c_1 r_1 \left(p_{id}^{t-1} - x_{id}^{t-1}\right) + c_2 r_2 \left(p_{gd}^{t-1} - x_{id}^{t-1}\right) \tag{1.3}$$

The inertia weight is used to balance the global and local search abilities. A large inertia weight is more appropriate for global search, and a small inertia weight facilitates local search. A linearly decreasing inertia weight over the course of the search was proposed by Shi and Eberhart [58]. Parameters in PSO are discussed in [59]. Shi and Eberhart also designed fuzzy methods to change the inertia weight nonlinearly [60]. In addition to the time-varying inertia weight, a linearly decreasing $v_{max}$ is introduced in [62]. By analyzing the convergence behavior of the PSO, Clerc and Kennedy introduced a PSO variant with a constriction factor [63]. The constriction factor guarantees convergence and improves the convergence speed.
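The two parameter schemes mentioned above can be sketched as small helper functions. This is an illustration under assumptions: the start and end values 0.9 and 0.4 for the inertia weight and the setting c1 = c2 = 2.05 are commonly quoted defaults, not values taken from this dissertation, and the closed form used for the constriction factor is the widely cited one, χ = 2 / |2 − φ − sqrt(φ² − 4φ)| with φ = c1 + c2 > 4. The function names are hypothetical.

```python
import math


def linear_inertia(t, t_max, w_start=0.9, w_end=0.4):
    """Linearly decreasing inertia weight w for step t of t_max.
    The default end points are assumed, commonly used values."""
    return w_start - (w_start - w_end) * (t / float(t_max))


def constriction_factor(c1=2.05, c2=2.05):
    """Constriction factor chi = 2 / |2 - phi - sqrt(phi^2 - 4*phi)|,
    defined for phi = c1 + c2 > 4."""
    phi = c1 + c2
    if phi <= 4.0:
        raise ValueError("the constriction factor requires c1 + c2 > 4")
    return 2.0 / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))


if __name__ == "__main__":
    print([round(linear_inertia(t, 100), 3) for t in (0, 50, 100)])
    print(round(constriction_factor(), 4))
```

In a constricted swarm the whole right-hand side of the velocity update is multiplied by χ, whereas in Eq. (1.3) only the previous velocity is scaled by $w$.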

Improving PSO’s performance by designing different types of topologies has

been an active research direction. Kennedy [64][65] claimed that PSO with a small neighborhood might perform better on complex problems, while PSO with a large

neighborhood would perform better on simple problems. Suganthan [66] applied a dynamically adjusted neighborhood where the neighborhood of a particle gradually

increases until it includes all particles. In [67], Hu and Eberhart also used a dynamic neighborhood where closest particles in the performance space are selected to be its

new neighborhood in each generation. Parsopoulos and Vrahatis combined the global version and local version together to construct a unified particle swarm optimizer

(UPSO) [68][69]. Mendes and Kennedy introduced a fully informed PSO in [70]. Instead of using the pbest and gbest positions in the standard algorithm, all the

neighbors of the particle are used to update the velocity. The influence of each particle to its neighbors is weighted based on its fitness value and the neighborhood

size. Veeramachaneni et al. developed the fitness-distance-ratio-based PSO (FDR-PSO) with near neighbor interactions [71]. When updating each velocity

dimension, the FDR-PSO algorithm selects one other particle nbest, which has a higher fitness value and is nearer to the particle being updated.

Some researchers investigated hybridization by combining PSO with other search techniques to improve the performance of the PSO. Evolutionary operators such as selection, crossover, and mutation have been introduced to the PSO to keep the best particles [72], to increase the diversity of the population, and to improve the ability to escape local minima. Mutation operators have also been used to mutate parameters such as the inertia weight [74]. Relocating the particles when they are too

close to each other [75] or using some collision-avoiding mechanisms [76] to prevent particles from moving too close to each other in order to maintain the diversity and to

escape from local optima has also been used. In [73], the swarm is divided into subpopulations, and a breeding operator is used within a subpopulation or between the

subpopulations to increase the diversity of the population. Negative entropy is used to discourage premature convergence in [77]. In [78], deflection, stretching, and

repulsion techniques are used to find as many minima as possible by preventing particles from moving to a previously discovered minimal region. Recently, a

cooperative particle swarm optimizer (CPSO-H) [79] was proposed. Although CPSO-H uses one-dimensional (1-D) swarms to search each dimension separately, the

results of these searches are integrated by a global swarm to significantly improve the performance of the original PSO on multimodal problems.

From our review of the state of the art, we noticed two tendencies: 1) PSO variants are mostly extended with additional operators (e.g., a mutation operator) and mechanisms (e.g., “fly-back”, multi-swarms, co-evolution), and 2) PSO variants are merged into a single algorithm in order to improve performance. Therefore, in this dissertation, we present three novel PSO-based learning algorithms for neuro-fuzzy systems that follow these two tendencies.

1.3 Organization of Dissertation

The overall objective of this dissertation is to develop novel learning algorithms embedded with a particle swarm optimizer for neuro-fuzzy systems. In this research, we take the functional-link-based neuro-fuzzy network (FLNFN) model as an example to demonstrate the performance of the proposed learning algorithms. The organization and objectives of each chapter in this dissertation are as follows.

In Chapter 2, we describe the structure of the FLNFN model. The FLNFN model is based on our laboratory’s previous research [32]. Each fuzzy rule corresponds to a

sub-FLNN [80-82] comprising a functional expansion of input variables. The functional link neural network (FLNN) is a single layer neural structure capable of

forming arbitrarily complex decision regions by generating nonlinear decision boundaries with nonlinear functional expansion. Therefore, the consequent part of the

FLNFN model is a nonlinear combination of input variables, which differs from the other existing models [20][24][25].
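A functional expansion of this kind can be sketched as follows. The trigonometric basis used here (each input x mapped to x, sin(πx), cos(πx)) is an assumption made for illustration, since this section does not state which basis functions the FLNFN model uses; the function names and weights are likewise hypothetical.

```python
import math


def functional_expansion(x):
    """Expand each input x_i into [x_i, sin(pi*x_i), cos(pi*x_i)].
    The trigonometric basis is an assumed example of a functional expansion."""
    phi = []
    for xi in x:
        phi.extend([xi, math.sin(math.pi * xi), math.cos(math.pi * xi)])
    return phi


def flnn_output(x, weights):
    """Single-layer functional link output: a weighted sum of the expanded
    inputs, i.e. a nonlinear combination of the original input variables."""
    phi = functional_expansion(x)
    if len(weights) != len(phi):
        raise ValueError("expected %d weights, got %d" % (len(phi), len(weights)))
    return sum(w * p for w, p in zip(weights, phi))


if __name__ == "__main__":
    x = [0.5, 0.0]
    print(functional_expansion(x))
    print(flnn_output(x, [1.0] * 6))
```

Because the nonlinearity sits in the fixed basis functions, the output is linear in the weights, which are the consequent-part parameters that a learning algorithm would adjust.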

In Chapter 3, we propose an efficient immune-based particle swarm optimization (IPSO) algorithm for neuro-fuzzy classifiers to solve the skin color detection problem. The proposed IPSO algorithm combines the immune algorithm (IA) and PSO to perform parameter learning. The IA uses the clonal selection principle to act on antibodies that are highly similar to one another; after this process the antibodies have higher quality, which accelerates the search and increases the global search capacity. In addition, we employ the advantages of PSO to improve the mutation mechanism of the IA. Simulations have been conducted to show the performance and applicability of the proposed method.

In Chapter 4, we present an evolutionary neural fuzzy classifier, designed using the neural fuzzy system (NFS) and a new evolutionary learning algorithm. This new evolutionary learning algorithm is based on a hybrid of bacterial foraging optimization (BFO) and PSO, and is thus called bacterial foraging particle swarm optimization (BFPSO). The proposed BFPSO method performs local search through the chemotactic movement operation of BFO, whereas the global search over the entire search space is accomplished by a particle swarm operator. The proposed NFS with the BFPSO learning algorithm (NFS-BFPSO) is adopted in several classification applications. Experimental results demonstrate that the proposed NFS-BFPSO method can outperform other methods.

In Chapter 5, we present an evolutionary NFS for nonlinear system control. A

supervised learning algorithm, which consists of structure learning and parameter learning, is presented. The structure learning depends on the entropy measure to

determine the number of fuzzy rules. The parameter learning, based on the PSO algorithm, can adjust the shape of the membership function and the corresponding

weighting of the FLNN. A distance-based mutation operator is introduced; it strongly encourages a global search and gives the particles more chance of converging to the global optimum. The simulation results show that the proposed method improves the search ability and is well suited to nonlinear system control applications.

In Chapter 6, we compare the performance of the proposed learning algorithms using the skin color detection problem and briefly discuss the proposed learning methods.

Chapter 2

Structure of the Functional-Link-Based

Neuro-Fuzzy Network

In the field of artificial intelligence, neural networks are essentially low-level

computational structures and algorithms that offer good performance when they deal with sensory data. However, it is difficult to understand the meaning of each neuron

and each weight in the networks. Generally, fuzzy systems are easy to appreciate because they use linguistic terms and IF-THEN rules. However, they lack the learning

capacity to fine-tune fuzzy rules and membership functions. Therefore, neuro-fuzzy networks combine the benefits of neural networks and fuzzy systems to solve many

engineering problems.

In [83], a hybrid neuro-fuzzy system is defined as follows: “A hybrid

neuro-fuzzy system is a fuzzy system that uses a learning algorithm based on gradients or inspired by the neural networks theory (heuristic learning strategies) to

determine its parameters (fuzzy sets and fuzzy rules) through the patterns processing (input and output)”. In other words, neuro-fuzzy networks bring the low-level

learning and computational power of neural networks into fuzzy systems and give the high-level human-like thinking and reasoning of fuzzy systems to neural networks.

Recently, neuro-fuzzy networks have become popular topics of research. The advantages of a combination of neural networks and fuzzy inference systems are

obvious [8][34-36]. They not only have attracted considerable attention due to their diverse applications in fields such as pattern recognition, image processing, prediction,

expressions. The most popular neuro-fuzzy architectures include: 1) Fuzzy Adaptive

Learning Control Network (FALCON) [8][20][21][29][35]; 2) Adaptive-Network-Based Fuzzy Inference System (ANFIS) [24]; 3)

Self-Constructing Neural Fuzzy Inference Network (SONFIN) [25]; and 4) Functional-Link-Based Neuro-Fuzzy Network (FLNFN) [32][33].

In this dissertation, the selected NFS model is based on our laboratory’s previous research [32][33], called FLNFN. Figure 2.1 presents the structure of the FLNFN

model, which combines a neuro-fuzzy network with an FLNN [80-82]. The FLNN [81][84] is a single-layer neural structure capable of forming arbitrarily complex decision regions by generating nonlinear decision boundaries through nonlinear functional expansion. Moreover, the FLNN has been used for function approximation and pattern classification with a faster convergence rate and a lower computational load than a multilayer neural network. In the selected FLNFN model, each fuzzy rule corresponds to an FLNN comprising a functional expansion of the input variables, which differs from other existing models [20][24][25].

The FLNFN model realizes a fuzzy IF-THEN rule in the following form.

Rule $j$: IF $\hat{x}_1$ is $A_{1j}$ and $\hat{x}_2$ is $A_{2j}$ … and $\hat{x}_i$ is $A_{ij}$ … and $\hat{x}_N$ is $A_{Nj}$

THEN

$$\hat{y}_j = \sum_{k=1}^{M} w_{kj}\phi_k = w_{1j}\phi_1 + w_{2j}\phi_2 + \cdots + w_{Mj}\phi_M \tag{2.1}$$

where $\hat{x}_i$ and $\hat{y}_j$ are the input and local output variables, respectively; $A_{ij}$ is the linguistic term of the precondition part with a Gaussian membership function; $N$ is the number of input variables; $w_{kj}$ is the link weight of the local output; $\phi_k$ is the basis trigonometric function of input variables; $M$ is the number of basis functions; and Rule $j$ is the $j$th fuzzy rule.

Figure 2.1: Structure of the selected neuro-fuzzy system model.

The operation functions of the nodes in each layer of the FLNFN model are now described. In the following description, $u^{(l)}$ denotes the output of a node in the $l$th layer.

Layer 1 (Input Node): No computation is performed in this layer. Each node in this layer is an input node, which corresponds to one input variable, and only transmits input values to the next layer directly:

$$u_i^{(1)} = \hat{x}_i \tag{2.2}$$

Layer 2 (Membership Function Node): Each node in this layer corresponds to one linguistic label of the input variables in layer 1. Therefore, the calculated membership value specifies the degree to which an input value belongs to a fuzzy set in layer 2. The implemented Gaussian membership function in layer 2 is

$$u_{ij}^{(2)} = \exp\left(-\frac{[u_i^{(1)} - m_{ij}]^2}{\sigma_{ij}^2}\right) \tag{2.3}$$

where $m_{ij}$ and $\sigma_{ij}$ are the mean and standard deviation of the Gaussian membership function, respectively, of the $j$th term of the $i$th input variable $\hat{x}_i$.

Layer 3 (Rule Node): Nodes in this layer represent the precondition part of a fuzzy logic rule. They receive one-dimensional membership degrees of the associated rule from the nodes of a set in layer 2. Here, the product operator described above is adopted to perform the IF-condition matching of the fuzzy rules. As a result, the output function of each inference node is

$$u_j^{(3)} = \prod_i u_{ij}^{(2)} \tag{2.4}$$

where the $\prod_i u_{ij}^{(2)}$ of a rule node represents the firing strength of its corresponding rule.

Layer 4 (Consequent Node): Nodes in this layer are called consequent nodes. The input to a node in layer 4 is the output from layer 3, and the other inputs are nonlinear combinations of input variables from an FLNN, as shown in Figure 2.1. For such a node,

$$u_j^{(4)} = u_j^{(3)} \cdot \sum_{k=1}^{M} w_{kj}\phi_k \tag{2.5}$$

where $w_{kj}$ is the corresponding link weight of the FLNN and $\phi_k$ is the functional expansion of input variables. For computational efficiency, the functional expansion uses a trigonometric polynomial basis function, given by

$$[\phi_1, \phi_2, \phi_3, \phi_4, \phi_5, \phi_6] = [\hat{x}_1, \sin(\pi\hat{x}_1), \cos(\pi\hat{x}_1), \hat{x}_2, \sin(\pi\hat{x}_2), \cos(\pi\hat{x}_2)]$$

for the two-dimensional input variables $[\hat{x}_1, \hat{x}_2]$. Therefore, $M$ is the number of basis functions, $M = 3 \times N$, where $N$ is the number of input variables. Moreover, the number of output nodes of the FLNN depends on the number of fuzzy rules of the FLNFN model.

Layer 5 (Output Node): Each node in this layer corresponds to a single output variable. The node integrates all of the actions recommended by layers 3 and 4 and acts as a center of area (COA) defuzzifier with

$$y = u^{(5)} = \frac{\sum_{j=1}^{R} u_j^{(4)}}{\sum_{j=1}^{R} u_j^{(3)}} = \frac{\sum_{j=1}^{R} u_j^{(3)}\left(\sum_{k=1}^{M} w_{kj}\phi_k\right)}{\sum_{j=1}^{R} u_j^{(3)}} = \frac{\sum_{j=1}^{R} u_j^{(3)}\hat{y}_j}{\sum_{j=1}^{R} u_j^{(3)}} \tag{2.6}$$

where $R$ is the number of fuzzy rules, and $y$ is the output of the FLNFN model.

As described above, the number of tuning parameters for the FLNFN model is known to be $(2 + 3 \times P) \times N \times R$, where $N$, $R$, and $P$ denote the number of inputs, existing rules, and outputs, respectively.
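The five layers described in this chapter can be sketched in a few lines of NumPy. This is a minimal illustration for a single-output network, not the implementation used in our experiments; the function names `functional_expansion` and `flnfn_forward` and the array layout (`m` and `sigma` of shape N×R, `w` of shape M×R) are assumptions made for this example, as is the factor π inside the trigonometric basis.

```python
import numpy as np

def functional_expansion(x):
    """Trigonometric basis [x_i, sin(pi*x_i), cos(pi*x_i)] per input, so M = 3N."""
    x = np.asarray(x, dtype=float)
    # Rows are inputs, columns are the three basis terms; ravel keeps them grouped per input.
    return np.column_stack([x, np.sin(np.pi * x), np.cos(np.pi * x)]).ravel()

def flnfn_forward(x, m, sigma, w):
    """Forward pass of a single-output FLNFN (assumed layout).

    x:     (N,)   input vector (layer 1)
    m:     (N, R) Gaussian means
    sigma: (N, R) Gaussian standard deviations
    w:     (M, R) FLNN link weights, M = 3N
    """
    x = np.asarray(x, dtype=float)
    u2 = np.exp(-((x[:, None] - m) ** 2) / sigma ** 2)  # layer 2: membership degrees
    u3 = np.prod(u2, axis=0)                            # layer 3: firing strength per rule
    y_local = functional_expansion(x) @ w               # local outputs of the R sub-FLNNs
    u4 = u3 * y_local                                   # layer 4: consequent nodes
    return u4.sum() / u3.sum()                          # layer 5: COA defuzzifier
```

With N inputs, R rules and one output, the arrays hold 2NR + 3NR = 5NR values, which agrees with the parameter count (2 + 3P) × N × R for P = 1.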

Chapter 3

Immune Algorithm Embedded with

Particle Swarm Optimizer for

Neuro-Fuzzy Classifier and Its

Applications

Skin color detection is the process of finding skin-colored pixels and regions in

an image or a video. This process is typically used as a preprocessing step to find regions that potentially have human faces and limbs in images. Several computer

vision approaches have been developed for skin color detection. A skin color detector typically transforms a given pixel into an appropriate color space and then uses a skin color classifier to label the pixel as skin or non-skin. A skin color classifier defines a decision boundary of the skin color class in the color space based

on a training database of skin-colored pixels.

This chapter presents an efficient immune-based particle swarm optimization (IPSO) algorithm for neuro-fuzzy classifiers to solve the skin color detection problem. The proposed IPSO algorithm combines the immune algorithm (IA) and particle swarm optimization (PSO) to perform parameter learning. The IA uses the clonal selection principle to act on antibodies that are highly similar to one another; after this process, the antibodies are of higher quality, which accelerates the search and increases the global search capacity. The PSO algorithm, proposed by Kennedy and Eberhart [41-43], has proved to be very effective for solving global optimization problems. It is not only a recently invented high-performance optimizer that is easy to understand and implement, but it also requires little computational bookkeeping and generally only a few lines of code [85]. To avoid becoming trapped in a local optimum and to preserve the ability to search for a near-global optimum, mutation plays an important role in IPSO. Therefore, we employ the advantages of PSO to improve the mutation mechanism of the IA. As the simulations show, the proposed method improves the search ability and greatly increases the convergence speed.

3.1 Basic Concepts of the Artificial Immune System

The biological immune system is successful at protecting living bodies from the

invasion of various foreign substances, such as viruses, bacteria, and other parasites (called antigens), and eliminating debris and malfunctioning cells. Over the last few

years, a growing number of computer scientists have carefully studied the success of this competent natural mechanism and proposed computer immune models, named

artificial immune systems (AIS), for solving various problems [86-94]. AIS aim at using ideas gleaned from immunology in order to develop adaptive systems capable

of performing a wide range of tasks in various areas of research.

In this research, we review the clonal selection concept, together with the affinity

maturation process, and demonstrate that these biological principles can lead to the development of powerful computational tools. The algorithm to be presented focuses

on a systemic view of the immune system and does not take into account cell-cell interactions. It is not our concern to model exactly any phenomenon, but to show that

some basic immune principles can help us not only to better understand the immune system itself, but also to solve complex engineering tasks.

3.2 Clonal Selection Theory

Any molecule that can be recognized by the adaptive immune system is known

as an antigen (Ag). When an animal is exposed to an Ag, some subpopulation of its bone-marrow-derived cells (B lymphocytes) responds by producing antibodies (Ab’s).

Ab’s are molecules attached primarily to the surface of B cells whose aim is to recognize and bind to Ag’s. Each B cell secretes a single type of antibody (Ab), which

is relatively specific for the Ag. By binding to these Ab’s (cell receptors) and with a second signal from accessory cells, such as the T-helper cell, the Ag stimulates the B

cell to proliferate (divide) and mature into terminal (non-dividing) Ab secreting cells, called plasma cells. The process of cell division (mitosis) generates a clone, i.e., a cell

or set of cells that are the progenies of a single cell. While plasma cells are the most active Ab secretors, large B lymphocytes, which divide rapidly, also secrete Ab’s,

albeit at a lower rate. On the other hand, T cells play a central role in the regulation of the B cell response and are preeminent in cell-mediated immune responses, but they will not be explicitly accounted for in the development of our model.

Lymphocytes, in addition to proliferating and/or differentiating into plasma cells,

can differentiate into long-lived B memory cells. Memory cells circulate through the blood, lymph and tissues, and when exposed to a second antigenic stimulus

commence to differentiate into large lymphocytes capable of producing high affinity antibodies, pre-selected for the specific antigen that had stimulated the primary

response [95]. In this study, we treat the long-lived B memory cells as the better

antibodies by elitism selection. Figure 3.1 depicts the clonal selection principle [95].

The main features of the clonal selection theory [96][97] that will be explored in

this study are:

• Generation of new random genetic changes, subsequently expressed as

diverse Ab patterns, by a form of accelerated somatic mutation (a process called affinity maturation);

• Elimination of newly differentiated lymphocytes carrying low affinity antigenic receptors.

Figure 3.1: The clonal selection principle.

3.3 The Efficient Immune-Based PSO Learning Algorithm

This section describes the efficient immune-based PSO (IPSO) learning algorithm for use in the neuro-fuzzy classifier. Analogous to the biological immune system, the proposed algorithm has the capability of seeking feasible solutions while maintaining diversity. The proposed IPSO combines the immune algorithm (IA) and particle swarm optimization (PSO) to perform parameter learning. The IA uses the clonal selection principle to accelerate the search and increase the global search capacity.

The PSO algorithm has proved to be very effective for solving global optimization problems. It is not only a recently invented high-performance optimizer that is very easy to understand and implement, but it also requires little computational bookkeeping and generally only a few lines of code. To avoid becoming trapped in a local optimum and to preserve the ability to search for a near-global optimum, mutation plays an important role in IPSO. Moreover, like the mutation scheme, the PSO adopted in the evolutionary algorithm yields high diversity, which increases the global search capacity. Therefore, we employed the advantages of PSO to improve the mutation mechanism of the IA. The detailed IPSO procedure for the neuro-fuzzy classifier is presented in Figure 3.2. The whole learning process is described step-by-step below.

3.3.1 Code fuzzy rule into antibody

The coding step is concerned with the membership functions and the corresponding parameters of the consequent part of a fuzzy rule that represent Ab’s suitable for IPSO. This step codes the rules of a neuro-fuzzy classifier into an Ab. Figure 3.3 shows an example of a neuro-fuzzy classifier coded into an Ab (i.e., an Ab represents a rule set), where $i$ and $j$ represent the $i$th dimension and the $j$th rule, respectively. In this research, a Gaussian membership function is used with variables representing the mean and standard deviation of the membership function. Each fuzzy rule has the form in Figure 2.1, where $m_{ij}$ and $\sigma_{ij}$ are the mean and standard deviation of the Gaussian membership function of the $i$th dimension and the $j$th rule node, and $w_{ij}$ represents the corresponding parameters of the consequent part.

Figure 3.3: Coding a neuro-fuzzy classifier into an antibody in the IPSO method.
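As an illustration of this coding step, the sketch below flattens the parameters of all rules into one real-valued vector and recovers them again. The gene ordering (for each rule: interleaved means and standard deviations, followed by that rule's consequent weights) is an assumption made for the example and is not claimed to reproduce the exact layout of Figure 3.3; `encode_antibody` and `decode_antibody` are hypothetical helper names.

```python
import numpy as np

def encode_antibody(m, sigma, w):
    """Flatten rule parameters into one antibody (assumed gene order).

    m, sigma: (N, R) Gaussian means and standard deviations
    w:        (M, R) consequent link weights
    """
    n_rules = m.shape[1]
    genes = []
    for j in range(n_rules):
        # Antecedent genes of rule j: m_1j, sigma_1j, ..., m_Nj, sigma_Nj
        antecedent = np.column_stack([m[:, j], sigma[:, j]]).ravel()
        genes.append(np.concatenate([antecedent, w[:, j]]))
    return np.concatenate(genes)

def decode_antibody(ab, n_inputs, n_rules):
    """Inverse of encode_antibody, assuming M = 3 * n_inputs basis functions."""
    n_basis = 3 * n_inputs
    per_rule = 2 * n_inputs + n_basis
    ab = np.asarray(ab, dtype=float).reshape(n_rules, per_rule)
    m = ab[:, 0:2 * n_inputs:2].T       # every second antecedent gene is a mean
    sigma = ab[:, 1:2 * n_inputs:2].T   # the others are standard deviations
    w = ab[:, 2 * n_inputs:].T          # remaining genes are consequent weights
    return m, sigma, w
```

Keeping the whole rule set in one vector lets the later steps (cloning, PSO-based mutation, entropy computation) treat an antibody as a single point in the search space.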

3.3.2 Determine the initial parameters by self-clustering algorithm

Before the IPSO method is designed, the initial Ab’s in the populations are

generated according to the initial parameters of the antecedent part and the consequent part. In this study, the initial parameters of a neuro-fuzzy classifier were computed by the self-clustering algorithm (SCA) [52][98][99]. That is, we used the SCA to determine the initial means and standard deviations of the antecedent part, whereas each initial link weight of the consequent part is a random number in the range of 0 to 1.

SCA is a distance-based connectionist clustering algorithm. In any cluster, the maximum distance between an example point and the cluster center is less than a

threshold value. This threshold is set as a clustering parameter and affects the number of clusters to be estimated. In the clustering process, the data examples come

from a data stream. The clustering process starts with an empty set of clusters. The clusters will be updated and changed depending on the position of the current

example in the input space.

3.3.3 Produce initial population

In the immune system, Ab’s are produced in order to cope with the Ag’s. In other words, the Ag’s are recognized by a few high-affinity Ab’s (i.e. the Ag’s are

SCA, and the other Ab’s of population are generated based on the first initial Ab by

adding some random value.

3.3.4 Calculate affinity values

Given the large number of various Ag’s, the immune system has to recognize them for their posterior influence. In the biological immune system, affinity refers to the binding strength between a single antigenic determinant and an individual antibody-combining site. The process of recognizing Ag’s is to search for the Ab’s with the maximum affinity with the Ag’s. Moreover, every Ab in the population is applied to problem solving, and the affinity value is a performance measure of an Ab that is obtained according to the error function. In this study, the affinity value is designed according to the following formulation:

$$\text{Affinity value} = \frac{1}{1 + \sqrt{\dfrac{1}{N_D}\sum_{k=1}^{N_D}\left(y_k - y_k^d\right)^2}} \tag{3.1}$$

where $y_k$ represents the $k$th model output, $y_k^d$ represents the desired output, and $N_D$ represents the number of the training data. In these problems, a higher affinity refers to a better Ab.
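The affinity value can be computed directly from the model outputs. The sketch below assumes that the error term of Eq. (3.1) is the root-mean-square error over the $N_D$ training data; `affinity_value` is a hypothetical helper name.

```python
import numpy as np

def affinity_value(y_model, y_desired):
    """Affinity of an antibody: 1 / (1 + RMSE) over the training data (assumed form)."""
    y_model = np.asarray(y_model, dtype=float)
    y_desired = np.asarray(y_desired, dtype=float)
    rmse = np.sqrt(np.mean((y_model - y_desired) ** 2))
    return 1.0 / (1.0 + rmse)
```

Under this assumption the affinity lies in (0, 1] and equals 1 only when every model output matches its desired output, so ranking antibodies by affinity is equivalent to ranking them by decreasing error.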

3.3.5 Production of sub-antibodies

In this step, we generate several neighborhoods to maintain solution variation. This strategy can prevent the search process from converging prematurely. We generate several clones for each Ab in the feasible space by Eqs. (3.2), (3.3) and (3.4). Each Ab is regarded as a parent, while its clones are regarded as children (sub-antibodies). In other words, the children are regarded as several neighbors of the parent.

mean: $\text{clones\_children}[i\_c] = \text{antibody\_parent}[i] + \alpha$ (3.2)

deviation: $\text{clones\_children}[i\_c] = \text{antibody\_parent}[i] + \alpha$ (3.3)

weight: $\text{clones\_children}[i\_c] = \text{antibody\_parent}[i] + \beta$ (3.4)

where $parent_i$ represents the $i$th Ab from the Ab population; $children_{i\_c}$ represents clone number $c$ from the $i$th Ab; and $\alpha$ and $\beta$ are parameters that control the distance between the parent and its children. In this scheme, $\alpha$ and $\beta$ are important parameters. Large values slow the convergence and make the optimal solution difficult to find, whereas small values make the search fall easily into a local optimum. Therefore, the selection of $\alpha$ and $\beta$ critically affects the learning results, and their values are chosen by practical experimentation or trial-and-error tests.

3.3.6 Mutation of sub-antibodies based on PSO

To avoid becoming trapped in a local optimum and to preserve the ability to search for a near-global optimum, mutation plays an important role in IPSO. Moreover, like the mutation step, the PSO adopted in the evolutionary algorithm yields high diversity, which increases the global search capacity. Hence, we employed the advantages of PSO to improve the mutation mechanism. Through the mutation step, only the one best child survives to replace its parent and enter the next generation.

PSO is a recently invented high-performance optimizer that is very easy to understand and implement. Each particle has a velocity vector $v_i$ and a position vector $x_i$ to represent a possible solution. In this research, the velocity of each particle is updated by Eq. (1.3). The parameter $w \in (0, 1]$ is the inertia of the particle and controls the exploratory properties of the algorithm. The constants $c_1$ and $c_2$ are known as the “cognition” and “social” factors, respectively, and $r_1$ and $r_2$ are uniformly distributed random numbers in [0, 1]. The term $v_i$ is limited to the range $\pm v_{max}$; if the velocity violates this limit, it is set to the nearest limit. Changing the velocity enables every particle to search around its individual best position and the global best position. Based on the updated velocities, each particle changes its position according to Eq. (1.2).

After every particle has been updated, the affinity value of each particle is calculated again. If the affinity value of the new particle is higher than that of its local best, the local best is replaced with the new particle. Moreover, in the mutation step, each Ab (or particle) in the population is mutated exactly once by PSO in each generation. The flowchart of the mutation step is presented in Figure 3.4.

Figure 3.4: The flowchart of the mutation step.
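The PSO-based mutation can be sketched as follows. The code assumes the standard inertia-weight form of the velocity and position updates for Eqs. (1.3) and (1.2), draws $r_1$ and $r_2$ independently for every dimension, and uses illustrative default values for $w$, $c_1$, $c_2$ and $v_{max}$; the function names are hypothetical.

```python
import numpy as np

def pso_mutate(x, v, pbest, gbest, w=0.7, c1=2.0, c2=2.0, vmax=1.0, rng=None):
    """One PSO update of all particles (antibodies); each row of x is a particle.

    Assumed standard form:
        v <- w*v + c1*r1*(pbest - x) + c2*r2*(gbest - x),  clipped to [-vmax, vmax]
        x <- x + v
    """
    rng = np.random.default_rng() if rng is None else rng
    r1 = rng.random(x.shape)   # uniform random numbers in [0, 1)
    r2 = rng.random(x.shape)
    v_new = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
    v_new = np.clip(v_new, -vmax, vmax)   # a violating velocity is set to the limit
    return x + v_new, v_new

def update_local_best(x_new, pbest, affinity):
    """Replace a local best whenever the mutated particle has a higher affinity."""
    improved = np.array([affinity(a) > affinity(b) for a, b in zip(x_new, pbest)])
    out = pbest.copy()
    out[improved] = x_new[improved]
    return out
```

Calling `pso_mutate` once per generation for the whole population matches the requirement that each Ab is mutated exactly once in each generation.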

3.3.7 Promotion and suppression of antibodies

In order to affect Ag’s and keep diversity to a certain degree, we use information entropy theory to measure the diversity of Ab’s. If the affinity between two Ab’s is

greater than the suppression threshold $Th_{aff}$, these two Ab’s are similar, and the Ab of

immune algorithm composed of N Ab’s having L genes.

Figure 3.5: The coding of antibody population (rows: Antibody 1, …, Antibody $k$, …, Antibody $N$; columns: genes 1, 2, …, $l$, …, $L$; entry $G_{k,l}$ is the $l$th gene of the $k$th antibody).

From information entropy theory, we get

$$IE_l(N) = -\sum_{i=1}^{N} P_{il} \log P_{il} \tag{3.5}$$

where $P_{il}$ is the probability that the $i$th allele comes out at the $l$th gene. The diversity of the genes is calculated using Eq. (3.5). The average entropy value $IE(N)$ of diversity can also be computed as follows:

$$IE(N) = \frac{1}{L}\sum_{l=1}^{L} IE_l(N) \tag{3.6}$$

where $L$ is the size of the gene in an Ab. Equation (3.6) yields the diversity of the Ab pool in terms of the entropy. There are two kinds of affinities in IPSO. One explains the relationship between an Ab and an Ag using Eq. (3.1). The other accounts for the degree of association between the $j$th Ab and the $k$th Ab and measures how similar these two Ab’s are. It can be calculated by using

$$Affinity\_Ab_{jk} = \frac{1}{1 + IE(2)} \tag{3.7}$$

where $Affinity\_Ab_{jk}$ is the affinity between two Ab’s $j$ and $k$, and $IE(2)$ is the entropy of only the Ab’s $j$ and $k$. This affinity is constrained from zero to one. When $IE(2)$ is zero, the genes of the $j$th Ab and the $k$th Ab are the same.
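Equations (3.5) to (3.7) can be illustrated with a short sketch. It assumes that the genes take discrete allele values (real-valued genes would first have to be quantized, for example by rounding) and that the logarithm is natural; both choices, and the function names, are assumptions of this example.

```python
import numpy as np
from collections import Counter

def gene_entropy(alleles):
    """IE_l(N): entropy of the alleles observed at one gene position (Eq. 3.5)."""
    n = len(alleles)
    return -sum((c / n) * np.log(c / n) for c in Counter(alleles).values())

def average_entropy(population):
    """IE(N): mean of the per-gene entropies over the L genes (Eq. 3.6)."""
    pop = np.asarray(population)
    return float(np.mean([gene_entropy(pop[:, l].tolist())
                          for l in range(pop.shape[1])]))

def antibody_affinity(ab_j, ab_k):
    """Affinity between two antibodies, 1 / (1 + IE(2)) (Eq. 3.7)."""
    return 1.0 / (1.0 + average_entropy([ab_j, ab_k]))
```

Two identical antibodies give $IE(2) = 0$ and therefore an affinity of 1; the more gene positions differ, the larger the entropy and the smaller the affinity, which is what the suppression threshold is compared against.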

3.3.8 Elitism selection

When a new generation is created, there is always a risk of losing the best Ab. In this study, we adopt elitism selection to overcome this problem. The Ab’s are ranked in ascending order of their affinity values, and the best Ab is kept as the parent for the next generation. Moreover, the best Ab and the Ab’s with high antigenic affinity are transformed into long-lived B memory cells. Elitism selection improves the efficiency of IPSO considerably, as it prevents the best result from being lost.

3.4 Skin Color Detection

Detecting skin-colored pixels, although it seems a straightforward task, has proven quite challenging for many reasons. The appearance of skin in an image depends on the illumination conditions under which the image was captured. Therefore, an important challenge in skin detection is to represent the color in a way that is invariant, or at least insensitive, to changes in illumination. The choice of the color space greatly affects the performance of any skin detector and its sensitivity to changes in illumination conditions. Another challenge comes from the fact that many objects in the real world have skin-tone colors. This causes any skin detector to produce many false detections in the background if the environment is not controlled.

The skin detection process has two phases: a training phase and a detection phase. Training a skin

detector involves three basic steps:

1. Collecting a database of skin patches from different images. Such a

database typically contains skin-colored patches from a variety of people under different illumination conditions.

2. Choosing a suitable color space.

3. Learning the parameters of a skin classifier.

Given a trained skin detector, identifying skin pixels in a given image or video frame involves:

1. Converting the image into the same color space that was used in the training phase.

2. Classifying each pixel using the skin classifier as either skin or non-skin.

3. Typically, post-processing using morphology is needed to impose spatial homogeneity on the detected regions.
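The first two detection steps can be sketched as follows. The RGB-to-YCbCr conversion uses the full-range ITU-R BT.601 (JFIF) coefficients, which is an assumption because the exact conversion is not specified here; `classifier` stands for any trained per-pixel model that maps a (Y, Cb, Cr) triple to a score, and the 0.5 threshold is illustrative. Morphological post-processing (step 3) is omitted.

```python
import numpy as np

def rgb_to_ycbcr(rgb):
    """Convert an (..., 3) RGB array in [0, 255] to YCbCr (full-range BT.601, assumed)."""
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return np.stack([y, cb, cr], axis=-1)

def detect_skin(rgb_image, classifier, threshold=0.5):
    """Return a binary mask (1 = skin, 0 = non-skin) for an (H, W, 3) RGB image."""
    ycbcr = rgb_to_ycbcr(rgb_image)               # step 1: same color space as training
    h, w, _ = ycbcr.shape
    scores = np.array([classifier(p) for p in ycbcr.reshape(-1, 3)])  # step 2: per pixel
    return (scores >= threshold).reshape(h, w).astype(np.uint8)
```

In our setting the `classifier` argument would wrap the trained neuro-fuzzy classifier; any callable with the same interface can be substituted when testing the pipeline.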

In this research, we used the California Institute of Technology (CIT) facial database (available at http://www.vision.caltech.edu/Image_Datasets/faces/). The database has 450 color images, each 320×240 pixels in size, and contains 27 different people and a variety of lighting, backgrounds, and facial expressions.

Three input dimensions (Y, Cb and Cr) were used in this experiment. Both the training data and the testing data were drawn from the CIT database: 3000 skin and 3000 non-skin pixels from the color images formed the 6000 training data, and another 3000 skin and 3000 non-skin pixels formed the 6000 testing data. The neuro-fuzzy classifier was built with four rules.

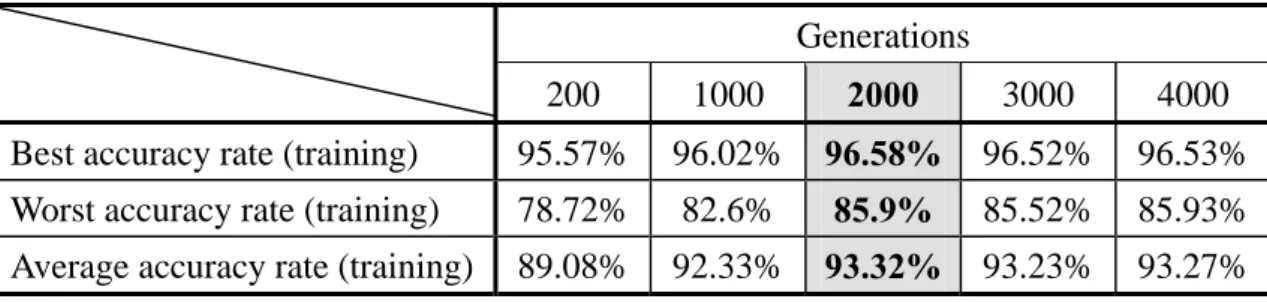

The number of Ab’s for a swarm was set to 100. With the same initial condition, the accuracy rate with different generations for 50 runs is shown in Figure 3.7 and tabulated in Table 3.1. Terminating the training phase after 2000 generations appears to be a good choice, since the accuracy rates no longer improve appreciably beyond that point.

Table 3.1: The accuracy rate with different generations (%)

| Generations | 200 | 1000 | 2000 | 3000 | 4000 |
|---|---|---|---|---|---|
| Best accuracy rate (training) | 95.57% | 96.02% | 96.58% | 96.52% | 96.53% |
| Worst accuracy rate (training) | 78.72% | 82.6% | 85.9% | 85.52% | 85.93% |
| Average accuracy rate (training) | 89.08% | 92.33% | 93.32% | 93.23% | 93.27% |

Figure 3.8: The learning curves of the three methods using the CIT database.

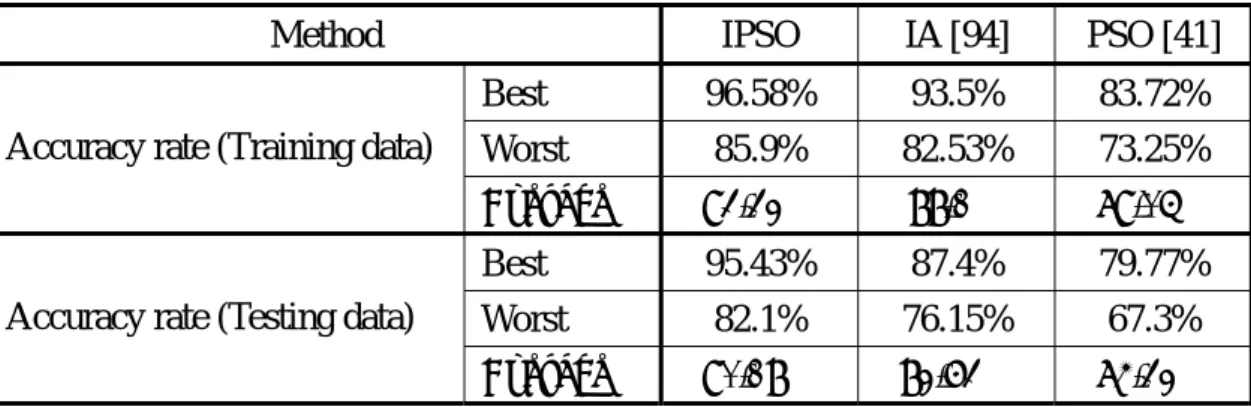

In this example, the performance of the IPSO method is compared with that of the IA method [94] and the PSO method [41]. First, the learning curves of the IA, PSO and IPSO methods are shown in Figure 3.8, which indicates that the performance of the proposed IPSO method is superior to that of the other methods. Furthermore, the training and testing accuracy rates are compared in Table 3.2.

Table 3.2: Performance comparison with various existing models from the CIT database (Training data: 6000; Generations: 2000)

| Accuracy rate | | IPSO | IA [94] | PSO [41] |
|---|---|---|---|---|
| Training data | Best | 96.58% | 93.5% | 83.72% |
| Training data | Worst | 85.9% | 82.53% | 73.25% |
| Training data | Average | 93.32% | 88.1% | 79.05% |
| Testing data | Best | 95.43% | 87.4% | 79.77% |
| Testing data | Worst | 82.1% | 76.15% | 67.3% |
| Testing data | Average | 90.18% | 82.63% | 74.32% |

The CIT facial database consists of complex backgrounds and diverse lighting. Even so, the comparison data listed in Table 3.2 show an average test accuracy rate of 74.32% for PSO, 82.63% for IA and 90.18% for the proposed IPSO, so the proposed IPSO method still maintains a superior test accuracy rate. To demonstrate the skin color detection result, color images from the CIT database are shown in Figure 3.9. A well-trained classifier can generate binary outputs (1/0 for skin/non-skin) to detect a facial region. Figure 3.10 shows that our approach accurately determines a facial region.

3.5 Concluding Remarks

In this chapter, an efficient immune-based particle swarm optimization (IPSO) algorithm is proposed to improve the search ability and the convergence speed. We applied the IPSO to a neuro-fuzzy classifier to solve the skin color detection problem. The advantages of the proposed IPSO method are summarized as follows: 1) we employed the advantages of PSO to improve the mutation mechanism; 2) the experimental results show that our method is more efficient than IA and PSO in terms of accuracy rate and convergence speed.