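Executive Yuan National Science Council Research Project Final Report

A Study on the Computation Load and Recognition Rate of Face Recognition Methods: Research Results Report (Condensed Version)

Project type: Individual

Project number: NSC 100-2221-E-011-137-

Execution period: August 1, ROC 100 (2011) to July 31, ROC 101 (2012)

Executing institution: Department of Electrical Engineering, National Taiwan University of Science and Technology

Principal investigator: Ying-Kuei Yang (楊英魁)

Project participants: Master's student (part-time assistant): 陳君豪; PhD student (part-time assistant): 方偉力

Report attachments: Report on attending an international conference, and the published paper

Public information: This project is open for public inquiry

December 8, ROC 101 (2012)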

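Chinese abstract: To improve the performance of face recognition algorithms, this project proposes an algorithm that reduces the computation load of Two-Dimensional Principal Component Analysis (2DPCA), named Sub-Area Two-Dimensional Principal Component Analysis (SA 2DPCA). Most existing improvements to conventional 2DPCA require heavy computation in the eigen-decomposition step. This project therefore improves conventional 2DPCA by dividing the image data into several areas, using an appropriate number of divisions, to reduce computation. It also shows that the number of divisions matters: too many divisions degrade feature extraction. Because each area is processed separately, its features are easier to obtain, which yields good feature extraction. The method further assigns weights to the projected feature vectors obtained by 2DPCA and optimizes these weights with the least mean square method, producing a set of projected feature vectors best suited for recognition. The project will run experiments on the ORL dataset and compare the method with several existing methods of the same kind, aiming to confirm experimentally that SA 2DPCA requires significantly less computation than most methods based on conventional 2DPCA while improving recognition performance, which will make the method easier to apply across face recognition applications.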

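Chinese keywords: two-dimensional principal component analysis, eigen-decomposition, computation load, recognition rate, face recognition algorithm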

English abstract: To improve the performance of face recognition algorithms, this project proposes an approach named Sub-Area Two-Dimensional Principal Component Analysis (SA2DPCA) that not only reduces the computation load but also increases the face recognition rate of Two-Dimensional Principal Component Analysis (2DPCA). 2DPCA has been a popular approach to face recognition, and a number of approaches have been proposed to improve its performance. However, most of them raise the recognition rate at the cost of heavy computation during eigen-decomposition, which involves computing covariance matrices. SA2DPCA therefore divides a face image into an appropriate number of smaller areas (sub-areas) and processes them individually. Because the sub-areas are smaller, the computation load of processing covariance matrices is reduced. Besides, image features are easier to extract because each feature occupies a relatively larger portion of a smaller area. Further, weights are assigned to features to enhance face recognition results. The popular ORL database will be used in the experiments, and performance will be compared against related existing approaches to show the improved computation load and face recognition rate of SA2DPCA. The better performance of SA2DPCA will make the approach more suitable for practical applications.

English keywords: 2-D principal component analysis, feature extraction, computation cost, recognition rate, face recognition algorithm


Executive Yuan National Science Council Funded Research Project Final Report

※※※※※※※※※※※※※※※※※※※※※※※※※

※ ※

※ A Study on the Computation Load and Recognition Rate of Face Recognition Methods ※

※ ※

※※※※※※※※※※※※※※※※※※※※※※※※※

Project type: ■ Individual project □ Integrated project

Project number: NSC 100-2221-E-011-137

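Execution period: August 1, ROC 100 (2011) to July 31, ROC 101 (2012)

Principal investigator: Ying-Kuei Yang (楊英魁) Co-principal investigator:

This final report includes the following required attachments:

□ One report on overseas travel or study

□ One report on travel or study in Mainland China

□ One report on attending an international academic conference and one published paper

□ One overseas research report for an international cooperative research project

Executing institution: National Taiwan University of Science and Technology

December 7, ROC 101 (2012)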

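Executive Yuan National Science Council Research Project Final Report

Project number: NSC 100-2221-E-011-137 Execution period: August 1, ROC 100 (2011) to July 31, ROC 101 (2012)

Principal investigator: Ying-Kuei Yang (楊英魁), Graduate Institute of Electrical Engineering, National Taiwan University of Science and Technology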

1. Chinese Abstract

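To improve the performance of face recognition algorithms, this project proposes an algorithm that reduces the computation load of Two-Dimensional Principal Component Analysis (2DPCA), named Sub-Area Two-Dimensional Principal Component Analysis (SA 2DPCA). Most existing improvements to conventional 2DPCA require heavy computation in the eigen-decomposition step. This project therefore improves conventional 2DPCA by dividing the image data into several areas, using an appropriate number of divisions, to reduce computation. It also shows that the number of divisions matters: too many divisions degrade feature extraction. Because each area is processed separately, its features are easier to obtain, which yields good feature extraction. The method further assigns weights to the projected feature vectors obtained by 2DPCA and optimizes these weights with the least mean square method, producing a set of projected feature vectors best suited for recognition. The project will run experiments on the ORL dataset and compare the method with several existing methods of the same kind, aiming to confirm experimentally that SA 2DPCA requires significantly less computation than most methods based on conventional 2DPCA while improving recognition performance, which will make the method easier to apply across face recognition applications.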

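Keywords: two-dimensional principal component analysis, eigen-decomposition, computation load, recognition rate, face recognition algorithm

Abstract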

To improve the performance of face recognition algorithms, this project proposes an approach named Sub-Area Two-Dimensional Principal Component Analysis (SA2DPCA) that not only reduces the computation load but also increases the face recognition rate of Two-Dimensional Principal Component Analysis (2DPCA). 2DPCA has been a popular approach to face recognition, and a number of approaches have been proposed to improve its performance.

However, most of them raise the recognition rate at the cost of heavy computation during eigen-decomposition, which involves computing covariance matrices. SA2DPCA therefore divides a face image into an appropriate number of smaller areas (sub-areas) and processes them individually. Because the sub-areas are smaller, the computation load of processing covariance matrices is reduced. Besides, image features are easier to extract because each feature occupies a relatively larger portion of a smaller area. Further, weights are assigned to features to enhance face recognition results.

The popular ORL database will be used in the experiments, and performance will be compared against related existing approaches to show the improved computation load and face recognition rate of SA2DPCA. The better performance of SA2DPCA will make the approach more suitable for practical applications.

2. Background and Objectives

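The purpose of this research project is to improve the performance of face recognition algorithms. Face recognition has been a popular research topic in image processing in recent years. Many research groups and scholars work on it because face recognition can be applied effectively in daily life, for example in building access control, personnel entry and exit management, identity verification, and surveillance [1]-[14], as well as in everyday electronic products, such as digital cameras that automatically capture a face and determine who it is, and mobile phones and notebook computers that check at startup whether the user is authorized. Face recognition has a very wide range of applications and high practical value, and on the theoretical side it can be combined with many artificial intelligence methods to achieve better results, so it is well worth researching.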

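Many kinds of algorithms are used in face recognition; some aim to reduce computation and others to increase the recognition rate [15][16]. Ideally, both the accuracy rate is raised and the computational burden is reduced. In terms of balancing recognition rate and processing speed, the most commonly used feature extraction algorithm over the past decade or more has been principal component analysis (PCA) [17]. PCA was first applied to face recognition by Sirovich and Kirby [17][18]. It projects the original data into a lower dimension so that the features become more prominent, which helps the back-end system classify more effectively. Since PCA was proposed for face recognition, many experts and scholars have worked on related research. For example, topological principal component analysis, proposed by Pujol et al. [19], combines the topological relationships among data variables with PCA; however, its process requires diagonalizing the covariance matrix, which makes its computation high. Kernel principal component analysis, proposed by Kim et al. [20], uses kernels to project low-dimensional data into a high-dimensional space in combination with PCA. Its advantage is a high recognition rate, reaching 97.5% on the ORL database, but because projecting into a high dimension is time-consuming, its computational complexity is very high. Wang et al. [21] proposed PCA plus linear discriminant analysis (LDA) for facial feature extraction: PCA first extracts the important features, and LDA then makes the data more discriminative for back-end processing. Its drawback is the small sample size (SSS) problem that arises when training samples are insufficient [22]. Wang et al. [23] combined symmetrical image correction (SIC) and bit-plane feature fusion (BPFF) with PCA for face recognition; this method can reduce the effect of strong lighting variation on recognition. Hsieh et al. [24] combined a sub-pattern technique with PCA; it recognizes well and is robust to strong lighting variation, but its computation is high.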

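Years after PCA was first used for face recognition, Yang et al. [15] proposed two-dimensional principal component analysis (2DPCA), designed mainly for feature extraction from face data. Its two-dimensional formulation both reduces computation and improves the recognition rate, improving on both of these key issues [15]. Because of 2DPCA's excellent performance in face recognition, many variants have been derived from it. Two-directional two-dimensional PCA ((2D)²PCA), proposed by Zhang et al. [25], applies 2DPCA along both the horizontal and vertical directions, keeps the shortest dimension, and uses the result for recognition. Its advantage is lower computation. In general, the recognition rate should in theory rise as more training data become available, but under such conditions the improvement of this method over other algorithms is not significant [22]. Sanguansat et al. [26] combined 2DPCA with two-dimensional linear discriminant analysis (2DLDA). Using 2DLDA avoids the small sample size problem of conventional LDA [22] and raises the recognition rate, but the additional 2DLDA stage makes the overall computation time longer than 2DPCA alone. Meng et al. [27] combined a volume measure with 2DPCA: 2DPCA performs feature extraction, and back-end classification uses a distance based on a self-defined computation of the volume of a matrix; this approach is better suited to high-dimensional data. Wang et al. [28] proposed probabilistic 2DPCA, which combines a Gaussian distribution model with 2DPCA; its advantage is good robustness to noise in images. Kim et al. [29] proposed a fusion method based on bidirectional 2DPCA, which reduces the dimension of both row and column vectors and fuses the two results for back-end computation; it raises the recognition rate but at a higher computation cost [30].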

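Each of the face recognition algorithms above has its own advantages and disadvantages. The introduction of 2DPCA further strengthened the position of the PCA family of algorithms in face recognition; because 2DPCA was designed for face image data, it performs better than conventional PCA.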

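Building on 2DPCA, this project proposes an algorithm tailored to the characteristics of face data. Because the matrix dimension strongly affects computation, the idea is to partition the two-dimensional face data into blocks and apply 2DPCA to each block separately, which reduces computation and improves the recognition rate. We call this method sub-area two-dimensional principal component analysis (SA2DPCA). It is expected not only to reduce the computation of 2DPCA but also to achieve a better recognition rate than most methods based on conventional 2DPCA.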

3. Results and Discussion

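This research proposes an algorithm that reduces the computational complexity of 2DPCA, named two-dimensional principal component analysis with partitioned eigen-decomposition. 2DPCA is a feature extraction algorithm designed for face recognition: its computation is modest, yet it extracts features well enough for the back end to achieve high recognition rates, which is why it is widely used in face recognition. With the aim of improving face recognition, this research seeks low computation together with high recognition performance, and therefore proposes the partitioned eigen-decomposition 2DPCA as an improvement on conventional 2DPCA. It divides the image data into several areas using an appropriate number of divisions and shows in detail why the number of divisions matters: too many divisions degrade feature extraction. On the ORL dataset, the suitable number of divisions is 4, that is, dividing each image equally into four areas before computation, which yields good feature extraction. The experimental results compute in detail the difference in complexity between the partitioned eigen-decomposition 2DPCA and conventional 2DPCA, and the complexity of the partitioned method is clearly much lower. Moreover, because each area is processed separately, its features are easier to obtain, so the recognition performance is also higher than that of the original 2DPCA.

With the nearest neighbor rule as the classifier in both cases, conventional 2DPCA achieves a recognition rate of 93%, and the partitioned eigen-decomposition 2DPCA achieves 93.5%. Although the gain is only 0.5%, the main goal of the method is to reduce complexity, and the recognition rate has improved as well.

These results show that the partitioned eigen-decomposition 2DPCA lowers complexity while slightly improving the recognition rate in face recognition.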
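The report does not spell out the distance used by the nearest-neighbor classifier mentioned above. As a minimal sketch, assuming the distance that Yang et al. [15] define for 2DPCA feature matrices (the sum of Euclidean distances between corresponding projected feature vectors Y_k), a 1-NN classifier could look like this; the function names are hypothetical:

```python
import math


def feature_distance(b1, b2):
    """Distance between two m x d feature matrices B = [Y_1, ..., Y_d],
    given as lists of rows: the sum over k of ||Y_k(b1) - Y_k(b2)||_2
    (the 2DPCA distance of Yang et al. [15], assumed here)."""
    d = len(b1[0])
    total = 0.0
    for k in range(d):
        # Euclidean distance between the k-th columns of the two matrices
        sq = sum((r1[k] - r2[k]) ** 2 for r1, r2 in zip(b1, b2))
        total += math.sqrt(sq)
    return total


def nearest_neighbor(test_b, train_bs, train_labels):
    """Return the label of the training feature matrix closest to test_b."""
    best = min(range(len(train_bs)),
               key=lambda i: feature_distance(test_b, train_bs[i]))
    return train_labels[best]


# Usage: two enrolled subjects, one probe that lies close to subject "s1"
train = [[[0.0, 0.0], [0.0, 0.0]], [[5.0, 5.0], [5.0, 5.0]]]
labels = ["s1", "s2"]
print(nearest_neighbor([[1.0, 0.0], [0.0, 1.0]], train, labels))  # s1
```

Summing per-column distances, rather than taking one Frobenius norm, keeps each projection direction's contribution separate, which is the choice made in [15].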

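The proposed SA2DPCA reduces the computation of conventional 2DPCA at the level of basic theory, which improves the theoretical side from an academic research perspective. Moreover, the method is not limited to face data: wherever conventional 2DPCA can be applied, SA2DPCA can be used as well, so it can contribute to related academic fields. As for contributions to the nation, in line with the country's recent push to apply high technology in products such as 3C products, face recognition technology can be applied to many advanced and practical products, such as digital cameras, notebook computers, mobile phones, and building surveillance systems, and can also bring significant benefits to national security applications such as military ones.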

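Comparison of experimental results

| No. | Method | Recognition rate | Computation load |
|---|---|---|---|
| 1 | (2D)²PCA [25] | 90.5% | high |
| 2 | 2DPCA + Fusion method based on bidirectional [29] | 92.5% | high |
| 3 | 2DPCA + 2DLDA [26] | 93.5% | high |
| 4 | SI2DPCA (proposed) | 93.5% | low |
| 5 | 2DPCA + Kernel [39] | 94.58% | very high |
| 6 | 2DPCA + Feature fusion approach [40] | 98.1% | very very high |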

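Method No. 3 applies 2DLDA after 2DPCA, so its complexity is higher than that of 2DPCA alone. No. 4 is the proposed partitioned eigen-decomposition 2DPCA, whose complexity is lower than that of 2DPCA alone; therefore, any method whose complexity exceeds that of 2DPCA also exceeds that of the proposed method. No. 5 is kernel 2DPCA, which projects the data into a high-dimensional space for processing and then back into a low-dimensional space, in combination with 2DPCA. Because the transformation between the high- and low-dimensional spaces is very time-consuming, its computational complexity is high. Thus, although No. 5 has a higher recognition rate than the proposed method, its computational complexity is far worse. No. 6 combines 2DPCA with a feature fusion approach. Although its recognition rate is high, its processing time is about ten times that of 2DPCA [39], so its complexity is also much higher than that of the proposed method. Among the methods listed in Table 11, the proposed method achieves a higher recognition rate than Nos. 1 and 2 and the same rate as No. 3, which has higher complexity. Although Nos. 5 and 6 achieve higher recognition rates, their computational complexity is very high, so the proposed method has the advantage in this respect. The proposed method combines a competitive recognition rate with low computational complexity and, taken as a whole, offers the best overall trade-off among the six methods in Table 11.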

4. Future Applications

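Face recognition is widely used in many fields, especially in consumer products, and many electronic products today include face recognition functions. This project therefore aims to reduce the computation of face recognition so that such products perform better.

On the research side, the aim is to improve the efficiency of 2DPCA by reducing its complexity. The method is also expected to be useful beyond face recognition: wherever 2DPCA is used, it should provide the benefit of reduced computation. In other words, it improves the efficiency of 2DPCA at the level of basic theory.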

5. Key References

[1] A. Z. Kouzani, F. He, and K. Sammut, “Towards invariant face recognition,” Inf. Sci., vol. 123, pp. 75–101, 2000.

[2] K. Lam and H. Yan, “An analytic-to-holistic approach for face recognition based on a single frontal view,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 20, no. 7, pp. 673–686, Jul. 1998.

[3] M. A. Turk and A. P. Pentland, “Eigenfaces for recognition,” J. Cognitive Neurosci., vol. 3, pp. 71–86, 1991.

[4] T. Yahagi and H. Takano, “Face recognition using neural networks with multiple combinations of categories,” J. Inst. Electron. Inf. Commun. Eng., vol. J77-D-II, no. 11, pp. 2151–2159, 1994 (in Japanese).

[5] S. Lawrence, C. L. Giles, A. C. Tsoi, and A. D. Back, “Face recognition: A convolutional neural-network approach,” IEEE Trans. Neural Netw., vol. 8, no. 1, pp. 98–113, Jan. 1997.

[6] S. H. Lin, S. Y. Kung, and L. J. Lin, “Face recognition/detection by probabilistic decision-based neural network,” IEEE Trans. Neural Netw., vol. 8, no. 1, pp. 114–132, Jan. 1997.

[7] M. J. Er, S. Wu, J. Lu, and H. L. Toh, “Face recognition with radial basis function (RBF) neural networks,” IEEE Trans. Neural Netw., vol. 13, no. 3, pp. 697–710, May 2002.

[8] F. Yang and M. Paindavoine, “Implementation of an RBF neural network on embedded systems: Real-time face tracking and identity verification,” IEEE Trans. Neural Netw., vol. 14, no. 5, pp. 1162–1175, Sep. 2003.

[9] J. Lu, K. N. Plataniotis, and A. N. Venetsanopoulos, “Face recognition using kernel direct discriminant analysis algorithms,” IEEE Trans. Neural Netw., vol. 14, no. 1, pp. 117–126, Jan. 2003.

[10] B. K. Gunturk, A. U. Batur, and Y. Altunbasak, “Eigenface-domain super-resolution for face recognition,” IEEE Trans. Image Process., vol. 12, no. 5, pp. 597–606, May 2003.

[11] B. L. Zhang, H. Zhang, and S. S. Ge, “Face recognition by applying wavelet subband representation and kernel associative memory,” IEEE Trans. Neural Netw., vol. 15, no. 1, pp. 166–177, Jan. 2004.

[12] Q. Liu, X. Tang, H. Lu, and S. Ma, “Face recognition using kernel scatter-difference-based discriminant analysis,” IEEE Trans. Neural Netw., vol. 17, no. 4, pp. 1081–1085, Jul. 2006.

[13] W. Zheng, X. Zhou, C. Zou, and L. Zhao, “Facial expression recognition using kernel canonical correlation analysis (KCCA),” IEEE Trans. Neural Netw., vol. 17, no. 1, pp. 233–238, Jan. 2006.

[14] X. Tan, S. Chen, Z. H. Zhou, and F. Zhang, “Recognizing partially occluded, expression variant faces from single training image per person with SOM and soft k-NN ensemble,” IEEE Trans. Neural Netw., vol. 16, no. 4, pp. 875–886, Jul. 2005.

[15] J. Yang, D. Zhang, A. F. Frangi, and J. Y. Yang, “Two-Dimensional PCA: A New Approach to Appearance-Based Face Representation and Recognition,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 26, no. 1, pp. 131–137, Jan. 2004.

[16] J. Lu, X. Yuan, and T. Yahagi, “A Method of Face Recognition Based on Fuzzy c-Means Clustering and Associated Sub-NNs,” IEEE Trans. Neural Netw., vol. 18, no. 1, Jan. 2007.

[17] L. Sirovich and M. Kirby, “Low-Dimensional Procedure for Characterization of Human Faces,” J. Optical Soc. Am., vol. 4, pp. 519–524, 1987.

[18] M. Kirby and L. Sirovich, “Application of the KL Procedure for the Characterization of Human Faces,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 12, no. 1, pp. 103–108, Jan. 1990.

[19] A. Pujol, J. Vitria, F. Lumbreras, and J. J. Villanueva, “Topological principal component analysis for face encoding and recognition,” Pattern Recognition Letters, pp. 769–776, 2001.

[20] K. I. Kim, K. Jung, and H. J. Kim, “Face Recognition Using Kernel Principal Component Analysis,” IEEE Signal Processing Letters, vol. 9, no. 2, pp. 40–42, Feb. 2002.

[21] H. Wang, Z. Wang, Y. Leng, X. Wu, and Q. Li, “PCA Plus F-LDA: A new approach to face recognition,” International Journal of Pattern Recognition and Artificial Intelligence, vol. 21, no. 6, pp. 1059–1068, 2007.

[22] W. H. Yang and D. Q. Dai, “Two-Dimensional Maximum Margin Feature Extraction for Face Recognition,” IEEE Transactions on Systems, Man and Cybernetics, vol. 39, no. 4, pp. 1002–1012, Aug. 2009.

[23] H. Wang, Y. Leng, Z. Wang, and X. Wu, “Application of image correction and bit-plane fusion in generalized PCA based face recognition,” Pattern Recognition Letters, vol. 28, pp. 2352–2358, Aug. 2007.

[24] P. C. Hsieh and P. C. Tung, “A novel hybrid approach based on sub-pattern technique and whitened PCA for face recognition,” Pattern Recognition, vol. 42, pp. 978–984, 2009.

[25] D. Zhang and Z. H. Zhou, “(2D)²PCA: Two-directional two-dimensional PCA for efficient face representation and recognition,” Neurocomputing, vol. 69, pp. 224–231, Jun. 2005.

[26] P. Sanguansat, W. Asdornwised, S. Jitapunkul, and S. Marukatat, “Two-dimensional linear discriminant analysis of principle component vectors for face recognition,” IEEE, pp. 345–348, 2006.

[27] J. Meng and W. Zhang, “Volume measure in 2DPCA-based face recognition,” Pattern Recognition Letters, vol. 28, pp. 1203–1208, Jan. 2007.

[28] H. Wang, S. Chen, Z. Hu, and B. Luo, “Probabilistic two-dimensional principal component analysis and its mixture model for face recognition,” Neural Comput & Applic (Springer), vol. 17, pp. 541–547, 2008.

[29] Y. G. Kim, Y. J. Song, U. D. Chang, D. W. Kim, T. S. Yun, and J. H. Ahn, “Face recognition using a fusion method based on bidirectional 2DPCA,” Applied Mathematics and Computation, vol. 205, pp. 601–607, 2008.

[30] Y. Qi and J. Zhang, “(2D)²PCALDA: An efficient approach for face recognition,” Applied Mathematics and Computation, vol. 213, no. 1, pp. 1–7, Jul. 2009.

[31] K. L. Yeh, Principal Component Analysis with Missing Data [Online], pp. 1–12, Jun. 2006. Available: http://www.cmlab.csie.ntu.edu.tw/~cyy/learning/tutorials/PCAMissingData.pdf

[32] http://episte.math.ntu.edu.tw/entries/en_lagrange_mul/index.html

[33] http://zh.wikipedia.org/zh-tw/%E6%8B%89%E6%A0%BC%E6%9C%97%E6%97%A5%E4%B9%98%E6%95%B0

[34] S. J. Leon, Linear Algebra with Applications, 7th ed. Prentice Hall, 2006.

[35] A. M. Martinez and A. C. Kak, “PCA versus LDA,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 23, no. 2, pp. 228–233, 2001.

[36] J. S. R. Jang, “Data Clustering and Pattern Recognition” (in Chinese), available at the links for on-line courses at the author's homepage at http://www.cs.nthu.edu.tw/~jang.

[37] 3D Computer Vision, Winter Term 2005/06, Nassir Navab.

[38] “The ORL face database,” http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.htm

[39] N. Sun, H. X. Wang, Z. H. Ji, C. R. Zou, and L. Zhao, “An efficient algorithm for Kernel two-dimensional principal component analysis,” Neural Comput & Applic, vol. 17, pp. 59–64, 2008.

[40] Y. Xu, D. Zhang, J. Yang, and J. Y. Yang, “An approach for directly extracting features from matrix data and its application in face recognition,” Neurocomputing, vol. 71, pp. 1857–1865, Feb. 2008.

[41] M. Li and B. Yuan, “2D-LDA: A statistical linear discriminant analysis for image matrix,” Pattern Recognition Letters, vol. 26, pp. 527–532, 2005.

[42] G. Huang, “Fusion (2D)²PCALDA: A new method for face recognition,” Applied Mathematics and Computation, vol. 216, pp. 3195–3199, 20


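Report on Attending the ICAI2012 and IPCV2012 Conferences

Reporter: Ying-Kuei Yang (楊英魁), 2012.10.18

Dates: July 16 to July 19, 2012; Location: Las Vegas, USA

Report:

The conferences held over four days in Las Vegas, USA, The 14th 2012 International Conference on Artificial Intelligence (ICAI2012) and The 16th International Conference on Image Processing, Computer Vision & Pattern Recognition (IPCV2012), were an important academic meeting in the fields of artificial intelligence and image recognition. ICAI2012 and IPCV2012 are two of the 25 conferences of WORLDCOMP'12 (The 2012 World Congress in Computer Science, Computer Engineering, and Applied Computing). Owing to its importance, more than ninety other conferences related to these two fields were held alongside it. In total, more than 2,700 experts and scholars from various fields around the world took part; the atmosphere was lively, and even local hotels were hard to book.

Every keynote speaker was a leading figure in these two fields.

I attended ICAI2012 mainly to present two papers reporting the results of research supported by the National Science Council: A Fuzzy-Reasoning Radial Basis Function Neural Network with Reinforcement Learning Method, and SI2DPCA: A Low-Computation Face Recognition Approach. About 160 experts and scholars took part in the discussion of these two papers; the atmosphere was very lively, and the attendees strongly endorsed the methods and theory the papers proposed.

Over these days, I had in-depth discussions with scholars and experts from many places on a range of fields, which was very rewarding and gave me an accurate picture of the current state of artificial intelligence and image recognition. The first keynote speech of each day was especially good: the speakers were knowledgeable and humorous, and they clearly identified several directions for future work in these fields, which serve as an excellent reference.

Attending this conference gave me the opportunity for broad discussion and exchange with scholars and experts from around the world, and confirmed that our current research direction is correct. Being able to attend six related conferences at the same time also made the trip well worthwhile.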

IPCV 2012 Conference - decision on your paper (IPC2842)

Tuesday, April 24, 2012 3:23 PM

From: "Steering Committee" <sc@world-comp.org>


To: yingkyang@yahoo.com

Cc: yingkyang@yahoo.com

Dear Dr. Ying-Kuei Yang:

I am pleased to inform you that the following paper which you submitted to:

The 16th International Conference on Image Processing, Computer Vision, & Pattern Recognition

(IPCV'12: July 16-19, 2012, USA) has been accepted as a Regular Research Paper (RRP) - ie, accepted for both, publication in the proceedings and oral formal presentation. Please see below for the categories of accepted papers.

Paper ID #: IPC2842

Title: SI2DPCA: A Low-Computation Face Recognition Approach

Author(s): Ying-Kuei Yang, Wei-Li Fang and Jung-Kuei Pan

Note: The "paper ID #" shown above is composed of three letters (conference prefix) followed by four numeral/digits. You will need to have this "Paper ID #" at the time of registration and final paper submission (for publication).

(The evaluation of this paper is arranged by Track Chair: 247)


SI2DPCA: A Low-Computation Face Recognition Approach

Ying-Kuei Yang, Wei-Li Fang and Jung-Kuei Pan

Dept. of Electrical Engineering, National Taiwan Uni. of Sci. & Technology, Taiwan e-mail: yingkyang@yahoo.com

Abstract

Several 2DPCA-based face recognition algorithms have been proposed to improve the recognition rate, mostly at the expense of computation cost. In this paper, an approach named SI2DPCA is proposed to reduce the computation cost and increase recognition performance at the same time. The approach divides a whole face image into smaller sub-images to increase the weight of features for better feature extraction. Meanwhile, the computation cost, which comes mainly from heavy and complicated matrix operations, is reduced because the sub-images are smaller. Experimental comparisons against several well-known approaches demonstrate that SI2DPCA reduces computation cost and improves the recognition rate simultaneously.

Keywords: face recognition, feature extraction, principal component analysis, covariance computation, eigen-decomposition.

1. Introduction

Face recognition has become an important topic in image processing because it can be applied effectively in daily life [1]-[10], and several algorithms have been proposed for it. Yang et al. proposed two-dimensional principal component analysis (2DPCA), which aims at better feature extraction from face images to increase the recognition rate and reduce computation cost simultaneously [11]. Because of this good performance, various face recognition algorithms based on 2DPCA have been proposed. For instance, two-directional two-dimensional PCA ((2D)²PCA), proposed by Zhang et al. [12], processes a face image along the horizontal and vertical axes and then performs recognition on the resulting shortest dimension. However, its improvement in recognition rate is not significant when the training set is relatively large [13]. Sanguansat et al. [14] proposed combining two-dimensional principal component analysis with two-dimensional linear discriminant analysis (2DPCA&2DLDA) for face recognition. Although this approach solves the small sample size problem, its computation cost is high because it chains 2DPCA and 2DLDA.

Meng et al. [15] combined 2DPCA with a self-defined volume measure: features are first extracted by 2DPCA, and classification is then performed by computing distances based on matrix volumes. This approach is better suited to high-dimensional data.


Wang et al. [16] proposed probabilistic two-dimensional principal component analysis, which combines 2DPCA with a Gaussian distribution model to mitigate the influence of noise in face image recognition. Kim et al. [17] proposed a fusion method based on bidirectional 2DPCA that reduces the dimensions of both row and column vectors before recognition. It does increase the recognition rate, but at the expense of high computation cost [18].

The 2DPCA-based algorithms above aim either to increase the recognition rate or to reduce the computation cost, but not both. In this paper, an approach named sub-image two-dimensional principal component analysis (SI2DPCA) is proposed to both reduce computation and increase the recognition rate in face recognition applications. Unlike conventional 2DPCA, SI2DPCA divides a whole face image into smaller sub-images so that features can be better recognized and extracted. At the same time, computation cost is reduced because the sub-images are smaller.

2. The sub-image 2-dimensional principal component analysis

2.1 Two-dimensional principal component analysis (2DPCA)

The 2DPCA approach proposed by Yang et al. [11] in 2004 is designed specifically for two-dimensional image data. Suppose an image data set Z = {A1, A2, …, AN} contains N images, each of dimension n×n. The covariance matrix of the data set is computed by Eq. (1), and the average of the data set by Eq. (2).

$$
R = \frac{1}{N}\sum_{i=1}^{N} (A_i - \bar{A})(A_i - \bar{A})^T \qquad (1)
$$

$$
\bar{A} = \frac{1}{N}\sum_{i=1}^{N} A_i \qquad (2)
$$

where $A_i$ is an image in the data set, $R$ is the covariance matrix, and $\bar{A}$ is the data average.

After eigen-decomposition of the covariance matrix, the k eigenvectors corresponding to the k largest eigenvalues are selected. These eigenvectors are the projection vectors of the original image data set, and the features of an image are extracted by projecting it onto them, as shown in Eq. (3).

$$
Y_i = A X_i, \qquad i = 1, 2, \ldots, k \qquad (3)
$$

where $Y_i$ are the projected feature vectors and $X_i$ are the eigenvectors. With the k largest eigenvalues selected, the feature matrix $B = [Y_1, Y_2, \ldots, Y_k]$, ordered by descending eigenvalue, is obtained; these projected feature vectors are the principal components of the original image $A$ obtained by 2DPCA.
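To make Eqs. (1) to (3) concrete, here is a minimal pure-Python sketch of 2DPCA training and projection. It is illustrative, not the authors' code, and rests on several assumptions: images are lists of rows; the covariance uses the column-wise form (A_i − Ā)^T(A_i − Ā) of Yang et al. [11], which keeps Y = AX dimensionally valid for non-square m×n images (for the n×n images assumed above, both forms are n×n); and power iteration with deflation stands in for the eigen-decomposition, where a practical implementation would call a library routine such as `numpy.linalg.eigh`. It writes d for the number of selected eigenvectors.

```python
import math


def mean_image(images):
    """Eq. (2): element-wise average of N equally sized images."""
    count = len(images)
    rows, cols = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / count for c in range(cols)]
            for r in range(rows)]


def image_covariance(images):
    """Column-wise image covariance G = (1/N) sum (A_i - mean)^T (A_i - mean),
    an n x n matrix for m x n images (the form used by Yang et al. [11])."""
    mean = mean_image(images)
    rows, cols = len(mean), len(mean[0])
    g = [[0.0] * cols for _ in range(cols)]
    for img in images:
        diff = [[img[r][c] - mean[r][c] for c in range(cols)] for r in range(rows)]
        for a in range(cols):
            for b in range(cols):
                g[a][b] += sum(diff[r][a] * diff[r][b] for r in range(rows))
    return [[v / len(images) for v in row] for row in g]


def top_eigenpairs(g, d, iters=1000):
    """Largest d eigenpairs of a symmetric PSD matrix by power iteration with
    deflation (a simple stand-in for a library eigen-solver)."""
    n = len(g)
    work = [row[:] for row in g]
    pairs = []
    for _ in range(d):
        v = [1.0 + 0.1 * i for i in range(n)]  # arbitrary non-uniform start vector
        for _ in range(iters):
            w = [sum(work[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                return pairs  # nothing left to extract along this start vector
            v = [x / norm for x in w]
        gv = [sum(work[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(v[i] * gv[i] for i in range(n))  # Rayleigh quotient
        pairs.append((lam, v))
        for i in range(n):  # deflate: remove the component just found
            for j in range(n):
                work[i][j] -= lam * v[i] * v[j]
    return pairs


def project(image, eigvecs):
    """Eq. (3): feature matrix B = [A X_1, ..., A X_d], returned as m rows."""
    return [[sum(row[j] * x[j] for j in range(len(x))) for x in eigvecs]
            for row in image]


# Usage: column 0 varies more than column 1, so X_1 should be (close to) e_0
imgs = [[[2, 0], [2, 0]], [[-2, 0], [-2, 0]], [[0, 1], [0, 1]], [[0, -1], [0, -1]]]
G = image_covariance(imgs)          # [[4.0, 0.0], [0.0, 1.0]]
pairs = top_eigenpairs(G, d=2)
B = project(imgs[0], [vec for _, vec in pairs])
```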

2.2 Sub-image 2-dimensional principal component analysis (SI2DPCA)


SI2DPCA is proposed in this paper to further increase recognition accuracy and decrease computation cost. The high computation cost of PCA and 2DPCA comes mainly from computing the covariance matrix and its eigen-decomposition [19]. SI2DPCA therefore divides a face image equally into smaller sub-images and processes them separately, so that the total computation cost is reduced.

The intuitive way is to divide a face image equally into four smaller sub-images for feature extraction. Suppose the eigen-decomposition of an m×m square matrix is to be computed. Its eigenvalues λ are obtained by subtracting λ from each diagonal element of the matrix and setting the determinant of the result to zero, as described in Eq. (4).

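$$
\begin{vmatrix}
a_{11}-\lambda & a_{12} & \cdots & a_{1m} \\
a_{21} & a_{22}-\lambda & \cdots & a_{2m} \\
\vdots & \vdots & \ddots & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mm}-\lambda
\end{vmatrix} = 0 \qquad (4)
$$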

The next step is to expand the determinant in Eq. (4) by cofactor (Laplace) expansion [19]. Each element of the first row is multiplied by the smaller determinant (minor) formed by the elements that lie in neither the row nor the column of that element. The factor (-1)^(i+j) gives the sign (+ or -) of each term, where i and j are the row and column indices of the element. Eq. (5) is the result of expanding Eq. (4).

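$$
(a_{11}-\lambda)\,M_{11} - a_{12}\,M_{12} + a_{13}\,M_{13} - \cdots + (-1)^{1+m}\,a_{1m}\,M_{1m} = 0 \qquad (5)
$$

where $M_{1j}$ is the $(m-1)\times(m-1)$ determinant obtained by deleting row 1 and column $j$ from the determinant in Eq. (4).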

The same expansion is then applied to every smaller determinant in Eq. (5), and the procedure repeats until no determinant remains and only scalar computation is left. Eq. (5) makes it evident that the higher the dimension of the square matrix, the higher the computation cost: an m×m determinant expands into m determinants of size (m−1)×(m−1), each of which is expanded in turn.
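The expansion can be sketched in Python to make its growth rate concrete. This sketch is an illustration, not the paper's procedure. It works on numeric matrices, whereas Eq. (5) expands a determinant whose diagonal entries contain λ, so each product there involves polynomials and costs more than the scalar products counted here. It counts one multiplication per term a_1j × minor, and the function names are hypothetical:

```python
def cofactor_det(mat):
    """Determinant by cofactor (Laplace) expansion along the first row.
    Returns (determinant, number of multiplications a_1j * minor)."""
    n = len(mat)
    if n == 1:
        return mat[0][0], 0
    det, mults = 0, 0
    for j in range(n):
        # Minor: delete row 1 and column j
        minor = [row[:j] + row[j + 1:] for row in mat[1:]]
        sub_det, sub_mults = cofactor_det(minor)
        # (-1)^(1+j) with 1-based indices is + for even 0-based j
        sign = 1 if j % 2 == 0 else -1
        det += sign * mat[0][j] * sub_det
        mults += sub_mults + 1  # the sign is only a flip, so count one product
    return det, mults


def cofactor_mults(n):
    """Same count from the recurrence M(1) = 0, M(n) = n * (M(n-1) + 1)."""
    count = 0
    for size in range(2, n + 1):
        count = size * (count + 1)
    return count


# One 8x8 determinant versus four 4x4 determinants
print(cofactor_mults(8), 4 * cofactor_mults(4))  # 69280 160
```

The count grows factorially with the dimension. Practical eigen-solvers avoid cofactor expansion in favor of O(m³) methods, which is the regime of the SVD cost in Eq. (7).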

Eq. (6) shows the result of dividing the determinant of the square matrix in Eq. (4) into four smaller ones.

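$$
\begin{vmatrix}
a_{11}-\lambda & a_{12} & \cdots & a_{1\frac{m}{2}} \\
a_{21} & a_{22}-\lambda & \cdots & a_{2\frac{m}{2}} \\
\vdots & \vdots & \ddots & \vdots \\
a_{\frac{m}{2}1} & a_{\frac{m}{2}2} & \cdots & a_{\frac{m}{2}\frac{m}{2}}-\lambda
\end{vmatrix}
+
\begin{vmatrix}
a_{1(\frac{m}{2}+1)} & \cdots & a_{1m} \\
\vdots & \ddots & \vdots \\
a_{\frac{m}{2}(\frac{m}{2}+1)} & \cdots & a_{\frac{m}{2}m}
\end{vmatrix}
+
\begin{vmatrix}
a_{(\frac{m}{2}+1)1} & \cdots & a_{(\frac{m}{2}+1)\frac{m}{2}} \\
\vdots & \ddots & \vdots \\
a_{m1} & \cdots & a_{m\frac{m}{2}}
\end{vmatrix}
+
\begin{vmatrix}
a_{(\frac{m}{2}+1)(\frac{m}{2}+1)}-\lambda & \cdots & a_{(\frac{m}{2}+1)m} \\
\vdots & \ddots & \vdots \\
a_{m(\frac{m}{2}+1)} & \cdots & a_{mm}-\lambda
\end{vmatrix}
= 0 \qquad (6)
$$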

Because computing a determinant by cofactor expansion takes O(m!) time, the total cost of computing the four smaller determinants in Eq. (6) is much less than the cost of Eq. (5). Solving the resulting equation, which is set to zero, yields the λ values. The eigenvectors are then calculated by substituting each λ value into its corresponding matrix in Eq. (6). The eigenvector associated with the largest λ is the most important feature, the one associated with the second largest λ is the second most important, and so on.
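As a small worked instance of this procedure, take an illustrative 2×2 symmetric matrix (chosen for this example, not taken from the paper):

$$
\begin{aligned}
A &= \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}, \qquad
\begin{vmatrix} 2-\lambda & 1 \\ 1 & 2-\lambda \end{vmatrix} = (2-\lambda)^2 - 1 = (\lambda - 3)(\lambda - 1) = 0, \\
\lambda_1 &= 3:\ (A - 3I)\,x = \begin{bmatrix} -1 & 1 \\ 1 & -1 \end{bmatrix} x = 0 \ \Rightarrow\ x_1 = \tfrac{1}{\sqrt{2}}\begin{bmatrix} 1 \\ 1 \end{bmatrix}, \\
\lambda_2 &= 1:\ (A - I)\,x = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} x = 0 \ \Rightarrow\ x_2 = \tfrac{1}{\sqrt{2}}\begin{bmatrix} 1 \\ -1 \end{bmatrix}.
\end{aligned}
$$

Because λ₁ = 3 is the larger eigenvalue, x₁ is the most important feature direction and x₂ is the second.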

Because computers use finite-precision binary arithmetic, eigen-decomposition of an ill-conditioned matrix can produce inaccurate results [19]. To avoid this problem, many studies use singular value decomposition (SVD) in place of eigen-decomposition.

For an m×n matrix, the computation cost of SVD is given by Eq. (7) [20].

$$
4m^2n + 8mn^2 + 9n^3 \qquad (7)
$$

The order of Eq. (7) is O(n³) when m < n, so the cost grows cubically with the matrix dimension and a change in dimension makes a large difference in computation cost. This implies that working on several smaller matrices, as SI2DPCA does, rather than on one larger original matrix lowers the computation cost of the eigen-decomposition process.
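The effect of Eq. (7) on SI2DPCA can be checked numerically. This is a minimal sketch that assumes the matrix is split into k equal blocks, with k a perfect square whose root divides both dimensions (the same partition the text uses for Table 1); the function names are illustrative:

```python
from math import isqrt


def svd_cost(m, n):
    """Operation count of Eq. (7) for an m x n matrix."""
    return 4 * m * m * n + 8 * m * n * n + 9 * n ** 3


def split_svd_cost(m, n, k):
    """Total Eq. (7) cost over k equal blocks of size (m/sqrt(k)) x (n/sqrt(k))."""
    r = isqrt(k)
    if r * r != k or m % r or n % r:
        raise ValueError("k must be a perfect square whose root divides m and n")
    return k * svd_cost(m // r, n // r)


for k in (1, 4, 16):
    print(k, split_svd_cost(112, 112, k))
```

For a square 112×112 matrix, the total cost halves with k = 4 and falls to a quarter with k = 16. It drops by a factor of √k because every term of Eq. (7) is cubic in the block dimensions.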

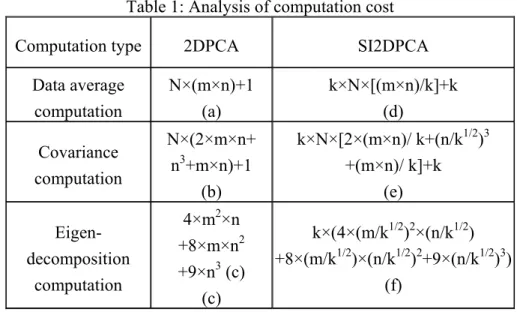

Table 1 details the cost formulas used to compare the computation cost of conventional 2DPCA with that of the proposed SI2DPCA. In Table 1, formula (a), derived from Eq. (2), gives the cost of computing the data average in 2DPCA. The factor N accounts for summing over N images, and the m×n term counts the element-wise additions of one matrix addition.

The added 1 in formula (a) accounts for the division in Eq. (2). Formula (b), derived from Eq. (1), gives the cost of computing the covariance matrix; N and the added 1 have the same meaning as in formula (a). Eq. (1) involves three operations. The first is subtracting the data average obtained by formula (a) from the original image data, which costs m×n; because Eq. (1) contains two such subtractions, the cost is 2×m×n. The second is the multiplication of the two matrices in Eq. (1), which costs n³. The third is transposing a matrix, which costs m×n. Formula (c), derived from Eq. (7), gives the cost of eigen-decomposition in 2DPCA.

The remaining formulas in Table 1 are for SI2DPCA. Suppose an original m×n image is divided equally into k smaller images, where k is a perfect square and m and n are multiples of √k. Each smaller matrix then has dimension (m/√k)×(n/√k). Eq. (2), Eq. (1), and Eq. (7) are applied, in that order, to each of the k smaller matrices. Formulas (d) to (f) therefore follow from formulas (a) to (c) by replacing the dimensions with (m/√k)×(n/√k) for each smaller matrix and summing the costs over all k of them.
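A minimal sketch of this partition follows. It assumes images stored as lists of rows and returns the sub-images in row-major block order; both are choices made here for illustration, and the function name is hypothetical:

```python
from math import isqrt


def split_into_subimages(image, k):
    """Split an m x n image (list of rows) into k equal sub-images of size
    (m/sqrt(k)) x (n/sqrt(k)), in row-major block order."""
    r = isqrt(k)
    m, n = len(image), len(image[0])
    if r * r != k or m % r or n % r:
        raise ValueError("k must be a perfect square whose root divides m and n")
    bh, bw = m // r, n // r  # block height and width
    return [[row[bc * bw:(bc + 1) * bw] for row in image[br * bh:(br + 1) * bh]]
            for br in range(r) for bc in range(r)]


blocks = split_into_subimages(
    [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]], 4)
print(blocks[0])  # [[1, 2], [5, 6]]
```

Each block then goes through Eq. (2), Eq. (1), and the eigen-decomposition on its own. For a 112×92 ORL image and k = 4, each block is 56×46.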

Table 1: Analysis of computation cost

| Computation type | 2DPCA | SI2DPCA |
|---|---|---|
| Data average computation | (a) N×(m×n) + 1 | (d) k×N×[(m×n)/k] + k |
| Covariance computation | (b) N×(2×m×n + n³ + m×n) + 1 | (e) k×N×[2×(m×n)/k + (n/√k)³ + (m×n)/k] + k |
| Eigen-decomposition computation | (c) 4×m²×n + 8×m×n² + 9×n³ | (f) k×[4×(m/√k)²×(n/√k) + 8×(m/√k)×(n/√k)² + 9×(n/√k)³] |

The discussion so far assumes an m×n image. To simplify the comparison, assume square images, i.e., m = n; the orders of magnitude for Table 1 are then summarized in Table 2. Because k is a constant, SI2DPCA has the same asymptotic time complexity as 2DPCA, but its actual computation cost is lower whenever k is greater than one, and the reduction grows as k increases. In general, the most reasonable choice is k = 4, which cuts the covariance and eigen-decomposition costs roughly in half.

Table 2: Time complexity analysis in big order for matrices

| Computation type | 2DPCA | SI2DPCA |
|---|---|---|
| Data average computation | m² | m² |
| Covariance computation | m³ | (m/√k)³ |
| Eigen-decomposition computation | m³ | (m/√k)³ |
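Table 2 lists the per-block order for SI2DPCA. Summing over the k blocks shows where the roughly 50% saving for k = 4 comes from. With m = n, formulas (c) and (f) of Table 1 give

$$
\begin{aligned}
\text{(c)} &= 4m^3 + 8m^3 + 9m^3 = 21m^3, \\
\text{(f)} &= k \cdot 21\left(\frac{m}{\sqrt{k}}\right)^3 = \frac{21m^3}{\sqrt{k}}, \qquad
\frac{\text{(f)}}{\text{(c)}} = \frac{1}{\sqrt{k}},
\end{aligned}
$$

and the dominant cubic term of the covariance cost scales the same way, from $N m^3$ in (b) to $k N (m/\sqrt{k})^3 = N m^3/\sqrt{k}$ in (e). For k = 4 both ratios are 1/2, and for k = 16 they are 1/4. Since k is a fixed constant, the asymptotic order remains $O(m^3)$, which is consistent with Table 2.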

The discussion above shows that SI2DPCA can reduce computation cost. However, the fundamental goal is face recognition, so the reduced computation cost must not come at the expense of recognition performance.

In SI2DPCA, after an original face image is divided equally into several smaller sub-images, each sub-image is processed individually for feature extraction. Because a sub-image is smaller, any important features in it are easier to find and therefore to extract. For example, a sub-image may contain only the eyes and hair, so these features and their detailed textures stand out clearly and are easy to recognize and extract in this relatively small image. A whole image, on the other hand, contains not only the eyes and hair but also many other features.

In that case, the features of the eyes and hair may not stand out in such a large amount of image data and therefore cannot be easily recognized. Even if these two features are recognized, their weights in the whole image may be smaller than those extracted from the smaller sub-images, because other features coexist in the larger image.
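The per-sub-image processing can be sketched as follows. This is a minimal illustration, assuming NumPy and the image covariance matrix of 2DPCA as defined in [11], $G = \frac{1}{N}\sum_j (A_j - \bar{A})^T (A_j - \bar{A})$. Each sub-image position gets its own projection axes, learned only from the training sub-images at that position. The function names are hypothetical, and the sketch omits any further weighting of the per-block features.

```python
import math
import numpy as np


def split_into_subimages(image, k):
    # Equal sqrt(k) x sqrt(k) grid of blocks in row-major order (assumed ordering)
    r = math.isqrt(k)
    bm, bn = image.shape[0] // r, image.shape[1] // r
    return [image[i * bm:(i + 1) * bm, j * bn:(j + 1) * bn]
            for i in range(r) for j in range(r)]


def fit_2dpca(images, d):
    """images: array of shape (N, h, w). Returns the w x d matrix whose columns are
    the eigenvectors of the image covariance matrix with the d largest eigenvalues."""
    centered = images - images.mean(axis=0)
    # G = (1/N) * sum_j (A_j - mean)^T (A_j - mean), a w x w matrix
    g = np.einsum("jab,jac->bc", centered, centered) / images.shape[0]
    _, eigvecs = np.linalg.eigh(g)      # eigenvalues in ascending order
    return eigvecs[:, ::-1][:, :d]      # keep the d leading projection axes


def fit_si2dpca(train_images, k, d):
    """One 2DPCA projection matrix per sub-image position (hypothetical helper)."""
    # blocks has shape (N, k, h/sqrt(k), w/sqrt(k))
    blocks = np.stack([np.stack(split_into_subimages(a, k)) for a in train_images])
    return [fit_2dpca(blocks[:, p], d) for p in range(k)]


def si2dpca_features(image, projections, k):
    """Project each sub-image onto its own axes: Y_p = A_p X_p."""
    return [a @ x for a, x in zip(split_into_subimages(image, k), projections)]
```

Because each covariance matrix here is only $(n/k^{1/2}) \times (n/k^{1/2})$, every eigen-decomposition works on a smaller matrix than in plain 2DPCA.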

3. Experiments and analysis

3.1 The ORL database

The ORL database [21] is a well-known face image database and is used for the experiments in this paper. It contains 40 individuals with 10 different images each, for a total of 400 face images. The images were taken with some tolerance for tilting and rotation of the face, up to 20 degrees [11][21]. All images in the ORL database are grayscale with dimension 112×92, and pixel values range from 0 to 255.

3.2 Experiments and analysis of SI2DPCA

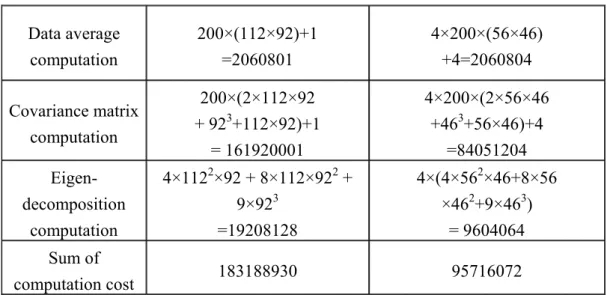

Using Table 1, the computation costs of SI2DPCA and 2DPCA can be calculated for the ORL images. Every image has dimension 112×92, so m = 112 and n = 92 in Table 1. Each image is divided into 4 sub-images, so k = 4. Suppose 200 images are used as training data (N = 200) and 8 features are extracted. Substituting these values into Table 1 gives the results in Table 3.

Table 3 shows that the computation cost of SI2DPCA is roughly half (about 52%) of that of 2DPCA. Computing the covariance matrices and the eigen-decomposition involves many quadratic and cubic terms, so the smaller matrix dimensions in SI2DPCA greatly reduce these costs, as discussed previously; the data averaging cost is essentially unchanged.

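Table 3: Analysis of computation cost for ORL database

| Computation type | 2DPCA | SI2DPCA |
|---|---|---|
| Data average computation | $200 \times (112 \times 92) + 1 = 2060801$ | $4 \times 200 \times (56 \times 46) + 4 = 2060804$ |
| Covariance matrix computation | $200 \times (2 \times 112 \times 92 + 92^3 + 112 \times 92) + 1 = 161920001$ | $4 \times 200 \times (2 \times 56 \times 46 + 46^3 + 56 \times 46) + 4 = 84051204$ |
| Eigen-decomposition computation | $4 \times 112^2 \times 92 + 8 \times 112 \times 92^2 + 9 \times 92^3 = 19208128$ | $4 \times (4 \times 56^2 \times 46 + 8 \times 56 \times 46^2 + 9 \times 46^3) = 9604064$ |
| Sum of computation cost | 183188930 | 95716072 |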
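The arithmetic in Table 3 can be reproduced by coding the formulas of Table 1 directly. This is a small checking aid, assuming k is a perfect square and that m and n are multiples of $k^{1/2}$ (both true for 112×92 with k = 4); the function names are hypothetical.

```python
import math


def cost_2dpca(m, n, N):
    """Operation counts (a), (b), (c) from Table 1."""
    avg = N * (m * n) + 1
    cov = N * (2 * m * n + n**3 + m * n) + 1
    eig = 4 * m**2 * n + 8 * m * n**2 + 9 * n**3
    return avg, cov, eig


def cost_si2dpca(m, n, N, k):
    """Operation counts (d), (e), (f) from Table 1 for k equal sub-images."""
    r = math.isqrt(k)        # k^(1/2), k assumed to be a perfect square
    bm, bn = m // r, n // r  # sub-image size (m/k^(1/2)) x (n/k^(1/2))
    avg = k * N * (bm * bn) + k  # (m*n)/k equals bm*bn when r divides m and n
    cov = k * N * (2 * bm * bn + bn**3 + bm * bn) + k
    eig = k * (4 * bm**2 * bn + 8 * bm * bn**2 + 9 * bn**3)
    return avg, cov, eig


full = cost_2dpca(112, 92, 200)
sub = cost_si2dpca(112, 92, 200, 4)
print(full, sum(full))                 # (2060801, 161920001, 19208128) 183188930
print(sub, sum(sub))                   # (2060804, 84051204, 9604064) 95716072
print(f"{sum(sub) / sum(full):.1%}")   # 52.2%
```

The last line gives the ratio behind the "roughly half" statement above.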

Besides computation cost, the recognition performance of the proposed SI2DPCA and conventional 2DPCA must also be compared. Recognition is performed by the nearest neighbor rule (NNR) [11], based on Euclidean distance.

In this experiment, the first 5 images of every individual are used for training and the remaining 5 images for testing, giving 200 training images and 200 testing images. Eight features are extracted, i.e. the eight projection axes with the largest eigenvalues are retained. The projected features of each training and testing image are obtained by multiplying the image data by these eight projection axes, and each testing image is then classified by NNR against the training images.
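The evaluation protocol can be sketched as follows. This is a minimal illustration, assuming NumPy, the data arranged as an array of shape (individuals, images per individual, h, w) (for ORL, (40, 10, 112, 92)), and the distance used for 2DPCA in [11]: the sum of Euclidean distances between corresponding projected column vectors of two feature matrices. The helper names are hypothetical; the classifier takes already-projected feature matrices as input.

```python
import numpy as np


def first_n_split(images_by_subject, n_train=5):
    """First n_train images of each individual for training, the rest for testing.
    images_by_subject: array of shape (subjects, images_per_subject, h, w)."""
    subjects, per_subject = images_by_subject.shape[:2]
    item_shape = images_by_subject.shape[2:]
    train = images_by_subject[:, :n_train].reshape(-1, *item_shape)
    test = images_by_subject[:, n_train:].reshape(-1, *item_shape)
    train_labels = np.repeat(np.arange(subjects), n_train)
    test_labels = np.repeat(np.arange(subjects), per_subject - n_train)
    return train, train_labels, test, test_labels


def feature_distance(f1, f2):
    """Sum of Euclidean distances between corresponding columns (projected vectors)."""
    return float(np.linalg.norm(f1 - f2, axis=0).sum())


def nnr_classify(test_feat, train_feats, train_labels):
    """Nearest neighbor rule: return the label of the closest training sample."""
    dists = [feature_distance(test_feat, f) for f in train_feats]
    return train_labels[int(np.argmin(dists))]


def recognition_rate(test_feats, test_labels, train_feats, train_labels):
    """Fraction of testing samples whose NNR label matches the true label."""
    preds = [nnr_classify(f, train_feats, train_labels) for f in test_feats]
    return float(np.mean([p == t for p, t in zip(preds, test_labels)]))
```

With n_train = 5 on the ORL array, `first_n_split` gives the 200/200 split described above.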

Table 4 compares the recognition rates of 2DPCA and SI2DPCA. The earlier discussion argued that important features can be better recognized and extracted in smaller sub-images, and Table 4 indeed shows a slightly better recognition rate for SI2DPCA than for conventional 2DPCA. Together, Table 3 and Table 4 show that SI2DPCA reduces computation cost without compromising recognition performance.

Table 4: Recognition comparison between 2DPCA and SI2DPCA

| Strategy | Recognition rate |
|---|---|
| 2DPCA | 93% |
| SI2DPCA | 93.5% |

Various methods based on 2DPCA have been proposed. Table 5 compares the recognition rate and computation cost of several better-known approaches with those of SI2DPCA; all experiments in Table 5 were conducted on the face images of the ORL database. The computation costs of methods 1, 2 and 3 are all higher than that of SI2DPCA, while their recognition rates are lower than or equal to that of SI2DPCA. SI2DPCA has the lower cost because it operates on matrices of smaller dimension. Methods 5 and 6 add further processing to 2DPCA. Method 5 combines 2DPCA with a kernel method, which projects the image data into a high-dimensional space and therefore has a high computation cost. Although its recognition rate is slightly better than that of SI2DPCA, its much higher computation cost makes it difficult to use in practical applications. Method 6 combines feature fusion with 2DPCA to increase the recognition rate. Its recognition rate of 98.1% is better than the 93.5% obtained by SI2DPCA in our experiment. However, its computation cost is at least 10 times that of 2DPCA, which makes it impractical for real applications.

Table 5: Comparison among other methods and SI2DPCA

| Method number | Method | Recognition rate | Computation cost |
|---|---|---|---|
| 1 | (2D)²PCA [12] | 90.5% | high |
| 2 | 2DPCA + fusion method based on bidirectional 2DPCA [17] | 92.5% | high |
| 3 | 2DPCA + 2DLDA [14] | 93.5% | high |
| 4 | SI2DPCA (proposed) | 93.5% | low |
| 5 | 2DPCA + Kernel [22] | 94.58% | very high |
| 6 | 2DPCA + feature fusion approach [23] | 98.1% | very very high |

4. Conclusion

2DPCA is a feature extraction algorithm developed specifically for face recognition. Its low computation cost and good feature extraction have made it a popular approach for face recognition. This paper proposes an enhanced approach, SI2DPCA, that operates at an even lower computation cost without compromising recognition performance. The experimental results show that both goals, reducing computation cost and maintaining a good recognition rate, are achieved.

References

[1] A. Z. Kouzani, F. He, and K. Sammut, “Towards invariant face recognition,” Inf. Sci., vol. 123, pp. 75–101, 2000.

[2] M. J. Er, S. Wu, J. Lu, and H. L. Toh, “Face recognition with radial basis function RBF neural networks,” IEEE Trans. Neural Netw., vol. 13, no. 3, pp. 697–710, May 2002.

[3] F. Yang and M. Paindavoine, “Implementation of an RBF neural network on embedded systems: Real-time face tracking and identity verification,” IEEE Trans. Neural Netw., vol. 14, no. 5, pp. 1162–1175, Sep. 2003.

[4] J. Lu, K. N. Plataniotis, and A. N. Venetsanopoulos, “Face recognition using kernel direct discriminant analysis algorithms,” IEEE Trans. Neural Netw., vol. 14, no. 1, pp. 117–126, Jan. 2003.

[5] B. K. Gunturk, A. U. Batur, and Y. Altunbasak, “Eigenface-domain super-resolution for face recognition,” IEEE Trans. Image Process., vol. 12, no. 5, pp. 597–606, May 2003.

[6] B. L. Zhang, H. Zhang, and S. S. Ge, “Face recognition by applying wavelet subband representation and kernel associative memory,” IEEE Trans. Neural Netw., vol. 15, no. 1, pp. 166–177, Jan. 2004.

[7] Q. Liu, X. Tang, H. Lu, and S. Ma, “Face recognition using kernel scatter-difference-based discriminant analysis,” IEEE Trans. Neural Netw., vol. 17, no. 4, pp. 1081–1085, Jul. 2006.

[8] W. Zheng, X. Zhou, C. Zou, and L. Zhao, “Facial expression recognition using kernel canonical correlation analysis (KCCA),” IEEE Trans. Neural Netw., vol. 17, no. 1, pp. 233–238, Jan. 2006.

[9] X. Tan, S. Chen, Z. H. Zhou, and F. Zhang, “Recognizing partially occluded, expression variant faces from single training image per person with SOM and soft k-NN ensemble,” IEEE Trans. Neural Netw., vol. 16, no. 4, pp. 875–886, Jul. 2005.

[10] G. Huang, “Fusion (2D)²PCALDA: A new method for face recognition,” Applied Mathematics and Computation, vol. 216, pp. 3195–3199, 2010.

[11] J. Yang, D. Zhang, A. F. Frangi, and J. Y. Yang, “Two-Dimensional PCA: A New Approach to Appearance-Based Face Representation and Recognition,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 26, no. 1, pp. 131–137, Jan. 2004.

[12] D. Zhang and Z. H. Zhou, “(2D)²PCA: Two-directional two-dimensional PCA for efficient face representation and recognition,” Neurocomputing, vol. 69, pp. 224–231, Jun. 2005.

[13] W. H. Yang and D. Q. Dai, “Two-Dimensional Maximum Margin Feature Extraction for Face Recognition,” IEEE Transactions on Systems, Man and Cybernetics, vol. 39, no. 4, pp. 1002–1012, Aug. 2009.

[14] P. Sanguansat, W. Asdornwised, S. Jitapunkul and S. Marukatat, “Two-dimensional linear discriminant analysis of principle component vectors for face recognition,” IEEE, pp. 345–348, 2006.

[15] J. Meng and W. Zhang, “Volume measure in 2DPCA-based face recognition,” Pattern Recognition Letters, vol. 28, pp. 1203–1208, Jan. 2007.

[16] H. Wang, S. Chen, Z. Hu and B. Luo, “Probabilistic two-dimensional principal component analysis and its mixture model for face recognition,” Neural Comput. & Applic. (Springer), vol. 17, pp. 541–547, 2008.

[17] Y. G. Kim, Y. J. Song, U. D. Chang, D. W. Kim, T. S. Yun and J. H. Ahn, “Face recognition using a fusion method based on bidirectional 2DPCA,” Applied Mathematics and Computation, vol. 205, pp. 601–607, 2008.

[18] Y. Qi and J. Zhang, “(2D)²PCALDA: An efficient approach for face recognition,” Applied Mathematics and Computation, vol. 213, no. 1, pp. 1–7, Jul. 2009.

[19] S. J. Leon, Linear Algebra with Applications, 7th ed. Prentice Hall, 2006.

[20] N. Navab, 3D Computer Vision, Winter Term 2005/06.

[21] “The ORL face database,” http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.htm

[22] N. Sun, H. X. Wang, Z. H. Ji, C. R. Zou and L. Zhao, “An efficient algorithm for Kernel two-dimensional principal component analysis,” Neural Comput. & Applic., vol. 17, pp. 59–64, 2008.

[23] Y. Xu, D. Zhang, J. Yang and J. Y. Yang, “An approach for directly extracting features from matrix data and its application in face recognition,” Neurocomputing, vol. 71, pp. 1857–1865, Feb. 2008.


Conference on Artificial Intelligence (ICAI 2012) - decision on your paper (ICA2902)

Thursday, April 26, 2012 7:45 AM

From: "Steering Committee" <sc@world-comp.org>


To: yingkyang@yahoo.com

Cc: yingkyang@yahoo.com

Dear Dr. Yingkuei Yang:

I am pleased to inform you that the following paper which you submitted to:

The 14th International Conference on Artificial Intelligence

(ICAI'12: July 16-19, 2012, USA) has been accepted as a Regular Research Paper (RRP) - ie, accepted for both, publication in the proceedings and oral formal presentation. Please see below for the categories of accepted papers.

Paper ID #: ICA2902

Title: A Fuzzy-Reasoning Radial Basis Function Neural Network with Reinforcement Learning Method

Author(s): Ying-Kuei Yang, Jin-Yu Lin, Wei-Li Fang and Jung-Kuei Pan

Note: The "paper ID #" shown above is composed of three letters (conference prefix) followed by four numeral/digits. You will need to have this "Paper ID #" at the time of registration and final paper submission (for publication).


A Fuzzy-Reasoning Radial Basis Function Neural Network with Reinforcement Learning Method

Ying-Kuei Yang, Jin-Yu Lin, Wei-Li Fang and Jung-Kuei Pan

Dept. of Electrical Engineering, National Taiwan University of Science & Technology, Taipei, TAIWAN

e-mail: yingkyang@yahoo.com

Abstract

The performance of rule-based fuzzy systems relies primarily on their rules and membership functions.

Some researchers have proposed self-constructing rule-based fuzzy systems based on reinforcement learning, which often result in a 5-layer neural network. The Fuzzy-reasoning Radial Basis Function Neural Network (FRBFN) proposed in this paper has only 3 layers, which reduces its forward computation time. The reasoning time is reduced by eliminating fuzzification and defuzzification from the learning system. The membership functions of each rule are fine-tuned through the learning procedure. The Radial Basis Function Neural Network (RBFN) is employed in FRBFN to provide generality and smoothness. Experimental results show that the proposed network can train a rule-based fuzzy system well through reinforcement learning, with good performance.

Keywords: fuzzy systems, radial basis function neural network, reinforcement learning, supervised learning

1. Motivation

Rule-based fuzzy systems have been used successfully in many real-world control applications [1]. Many researchers have studied how a system can construct its own rules and membership functions through learning [2][3][4][5]. Supervised learning requires a sufficiently large set of training samples; reinforcement learning is used to eliminate this requirement.

Reinforcement learning is based on trial and error, so no precise training pairs are required [6][7][8][9][10].

Most researchers have modeled a fuzzy system as a five-layer neural network, mapping the input variables, input membership functions, rule base, output membership functions and output variables to the five layers respectively. Using five layers creates a complex neural network with a high computation cost. This paper proposes a neural network with fewer layers that performs the same function. The idea comes from the similarity between a Radial Basis Function Neural Network (RBFN) and a rule-based fuzzy system [11][12]. The proposed network has only three layers: an input layer, a hidden layer and an output layer. Reducing the number of layers decreases the computation cost and speeds up the learning of the network system.

2. The Fuzzy-Reasoning Radial Basis Function Neural Network (FRBFN)

2.1 Network Architecture

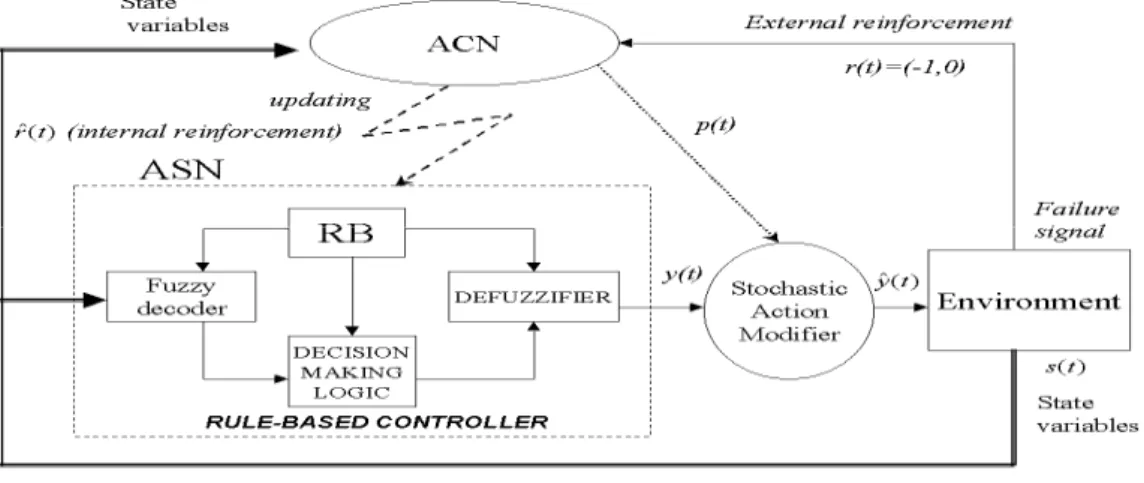

The logical network of the proposed FRBFN is shown in Figure 1.

Figure 1. Logical Network Architecture of FRBFN

Figure 1 contains four functional blocks. The Action Critic Network (ACN), Action Selection Network (ASN) and Stochastic Action Modifier (SAM) are the basic components of the FRBFN network, and the environment block on the right is the target to be controlled.

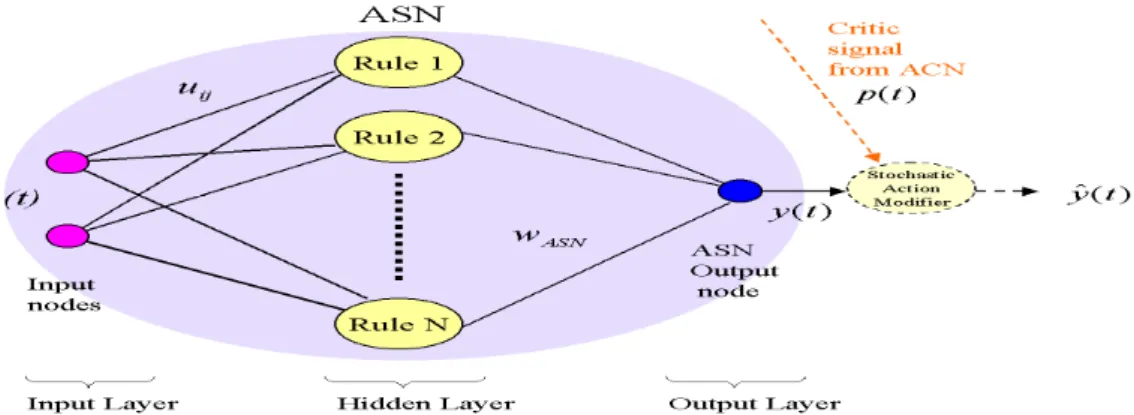

2.2 Function of ASN

ASN acts as a rule-based fuzzy logic controller that includes the three basic components of a fuzzy system: fuzzification, inference engine and defuzzification [1]. Because of the equivalence between rule-based fuzzy systems and RBFNs [11][12], an RBFN is used to implement the ASN. The RBFN performs the task of a fuzzy system while eliminating the fuzzification and defuzzification procedures. The network architecture of the ASN implemented by an RBFN is shown in Figure 2. ASN receives the state variables s(t) from the environment, such as a controlled plant, and the internal reinforcement signal $\hat{r}(t)$ generated by ACN. The state variables provide information about the plant’s current status. In the associated reinforcement learning system, both the state variables and the internal reinforcement signal are used to update the learning parameters in ASN, whose values are learned from s(t) and $\hat{r}(t)$.

Figure 2. Block diagram of Action Selection Network (ASN)

The output of ASN is not applied directly to the controlled plant. Instead, the ASN output y(t) is first sent to the Stochastic Action Modifier (SAM). The SAM then tries to improve y(t) by generating a stochastically modified output $\hat{y}(t)$ based on the predicted reinforcement signal p(t) at that time.

$$\hat{y}(t) = N\big(y(t), \sigma(t)\big) \tag{1}$$

where N is a normal (Gaussian) distribution function with mean y(t) and variance σ(t). A small value of σ(t) indicates that the system is close to a stable situation. For our learning algorithm, we choose σ(t) as the following function of p(t):

$$\sigma(t) = \frac{1}{1 + e^{2p(t)}} \tag{2}$$

where p(t) is generated by ACN and is the signal used to predict reinforcement signal r(t).
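Equations (1) and (2) can be sketched in Python as follows. This is a minimal illustration, assuming p(t) is a scalar and treating σ(t) as the variance of the Gaussian, as stated above, so the standard deviation passed to the sampler is its square root. The function names are hypothetical.

```python
import math
import random


def exploration_variance(p: float) -> float:
    """Eq. (2): sigma(t) = 1 / (1 + exp(2 p(t))), written to avoid overflow."""
    if p >= 0:
        e = math.exp(-2.0 * p)
        return e / (1.0 + e)  # algebraically equal to 1 / (1 + exp(2p))
    return 1.0 / (1.0 + math.exp(2.0 * p))


def stochastic_action_modifier(y: float, p: float, rng: random.Random) -> float:
    """Eq. (1): draw y_hat(t) from a Gaussian with mean y(t) and variance sigma(t)."""
    variance = exploration_variance(p)
    return rng.gauss(y, math.sqrt(variance))
```

As the predicted reinforcement p(t) grows, σ(t) shrinks toward zero and $\hat{y}(t)$ stays close to y(t); a low p(t) widens the distribution and encourages exploration.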

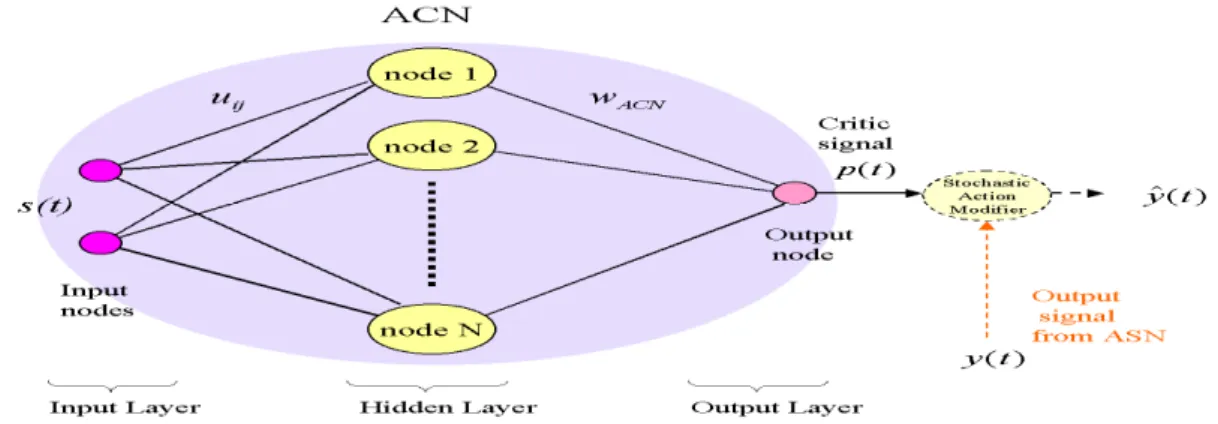

2.3 Function of ACN

The ACN shown in Figure 1 generates the signals p(t) and $\hat{r}(t)$ from the state variables and the external reinforcement signal. The output p(t) is a prediction signal and $\hat{r}(t)$ is an internal reinforcement signal. The purpose of p(t) is to predict the infinite discounted prediction signal z(t), and $\hat{r}(t)$ is the difference between p(t) and z(t). The objective of ACN is to model the environment so that it can perform a multi-step prediction of the reinforcement signal for the current action chosen by ASN. An RBFN is used to implement the ACN because an RBFN can approximate real-valued continuous or piecewise continuous mappings. Another reason to use an RBFN is the overall architecture of FRBFN: if ASN and ACN use the same neural network architecture, some layers of the RBFN can be shared. The network diagram of ACN is shown in Figure 3.
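This section does not spell out how $\hat{r}(t)$ is computed from p(t). One common choice in adaptive critic systems, shown here only as an assumption, compares the previous prediction p(t−1) with the one-step estimate r(t) + γp(t) of the discounted signal, giving $\hat{r}(t) = r(t) + \gamma p(t) - p(t-1)$, with the γp(t) term dropped at a failure (terminal) step. The discount factor `gamma` and the function name are hypothetical.

```python
def internal_reinforcement(r_t: float, p_t: float, p_prev: float,
                           gamma: float = 0.9, terminal: bool = False) -> float:
    """Assumed temporal-difference form of the internal reinforcement r_hat(t).

    r_t:    external reinforcement r(t)
    p_t:    current ACN prediction p(t)
    p_prev: previous ACN prediction p(t-1)
    """
    # One-step estimate of the discounted reinforcement; no future term at failure
    target = r_t if terminal else r_t + gamma * p_t
    return target - p_prev
```

A positive value means the outcome was better than the critic predicted, which is the signal that would reinforce the action chosen by ASN.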

Figure 3. Block diagram of Action Critic Network (ACN)

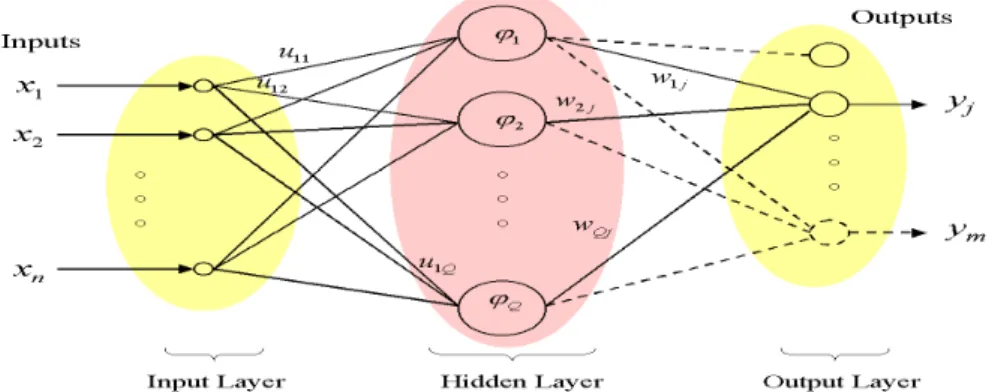

ASN and ACN have identical input spaces because both receive the same state information s(t) from the same environment, so their input and hidden layers can be shared. Sharing the first two layers makes the architecture of the proposed FRBFN more compact. The network shown in Figure 4, composed of one ASN and one ACN, is the final version of FRBFN.

Figure 4. FRBFN implementation diagram

The network diagrams of ASN and ACN shown in Figure 2 and Figure 3 are merged in Figure 4.

There are three reasons to combine the two networks: (1) ASN and ACN have the same network architecture; (2) the input state space of the two networks is identical; (3) the numbers of hidden nodes in ASN and ACN are identical because of the same input state space. Sharing layers 1 and 2 between ACN and ASN reduces the memory required for storage and eliminates the need to recalculate the outputs of the hidden nodes. In the learning phase, the widths and centers of the hidden nodes are learned only once because ACN and ASN use the same hidden nodes. Each network keeps its own weight parameters between layer 2 and layer 3. As a result, the FRBFN architecture is simpler and has fewer learning parameters than those in [3][4][5][6].
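A minimal sketch of the shared layers in Figure 4, assuming NumPy, Gaussian hidden nodes with one width per node, and a plain weighted-sum output for each network (the exact node functions of FRBFN are not given in this section). The hidden activations are computed once and reused for both the ASN output y(t) and the ACN prediction p(t); only the layer-2-to-layer-3 weights differ. All names are hypothetical.

```python
import numpy as np


def frbfn_forward(s, centers, widths, w_asn, w_acn):
    """s: state vector (dim,); centers: (H, dim); widths: (H,); w_asn, w_acn: (H,).
    Returns (y, p): the ASN action output and the ACN reinforcement prediction."""
    # Layer 2: Gaussian hidden nodes, shared by ASN and ACN (computed once)
    sq_dist = np.sum((centers - s) ** 2, axis=1)
    phi = np.exp(-sq_dist / (2.0 * widths ** 2))
    # Layer 3: each network has its own weight vector on the shared activations
    y = float(w_asn @ phi)  # ASN output y(t)
    p = float(w_acn @ phi)  # ACN prediction p(t)
    return y, p
```

In this layout the centers and widths are a single set of parameters that both networks use, which matches the statement above that they need to be learned only once.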
