National Chiao Tung University

Institute of Communication Engineering

Doctoral Dissertation

Resource Allocation for Real-Time and Non-Real-Time Traffic in Wireless Networks

Student: Yu-Wen Huang

Advisor: Dr. Tsern-Huei Lee

Resource Allocation for Real-Time and Non-Real-Time Traffic in Wireless Networks

Student: Yu-Wen Huang

Advisor: Dr. Tsern-Huei Lee

A Dissertation

Submitted to Institute of Communication Engineering

College of Electrical Engineering

National Chiao Tung University

in Partial Fulfillment of the Requirements

for the Degree of Doctor of Philosophy

in

Communication Engineering

January 2012

Hsinchu, Taiwan

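Resource Allocation for Real-Time and Non-Real-Time Traffic in Wireless Networks

Student: Yu-Wen Huang Advisor: Dr. Tsern-Huei Lee

Institute of Communication Engineering, National Chiao Tung University

Abstract (Chinese)

In this dissertation, we first study resource allocation techniques for wireless networks (IEEE 802.11e WLANs and OFDMA-based systems). We then apply the experience gained to a general multiplexer for real-time traffic in wired systems.

For IEEE 802.11e WLANs, we generalize the sample scheduler of the IEEE 802.11e HCCA standard with an efficient TXOP allocation algorithm, a multiplexing mechanism, and an associated admission control unit, so as to guarantee the different QoS requirements (delay bound and packet loss probability) of different variable bit rate flows. We define equivalent flows and aggregate packet loss probability to obtain intra-flow and inter-flow multiplexing gains and thereby achieve high bandwidth efficiency. In addition, we adopt a weighted-loss fair service scheduling algorithm to distribute the aggregate TXOP among individual flows. Computer simulation results show that the proposed method meets the QoS requirements of the flows and achieves higher bandwidth efficiency than previous work.

For OFDMA-based systems, we propose a resource allocation algorithm that handles real-time and non-real-time traffic together. For real-time traffic, the QoS requirements are assumed to be a delay bound and a data loss probability. A "minimum requested bandwidth" is computed from each flow's delay bound and loss probability, and resource allocation is formulated as an optimization problem that maximizes system throughput subject to satisfying the minimum requested bandwidth of every flow. After resources are allocated, if a user is attached with multiple flows, a proportional-loss scheduling algorithm determines how the resources are divided among them. If the available resources cannot provide every flow its minimum requested bandwidth, the problem is changed to maximizing the amount of real-time traffic transmitted, with the resources obtained by each user not exceeding its minimum requested bandwidth. We also design a "pre-processor" to maximize the number of flows that meet their QoS requirements. We prove that, in any frame, if any scheduling algorithm can satisfy the QoS requirements of the flows, then so can the proposed proportional-loss scheduling algorithm. Computer simulation results also show that the proposed algorithm outperforms previous work.

In the last part of the dissertation, we study a multiplexing system that handles variable-length packets. We propose a proportional-loss queue management algorithm which, combined with earliest-deadline-first scheduling, supports the different QoS requirements (delay bound and data loss probability) of real-time traffic. We show that, with effective bandwidth as the metric, the proposed proportional-loss algorithm is the optimal queue management algorithm. The proportional-loss algorithm assumes that packets can be divided without limit. To better match real packet-switched networks, we also propose two queue management algorithms that take the packet as the basic unit. One is a direct extension of the G-QoS algorithm, and the other is designed from the results of the proportional-loss algorithm. Computer simulation results indicate that the packet-based algorithm designed from the proportional-loss algorithm performs better than the direct extension of G-QoS.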

Resource Allocation for Real-Time and Non-Real-Time Traffic

in Wireless Networks

Student: Yu-Wen Huang Advisor: Dr. Tsern-Huei Lee

Institute of Communication Engineering

National Chiao Tung University

Abstract

In this dissertation, we first study resource allocation techniques for wireless networks such as IEEE 802.11e WLANs and OFDMA-based systems. We then extend the developed results to a general multiplexer for real-time traffic in wired systems.

In IEEE 802.11e WLANs, we generalize the sample scheduler described in IEEE 802.11e HCCA standard with an efficient TXOP allocation algorithm, a multiplexing mechanism, and the associated admission control unit to guarantee QoS for VBR flows with different delay bound and packet loss probability requirements. We define equivalent flows and aggregate packet loss probability to take advantage of both intra-flow and inter-flow multiplexing gains so that high bandwidth efficiency can be achieved. Moreover, the concept of proportional-loss fair service scheduling is adopted to allocate the aggregate TXOP to individual flows. From numerical results obtained by computer simulations, we found that our proposed scheme meets QoS requirements and results in much higher bandwidth efficiency than previous algorithms.

For OFDMA-based systems, we propose a resource allocation algorithm which handles both real-time and non-real-time traffic. For real-time traffic, the QoS requirements are specified with delay bound and loss probability. The resource allocation problem is formulated as one which maximizes system throughput subject to the constraint that the bandwidth allocated to a flow is no less than its minimum requested bandwidth, a value computed based on the loss probability requirement and the running loss probability. A user-level proportional-loss scheduler is adopted to determine the resource share for flows attached to the same subscriber station (SS). In case the available resource is not sufficient to provide every flow its minimum requested bandwidth, we maximize the amount of real-time traffic transmitted subject to the constraint that the bandwidth allocated to an SS is no greater than the sum of the minimum requested bandwidths of all flows attached to it. Moreover, a pre-processor is added to maximize the number of real-time flows attached to each SS that meet their QoS requirements. We show that, in any frame, the proposed proportional-loss scheduler guarantees QoS if there is any scheduler which guarantees QoS. Simulation results reveal that our proposed algorithm performs better than previous works.

Finally, we study a multiplexing system which handles variable-length packets. A proportional-loss (PL) queue management algorithm is proposed for packet discarding which, combined with the work-conserving EDF service discipline, can provide QoS guarantees for real-time traffic flows with different delay bound and loss probability requirements. We show that the proposed PL queue management algorithm is optimal because it minimizes the effective bandwidth among all stable and generalized space-conserving schemes. The PL queue management algorithm is presented for fluid-flow models. Two packet-based algorithms are investigated for real packet-switched networks. One of the two algorithms is a direct extension of the G-QoS scheme and the other is derived from the proposed fluid-flow based PL queue management algorithm. Simulation results show that the scheme derived from our proposed PL queue management algorithm performs better than the one directly extended from the G-QoS scheme.

Acknowledgements

First of all, I would like to express my deep gratitude to my advisor, Dr. Tsern-Huei Lee, for his constant teaching, support and encouragement.

Secondly, I would like to express my sincere appreciation to my parents, my family and my girlfriend for their love, support and encouragement.

Special thanks to all the staff of the Network Technology Laboratory, Institute of Communication Engineering, National Chiao Tung University, for their enthusiastic help.

Finally, this dissertation is dedicated to my dear grandma in heaven. I will keep her teaching, love and spirit in my mind forever.

Contents

Abstract (Chinese)

Acknowledgements

Contents

List of Tables

List of Figures

Chapter 1 Introduction

Chapter 2 Related Works

2.1. Resource Allocation in IEEE 802.11e HCCA

2.2. Resource Allocation in OFDMA-Based Systems

2.3. Optimal Queue Management Algorithm for ATM Networks

Chapter 3 Resource Allocation for Real-Time Traffic in IEEE 802.11e WLANs

3.1. System Model

3.2. Aggregate TXOP Allocation Algorithm

3.3. Proportional-loss Service Scheduler

3.4. The Associated Admission Control Unit

3.5. Simulation Results

Chapter 4 Resource Allocation for Real-Time and Non-Real-Time Traffic in OFDMA-Based Systems

4.1. System Model

4.2. The Proposed Scheme

4.3. Simulation Results

Chapter 5 Optimal Queue Management Algorithm for Real-Time Traffic

5.1. System Model

5.3. Packet-based Systems

5.4. Simulation Results

Chapter 6 Conclusions

Bibliography

Appendix A Derivations of all equations and proofs of all lemmas and theorems

Appendix B Pseudo codes of the proposed algorithms

Vita

List of Tables

Chapter 3

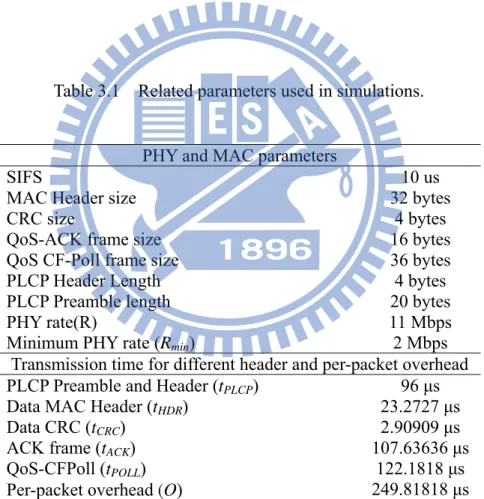

Table 3.1 Related parameters used in simulations. ...42 Table 3.2 TSPECs of traffic flows attached to Type I, Type II and Type III QSTAs...43 Table 3.3 The 99% confidence intervals of packet loss probability of flows attached to Type I, Type II and Type III QSTAs. ...44 Table 3.4 Over-allocation Ratio of Type I, Type II and Type III QSTAs...49 Table 3.5 Performance comparison for our proposed scheme and PRO-HCCA. ...51

Chapter 4

Table 4.1 Calculation of *

,n k

R t and the resulting Pn k*,

t for four conditions...57Table 4.2 Parameters of simulation environment, traffic characteristics, QoS requirements and adopted modulation and coding scheme...67 Table 4.3 Loss probabilities for users attached with one Type I and one Type II real-time flows. ...74 Table 4.4 Number of Type I and Type II flows which meet their QoS requirements in the

second scenario...74

Chapter 5

Table 5.1 and k , k 1 k 5 for the example illustrated in Fig. 5.2...86

Table 5.2 Traffic characteristics and QoS requirements of the five flows generated from video trace files...98 Table 5.3 Steady-state (normalized) packet loss probability for flows generated from video

List of Figures

Chapter 3

Fig. 3.1 Static and periodic schedule for 802.11e HCCA...24

Fig. 3.2 The system architecture of our proposed scheme...26

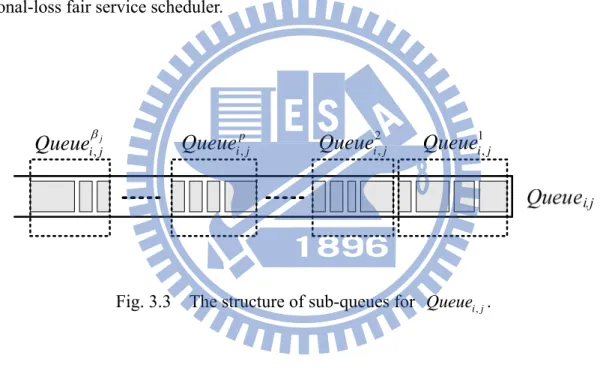

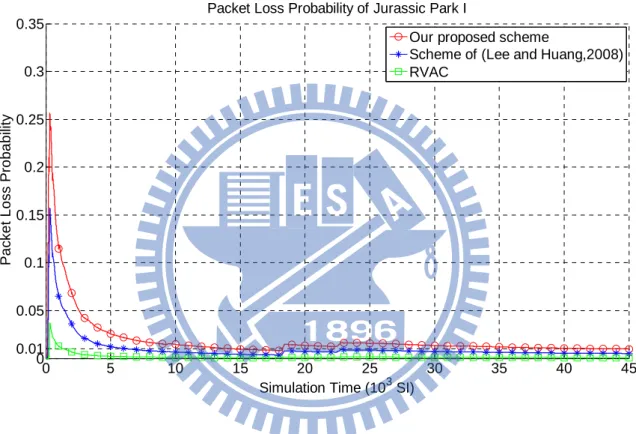

Fig. 3.3 The structure of sub-queues for Queue . ...33 i j, Fig. 3.4 Running packet loss probabilities of Jurassic Park I attached to Type I QSTA. ...46

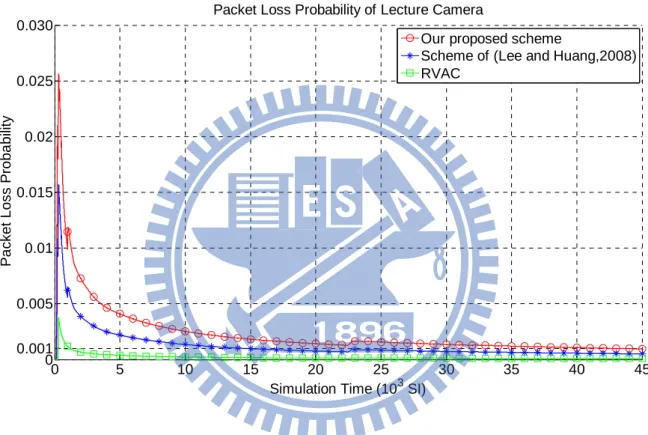

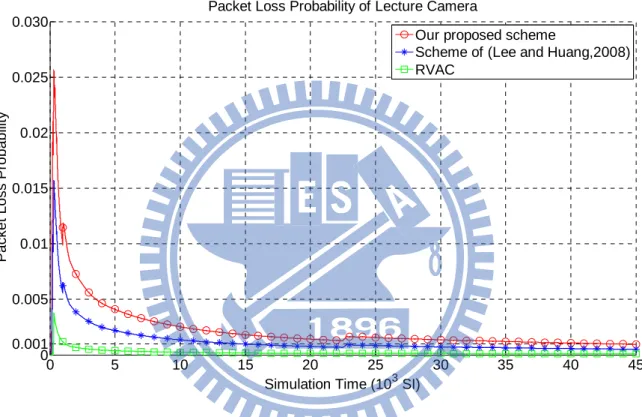

Fig. 3.5 Running packet loss probabilities of Lecture Camera attached to Type I QSTA. ...47

Fig. 3.6 Running packet loss probabilities of Lecture Camera attached to Type I QSTA. ...48

Fig. 3.7 Comparison of admissible region...51

Chapter 4 Fig. 4.1 Architecture of the proposed scheme. ...54

Fig. 4.2 The relationship between Pn k,

t and Rn k,

t ...56Fig. 4.3 Throughputs of various schemes in the first scenario. ...69

Fig. 4.4 Loss probabilities of SSs attached with real-time traffic flows in the first scenario. ....70

Fig. 4.5 Throughput comparison between proposed:ILP and proposed:Matrix schemes...75

Fig. 4.6 Loss probability comparison between proposed:ILP and proposed:Matrix schemes. ..76

Fig. 4.7 Throughputs of various schemes in the second scenario...77

Chapter 5

Fig. 5.1 Architecture of the investigated multiplexer system and the structure of virtual sub-queues, m

k

Fig. 5.2 An example for illustrating PL queue management algorithm for traffic flows with identical delay bound requirement...85 Fig. 5.3 An example for illustrating PL queue management algorithm for traffic flows with

different delay bound requirement...94 Fig. 5.4 Sample Path of packet loss probability for video trace files with (a) our proposed PL queue management algorithm (b) Packet-based algorithm I (c) Packet-based algorithm II adopted. ...103

Chapter 1

Introduction

Because of the rapid proliferation of real-time multimedia applications such as VoIP and streaming video, providing quality of service (QoS) guarantees for individual traffic flows in current communication networks has become an important issue. Generally speaking, QoS provisioning includes guarantees on maximum packet delay and packet loss probability.

For a traffic flow, the maximum tolerable delay of all its packets is called the delay bound of the flow. Packet loss probability is normally defined as the ratio of packets which are discarded due to buffer overflow or deadline violation to the total number of packets arrived. Buffer overflow occurs if a packet arrives when buffer is full, and deadline violation means that a packet is placed in the buffer longer than its delay bound. It is often acceptable for a real-time application to lose some packets as long as the packet loss probability is below a desired pre-specified value.
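The definition of packet loss probability above can be illustrated with a minimal sketch. It assumes a slotted FIFO buffer; the function name `loss_probability` and its parameters are hypothetical and are not taken from the dissertation. Packets still buffered when the trace ends are not counted as lost.

```python
from collections import deque


def loss_probability(arrivals, service_per_slot, buffer_size, delay_bound):
    """Fraction of arrived packets lost to overflow or deadline violation.

    arrivals[t] is the number of packets arriving in slot t (assumed model).
    """
    queue = deque()          # arrival slot of every buffered packet (FIFO)
    arrived = lost = 0
    for t, count in enumerate(arrivals):
        # Deadline violation: drop packets buffered longer than the delay bound.
        while queue and t - queue[0] > delay_bound:
            queue.popleft()
            lost += 1
        # Buffer overflow: an arrival that finds the buffer full is dropped.
        for _ in range(count):
            arrived += 1
            if len(queue) >= buffer_size:
                lost += 1
            else:
                queue.append(t)
        # Serve up to service_per_slot packets in FIFO order.
        for _ in range(min(service_per_slot, len(queue))):
            queue.popleft()
    return lost / arrived if arrived else 0.0
```

For instance, three packets arriving at once into a two-packet buffer lose one packet to overflow, so the ratio is one third.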

To support QoS in wireless LANs (WLANs), the IEEE 802.11e amendment was introduced, and this amendment has been incorporated into the WLAN standard [2]. The QoS-aware coordination function proposed in IEEE 802.11e is called the Hybrid Coordination Function (HCF). This function consists of two channel access mechanisms. One is the contention-based Enhanced Distributed Channel Access (EDCA) and the other is the contention-free HCF Controlled Channel Access (HCCA). Its contention-free nature makes HCCA a better choice for QoS support than EDCA [3].

HCCA requires a centralized QoS-aware coordinator, called the Hybrid Coordinator (HC), which has a higher priority than normal QoS-aware stations (QSTAs) in gaining channel control. HC can gain control of the channel after sensing the medium idle for a PCF inter-frame space (PIFS), which is shorter than the DCF inter-frame space (DIFS) adopted by QSTAs. After gaining control of the transmission medium, HC polls QSTAs according to its polling list. In order to be included in HC’s polling list, a QSTA needs to negotiate with HC by sending the Add Traffic Stream (ADDTS) frame. In this frame, the QSTA describes the traffic characteristics and the QoS requirements in the Traffic Specification (TSPEC) field. Based on the traffic characteristics and the QoS requirements, HC calculates the scheduled service interval (SI) and transmission opportunity (TXOP) duration for each admitted flow. Upon receiving a poll, the polled QSTA responds with QoS-Data if it has packets to send and with a QoS-Null frame otherwise. When the TXOP duration of a QSTA ends, HC regains control of the channel and either sends a QoS-Poll to the next station on its polling list or releases the medium if there is no more QSTA to be polled.

In this dissertation, we present an efficient scheduling scheme for HCCA to provide QoS guarantee for VBR traffic flows with different delay bound and packet loss probability requirements. The proposed scheme achieves both intra-flow and inter-flow multiplexing gains. In this scheme, HC calculates TXOP duration and performs admission control while every QSTA implements a proportional-loss fair service scheduler to determine how the allocated TXOP is shared by traffic flows attached to it. Numerical results obtained by computer simulations show that our proposed TXOP allocation algorithm results in much better performance than previous works. Moreover, the proposed proportional-loss fair service scheduler successfully manages the TXOP so that different delay bound and packet loss probability requirements of all traffic flows can be fulfilled.

In OFDMA-based wireless systems, such as IEEE 802.16 [4] and the Long Term Evolution (LTE) [5], channel access is partitioned into frames in the time domain and sub-channels in the frequency domain to achieve multi-user and frequency diversities. One obvious performance metric to evaluate resource allocation schemes is system throughput. A simple strategy to achieve high system throughput is to allocate more resources to users with better channel qualities. This strategy, unfortunately, may lead to starvation and cause QoS violation to real-time applications attached to users who have poor channel qualities. A well-designed resource allocation scheme should, therefore, take QoS support into consideration while maximizing system throughput.

In this dissertation, we propose a resource allocation scheme for OFDMA-based systems which achieves high system throughput with QoS support for real-time traffic flows. Our contributions include: 1) define and derive the minimum requested bandwidth of each real-time flow based on the loss probability requirement and the running loss probability, 2) formulate the resource allocation problem as one which maximizes system throughput subject to the constraint that the bandwidth allocated to a flow is greater than or equal to its minimum requested value, 3) propose a user-level proportional-loss (PL) scheduler for multiple real-time traffic flows attached to the same subscriber station (SS) to share the allocated resource, and 4) modify the resource allocation problem to maximize the amount of real-time traffic transmitted and add a pre-processor in front of the PL scheduler to maximize the number of real-time flows attached to each SS that meet their QoS requirements, when the available resource is not sufficient to provide each flow its minimum requested bandwidth. We show that, in any frame, the proposed PL scheduler guarantees QoS if there is any scheduler which guarantees QoS. Simulation results reveal that our proposed algorithm performs better than previous works.

Finally, we consider a general multiplexer operating in a wired system. In order to provide QoS guarantees for traffic flows with different delay bound and packet loss probability requirements, the multiplexer must be equipped with two types of priority schemes: time priority and loss priority. Note that only stable priority schemes are considered in this dissertation, where a priority scheme is said to be stable iff its priority assignment policy does not change over time. A time priority scheme is responsible for service scheduling. It assigns time priority to all the buffered packets so that the multiplexer can select the highest priority packet for service. There are two types of time priority schemes: static and dynamic. A static time priority scheme assigns priorities to flows while a dynamic scheme does so to packets. Rate monotonic [6] is a well-known static time priority scheme, while generalized processor sharing (GPS) [7], [8] and earliest deadline first (EDF) are well-known dynamic time priority schemes. A loss priority scheme is in charge of queue management and normally has two main functions. One determines whether packets need to be discarded. When some packets need to be discarded, the other identifies which packets in the buffer should be discarded. Most of the previous works regarding loss priority assignment can be classified into two categories, namely, push-out [9]-[12] and partial buffer sharing [13]-[15]. In a push-out scheme, when the buffer is full upon a packet arrival, the packet with the lowest priority is pushed out or discarded. Obviously, tail drop can be considered as a special push-out scheme where loss priorities are assigned based on packet arrival time. In a partial buffer sharing scheme, each traffic flow is assigned a threshold value, and an arriving packet is admitted into the buffer iff the current buffer occupancy does not exceed the threshold assigned to the traffic flow it belongs to. Push-out is more efficient than partial buffer sharing because it minimizes overall packet loss. However, the complexity of push-out is likely to be higher than that of partial buffer sharing.
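The two loss priority categories described above can be contrasted with a minimal sketch. The function names, the tuple layout `(priority, packet_id)`, and the tie-breaking rule (the arriving packet is discarded when it ties with the lowest buffered priority) are illustrative assumptions, not definitions from the cited works.

```python
def push_out_arrival(buffer, capacity, priority, pkt_id):
    """Push-out: when the buffer is full, discard the lowest-priority packet.

    buffer is a list of (priority, pkt_id); a larger priority value means the
    packet is more important. capacity is assumed to be at least 1.
    Returns the discarded packet, or None if nothing is discarded.
    """
    if len(buffer) < capacity:
        buffer.append((priority, pkt_id))
        return None
    lowest = min(range(len(buffer)), key=lambda i: buffer[i][0])
    if buffer[lowest][0] < priority:
        dropped = buffer.pop(lowest)       # push out a buffered packet
        buffer.append((priority, pkt_id))
        return dropped
    return (priority, pkt_id)              # the arrival itself is discarded


def pbs_admits(occupancy, capacity, threshold):
    """Partial buffer sharing: admit iff occupancy does not exceed the
    threshold assigned to the arriving packet's flow (and space remains)."""
    return occupancy < capacity and occupancy <= threshold
```

Push-out needs a search over the buffered packets on every overflow, whereas partial buffer sharing needs only a comparison, which reflects the complexity difference noted above.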

In this dissertation, we study a multiplexer which provides heterogeneous QoS guarantees (delay bound and loss probability) for variable-length packets. In such a multiplexer, it is more meaningful to consider the amount of data lost rather than the number of packets lost. Consequently, we define loss probability as the ratio of the total amount of data lost to that of data arrived and then adopt it as the metric for evaluating the performance of schemes handling variable-length packets. A proportional-loss (PL) queue management algorithm for the fluid-flow model is proposed for data discarding. The proposed PL queue management algorithm tries to minimize the total amount of data loss and balance the normalized running loss probabilities for all admitted traffic flows. When combined with the EDF service discipline, it is an effective and efficient scheme for both time and loss priority assignments. We show that the combined scheme is optimal because it minimizes the effective bandwidth under the generalized space-conserving constraint. We further investigate and compare two packet-based queue management schemes. One is a direct extension of the G-QoS scheme and the other is a derivative of the proposed PL queue management algorithm. Results show that the scheme derived from the proposed PL queue management algorithm outperforms the one extended from the G-QoS scheme.

The rest of this dissertation is organized as follows. In Chapter 2, we review related works. Then, we present the proposed schemes and evaluate their performance for 1) IEEE 802.11e HCCA, 2) OFDMA-based systems and 3) a general wired system in Chapters 3, 4 and 5, respectively. Finally, Chapter 6 contains the conclusions drawn from this dissertation.

Chapter 2

Related Works

In this chapter, we review related works on resource allocation in IEEE 802.11e HCCA and in OFDMA-based systems. We close the chapter by describing optimal queue management schemes for ATM networks.

2.1. Resource Allocation in IEEE 802.11e HCCA

In IEEE 802.11e HCCA, resource is partitioned and allocated to users in the time domain. As a result, resource allocation can be performed by a scheduling scheme. Scheduling schemes designed for IEEE 802.11e HCCA can be classified into two categories, namely, static and dynamic. In a static scheduling scheme, HC allocates the same TXOP duration to a QSTA every time it is polled. Moreover, the SI is often selected as the minimum of the delay bound requirements of all traffic flows. The sample scheduler provided in the IEEE 802.11 standard document [2] is a typical example of a static scheduling scheme. The HC of the sample scheduler allocates TXOP duration based on mean data rate and nominal MAC service data unit (MSDU) size. It performs well for constant bit rate (CBR) traffic. For variable bit rate (VBR) traffic, however, packet loss can be severe. In [16], a static scheduling scheme was proposed to generalize the sample scheduler with a modified TXOP allocation algorithm and admission control unit so that both the delay bound and packet loss probability requirements of admitted traffic flows can be fulfilled. To achieve the same goal, the Rate-Variance envelope based Admission Control (RVAC) algorithm [17] uses token buckets for traffic shaping. With the token buckets, the envelope of traffic arrival can be determined. Using the traffic envelope and the given delay bound requirement, one can compute the packet loss probability for an allocated bandwidth. These works improve bandwidth efficiency by exploiting the fact that many real-time VBR applications can tolerate packet loss to a certain degree, but they assume that all traffic flows have the same delay bound of one SI and the same packet loss probability requirement. Since different real-time applications may have distinct delay bound and packet loss probability requirements, one can manage bandwidth more efficiently if each requirement is considered individually.

In contrast to static ones, a dynamic scheduling scheme allocates TXOP duration to a QSTA dynamically, according to system status, to provide delay bound guarantee and/or fairness. Some dynamic scheduling schemes can be found in [18]-[25]. To achieve delay bound guarantee, a dynamic scheduling scheme requires QSTAs to report their queue statuses to HC in a timely manner. As an example, in the recently proposed prediction and optimization-based HCCA (PRO-HCCA) scheme [20], the SI is set to be smaller than or equal to half of the minimum of the delay bounds requested by all traffic flows. As a consequence, compared with static scheduling schemes, QSTAs are polled more frequently, which implies higher overhead for poll frames. Furthermore, static and periodic polling allows QSTAs to easily eliminate overhearing to save energy. Therefore, although dynamic scheduling has the potential to achieve high bandwidth efficiency, it is worthwhile to study static scheduling schemes. In the following paragraphs, we give a detailed description of the sample scheduler.

The Sample Scheduler [2]

Consider QSTA$_a$, which has $n_a$ flows. Let $\rho_l$ and $L_l$ denote, respectively, the mean data rate and the nominal MSDU size of the $l$th flow attached to QSTA$_a$. HC calculates $TXOP_a$ as follows.

Firstly, it decides, for flow $l$, the average number of packets $N_l$ that arrive at the mean data rate during one SI:

$$N_l = \left\lceil \frac{\rho_l \cdot SI}{L_l} \right\rceil \tag{1}$$

Secondly, the TXOP duration for this flow is obtained by

$$TD_l = \max\left( \frac{N_l L_l}{R^{a}_{\min}} + O,\; \frac{L_{\max}}{R^{a}_{\min}} + O \right) \tag{2}$$

where $R^{a}_{\min}$ is the minimum physical transmission rate of QSTA$_a$, and $L_{\max}$ and $O$ denote, respectively, the maximum allowable MSDU size and the overhead in time units. The overhead $O$ includes the transmission time for an ACK frame, inter-frame space, MAC header, CRC field and PHY PLCP preamble and header.

Finally, the total TXOP duration allocated to QSTA$_a$ is given by

$$TXOP_a = \sum_{l=1}^{n_a} TD_l + SIFS + t_{POLL} \tag{3}$$

where $SIFS$ and $t_{POLL}$ are, respectively, the short inter-frame space and the transmission time of a CF-Poll frame.
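The three steps of the sample scheduler, Eqs. (1) to (3), can be sketched as follows. This is only an illustration under stated assumptions: sizes are in bits, rates in bits per second, times in seconds, and the overhead is added once per term of Eq. (2). The function name and argument names are hypothetical.

```python
import math


def sample_scheduler_txop(flows, si, r_min, l_max, overhead, sifs, t_poll):
    """Total TXOP duration for one QSTA following Eqs. (1)-(3).

    flows: list of (mean_data_rate, nominal_msdu_size) pairs, one per flow.
    """
    total = 0.0
    for rho, msdu in flows:
        # Eq. (1): packets arriving at the mean data rate during one SI.
        n_pkts = math.ceil(rho * si / msdu)
        # Eq. (2): time to send them, but at least one maximum-size MSDU.
        td = max(n_pkts * msdu / r_min + overhead,
                 l_max / r_min + overhead)
        total += td
    # Eq. (3): add the polling overhead once per QSTA.
    return total + sifs + t_poll
```

Because Eq. (1) uses only the mean data rate, a VBR flow whose instantaneous rate exceeds the mean receives no extra TXOP, which is why packet loss can be severe for VBR traffic.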

Admission control is performed as follows. Assume that QSTA$_a$ negotiates with HC for admission of a new traffic flow, i.e., the $(n_a+1)$th flow of QSTA$_a$. For simplicity, we further assume that the delay bound of the new flow is not smaller than SI. The process is similar if this assumption is not true. HC updates $TXOP_a$ as $TXOP_a = TXOP_a + TD_{n_a+1}$. The new flow is admitted iff the following inequality is satisfied:

$$\frac{TXOP_a}{SI} + \sum_{k=1,\,k\neq a}^{K} \frac{TXOP_k}{SI} \le \frac{T_b - T_{cp}}{T_b} \tag{4}$$

where $T_{cp}$ is the time used for EDCA traffic during one beacon interval $T_b$.
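The admission test of Eq. (4) amounts to a single comparison of the polled-access load against the fraction of the beacon interval not reserved for EDCA. A minimal sketch follows; it assumes that the list `txops` holds the current TXOP durations of all QSTAs and that `a` indexes the requesting station. These names are hypothetical.

```python
def admits_new_flow(txops, a, td_new, si, t_beacon, t_cp):
    """Return True iff the new flow of QSTA a passes the test of Eq. (4).

    txops: current TXOP durations of all QSTAs (same time unit as si).
    td_new: TXOP duration computed for the new flow via Eq. (2).
    """
    updated = list(txops)
    updated[a] += td_new                      # TXOP_a = TXOP_a + TD_{n_a+1}
    load = sum(txop / si for txop in updated)  # left-hand side of Eq. (4)
    return load <= (t_beacon - t_cp) / t_beacon
```

The caller would commit the updated TXOP only when the function returns True.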

2.2. Resource Allocation in OFDMA-Based Systems

In OFDMA-based systems, resource is partitioned into frames in the time domain and sub-channels in the frequency domain. A well-designed resource allocation algorithm should take system throughput, fairness and QoS support into account.

Several previous works, e.g., [26], [27], adopted the concept of proportional fairness (PF) to eliminate starvation while maintaining acceptable system throughput. Although these schemes achieve a kind of fairness among users, they are not suitable for QoS support. In [28] and [29], the ideas of PF and static minimum bandwidth guarantee were combined to support multiple service classes. This enhanced algorithm, however, does not take the delay bound and loss probability requirements of real-time flows into consideration and thus is unlikely to provide QoS support well.

In [30], a power and sub-carrier allocation policy was proposed for system throughput optimization with the constraint that the average delay of each traffic flow is controlled to be lower than its pre-defined level. Guaranteeing average delay, however, is in general not sufficient for real-time applications. The results presented in [31] reveal that dynamic power allocation can only give a small improvement over fixed power allocation with an effective adaptive modulation and coding (AMC) scheme. As a result, to reduce the complexity, it is reasonable to design resource allocation schemes under the assumption that equal power is allocated to each sub-channel.

Some resource allocation algorithms were proposed, assuming equal-power allocation, to assign a user a higher priority for channel access if the deadline of its head-of-line (HOL) packet is earlier [32]-[35]. A simple scheme, called modified largest weighted delay first (M-LWDF), which uses a kind of utility function that is sensitive to loss probability and delay bound requirements as well as the delay of HOL packets, was presented in [33]. Obviously, considering only the deadlines of HOL packets is not optimal. A QoS scheduling and resource allocation algorithm which considers the deadlines of all packets was presented in [36]. This scheme requires high computational complexity and thus may not be practical for real systems. To reduce computational complexity, a matrix-based scheduling algorithm was proposed in [27]-[29]. The M-LWDF, the scheme proposed in [36] and the matrix-based scheduling algorithm are related to our work and will be reviewed in the following paragraphs. For ease of presentation, we first describe the system model and then give the details of each scheme.

System model

We consider a single-cell OFDMA-based system which consists of one base station (BS) and multiple users or subscriber stations (SSs). Time is divided into frames, and the duration of a frame is equal to $T_{frame}$. In a frame, there are $M$ sub-channels and $S$ time slots. We assume that the sub-channel statuses of different SSs are independent. Moreover, for a given SS, its statuses on the $M$ sub-channels are also independent. The channel quality for a given SS on a specific sub-channel is fixed during one frame. Transmission power is equally allocated to each sub-channel. To improve reliable transmission rate, an effective AMC scheme is adopted to choose a transmission mode based on the reported signal-to-noise ratio (SNR). We only consider downlink transmission.

For ease of description, we assume that no SS is attached with both real-time and non-real-time traffic flows. Let $\Omega_{RT}$ and $\Omega_{NRT}$ represent, respectively, the sets of SSs that are attached with real-time and non-real-time traffic flows. Further, let $\Omega = \Omega_{RT} \cup \Omega_{NRT}$. We shall use $K_n$ to denote the number of traffic flows attached to SS$_n$. All non-real-time flows attached to the same SS are aggregated into one so that $K_n = 1$ if SS$_n \in \Omega_{NRT}$. The QoS requirements of real-time traffic flows are specified by delay bound and loss probability. The $k$th flow attached to SS$_n$ is denoted by $f_{n,k}$. If SS$_n \in \Omega_{RT}$, then the delay bound and loss probability requirements of $f_{n,k}$ are represented by $D_{n,k} \cdot T_{frame}$ and $P_{n,k}$, respectively. Data are assumed to arrive at the beginning of frames.

In the BS, a separate queue is maintained for each real-time traffic flow while non-real-time data are stored per SS. Assume that SS$_n \in \Omega_{RT}$. The data of flow $f_{n,k}$ are buffered in $Queue_{n,k}$, which can be partitioned into $D_{n,k}$ disjoint virtual sub-queues, denoted by $Queue^{d}_{n,k}$, $1 \le d \le D_{n,k}$, where $Queue^{d}_{n,k}$ contains the data in $Queue_{n,k}$ that can be buffered up to $d \cdot T_{frame}$ without violating their delay bounds. We shall use $Q^{d}_{n,k}(t)$ to represent the size of $Queue^{d}_{n,k}$ at the beginning of the $t$th frame (including the newly arrived data), $Q_{n,k}(t) = \sum_{d=1}^{D_{n,k}} Q^{d}_{n,k}(t)$, and $Q_n(t) = \sum_{k=1}^{K_n} Q_{n,k}(t)$. Data which violate their delay bounds are dropped. It is assumed that the size of each queue is sufficiently large so that no data will be dropped due to buffer overflow. To simplify notation, the queue for storing data of SS$_n \in \Omega_{NRT}$ is denoted by $Queue_n$.
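The virtual sub-queue structure can be sketched as a list indexed by the remaining number of frames. The sketch below shows how one flow's sub-queues evolve over a frame boundary. It assumes that service drains the most urgent sub-queue first, which the system model does not specify; the function name is hypothetical.

```python
def advance_frame(sub_queues, served, arrival):
    """Move one real-time queue from frame t to frame t+1.

    sub_queues[d-1] holds Q^d(t), the data that may still wait d frames.
    served is the amount of data transmitted in frame t (assumed to drain
    the most urgent sub-queue first); arrival is the data arriving at the
    beginning of frame t+1, which enters the last sub-queue.
    Returns (sub_queues at frame t+1, amount dropped for deadline violation).
    """
    q = list(sub_queues)
    budget = served
    for d in range(len(q)):            # index d corresponds to Queue^{d+1}
        used = min(q[d], budget)
        q[d] -= used
        budget -= used
    dropped = q[0]                     # unsent data of Queue^1 misses its deadline
    return q[1:] + [arrival], dropped
```

With sub-queues `[5, 3, 2]`, serving 3 units leaves 2 units in the first sub-queue, and these are dropped when the frame ends.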

Resource allocation is performed at the beginning of each frame and, therefore, it suffices to consider one specific frame, say the $t$th frame. For SS$_n$, we denote its maximum achievable transmission rate on the $m$th sub-channel in the $t$th frame and its long-term average throughput up to the $t$th frame by $r_{n,m}(t)$ and $\bar{r}_n(t)$, respectively.

Scheme of [36]

In [36], resource allocation is formulated as an optimization problem which maximizes some utility function subject to QoS guarantee. It consists of two stages. In the first stage, resources are allocated to real-time traffic flows only. If there are un-allocated resources after the first stage, the second stage is performed to allocate the remaining resources to non-real-time traffic.

In the first stage, called real-time QoS scheduling, the minimum requested bandwidth for the real-time traffic flows of SS$_n$ is calculated by

$$R^{\min}_{n} = \sum_{k=1}^{K_n} \sum_{d=1}^{D_{n,k}} \frac{Q^{d}_{n,k}(t)}{d^{\beta}}.$$

Note that substituting $\beta$ with 0, 1, or $\infty$ corresponds, respectively, to strict priority [37], average QoS provisioning [38], or urgent [39] scheduling policy. With the assumption that a sub-channel is the smallest resource granularity, the first stage aims to minimize the total number of sub-channels used to serve the sum of calculated minimum requested bandwidths of all real-time flows. This problem can be modeled as maximum weighted bipartite matching (MWBM) and solved by the well-known Hungarian method, whose complexity is $O\big(|\Omega_{RT}|\, M\, (\min(M, |\Omega_{RT}|))^{2}\big)$ [40], where $|\Omega_{RT}|$ is the size of $\Omega_{RT}$.
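The effect of the exponent on the minimum requested bandwidth can be checked numerically. In the sketch below the exponent is written as `beta`; the symbol name is an assumption, since only its values 0, 1 and infinity are recoverable from the text. The function name is hypothetical.

```python
def min_requested_bandwidth(flows, beta):
    """Sum of Q^d / d**beta over all flows and virtual sub-queues of one SS.

    flows: list of per-flow lists, where flow[d-1] is Q^d(t).
    """
    total = 0.0
    for flow in flows:
        for d, backlog in enumerate(flow, start=1):
            total += backlog / d ** beta
    return total
```

With one flow holding 4 units due in one frame and 6 units due in two frames, `beta = 0` requests all 10 units (strict priority), `beta = 1` requests 7 units, and `beta = float("inf")` requests only the 4 urgent units.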

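In the second stage, the $m$th sub-channel, if still available, is allocated to the SS which satisfies

$$n^{*} = \arg\max_{n \in \Omega_{NRT}} U_n'\big(\bar{r}_n(t)\big)\, r_{n,m}(t),$$

where $U_n'(x)$, called the marginal utility function, is the derivative of the utility function $U_n(x)$. The utility adopted is the $\alpha$-proportional fairness [41], given by

$$U_n(x) = \begin{cases} (1-\alpha)^{-1}\, x^{1-\alpha} & \text{if } \alpha \neq 1 \\ \log x & \text{otherwise} \end{cases} \tag{5}$$

where $x$ represents the average throughput. Note that the policy corresponds to maximum throughput, proportional fairness, or max-min fairness if $\alpha$ is chosen to be 0, 1, or $\infty$, respectively.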
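A short sketch can make the role of the fairness parameter in Eq. (5) concrete. It assumes that the marginal utility is the derivative of the utility, which equals `x ** (-alpha)` for every `alpha`; the function names are hypothetical.

```python
import math


def utility(x, alpha):
    """alpha-proportional fairness utility of Eq. (5)."""
    if alpha == 1:
        return math.log(x)
    return x ** (1 - alpha) / (1 - alpha)


def marginal_utility(x, alpha):
    """Derivative of the utility with respect to the average throughput x."""
    return x ** (-alpha)


def pick_ss(avg_throughput, rate_on_m, alpha):
    """Index of the SS that maximizes U'(avg throughput) * rate on sub-channel m."""
    return max(
        range(len(rate_on_m)),
        key=lambda n: marginal_utility(avg_throughput[n], alpha) * rate_on_m[n],
    )
```

With average throughputs `[10.0, 2.0]` and rates `[8.0, 4.0]` on the sub-channel, `alpha = 0` selects the first SS (highest rate), while `alpha = 1` selects the second SS, whose rate relative to its average throughput is larger.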

It was shown in [36] that the above scheme with $\beta = 1$ makes a reasonable trade-off between QoS support and maximization of system utility. However, it has some drawbacks. Firstly, assuming the granularity of resource to be sub-channels can result in waste of bandwidth. In current standards such as IEEE 802.16 and LTE, a sub-channel can be shared by multiple SSs. Secondly, although the number of sub-channels used to serve real-time traffic is minimized in the first stage, the remaining service capability for non-real-time traffic may not be maximized. This is because the qualities of the remaining sub-channels could be poor for SSs attached with non-real-time traffic flows. Thirdly, calculation of the minimum requested bandwidth for each real-time traffic flow does not take its loss probability requirement into consideration. Real-time traffic usually can tolerate data loss to a certain degree. System throughput can be improved significantly if one takes advantage of this feature in resource allocation. Finally, the complexity of the Hungarian method could make this scheme infeasible for a real system.

Matrix-based scheduling algorithm [27]

A matrix-based scheduling algorithm which tries to maximize the utility sum of all users with acceptable computational complexity was proposed in [27]. In this scheme, a matrix $U = [u_{n,m}]$ of dimension $|\Omega| \times M$ (number of users by number of sub-channels) is defined for resource allocation, where $u_{n,m} = r_{n,m}(t)/r_n(t)$ represents the marginal utility of user $n$ on sub-channel $m$. For sub-channel $m$, let $s_m$ represent the number of slots that have not been allocated and $x_{n,m}$ the number of slots allocated to SS $n$. Initially, we have $s_m = S$ and $x_{n,m} = 0$, $\forall n \in \Omega$, $1 \le m \le M$. The matrix-based scheduling algorithm consists of three steps:

1) Find an $(n^*, m^*)$ which satisfies $u_{n^*,m^*} = \max_{1 \le n \le |\Omega|,\, 1 \le m \le M}\{u_{n,m}\}$.

2) Set $x_{n^*,m^*} = \min(s_{m^*},\, Q_{n^*}(t)/r_{n^*,m^*}(t))$ (allocate $Q_{n^*}(t)/r_{n^*,m^*}(t)$ or all the remaining slots of sub-channel $m^*$, whichever is smaller, to user $n^*$), $Q_{n^*}(t) = \max(0,\, Q_{n^*}(t) - r_{n^*,m^*}(t)\, x_{n^*,m^*})$ (update the queue status of user $n^*$), and $s_{m^*} = s_{m^*} - x_{n^*,m^*}$ (update the remaining number of slots of sub-channel $m^*$). Replace the $(n^*)$th row of $U$ by an all-zero row if $Q_{n^*}(t) = 0$ (user $n^*$ does not need any more resource) and the $(m^*)$th column of $U$ by an all-zero column if $s_{m^*} = 0$ (all slots of sub-channel $m^*$ are allocated).

3) Update $r_{n^*}(t)$. If $Q_{n^*}(t) > 0$, then re-calculate $u_{n^*,m} = r_{n^*,m}(t)/r_{n^*}(t)$ for all $m \neq m^*$ (update the marginal utilities of user $n^*$ on various sub-channels before allocating the remaining resources).

The above three steps are repeatedly executed until all elements of $U$ are replaced with zeroes. The resulting values of $x_{n,m}$, $\forall n \in \Omega$, $1 \le m \le M$, are the solutions. Assuming that $|\Omega| \ge M$, the computational complexity of the matrix-based scheduling algorithm in the worst case is $O(M^2 |\Omega| + |\Omega|^2)$, which occurs when $M-1$ columns of $U$ are replaced by all-zero columns one by one, followed by replacing the rows by all-zero rows one by one. Its complexity is $O(|\Omega|^2 M + M^2)$ if $M > |\Omega|$.
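The three steps above can be sketched as follows. This is only an illustration under stated assumptions: the slot demand is rounded up to an integer, the average throughput is held fixed within the frame (so the re-calculation in step 3 leaves $U$ unchanged), ties are broken toward larger indices, and all names are hypothetical.

```python
import math

def matrix_schedule(rate, avg, queue, slots):
    """Greedy slot allocation following the three steps above.

    rate[n][m]: bits carried per slot by user n on sub-channel m
    avg[n]:     average throughput of user n (held fixed here, an assumption)
    queue[n]:   backlog of user n in bits
    slots:      number of slots S per sub-channel
    Returns x, where x[n][m] is the number of slots given to user n on m.
    """
    users, subs = len(rate), len(rate[0])
    q = list(queue)
    s = [slots] * subs
    x = [[0] * subs for _ in range(users)]
    # Marginal utility matrix U; users with empty queues start with zero rows.
    u = [[rate[n][m] / avg[n] if q[n] > 0 else 0.0 for m in range(subs)]
         for n in range(users)]
    while True:
        # Step 1: locate the largest element of U.
        best, n_star, m_star = max((u[n][m], n, m)
                                   for n in range(users) for m in range(subs))
        if best <= 0:
            break  # every element of U has been zeroed
        # Step 2: give what the queue needs or what is left, whichever is smaller.
        need = math.ceil(q[n_star] / rate[n_star][m_star])
        give = min(s[m_star], need)
        x[n_star][m_star] += give
        q[n_star] = max(0, q[n_star] - rate[n_star][m_star] * give)
        s[m_star] -= give
        if q[n_star] == 0:       # user needs no more resource: zero its row
            u[n_star] = [0.0] * subs
        if s[m_star] == 0:       # sub-channel exhausted: zero its column
            for n in range(users):
                u[n][m_star] = 0.0
    return x
```

Every iteration zeroes at least one row or one column, so the loop runs at most as many times as there are rows plus columns.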

Note that the matrix-based scheduling algorithm takes queue occupancy into consideration. However, it does not consider QoS support. The same authors combined the idea of PF with static minimum bandwidth guarantee to support multiple service classes [28], [29]. A user whose channel quality is better than some threshold is guaranteed a pre-defined minimum bandwidth. This enhanced version, still, cannot provide QoS support well because it does not consider delay bound and loss probability requirements of real-time flows.

Modified-Largest Weighted Delay First (M-LWDF) [33]

The goal of the M-LWDF scheme is to achieve $P(W_{n,k} > D_{n,k}) \le P_{n,k}$ for all $n \in \Omega_{RT}$, $1 \le k \le K_n$. In M-LWDF, the marginal utility of flow $f_{n,k}$ on sub-channel $m$ is $\gamma_{n,k} W_{n,k}(t)\, r_{n,m}(t)$, where $W_{n,k}(t)\,T_{frame}$ is the delay of the HOL packet of Queue$_{n,k}$ at the beginning of frame $t$ and $\gamma_{n,k}$ is an arbitrary positive constant. To transmit data, the flow with the largest marginal utility on some available sub-channel is selected for service. It was shown that M-LWDF is throughput-optimal in the sense that it is able to keep all queues stable if this is at all feasible with any scheduling algorithm. Moreover, it was reported that $\gamma_{n,k} = a_{n,k}/r_n(t)$, where $a_{n,k} = -(\log P_{n,k})/D_{n,k}$, performs very well. Clearly, for such a selection of $\gamma_{n,k}$, the marginal utility is sensitive to the loss probability and delay bound requirements as well as the delay of the HOL packet.

When combined with a token bucket control, M-LWDF can provide QoS support to flows with minimum bandwidth requirements. However, how to serve non-real-time flows with zero minimum bandwidth requirements was not studied. To compare its performance with that of our proposed scheme, we shall assume that the operation of M-LWDF is divided into two stages. In the first stage, only real-time traffic flows are considered. As a consequence, the first stage of M-LWDF is the same as that of the matrix-based scheduling, except for a different marginal utility function. The complexity of the first stage is $\max\{O(M^2 |\Omega_{RT}| + |\Omega_{RT}|^2),\ O(|\Omega_{RT}|^2 M + M^2)\}$. If there are un-allocated resources after the first stage, then the remaining resources are allocated in the second stage to non-real-time flows with zero minimum resource requirements. The goal of the second stage is to maximize system throughput. Assume that the matrix-based scheduling algorithm is adopted in the second stage. As a result, the complexity of the second stage is $\max\{O(M^2 |\Omega_{NRT}| + |\Omega_{NRT}|^2),\ O(|\Omega_{NRT}|^2 M + M^2)\}$.
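To make the M-LWDF weighting concrete, the following sketch evaluates the marginal utility with the reported choice of the constant, namely minus the logarithm of the loss probability requirement divided by the delay bound and by the average throughput. The function and argument names are assumptions made for illustration, and the HOL delay and the delay bound are taken to be in the same time unit.

```python
import math

def mlwdf_marginal_utility(p_loss, delay_bound, avg_throughput, hol_delay, rate):
    """Marginal utility of one flow on one sub-channel under M-LWDF.

    p_loss:         loss probability requirement of the flow (0 < p_loss < 1)
    delay_bound:    delay bound of the flow
    avg_throughput: average throughput of the SS owning the flow
    hol_delay:      current delay of the head-of-line packet
    rate:           achievable rate of the SS on the sub-channel
    """
    a = -math.log(p_loss) / delay_bound   # stricter requirements give a larger a
    gamma = a / avg_throughput            # the positive constant of the flow
    return gamma * hol_delay * rate
```

A flow with a smaller loss probability requirement or a shorter delay bound thus obtains a larger weight for the same HOL delay and channel quality.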

2.3. Optimal Queue Management Algorithm for ATM Networks

To support heterogeneous QoS differentiation such as delay bound and packet loss probability, it is necessary to jointly design time priority and loss priority schemes. In [42]-[48], relative differentiated service, one approach in DiffServ framework, was proposed trying to provide heterogeneous QoS differentiation. In relative differentiated service, packets are grouped into multiple classes so that a packet belonging to a higher priority class receives better service than a

packet belonging to a lower one. The proportional differentiation model was proposed to refine the relative differentiated service with quantified QoS spacing. In the proportional differentiation model, performance metrics such as average delay and/or packet loss probability are controlled to be proportional to the differentiation parameters chosen by network operators. Assume that there are $N$ service classes. The average experienced delay and suffered packet loss probability of the $i$th service class, denoted by $d_i$ and $P_i$, respectively, are spaced from those of the $j$th service class as $d_i/d_j = \delta_i/\delta_j$ and $P_i/P_j = \sigma_i/\sigma_j$, $1 \le i, j \le N$. Here, $\delta_i$ and $\sigma_i$ denote, respectively, the delay and packet loss probability differentiation parameters of the $i$th service class. The work

presented for relative differentiated service successfully controls the average delays and packet loss probability in a proportional sense. However, this service model is not practical for real-time traffic. The reasons are stated as follows. 1) For real-time traffic, we believe it is more meaningful for a multiplexer to guarantee delay bounds rather than providing proportional average delays. 2) Since packets of real-time traffic have to be dropped whenever they violate their delay bound, buffer overflow can be eliminated by engineering the buffer space according to the delay bound of all real-time traffic and the service capability of the system. As a result, it is reasonable to assume that packet loss only results from deadline violation for a multiplexer dealing with real-time traffic.

In [49], the authors generalized the QoS scheme [11] and combined it with the earliest deadline first (EDF) service discipline to support multiple delay bound and cell loss probability requirements for real-time traffic flows in ATM networks, assuming cell loss only results from deadline violation.

This generalized version is named G-QoS. It was proved that the G-QoS scheme is optimal in the sense that it minimizes the effective bandwidth among all stable and generalized space-conserving schemes. A scheme is said to be generalized space-conserving if a packet is discarded only when it or some other packets buffered in the system will violate their delay bounds. Moreover, effective bandwidth refers to the minimum bandwidth required to meet the QoS requirements of all traffic flows. Two drawbacks of the G-QoS scheme are that 1) it only handles fixed-length packets and 2) when batches of packets arrive, packet-by-packet processing requires high computational complexity. The G-QoS scheme and its original version, the QoS scheme, are related to our work and will be reviewed in the following paragraphs.

It is assumed that there are $K$ traffic flows, namely, $f_1, f_2, \ldots, f_K$, which are multiplexed into a system with transmission capability $C$ and a single queue of size $B$. Consider $f_k$. Let $P_k$ represent its packet loss probability requirement. The numbers of arrived and discarded packets (or cells) by time $t$ are denoted by $A_k(t)$ and $L_k(t)$, respectively. The running packet loss probability $P_k(t)$ is defined as $P_k(t) = L_k(t)/A_k(t)$.

The QoS scheme [11]

The QoS scheme operates as follows. Assume that a packet arrives at time $t$ and the buffer is fully occupied. Define $D(t)$ as the set which contains the indices of traffic flows that have at least one packet in the system at time $t$, including the arriving packet. Let $j \in D(t)$ be an index such that $P_j(t)/P_j \le P_k(t)/P_k$ for all $k \in D(t)$, $1 \le k \le K$. If the arriving packet belongs to $f_j$, then this packet is discarded. Otherwise, a packet which belongs to $f_j$ is discarded and the arriving packet is admitted to the buffer. As was proved in [12], the QoS scheme is optimal in the sense that it achieves maximum bandwidth utilization among all stable and space-conserving schemes.
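The discarding rule can be illustrated with a short sketch. It assumes that the candidate set consists of the flows with at least one buffered packet plus the flow of the arriving packet, and that the victim is the flow with the smallest normalized running loss probability. All names and the dictionary-based bookkeeping are hypothetical.

```python
def qos_discard(arriving_flow, buffered, lost, arrived, p_req):
    """Return the index of the flow that loses a packet when the buffer is full.

    arriving_flow: index of the flow owning the arriving packet
    buffered[k]:   number of packets of flow k currently in the buffer
    lost[k]:       packets of flow k discarded so far
    arrived[k]:    packets of flow k arrived so far (must be positive)
    p_req[k]:      packet loss probability requirement of flow k
    """
    # Candidate flows: those with buffered packets, plus the arriving one.
    candidates = {k for k, count in buffered.items() if count > 0}
    candidates.add(arriving_flow)

    def normalized_loss(k):
        # Running loss probability divided by the requirement of the flow.
        return (lost[k] / arrived[k]) / p_req[k]

    return min(candidates, key=normalized_loss)
```

If the returned index equals the arriving flow, the arriving packet itself is dropped. Otherwise a buffered packet of the returned flow makes room for it.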

The G-QoS scheme [49]

In the G-QoS scheme, it was assumed that the buffer is sufficiently large so that there is no cell loss due to lack of buffer space. The EDF policy was adopted as its service discipline. Upon arrival, a cell is marked with its deadline, which is equal to its arrival time plus the requested delay bound. Then, the schedulability test of the EDF scheduler is performed according to the deadlines of the newly arrived cell and all the other existing ones. The newly arrived cell is admitted into the buffer without discarding any cell if no cell will violate its delay bound, assuming that there is no more cell arrival in the future. Otherwise, a cell in the discarding set is lost. The discarding set $S(t)$ is the maximum subset of existing cells at time $t$, including the newly arrived one, such that the remaining cells in the system are schedulable if cell $c$ is discarded for any $c \in S(t)$. Which cell is to be discarded is determined by the normalized running cell loss probabilities of traffic flows having cells in the discarding set. Among these traffic flows, a cell which belongs to the traffic flow with the smallest normalized running cell loss probability is discarded. It was proved that the G-QoS scheme is optimal in the sense that it minimizes the effective bandwidth among all stable and generalized space-conserving schemes.
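The schedulability test and the discarding set can be sketched for fixed-length cells as follows. The sketch assumes a non-preemptive server that is idle at the current time, a constant transmission time per cell, and that a cell meets its deadline if its transmission completes no later than the deadline. The function names are illustrative.

```python
def schedulable(deadlines, now, cell_time):
    """EDF test: the i-th earliest deadline must leave room for i transmissions."""
    return all(now + (i + 1) * cell_time <= d
               for i, d in enumerate(sorted(deadlines)))

def discarding_set(deadlines, now, cell_time):
    """Indices of cells whose individual removal makes the rest schedulable."""
    return [i for i in range(len(deadlines))
            if schedulable(deadlines[:i] + deadlines[i + 1:], now, cell_time)]
```

For example, with deadlines 1, 2, 2 and 5 and unit cell time, the four cells are not schedulable together. Removing any one of the first three restores schedulability, whereas removing the cell with deadline 5 does not, so only the first three cells form the discarding set.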

Chapter 3

Resource Allocation for Real-Time

Traffic in IEEE 802.11e WLANs

The Medium Access Control (MAC) of IEEE 802.11e defines a novel coordination function, namely, Hybrid Coordination Function (HCF), which allocates Transmission Opportunity (TXOP) to stations taking their quality of service (QoS) requirements into account. However, the reference TXOP allocation scheme of HCF Controlled Channel Access (HCCA), a contention-free channel access function of HCF, is only suitable for constant bit rate (CBR) traffic. For variable bit rate (VBR) traffic, serious packet loss may occur. In this chapter, we generalize the reference design with an efficient TXOP allocation algorithm, a multiplexing mechanism, and the associated admission control unit to guarantee QoS for VBR flows with different delay bound and packet loss probability requirements. We define equivalent flows and aggregate packet loss probability to take advantage of both intra-flow and inter-flow multiplexing gains so that high bandwidth efficiency can be achieved. Moreover, the concept of proportional-loss fair service scheduling is adopted to allocate the aggregate TXOP to individual flows. From numerical results obtained by computer simulations, we found that our proposed scheme meets QoS requirements and results in much higher bandwidth efficiency than previous algorithms.

3.1. System Model

The studied system consists of $K$ QSTAs, called QSTA$_1$, QSTA$_2$, …, and QSTA$_K$, such that QSTA$_i$ has $n_i$ existing VBR flows. Transmission over the wireless medium is divided into SIs and the duration of each SI, denoted by $SI$, is a sub-multiple of the length of a beacon interval $T_b$. Moreover, an SI is further divided into a contention period and a contention-free period. The HCCA protocol is adopted during contention-free periods.

It is assumed that every QSTA has the capability to measure channel quality to determine a feasible transmission rate which yields a frame error rate sufficiently smaller than the packet loss probability requirements requested by all traffic flows attached to the QSTA. The relationship between measured channel quality and frame error rate can be found in [52].

The QoS requirements of traffic flows are specified with delay bound and packet loss probability. Every QSTA is equipped with a sufficiently large buffer so that a packet is dropped if and only if (iff) it violates the delay bound. It is assumed that there are $I$ different packet loss probabilities, represented by $P_1, P_2, \ldots, P_I$ with $P_1 < P_2 < \cdots < P_I$, and $J$ possible delay bounds, where the $j$th delay bound is $D_j = j \cdot SI$ for some integer $j \ge 1$.

HC allocates TXOPs to QSTAs based on a static and periodic schedule. As illustrated in Fig. 3.1, the TXOP for QSTA$_k$, denoted by $TXOP_k$, is allocated every SI and is of fixed length. The length of the scheduled SI is chosen to be the minimum of all requested delay bounds. Note that SI is updated if a new flow with delay bound smaller than those of existing ones is admitted or the existing flow with the smallest delay bound is disconnected and there is no other existing flow with the same delay bound. In this case, the TXOPs allocated to QSTAs have to be recalculated accordingly.

Fig. 3.1 Static and periodic schedule for 802.11e HCCA.

Consider the existing flows of a specific QSTA, say QSTA$_a$. The $n_a$ flows attached to QSTA$_a$ are classified into groups according to their QoS requirements. Let $F_{i,j}$ represent the set which contains all traffic flows with packet loss probability $P_i$ and delay bound $D_j$. Furthermore, let $F_i = \bigcup_{1 \le j \le J} F_{i,j}$. The traffic arrivals of different flows are assumed to be independent Gaussian processes. Since the sum of independent Gaussian random variables remains Gaussian, the aggregated flow of all the flows in set $F_{i,j}$ is Gaussian and will be represented by $f_{i,j}$. For convenience, we shall consider $f_{i,j}$ as a single flow. A separate queue, called Queue$_{i,j}$, is maintained for flow $f_{i,j}$, $1 \le i \le I$ and $1 \le j \le J$. Let $N(\mu_{i,j}, \sigma_{i,j}^2)$ denote the distribution of traffic arrival for flow $f_{i,j}$ in one SI. Note that the values of $\mu_{i,j}$ and $\sigma_{i,j}^2$ can be calculated by

$$\mu_{i,j} = E(N_{i,j})\, E(X_{i,j}) \quad (6)$$

and

$$\sigma_{i,j}^2 = E(N_{i,j})\, VAR(X_{i,j}) + E^2(X_{i,j})\, VAR(N_{i,j}), \quad (7)$$

where $N_{i,j}$ and $X_{i,j}$ represent, respectively, the number of packets belonging to flow $f_{i,j}$ that arrive in one SI and the packet size.
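Equations (6) and (7) are the usual mean and variance of a random sum of independent packet sizes. A minimal sketch, with hypothetical argument names, is given below.

```python
def si_arrival_moments(mean_n, var_n, mean_x, var_x):
    """Mean and variance of the traffic arriving in one SI.

    mean_n, var_n: mean and variance of the number of packets per SI
    mean_x, var_x: mean and variance of the packet size
    """
    mu = mean_n * mean_x                              # equation (6)
    sigma2 = mean_n * var_x + mean_x ** 2 * var_n     # equation (7)
    return mu, sigma2
```

Because the flows are assumed independent, the moments of an aggregated flow are obtained by summing the means and the variances of its member flows.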

Our proposed scheme consists of an aggregate TXOP allocation algorithm, the proportional-loss fair service scheduler, and the associated admission control unit. As mentioned before, TXOP allocation and admission control are performed in HC and the proportional-loss fair service scheduler is implemented in QSTAs. An overview of our proposed scheme is depicted in Fig. 3.2. Once again, let us consider QSTA$_a$ with $n_a$ traffic flows, which are classified into $I \times J$ queues.

3.2. Aggregate TXOP Allocation Algorithm

For ease of presentation, we first consider the case in which all flows have an identical packet loss probability requirement and then generalize the results to the case in which flows have different packet loss probability requirements.

Flows with identical packet loss probability requirements

It is assumed that flows request different delay bounds but identical packet loss probabilities. Without loss of generality, assume that the packet loss probability requested by all flows is $P_1$. As a result, we have $F = F_1$. Further, for ease of description, we assume that there is at least one traffic flow with delay bound $D_1$.

Consider QSTA$_a$ which has $n_a$ flows. The $n_a$ flows are classified into $J$ disjoint sets $F_{1,1}, F_{1,2}, \ldots, F_{1,J}$ such that a flow belongs to $F_{1,j}$ iff its delay bound is $j \cdot SI$. Let $f_{1,j}$, $1 \le j \le J$, with traffic arrival distribution $N(\mu_{1,j}, \sigma_{1,j}^2)$, denote the aggregated flow of all the flows in set $F_{1,j}$. The first come first serve (FCFS) service discipline is adopted for packet transmission. The effective bandwidth $c_{1,j}$ of flow $f_{1,j}$ is computed to take advantage of intra-flow multiplexing gain. The effective bandwidth $c_{1,j}$ is defined as the minimum TXOP allocated to flow $f_{1,j}$ to guarantee a packet loss probability smaller than or equal to $P_1$ for flow $f_{1,j}$. Since the delay bound of flow $f_{1,j}$ is $j \cdot SI$, the effective bandwidth $c_{1,j}$ can be determined by considering a finite-buffer system in which the buffer size is $j c_{1,j}$, the server transmission capability is $c_{1,j}$, and the desired packet loss probability is $P_1$. Given the traffic arrival distribution $N(\mu_{1,j}, \sigma_{1,j}^2)$, the effective bandwidth can be written as $c_{1,j} = \mu_{1,j} + \alpha_{1,j}\sigma_{1,j}$, where $\alpha_{1,j}$ is called the QoS parameter of flow $f_{1,j}$. Derivation of packet loss probability for a

finite-buffer system is complicated. Reference [50] provided a good approximation based on the tail probability of an infinite-buffer system and the loss probability of a buffer-less system, as shown in equation (8).

$$P_L(x) \approx \frac{P_L(0)}{P(X > 0)}\, P(X > x) \quad (8)$$

In the above equation, $P_L(x)$ represents the packet loss probability of a finite-buffer system with buffer size $x$ and $P(X > x)$ denotes the tail probability above level $x$ of an infinite-buffer system. The equation for $P(X > x)$ can be found in [50]. It is rather complicated and is thus omitted due to space limitation. The equation for $P_L(0)$ is given by

$$P_L(0) = \frac{\sigma_{1,j}}{\mu_{1,j}} \left[ \frac{1}{\sqrt{2\pi}}\, e^{-\alpha_{1,j}^2/2} - \alpha_{1,j}\, Q(\alpha_{1,j}) \right] \quad (9)$$

where $\alpha_{1,j} = (c_{1,j} - \mu_{1,j})/\sigma_{1,j}$ is the QoS parameter and $Q(\alpha_{1,j}) = \frac{1}{\sqrt{2\pi}} \int_{\alpha_{1,j}}^{\infty} e^{-x^2/2}\, dx$. Having $P(X > x)$, $P(X > 0)$, and $P_L(0)$, one can obtain the (approximate) packet loss probability of a finite-buffer system with server transmission capability $c_{1,j}$ and buffer size $j c_{1,j}$ as

$$P_L(j c_{1,j}) \approx \frac{P_L(0)}{P(X > 0)}\, P(X > j c_{1,j}) \quad (10)$$

Consequently, given mean $\mu_{1,j}$, variance $\sigma_{1,j}^2$, delay bound $j \cdot SI$, and the desired packet loss probability $P_1$, one can solve for the QoS parameter $\alpha_{1,j}$, which in turn can be used to derive the effective bandwidth $c_{1,j} = \mu_{1,j} + \alpha_{1,j}\sigma_{1,j}$ for flow $f_{1,j}$.

Let $L_{1,j}$ represent the nominal packet size of flow $f_{1,j}$. The average number of packets which can be transmitted in one SI, denoted by $N_{1,j}$, can be estimated as

$$N_{1,j} = \frac{c_{1,j}}{L_{1,j}} \quad (11)$$

The allocated TXOP duration for flow $f_{1,j}$ is given by

$$TD_{1,j} = \max\left( \frac{c_{1,j}}{R_a} + N_{1,j}\, O,\ \frac{L_{max}}{R_a} + O \right) \quad (12)$$

where $R_a$ represents the feasible physical transmission rate of QSTA$_a$.

As mentioned before, using a buffer to store packets achieves intra-flow multiplexing gain. To further achieve inter-flow multiplexing gain, an equivalent flow of delay bound $D_1$, denoted by $\hat{f}_{1,j}$, is defined for flow $f_{1,j}$, $1 \le j \le J$. Let $N(\hat{\mu}_{1,j}, \hat{\sigma}_{1,j}^2)$ be the traffic arrival distribution of $\hat{f}_{1,j}$. We have $\hat{f}_{1,1} = f_{1,1}$. The equivalent flow $\hat{f}_{1,j}$ for $2 \le j \le J$ is obtained by letting its mean and effective bandwidth equal those of flow $f_{1,j}$, i.e., $\hat{\mu}_{1,j} = \mu_{1,j}$ and $\hat{\alpha}_{1,j}\hat{\sigma}_{1,j} = \alpha_{1,j}\sigma_{1,j}$, where $\hat{\alpha}_{1,j}$ is the QoS parameter of the equivalent flow. Since the delay bound of the equivalent flow $\hat{f}_{1,j}$ is equal to $D_1 = SI$, a packet of $\hat{f}_{1,j}$ which arrives in the $n$th SI will violate its delay bound and be dropped if it is not served in the $(n+1)$th SI. As a consequence, the effective bandwidth for $\hat{f}_{1,j}$ can be derived based on a buffer-less system. That is, the QoS parameter $\hat{\alpha}_{1,j}$ can be computed according to equation (9) for $P_L(0) = P_1$. Note that $\hat{\alpha}_{1,j}$ can be well approximated by $Q^{-1}(P_1)$ [51]. With the approximation, we have $\hat{\sigma}_{1,j} = \alpha_{1,j}\sigma_{1,j}/Q^{-1}(P_1)$.

Having the equivalent flows $\hat{f}_{1,j}$, $1 \le j \le J$, one can determine the aggregate equivalent flow $\hat{f}_1$. Let $N(\hat{\mu}_1, \hat{\sigma}_1^2)$ denote the distribution of traffic arrival in one SI for the aggregate equivalent flow $\hat{f}_1$. Since the sum of independent Gaussian random variables remains Gaussian, we have $\hat{\mu}_1 = \mu_{1,1} + \sum_{j=2}^{J} \hat{\mu}_{1,j}$ and $\hat{\sigma}_1^2 = \sigma_{1,1}^2 + \sum_{j=2}^{J} \hat{\sigma}_{1,j}^2$. Again, given $\hat{\mu}_1$ and $\hat{\sigma}_1^2$, the QoS parameter $\hat{\alpha}_1$ of flow $\hat{f}_1$ can be derived according to equation (9) for $P_L(0) = P_1$. Having $\hat{\alpha}_1$, one can compute the effective bandwidth $\hat{c}_1$ for flow $\hat{f}_1$. The TXOP duration allocated to QSTA$_a$ is then determined as

follows max 1 1 ˆ max , a POLL a a a L c TXOP N O SIFS t n O R R (13) where 1 1 ˆ1 1 ˆ ˆ ˆ c (14) 1 1 1 ˆc N L (15)

In equation (15), $L_1$ denotes the weighted average nominal packet size of all the flows in $F_1$, and is calculated by

$$L_1 = \frac{\sum_{j=1}^{J} N_{1,j} L_{1,j}}{\sum_{j=1}^{J} N_{1,j}}. \quad (16)$$

The criterion shown in equation (4) was used for admission control.
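The construction of the aggregate equivalent flow can be sketched as follows, using the approximation that the QoS parameter of a buffer-less system equals the inverse Gaussian tail function evaluated at the loss requirement [51]. The per-class QoS parameters are taken as given inputs, and all names are illustrative assumptions.

```python
from statistics import NormalDist
import math

def q_inv(p):
    """Inverse of the Gaussian tail function Q, for 0 < p < 1."""
    return NormalDist().inv_cdf(1.0 - p)

def aggregate_equivalent_flow(mu, sigma, alpha, p1):
    """Mean, standard deviation and effective bandwidth of the aggregate flow.

    mu[j], sigma[j]: arrival mean and standard deviation of the flow whose
                     delay bound is (j + 1) SIs; index 0 has delay bound SI
    alpha[j]:        QoS parameter of that flow (alpha[0] is not used because
                     the first flow is its own equivalent flow)
    p1:              common packet loss probability requirement
    """
    a_hat = q_inv(p1)
    # Equivalent flows keep the mean and the effective bandwidth unchanged.
    sigma_hat = [sigma[0]] + [alpha[j] * sigma[j] / a_hat
                              for j in range(1, len(mu))]
    mu_total = sum(mu)
    sigma_total = math.sqrt(sum(s * s for s in sigma_hat))
    c_hat = mu_total + a_hat * sigma_total
    return mu_total, sigma_total, c_hat
```

Since standard deviations add in quadrature, the resulting effective bandwidth is smaller than the sum of the individual effective bandwidths, which is the inter-flow multiplexing gain referred to above.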

Clearly, assuming all traffic flows have identical packet loss probabilities is a significant constraint of the above scheme. A straightforward solution to handle flows with different packet loss probabilities is to assume that all flows have the most stringent requirement. Unfortunately, such a solution increases the effective bandwidths of flows which allow packet loss probabilities greater than the smallest one. Another possible solution is to compute separately the effective bandwidth $\hat{c}_i$ for aggregated equivalent flow $\hat{f}_i$, $1 \le i \le I$, and allocate $TXOP_a$ based on $\sum_{i=1}^{I} \hat{c}_i$. Such a solution, however, does not take advantage of inter-flow multiplexing gain. In the following sub-section, we present our proposed scheme which considers different packet loss probabilities and takes advantage of inter-flow multiplexing gain.

Flows with different packet loss probability requirements

First of all, an aggregate equivalent flow, denoted by $\hat{f}_i$, is determined using the technique described in the last section for flows $f_{i,1}, f_{i,2}, \ldots, f_{i,J}$, for all $i$, $1 \le i \le I$. Note that the packet loss probability requirement of $\hat{f}_i$ is $P_i$. Let $N(\hat{\mu}_i, \hat{\sigma}_i^2)$ represent the traffic arrival distribution for flow $\hat{f}_i$. Define $\hat{f}$ as the ultimate equivalent flow with traffic arrival distribution $N\left(\sum_{i=1}^{I} \hat{\mu}_i, \sum_{i=1}^{I} \hat{\sigma}_i^2\right)$. The desired packet loss probability of flow $\hat{f}$, denoted by $P_{ultimate}$, is given by

$$P_{ultimate} = \frac{\sum_{i=1}^{I} \hat{\mu}_i P_i}{\sum_{i=1}^{I} \hat{\mu}_i} \quad (17)$$

Note that the delay bounds of the aggregate equivalent flows $\hat{f}_i$, $1 \le i \le I$, and the ultimate equivalent flow $\hat{f}$ are equal to $SI$. Consequently, the QoS parameter $\hat{\alpha}$ of flow $\hat{f}$ can be computed using equation (9) with $P_L(0) = P_{ultimate}$. The TXOP duration allocated to QSTA$_a$ can be calculated using equation (13), except that the aggregate effective bandwidth and the average number of packets which can be served in one SI are obtained by

$$\hat{c} = \sum_{i=1}^{I} \hat{\mu}_i + \hat{\alpha} \sqrt{\sum_{i=1}^{I} \hat{\sigma}_i^2} \quad (18)$$

$$N = \frac{\hat{c}}{L} \quad (19)$$

In equation (19), $L$ denotes the weighted average nominal packet size of all the flows in $F$ and is calculated by $L = \sum_{i=1}^{I} N_i L_i / \sum_{i=1}^{I} N_i$, where $N_i$ and $L_i$ can be obtained using equations (15) and (16), respectively. The aggregate TXOP allocation procedure for QSTA$_a$ is summarized below.

Step 1. For $1 \le i \le I$, determine the aggregate equivalent flow $\hat{f}_i$ with packet loss probability requirement $P_i$ for flows $f_{i,1}, f_{i,2}, \ldots, f_{i,J}$.

Step 2. Determine the packet loss probability $P_{ultimate}$ using equation (17).

Step 3. Compute the QoS parameter of the ultimate equivalent flow using equation (9) with $P_{ultimate}$ as the desired packet loss probability.

Step 4. Compute the aggregate transmission duration $TXOP_a$ allocated to QSTA$_a$ using equation (13) with the effective bandwidth and average number of packets served in one SI obtained from equations (18) and (19).
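Steps 2 and 3 and equation (18) can be sketched as below. The sketch assumes that the aggregate equivalent flows of Step 1 are already available, takes the buffer-less loss probability of a Gaussian arrival process as the form of equation (9), and solves for the QoS parameter by bisection. The conversion of the effective bandwidth into a TXOP duration in Step 4 is not covered, and all function names are hypothetical.

```python
from statistics import NormalDist
import math

def bufferless_loss(mu, sigma, alpha):
    """Loss probability of a buffer-less system with capacity mu + alpha * sigma."""
    nd = NormalDist()
    tail = 1.0 - nd.cdf(alpha)                       # Gaussian tail function Q
    return sigma / mu * (nd.pdf(alpha) - alpha * tail)

def solve_qos_parameter(mu, sigma, p_target, lo=-10.0, hi=10.0, iters=200):
    """Bisection on alpha; the loss probability decreases as alpha grows."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if bufferless_loss(mu, sigma, mid) > p_target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def ultimate_effective_bandwidth(classes):
    """classes: list of (mu_hat_i, sigma_hat_i, P_i), one per loss class."""
    mu = sum(m for m, _, _ in classes)
    sigma = math.sqrt(sum(s * s for _, s, _ in classes))
    p_ult = sum(m * p for m, _, p in classes) / mu   # equation (17)
    alpha = solve_qos_parameter(mu, sigma, p_ult)    # Step 3
    return p_ult, alpha, mu + alpha * sigma          # equation (18)
```

The ultimate loss requirement is a traffic-weighted average, so it always lies between the most and the least stringent requirements of the individual classes.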