National Science Council, Executive Yuan: Research Project Final Report

Center for excellence in e-Learning Sciences -- Center for excellence in e-Learning Sciences (3/3)

Research Results Report (Full Version)

Project type: Integrated

Project number: NSC 99-2631-S-003-002-

Project period: August 1, 2010 to July 31, 2011

Host institution: Science Education Center, National Taiwan Normal University

Principal investigator: 張俊彥

Co-principal investigators: 李忠謀, 陳伶志, 曾元顯, 襲充文, 李蔡彥, 方瓊瑤, 楊芳瑩, 侯文娟, 江政杰, 葉富豪, 林育慈, 陳柏琳

Project personnel:

Full-time assistant (master's level): 簡佑達

Full-time assistants (bachelor's level): 黃湋恆, 王碩妤, 李慧鈺

Full-time assistant (junior college level): 王淑文

Part-time assistants (master's students): 朱純劭, 張莊平, 鍾昇宏, 姜光庭, 簡琮訓, 周子宇, 林蓓伶, 任欣怡, 簡佑達, 陳怡均, 黃邦烜, 楊儒松, 顏子軒, 林瑞硯, 李振遠, 林忠彥, 林永在, 江蕙伶, 曾冠雲, 羅文鑫

Part-time assistants (doctoral students): 蕭建華, 吳皇慶, 林銘照, 葉庭光

Postdoctoral researchers: 張月霞, 陳佳利, 左聰文

Report attachments: overseas research report; report on attending an international conference, with the paper presented

Handling: this project is open to public inquiry

October 28, 2011


Project Summary

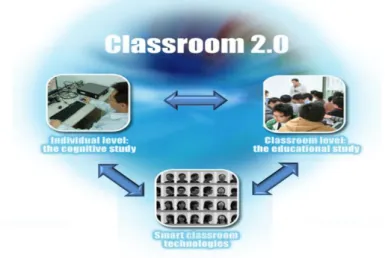

The three-year Center for excellence in e-Learning Sciences (CeeLS) project has aimed to renovate the current university science smart classrooms in Taiwan by developing a Classroom 2.0, which comprises the smart classroom technologies of a Future Innovative Science Learning Environment (FISLE) in the university earth/computer science classroom. The objective of setting up the Classroom 2.0 is to integrate modern technologies (image processing, speech processing, video processing, speech recognition, text mining, information retrieval, natural language processing, data mining, machine learning, etc.) to create an intelligent classroom embedded with individualized and interactive learning materials. By jointly designing technology-enhanced science learning environments and individualized instructional materials and methods, the project seeks an innovative science learning environment that improves teachers’ pedagogies, students’ learning strategies, student-teacher interactions, and student learning outcomes in both the cognitive and affective domains. In doing so, it hopes ultimately to offer practical knowledge and experience that help school teachers, educational researchers, and computer engineers better integrate technologies into classroom learning environments and facilitate student learning as a whole.

To realize these goals, CeeLS brings together a group of experts in science education, cognitive science, computer science, and computer engineering and proposes three closely interrelated research directions: (1) research and development of Smart Classroom technologies; (2) educational research to examine students’ learning outcomes both affectively and cognitively in the Classroom 2.0 environment; (3) cognitive research to examine students’ attention distribution in the Classroom 2.0 environment. Figure 1 depicts how the technical, educational and cognitive research teams are closely interrelated.


In the past few years of the CeeLS project, several prototypes of the smart classroom technologies have been developed. For example, a real-time approach to face detection and recognition in video streams has been designed for the roll-call system in the smart classroom. A system that recognizes the lecturer’s arm gestures in order to control the presentation was also designed. In addition, a series of experiments on speech summarization were conducted, and the resulting speech recognition and summarization techniques have been integrated into a speech-based lecture browsing prototype system. To recognize students’ behaviors in a classroom, a vision-based student gesture recognition system was developed. Several educational and cognitive studies were conducted using these newly developed prototypes. The CeeLS project has altogether produced more than 35 papers in highly selective international journals indexed by SSCI/SCI. The outcomes of the 3-year CeeLS project can be broadly classified into the following aspects.

Research Published in Selective International Journals

Under the Center for excellence in e-Learning Sciences (CeeLS) project, a good number of studies conducted in close collaboration among the research teams in computer science and science education have been published in highly selective international journals indexed by SSCI/SCI.

International Conferences Held to Engage Direct Dialogue

To encourage more direct interactions among scholars, the CeeLS project has held international conferences in fields of science education and computer science to engage domestic researchers to share their studies and perspectives with researchers from different countries and cultural backgrounds, which have exerted positive influences on the international academic society at large.

CeeLS Project Shared in International Research Community

To actively participate in the international research community, the CeeLS project synopsis was submitted to and accepted by the Turkish Online Journal of Educational Technology. It is anticipated that, by sharing the research on the development and impact of the advanced automatic classroom systems proposed in the CeeLS project, the published article will further promote international collaboration among educators, researchers, and computer engineers toward the better development of the future Classroom 2.0.


Research and Development of Multimedia Technology

The Video Processing Research team has developed face detection, motion detection and recognition modules which can be easily modified to detect students’ gestures in the classroom environment. The technologies have been tested in a controlled environment. They have also been successfully used to detect the critical motion of nearby moving vehicles on the expressway.

The Speech Processing Research team investigated extractive speech summarization and proposed a unified probabilistic generative framework that combines the sentence generative probability and the sentence prior probability for sentence ranking. Each sentence of a spoken document to be summarized is treated as a probabilistic generative model for predicting the document. An elegant feature of the proposed framework is that both the sentence generative probability and the sentence prior probability can be estimated in an unsupervised manner, without the need for handcrafted document-summary pairs. A prototype system for speech summarization and retrieval of the NTNU courseware has also been established.
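One way to read the ranking criterion described above, offered as a sketch rather than the team's exact formulation: by Bayes' rule, the posterior relevance of a sentence $S$ to the spoken document $D$ factors into the two probabilities named in the text. The symbols $S$ and $D$ are notation introduced here for illustration.

$$P(S \mid D) = \frac{P(D \mid S)\,P(S)}{P(D)} \propto P(D \mid S)\,P(S)$$

Because $P(D)$ is the same for every sentence of a given document, ranking sentences by the product of the sentence generative probability $P(D \mid S)$ and the sentence prior probability $P(S)$ yields the same order as ranking by the posterior.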

The core video processing technology has also been put to use to enhance the learning of Computer Science topics at the K-12 level. Our experiment shows that, with our proposed model, high school students are able to learn CS topics effectively without any class time beyond what is already allotted.

A 3D Compound Virtual Field Trip (3D-CVFT) system through the integration of Graphic-Based Virtual Reality technology with 3D Stereo-Vision has been developed.

Extend the Use of Multimedia Technology in Assessing Teaching and Learning Needs for Classroom 2.0

Multimedia technologies were applied in a contextualized Animation-Based Questionnaire (ABQ), developed to examine the validity of the original paper-based questionnaire by presenting the situations described in the question items as visual images. Our published paper provides evidence of the potential of multimedia technologies in educational surveys: the situated contexts reduce the differences in imagery among participating students and thus increase the validity of the questionnaire. With the ABQ, students perceive external visual images of the question meaning directly from animations, rather than generating internal visual images by themselves. A comparison of students’ responses on the ABQ and on the traditional format (i.e., the Text-Based Questionnaire, TBQ) indicates that the ABQ can equalize the vividness of the visual images that question descriptions evoke in students.


A new technique of animation called Full Learner-Pacing (FLP) function was designed to improve the instructional efficiency of animation-based instruction. The interactivity of FLP allows students to be in complete control of the speed, orientation, and changes of presented objects in an animation. In contrast to the traditional animation and static graphics, the empirical results indicate that the animation embedded with FLP can bring students the best instructional efficiency.

An instructional technology model called MAGDAIRE (Modeled Analysis, Guided Development, Articulated Implementation, and Reflected Evaluation) was designed to foster pre-service science teachers’ abilities to develop and implement technology-integrated instruction to facilitate their science teaching. MAGDAIRE was deployed in a high school science teacher education course in Taiwan. The empirical results indicate that the implementation of MAGDAIRE can significantly promote pre-service teachers’ technology competencies and enhance their confidence in implementing technology-integrated instruction.

Classroom 2.0 related research has been conducted in three different classes. Two of the Classroom 2.0 systems, speech driven PPT (SD_PPT) and Technology Enabled Interaction System (TEIS), were used, and students’ general perceptions of the systems were evaluated. The results of the pilot test show that, overall, both the teacher and the students perceived SD_PPT and TEIS positively. Students’ perceptions of learning in an ICT-Supported Learning Environment also improved significantly after experiencing both systems. In particular, students showed significant improvement in how they viewed learning with ICT, in terms of both learning motivation and effectiveness, after experiencing the smart classroom systems.

Eye tracking technology was used to assess college learners’ visual attention distributions during multimedia presentations. The visual attention data provide pedagogical information for the integration of Classroom 2.0 technologies.

The application of multimedia technology in assessing teaching and learning needs and expectations could help researchers envision the prospects of future classrooms more accurately and thus foster closer collaboration between educational practitioners and computer scientists.

Establishment of Social Tagging Platform in Collaboration with eNTSEC and OSR Internationally

A social tagging platform building on eNTSEC (Taiwan Internet Science Education Center, http://www.ntsec.edu.tw) has been established as a sub-project of the cross-country cooperation project OpenScienceResources (OSR). This cross-country project is a collaboration among 20 research institutions from 13 countries (mostly located in Europe). The project aims to integrate resources from diverse institutions, groups, and individuals such as science museums, science centers, educators, educational technologists, metadata experts, user groups, and standards bodies to enhance public learning in general. As part of the OSR project, this study used social tagging technology to develop a platform that helps users easily access and manage annotated learning resources and thus enhances learning in classroom practice. In the social tagging system, users can use arbitrary words as tags to describe any learning object. In this way, users can retrieve objects through either their personal tags or others’ public tags. Specifically, users are encouraged to collaboratively mark mis-tagged or similar tags in a folksonomy (the set of tags for a specific learning object). Through this collaborative marking, user vocabulary can converge, and the precision and recall of retrieval results can be improved.

The following are some of the representative CeeLS-related papers either published in SSCI/SCI journals or presented at international conferences.


Paper published at the Turkish Online Journal of Educational Technology (TOJET), 9 (2010), 7-12:


Paper published at the ACM Transactions on Asian Language Information Processing (TALIP), 8(2009), 2:1-27


Paper presented at the 2011 annual international conference of the National Association for Research in Science Teaching (NARST 2011):

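Report on Overseas (or Mainland China) Travel or Study under a National Science Council Research Project Grant

Date: November 3, 2010

Project number: NSC 99-2631-S-003-002

Project title: Center for excellence in e-Learning Sciences

Traveler: 張俊彥 (Director)

Affiliation and position: Science Education Center, National Taiwan Normal University

Travel period: November 3, 2010 to November 7, 2010

Destination: Hokkaido University

1. Overseas research activities: I visited Hokkaido University for off-site research and took part in the 13th Science Education Forum, co-hosted by Hokkaido University and Seoul National University. My aim was to promote the recently developed Smart Classroom concept in Japan and Korea. After we exchanged experience and shared our research, not only was Professor Eizo Ohno (Hokkaido University, Faculty of Education) very interested, but the smart classroom topic also drew an enthusiastic response at the forum, and the scholars present hope to try out this smart classroom in the future.

2. Research results: There are two main outcomes. First, a paper on the Smart Classroom has been presented at the annual conference of the National Association for Research in Science Teaching. Second, I will host a conference of the three universities in November 2011 (http://www.sec.ntnu.edu.tw/nhs/index.html), so that this exchange can continue.

3. Recommendation: Off-site visits and research increase exchange among scholars; they are worthwhile activities and should continue to be supported.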

國科會補助專題研究計畫出席國際學術會議心得報告

日期: 100 年 7 月 21 日一、 參加會議經過 禮拜六(7/9 日)早上搭乘九時三十五分長榮航空直飛英國倫敦希斯洛機場的 飛機,在過境旅館住宿一晚後,第二天再搭乘巴士至劍橋大學 Fitzwilliam College 本國際研討會的舉辦地點。

本 研 討 會 CATE2011(The 2011 IASTED International Conference on Computers and Advanced Technology in Education) 是一個電腦與先進科技在教育 上的應用的相關研討會。研討會期間共有 45 篇論文口頭發表,參加研討會的專 家學者約一百五十餘人,來自世界各地,包含澳洲、芬蘭、法國、英國、美國、 中國、加拿大、土耳其等多個國家,其中更有多位來自台灣。

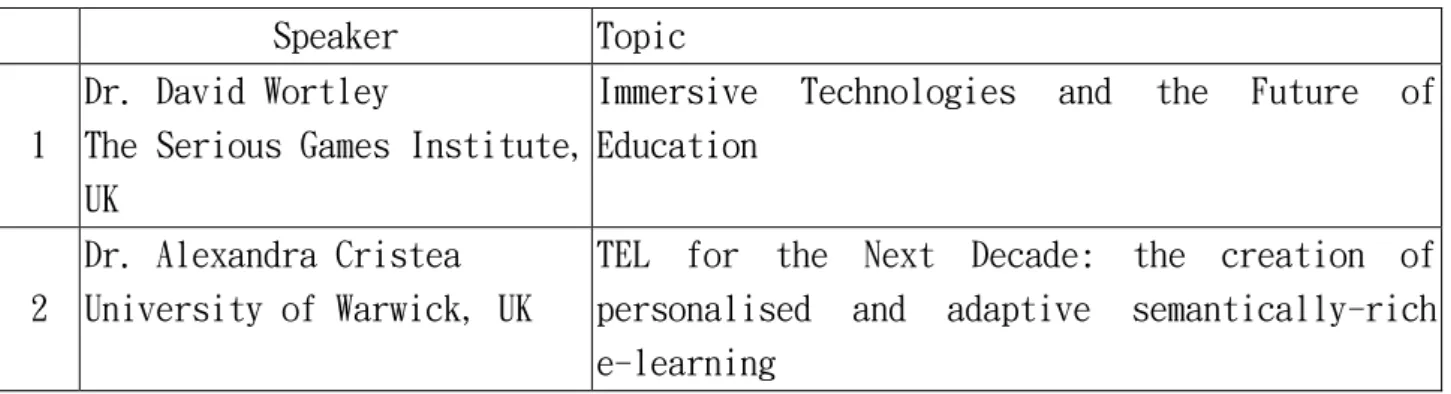

CATE2011 國際研討會共為期三天,行程滿滿。第一天上午舉行開幕式,包 含 了 一 場來 自英 國的 David Wortley 博 士 主 講 的 keynote address , 講 題 是 Immersive Technologies and the Future of Education,主要探討目前的數位通訊科

計畫編

號

NSC 99-2631-S-003-002-

計畫名

稱

未來學習環境:教室 2.0

出國人

員姓名

方瓊瑤

服務機構

及職稱

國立臺灣師範大學資訊工程系 副教授會議時

間

100 年 7 月 11

日至

100 年 7 月 13 日

會議地點

英國 劍橋(Cambridge, UK)會議名

稱

(中文) 2011 年 IASTED 電腦與先進科技在教育上的應用國際研討會 (英文) The 2011 IASTED International Conference on Computers and Advanced Technology in Education (CATE2011)發表論

文題目

(中文) 教室 2.0 中學生動作辨識系統

技在教育上的應用。相關照片如下圖所示。

之後接著就是口頭論文發表,主要有二個 sections,其主題分別為 Assessment, control and evaluation in advanced technology-based education 以及 Innovative technology-based curriculum, courseware, teaching and learning,共計十八篇論文在 第一天發表。

第二天上午安排第三個 section,Games-based learning and mobile learning, 計有六篇相關論文發表。之後安排專題演講 invited speaker 為英國的 Alexandra Cristea 博士,講題為 Tel for the Next Decade: The Creation of Personalised and Adaptive Semantically-rich e-Learning,主要探討在數位科技時代個人化學習的前 瞻性。下午則有第四個 section 的論文發表,主題是 Best practices in advanced technology-based education。晚上還有一個晚宴以及頒獎典禮,本篇論文很榮幸 獲得本研討會的最佳論文獎(CATE2011 Best Paper Award),相關獎牌以及獎狀如 上圖所示。

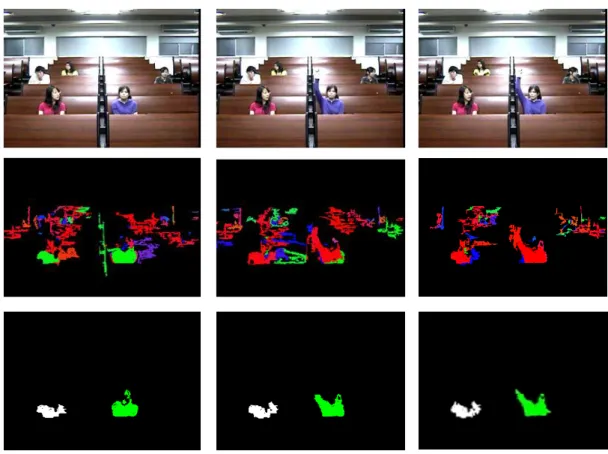

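The theme of the third day's session was "Advanced software/hardware systems for technology-based education", with eleven papers presented. Our paper, on a student gesture recognition system in Classroom 2.0, was presented in this session.

The paper studies a system that analyzes students' in-class gestures in a theatre classroom. Its aim is to analyze and record students' body movements during class and to provide the results to educational researchers for reference and study. Gesture analysis is especially important in education because a teacher can gauge students' learning state by observing their movements. For example, when a student raises a hand, the teacher can explain the part the student does not understand, which improves learning efficiency.

The experimental setting is a theatre classroom. Unlike typical body gesture recognition research, the input images show only the students' upper bodies, and apart from standing up, students perform gestures such as raising a hand or lying prone without leaving their seats. The back of the theatre classroom has windows, as shown in the figure. Light entering through the windows and flicker from the fluorescent lamps both cause strong illumination changes, which make lighting difficult for the system to handle. In addition, the desks and chairs in the background are close to skin color, so the system cannot simply extract the skin-colored parts of the input image and treat them as the students' limbs. Furthermore, the rows of a theatre classroom differ in height, so seated students overlap front to back, adjacent students can partially overlap side to side, and the foreground objects appear at varying distances. These are the difficulties this study must overcome.

The study therefore mounts a camera at the front of the theatre classroom to capture video of students in class. The system first locates the chair-back height lines of the classroom and extracts the foreground colors of the image. Motion and foreground color information are combined to identify the foreground pixels. Using these foreground pixels as seeds, the system performs region growing and then applies a region combination algorithm to the grown regions to locate student objects. The system uses changes in the composition of student objects to recognize six gestures: raising the right hand, raising the left hand, raising both hands, lying prone, standing, and normal sitting posture. The system also uses an automaton to model and record students' successive gestures during class. The experimental results show that the proposed method can resolve some of the environmental problems, and the other problems encountered in the experiments are analyzed and discussed.

Finally, some parts of this study still need improvement; in the future we hope to integrate other information or more suitable methods to make the study more complete. The presentation was well received. Because the paper won the best paper award, it prompted lively discussion among attendees and proved to be a topic of great interest.

2. Reflections on the conference

CATE2011 was held at Fitzwilliam College, University of Cambridge. The University of Cambridge has a long and distinguished academic tradition and is only a little over an hour from central London by train, so transport was reasonably convenient.

Because the conference was held at Fitzwilliam College rather than in an ordinary hotel, attendees could concentrate more fully on the discussion of each conference topic. Almost every paper presentation drew an enthusiastic response and active questions and answers. Listening to scholars from around the world present research on applying computers and advanced technology to different areas of education, I found some of the topics very interesting. Our laboratory's research originally focused on computer vision and image processing, and this was our first venture into educational applications. Beyond the recognition of the award, the presentations gave us a glimpse of how strongly technology can support education, and we hope to pursue related work in the future if the opportunity arises.

3. Recommendations

We are very grateful to the National Science Council, Executive Yuan, for the subsidy for attending this international conference, which allowed us to broaden our knowledge through international exchange and reduced the financial burden. We hope such subsidies will continue to be offered so that scholars in Taiwan have opportunities for exchange abroad, which broadens their international outlook and raises the visibility of Taiwan's research in the international academic community.

4. Materials brought back

One CATE2011 program booklet, one CATE2011 proceedings disc, and one plaque and one certificate for the CATE2011 Best Paper Award.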

STUDENT GESTURE RECOGNITION SYSTEM IN CLASSROOM 2.0

Chiung-Yao Fang, Min-Han Kuo, Greg-C Lee, and Sei-Wang Chen

Department of Computer Science and Information Engineering, National Taiwan Normal University

No. 88, Section 4, Ting-Chou Road, Taipei, 116, Taiwan, R. O. C.

violet@csie.ntnu.edu.tw; babulakau@hotmail.com; leeg@csie.ntnu.edu.tw; schen@csie.ntnu.edu.tw

ABSTRACT

This paper presents a student gesture recognition system employed in a theatre classroom, which is a subsystem belonging to Classroom 2.0. In this study, a PTZ camera is set up at the front of the classroom to capture video sequences. The system first pre-processes the input sequence to locate the main line of the theatre classroom and to extract the candidates from the foreground pixels. Subsequently, motion and color information is utilized to identify the foreground pixels, which are regarded as the seeds of growth for the foreground regions. The system combines the foreground regions to segment the objects, which represent individual students. Six student gestures, which include raising the right hand, raising the left hand, raising two hands, lying prone, standing up and normal, are classified based on the relationship between the regions in similar objects. The experimental results demonstrate that the proposed method is robust and efficient.

KEY WORDS

Smart classroom, gesture recognition, object segmentation.

1. Introduction

“Smart Classroom”, a term proposed recently, usually refers to a room housing an instructor station, equipped with a computer and audiovisual equipment, which allows the instructor to teach using a wide variety of media. In Taiwan, the instructor station is referred to as an e-Station, which typically consists of a PC with software, a hand-writing tablet, an external DVD player, an amplifier, a set of speakers, a set of wired or wireless microphones and a unified control panel. This kind of “Smart Classroom”, however, is in reality not an example of a smart system. In this paper, we propose the concept of a truly smart classroom, which we will name Classroom 2.0.

In our view, a “Smart Classroom” such as Classroom 2.0 should be intelligent, interactive, individualized, and integrated. In the following paragraphs, we will briefly introduce the properties and related systems of Classroom 2.0.

Intelligent: the classroom technology should automatically conduct tasks that do not require human intervention. To enable this, the following four systems should be employed in the classroom:

(1) The intelligent Roll-Call (iRollCall) system: the system can automatically recognize students who are present in the classroom.

(2) The intelligent Teaching Response (iTRes) system: the system can automatically control the technologies used in the classroom, including the control of the software systems.

(3) The intelligent Classroom Exception Recognition (iCERec) system: the system can automatically identify and track human behavior in the classroom. This system is intended to automatically track the behavior of students in a classroom and is a revolutionary way of collecting data for educational research.

(4) The intelligent Content Retrieval (iCORE) system: the system can automatically give feedback on the quality of an answer to a given question.

Interactive: the classroom technology should facilitate interaction between the instructor and the students.

Individualized: the classroom technology should react in accordance with the behavior of individual users.

Integrated: the classroom technologies could be integrated as a single i4 system instead of being made up of separate systems.

From the above description, it is evident that iRollCall, iTRes, iCERec, and iCORE are the four kernel systems of Classroom 2.0. The focus of this paper will be to introduce the iCERec system.

The iCERec system has been designed to recognize specially defined student behaviour in a classroom. The pre-defined exceptions include the raising of hands to ask/answer questions, students dozing off during the lecture, taking naps, and students standing up and sitting down. More exceptions may be added as required by educational research.

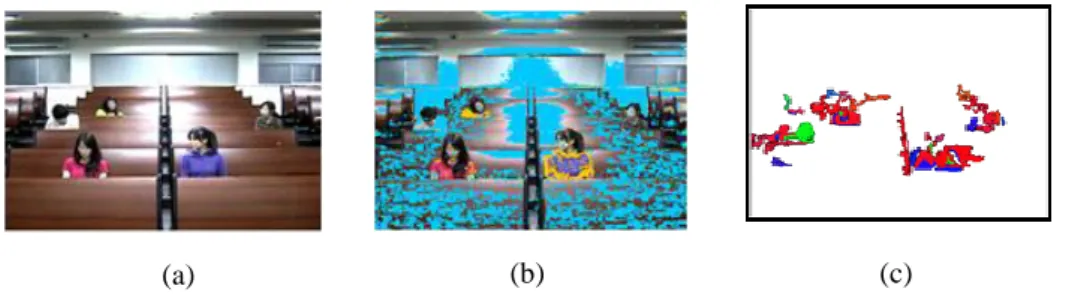

In this study, the students in a classroom are supposed to sit through a lesson, thus student gestures constitute a motion space consisting of the upper body, face, and hands. The PTZ camera is set up in front of the classroom, and three input sequence examples are shown in Figure 1. Firstly, it can be seen that the light from the fluorescent lamps might be different in different regions of the classroom. The detection of students then becomes more complex due to reflections from the chair. Secondly, students in classrooms with a theatre-style setup will be partially occluded by not only others sitting in front of them, but sometimes also by students sitting beside them. Thirdly, the problem of motion segmentation needs to be solved; more than one student may change their gesture in a given frame.

Mitra and Acharya [1] divided the gestures arising from different body parts into three classes: (1) hand and arm gestures, (2) head and face gestures, and (3) body gestures. We believe that student gestures comprise all three of these classes. Wan and Sawada [2] used the probabilistic distribution of the arm trajectory and a fuzzy approach to recognize as many as 30 different hand gestures. The experimental results show that the recognition system can obtain high recognition rates for the 'Rain' and 'Round' gestures. However, nine markers must be attached to indicate the feature points of a performer's body. This requirement is difficult to meet in a classroom.

Wang and Mori [3] presented two supervised hierarchical topic models for action recognition based on motion words. Video sequences are identified by a “bag-of-words” representation. In the proposed method, a frame is represented by a single word and this model is trained by a supervised learning process. Finally, five different data sets were tested by Wang and Mori to show that their methods achieved superior results. However, most gesture recognition systems are designed to recognize the gestures of a single person [2,3,4,5,6], and do not consider the partial occlusion problem. Our system has been developed to work on multiple students, taking partial occlusion into account.

2. System Flowchart

A flowchart of the student gesture recognition system is shown in Figure 2. Once the video sequence frames have been input into the system, the motion pixels and the candidates of the foreground pixels are detected by the system. These two parameters are helpful in identifying the foreground pixels. On the other hand, certain main lines, which indicate the horizontal line of a row of chairs, will also be located. Using the locations of the main lines as constraints, the foreground regions can be extended by considering the identified foreground pixels as the seeds. These foreground regions can then be combined to segment the foreground objects, which are assumed to represent individual students. Finally, a gesture recognition technique is applied to identify various student gestures.

2.1 Main Line Location

Figure 3 shows the flowchart of the main line location process, and Figure 4 shows an example. Once the video sequence frames have been input into the system, the system detects the edges using Sobel's approach. Figure 4(a) shows one of the input frames, and the edge detection result is shown in Figure 4(b). Subsequently, the system extracts the horizontal edges by applying the morphological opening operation with a 5×1 horizontal kernel; the result is shown in Figure 4(c). The horizontal edges are projected in the horizontal direction to obtain the number of edge pixels in each row, which can be regarded as the importance of the main line edges.

The system can extract the main line candidates from the bottom of the frame based on the degree of importance. The red lines shown in Figure 4(d) indicate the main line candidates. The system clusters these candidates into different classes and calculates the average locations of these classes to identify the real positions of the main lines. Only three main lines located in the bottom of the frame are extracted and preserved for the following process, as depicted in Figure 4(e). Finally, the system will calculate the locations of the other main lines using a geometric series, as depicted by the green lines shown in Figure 4(f).
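The projection and clustering steps described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes a binary horizontal-edge image is already available as a NumPy array, and the function names and the parameters `min_count` and `max_gap` are hypothetical. The geometric-series extrapolation of the remaining main lines is omitted.

```python
import numpy as np


def row_importance(horizontal_edges):
    """Count the edge pixels in every image row (horizontal projection)."""
    return (horizontal_edges > 0).sum(axis=1)


def main_line_candidates(importance, min_count):
    """Return rows with at least min_count edge pixels, bottom row first.

    min_count is a hypothetical threshold on the 'importance' value.
    """
    rows = np.flatnonzero(importance >= min_count)
    return rows[::-1]


def cluster_rows(rows, max_gap):
    """Group candidate rows that lie within max_gap of each other.

    Returns the mean row of each group, which stands in for the
    averaged main line position (max_gap is an assumed parameter).
    """
    clusters = []
    for row in sorted(int(r) for r in rows):
        if clusters and row - clusters[-1][-1] <= max_gap:
            clusters[-1].append(row)
        else:
            clusters.append([row])
    return [sum(c) / len(c) for c in clusters]
```

For example, an edge image whose rows 5, 6 and 15 are fully set produces two clusters: one averaging rows 5 and 6, and one at row 15.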

2.2 Motion detection

The system can detect motion by subtracting the intensity values of the pixels in the $t$th frame from the corresponding pixels in the $(t-1)$th frame and by calculating the absolute values of the subtraction results. Let the intensity values of a pixel $p$ at time $t-1$ and $t$ be $I_{t-1}(p)$ and $I_t(p)$, respectively. Therefore, the magnitude of the motion of this pixel can be defined as

$$M(p) = |I_t(p) - I_{t-1}(p)|.$$

Figure 2. Flowchart of the student gesture recognition system. (Stages: video sequence input; motion detection; foreground pixel extraction; main line location; foreground pixel identification; region growing; object segmentation; gesture recognition.)

Figure 3. Flowchart of the main line location process. (Stages: video sequence input; edge detection; horizontal edge extraction; importance of edge calculation; main line candidate extraction; main line identification; output main line location.)

Figure 5 shows an example of the process of motion detection. Figures 5(a) and (b) show the t-1th frame and the tth frame respectively, and Figure 5(c) shows the motion detection results.
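The frame differencing described in this section can be sketched as follows, assuming the two frames are 8-bit grayscale NumPy arrays of equal shape. The function name is hypothetical, and the sketch omits the normalization that the later foreground identification step relies on.

```python
import numpy as np


def motion_magnitude(prev_frame, curr_frame):
    """Absolute per-pixel intensity difference between two frames.

    Frames are widened to a signed type first so that the subtraction
    of unsigned 8-bit values cannot wrap around.
    """
    prev = prev_frame.astype(np.int16)
    curr = curr_frame.astype(np.int16)
    return np.abs(curr - prev).astype(np.uint8)
```

Widening before subtracting matters: subtracting `uint8` arrays directly would wrap modulo 256 and report spurious motion.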

2.3 Foreground Pixel Extraction and Identification

The input frames are represented by the RGB color model of a given pixel $p$, whose R, G, and B values are $R_p$, $G_p$, and $B_p$ respectively. The translation equation to calculate the hue value $h$ of the pixel in the HIS model is given by:

$$h = \begin{cases} \cos^{-1}\left\{\dfrac{0.5\,[(r-g)+(r-b)]}{[(r-g)^2+(r-b)(g-b)]^{1/2}}\right\}, & \text{if } h \in [0,\pi] \text{ for } b \le g \\[2ex] 2\pi - \cos^{-1}\left\{\dfrac{0.5\,[(r-g)+(r-b)]}{[(r-g)^2+(r-b)(g-b)]^{1/2}}\right\}, & \text{if } h \in [\pi,2\pi] \text{ for } b > g \end{cases}$$

where $r = \dfrac{R_p}{R_p+G_p+B_p}$, $g = \dfrac{G_p}{R_p+G_p+B_p}$, and $b = \dfrac{B_p}{R_p+G_p+B_p}$. Moreover, the translation equation to calculate the Cr value of the pixel in the YCrCb model is given by:

$$Cr = (0.500)\,R_p - (0.4187)\,G_p - (0.0813)\,B_p.$$

Figure 4. An example of the main line location process. (Panels (a) to (f).)

Figure 5. An example of motion detection. (Panels (a) to (c).)

Based on these two components, the system first accumulates the pixel numbers from the input frame to form a histogram of the hue and Cr values. Figure 6(c) shows the Hue-Cr histogram of the image in Figure 6(a). It is assumed that the background occupies larger regions than the foreground in the classroom. Therefore, the system normalizes and sorts these pixel numbers on the histogram. After normalization, the top 40% of pixels are classified as background pixels, and the bottom 5% of pixels are classified as the candidates of the foreground pixels.
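The two color components and their joint histogram can be sketched as follows. This is an illustration under stated assumptions: it uses the standard normalized-RGB hue formula and the Cr weights 0.500, 0.4187 and 0.0813 with no offset, treats gray pixels (where hue is undefined) as hue 0, and picks 32 bins per axis arbitrarily. The function names are hypothetical, and the 40% and 5% classification step is not implemented here.

```python
import numpy as np


def hue_and_cr(rgb):
    """Return (hue in radians, Cr) for an H x W x 3 RGB image."""
    rgb = rgb.astype(np.float64)
    R, G, B = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    total = R + G + B
    total[total == 0] = 1.0  # black pixels: avoid division by zero
    r, g, b = R / total, G / total, B / total
    num = 0.5 * ((r - g) + (r - b))
    den = np.sqrt((r - g) ** 2 + (r - b) * (g - b))
    # Assumption: gray pixels (den == 0) get ratio 1, i.e. hue 0.
    ratio = np.divide(num, den, out=np.ones_like(num), where=den > 0)
    theta = np.arccos(np.clip(ratio, -1.0, 1.0))
    hue = np.where(b <= g, theta, 2 * np.pi - theta)
    cr = 0.5 * R - 0.4187 * G - 0.0813 * B
    return hue, cr


def hue_cr_histogram(hue, cr, bins=32):
    """Count pixels per (hue, Cr) cell; the bin count is an assumption."""
    counts, _, _ = np.histogram2d(
        hue.ravel(), cr.ravel(), bins=bins,
        range=[[0.0, 2 * np.pi], [-128.0, 128.0]])
    return counts
```

Cells with large counts correspond to colors that cover much of the frame, which is the property the text uses to separate background from foreground candidates.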

Subsequently, the system identifies the foreground pixels using motion, color, and template information. Given a pixel $p$, let $M(p)$ indicate the normalized magnitude of the motion, $C(p)$ be the normalized value of the location of the pixel $p$ in the Hue-Cr histogram, and $F_{t-1}(p)$ indicate the foreground pixel probability of the pixel $p$ at time $t-1$. The foreground pixel probability of the pixel $p$ at time $t$ can then be calculated by:

$$F_t(p) = \alpha M(p) + \beta C(p) + \gamma F_{t-1}(p),$$

where $\alpha$, $\beta$, and $\gamma$ are constants, and $F_0(p) = 0$. If $F_t(p) > 0.5$ then the pixel $p$ is marked as a foreground pixel, shown in yellow in Figure 6(b). On the other hand, the top 40% pixels are marked as background pixels, shown in blue in Figure 6(b).
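A minimal sketch of the recursive update, assuming it is a weighted sum of the normalized motion, the normalized color value and the previous probability, with a strict threshold at 0.5. The pairing of each constant with each term, the strictness of the threshold and the weight values in the usage note are assumptions for illustration; the paper does not list the constants.

```python
import numpy as np


def update_foreground_probability(motion, color, prev_prob,
                                  alpha, beta, gamma):
    """One step of F_t = alpha*M + beta*C + gamma*F_{t-1} (assumed form)."""
    return alpha * motion + beta * color + gamma * prev_prob


def foreground_mask(prob, threshold=0.5):
    """Mark pixels whose probability exceeds the threshold."""
    return prob > threshold
```

Starting from an all-zero probability map and feeding each frame's result back in as `prev_prob` lets pixels that stay active across frames accumulate evidence, for instance with hypothetical weights `alpha=0.4, beta=0.4, gamma=0.2`.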

2.4 Region Growing

The Main function and the RegionGrowing function of the region growing algorithm are developed to grow regions. The Main function selects the foreground pixels whose y_axis locations are between the maximum and minimum main lines, and sets these selected pixels as the seeds necessary for the growth of the foreground regions.

Function Main() {
    ∀ x ∈ the set of foreground pixels, and let r = 0,
    If (the y_axis location of x is between the maximum and minimum main lines){
        If (x does not belong to any labelled region){
            Label the region number of x as r;
            RegionGrowing(x);
            r = r + 1;
        }
    }
}

The RegionGrowing function grows the desired regions. Let $y_h$ and $y'_h$ be the hue values of pixel $y$ and $y'$ respectively, and $y'_s$ be the saturation value of pixel $y'$. Symbol $N_y$ indicates the set of neighbours of $y$. The RegionGrowing function first selects a pixel $y$ from the SSL_queue, whose region number is $r_y$. All neighbouring pixels, whose properties are similar to those of pixel $y$, will be classified into the same region. Otherwise, the neighbouring pixels of $y$ will be set as the boundary pixels of region $r_y$. Here, $T_1$ and $T_2$ denote the thresholds used to check the pixel properties.

Figure 6. An example of foreground pixel extraction. (Panels (a) and (b); panel (c) plots the number of pixels against the hue value and the Cr value.)

Function RegionGrowing(x){
    SSL_queue ← x;
    While (SSL_queue is not empty) {
        Output a pixel y which belongs to region r_y from the SSL_queue;
        ∀ y' ∈ N_y;
        If (y' is not labelled){
            D_h = |y_h − y'_h|;
            If (D_h < T1 and y' ∉ the set of background pixels and y'_s > T2){
                Label the region number of y' as r_y;
                Update the average hue value of region r_y;
                RegionGrowing(y');
            } else
                label y' as a boundary pixel of region r_y;
        }
    }
}

Figure 7 illustrates the results of the region growing algorithm. The input frame is shown in Figure 7(a), and Figure 7(b) shows the distributions of the foreground pixels (yellow) and the background pixels (blue). The result of the region growing algorithm is shown in Figure 7(c). Notice that the foreground regions are successfully bounded by the background pixels.
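The region growing procedure can be sketched iteratively as follows. This is a simplified illustration, not the authors' code: it assumes a 4-neighbourhood, assumes the tests are "hue difference below `t1`" and "saturation above `t2`", compares each neighbour with the pixel it is reached from rather than maintaining an average region hue, and replaces the recursive call with a single queue. The names and the label constants are hypothetical.

```python
from collections import deque

import numpy as np

UNLABELLED = -1  # pixel not yet visited
BOUNDARY = -2    # pixel rejected while growing a region


def grow_region(hue, sat, background, labels, seed, region_id, t1, t2):
    """Grow one region from `seed` (row, col), updating `labels` in place."""
    height, width = hue.shape
    labels[seed] = region_id
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < height and 0 <= nx < width):
                continue
            if labels[ny, nx] != UNLABELLED:
                continue
            # Assumed similarity test: close hue, not background,
            # and saturated enough for the hue to be meaningful.
            similar = abs(hue[y, x] - hue[ny, nx]) < t1
            if similar and not background[ny, nx] and sat[ny, nx] > t2:
                labels[ny, nx] = region_id
                queue.append((ny, nx))
            else:
                labels[ny, nx] = BOUNDARY
    return labels
```

An explicit queue avoids the deep recursion that a per-pixel recursive call would cause on large regions.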

2.5 Object Segmentation

The system segments the objects by the process of region combination. Using a graph to represent an object, each region can be regarded as a node of the graph. If two regions are next to each other, then a link will be added to connect these two corresponding nodes in the graph. The weight of this link represents the strength of the connection between these two nodes. Let the two nodes be denoted by $n_k$ and $n'_k$ respectively. The weight of the link can be defined as

$$w(n_k, n'_k) = \frac{\max(A(n_k), A(n'_k))}{L^2},$$

where $A(n_k)$ and $A(n'_k)$ represent the areas of nodes $n_k$ and $n'_k$ respectively, and $L$ indicates the distance between the centers of these two nodes. Here, we assume that the height of the seated student is not greater than twice the distance between two adjacent main lines. If the distance is greater than twice the distance between two adjacent main lines, then the weight is set to zero.

Figure 7. An example of region growing.

Moreover, the system increases the weight of the link if the link connects two nodes which have the same neighbors. Figure 8(a) shows an example where the nodes $n_k$ and $n'_k$ have two common neighbors, thus the weight of their link is assigned a value depending on the number of common neighbors. There is also a high probability that these two nodes belong to the same object. On the other hand, the system decreases the weight of the link if the link connects two nodes which do not have any common neighbors. Figure 8(b) shows an example where the nodes $n_k$ and $n'_k$ contain no common neighbors, thus the weight of their link is decreased to a constant value. In addition, there is a low possibility that these two nodes belong to the same object.
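The base link weight can be sketched as follows, assuming it is the larger of the two region areas divided by the squared distance between the region centers, and that the zero cutoff applies to that same distance. The function and parameter names are hypothetical. The adjustment for common neighbors is left out because the text does not state the amounts.

```python
import math


def link_weight(area_a, area_b, centre_a, centre_b, main_line_gap):
    """Base weight of the link between two adjacent regions.

    centre_a and centre_b are (row, col) positions; main_line_gap is
    the distance between two adjacent main lines.
    """
    distance = math.hypot(centre_a[0] - centre_b[0],
                          centre_a[1] - centre_b[1])
    if distance == 0:
        raise ValueError("distinct regions must have distinct centres")
    if distance > 2 * main_line_gap:
        return 0.0  # too far apart to belong to one seated student
    return max(area_a, area_b) / distance ** 2
```

With this form, large regions that sit close together receive the strongest links, and regions further apart than a seated student's assumed height are never merged.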

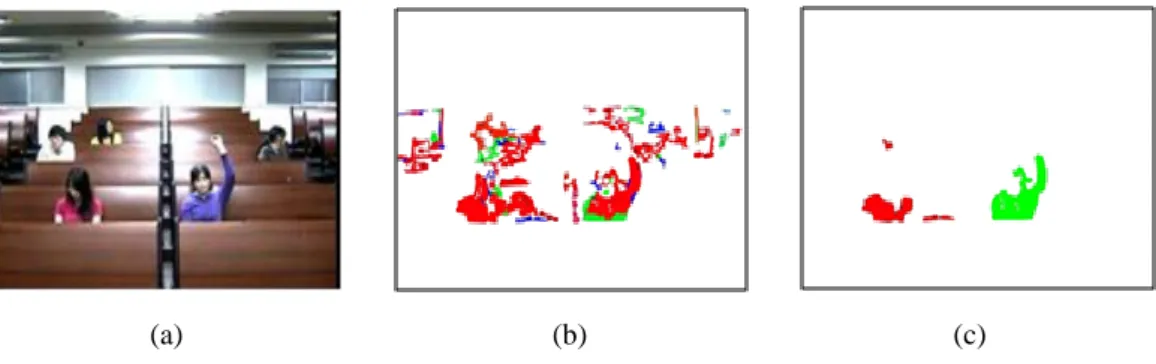

Figure 9 shows the results of object segmentation. Figure 9(a) shows the original input frame, Figure 9(b) shows the results of region growing, and Figure 9(c) shows the result of object segmentation. It can be seen that the system selects those objects with substantially large areas, while the smaller objects are ignored.

3. Gesture Recognition

The system divides student gestures into six classes, which include raising the left hand, raising the right hand, raising two hands, standing up, lying prone, and normal posture. Figure 10 illustrates some examples of these six classes, which can be regarded as states and be constructed to form a finite state machine. We assume that the initial state of the finite automaton is the normal posture.

Figure 8. Two examples of adapting the link weights. (a) An example depicting an increase in the weight of the link. (b) An example depicting a decrease in the link weight.


Figure 9. The result of object segmentation.

Each object $i$ at frame $t$ is described by the features ($a_i^t$, $p_i^t$, $m_i^t$, $d_i^t$, $g_i^t$, $c_i^t$). Here, $a_i^t$, $p_i^t$, and $m_i^t$ indicate the area, the center position, and the motion of the object at frame $t$, respectively. The symbols $d_i^t$, $g_i^t$, and $c_i^t$ are vectors which indicate the areas, the center positions, and the colors of the regions belonging to object $i$, respectively. A change in these feature values over successive frames can move the finite automaton from one state to another. Thus, we define 14 rules (corresponding to transitions a to n) to drive the state transitions. For example, if the center positions of some left regions of object $i$ move upwards by more than a preset threshold value, and the area and center position of object $i$ increase, then the student may be raising his/her left hand. This situation can cause a state transition from 'normal' to 'raising the left hand' through transition a, as shown in Figure 10.

Figure 10. A finite state machine consisting of student gestures.
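The state machine can be illustrated with a small table-driven sketch. Only transitions a and b are spelled out in the text, so only those two are encoded here; the feature keys, the threshold value, and the rule function are hypothetical and stand in for the system's actual rules.

```python
from enum import Enum


class Gesture(Enum):
    NORMAL = "normal"
    LEFT_HAND = "raising the left hand"
    RIGHT_HAND = "raising the right hand"
    TWO_HANDS = "raising two hands"
    STANDING = "standing up"
    LYING_PRONE = "lying prone"


# Only transitions a and b are described in the text; the remaining twelve
# (c to n) would be added once their source and target states are known.
TRANSITIONS = {
    (Gesture.NORMAL, "a"): Gesture.LEFT_HAND,
    (Gesture.NORMAL, "b"): Gesture.RIGHT_HAND,
}


def left_hand_raised(prev, curr, threshold=5.0):
    """Hypothetical rule for transition a.

    prev and curr are dicts with illustrative keys 'area', 'height' (center
    height of the object) and 'left_height' (mean center height of its
    left-side regions), measured so that a larger value means higher up.
    """
    return (
        curr["left_height"] - prev["left_height"] > threshold
        and curr["area"] > prev["area"]
        and curr["height"] > prev["height"]
    )


class GestureFSM:
    """Finite state machine whose initial state is the normal posture."""

    def __init__(self):
        self.state = Gesture.NORMAL

    def fire(self, label):
        # Stay in the current state when no transition is defined.
        self.state = TRANSITIONS.get((self.state, label), self.state)
        return self.state
```

In use, a rule such as `left_hand_raised` would be evaluated on the features of two successive frames, and `fire("a")` would be called when it holds.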

4. Experimental Results

The input sequences for our system were acquired using a PTZ camera mounted on a platform and processed on a PC with an Intel® Core™2 1.86 GHz CPU. The PTZ camera is set at a height of approximately 155 cm to 175 cm above the floor. The input video sequences are recorded at a rate of 30 frames/second.

Figure 11 shows an example of a student raising her right hand. The first column illustrates three selected frames of this sequence, and the second column shows the corresponding region growing results. The segmentation results are shown in the third column. In this case the state of the finite state machine changes from 'normal' to 'raising the right hand' through transition b.

Table 1 shows the experimental results of the student gesture recognition system. A total of 159 gestures were tested. Of these, 115 were recognized correctly, giving an accuracy of approximately 72%. The accuracy rate for 'lying prone' is higher because this gesture is more distinct from the other gestures, while the accuracy rate for 'standing up' is lower because its recognition is easily disturbed by other people situated behind the student under observation. A total of 43 false positive gestures were also recorded.
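The accuracy rates reported in Table 1 can be checked from the gesture counts with a short calculation. The sketch below takes the totals and correct counts from Table 1; the variable and function names are illustrative.

```python
# (total gestures, correctly recognized) per class, as listed in Table 1.
COUNTS = {
    "Raising the left hand": (31, 22),
    "Raising the right hand": (33, 24),
    "Raising two hands": (32, 23),
    "Lying prone": (32, 25),
    "Standing up": (31, 21),
}


def accuracy_percent(total, correct):
    """Accuracy as a whole-number percentage."""
    return round(100 * correct / total)


per_class = {name: accuracy_percent(t, c) for name, (t, c) in COUNTS.items()}

# Overall accuracy is computed from the summed counts, not from the
# average of the per-class percentages.
total_gestures = sum(t for t, _ in COUNTS.values())
total_correct = sum(c for _, c in COUNTS.values())
overall = accuracy_percent(total_gestures, total_correct)
```

The summed counts come to 159 gestures and 115 correct recognitions, which reproduces the overall rate of 72%.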

From the experiments, we conclude that the lighting is a very important factor affecting the accuracy rate of the gesture recognition system, especially the light change caused by the motion of the students.

Table 1. The experimental results of the student gesture recognition system.

| Gesture | Total no. of gestures | Accuracy rate | Correct recognitions | False positives |
| --- | --- | --- | --- | --- |
| Raising the left hand | 31 | 71% | 22 | 8 |
| Raising the right hand | 33 | 73% | 24 | 10 |
| Raising two hands | 32 | 72% | 23 | 5 |
| Lying prone | 32 | 78% | 25 | 2 |
| Standing up | 31 | 68% | 21 | 18 |
| Total | 159 | 72% | 115 | 43 |

5. Conclusions

In this paper, we proposed a lecture theatre based student gesture analysis system. The system first locates the main line and identifies the foreground pixels. Using the technique of region growing, the students can be segmented. Then, a finite state machine is used to recognize various student gestures. Currently, the accuracy rate of the proposed system is 72%. We believe that the accuracy rate can be increased with the incorporation of some prior knowledge into the system.

This is a new attempt to apply image processing techniques to help the teacher notice certain behaviours of students in the classroom. We hope the system can be improved and put into practice in the future. Moreover, since more than one student may change gesture at the same time, using multiple PTZ cameras to detect multiple student gestures simultaneously may be a good area for future research.

Acknowledgment

The authors would like to thank the National Science Council of the Republic of China, Taiwan for financially supporting this research under Contract No. NSC 98-2221-E-003-014-MY2 and NSC 99-2631-S-003-002-.

References

[1] S. Mitra and T. Acharya, “Gesture Recognition: A Survey,” IEEE Transactions on Systems, Man,

[2] K. Wan and H. Sawada, “Dynamic Gesture Recognition Based on the Probabilistic Distribution of Arm Trajectory,” Proceedings of International Conference on Mechatronics and Automation, Takamatsu, 2008, 426-431.

[3] Y. Wang and G. Mori, “Human Action Recognition by Semilatent Topic Models,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(10), 2009, 1762-1774.

[4] J. Alon, V. Athitsos, Q. Yuan and S. Sclaroff, “A Unified Framework for Gesture Recognition and Spatiotemporal Gesture Segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(9), 2009, 1685-1699.

[5] P. T. Bao, N. T. Binh, and T. D. Khoa, “A New Approach to Hand Tracking and Gesture Recognition by a New Feature Type and HMM,” Proceedings of Sixth International Conference on Fuzzy Systems and Knowledge Discovery, Tianjin, 2009, 3-6.

[6] Q. Chen, N. D. Georganas and E. M. Petriu, “Hand Gesture Recognition Using Haar-Like Features and a Stochastic Context-Free Grammar,” IEEE Transactions on Instrumentation and

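Report on Overseas (or Mainland China) Travel or Training under a National Science Council-Funded Research Project

Date: July 24, 2011 (ROC year 100)

I. Overseas (Mainland China) research process: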

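During the workshop, I was grouped with two scholars from the United States for five days of collaborative project work. Throughout the five days, outstanding researchers from Carnegie Mellon University gave talks on educational technology and shared their practical experience, and the workshop also offered instruction on many innovative research directions and tools. In addition, there was small-group instruction on educational technology applications. For example, I attended the course on computer-supported collaborative learning (CSCL), in which Professor Carolyn Rose and her doctoral students provided TuTalk and SIDE, two tools for developing and analyzing CSCL platforms, and helped me use them to develop a research project. While taking part in the workshop, I brainstormed with the two overseas scholars; we completed the implementation of the project on the last day of the workshop and presented and demonstrated our joint results at the Poster Session.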

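| Item | Details |
| --- | --- |
| Project number | NSC 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) |
| Traveler's name | 簡佑達 (Yu-Ta Chien) |
| Affiliation and position | Research Assistant, Science Education Center, National Taiwan Normal University |
| Travel period | July 24, 2011 to August 1, 2011 (ROC year 100) |
| Destination | Carnegie Mellon University, Pittsburgh |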

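II. Research results:

By analyzing student online discussion data provided by Carnegie Mellon University, our group made an initial clarification of the structure of students' discussions when they explore science-related issues online. Based on this structure, we designed automated real-time feedback that, through an Intelligent Tutor mechanism, supports students' online collaborative group learning. During the workshop, with the help of doctoral students in human-computer interaction (HCI) at Carnegie Mellon University, we successfully completed a prototype of an online discussion platform with automated real-time feedback.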

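III. Suggestions:

I hope the National Science Council will continue to encourage and subsidize students and scholars to take part in workshops of this kind. Discussing and implementing a project with scholars from other countries in a non-native language is very challenging and practical research training. However, the cost of this trip is more than a student can bear, and I hope the National Science Council can provide more funding.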

IV. Other:

Report on Attendance at an International Academic Conference

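| Item | Details |
| --- | --- |
| Project number | NSC 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) (3/3) |
| Traveler's name, affiliation and position | 張俊彥 (Chun-Yen Chang), Director, Science Education Center, National Taiwan Normal University |
| Conference dates and venue | September 1-5, 2010 (99.09.01~99.09.05), Kobe, Japan |
| Conference name | 2010NEURO |
| Paper title | Association of catechol-O-methyltransferase (COMT) polymorphism and cognition, BMI, blood pressure, and uric acid in a Chinese cohort. |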

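I. Conference attendance:

The 33rd Japan Neuroscience Society (JNS) annual convention was held in Kobe, Japan, on September 2-4, 2010. The meeting consisted of invited talks and poster sessions. Notably, the invited speakers were all leading figures in neuroscience, cognitive science, and related fields, including Nobel Prize winners and several renowned scholars at the level of US National Academy members. Based on relevance to my research area, I attended talks and posters under the themes Genetics, Environment, Culture, and Behavior and Brain, Body, Behavior. My paper was presented on September 2; many scholars joined the discussion, affirmed the research approach and results, and exchanged valuable opinions.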

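II. Reflections on the conference

How learning behavior is regulated by the acquired environment and by innate genes has been one of the issues of greatest concern to cognitive psychologists and cognitive neuroscientists in recent years. I was particularly impressed by the talk "The Emotional Brain: Past, Present, Future" given by Prof. Joseph LeDoux of New York University. With the completion of human genome sequencing and the development of high-speed gene analysis technologies, humans have now been determined to have about thirty thousand genes. This wealth of genetic information not only affects how medicine and psychology develop methods of diagnosing and treating disease, but also extends to cognition and behavior. It is well known that particular environmental factors can lead to cognitive and psychological changes; in recent years many findings have indicated that particular environmental factors affect how particular genes regulate human behavior. These findings offer educators and psychologists a new dimension worth considering: what are these environmental factors, and what is their effect on students' (learning) behavior? Attending this conference offered a new direction and opportunity for my long-term research on problem solving, and it was therefore an important inspiration for my future academic research.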

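III. Suggestions

The JNS meeting has become one of the world's important neuroscience conferences, and many important studies and findings are presented there. Our university should further encourage and subsidize domestic researchers in science education and cognitive psychology, young scholars, and graduate students to attend this event, so as to exert the greatest possible international influence.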

IV. Materials brought back

1. Final Program and Abstracts of 2010 JNS Annual Meeting.

2. Papers and related materials from the sessions I attended.

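Report on Attendance at an International Academic Conference

| Item | Details |
| --- | --- |
| Project number | NSC 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) |
| Traveler's name, affiliation and position | 張俊彥 (Chun-Yen Chang), Director, Science Education Center, National Taiwan Normal University |
| Conference dates and venue | October 18-20, 2010 (99.10.18~99.10.20), Singapore |
| Conference name | AABE 2010 (The 23rd Biennial Conference of the Asia Association for Biology Education) |
| Paper title | From Gene to Education - The ECNG Research Framework: Education, Cognition, Neuroscience, and Gene |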

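I. Conference attendance: I was invited to give a keynote speech at the 23rd Biennial Conference of the Asia Association for Biology Education (AABE), hosted by Singapore. When invited, I explained that I had done relatively little research in biology education and that my work was mainly in earth science education and e-learning, but the organizer, Dr. C H Diong, very much hoped that I could attend. I therefore chose to talk about a forward-looking research topic that I had begun only in recent years, and tried to sketch a vision: how to integrate currently popular genetic research with education so that it can ultimately be used to improve future education. The earlier research behind this talk has been published in the journal Brain and Cognition, as shown below, and I added many of my own ideas in the keynote. The AABE 2010 program also included discussion sessions, paper presentations, poster exhibitions, panel discussions, and so on. During the conference, in addition to sharing biology education research with biology education scholars and experts, who came mainly from Asia, I also visited Singapore's offshore landfill. Many participants expressed great interest in the research presented in my keynote, and several scholars contacted me about follow-up collaborative research.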

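II. Reflections on the conference: The AABE biennial conference gives equal weight to research and practice. Relatively few people from Taiwan attend this conference; I suggest encouraging more scholars to take part in the biennial conference and to exert influence within this organization.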

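Report on Attendance at an International Academic Conference

| Item | Details |
| --- | --- |
| Project number | NSC 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) |
| Traveler's name, affiliation and position | 張俊彥 (Chun-Yen Chang), Director, Science Education Center, National Taiwan Normal University |
| Conference dates and venue | November 14-21, 2010 (ROC year 99), Kathmandu, Nepal |
| Conference name | Sixth Nepal Geological Congress |
| Paper title | The Development of an Online 3D Compound Virtual Field Trip System |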

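I. Conference attendance: The conference was the Sixth Nepal Geological Congress, held in Kathmandu, the capital of Nepal, which is the country's largest city and its main economic, political, educational, cultural, and commercial center. The program of the Sixth Nepal Geological Congress included keynote speeches, paper presentations, poster exhibitions, and so on. During the conference, I mainly shared research experience in geological science and education with geologists and earth science education scholars and experts from Nepal. In addition to the paper presented this time being published in the Journal of Nepal Geological Society, the follow-up research will be published in the journal Learning, Media, and Technology. The paper mainly introduces the virtual field trip system developed in this research; many participants expressed great interest in the system (see the figure below) and hoped to try it one day.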

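II. Reflections on the conference: This was my first time attending a geology conference of this kind. Many pure-science academic conferences now include some education sub-themes; I suggest that science education scholars attend such conferences more often in order to interact more with scientists.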

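Report on Attendance at an International Academic Conference

| Item | Details |
| --- | --- |
| Project number | NSC 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) |
| Traveler's name, affiliation and position | 張俊彥 (Chun-Yen Chang), Director, Science Education Center, National Taiwan Normal University |
| Conference dates and venue | 12/11-12/18, Vienna |
| Conference name | EU OSR Meeting |
| Paper title | The Searching Effectiveness of Social Tagging in Museum Websites |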

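I. Conference attendance: This trip was to attend the annual project report meeting (held twice a year) of the EU project OpenScienceResources (OSR). The project brings together the expertise of its participants, including science museums and science education centers from various countries, educational technology and information engineering experts, and user communities. Our research team was invited to join OSR to help it develop social tagging technology services and to apply social tagging technology to the digital resource platforms of science museums and science education centers in Taiwan. At this meeting, in addition to hearing the progress reports from each country, my research team gave a summary report of our current research progress. This research report will also be published, as the following notice indicates.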

Dear Author,

The final submission of your paper entitled "The Searching Effectiveness of Social Tagging in Museum Websites" has been successfully submitted (Paper ID: 2189).

Please note that we do not send proofs before publication. If no major issues are detected in final editing process, your paper will be published without any further communication. We suggest that you subscribe to FREE table of contents alerting service at journal website: http://www.ifets.info/toc_subscribe.php

Sincere regards.

Nian-Shing Chen, Kinshuk, Demetrios G Sampson

Co-Editors-in-Chief,

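II. Reflections on the conference: Through this meeting I gained a better understanding of how to cooperate with all participants in the project, and I brought back much project-related information, which will help increase the Taiwanese research team's contribution to this EU project.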

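Report on Attendance at an International Academic Conference

| Item | Details |
| --- | --- |
| Project number | NSC 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) |
| Traveler's name, affiliation and position | 張俊彥 (Chun-Yen Chang), Director, Science Education Center, National Taiwan Normal University |
| Conference dates and venue | 05/21-29, Warsaw, Poland |
| Conference name | EU Ecsite annual conference and meeting |
| Paper title | Incorporating online informal science learning resources into formal science learning environments in Taiwan |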

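I. Conference attendance: This conference was the 2011 annual conference of the European network of science centres and museums (Ecsite), held in Warsaw, the capital of Poland, which is the country's largest city and its main economic, political, educational, cultural, and commercial center. The Ecsite 2011 program included keynote speeches, discussion sessions, paper presentations, poster exhibitions, panel discussions, and so on. During the conference, in addition to discussing the main functions of science centers and museums in public education with science museum staff and science communication and education scholars and experts, who came mainly from Europe, I also visited World Cultural Heritage sites in Poland. The paper I presented has been submitted to the Australasian Journal of Educational Technology, as shown below. The paper mainly introduces how to integrate informal education resources into formal education; many participants expressed great interest in the research of this project.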

Hello Dr Chang,

Thank you very much. Receipt acknowledged. Date: 1 Aug 2011 11:47:26 +0800

Title: Exploring the feasibility of incorporating European online informal science learning resources into formal science learning environments in Taiwan

Authors: Chun-Yen Chang (National Taiwan Normal U) Johannes-Geert Hagmann (Deutsches Museum), Yu-Ta Chien, Chung-Wen Cho (National Taiwan Normal U)

Original filename: complete text with author information.docx

File reading check: Some problems with poor legibility of figures.

The worst is Figure 6, please send a new copy of it with x axis legend legible (do not send the whole MS Word file, just a replacement for Figure 6, in .jpg format or as a picture within an MS Word file - it would be best to redraw with axes transformed, i.e. 'queries' on the y axis, 'counts' on the x axis).

Please avoid copy and paste from PowerPoint files into MS Word files, as a severe loss of legibility may occur. We cannot send article containing poorly prepared figures out to reviewers.

We will place your submission into AJET's review process and in due course advise you on the outcome.

We thank you for submitting your work to AJET. Best wishes,

Roger Atkinson

Production Editor, AJET

http://www.ascilite.org.au/ajet/ajet.html

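II. Reflections on the conference: This was my first time attending a conference of this kind and my first step into "informal science education". Science centers (or museums) in Singapore and Korea sent representatives, but regrettably no one from Taiwan's science museums or centers attended; their participation should be encouraged.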

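Report on Attendance at an International Academic Conference

| Item | Details |
| --- | --- |
| Project number | 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) (3/3) |
| Traveler's name, affiliation and position | 林育慈 (Yu-Tzu Lin), Assistant Professor, Graduate Institute of Curriculum Instruction and Technology, National Chi Nan University |
| Conference dates and venue | September 22-24, 2010, Carnegie Mellon University, Pittsburgh, PA, USA |
| Conference name | The Fourth IEEE International Conference on Semantic Computing |
| Paper title | Learning-focused Structuring for Blackboard Lecture Videos |

I. Conference attendance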

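The ICSC conference has a three-day program and is an important international conference in the field of semantic computing. Its main topics include: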

- Semantics based Analysis
  - Natural language processing
  - Image and video analysis
  - Audio and speech analysis
  - Data and web mining
  - Behavior of software, services and networks
  - Security
  - Analysis of social networks
- Semantic Integration
  - Metadata and other description languages
  - Database schema integration
  - Ontology integration
  - Interoperability and service integration
  - Semantic programming languages and software engineering
  - Semantic system design and synthesis
- Applications using Semantics
  - Search engines and question answering
  - Semantic web services
  - Content-based multimedia retrieval and editing
  - Context-aware networks of sensors, devices and applications
  - Machine translation
  - Music description
  - Medicine and Biology
  - GIS systems and architecture
- Semantic Interfaces
  - Natural language interfaces
  - Multimodal interfaces

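Both of the first two days had keynote sessions, given by very prominent figures in the field, and the content was very appealing. My presentation was in the first afternoon session on the last day, from 13:45 to 15:45. The session theme was Audio, Image, Video, and Speech Analysis, and each presenter had 25 minutes for an oral presentation plus 5 minutes for Q&A. The presentation went very smoothly, and because I was the session chair, I also got to know many people from different fields at the conference.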

II. Research presentation

1. Agenda

My presentation was on the last day:

Parallel Session 8 ICSC (11): Audio, Image, Video, and Speech Analysis (2)

Room: Rangos 3

Session Chair: Yu-Tzu Lin

- On the use of visual soft semantics for video temporal decomposition to scenes. Vasileios Mezaris, Panagiotis Sidiropoulos, Anastasios Dimou, Ioannis Kompatsiaris
- MySpace Video Recommendation with Map-Reduce on Qizmt. Yohan Jin, Minqing Hu, Harbir Singh, Daniel Rule, Mikhail Berlyant, Zhuli Xie
- Learning-focused Structuring for Blackboard Lecture Videos. Yu-Tzu Lin, Hsiao-Ying Tsai, Chia-Hu Chang, Greg Lee
- Web-inspired Sentence Complexity Index. Juan-Pablo Ramirez, Alexander Raake, Ashkan Sharifi, Hamed Ketabdar
- Joint-AL: Joint Discriminative and Generative Active Learning for Cross-domain Semantic Concept Classification. Huan Li, Yuan Shi, Mingyu Chen, Alex Hauptmann, Zhang Xiong

2. Presentation slides

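III. Reflections on the conference

[...] discussion, from which I received many valuable comments that will be of great help to my future research. Besides presenting my research results on stage, I also actively attended other sessions, listened carefully to other researchers' presentations, and exchanged research ideas with them afterwards to broaden my research horizons. I also had many exchanges with the professors of the organizing institution, in order to learn about the current state of American academia.

During the conference, what impressed me most was the scholars' sharp research ideas and the angles from which they view problems. Often, after a paper presentation, the questions or suggested directions for improvement raised by experienced scholars were not only ones I had never thought of, but were also very deep and went to the core of the research. These scholars' way of asking questions and their broad research vision will be goals for my future efforts.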

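Report on Attendance at an International Academic Conference

| Item | Details |
| --- | --- |
| Project number | 99-2631-S-003-002 |
| Project title | 卓越數位學習科學研究中心 (Center for excellence in e-Learning Sciences) (3/3) |
| Traveler's name, affiliation and position | 柯佳伶, Associate Professor, Department of Computer Science and Information Engineering, National Taiwan Normal University |
| Conference dates and venue | June 28-July 1, 2011, Syracuse, N.Y. |
| Conference name | The Twenty-Fourth International Conference on Industrial, Engineering & Other Applications of Applied Intelligent Systems (IEA-AIE 2011) |
| Paper title | Informative Sentence Retrieval for Domain Specific Terminologies |

I. Conference attendance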

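The need for efficient and intelligent techniques to solve real-life problems keeps growing. The techniques of applied intelligence span many research areas, such as data mining, adaptive control, computer vision, decision support systems, machine learning, Internet technology, and planning and scheduling. Through its annual IEA-AIE conference (International Conference on Industrial, Engineering and Other Applications of Applied Intelligence Systems), the International Society of Applied Intelligence (ISAI) gives international scholars and industry in the field of applied artificial intelligence an opportunity for discussion and exchange. IEA-AIE is a long-established international conference; this year's IEA-AIE 2011 was the 24th and was held from June 27 to July 1 at Syracuse University in New York State, USA. I took a Cathay Pacific flight from Taoyuan Airport to Hong Kong at noon on June 24 and transferred in Hong Kong to New York, arriving after ten o'clock at night local time on June 24. I therefore stayed in New York for two days and on June 27 took the train from New York to Syracuse to prepare for the conference.

The conference began on June 28. Its main theme was the development and study of techniques that can solve real-life artificial intelligence application problems; besides theory and methods, the invited speakers and accepted papers also emphasized applications of intelligent systems to practical problems. The conference received 206 paper submissions. Each paper was reviewed by at least three reviewers, and only 92 were accepted for presentation. The accepted papers covered a very wide range of topics, roughly: feature extraction methods, cluster analysis, classification methods, neural networks, genetic algorithms, image processing, robotics applications, optimization methods, scheduling methods, path planning methods, game theory, and cognitive methods. Seven special sessions on specific topics were also held, covering incremental clustering and novelty detection techniques and their intelligent applications to dynamic information; intelligent document processing techniques; methods for modeling human cognitive and emotional processes; cognitive computing in intelligent interaction; applications of intelligent systems; nature-inspired optimization methods; and chemical and biological information processing methods and applications. Over the four days of the program from June 27 to July 1, a total of 26 paper presentation sessions were scheduled, with three sessions running in parallel in each time slot. In addition, three renowned scholars were each invited to give a keynote speech: Ajay K. Royyuru of the IBM Thomas J. Watson Research Center, Henry Kauts of the University of Rochester, and Richard W. Linderman of the US Air Force Research Laboratory.

My paper was scheduled for June 27, as the second presentation in the second afternoon session on the first day of the conference. The paper accepted and presented was "Informative Sentence Retrieval for Domain Specific Terminologies". For definitional questions about specialized terms, the paper builds a prototype system that automatically extracts definitional answer sentences, using e-books as the answer source. It uses information retrieval concepts to select candidate sentences from e-book content, and proposes using external knowledge sources such as Wikipedia to measure the association weights between the words in a sentence and the keywords of the queried term, as the basis on which the system scores and selects answer sentences. This approach means that answers are not limited to specific definitional sentence patterns, so more content that helps explain the term can be found. The experimental results show that the answer sentences extracted by this method, and their ranking, are highly consistent with the reference answers selected by human scoring.

After attending three and a half days of the program, I took the train to New York on July 2, boarded the return flight to Taiwan on July 3, and arrived in Taipei at around 7 p.m. on July 4.

Figure 1. Photo taken in the lobby of the IEA/AIE 2011 venue.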