Music Classification By Parsing Main Melody

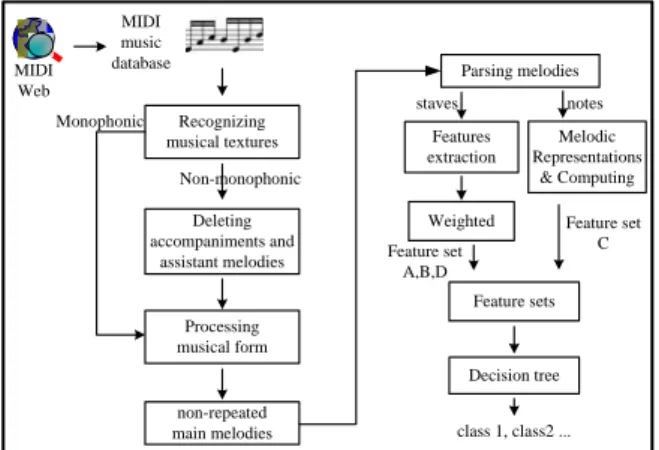

... in composing a piece, such as dynamics and staccato. We investigated how to extract the main melody of a piece of music based on music theory, and which music features are most suitable for representing a melody.

3. METHOD

In this section, we describe in detail the extraction of the non-repeated main melody and of the music features. The flow chart of the method is shown in Fig. 1. The main idea is to extract the non-repeated main melody from a piece and to discover the melodic variations in its music features. Section 3.1 explains how the non-repeated main melody is extracted from a piece. Section 3.2 illustrates how a melody is translated into a sequence of representations and which representations are suitable for data mining. Section 3.3 interprets and weights the melodic features.

Fig. 1 System architecture. (Block labels: MIDI Web; MIDI music database; parsing melodies staves; recognizing musical textures, monophonic or non-monophonic; deleting accompaniments and assistant melodies; processing musical form; non-repeated main melodies; notes; melodic representations and computing; features extraction; weighted; feature sets A, B, D; feature set C; feature sets; decision tree; class 1, class 2, ...)

3.1. Non-repeated Main Melody Extraction

A melody [9] is composed of a sequence of note pitches and durations. Composers often add extra musical textures to a piece to make the music sound more harmonious. A texture in Western music consists of melody (the horizontal dimension) and harmony (the vertical dimension). Textures can be divided into three categories:

1) Monophonic texture: a single melody without accompaniment.
2) Homophonic texture: one main melody with accompaniment.
3) Polyphonic texture: two or more melodic lines of equal complexity that sound simultaneously.

In addition, a piece contains repeated and similar phrases, which are its most memorable parts. In this paper, these repeated and similar phrases are called the main melody. Miller et al. [9] argued that the main melody is enough to represent a song. The main melody is extracted in the following stages:

Stage 1: Each score is translated into XML, which we then parse to obtain the monophonic texture. Fig. 2(a) shows that the tonic of a homophonic texture is recorded in the treble clef.

Stage 2: The musical form, such as A, AB, or ABA, must be considered when extracting a main melody. Most pieces belong to one of these forms, and only the ABA form needs processing, because according to music theory its final segment A is a repeated performance. We delete this redundancy when extracting the melody from the XML score. For example, Fig. 2(b) shows the redundant final part A that must be deleted in ABA form. The remaining part AB is the main melody we need, as shown in Fig. 2(c).

Fig. 2 An example illustrating how to extract the main melody by parsing a homophonic-texture piece: panels (a), (b), and (c).

3.2. Representations of Melody

Integrating the three points below, we can use a sequence of symbols to represent a main melody, taking into account the pitch of a note, the duration of a note, and chords.

(A) The pitch of a note can be represented as either a relative or an absolute pitch. In this paper, absolute pitch means that notes with the same chroma in different octaves are regarded as the same pitch, whereas relative pitch means that they are regarded as different pitches. We employed both representations because composers may write the same piece in different octaves, as shown in Fig. 3.

Fig. 3 The same song with pitches in different octaves.

(B) A note with duration sounds for a specific length of time, such as a whole note or a half note. Composers use note durations to enrich the rhythm. In this paper, we treat the thirty-second note as the base unit. For example, a quarter note equals eight thirty-second notes. A melody can be converted into a sequence of pitches with durations, as shown in Fig. 4.

(C) A chord [9] is a combination of two or more notes that sound simultaneously. A chord can be represented by a small set of its pitches.
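Stage 2 of Section 3.1 can be illustrated on a sequence of note symbols instead of the XML score. The sketch below is an assumption made for illustration, not the authors' parser: the function name `drop_repeated_a` and the `min_len` parameter are hypothetical, and the repeated segment A is assumed to be an exact copy of the opening segment with a non-empty B in between.

```python
def drop_repeated_a(tokens, min_len=2):
    """Remove the trailing A of an A-B-A sequence and return A followed by B.

    Assumptions (not taken from the paper): the repeat is an exact copy of
    the opening segment, and B is non-empty. Sequences without such a
    repeat (forms A and AB) are returned unchanged.
    """
    n = len(tokens)
    # Try the longest candidate A first; starting at (n - 1) // 2 keeps
    # 2 * k < n, so at least one symbol remains for B.
    for k in range((n - 1) // 2, min_len - 1, -1):
        if tokens[:k] == tokens[-k:]:
            return list(tokens[:-k])
    return list(tokens)


a = ["C3_8", "E3_8", "G3_8", "E3_8"]
b = ["F3_8", "A3_8"]

aba_result = drop_repeated_a(a + b + a)  # ABA form: trailing A is removed
ab_result = drop_repeated_a(a + b)       # AB form: nothing to remove
print(aba_result)
print(ab_result)
```

A real score rarely repeats A note for note, so an approximate comparison would probably be needed in practice; the exact match keeps the sketch short.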

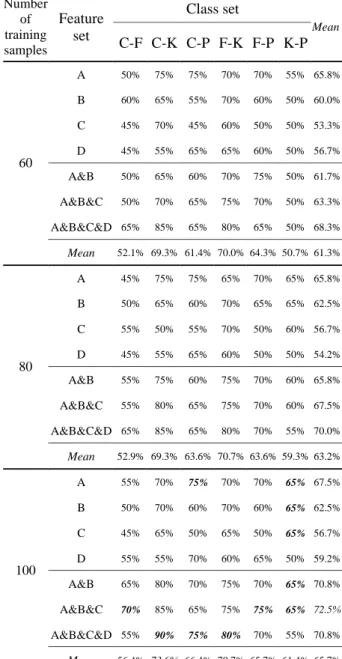
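Items (A) and (B) of Section 3.2 can be made concrete with a short sketch. It assumes that each note is already available as a (step, octave, note type) triple, which the paper does not specify; the names `DURATION_UNITS`, `note_token`, and `melody_tokens` are hypothetical. Following this paper's terminology, the relative form keeps the octave and the absolute form drops it. Chords are left out.

```python
# Durations in thirty-second-note units (a quarter note equals eight units).
DURATION_UNITS = {
    "whole": 32,
    "half": 16,
    "quarter": 8,
    "eighth": 4,
    "sixteenth": 2,
    "thirty-second": 1,
}


def note_token(step, octave, note_type, keep_octave=True):
    """Return a symbol such as 'C3_4' (octave kept) or 'C_4' (octave dropped)."""
    units = DURATION_UNITS[note_type]
    pitch = f"{step}{octave}" if keep_octave else step
    return f"{pitch}_{units}"


def melody_tokens(notes, keep_octave=True):
    """Convert (step, octave, note_type) triples into a symbol sequence."""
    return [note_token(s, o, t, keep_octave) for s, o, t in notes]


melody = [("C", 3, "eighth"), ("G", 3, "eighth"), ("E", 3, "quarter")]
relative = melody_tokens(melody)                     # octave kept
absolute = melody_tokens(melody, keep_octave=False)  # octave dropped
print(relative)
print(absolute)
```

Dropping the octave makes two transcriptions of the same tune in different octaves produce identical sequences, which is the reason the paper gives for keeping both forms.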

A chord of three or more notes is represented by its highest, average, and lowest pitches; a chord of two notes is represented by its highest and lowest pitches. In Fig. 4, the first chord, made of two eighth notes, is represented by the relative highest pitch C3 and the relative lowest pitch C4. The symbol C3_4 denotes the relative pitch C3 lasting four units, and C3_4&C4_4 denotes a chord of two notes. These representations are convenient for analyzing the pitch variations of a melody.

Relative pitch: <C3_4&C4_4, G3_4, E3_4&C4_4, G3_4, C3_4&G4_4, G3_4, E3_4&G4_4, G3_4, F2_4&A4_4, ...>
Absolute pitch: <C_4&C_4, G_4, E_4&C_4, G_4, C_4&G_4, G_4, E_4&G_4, G_4, F_4&A_4, ...>

Fig. 4 Chords are represented as a symbolic sequence.

In addition, the music features in set C concern the melodic gamut. We consider the gamut useful for interpreting a melody, so we encode all pitches of a melody as numbers from 1 to 88 according to the keys of a piano keyboard. From these numbers we compute the following features: maximum pitch, minimum pitch, average relative pitch, average absolute pitch, average positive interval, and average negative interval. Finally, we put the music features of all melodies described above (Table 1) into the feature pool for the music classifier.

3.3. Features Interpretation and Weighting

According to Miller [9], music involves characteristics beyond the notes themselves. We can therefore use these musical characteristics to analyze the variations between melodies according to music theory. Table 1 lists the thirty specific features that are useful for music classification. The features in set A concern the format of the music score and usually imply the music style the composer wants to express. Sets B and D both concern how the notes are performed, which makes the music sound more interesting; hence we adopted sets B and D to analyze the variations between melodies. To discover the melodic variations, the related music features are standardized by formula (1):

$$w_i = \frac{\sum_{i=1}^{n} x_i}{M} \qquad (1)$$

where $w_i$ denotes the time ratio of the feature in a melody, $x_i$ denotes the time duration of the feature in a melody, and $M$ denotes the total duration of the melody. For example, a feature whose durations sum to 16 units in a melody of 64 units has a weight of 0.25.

| Feature set | Terms |
|---|---|
| A | key signature, tempo, time signature |
| B | chord, slur, tie, staccato, accent, and fermata |
| C | maximum pitch, minimum pitch, average relative pitch, average absolute pitch, average positive interval, and average negative interval |
| D | appoggiatura, mordent, trill, arpeggio, turn, crescendo, diminuendo, pianississimo, pianissimo, piano, mezzo piano, mezzo forte, forte, fortissimo, and fortississimo |

Table 1 Music features used in this paper.

| Number of training samples | Feature set | C-F | C-K | C-P | F-K | F-P | K-P | Mean |
|---|---|---|---|---|---|---|---|---|
| 60 | A | 50% | 75% | 75% | 70% | 70% | 55% | 65.8% |
| 60 | B | 60% | 65% | 55% | 70% | 60% | 50% | 60.0% |
| 60 | C | 45% | 70% | 45% | 60% | 50% | 50% | 53.3% |
| 60 | D | 45% | 55% | 65% | 65% | 60% | 50% | 56.7% |
| 60 | A&B | 50% | 65% | 60% | 70% | 75% | 50% | 61.7% |
| 60 | A&B&C | 50% | 70% | 65% | 75% | 70% | 50% | 63.3% |
| 60 | A&B&C&D | 65% | 85% | 65% | 80% | 65% | 50% | 68.3% |
| 60 | Mean | 52.1% | 69.3% | 61.4% | 70.0% | 64.3% | 50.7% | 61.3% |
| 80 | A | 45% | 75% | 75% | 65% | 70% | 65% | 65.8% |
| 80 | B | 50% | 65% | 60% | 70% | 65% | 65% | 62.5% |
| 80 | C | 55% | 50% | 55% | 70% | 50% | 60% | 56.7% |
| 80 | D | 45% | 55% | 65% | 60% | 50% | 50% | 54.2% |
| 80 | A&B | 55% | 75% | 60% | 75% | 70% | 60% | 65.8% |
| 80 | A&B&C | 55% | 80% | 65% | 75% | 70% | 60% | 67.5% |
| 80 | A&B&C&D | 65% | 85% | 65% | 80% | 70% | 55% | 70.0% |
| 80 | Mean | 52.9% | 69.3% | 63.6% | 70.7% | 63.6% | 59.3% | 63.2% |
| 100 | A | 55% | 70% | 75% | 70% | 70% | 65% | 67.5% |
| 100 | B | 50% | 70% | 60% | 70% | 60% | 65% | 62.5% |
| 100 | C | 45% | 65% | 50% | 65% | 50% | 65% | 56.7% |
| 100 | D | 55% | 55% | 70% | 60% | 65% | 50% | 59.2% |
| 100 | A&B | 65% | 80% | 70% | 75% | 70% | 65% | 70.8% |
| 100 | A&B&C | 70% | 85% | 65% | 75% | 75% | 65% | 72.5% |
| 100 | A&B&C&D | 55% | 90% | 75% | 80% | 70% | 55% | 70.8% |
| 100 | Mean | 56.4% | 73.6% | 66.4% | 70.7% | 65.7% | 61.4% | 65.7% |

Table 2 Classification between categories.

4. EXPERIMENTAL EVALUATION

In this section, we evaluate the proposed approaches to main melody extraction and music feature weighting by testing four kinds of music, namely classic, folk, kid, and pop music, collected from the Finale Showcase [5]. We also examined whether the approaches remain suitable at a finer level of categorization.

For classification between sub-categories, we further divided classical music into three sub-categories, namely the baroque, classic, and contemporary periods. As the classifier, we employed a decision tree for two-way classification. All experimental datasets were split randomly into a training set (80%) and a test set (20%). In the training phase, different numbers of samples (30, 40, and 50 training samples per class) were used to build the decision tree. After training, each classifier was tested on other cases (10 per class) that were not contained in the training set.

4.1. Performance Analysis of Feature Sets

First, to investigate the effect of the music feature sets, we grouped the four categories into six pairs of two categories each for two-way classification. The feature sets are used either singly or in combination.

Table 2 shows the results, where C, F, K, and P denote the classic, folk, kid, and pop categories respectively. The results indicate that the music feature sets raise the accuracy from 45% to as much as 90%. Combined feature sets are especially worthwhile for specific pairs such as classic-kid (C-K) and folk-kid (F-K). Reviewing the folk and kid songs, we found that most folk songs have more chords and dynamics than kid songs, and that most kid songs are in C major. With 100 training samples, the average accuracy per class pair, taken over all single and combined feature sets, ranges from 56.4% to 73.6%. The best combination, A&B&C, reaches an average accuracy of 72.5%. In addition, feature set A was more accurate than any other single feature set. We therefore assume that each experiment can start from feature set A and then add other feature sets to improve the accuracy.

To show that the proposed approaches to main melody extraction and music feature weighting are reliable, we also classified the music used by Shan [13], again by two-way classification with 60 training samples. Fig. 5 shows that some of our results exceed Shan's, for example E-B, B-C, and B-J, when the combined feature sets A&B and A&B&C are used. Enya's and the Beatles' songs can be distinguished after adding feature set C because they have diverse pitches in most melodies. The Beatles' songs and the Chinese and Japanese folk songs can also be distinguished after adding feature set B, because the Beatles' songs use more chords and ties.

Fig. 5 Comparisons of the accuracy with Shan [13]. (x-axis: two-way classification pairs E-B, E-C, E-J, B-C, B-J, C-J; y-axis: accuracy, 0 to 100; series: our method and Shan's method.)

4.2. Performance Analysis of Sub-categories

Furthermore, to test whether the proposed approaches are also effective for sub-categories, we divided classical music into three periods. Table 3 shows the results, where C1, C2, and C3 denote the three sub-categories of classical music, namely the baroque, classic, and contemporary periods respectively. Suitable music feature sets raise the accuracy from 45% to as much as 80%.

| Number of training samples | Feature set | C1-C2 | C1-C3 | C2-C3 |
|---|---|---|---|---|
| 60 | A | 65% | 50% | 50% |
| 60 | A&B | 70% | 55% | 45% |
| 60 | A&B&C | 70% | 55% | 55% |
| 60 | A&B&C&D | 60% | 70% | 55% |
| 80 | A | 65% | 60% | 50% |
| 80 | A&B | 75% | 70% | 45% |
| 80 | A&B&C | 70% | 80% | 55% |
| 80 | A&B&C&D | 60% | 70% | 60% |
| 100 | A | 65% | 60% | 50% |
| 100 | A&B | 75% | 70% | 50% |
| 100 | A&B&C | 75% | 80% | 55% |
| 100 | A&B&C&D | 60% | 65% | 55% |

Table 3 Classification between sub-categories.

5. CONCLUSIONS

In this paper, we investigated the extraction of the main melody of a piece based on music theory and employed suitable feature sets to classify music. Experimental results show that the classification accuracy reached as high as 90% with the most suitable features for two-way classification.
We discovered interesting differences between music styles in features such as tempo, grace notes, slurs, chords, and staccato. The experimental results confirm that combined feature sets are important for music classification. Moreover, the most suitable features raise the classification accuracy quickly, using only 60 to 100 training samples. In future work, the approach could help retrieve what users need rapidly from a music database; a combinatorial approach [11] has been proposed to solve this problem. We may also use the proposed music features to predict users' musical preferences and to further develop music recommendation systems. In addition, we will test more categories of music in the future to extend the applications.

REFERENCES

[1] Chai, W. and Vercoe, B. Folk Music Classification Using Hidden Markov Models. In Proc. of International Conference on Artificial Intelligence, 2001.
[2] Chai, W. and Vercoe, B. Using User Models in Music Information Retrieval Systems. In Proc. of Intl. Symposium on Music Information Retrieval, 2000.
[3] Chen, H. C. and Chen, A. L. P. A Music Recommendation System Based on Music Data Grouping and User Interests. In Proc. of ACM Intl. Conference on Information and Knowledge Management, 2001.
[4] Kuo, F. F., Chiang, M. F., Shan, M. K. and Lee, S. Y. Emotion-based Music Recommendation by Association Discovery from Film Music. ACM Multimedia, pp. 507-510, 2005.
[5] Finale Showcase. http://www.finalemusic.com/showcase/
[6] Liu, C. C., Hsu, J. L. and Chen, A. L. P. Discovering Nontrivial Repeating Patterns in Music Data. IEEE Transactions on Multimedia, 3(3), 2001.
[7] McKay, C., Fiebrink, R., McEnnis, D., Li, B. and Fujinaga, I. ACE: A Framework for Optimizing Music Classification. 6th International Conference on Music Information Retrieval, 2005.
[8] MIDI Manufacturers Association. http://www.midi.org/
[9] Miller, H. M. and Williams, E. Introduction to Music, 1991.
[10] Musicnetwork. http://www.interactivemusicnetwork.org/wg_standards/index.html
[11] Pachet, F., Roy, P. and Cazaly, D. A Combinatorial Approach to Content-based Music Selection. IEEE Multimedia, 7(1): 44-51, 2000.
[12] Recordare. http://www.recordare.com/software.html
[13] Shan, M. K. and Kuo, F. F. Music Style Mining and Classification by Melody. IEICE Transactions on Information and Systems, E86-D(4), 2003.
[14] Themefinder. http://www.themefinder.org/
[15] Tseng, Y. H. Content-Based Retrieval for Music Collections. SIGIR, pp. 176-182, 1999.
[16] Uitdenbogerd, A. L. and Zobel, J. Manipulation of Music for Melody Matching. In Proc. of the ACM Multimedia, pp. 235-240, 1998.
[17] Uitdenbogerd, A. L. and Zobel, J. Melodic Matching Techniques for Large Music Databases. In Proc. of the ACM Multimedia, pp. 57-66, 1999.
[18] W3Schools. http://www.w3schools.com/
[19] Weyde, T. and Datzko, C. Efficient Melody Retrieval With Motif Contour Classes. ISMIR, pp. 686-689, 2005.


![Fig. 5 Comparisons of the accuracy with Shan [13].](https://thumb-ap.123doks.com/thumbv2/9libinfo/8916253.261488/4.892.485.784.401.771/fig-comparisons-accuracy-shan.webp)
