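A Study on Correlations between English Professional Subject of the Technological and Vocational Education Joint College Entrance Exam and Picture Writing Performance of Students from Department of Applied Foreign Languages of Vocational High Schools - NCCU Academic Hub (政大學術集成)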

A Study on Correlations between English Professional Subject of the Technological and Vocational Education Joint College Entrance Exam and Picture Writing Performance of Students from Department of Applied Foreign Languages of Vocational High Schools

A Master Thesis
Presented to
Department of English,
National Chengchi University

In Partial Fulfillment
of the Requirements for the Degree of
Master of Arts

by
Su-mei Chen
November, 2012

Acknowledgements

I am most grateful to my advisor, Dr. Hsueh-ying Yu, for her guidance and encouragement throughout the research. Many thanks also go to Dr. Yow-yu Lin for his helpful advice on the preliminary research design of the study, and to Dr. Chieh-yue Yeh and Dr. You-yu Lin, my thesis committee members, for their insightful comments on the manuscript.

My deep gratitude is extended to Barry, Shirley, and Lan-ian for their help with data collection. I am also indebted to the students who participated in the study and fully cooperated during the implementation of the research.

I also greatly appreciate Jennifer and Patty for their assistance with rating the participants’ writing samples for the study. I would like to extend my deep thanks to Su-uan and Zh-zu, who gave me encouragement to carry out the research.

Finally, my heartfelt acknowledgements go to my family. My parents’ care and, especially, my husband’s support contributed a lot to my thesis writing.

TABLE OF CONTENTS

Acknowledgements
Table of Contents
List of Tables
List of Figures
Chinese Abstract
English Abstract
Chapter One: Introduction
  Background and Motivation
  Purpose of the Study
  Research Questions
  Significance of the Study
  Definition of Key Terms
Chapter Two: Literature Review
  Theoretical Perspectives on Writing Assessment
    Constructs of Writing Ability
    Test Usefulness
  Direct and Indirect Writing Assessment
    Direct Writing Assessment
    Indirect Writing Assessment
  Uses of Indirect and Direct Writing Assessment
    Uses of Indirect Writing Assessment
    Uses of Direct Writing Assessment

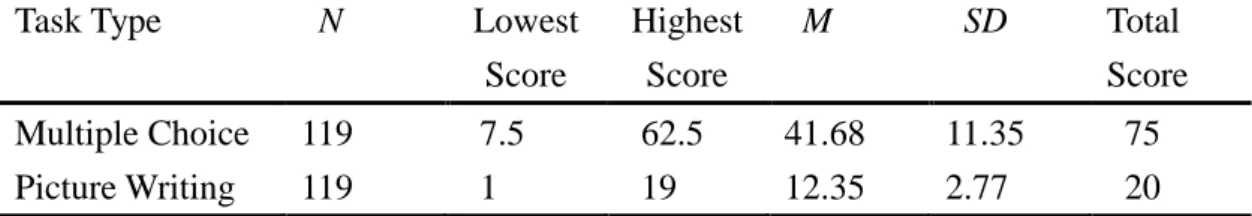

  Relationship between Direct and Indirect Writing Assessment
    Strong to Moderate Correlations between Direct and Indirect Tests of Writing
    Low Correlations between Direct and Indirect Tests of Writing
  Assessment by Combination of Direct and Indirect Writing Measures
    Combined to Assess Both Basic and Higher-order Writing Abilities
    Ways of Combining Direct and Indirect Measures
  EPS Indirect Writing Assessment of TVE Joint College Entrance Exam
    Introduction to Question Types of EPS Indirect Writing Assessment
    Opinions on Using Indirect Format to Assess Writing Ability in EPS Writing Test
Chapter Three: Method
  Participants
  Instruments
    EPS Multiple-choice Writing Test
    English Picture Writing Task
    Indirect Writing Questionnaire
    Direct Writing Questionnaire
  Procedure
  Data Analysis
Chapter Four: Results and Discussion
  Correlation between EPS Multiple-choice Writing Test and Picture Writing Task
  Correlations between Four Item Types of EPS Multiple-choice Writing Test and Picture Writing Task
  Perceptions of EPS Multiple-choice Writing Test and Picture Writing Task
    Abilities Participants Applied in Indirect and Direct Writing Tasks
    Difficulties Participants Faced in Indirect and Direct Writing Tasks

    Perceptions of Two Types of Writing Tasks
      Indirect Writing Task
      Direct Writing Task
      Comparison between Indirect and Direct Writing Tasks
    Perceptions of EPS Multiple-choice Writing Test of TVE Joint College Entrance Exam
Chapter Five: Conclusion
  Summary of Major Findings
  Implications
    Encouraging Extensive Reading
    Performing Actual Writing
    Emphasizing Writing Process
  Limitations
  Suggestions for Future Research
References
Appendixes

LIST OF TABLES

Table 2.1 Taxonomy of Language Knowledge of Writing
Table 2.2 Listing of 20 Writing Characteristics
Table 3.1 Demography of Participants
Table 3.2 English Learning Experience of Participants
Table 3.3 English Writing Learning Experience of Participants
Table 3.4 Acquired GEPT Certificates of Participants
Table 4.1 Descriptive Statistics of EPS Multiple-choice Writing Test and Picture Writing Task
Table 4.2 Descriptive Statistics of Four Item Types of EPS Multiple-choice Writing Test
Table 4.3 Correlations between Subtests of EPS Multiple-choice Writing Test and Picture Writing Task
Table 4.4 Rank Order of Abilities Participants Used to Take EPS Multiple-choice Writing Test
Table 4.5 Rank Order of Abilities Participants Used to Take Picture Writing Task
Table 4.6 Difficulty Levels for Subtests of EPS Multiple-choice Writing Test
Table 4.7 Rank Order of Difficulties Participants Encountered to Take EPS Multiple-choice Writing Test
Table 4.8 Rank Order of Difficulties Participants Encountered to Take Picture Writing Task
Table 4.9 Recommended Item Types for Indirect Writing Test
Table 4.10 Recommended Item Types for Direct Writing Test

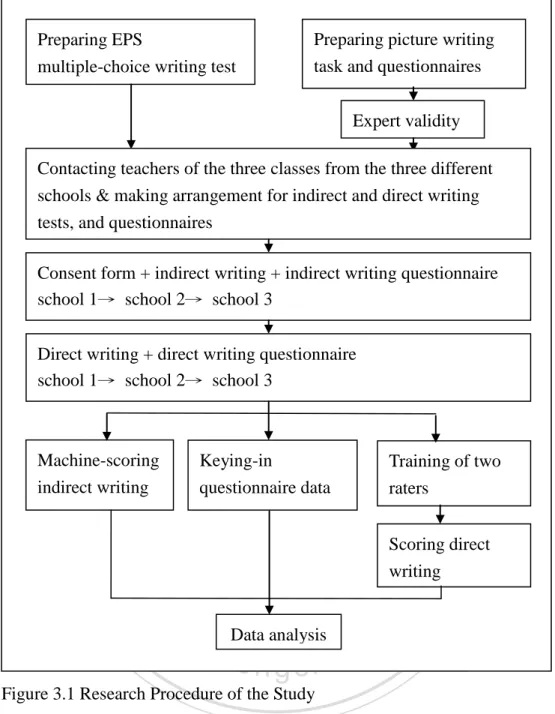

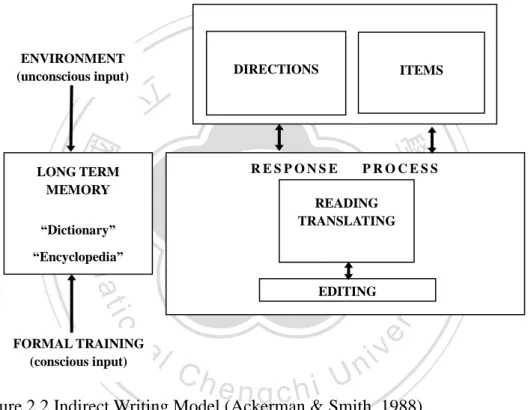

LIST OF FIGURES

Figure 2.1 Direct Writing Model
Figure 2.2 Indirect Writing Model
Figure 3.1 Research Procedure of the Study

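National Chengchi University, Department of English, In-service Master’s Program
Master’s Thesis Abstract

Thesis title: A Study on Correlations between English Professional Subject of the Technological and Vocational Education Joint College Entrance Exam and Picture Writing Performance of Students from Department of Applied Foreign Languages of Vocational High Schools
Advisor: Dr. Hsueh-ying Yu
Graduate student: Su-mei Chen

Abstract:

Direct tests are usually used to assess students’ writing ability. However, the English Professional Subject of the Technological and Vocational Education Joint College Entrance Exam uses an indirect test to assess the English writing ability of students from the Department of Applied Foreign Languages. This study aims to examine the effectiveness of the professional subject test and to explore how the current testing method can be improved.

To achieve this purpose, 119 third-year students from the Department of Applied Foreign Languages took part in the study. The indirect test items were taken from the English Professional Subject of the entrance exam, and a picture writing task served as the direct test, so that the correlation between professional subject scores and direct writing scores could be examined. In addition, questionnaires were used to survey the students’ views on the direct and indirect writing tests.

The results show a moderate correlation between the professional subject test and picture writing, indicating that the professional subject test can, to some extent, reflect test-takers’ direct writing ability. Among the four item types, paragraph composition and selection of the sentence that is semantically incoherent with the paragraph correlated moderately with picture writing; these two item types are therefore better indicators of test-takers’ direct writing ability.

However, the questionnaire results show that test-takers used knowledge of discourse structure to complete the indirect test but did not apply the same concept in the direct test. This may be because traditional writing instruction focuses on grammar analysis and vocabulary teaching. Therefore, the English Professional Subject of the entrance exam should administer both direct and indirect tests, in the hope of producing a positive washback effect on English writing instruction.

Keywords: direct test, indirect test, Technological and Vocational Education Joint College Entrance Exam, professional subject, picture writing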

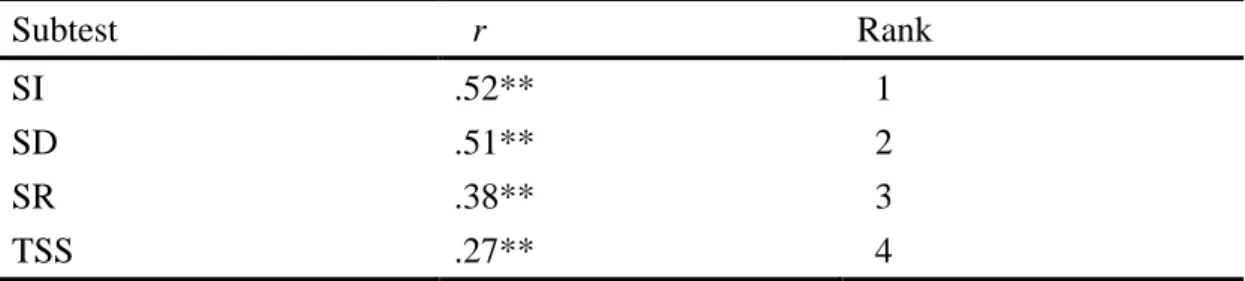

Abstract

Direct writing assessment is usually employed to evaluate students’ writing proficiency. However, the Technological and Vocational Education (TVE) Joint College Entrance Exam adopts indirect writing assessment to assess students from the Department of Applied Foreign Languages (DAFL) in English Professional Subject (EPS). The purpose of the present paper is to examine the effectiveness of the EPS indirect writing test and how the current practice can be improved.

To serve this purpose, a total of 119 third-year DAFL students participated in the study. The researcher used an indirect writing assessment, the EPS indirect writing test, and a direct writing assessment, a picture writing task, as the testing instruments to examine the correlation between the two writing measures. Moreover, questionnaires were used to investigate the participants’ perceptions of the two writing tasks.

Results indicated that the EPS multiple-choice writing test and the picture writing task exhibited a moderate correlation, suggesting the indirect test could, at least in part, serve as a good indication of the students’ writing competence in direct writing. Results also showed that sentence insertion (SI) and sentence deletion (SD), among the four subtests, moderately correlated with the direct writing task. The two subtests could thus be depended on as a better indication of the participants’ direct writing proficiency.

Questionnaire findings, however, showed that the students applied discourse-level knowledge in the indirect test but did not apply the same concept to the direct task, probably because the traditional teaching approach to English writing focuses on grammar analysis and vocabulary teaching. Therefore, the two writing tasks should be combined in the entrance exam to produce a positive washback effect on writing instruction.

Key Words: direct writing assessment, indirect writing assessment, TVE Joint College Entrance Exam, English Professional Subject, picture writing

CHAPTER ONE
INTRODUCTION

Background and Motivation

Writing has long been regarded as a key indicator of language learners’ English proficiency level. Therefore, large-scale assessments include measures to examine test-takers’ writing ability. For example, the Test of English as a Foreign Language (TOEFL) and the International English Language Testing System (IELTS), two world-renowned college admission tests, require examinees to demonstrate their writing ability. In Taiwan, the General English Proficiency Test (GEPT), the prevalent island-wide English proficiency examination, contains writing tests from beginning to advanced levels. It is thus evident that writing is a vital language ability that cannot be ignored.

Since writing skill is essential to language learning, the assessment of written language skills is also becoming increasingly important. Two distinctly different approaches to writing skill assessment, direct and indirect measurement, have evolved from the long history of testing writing. Both kinds of assessment have proved to be successful in the writing assessment literature, but both have their own advantages and disadvantages (Breland & Gaynor, 1979; Cooper, 1984; Stiggins, 1981; Teng, 2002).

Direct and indirect measures of writing ability are both employed in the entrance examinations in Taiwan to test students’ writing proficiency. Both of the major college entrance exams, Scholastic Aptitude Test (SAT) and Department Required English Test (DRET), draw on a direct writing component to evaluate students’ writing ability. In SAT, examinees are often asked to describe a series of pictures; in DRET, guided essay writing is required. On the other hand, the Technological and Vocational Education (TVE) Joint College Entrance Examination relies solely on indirect writing assessment when assessing students from the Department of Applied Foreign Languages (DAFL) in English Professional Subject (EPS). The exam measures students’ writing proficiency through five multiple-choice subtests: topic sentence selection (TSS), sentence insertion (SI), sentence deletion (SD), sentence rearrangement (SR) and cloze. The examinees only need to read the stem and pick the best answer from the options.

Although direct testing has become the trend in writing assessment, EPS uses indirect testing of writing skill due to issues of practicability. According to Teng (2002) and You, Chang, Joe and Chi (2002), direct writing testing was adopted from the 1998 to the 2000 academic year. Free writing or guided writing was usually used to examine the students’ writing proficiency. Nevertheless, from the 2001 academic year, with the implementation of the Examination and Enrollment Separation Program (EESP), the TVE Joint College Entrance Exam moved the administration of the EPS writing test from summer vacation to early May. Taking the number of examinees (about 3,000 to 4,000 each year) into consideration, the entrance exam adopted indirect writing measurement because it was impracticable to assemble enough professors to grade students’ writing in the middle of the semester. The EPS writing test thus used multiple-choice items to assess students’ writing proficiency by testing the subsets of skills that constitute the components of writing competence.

Due to the present constraint of the TVE Joint College Entrance Exam, the EPS multiple-choice writing test has been implemented for more than 10 years. Nevertheless, few studies have investigated the efficacy of the indirect test over the years. Among the few studies concerning the EPS writing test, some have evaluated the appropriateness of the question types and items (P. Lin, 2005; You et al., 2002). Others have discussed the suitability of the indirect format (P. Lin, 2005; National Teachers’ Association [NTA], 2011; Teng, 2002; TVE Joint College Admissions [TVEJCA], 2011; You et al., 2002). Nonetheless, little research has examined the correlation between students’ performance in the EPS indirect test and their results in a direct writing task.

In addition, researchers have not yet reached a conclusion on whether the question types are suitable or too difficult for the students (P. Lin, 2005; You et al., 2002). It is thus important to inspect which of the four subtests has a higher correlation with the students’ writing performances in direct writing.

Although many studies have indicated that indirect measures of writing ability could not provide full information about examinees’ writing competence profiles (Ackerman & Smith, 1988; Breland & Jones, 1982; Carlson et al., 1985; Chang, 2003; Cooper, 1984; Quellmalz et al., 1982; Stephenson & Giacoboni, 1988; Stiggins, 1981), some researchers and composition instructors have claimed that the EPS indirect writing test could assess DAFL students’ basic writing ability without requiring them to produce writing (P. Lin, 2005; You et al., 2002). Hence, it is also important to examine the students’ perceptions of the EPS writing test and of direct writing respectively.

Purpose of the Study

The purpose of the thesis is to examine the correlations between the performances of DAFL student writers in the EPS objective writing test and in direct English writing. The correlations between the scores on the four subtests of EPS and direct writing are investigated to see whether there are differences between the subtests. Besides, the study also discusses examinees’ perceptions of the EPS multiple-choice writing test and the direct writing task. In this regard, the present study aims to examine the skills the students applied and the difficulties they faced while taking the two writing tasks. The students’ opinions on the two types of writing tasks and on the EPS writing test of the TVE Joint College Entrance Exam are also investigated. Through the inquiry into the relationship between the indirect writing test of EPS and direct writing, and the discussion of the students’ perceptions of the two writing tasks, we may better understand whether the EPS multiple-choice test can be depended on as a good indication of DAFL student writers’ writing proficiency.

Research Questions

In order to achieve the purposes of the current study, the following questions are addressed:

1. How do the scores of the EPS multiple-choice writing test of the TVE Joint College Entrance Exam correlate with direct writing performance for DAFL students of vocational high schools?
2. Which of the subtests in the EPS multiple-choice writing test of the TVE Joint College Entrance Exam is more closely related to direct writing for DAFL students of vocational high schools?
3. What are the examinees’ perceptions of the EPS multiple-choice writing test of the TVE Joint College Entrance Exam and of the direct writing task?

Significance of the Study

The present study is intended to investigate the correlations between the scores obtained from the EPS multiple-choice writing test and the direct writing task. The utility of the four subtests of the EPS writing test in assessing writing ability is also examined. Furthermore, the present study aims to discuss the test-takers’ perceptions of the direct and indirect writing tasks. The results of the current study may thus provide an empirical basis for judging the appropriateness of the present format of writing measurement.

The designers of the EPS test items as well as DAFL teachers may benefit from the correlational results. For the designers of the EPS test questions, the results may help them judge which item types better reflect DAFL students’ writing competence and determine whether the objective test can be counted on as a valid writing test. DAFL teachers, for their part, could gain a clearer insight into the nature of the indirect measure of writing skill. Once they understand the features of the writing test, they may know what to focus on while teaching students how to cope with the entrance exam and how to write better compositions.

Definition of Key Terms

Key terms used in the present study are defined as follows:

1. Direct Writing Assessment: Direct writing assessment requires examinees to do actual writing in reply to a given prompt. Examinees’ written texts are read and scored by two or more raters according to a pre-set yardstick (Breland & Gaynor, 1979; Stiggins, 1981).
2. Indirect Writing Assessment: Indirect writing assessment requires examinees to respond to multiple-choice items instead of performing actual writing (Breland & Gaynor, 1979; Cooper, 1984).
3. Picture Writing: Picture writing usually contains a series of one to four pictures. Examinees are required to write a passage in response to the pictorial stimulus. The present study uses a three-frame picture sequence as the picture writing test to evaluate DAFL students’ proficiency in direct writing.
4. The EPS Multiple-choice Writing Test of the TVE Joint College Entrance Exam: The writing test takes the form of multiple-choice items to assess DAFL students’ proficiency in writing. The test consists of 40 items, divided into five subtests: TSS, SI, SD, SR and cloze. In the present study, the first four item types (30 items) are used as the testing instrument.
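As a rough illustration of the correlational analysis outlined in this chapter, the sketch below computes correlations between indirect-test scores (total and per subtest) and picture writing scores. It is a minimal sketch only: it assumes the Pearson product-moment coefficient, which this chapter does not specify, and all scores, the six-student sample, and the names `pearson_r`, `subtest_scores`, and `picture_writing` are hypothetical and are not taken from the study.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squares around the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one score list is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for six students (illustration only, not data from the study).
subtest_scores = {
    "TSS": [6, 5, 7, 4, 8, 6],
    "SI": [5, 4, 7, 3, 8, 6],
    "SD": [6, 4, 8, 3, 7, 5],
    "SR": [4, 6, 5, 5, 7, 4],
}
picture_writing = [12, 9, 15, 7, 16, 11]

# Total indirect score per student = sum of that student's four subtest scores.
indirect_total = [sum(scores) for scores in zip(*subtest_scores.values())]

print("total vs. picture writing:", round(pearson_r(indirect_total, picture_writing), 2))
for name, scores in subtest_scores.items():
    print(name, "vs. picture writing:", round(pearson_r(scores, picture_writing), 2))
```

Comparing the per-subtest coefficients side by side mirrors the second research question, which asks which subtest is more closely related to direct writing; with real data, a significance test and a larger sample would be needed before drawing any conclusion.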

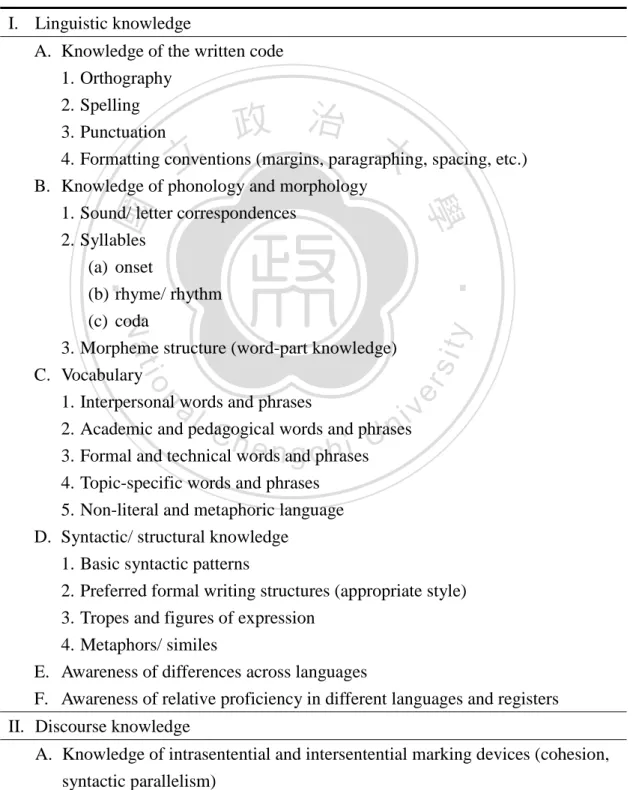

(18) CHAPTER TWO LITERATURE REVIEW This chapter is divided into six sections in an attempt to review the literature relevant to the present study. First, theoretical perspectives on writing assessment are provided. Second, an overview of direct and indirect writing assessment is introduced. Third, uses of direct and indirect writing assessment are presented. Fourth, relationship between direct and indirect writing assessment is discussed. Fifth, assessment by combination of direct and indirect tests of writing is suggested. The last. 政 治 大 English Professional Subject (EPS) of the Technological and Vocational Education 立. section of this chapter reviews the studies on the multiple-choice writing test of the. (TVE) Joint College Entrance Exam.. ‧ 國. 學. Theoretical Perspectives on Writing Assessment. ‧. Writing has long been considered a vital indication of learners’ language ability.. sit. y. Nat. As writing skill plays a crucial role in language learning, the assessment of writing. io. er. also becomes increasingly important. In assessing writing, it is essential to consider the different aspects of writing ability and writing test. Therefore, the constructs of. al. n. v i n C hin this section. Next, writing ability is first discussed test usefulness in terms of six engchi U qualities is presented.. Constructs of Writing Ability Before assessing the skill, we need to make sense of what constitutes writing ability because specifying the components of language knowledge involved in writing is fundamental to designing a writing test (Weigle, 2002). Grabe and Kaplan (1996), using the studies of Hymes (1972), Canale and Swain (1980), and Bachman (1990) as a basis, itemized three types of writing ability. As shown in Table 2.1, the three types of language knowledge of writing include linguistic knowledge, discourse knowledge and sociolinguistic knowledge. Linguistic knowledge is composed of basic 6.

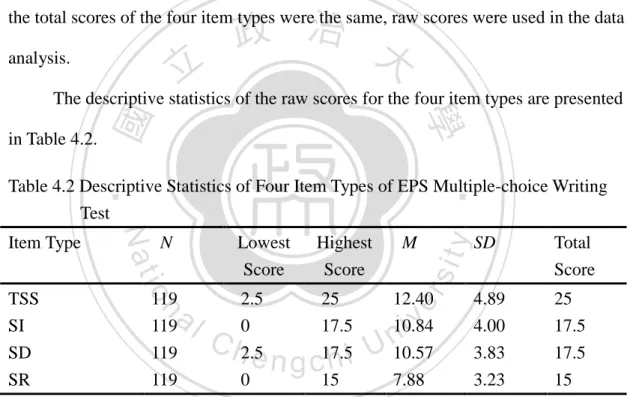

(19) components of language, that is, knowledge of phonology, morphology, vocabulary and syntactic structure. Discourse knowledge consists of knowledge of how to connect sentences to form cohesive and coherent texts. With sociolinguistic knowledge, it signifies knowledge of how to use language appropriately in various social contexts or settings. Table 2.1 Taxonomy of Language Knowledge of Writing (Grabe and Kaplan, 1996) I. Linguistic knowledge A. Knowledge of the written code 1. Orthography 2. Spelling 3. Punctuation 4. Formatting conventions (margins, paragraphing, spacing, etc.) B. Knowledge of phonology and morphology 1. Sound/ letter correspondences 2. Syllables (a) onset (b) rhyme/ rhythm. 立. 政 治 大. n. sit. er. io. al. y. ‧. ‧ 國. 學. Nat. (c) coda 3. Morpheme structure (word-part knowledge) C. Vocabulary 1. Interpersonal words and phrases 2. Academic and pedagogical words and phrases 3. Formal and technical words and phrases 4. Topic-specific words and phrases 5. Non-literal and metaphoric language D. Syntactic/ structural knowledge 1. Basic syntactic patterns. Ch. engchi. i n U. v. 2. Preferred formal writing structures (appropriate style) 3. Tropes and figures of expression 4. Metaphors/ similes E. Awareness of differences across languages F. Awareness of relative proficiency in different languages and registers II. Discourse knowledge A. Knowledge of intrasentential and intersentential marking devices (cohesion, syntactic parallelism) 7.

(20) B. Knowledge on informational structuring (topic/ comment, given/ new, C. D. E. F. G. H. I.. theme/ rheme, adjacency pairs) Knowledge of semantic relations across clauses Knowledge to recognize main topics Knowledge of genre structure and genre constraints Knowledge of organizing schemes (top-level discourse structure) Knowledge of inferencing (bridging, elaborating) Awareness of differences in features of discourse structuring across languages and cultures Awareness of different proficiency levels of discourse skills in different languages. III. Sociolinguistic knowledge. 政 治 大. ‧. ‧ 國. 立. 學. A. Functional uses of written language 1. Apologize 2. Deny 3. Complain 4. Threaten 5. Invite 6. Agree 7. Congratulate. n. al. er. io. sit. y. Nat. 8. Request 9. Direct 10. Compliment B. Application and interpretable violation of Gricean maxims C. Register and situational parameters 1. Age of writer 2. Language used by writer (L1, L2, …) 3. Proficiency in language used 4. Audience considerations 5. Relative status of interactants (power/ politeness). Ch. engchi. i n U. v. 6. Degree of formality (deference/ solidarity) 7. Degree of distance (detachment/ involvement) 8. Topic of interaction 9. Means of writing (pen/ pencil, computer, dictation, shorthand) 10. Means of transmission (single page/ book/ read aloud/ printed) D. Awareness of sociolinguistic differences across languages and cultures E. Self-awareness of roles of register and situational parameters The detailed list of constructs of writing ability, as Weigle (2002) points out, 8.

(21) provides a framework for designing writing tests. The taxonomy, giving an outline of the assorted aspects of writing proficiency, may be of help while developing writing tasks for assessment. For that reason, the present study applies the taxonomy of writing constructs to the design of direct and indirect writing questionnaires to investigate the writing skill constructs examinees utilize while doing the direct and indirect writing tasks respectively. Nevertheless, only the first two kinds of writing constructs—linguistic and discourse knowledge—would be examined because of the nature of the writing tasks.. 政 治 大 After elaborating the constructs of writing ability, in the development of a 立. Test Usefulness. writing test, we should also look into its quality. Bachman and Palmer (1996). ‧ 國. 學. observed that “the most important quality of a test is its usefulness” (p.17). They. sit. y. Nat. authenticity, interactiveness, impact, and practicality.. ‧. described test usefulness as having six qualities: reliability, construct validity,. io. er. According to the researchers, although all of the six qualities of test usefulness are important, it is practically impossible to augment each quality. The six qualities. al. n. v i n would be laid different stressCfor different assessment h e n g c h i U situations. Test developers,. imposed the restriction of their particular assessment contexts, would try to strike a balance between the six qualities and to maximize overall usefulness in developing writing tests. For the present study, validity and reliability are the two qualities that receive the greatest attention. The following sections concerning the usefulness of direct and indirect writing assessment will center primarily on these two qualities. Direct and Indirect Writing Assessment In the long history of the assessment of writing ability, two apparently different measures have evolved: direct and indirect writing measurement. In order to have a 9.

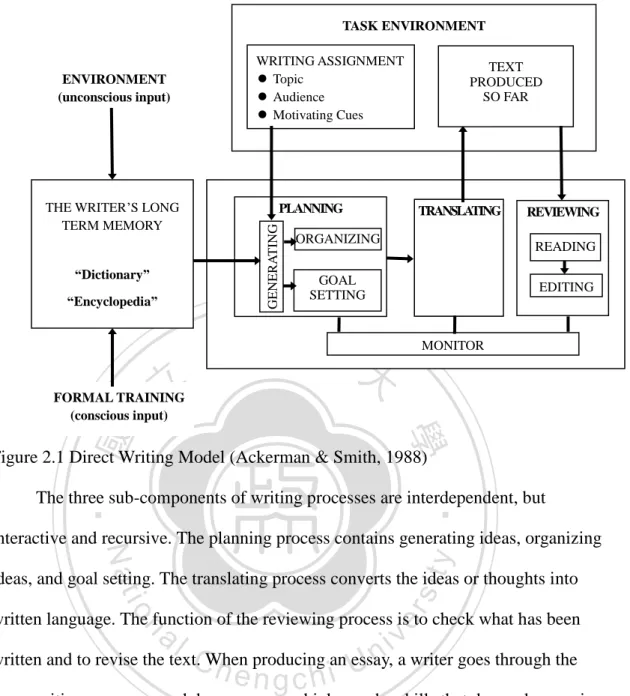

(22) better understanding of the nature of the writing measures, a brief introduction to the two writing assessments is given. A framework of direct and indirect writing models is discussed following each introduction. The present study then applies the two writing models to direct and indirect writing questionnaires to inspect the difficulties examinees might encounter while undertaking the two writing measures.

Direct Writing Assessment

Direct writing assessment requires test takers to do actual writing in response to a given prompt. Each text written by the examinees is read and scored independently by two or more raters based on a pre-set standard (Breland & Gaynor, 1979; Stiggins, 1981). Item types for direct measures of writing ability consist of blank-filling, sentence completion/combination, short answer, and guided writing (including writing with offered words and phrases, topic sentence writing, picture writing, question-answering, and situation-based writing). The method is thus thought of as a production measure, tending to assess the whole writing ability integratively rather than specific constructs in an isolated way (Cooper, 1984; Stiggins, 1981).

The construct skills measured by this approach can be expounded by Ackerman and Smith’s (1988) comprehensive conceptual model of direct writing behaviors. Building on the Hayes-Flower (1980) writing model, Ackerman and Smith illustrated the process involved in creating written texts and explored the tasks required of direct writing assessment. As shown in Figure 2.1, the writing process involves the task environment, the writer’s long-term memory, and cognitive writing processes. The task environment refers to the writing assignment and the text produced so far. The writer’s long-term memory is composed of a dictionary and an encyclopedia. The final element, the cognitive writing processes, consists of three sub-components: planning, translating, and reviewing.

(23)
[Figure 2.1 Direct Writing Model (Ackerman & Smith, 1988). Labels in the diagram: Task Environment (Writing Assignment: Topic, Audience, Motivating Cues; Text Produced So Far); The Writer’s Long-Term Memory (“Dictionary”, “Encyclopedia”); Environment (unconscious input); Formal Training (conscious input); Planning (Generating, Organizing, Goal Setting); Translating; Reviewing (Reading, Editing); Monitor.]

The three sub-components of the writing processes are interdependent, interactive, and recursive. The planning process contains generating ideas, organizing ideas, and goal setting. The translating process converts the ideas or thoughts into written language. The function of the reviewing process is to check what has been written and to revise the text. When producing an essay, a writer goes through the three writing processes and draws on some higher-order skills that demand reasoning (Ward et al., 1980). The results of direct writing measures can thus offer sufficient information to judge examinees’ real writing proficiency (Stiggins, 1981).

Indirect Writing Assessment

Indirect assessment, also called objective assessment, is regarded as a recognition measure because it requires test takers to respond to multiple-choice items instead of performing actual writing (Breland & Gaynor, 1979; Cooper, 1984). The objective test covers components of what we refer to as the constructs of writing ability: punctuation, vocabulary, grammar, sentence construction, and organization

(24) (Grabe & Kaplan, 1996; Stiggins, 1981; Weir, 2005). These constructs are explicit to the extent that the responses are either right or wrong (Stiggins, 1981).

The construct skills and process associated with multiple-choice writing tests can be clarified by the conceptual writing model of indirect writing behaviors (Ackerman & Smith, 1988). Although similar to the direct writing model, the objective test model consists of only one primary component: the reviewing process (see Figure 2.2).

[Figure 2.2 Indirect Writing Model (Ackerman & Smith, 1988). Labels in the diagram: Environment (unconscious input); Formal Training (conscious input); Long-Term Memory (“Dictionary”, “Encyclopedia”); Directions; Items; Process (Reading, Translating, Editing); Response.]

The model clearly points out the cognitive process involved in the indirect testing procedure. In response to a multiple-choice item, test takers read and encode the content of the stem and alternatives into their working memory. Then, they edit the stem by matching it against the prior linguistic knowledge in memory and select a proper letter or number for the correct answer.

Uses of Indirect and Direct Writing Assessment

Past research on direct and indirect measurement of writing skills has argued fiercely in favor of each, since the two approaches have their own

(25) advantages and disadvantages (Breland & Gaynor, 1979; Cooper, 1984; Stiggins, 1981; Teng, 2002). In the literature, proponents of both measures have expressed their concern over validity and reliability when suggesting the use of the two approaches.

Uses of Indirect Writing Assessment

During the 1950s and 1960s, owing to the prevalence of discrete-point testing and psychometric-structuralist theory, reliability was prioritized over other testing concerns (Grabe & Kaplan, 1996; Hamp-Lyons, 1991). Indirect testing of writing skills was thus advocated because of its high reliability. Moreover, the resulting data of indirect writing could be scored efficiently with relatively low scoring cost (Breland & Gaynor, 1979; Cooper, 1984; Grabe & Kaplan, 1996; Shohamy et al., 1992; Stiggins, 1981; White, 1995). Besides high reliability, objective measures of compositional ability also have predictive validity. If carefully developed, the results of the assessment could predict success in examinees’ future academic writing (Breland & Gaynor, 1979; Grabe & Kaplan, 1996; Greenberg, 1986).

Despite its high scoring reliability, indirect writing assessment has gradually come under attack for its lack of content and construct validity. Researchers have stated that the scores of the discrete-point items could not represent how good examinees really are in actual writing (Grabe & Kaplan, 1996; Weir, 2005). Since the objective measurement relies heavily on test takers’ reading or editing skills rather than real writing skills (Ackerman & Smith, 1988; Cooper, 1984; Shohamy et al., 1992; Stiggins, 1981), a harmful washback effect on English learning and on instruction could occur (Hughes, 2003; Weir, 2005). Under the influence of the indirect approach for assessing writing ability, writing instruction may just involve practice of multiple-choice items instead of practice in actual writing. Thus, the approach may demonstrate to the students that writing is not important (Breland & Gaynor, 1979; Cooper, 1984; White, 1995).

(26) Uses of Direct Writing Assessment

Beginning in the 1970s, indirect writing assessment began to fall out of favor due to increasing emphasis on communication ability and on validity (Grabe & Kaplan, 1996; Hamp-Lyons, 1991). Direct measures of writing skills were gradually favored over multiple-choice testing at that time. Because the approach requires an active response to a prompt, it has high construct validity. Moreover, direct writing tests can reflect the actual tasks examinees might encounter in real-world writing circumstances (Breland & Gaynor, 1979; Shohamy et al., 1992; Stiggins, 1981; Weir, 2005; White, 1995). They are thus considered to be able to evaluate and reflect test takers’ real writing proficiency. Furthermore, since practice for direct writing tests means practice of writing skills, the writing method can result in a beneficial washback effect on learning and on teaching (Hughes, 2003; Shohamy et al., 1992; White, 1995). Accordingly, it conveys a message to the teachers that writing skills are highly valuable (Breland & Jones, 1982).

However, direct writing assessment still has its drawbacks concerning validity and reliability. The validity of direct measures of writing ability is threatened because sometimes the writing samples could not fully represent test takers’ actual writing proficiency (Hughes, 2003) and because the assessment could not always successfully require examinees to demonstrate specific skills in the tasks (Cooper, 1984; Stiggins, 1981). In the case of reliability, direct writing assessment was mainly attacked for its comparatively low scoring reliability and high scoring cost (Ackerman & Smith, 1988; Breland & Gaynor, 1979; Cooper, 1984; Stiggins, 1981).

Nonetheless, the scoring reliability has improved over the years. Lumley (2002) maintained that by combining three factors (rater training, clearer descriptions of scoring standards, and writing tasks), direct writing could reach a higher reliability coefficient. Hamp-Lyons (2003) also asserted that inter-rater

(27) reliability above .75 could be gained when calculating the degree of agreement between two or more scorers on the rating awarded to a given writing sample. Due to its improved scoring reliability and high construct validity, direct testing of writing skills has gradually gained popularity (Grabe & Kaplan, 1996; Hughes, 2003).

Relationship between Direct and Indirect Writing Assessment

To resolve the debate on the uses of the two writing assessments, numerous researchers have conducted empirical studies to delve deeply into the relationship between direct and indirect writing assessment by examining the correlations between the scores obtained from the two measures (Benton & Kiewra, 1986; Breland & Gaynor, 1979; Chang, 2003; Godshalk et al., 1966; Hogan & Mishler, 1980; Moss et al., 1982; Ward et al., 1980). Some researchers have reported strong to moderate correlations, while others have produced results that show low correlations.

Strong to Moderate Correlations between Direct and Indirect Tests of Writing

A relatively strong to moderate relationship between the two measures of writing ability is found at different educational levels. For example, investigating the direct and indirect performances among college freshmen, Breland and Gaynor (1979) found substantial correlations between the scores of the two approaches. They administered three 20-minute argumentative essays and the Test of Standard Written English (TSWE), three 30-minute, 50-item multiple-choice tests, on three different occasions. The scores of the three essays, read holistically by two raters, correlated .63 (n = 819), .56 (n = 926), and .58 (n = 517) with the corresponding TSWE scores. The correlation between the sum of the three direct assessments and the sum of the three indirect assessments was .76 (n = 234). Therefore, they concluded that the two writing measures tended to assess similar skills.

In one of the seminal writing assessment studies, Godshalk, Swineford, and

(28) Coffman (1966) reported significant correlations from .458 to .707 between the College Board English Composition Test (six objective tests and two interlinear exercises) and five free-writing exercises among 646 secondary school students, enrolled almost evenly from Grades 11 and 12. The six multiple-choice subtests consisted of paragraph organization, usage, sentence correction, prose groups, error recognition, and construction shift. Usage (r = .707) and sentence correction (r = .705) showed the highest correlations with the essay total score, while the lowest correlation coefficient was obtained for paragraph organization (r = .458).

Hogan and Mishler (1980) also reported similar levels of correlations between direct and indirect tests of writing skills for students at elementary and junior high school levels. A 20-minute picture writing task and the Metropolitan Achievement Test: Language Instructional Tests (MAT-LIT) were administered to roughly 140 students in Grade 3 and 160 students in Grade 8. The correlations between the MAT-LIT and the picture writing, scored by two independent raters with a third reader introduced to settle discrepancies, were .68 and .65 for Grades 3 and 8 respectively.

Low Correlations between Direct and Indirect Tests of Writing

However, other research has shown a low relationship between the results of direct and indirect measures of writing ability using students at various academic levels. For instance, Moss, Cole, and Khampalikit (1982) compared scores of direct and indirect writing assessment for students at Grades 4, 7, and 10 and attained lower correlations at lower grade levels. The 40-item objective test was taken from the Language Test of the 3Rs Achievement Test, whereas the two essay tasks were chosen from the National Assessment of Educational Progress (NAEP). Comparisons of the two writing measures for the Grade 10 students yielded moderate correlations, which were quite similar to those of other studies using students at college levels. However, the correlational results of Grades 4 and 7 were generally lower than those of Grade 10.
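The coefficients compared in this section can be illustrated with a small computation. The following is a minimal sketch, assuming the reported values are Pearson product-moment correlations (not every study reviewed states this explicitly); the function name `pearson_r` and the six pairs of scores are hypothetical and invented purely for illustration.

```python
import math

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical scores for six examinees (invented, not taken from any study).
indirect_scores = [32, 25, 38, 18, 29, 35]  # multiple-choice test, out of 40
essay_scores = [4, 3, 5, 2, 4, 4]           # holistic essay rating, 1 to 6

r = pearson_r(indirect_scores, essay_scores)
print(f"r = {r:.2f}, shared variance r^2 = {r * r:.2f}")
```

Squaring the coefficient gives the proportion of variance the two measures share, which is one way to read the difference between a correlation near .7 and one near .2.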

(29) Moss et al. thus pointed out that the scores obtained from the multiple-choice tests were not quite indicative of the participants’ corresponding scores on direct measurement of writing competency, especially for students at elementary school levels.

Comparing 172 college students’ ability to formulate scientific hypotheses in free-response and machine-scored forms, Ward, Frederiksen, and Carlson (1980) obtained low correlations between the corresponding scores from the two formats. Ward et al. concluded that the two formats assessed quite different constructs and that the ability to produce solutions was more important than the ability just to recognize them, since questions in real life would rarely be presented in multiple-choice items.

Another study that resulted in low correlations between direct and indirect writing tests was done by Benton and Kiewra (1986). Apart from investigating the validity of the multiple-choice tests underscoring grammatical usage in measuring examinees’ writing proficiency, Benton and Kiewra enquired into the efficacy of the tests assessing organizational ability as well. Benton and Kiewra employed one TSWE test and four organizational tests to measure the utility of these tests in assessing 105 undergraduates’ writing ability in two essays. The four organizational tests, composed of anagram solving, word reordering, sentence reordering, and paragraph assembly, represented organizational ability at lexical, sentence, paragraph, and text levels of discourse production respectively. Although the essay scores were significantly correlated with the test takers’ performances on the TSWE and the four organizational tests, the correlation coefficients were relatively low. The highest correlation with the essay scores was attained in a composite organizational score (the sum of all four organizational tests). The results indicated that in the assessment of writing proficiency, tests emphasizing organizational ability should be included. On the other hand, the score of the sentence reordering test was

(30) associated with the lowest correlation with the measure of writing ability. It was concluded that aside from paragraph-level organizational ability, the lexical, sentence, and text levels of discourse production were also needed in examining organizational ability.

In Taiwan, Chang (2003) also found a low correlation between self-developed indirect and direct writing tests for eighth graders (n = 908) and ninth graders (n = 1012). The indirect writing test consisted of vocabulary, grammar, and sentence rearrangement. The direct writing tasks included sentence combination, picture writing, and essay writing. Chang found a significant but low correlation of .182 between the scores on the participants’ performances in the same grammatical patterns found in the two writing tests. The results implied that the two methods seemed to measure, at least in some way, different constructs.

In sum, different degrees of correlation have been found between the scores of the two approaches. The present study thus attempts to find out the correlations between the EPS writing test and a direct writing task so as to examine the validity of the indirect writing measure to reflect examinees’ actual writing ability.

Assessment by Combination of Direct and Indirect Writing Measures

From the reviewed literature, it is clear that direct and indirect writing assessments have their strengths and limitations. Direct writing assessment is advocated by many researchers and writing teachers who think that only by involving examinees in doing the actual writing can we assess their writing competence. Despite its high validity, the direct measure is questioned for its unrepresentative samples and comparatively unreliable scoring. On the other hand, indirect writing measurement, the more efficient and objective method of testing writing, lacks validity. Since the two writing approaches bring about problems when implemented alone, a lot of researchers have suggested that the two approaches be used in one

(31) single measure to integrate the advantages of each approach (Ackerman & Smith, 1988; Benton & Kiewra, 1986; Breland & Gaynor, 1979; Breland & Jones, 1982; Chang, 2003; Cooper, 1984; Godshalk et al., 1966; P. Lin, 2005; Teng, 2002). In this section, the integration of the advantages of the two writing measures is discussed. Next, ways of combining the two writing measures are presented.

Combined to Assess Both Basic and Higher-order Writing Abilities

Numerous researchers believe that the two writing approaches provide unique information about examinees’ profiles of writing competence (Ackerman & Smith, 1988; Breland & Jones, 1982; Carlson et al., 1985; Chang, 2003; Cooper, 1984; Quellmalz et al., 1982; Stephenson & Giacoboni, 1988; Stiggins, 1981). Once direct and indirect writing assessments are combined, they can benefit from the strengths of both sides. The two measures can be used for assessing different writing competence profiles: basic and higher-order writing abilities.

For example, Cooper (1984) stressed that the best method to measure composition skills should contain an essay as well as a multiple-choice section. In so doing, each measure could complement what the other could not assess directly. Examinees’ higher-order skills, such as organization, clarity, sense of purpose, and idea development, could be revealed by direct writing performances. On the other hand, lower-level skills, like spelling, mechanics, and grammatical usage, could be examined by the scores of the multiple-choice portions.

Ackerman and Smith (1988) also suggested that the two techniques be combined in one writing test. Ackerman and Smith found that indirect writing was intended to evaluate examinees’ declarative knowledge, the preliminary rules first learned in the development of writing skills. Direct writing, in contrast, aimed at evaluating procedural knowledge of writing, the ability required in the final stage of writing development. After examining the factor structure of direct and indirect methods of

(32) writing assessment, Ackerman and Smith concluded that the higher-order procedural writing skills could be more accurately assessed with direct writing tasks and that the basic declarative writing skills should be measured by indirect writing tests. Through the combination of both methods of writing assessment, examinees could demonstrate both the procedural and declarative writing skills in one single writing test.

Breland and Jones (1982) provided the best empirical evidence that the two approaches assess different construct skills. They rescored 806 20-minute essays written for the English Composition Test (ECT) in terms of 20 writing characteristics. As indicated in Table 2.2, the 20 characteristics are classified into discourse, syntactic, and lexical categories. Discourse characteristics are composed of nine features, regarded as the overall quality of compositions. Syntactic characteristics, consisting of six features, relate to sentence-level traits of writing ability. In the case of lexical characteristics, the five features represent word-level indicators of compositions.

Table 2.2 Listing of 20 Writing Characteristics (Breland & Jones, 1982, p. 6)

| Discourse Characteristics | Syntactic Characteristics | Lexical Characteristics |
| --- | --- | --- |
| 1. Statement of thesis | 10. Pronoun usage | 16. Level of diction |
| 2. Overall organization | 11. Subject-verb agreement | 17. Range of vocabulary |
| 3. Rhetorical strategy | 12. Parallel structure | 18. Precision of diction |
| 4. Noteworthy ideas | 13. Idiomatic usage | 19. Figurative language |
| 5. Supporting material | 14. Punctuation | 20. Spelling |
| 6. Tone and attitude | 15. Use of modifiers | |
| 7. Paragraphing and transition | | |
| 8. Sentence variety | | |
| 9. Sentence logic | | |

Among the 20 characteristics, eight of them could evidently predict the original

(33) ECT essay holistic score. Of the eight characteristics, five belonged to discourse-level skills. The result indicated that essay scores depended chiefly on examinees’ discourse or higher-order writing ability.

Breland and Jones added that ECT direct and indirect writing measures should be combined to maximize the effectiveness of the writing test. The multiple correlation between the original ECT objective raw score and the ECT essay score was .58. But when the objective scores were combined with significant direct characteristics, the multiple correlation jumped to .70. As a consequence, combined measures could better estimate test takers’ overall composition skills.

Ways of Combining Direct and Indirect Measures

From the above studies, most researchers have agreed that direct and indirect measures of writing skill could be combined so that each complements what the other could miss. Nonetheless, researchers still cannot reach a consensus on how to combine the two measures. Teng (2002) proposed that writing tests should add a direct writing component, accounting for 20 to 30% or even 50% of the total writing examination. Breland and Jones (1982) and Godshalk et al. (1966) did not specify the weighting of the direct writing component, but strongly recommended that 20 minutes be allotted for a direct writing component.

EPS Indirect Writing Assessment of TVE Joint College Entrance Exam

In the literature of writing assessment, some researchers, emphasizing construct and content validity, have proposed that good writing assessment should involve examinees in generating writing samples rather than selecting among alternatives in multiple-choice items (Grabe & Kaplan, 1996; Greenberg, 1986; Hughes, 2003; Shohamy et al., 1992; White, 1995). Others have suggested combining the two writing measures to obtain the advantages of both approaches. Nevertheless, the EPS writing assessment of the TVE Joint College Entrance Exam adopts only indirect

(34) writing testing due to the present constraint of practicality (Teng, 2002; You et al., 2002).

Ever since the 2001 academic year, the first year of the administration of the entrance exam, few empirical studies have been conducted to probe into the efficacy of the EPS writing test. Among the studies on the EPS writing test, some have put emphasis on inspecting the appropriateness of the question types (P. Lin, 2005; You et al., 2002). Others have focused on discussing the suitability of the indirect format to assess writing ability (P. Lin, 2005; National Teachers’ Association [NTA], 2011; Teng, 2002; TVE Joint College Admissions [TVEJCA], 2011; You et al., 2002).

Accordingly, this section first gives an introduction to the four subtests of the EPS writing test. Past researchers’ and writing teachers’ opinions on the suitability of the indirect format are then discussed.

Introduction to Question Types of EPS Indirect Writing Assessment

The EPS indirect writing assessment consists of four question types that relate to writing ability (You et al., 2002): topic sentence selection (TSS), sentence insertion (SI), sentence deletion (SD), and sentence rearrangement (SR). TSS requires examinees to select the most appropriate topic sentence for a given passage. In SI, test takers are requested to select the most proper sentence for the blank in a given passage. SD asks students to delete an irrelevant sentence so the remaining sentences of the given passage can all stick to the topic. As for SR, it demands that examinees rearrange five scrambled sentences to make a coherent paragraph.

Among the four item types of the EPS writing test, You, Chang, Joe, and Chi (2002) reported that the most suitable item type for the EPS writing test was SR, followed by SI, TSS, and SD, after surveying 24 vocational high school English teachers attending the symposium held by the testing center of the TVE Joint College Entrance Exam. However, SI, according to P. Lin’s (2005) study, is the most difficult

(35) item type among the four.

Opinions on Using Indirect Format to Assess Writing Ability in EPS Writing Test

Over the years, researchers and writing teachers have expressed their opinions on the suitability of the testing format. Some approve of the use of indirect writing tests (P. Lin, 2005; NTA, 2011; Teng, 2002; You et al., 2002). Nevertheless, more researchers and teachers disapprove of using multiple-choice items to assess the writing proficiency of students from the Department of Applied Foreign Languages (DAFL) (P. Lin, 2005; Teng, 2002; You et al., 2002).

The EPS indirect writing tests are favored by some writing teachers and DAFL students mostly because they think the multiple-choice items could adequately assess examinees’ writing competence. For instance, You et al. (2002) noted that over 70% of the 24 English teachers surveyed in the study applauded the use of the objective test. Since the DAFL students rarely received training in English writing, it would be a challenge for them to produce writing in the entrance exam. In addition, the teachers argued that as long as the test items could validly assess the students’ basic writing concepts, the indirect measurement of writing ability could be regarded as an appropriate testing format for the students.

P. Lin (2005) also reported that most of her participants regarded indirect writing tests as the most suitable task type to examine their writing proficiency in the entrance exam. Lin administered three different task types (free writing, guided writing, and the objective writing test) to 35 DAFL third-year vocational high school students. A statistically significant difference (F = 74.44, p < 0.01) was found between the scores on free writing (a 25-minute essay writing), guided writing (a 25-minute picture writing), and the objective writing test (a 20-minute, 10-item multiple-choice test). Post hoc tests revealed that the English majors performed the best in the multiple-choice test, followed by the free writing and the guided writing (p < 0.01).
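An F statistic of this kind can be sketched as follows. The source does not say which ANOVA model Lin used; because the same students took all three tasks, the sketch below assumes a one-way repeated-measures design, and the function name `repeated_measures_f` and the tiny data set are hypothetical.

```python
def repeated_measures_f(scores):
    """F statistic for a one-way repeated-measures ANOVA.

    `scores` is a list of rows: one row per participant, one column per task.
    Returns (F, df_tasks, df_error).
    """
    n = len(scores)      # number of participants
    k = len(scores[0])   # number of tasks (conditions)
    grand_mean = sum(sum(row) for row in scores) / (n * k)
    task_means = [sum(row[j] for row in scores) / n for j in range(k)]
    person_means = [sum(row) / k for row in scores]

    # Partition the total sum of squares into tasks, persons, and error.
    ss_total = sum((v - grand_mean) ** 2 for row in scores for v in row)
    ss_tasks = n * sum((m - grand_mean) ** 2 for m in task_means)
    ss_persons = k * sum((m - grand_mean) ** 2 for m in person_means)
    ss_error = ss_total - ss_tasks - ss_persons
    if ss_error <= 0:
        raise ValueError("no residual variance; F is undefined")

    df_tasks = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_tasks / df_tasks) / (ss_error / df_error)
    return f_value, df_tasks, df_error

# Hypothetical scores for three participants on two tasks (invented data).
f_value, df1, df2 = repeated_measures_f([[1, 3], [2, 5], [3, 4]])
print(f"F({df1}, {df2}) = {f_value:.2f}")
```

With only two tasks the statistic equals the square of a paired t value, which offers a quick sanity check on the partitioning of the sums of squares.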

(36) Consistent with the empirical results, the questionnaire and interview data indicated that the students considered the multiple-choice writing test the most suitable to be included in the EPS writing test of the TVE Joint College Entrance Exam, and the guided writing task the least suitable.

Although some teachers and students have expressed their assent to the use of the EPS writing test, researchers advise that the exam include some easier question types if the EPS writing test continues to adopt the indirect format. Teng (2002) proposed nine question types to be included in the EPS writing test to make the writing entrance exam more valid. The nine question types to replace direct testing of writing ability consisted of sentence correction, sentence paraphrase, sentence transformation, sentence addition, sentence completion, comprehensive test, vocabulary usage, syntactic structure, and punctuation. In You et al.’s (2002) study, teachers recommended grammar correction, punctuation, and word usage. Teachers in NTA (2011) proposed that the EPS multiple-choice tests add grammar choice and error-picking.

In spite of the approval of using the indirect format to assess DAFL students’ writing proficiency, the objective tests have met with opposition from more researchers and writing teachers. For example, although most of P. Lin’s (2005) participants pointed out that the direct writing tasks should not be added to the EPS writing test of the TVE Joint College Entrance Exam, they confessed it was the free writing that could best assess their writing proficiency. The participants also acknowledged that they preferred to take the objective writing test because they could get higher scores by repeated practice and guessing. Some of the participants even noted that the multiple-choice items could only assess their knowledge about grammar and reading comprehension. For that reason, Lin concluded that due to the present format of the writing assessment, more time would be allotted to practice doing the multiple-choice

(37) items instead of doing the actual writing in the writing classroom. The EPS writing test thus had a negative washback effect on English writing instruction for DAFL students. After conducting a survey of the opinions of 7 teachers and 36 students on the effect of the EPS writing test on teaching and learning, Teng (2002) also discovered that the EPS indirect writing test had a negative washback effect on the teaching and learning of English writing. From the students’ viewpoints, it seemed that the disadvantages of the objective writing test outweighed the advantages. Because. 政 治 大 reading ability, such as grammar and vocabulary, the EPS writing test should add 立 indirect measures of compositional skills could only assess the students’ analytic. expressing themselves in written language.. 學. ‧ 國. direct writing components to examine the students’ ability of comprehensively. ‧. Furthermore, some teachers in the symposium mentioned in You et al.’s (2002). sit. y. Nat. study still expressed their concern over the EPS objective writing test. They insisted. io. er. that in addition to the multiple-choice items, direct testing of writing ability also be included in the EPS writing test of the TVE Joint College Entrance Exam. Only when. al. n. v i n C htest takers to writeUcould the students cultivate basic the writing test of EPS required engchi writing skills that the indirect assessment intends to measure. If it was difficult for. DAFL students to write a complete composition, the direct writing test could consist of easier item types, such as sentence making, paragraph writing or picture writing. In conclusion, ever since the implementation of the EPS objective writing test, researchers and writing teachers have had contradictory opinions on the suitability of the testing format. Some maintain that the EPS multiple-choice writing test could assess the students’ basic writing ability as well as discourse or higher-order writing ability. 
Yet, others argue that writing tests in the multiple-choice format could not reflect the students’ actual writing ability, which can only be gauged by direct writing. The present study thus aims to examine how well the EPS writing test reflects students’ actual compositional ability.

CHAPTER THREE
METHOD

This study aims to examine the correlations between scores on the multiple-choice writing test of the English Professional Subject (EPS) and English performance. This study also determines which subtest of the EPS objective test is more related to the writing ability of the students from the Department of Applied Foreign Languages (DAFL). In addition, this study investigates the examinees’ opinions on the EPS writing test. This chapter is thus divided into the following four sections: a brief description of the participants, the instruments employed, the procedure of data collection, and the methods of data analysis.

Participants

The sample of participants in this study was randomly selected from a population of 1,143 third-year DAFL students in 13 commercial vocational high schools in the greater Taichung area (Ministry of Education, Department of Statistics [MEDS], 2011). Three schools were first randomly drawn from the 13 schools. Then, one class within each of the three schools was randomly selected for inclusion in the study. A total of 124 third-year DAFL students were initially sampled. Nevertheless, five participants were dropped from the sample because they had taken the EPS multiple-choice writing test before. Hence, as indicated in Table 3.1, a total of 119 students, composed of 17 males and 102 females, participated in the present study.

Table 3.1 Demography of Participants

| School | No. of Classes | No. of Students | Male | Female |
|---|---|---|---|---|
| School 1 | 1 | 50 | 7 | 43 |
| School 2 | 1 | 28 | 1 | 27 |
| School 3 | 1 | 41 | 9 | 32 |
| Total | 3 | 119 | 17 | 102 |
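The two-stage selection described under Participants (schools first, then one class within each drawn school) can be illustrated with a minimal sketch. The sampling frame below is hypothetical: the school labels, the class labels, the number of classes per school, and the seed are assumptions made for illustration only and do not come from the study.

```python
import random


def two_stage_sample(classes_by_school, n_schools, seed=None):
    """Draw n_schools schools at random, then one class within each school."""
    rng = random.Random(seed)
    # Stage 1: randomly draw schools from the sampling frame.
    schools = rng.sample(sorted(classes_by_school), n_schools)
    # Stage 2: randomly draw one class inside each selected school.
    return {school: rng.choice(classes_by_school[school]) for school in schools}


# Hypothetical frame: 13 schools, each assumed to have three DAFL classes.
frame = {f"School {i}": [f"Class {c}" for c in "ABC"] for i in range(1, 14)}

# Three schools are drawn, with one class kept per school.
selected = two_stage_sample(frame, n_schools=3, seed=2011)
```

Fixing the seed only makes the illustration reproducible; every student in a selected class is then included, which is why the sample size depends on class enrollment rather than on a preset number of students.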

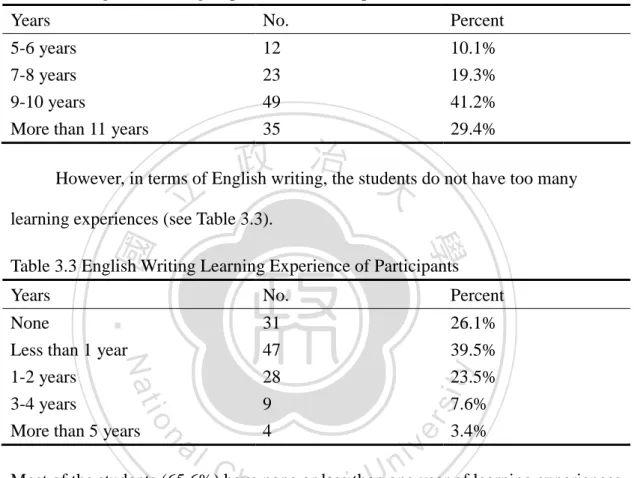

As can be seen in Table 3.2, most of the participants (60.5%) have learned English for 7 to 10 years. Some students (29.4%) have learned English for more than 11 years, approximately from preschool age. Few students (10.1%) have received 5 to 6 years of formal English instruction.

Table 3.2 English Learning Experience of Participants

| Years | No. | Percent |
|---|---|---|
| 5-6 years | 12 | 10.1% |
| 7-8 years | 23 | 19.3% |
| 9-10 years | 49 | 41.2% |
| More than 11 years | 35 | 29.4% |

However, in terms of English writing, the students have had little learning experience (see Table 3.3).

Table 3.3 English Writing Learning Experience of Participants

| Years | No. | Percent |
|---|---|---|
| None | 31 | 26.1% |
| Less than 1 year | 47 | 39.5% |
| 1-2 years | 28 | 23.5% |
| 3-4 years | 9 | 7.6% |
| More than 5 years | 4 | 3.4% |

Most of the students (65.6%) have no experience or less than one year of experience in learning English writing. The participants have not received enough instruction in English writing for two reasons: one of the participating schools does not offer English Writing courses, and the other two, which do offer them, pay little attention to teaching writing because of their tight teaching schedules. Table 3.4 then shows the General English Proficiency Test (GEPT) certificates the participants have acquired.

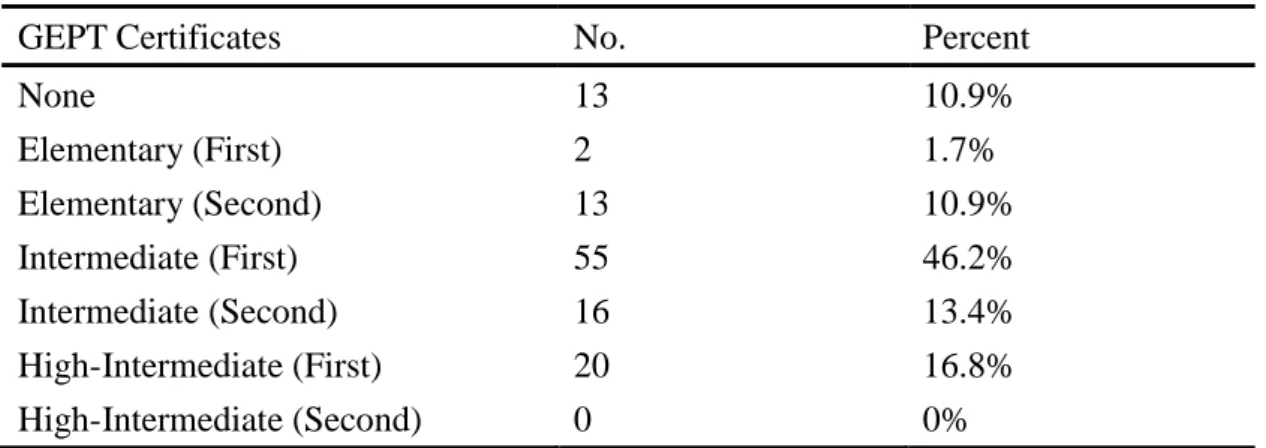

Table 3.4 Acquired GEPT Certificates of Participants

| GEPT Certificates | No. | Percent |
|---|---|---|
| None | 13 | 10.9% |
| Elementary (First) | 2 | 1.7% |
| Elementary (Second) | 13 | 10.9% |
| Intermediate (First) | 55 | 46.2% |
| Intermediate (Second) | 16 | 13.4% |
| High-Intermediate (First) | 20 | 16.8% |
| High-Intermediate (Second) | 0 | 0% |

Over 76% of the participants have acquired a certificate at or above the first stage of the intermediate level. Because the participants major in English, their English proficiency levels are higher than those of the students from other departments of the vocational high schools.

Instruments

The instruments employed in the present study included the EPS multiple-choice writing test, the English picture writing task, the indirect writing questionnaire (IWQ), and the direct writing questionnaire (DWQ). The questionnaires were written both in English and in Chinese. However, the Chinese versions were used to guarantee the participants’ full understanding of the questions.

EPS Multiple-choice Writing Test

The EPS multiple-choice writing test from the 2011 academic year was used for the present study. The 2011 edition contains 40 multiple-choice items, divided into five parts: topic sentence selection (TSS), sentence insertion (SI), sentence deletion (SD), sentence rearrangement (SR) and cloze. The examinees need to finish the test within a 100-minute time limit. According to a conference held by the National Teachers’ Association to comment on the EPS writing test in the 2011 academic year, the test items conform to the objective of the writing test (National Teachers’ Association [NTA], 2011). Since the emphasis of the first four sections of the writing test is to assess students’ basic writing ability (You et al., 2002), the first four parts of the 2011 edition were directly employed as the multiple-choice writing test of the current study. In order to inspect whether the testing constructs of the EPS subtests could represent students’ writing ability, the test items and the number of items for each of the four parts remained the same as those of the 2011 EPS multiple-choice writing test. The objective test in the current study was thus composed of 30 multiple-choice items, divided into four subtests: TSS, SI, SD and SR, with 10, 7, 7, and 6 items respectively. The study allotted 75 minutes for the participants to complete the test (for the writing test and its answer keys, see Appendices A and B).

English Picture Writing Task

The present study employed picture writing as the direct writing task because DAFL teachers have recommended using picture writing to test their students’ English writing proficiency in the EPS writing assessment (You et al., 2002). For unskilled student writers, it would be easier to write about stories because the pictures can provide a reference point and a context for examinees to develop their compositions (X. Lin, 2006; Wright, 1989; Wright, 1996).

The English picture writing task used for the current study was taken directly from the 2006 academic year writing exam of the Scholastic Aptitude Test (SAT), which requires test takers to describe a series of three related pictures. The 2006 edition has received rave reviews from teachers and students because its creative and detailed pictorial stimulus can evaluate various aspects of examinees’ writing ability (X. Lin, 2006). The writing task in the current study, in light of the testing instruction of the 2006 edition, required the participants to compose a 100-word passage about the prompts in 30 minutes.
Directions were given asking the students to describe what was happening in the three-frame picture sequence (see Appendix C).

The present study employed the holistic scoring technique in the assessment of the picture writing task because this technique has won praise from writing assessment researchers. For example, Perkins (1983) noted that if overall writing competence was the aim of direct measurement of writing ability, holistic scoring possessed the strongest construct validity among all of the scoring techniques. Moreover, Cooper (1984) pointed out that holistic scoring was the most valid and direct way to rank-order and select candidates in terms of writing ability.

Since the picture writing task was taken from the SAT writing exam, the scoring rubric developed by the Joint College Entrance Examination Center and used as the standard to score SAT picture writing was adopted for the holistic scoring in the present study (see Appendix D for the Chinese version, and Appendix E for the English version). As X. Lin (2009) reported, the holistic scoring was classified into five ranks: very good (19-20 points), good (15-18 points), fair (10-14 points), poor (5-9 points), and very poor (0-4 points). Using the analytic scoring rubric, the two raters awarded a comprehensive score that reflected their overall impression of text quality. They then verified whether the holistic score they had just given corresponded to the scoring scheme. The holistic score was briefly checked against the following five components: content (five points), organization (five points), grammar (four points), vocabulary (four points), and mechanics (two points). According to Stephenson and Giacoboni (1988), this scoring procedure, “combining the elements of the analytical and holistic scoring methods, provides results that are less subject to the relativistic criticism that pure holistic scoring might elicit” (p. 10).
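The five score bands and the five component maxima described above can be written down as a small lookup, shown here as an illustrative sketch rather than the raters' actual procedure. Two assumptions are not stated in the text: scores are treated as whole numbers, and the hypothetical `consistent` helper interprets "corresponded to the scoring scheme" as the holistic score equaling the sum of the component scores.

```python
# Score bands as reported by X. Lin (2009): (lowest score, highest score, rank).
BANDS = [
    (19, 20, "very good"),
    (15, 18, "good"),
    (10, 14, "fair"),
    (5, 9, "poor"),
    (0, 4, "very poor"),
]

# Maximum points per analytic component; together they make up the 20-point total.
COMPONENT_MAX = {
    "content": 5,
    "organization": 5,
    "grammar": 4,
    "vocabulary": 4,
    "mechanics": 2,
}


def rank_for(score):
    """Return the rank label for a whole-number holistic score from 0 to 20."""
    for low, high, label in BANDS:
        if low <= score <= high:
            return label
    raise ValueError(f"score out of range: {score}")


def consistent(holistic, components):
    """Hypothetical check (an assumption, not the study's rule): every component
    is within its maximum and the components add up to the holistic score."""
    for name, points in components.items():
        if not 0 <= points <= COMPONENT_MAX[name]:
            return False
    return sum(components.values()) == holistic


# Example: a composition rated 16 overall falls in the "good" band.
example_rank = rank_for(16)
example_ok = consistent(16, {"content": 4, "organization": 4, "grammar": 3,
                             "vocabulary": 3, "mechanics": 2})
```

Keeping the bands and the component maxima as data makes it easy to confirm that the component maxima sum to 20, the top of the "very good" band.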
Indirect Writing Questionnaire

IWQ consisted of 13 questions and was divided into two parts: (a) basic
